All around Meta’s Menlo Park campus, cameras stared at me. I’m not talking about security cameras or my fellow reporters’ DSLRs. I’m not even talking about smartphones. I mean Ray-Ban and Meta’s smart glasses, which Meta hopes we’ll all — one day, in some form — wear.

Technology

Vivo delivered Android 15 (even before Google)

We’re still waiting for Android 15 to make it to the stable channel. Google hasn’t pushed the update to its Pixel phones yet, but that doesn’t mean that the rest of the Android OEMs need to wait. In a surprising move, Chinese phone maker Vivo just released Android 15 to its flagship devices.

Ironically, the two companies that typically release updates earliest are taking the longest to give us Android 15 this year. Samsung, which usually leads the pack among OEMs, has shelved the One UI 7 update until… who knows when? And Google, the company that actually develops Android, has been mum on when it's going to push the update to the masses.

So we're all waiting patiently for this update to arrive. We've been following the rumors about what Android 15 will bring, and while there are some fun goodies coming, we're not expecting a massive overhaul like the one we saw with the transition to Android 12. So we're wondering why it's taking so long.

Vivo is launching Android 15 first

It's important to note that Vivo is launching a stable version of Android 15. So far, owners of Pixel phones as old as the Pixel 6 have only been able to try the Android 15 developer previews and betas.

However, Vivo is pushing the official stable version to the masses. According to Ishan Agarwal and Alvin, Vivo is actually delivering the update ahead of… well, Vivo. The company originally planned to push the update on September 30th, but it looks like it just couldn't contain itself. Vivo phone users are already starting to get the update to FunTouch OS 15. Just like OxygenOS and HiOS, the number in FunTouch OS represents the version of Android it's based on.

Android 15 is rolling out to phones like the Vivo X100 series and the fantastic Vivo X Fold3 Pro. The update is even making it to the iQOO 12, since Vivo owns iQOO.

What is Vivo bringing?

Vivo's update brings some neat features, including partial screen sharing, satellite connectivity, AI Eraser for photos, and Private Space. So if you're using one of Vivo's flagship phones, keep an eye out for the new update.

You should get a notification once the update hits your device. To check manually, open Settings and tap System update. Your phone will search for the update and, if it finds one, prompt you to download and install it.

Technology

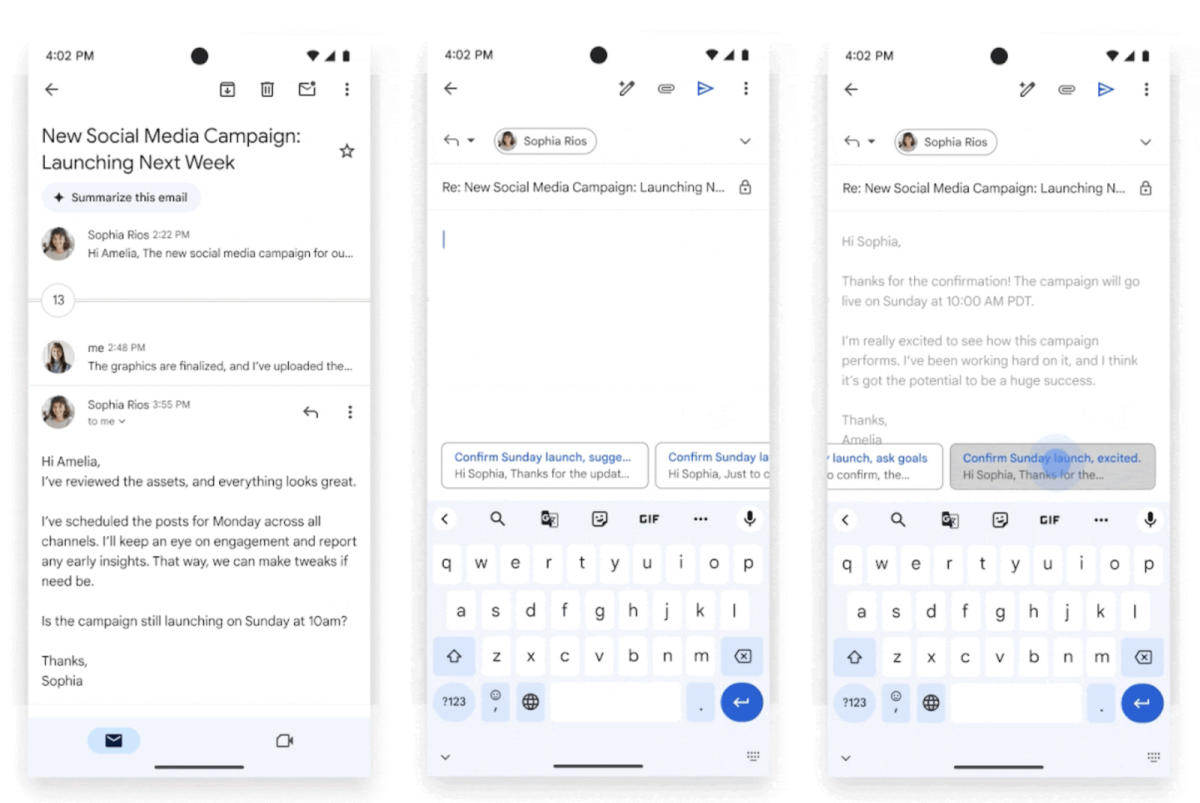

Google launches Gemini’s contextual smart replies in Gmail

When Google rolled out Gemini side panels for Gmail and its other Workspace apps, it revealed that its generative AI chatbot would also be able to offer contextual smart replies for its email service in the future. Now, the company has officially released that feature. Smart replies have existed in Gmail since 2017, giving you a quick, albeit impersonal, way to respond to messages, even if you're in a hurry or on the go. These machine-generated responses are pretty limited, though, and they're often just one-liners telling the recipient that you understand what they're saying or that you agree with whatever they're suggesting.

The new Gemini-generated smart replies take the full content of the email thread into consideration. While you may still have to edit them a bit if you want them to sound as close as possible to something you'd write, they are more detailed and more personable. Once you have the feature, you'll see several response options at the bottom of your screen when replying in the Gmail app. Just hover over each one to see a detailed preview before choosing the one you think makes the best response.

You'll get access to the feature if you have a Gemini Business, Enterprise, Education, or Education Premium add-on, or a Google One AI Premium subscription. Google says it could take up to 15 days before you see Gemini's smart replies in your app; just make sure you've turned on "Smart features and personalization" on your Gmail app's Settings page.

Servers computers

Our Blade server Hardware Products Available #shorts

We are the best server provider company in India, offering service across the country. Our available blade server hardware brands are HPE, Dell, Lenovo, IBM, and Fujitsu.

Contact us today at 075570 10709 for service.

Visit our website for more details:

www.netgroup.in

Connect with us:

Facebook: https://lnkd.in/g2bEaF2

Instagram: https://lnkd.in/gAawSh6y

Linkedin: https://lnkd.in/gWdfuKk

Google: https://lnkd.in/dNH22GA6

Justdial: https://lnkd.in/d59kNCts

Indiamart: https://lnkd.in/dEdHzQkX

Twitter: https://lnkd.in/gYnHNFRa

Subscribe to our channel for more updates.

#netgroup #ITserver #ITservice #servers #server #serverproblems #serverlife #hosting #datacenter #networking #technology #webhosting #dell #lenovo #bladeserver #itsolutions #itcompany #internet #itworks #itissues #itconsulting #connectivity .


Technology

AI is changing enterprise computing — and the enterprise itself

Presented by AMD

This article is part of a VB Special Issue called “Fit for Purpose: Tailoring AI Infrastructure.” Catch all the other stories here.

It’s hard to think of any enterprise technology having a greater impact on business today than artificial intelligence (AI), with use cases including automating processes, customizing user experiences, and gaining insights from massive amounts of data.

As a result, there is a realization that AI has become a core differentiator that needs to be built into every organization's strategy. Some were surprised when Google announced in 2010 that it would be a mobile-first company, recognizing that mobile devices had become the dominant user platform. Today, some companies call themselves 'AI first,' acknowledging that their networking and infrastructure must be engineered to support AI above all else.

Failing to address the challenges of supporting AI workloads has become a significant business risk, with laggards set to be left trailing AI-first competitors who are using AI to drive growth and speed towards a leadership position in the marketplace.

However, adopting AI has pros and cons. AI-based applications create a platform for businesses to drive revenue and market share, for example by enabling efficiency and productivity improvements through automation. But the transformation can be difficult to achieve. AI workloads require massive processing power and significant storage capacity, putting strain on already complex and stretched enterprise computing infrastructures.


In addition to centralized data center resources, most AI deployments have multiple touchpoints across user devices including desktops, laptops, phones and tablets. AI is increasingly being used on edge and endpoint devices, enabling data to be collected and analyzed close to the source, for greater processing speed and reliability. For IT teams, a large part of the AI discussion is about infrastructure cost and location. Do they have enough processing power and data storage? Are their AI solutions located where they run best — at on-premises data centers or, increasingly, in the cloud or at the edge?

How enterprises can succeed at AI

If you want to become an AI-first organization, then one of the biggest challenges is building the specialized infrastructure that this requires. Few organizations have the time or money to build massive new data centers to support power-hungry AI applications.

The reality for most businesses is that they will have to find a way to adapt and modernize their data centers to support an AI-first mentality.

But where do you start? In the early days of cloud computing, cloud service providers (CSPs) offered simple, scalable compute and storage — CSPs were considered a simple deployment path for undifferentiated business workloads. Today, the landscape is dramatically different, with new AI-centric CSPs offering cloud solutions specifically designed for AI workloads and, increasingly, hybrid AI setups that span on-premises IT and cloud services.

AI is a complex proposition and there’s no one-size-fits-all solution. It can be difficult to know what to do. For many organizations, help comes from their strategic technology partners who understand AI and can advise them on how to create and deliver AI applications that meet their specific objectives — and will help them grow their businesses.

With data centers often a significant part of an AI application, a key element of any strategic partner's role is enabling data center modernization. One example is the rise of servers and processors specifically designed for AI. By adopting AI-focused data center technologies, it's possible to deliver significantly more compute power with fewer processors, servers, and racks, reducing the data center footprint your AI applications require. This can increase energy efficiency and reduce the total cost of ownership (TCO) of your AI projects.

A strategic partner can also advise you on graphics processing unit (GPU) platforms. GPU efficiency is key to AI success, particularly for training AI models, real-time processing, or decision-making. Simply adding GPUs won't overcome processing bottlenecks. With a well-implemented, AI-specific GPU platform, you can optimize for the specific AI projects you need to run and spend only on the resources they require. This improves your return on investment (ROI), as well as the cost-effectiveness (and energy efficiency) of your data center resources.

Similarly, a good partner can help you identify which AI workloads truly require GPU acceleration and which are more cost-effective on CPU-only infrastructure. For example, AI inference workloads are best deployed on CPUs when model sizes are smaller or when AI makes up a smaller percentage of the overall server workload mix. This is an important consideration when planning an AI strategy because GPU accelerators, while often critical for training and large model deployment, can be costly to obtain and operate.

Data center networking is also critical for delivering the scale of processing that AI applications require. An experienced technology partner can give you advice about networking options at all levels (including rack, pod and campus) as well as helping you to understand the balance and trade-off between different proprietary and industry-standard technologies.

What to look for in your partnerships

Your strategic partner for your journey to an AI-first infrastructure must combine expertise with an advanced portfolio of AI solutions designed for the cloud and on-premises data centers, user devices, edge and endpoints.

AMD, for example, is helping organizations to leverage AI in their existing data centers. AMD EPYC™ processors can deliver rack-level consolidation, enabling enterprises to run the same workloads on fewer servers; CPU AI performance for small and mixed AI workloads; and improved GPU performance, supporting advanced GPU accelerators and minimizing computing bottlenecks. Through consolidation with AMD EPYC™ processors, data center space and power can be freed up to deploy AI-specialized servers.

The increase in demand for AI application support across the business is putting pressure on aging infrastructure. To deliver secure and reliable AI-first solutions, it’s important to have the right technology across your IT landscape, from data center through to user and endpoint devices.

Enterprises should lean into new data center and server technologies to enable them to speed up their adoption of AI. They can reduce the risks through innovative yet proven technology and expertise. And with more organizations embracing an AI-first mindset, the time to get started on this journey is now.

Robert Hormuth is Corporate Vice President, Architecture & Strategy — Data Center Solutions Group, AMD

Sponsored articles are content produced by a company that is either paying for the post or has a business relationship with VentureBeat, and they’re always clearly marked. For more information, contact

Servers computers

Dell server R720 Review | Dell R720 | Dell PowerEdge R720 | Tech Saqi Mirza

In this video, I talk about the Dell PowerEdge R720. The Dell R720 is designed to run a wide range of applications and virtualization environments for both regular and professional users.

The Dell R720 succeeds the Dell R710.

The Dell R720 is a general-purpose rack server with large memory capacity (up to 768GB) and strong I/O capabilities. It supports Intel® Xeon® E5-2600 series processors and dual RAID controllers, so it can handle demanding tasks like database storage, high-end computing, and virtual infrastructure.


Technology

Welcome to Meta’s future, where everyone wears cameras

I visited Meta for this year’s Connect conference, where just about every hardware product involved cameras. They’re on the Ray-Ban Meta smart glasses that got a software update, the new Quest 3S virtual reality headset, and Meta’s prototype Orion AR glasses. Orion is what Meta calls a “time machine”: a functioning example of what full-fledged AR could look like, years before it will be consumer-ready.

But on Meta’s campus, at least, the Ray-Bans were already everywhere. It was a different kind of time machine: a glimpse into CEO Mark Zuckerberg’s future world where glasses are the new phones.

I’m conflicted about it.

Meta really wants to put cameras on your face. The glasses, which follow 2021’s Ray-Ban Stories, are apparently making inroads on that front, as Zuckerberg told The Verge sales are going “very well.” They aren’t full-fledged AR glasses since they have no screen to display information, though they’re becoming more powerful with AI features. But they’re perfect for what the whole Meta empire is built on: encouraging people to share their lives online.

The glasses come in a variety of classic Ray-Ban styles, but for now, it’s obvious users aren’t just wearing glasses. As I wandered the campus, I spotted the telltale signs on person after person: two prominent circle cutouts at the edges of their glasses, one for a 12MP ultrawide camera and the other for an indicator light.

This light flashes when a user is taking photos and videos, and it’s generally visible even in sunlight. In theory, that should have put my mind at ease: if the light wasn’t on, I could trust nobody was capturing footage of me tucking into some lunch before my meetings.

But as I talked with people around campus, I was always slightly on edge. I found myself keenly aware of those circles, checking to see if somebody was filming me when I wasn’t paying attention. The mere potential of a recording would distract me from conversations, inserting a low hum of background anxiety.


Then, when I put a pair on for myself, the situation suddenly changed. As a potential target of recording, I’d been hesitant, worried I might be photographed or filmed as a byproduct of making polite eye contact. With the glasses on my own face, though, I felt that I should be recording more. There’s something really compelling about the experience of a camera right at the level of your eyes. By just pressing a button on the glasses, I could take a photo or video of anything I was seeing at exactly the angle I was seeing it. No awkward fumble of pulling out my phone and hoping the moment lasted. There might be no better way to share my reality with other people.

Meta’s smart glasses have been around for a few years now, and I’m hardly the first person — or even the first person at The Verge — to be impressed by them. But this was the first time I’d seen these glasses not as early adopter tech, but as a ubiquitous product like a phone or smartwatch. I got a hint of how this seamless recording would work at scale, and the prospect is both exciting and terrifying.

The camera phone was a revolution in its own right, and we’re still grappling with its social effects. Nearly anyone can now document police brutality or capture a fleeting funny moment, but also take creepshots and post them online or (a far lesser offense, to be clear) annoy people at concerts. What will happen when even the minimal friction of pulling a phone out drops away, and billions of people can immediately snap a picture of anything they see?

Personally, I can see how incredibly useful this would be to capture candid photos of my new baby, who is already starting to recognize when a phone is taking a picture of her. But it’s not hard to imagine far more malicious uses. Sure, you might think that we all got used to everyone pointing their phone cameras at everything, but I’m not exactly sure that’s a good thing; I don’t like that there’s a possibility I end up in somebody’s TikTok just because I stepped outside the house. (The rise of sophisticated facial recognition makes the risks even greater.) With ubiquitous glasses-equipped cameras, I feel like there’s an even greater possibility that my face shows up somewhere on the internet without my permission.

There are also clear risks to integrating cameras into what is, for many people, a nonnegotiable vision aid. If you already wear glasses and switch to prescription smart glasses, you’ll either have to carry a low-tech backup or accept that they’ll stay on in some potentially very awkward places, like a public bathroom. The current Ray-Ban Meta glasses are largely sunglasses, so they’re probably not most people’s primary set. But you can get them with clear and transition lenses, and I bet Meta would like to market them more as everyday specs.

Of course, there’s no guarantee most people will buy them. The Ray-Ban Meta glasses are pretty good gadgets now, but I was at Meta’s campus meeting Meta employees to preview Meta hardware for a Meta event. It’s not surprising Meta’s latest hardware was commonplace, and it doesn’t necessarily tell us much about what people outside that world want.

Camera glasses have been just over the horizon for years now. Remember how magical I said taking pictures of what’s right in front of your eyes is? My former colleague Sean O’Kane relayed almost the exact same experience with Snap Spectacles back in 2016.

But Meta is the first company to make a credible play for mainstream acceptance. They’re a lot of fun — and that’s what scares me a little.

Technology

NYT Connections today — hints and answers for Sunday, September 29 (game #476)

Good morning! Let’s play Connections, the NYT’s clever word game that challenges you to group answers in various categories. It can be tough, so read on if you need clues.

What should you do once you’ve finished? Why, play some more word games of course. I’ve also got daily Wordle hints and answers, Strands hints and answers and Quordle hints and answers articles if you need help for those too.

SPOILER WARNING: Information about NYT Connections today is below, so don’t read on if you don’t want to know the answers.

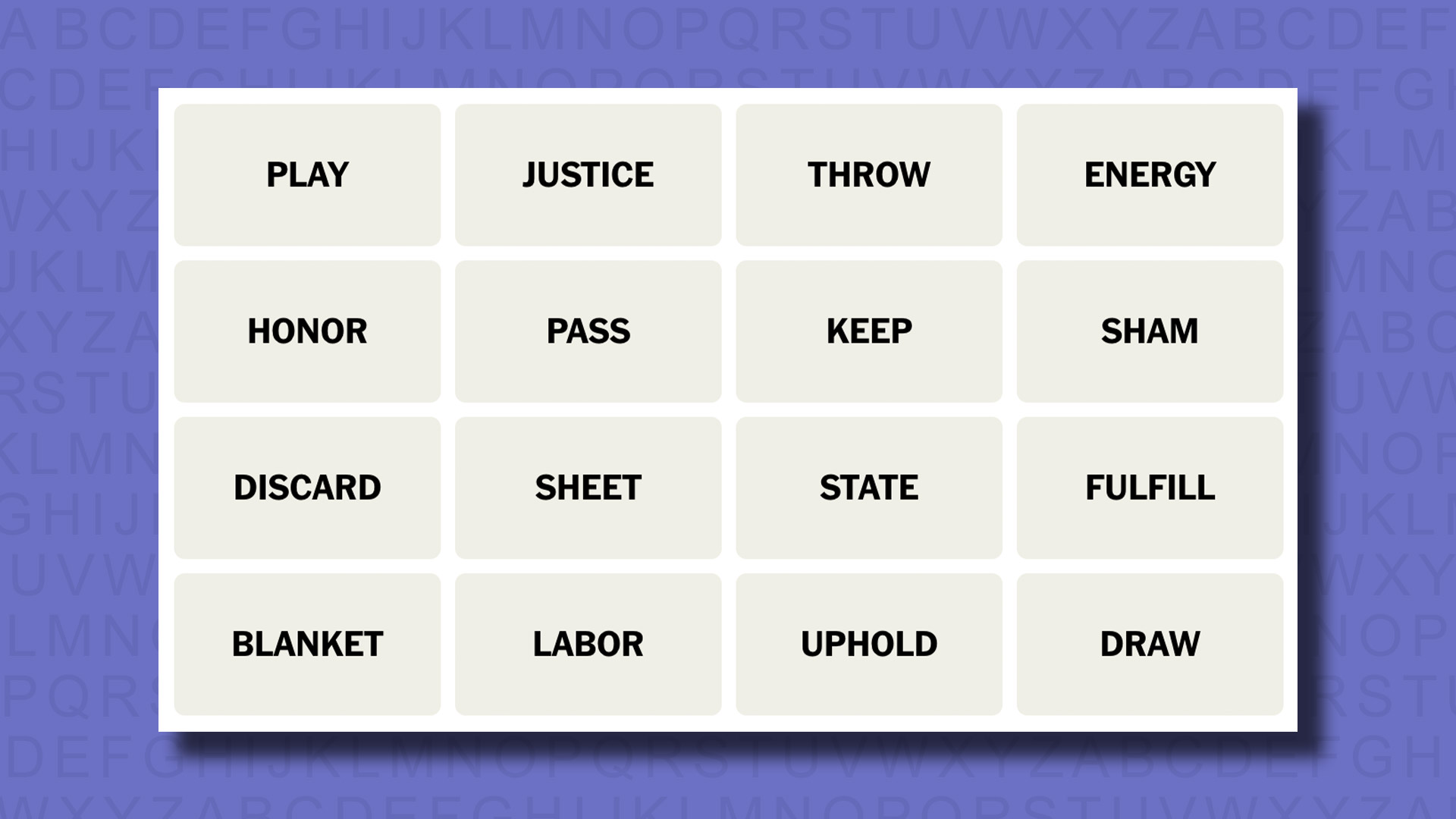

NYT Connections today (game #476) – today’s words

Today’s NYT Connections words are…

- PLAY

- JUSTICE

- THROW

- ENERGY

- HONOR

- PASS

- KEEP

- SHAM

- DISCARD

- SHEET

- STATE

- FULFILL

- BLANKET

- LABOR

- UPHOLD

- DRAW

NYT Connections today (game #476) – hint #1 – group hints

What are some clues for today’s NYT Connections groups?

- Yellow: Do as you say

- Green: Covers

- Blue: Snap?

- Purple: They run the country

Need more clues?

We’re firmly in spoiler territory now, but read on if you want to know what the four theme answers are for today’s NYT Connections puzzles…

NYT Connections today (game #476) – hint #2 – group answers

What are the answers for today’s NYT Connections groups?

- YELLOW: MAKE GOOD ON, AS A PROMISE

- GREEN: BEDDING

- BLUE: ACTIONS IN CARD GAMES

- PURPLE: CABINET DEPARTMENTS

Right, the answers are below, so DO NOT SCROLL ANY FURTHER IF YOU DON’T WANT TO SEE THEM.

NYT Connections today (game #476) – the answers

The answers to today’s Connections, game #476, are…

- YELLOW: MAKE GOOD ON, AS A PROMISE – FULFILL, HONOR, KEEP, UPHOLD

- GREEN: BEDDING – BLANKET, SHAM, SHEET, THROW

- BLUE: ACTIONS IN CARD GAMES – DISCARD, DRAW, PASS, PLAY

- PURPLE: CABINET DEPARTMENTS – ENERGY, JUSTICE, LABOR, STATE

- My rating: Moderate

- My score: 2 mistakes

Oh NYT, you devious things. I lost two guesses today on the blue group, ACTIONS IN CARD GAMES, because of some classic misdirection. The eventual answers were DISCARD, DRAW, PASS and PLAY, but I instead had KEEP in there as one of the solutions, which does make sense but which obviously wasn’t right.

With two guesses down I was a little worried, so I moved on to other categories and got the yellow MAKE GOOD ON, AS A PROMISE group instead, which was pretty straightforward once I focused. I then got the supposedly most difficult one, the purple group, after realizing that ENERGY, JUSTICE, LABOR and STATE were all CABINET DEPARTMENTS. That made blue easier, and I solved green by default.

How did you do today? Send me an email and let me know.

Yesterday’s NYT Connections answers (Saturday, 28 September, game #475)

- YELLOW: COMPOSITE – BLEND, COMPOUND, CROSS, HYBRID

- GREEN: EMBED – LODGE, PLANT, STICK, WEDGE

- BLUE: ITEMS IN A MONOPOLY BOX – DEED, HOTEL, HOUSE, TOKEN

- PURPLE: ___ CONTROL – BIRTH, CRUISE, QUALITY, REMOTE

What is NYT Connections?

NYT Connections is one of several increasingly popular word games made by the New York Times. It challenges you to find groups of four items that share something in common, and each group has a different difficulty level: yellow is easy, green a little harder, blue often quite tough and purple usually very difficult.

On the plus side, you don’t technically need to solve the final one, as you’ll be able to answer that one by a process of elimination. What’s more, you can make up to four mistakes, which gives you a little bit of breathing room.

It's a little more involved than something like Wordle, however, and there are plenty of opportunities for the game to trip you up with tricks. For instance, watch out for homophones and other wordplay that could disguise the answers.

It’s playable for free via the NYT Games site on desktop or mobile.
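If it helps to see the rules spelled out, here is a minimal Python sketch of the grouping-and-mistakes logic described above, using today's answers. It assumes a simplified model: a guess counts only if all four words exactly match one group, and the game ends on the fourth mistake. The names `GROUPS`, `check_guess` and `play` are hypothetical, this is not how the NYT implements the game, and the two wrong guesses in the replay are illustrative (we only know they included KEEP).

```python
# Hypothetical model of the Connections rules (not the NYT's code).
# Groups are the answers for game #476.
GROUPS = {
    "yellow": {"FULFILL", "HONOR", "KEEP", "UPHOLD"},
    "green": {"BLANKET", "SHAM", "SHEET", "THROW"},
    "blue": {"DISCARD", "DRAW", "PASS", "PLAY"},
    "purple": {"ENERGY", "JUSTICE", "LABOR", "STATE"},
}
MAX_MISTAKES = 4  # assumption: the game ends on the fourth mistake


def check_guess(guess, groups=GROUPS):
    """Return the color of the group that exactly matches a four-word guess, else None."""
    words = {w.upper() for w in guess}
    if len(words) != 4:
        raise ValueError("a guess must contain four distinct words")
    for color, members in groups.items():
        if words == members:
            return color
    return None


def play(guesses, groups=GROUPS, max_mistakes=MAX_MISTAKES):
    """Replay guesses in order; return (colors solved in order, mistakes made)."""
    solved, mistakes = [], 0
    for guess in guesses:
        # Stop once every group is solved or the mistake budget is spent.
        if mistakes >= max_mistakes or len(solved) == len(groups):
            break
        color = check_guess(guess, groups)
        if color is None:
            mistakes += 1
        elif color not in solved:
            solved.append(color)
    return solved, mistakes


# Illustrative replay: two wrong "card game" guesses containing KEEP, then the four groups.
guesses = [
    {"DISCARD", "DRAW", "PASS", "KEEP"},
    {"DISCARD", "DRAW", "PLAY", "KEEP"},
    {"FULFILL", "HONOR", "KEEP", "UPHOLD"},
    {"ENERGY", "JUSTICE", "LABOR", "STATE"},
    {"DISCARD", "DRAW", "PASS", "PLAY"},
    {"BLANKET", "SHAM", "SHEET", "THROW"},
]
print(play(guesses))  # (['yellow', 'purple', 'blue', 'green'], 2)
```

Because KEEP plausibly fits both "make good on" and "card game actions", a guess built around it fails the exact-match check, which is the kind of misdirection that cost two guesses today.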

-

Womens Workouts6 days ago

Womens Workouts6 days ago3 Day Full Body Women’s Dumbbell Only Workout

-

Technology2 weeks ago

Technology2 weeks agoWould-be reality TV contestants ‘not looking real’

-

News7 days ago

News7 days agoOur millionaire neighbour blocks us from using public footpath & screams at us in street.. it’s like living in a WARZONE – WordupNews

-

Science & Environment1 week ago

Science & Environment1 week ago‘Running of the bulls’ festival crowds move like charged particles

-

Science & Environment1 week ago

Science & Environment1 week agoMaxwell’s demon charges quantum batteries inside of a quantum computer

-

Science & Environment1 week ago

Science & Environment1 week agoSunlight-trapping device can generate temperatures over 1000°C

-

Science & Environment1 week ago

Science & Environment1 week agoHyperelastic gel is one of the stretchiest materials known to science

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoHow to unsnarl a tangle of threads, according to physics

-

News2 weeks ago

News2 weeks agoYou’re a Hypocrite, And So Am I

-

Science & Environment1 week ago

Science & Environment1 week agoHow to wrap your mind around the real multiverse

-

Science & Environment1 week ago

Science & Environment1 week agoPhysicists are grappling with their own reproducibility crisis

-

Science & Environment1 week ago

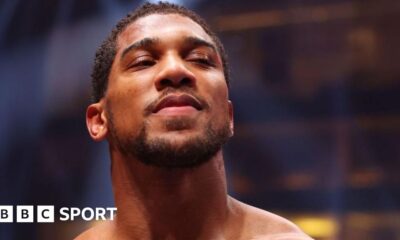

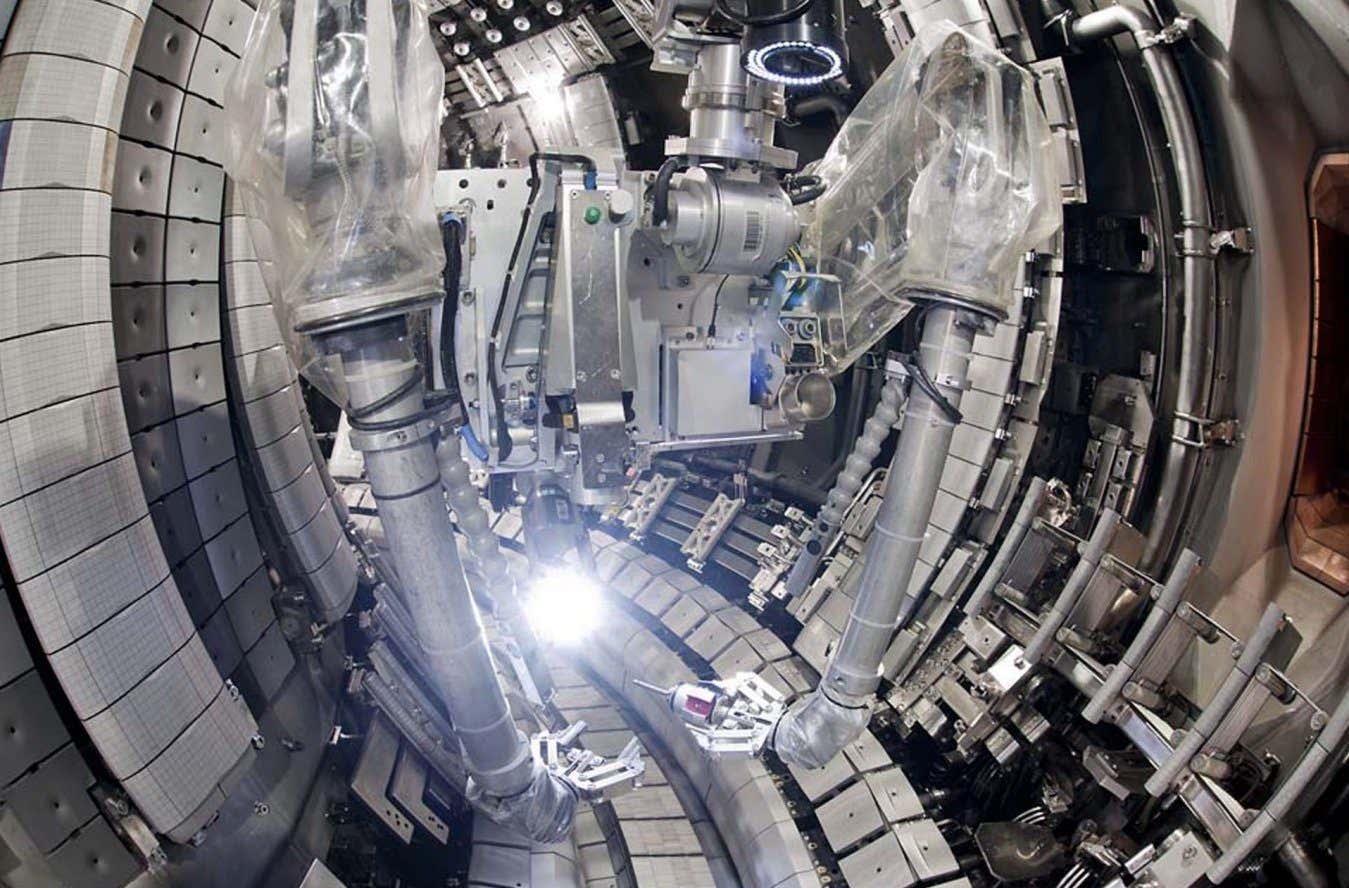

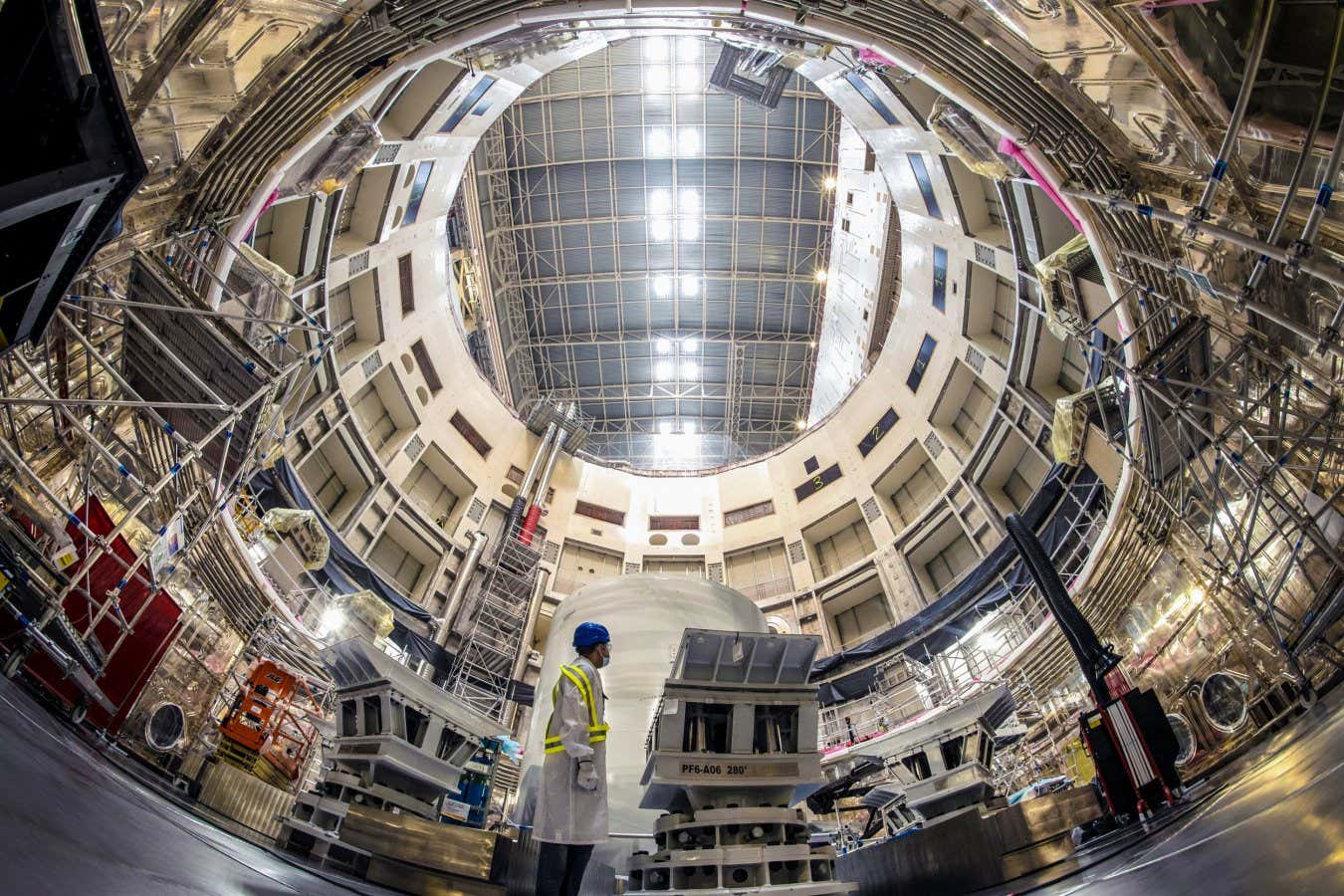

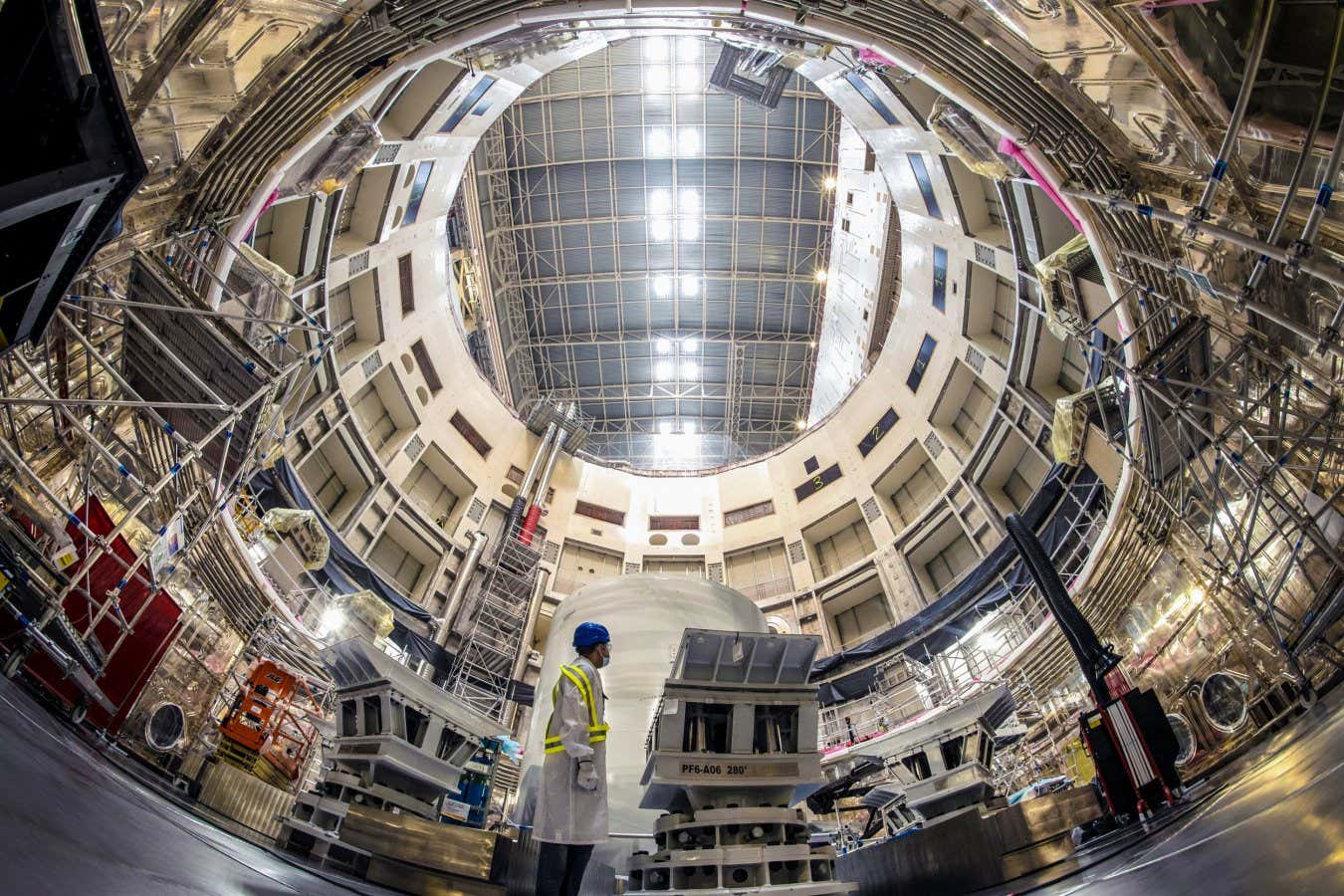

Science & Environment1 week agoITER: Is the world’s biggest fusion experiment dead after new delay to 2035?

-

Sport1 week ago

Sport1 week agoJoshua vs Dubois: Chris Eubank Jr says ‘AJ’ could beat Tyson Fury and any other heavyweight in the world

-

Science & Environment1 week ago

Science & Environment1 week agoLiquid crystals could improve quantum communication devices

-

Science & Environment1 week ago

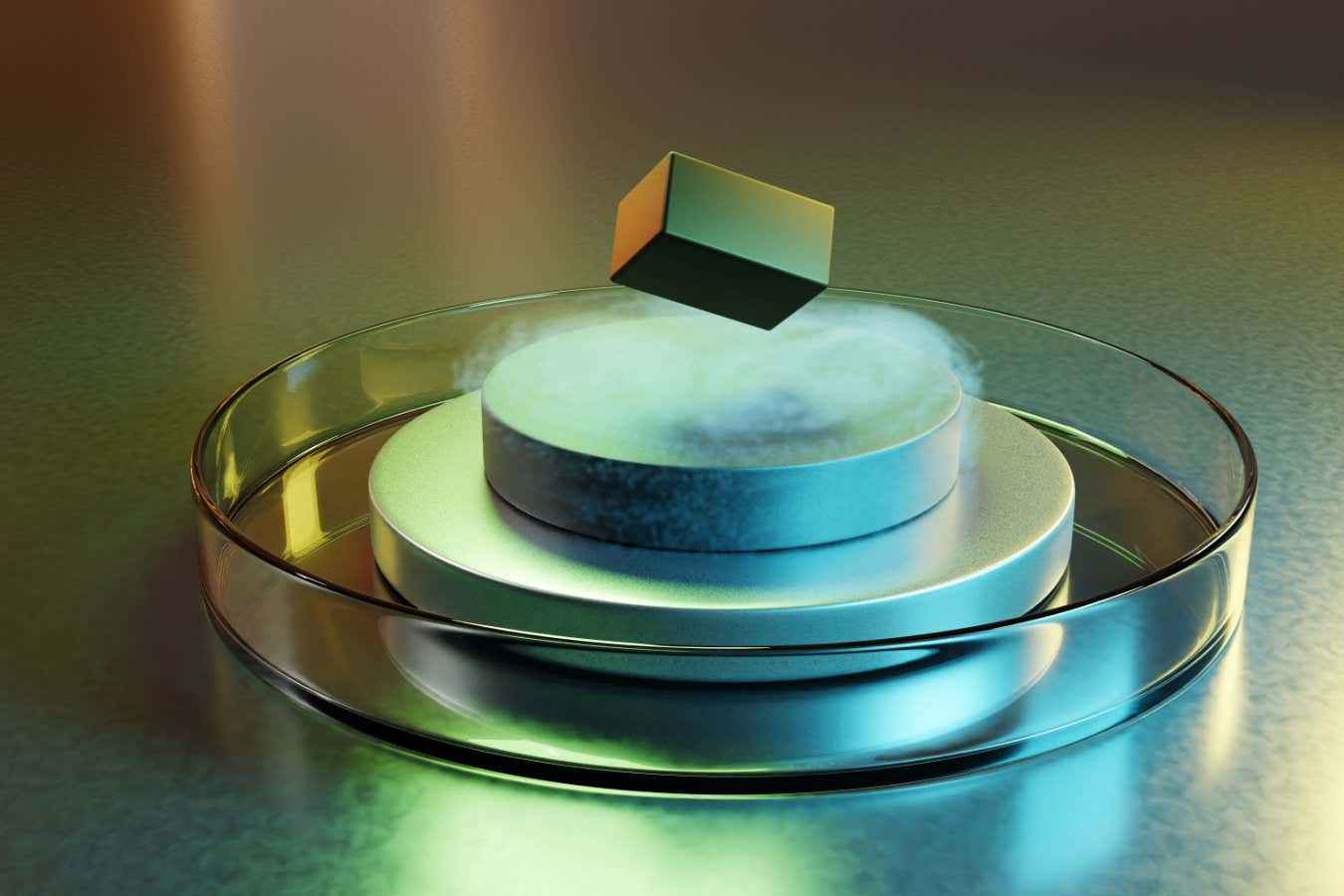

Science & Environment1 week agoQuantum ‘supersolid’ matter stirred using magnets

-

Science & Environment1 week ago

Science & Environment1 week agoWhy this is a golden age for life to thrive across the universe

-

Science & Environment1 week ago

Science & Environment1 week agoQuantum forces used to automatically assemble tiny device

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoCaroline Ellison aims to duck prison sentence for role in FTX collapse

-

Science & Environment1 week ago

Science & Environment1 week agoNuclear fusion experiment overcomes two key operating hurdles

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCardano founder to meet Argentina president Javier Milei

-

News1 week ago

News1 week agoIsrael strikes Lebanese targets as Hizbollah chief warns of ‘red lines’ crossed

-

Womens Workouts1 week ago

Womens Workouts1 week agoBest Exercises if You Want to Build a Great Physique

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoNerve fibres in the brain could generate quantum entanglement

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoTime travel sci-fi novel is a rip-roaringly good thought experiment

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoLaser helps turn an electron into a coil of mass and charge

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoDZ Bank partners with Boerse Stuttgart for crypto trading

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoEthereum is a 'contrarian bet' into 2025, says Bitwise exec

-

Womens Workouts1 week ago

Womens Workouts1 week agoEverything a Beginner Needs to Know About Squatting

-

Science & Environment7 days ago

Science & Environment7 days agoMeet the world's first female male model | 7.30

-

News2 weeks ago

News2 weeks ago▶️ Media Bias: How They Spin Attack on Hezbollah and Ignore the Reality

-

Science & Environment1 week ago

Science & Environment1 week agoHow do you recycle a nuclear fusion reactor? We’re about to find out

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBitcoin miners steamrolled after electricity thefts, exchange ‘closure’ scam: Asia Express

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoDorsey’s ‘marketplace of algorithms’ could fix social media… so why hasn’t it?

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoRedStone integrates first oracle price feeds on TON blockchain

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBitcoin bulls target $64K BTC price hurdle as US stocks eye new record

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBlockdaemon mulls 2026 IPO: Report

-

News1 week ago

News1 week agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCoinbase’s cbBTC surges to third-largest wrapped BTC token in just one week

-

News7 days ago

News7 days agoFour dead & 18 injured in horror mass shooting with victims ‘caught in crossfire’ as cops hunt multiple gunmen

-

Womens Workouts6 days ago

Womens Workouts6 days ago3 Day Full Body Toning Workout for Women

-

Travel5 days ago

Travel5 days agoDelta signs codeshare agreement with SAS

-

Politics4 days ago

Politics4 days agoHope, finally? Keir Starmer’s first conference in power – podcast | News

-

Science & Environment1 week ago

Science & Environment1 week agoQuantum time travel: The experiment to ‘send a particle into the past’

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCrypto scammers orchestrate massive hack on X but barely made $8K

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoLow users, sex predators kill Korean metaverses, 3AC sues Terra: Asia Express

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago‘No matter how bad it gets, there’s a lot going on with NFTs’: 24 Hours of Art, NFT Creator

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoSEC asks court for four months to produce documents for Coinbase

-

Sport1 week ago

Sport1 week agoUFC Edmonton fight card revealed, including Brandon Moreno vs. Amir Albazi headliner

-

Business1 week ago

How Labour donor’s largesse tarnished government’s squeaky clean image

-

Technology1 week ago

Technology1 week agoiPhone 15 Pro Max Camera Review: Depth and Reach

-

News1 week ago

News1 week agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

Womens Workouts1 week ago

Womens Workouts1 week agoKeep Your Goals on Track This Season

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoA slight curve helps rocks make the biggest splash

-

Science & Environment1 week ago

Science & Environment1 week agoWhy we need to invoke philosophy to judge bizarre concepts in science

-

Science & Environment1 week ago

Science & Environment1 week agoFuture of fusion: How the UK’s JET reactor paved the way for ITER

-

News1 week ago

News1 week agoChurch same-sex split affecting bishop appointments

-

Science & Environment1 week ago

Science & Environment1 week agoTiny magnet could help measure gravity on the quantum scale

-

Technology1 week ago

Technology1 week agoFivetran targets data security by adding Hybrid Deployment

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago$12.1M fraud suspect with ‘new face’ arrested, crypto scam boiler rooms busted: Asia Express

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoDecentraland X account hacked, phishing scam targets MANA airdrop

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCertiK Ventures discloses $45M investment plan to boost Web3

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBeat crypto airdrop bots, Illuvium’s new features coming, PGA Tour Rise: Web3 Gamer

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoTelegram bot Banana Gun’s users drained of over $1.9M

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago‘Silly’ to shade Ethereum, the ‘Microsoft of blockchains’ — Bitwise exec

-

Business1 week ago

Thames Water seeks extension on debt terms to avoid renationalisation

-

Politics1 week ago

‘Appalling’ rows over Sue Gray must stop, senior ministers say | Sue Gray

-

Womens Workouts1 week ago

Womens Workouts1 week agoHow Heat Affects Your Body During Exercise

-

News7 days ago

News7 days agoWhy Is Everyone Excited About These Smart Insoles?

-

Politics2 weeks ago

Politics2 weeks agoTrump says he will meet with Indian Prime Minister Narendra Modi next week

-

Technology2 weeks ago

Technology2 weeks agoCan technology fix the ‘broken’ concert ticketing system?

-

Health & fitness2 weeks ago

Health & fitness2 weeks agoThe secret to a six pack – and how to keep your washboard abs in 2022

-

Science & Environment1 week ago

Science & Environment1 week agoBeing in two places at once could make a quantum battery charge faster

-

Science & Environment1 week ago

Science & Environment1 week agoA new kind of experiment at the Large Hadron Collider could unravel quantum reality

-

Science & Environment1 week ago

Science & Environment1 week agoHow one theory ties together everything we know about the universe

-

Science & Environment1 week ago

Science & Environment1 week agoA tale of two mysteries: ghostly neutrinos and the proton decay puzzle

-

Science & Environment1 week ago

Science & Environment1 week agoUK spurns European invitation to join ITER nuclear fusion project

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago2 auditors miss $27M Penpie flaw, Pythia’s ‘claim rewards’ bug: Crypto-Sec

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoLouisiana takes first crypto payment over Bitcoin Lightning

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoJourneys: Robby Yung on Animoca’s Web3 investments, TON and the Mocaverse

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago‘Everything feels like it’s going to shit’: Peter McCormack reveals new podcast

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoSEC sues ‘fake’ crypto exchanges in first action on pig butchering scams

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBitcoin price hits $62.6K as Fed 'crisis' move sparks US stocks warning

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoVonMises bought 60 CryptoPunks in a month before the price spiked: NFT Collector

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoVitalik tells Ethereum L2s ‘Stage 1 or GTFO’ — Who makes the cut?

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoEthereum falls to new 42-month low vs. Bitcoin — Bottom or more pain ahead?

-

News1 week ago

News1 week agoBrian Tyree Henry on his love for playing villains ahead of “Transformers One” release

-

Womens Workouts1 week ago

Womens Workouts1 week agoWhich Squat Load Position is Right For You?

-

News1 week ago

News1 week agoBangladesh Holds the World Accountable to Secure Climate Justice

-

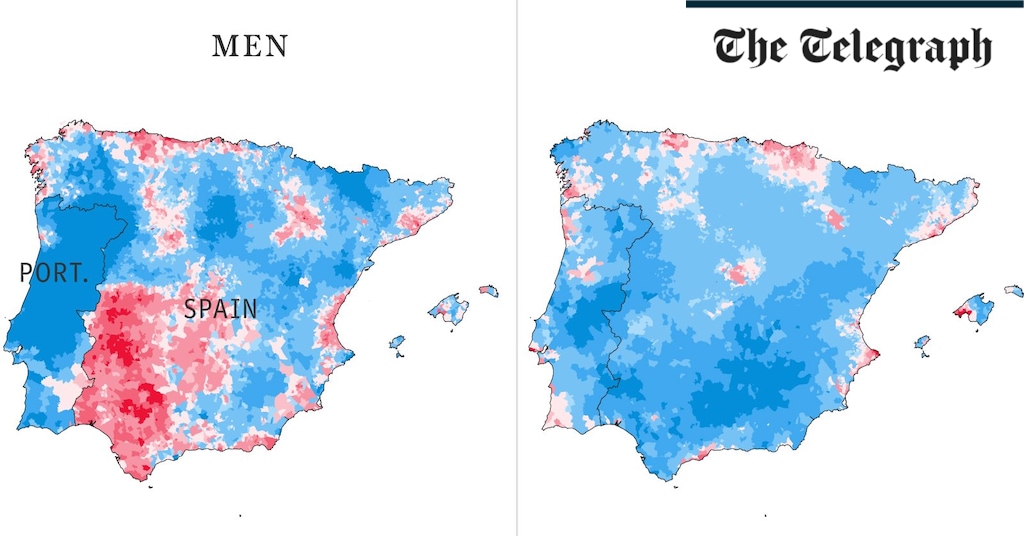

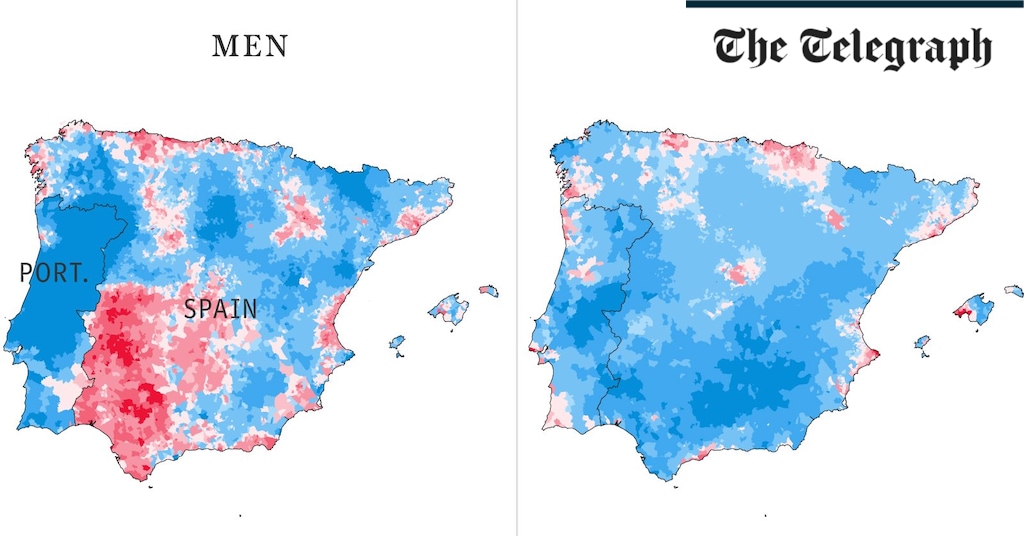

Health & fitness2 weeks ago

Health & fitness2 weeks agoThe maps that could hold the secret to curing cancer

-

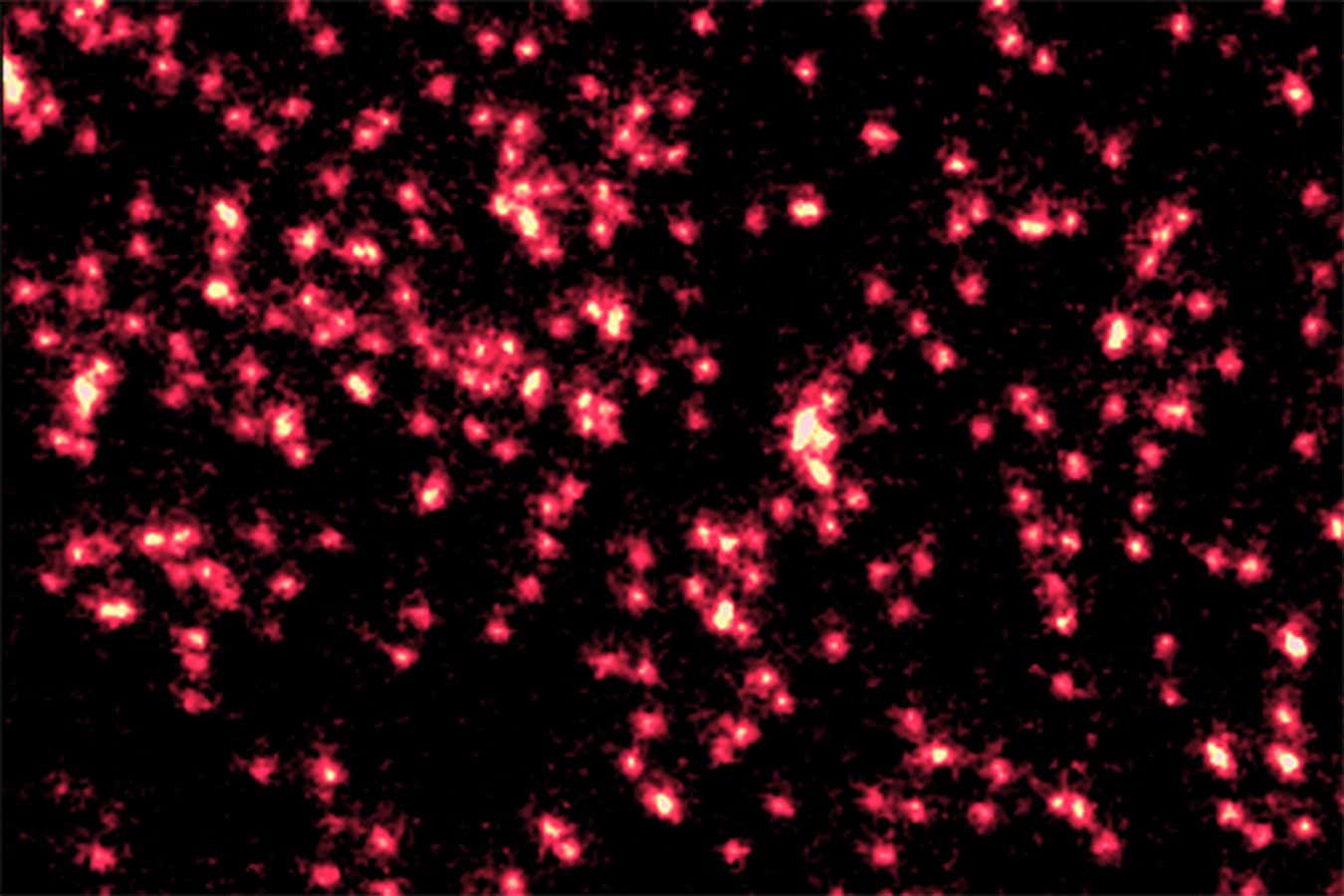

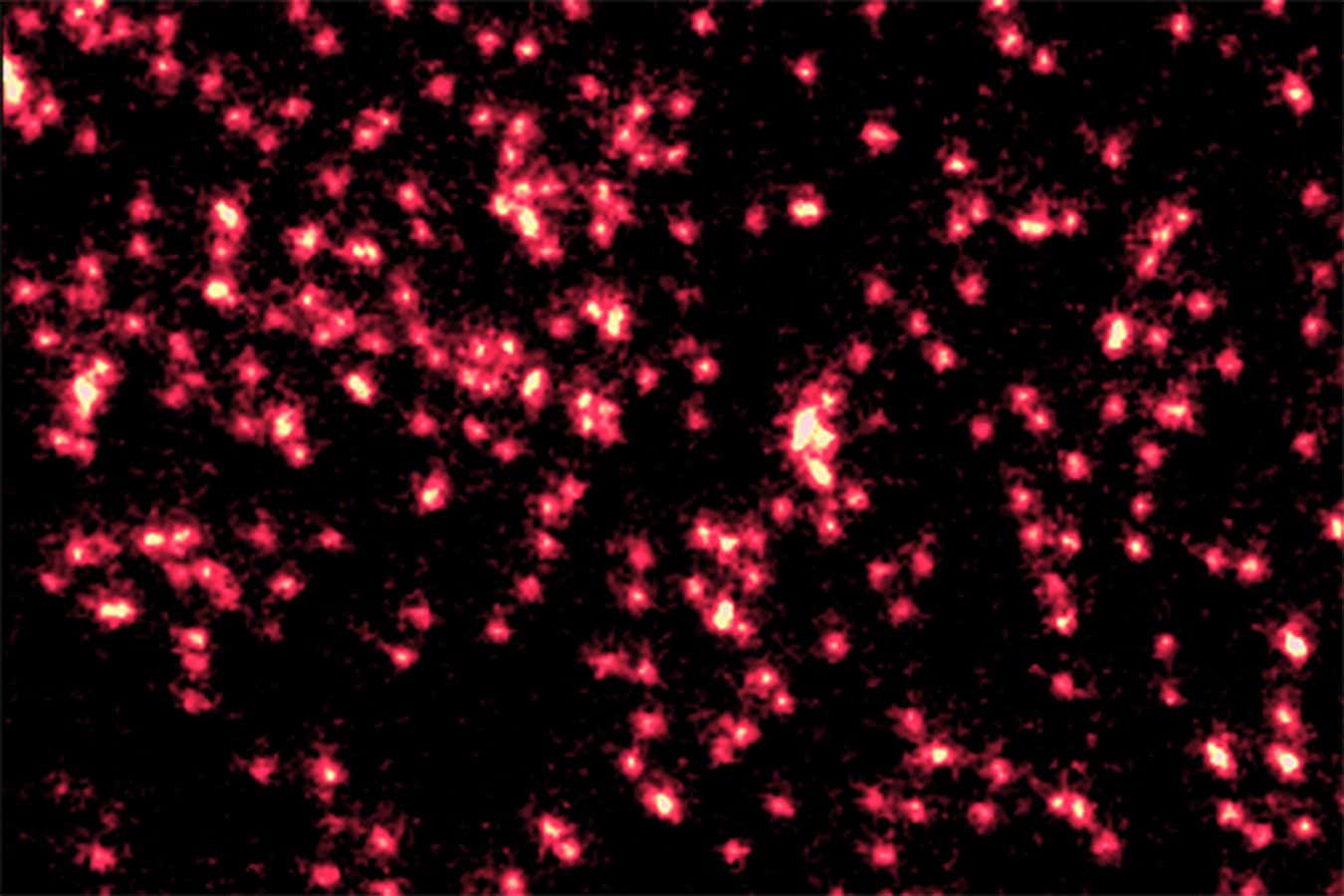

Science & Environment1 week ago

Science & Environment1 week agoSingle atoms captured morphing into quantum waves in startling image

-

Science & Environment1 week ago

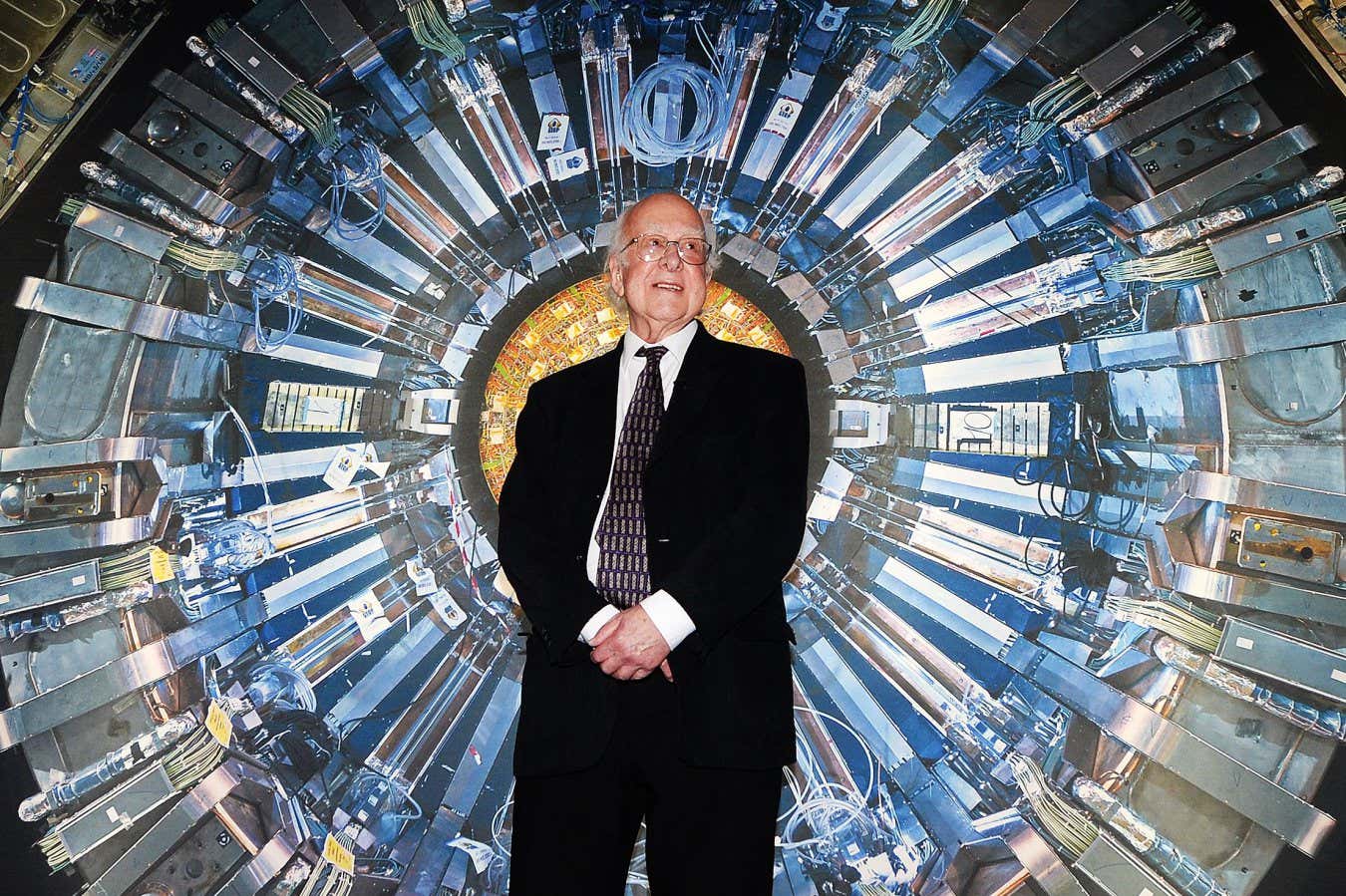

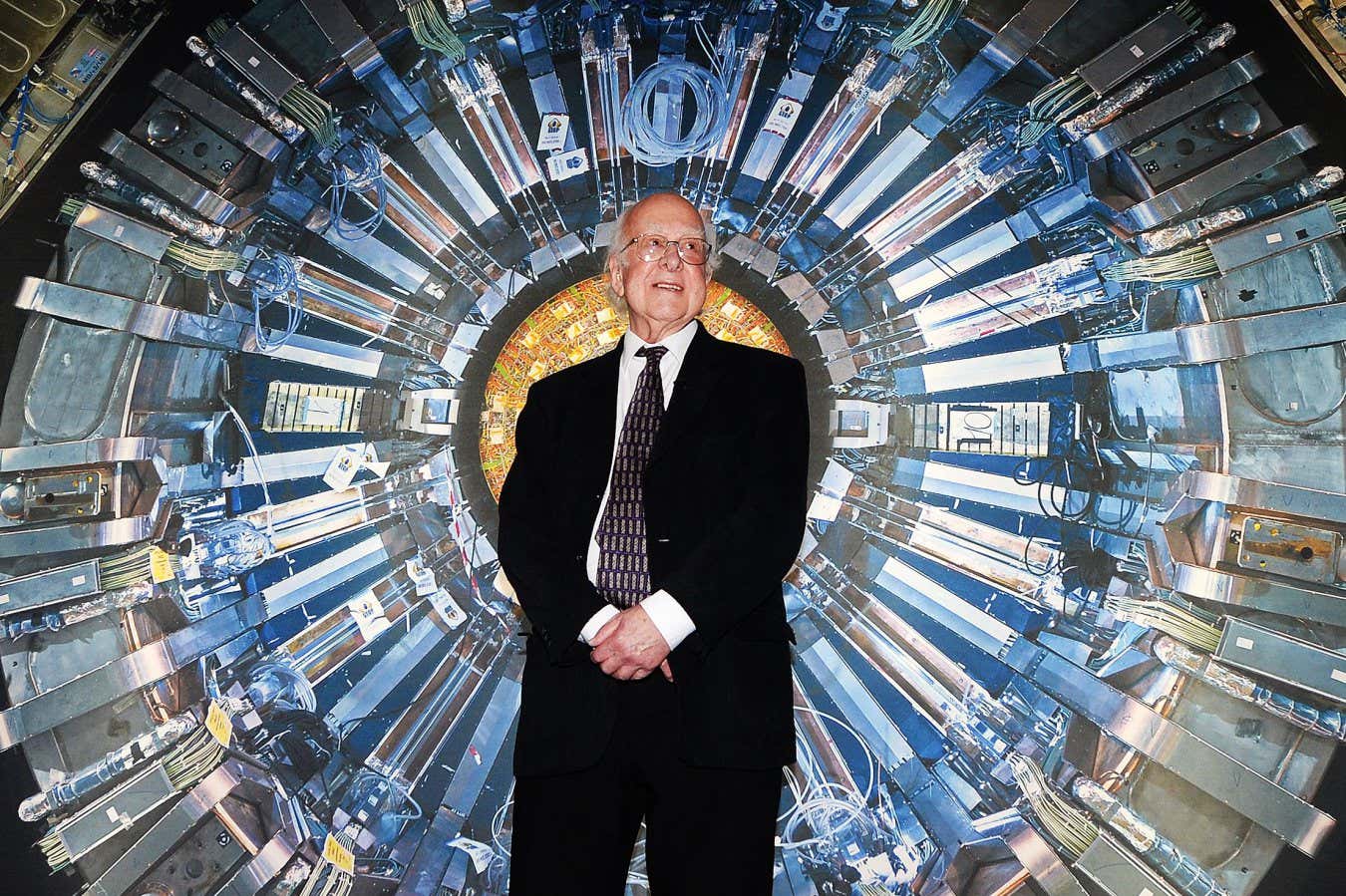

Science & Environment1 week agoHow Peter Higgs revealed the forces that hold the universe together

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoHelp! My parents are addicted to Pi Network crypto tapper

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCZ and Binance face new lawsuit, RFK Jr suspends campaign, and more: Hodler’s Digest Aug. 18 – 24

-

Fashion Models1 week ago

Fashion Models1 week agoMixte

-

Politics1 week ago

Politics1 week agoLabour MP urges UK government to nationalise Grangemouth refinery

-

Money1 week ago

Money1 week agoBritain’s ultra-wealthy exit ahead of proposed non-dom tax changes

-

Womens Workouts1 week ago

Womens Workouts1 week agoWhere is the Science Today?

-

Womens Workouts1 week ago

Womens Workouts1 week agoSwimming into Your Fitness Routine

-

News2 weeks ago

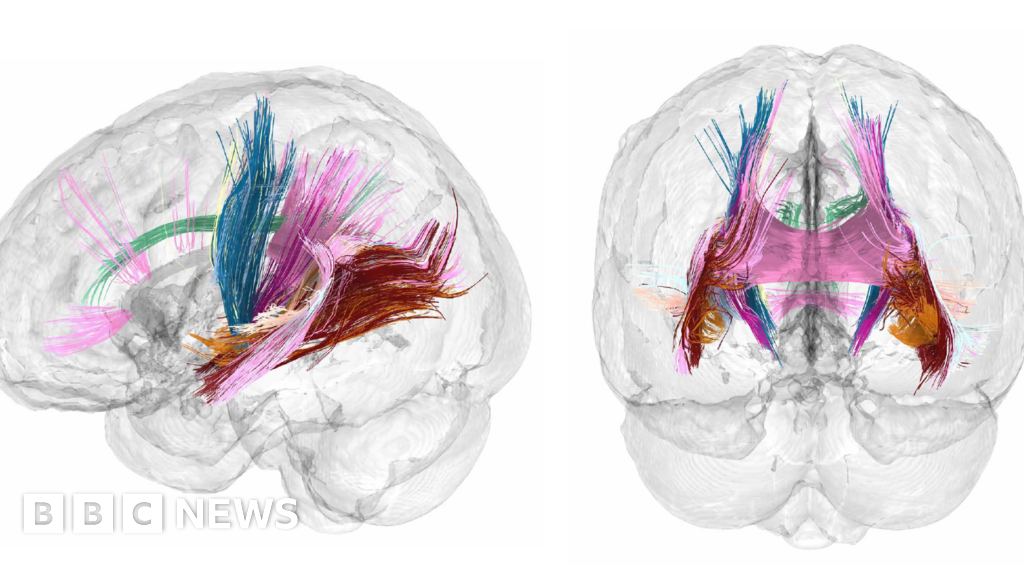

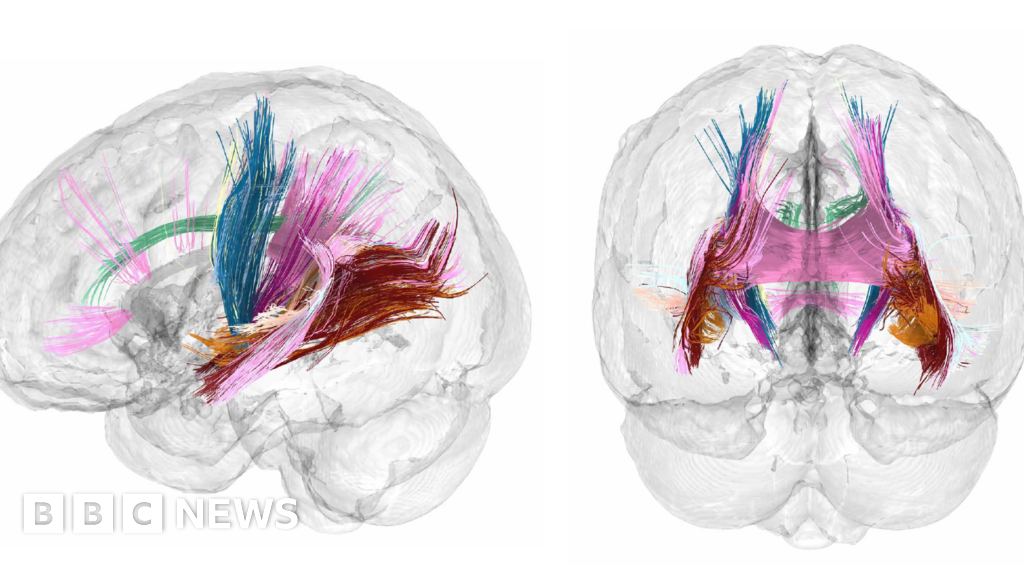

News2 weeks agoBrain changes during pregnancy revealed in detailed map

-

Business2 weeks ago

JPMorgan in talks to take over Apple credit card from Goldman Sachs

You must be logged in to post a comment Login