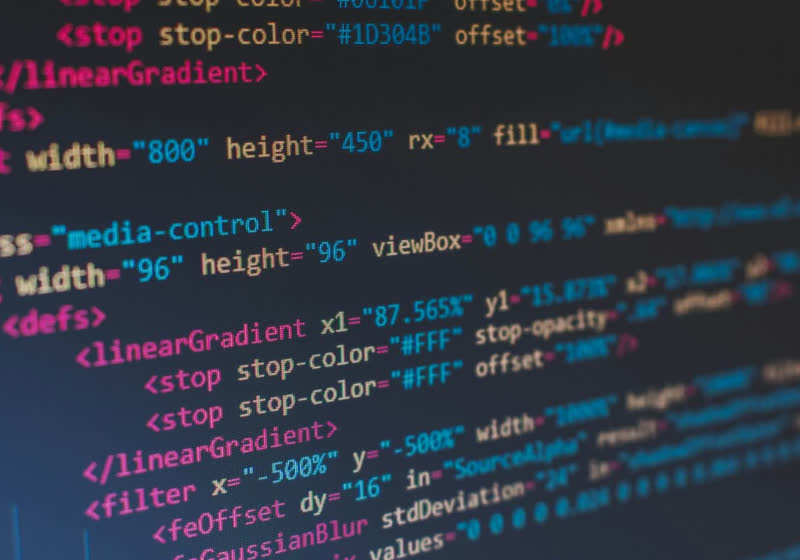

The end of Moore’s Law is looming. Engineers and designers can do only so much to miniaturize transistors and pack as many of them as possible into chips. So they’re turning to other approaches to chip design, incorporating technologies like AI into the process.

Samsung, for instance, is adding AI to its memory chips to enable processing in memory, thereby saving energy and speeding up machine learning. Speaking of speed, Google’s TPU V4 AI chip has doubled its processing power compared with that of its previous version.

But AI holds still more promise and potential for the semiconductor industry. To better understand how AI is set to revolutionize chip design, we spoke with Heather Gorr, senior product manager for MathWorks’ MATLAB platform.

How is AI currently being used to design the next generation of chips?

Heather Gorr: AI is such an important technology because it’s involved in most parts of the cycle, including the design and manufacturing process. There’s a lot of important applications here, even in the general process engineering where we want to optimize things. I think defect detection is a big one at all phases of the process, especially in manufacturing. But even thinking ahead in the design process, [AI now plays a significant role] when you’re designing the light and the sensors and all the different components. There’s a lot of anomaly detection and fault mitigation that you really want to consider.

Heather GorrMathWorks

Heather GorrMathWorks

Then, thinking about the logistical modeling that you see in any industry, there is always planned downtime that you want to mitigate; but you also end up having unplanned downtime. So, looking back at that historical data of when you’ve had those moments where maybe it took a bit longer than expected to manufacture something, you can take a look at all of that data and use AI to try to identify the proximate cause or to see something that might jump out even in the processing and design phases. We think of AI oftentimes as a predictive tool, or as a robot doing something, but a lot of times you get a lot of insight from the data through AI.

What are the benefits of using AI for chip design?

Gorr: Historically, we’ve seen a lot of physics-based modeling, which is a very intensive process. We want to do a reduced order model, where instead of solving such a computationally expensive and extensive model, we can do something a little cheaper. You could create a surrogate model, so to speak, of that physics-based model, use the data, and then do your parameter sweeps, your optimizations, your Monte Carlo simulations using the surrogate model. That takes a lot less time computationally than solving the physics-based equations directly. So, we’re seeing that benefit in many ways, including the efficiency and economy that are the results of iterating quickly on the experiments and the simulations that will really help in the design.

So it’s like having a digital twin in a sense?

Gorr: Exactly. That’s pretty much what people are doing, where you have the physical system model and the experimental data. Then, in conjunction, you have this other model that you could tweak and tune and try different parameters and experiments that let sweep through all of those different situations and come up with a better design in the end.

So, it’s going to be more efficient and, as you said, cheaper?

Gorr: Yeah, definitely. Especially in the experimentation and design phases, where you’re trying different things. That’s obviously going to yield dramatic cost savings if you’re actually manufacturing and producing [the chips]. You want to simulate, test, experiment as much as possible without making something using the actual process engineering.

We’ve talked about the benefits. How about the drawbacks?

Gorr: The [AI-based experimental models] tend to not be as accurate as physics-based models. Of course, that’s why you do many simulations and parameter sweeps. But that’s also the benefit of having that digital twin, where you can keep that in mind—it’s not going to be as accurate as that precise model that we’ve developed over the years.

Both chip design and manufacturing are system intensive; you have to consider every little part. And that can be really challenging. It’s a case where you might have models to predict something and different parts of it, but you still need to bring it all together.

One of the other things to think about too is that you need the data to build the models. You have to incorporate data from all sorts of different sensors and different sorts of teams, and so that heightens the challenge.

How can engineers use AI to better prepare and extract insights from hardware or sensor data?

Gorr: We always think about using AI to predict something or do some robot task, but you can use AI to come up with patterns and pick out things you might not have noticed before on your own. People will use AI when they have high-frequency data coming from many different sensors, and a lot of times it’s useful to explore the frequency domain and things like data synchronization or resampling. Those can be really challenging if you’re not sure where to start.

One of the things I would say is, use the tools that are available. There’s a vast community of people working on these things, and you can find lots of examples [of applications and techniques] on GitHub or MATLAB Central, where people have shared nice examples, even little apps they’ve created. I think many of us are buried in data and just not sure what to do with it, so definitely take advantage of what’s already out there in the community. You can explore and see what makes sense to you, and bring in that balance of domain knowledge and the insight you get from the tools and AI.

What should engineers and designers consider when using AI for chip design?

Gorr: Think through what problems you’re trying to solve or what insights you might hope to find, and try to be clear about that. Consider all of the different components, and document and test each of those different parts. Consider all of the people involved, and explain and hand off in a way that is sensible for the whole team.

How do you think AI will affect chip designers’ jobs?

Gorr: It’s going to free up a lot of human capital for more advanced tasks. We can use AI to reduce waste, to optimize the materials, to optimize the design, but then you still have that human involved whenever it comes to decision-making. I think it’s a great example of people and technology working hand in hand. It’s also an industry where all people involved—even on the manufacturing floor—need to have some level of understanding of what’s happening, so this is a great industry for advancing AI because of how we test things and how we think about them before we put them on the chip.

How do you envision the future of AI and chip design?

Gorr: It’s very much dependent on that human element—involving people in the process and having that interpretable model. We can do many things with the mathematical minutiae of modeling, but it comes down to how people are using it, how everybody in the process is understanding and applying it. Communication and involvement of people of all skill levels in the process are going to be really important. We’re going to see less of those superprecise predictions and more transparency of information, sharing, and that digital twin—not only using AI but also using our human knowledge and all of the work that many people have done over the years.

From Your Site Articles

Related Articles Around the Web

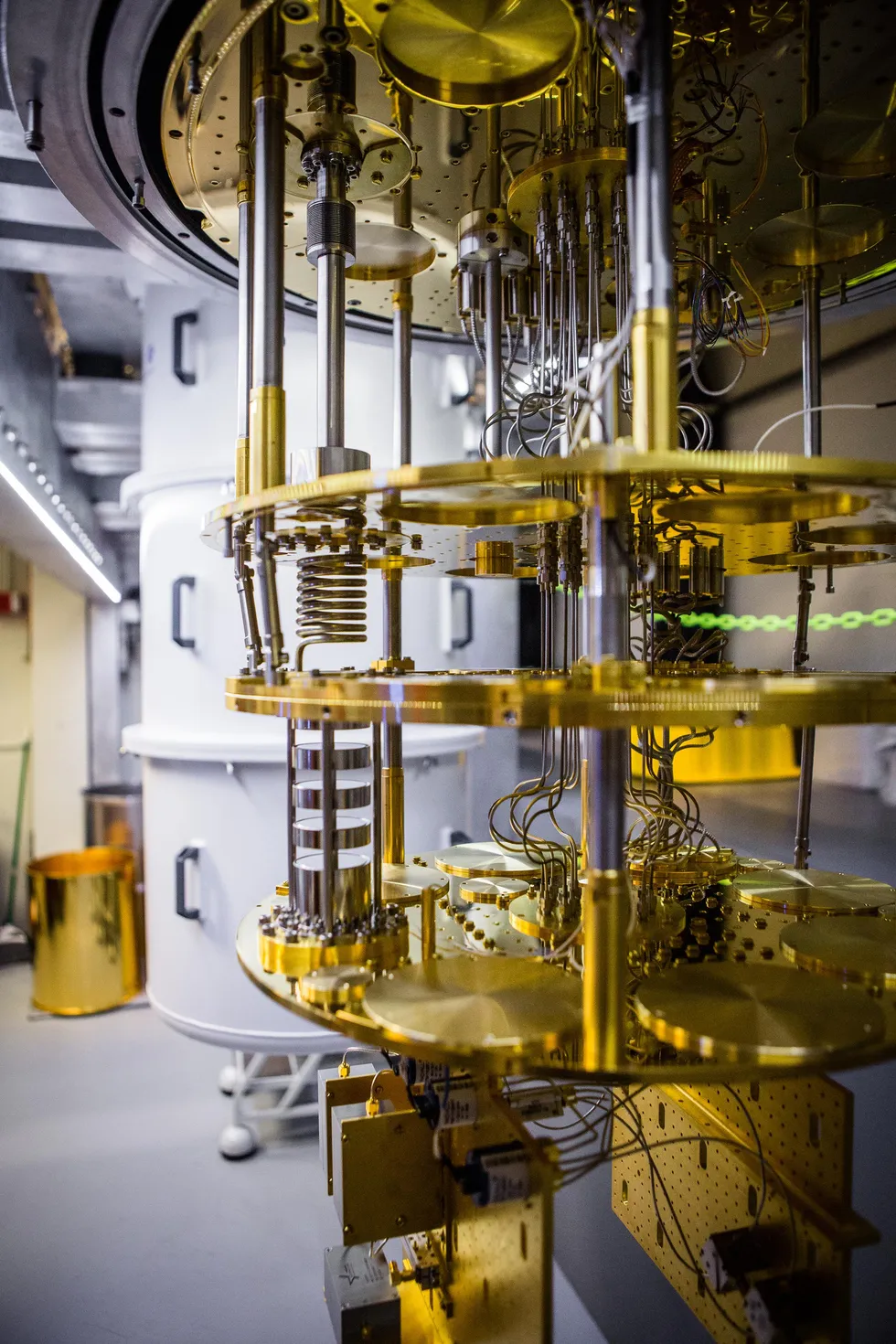

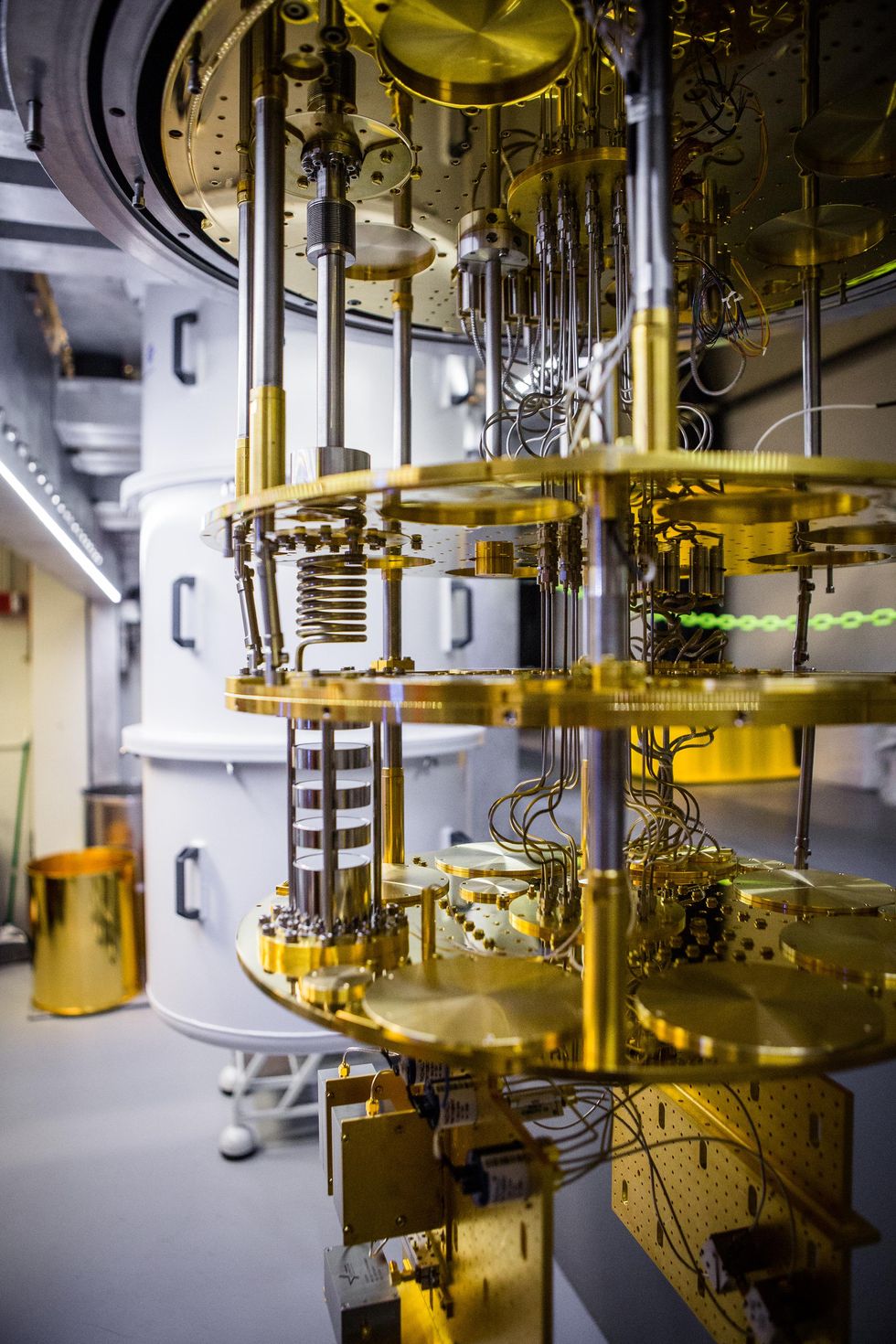

Superconducting qubits are measured at temperatures as low as 20 millikelvin in a dilution refrigerator.Nathan Fiske/MIT

Superconducting qubits are measured at temperatures as low as 20 millikelvin in a dilution refrigerator.Nathan Fiske/MIT

Heather Gorr

Heather Gorr