We all watched the Seahawks beat the Patriots in Super Bowl LX on Sunday night, and in between all the plays, gameday ads filled up our screens. Our roundup includes ads featuring artificial intelligence, movie trailers and some of the funniest spots of the evening (and Supergirl’s pop-up for Puppy Bowl).

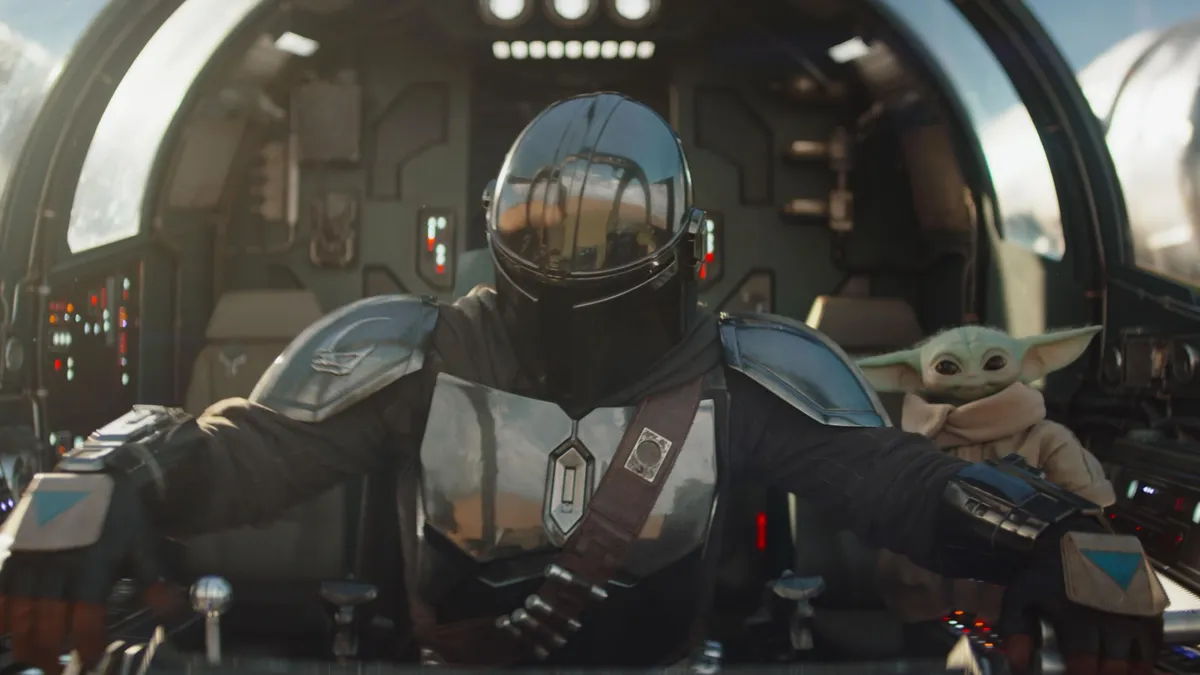

As you’re scrolling through these videos, we urge you to choose your own favorites, but don’t miss Baby Yoda, Ken’s Expedia spot, Melissa McCarthy’s telenovela or DoorDash’s cheeky 50 Cent ad.

The Mandalorian and Grogu

In a short teaser clip, The Mandalorian and Grogu trek through the snow, reminding us “the journey never gets any easier.”

Supergirl for the Puppy Bowl

OK, so this isn’t an official gameday ad, but Supergirl showed up for the Puppy Bowl today, and DC dropped a teaser for the movie, which is due June 26.

Liquid Death’s exploding heads

No, it’s not an episode of The Boys. Liquid Death’s new spot aims to blow your mind with its energy drink, without making you lose your head.

DoorDash and 50 Cent’s ‘beef’

Follow 50 Cent on Instagram, and at any moment, you’ll catch him trolling some of his famous peers — mostly to their detriment. This DoorDash gameday ad makes fun of his reputation for beefing with people (with a slick joke about Diddy), while he schools us on the “art of delivering beef.” Take notes.

Manscaped serenade

You’ll have to watch this for yourself and arrive at your own conclusion, but we’re only posting the short version here. Head to YouTube for the extended cut.

Southwest Airlines pokes fun at seating chaos

You know the airline recently switched to assigned seating, and this timely SB commercial jokingly looks back at the mayhem of an era before the new policy.

Scream 7

It’s the return of Sidney Prescott, Ghostface and a fan theory about Stu with this new, flame-filled big-game trailer that makes Sidney’s daughter a target. The movie arrives in theaters Feb. 27.

Google Gemini helps design a dream home

Google adds a human touch to showcase its Gemini AI assistant in this SB spot, where a little kid is genuinely excited to dream up what his new home will look like — including a place for his pup.

Oakley Meta AI glasses

Marshawn Lynch is among the stars in Oakley Meta’s first Super Bowl ad. Check out how the AI-powered glasses capture what Sky Brown, Sunny Choi, IShowSpeed, Spike Lee, Kate Courtney and Akshay Bhatia are doing on and off the ground.

Prepping for FIFA World Cup 2026

Sofia Vergara and Owen Wilson are counting down to World Cup 2026 and its Spanish-language coverage on Peacock and Telemundo. The games begin in June.

Anthropic throws shade at AI competition

We’ve covered how this Claude ad from Anthropic takes shots at OpenAI and its plans to test ads, but Dr. Dre’s What’s The Difference playing in the background kind of nails the message’s tone, in case it wasn’t clear.

Dairy Queen’s Taylor and Swift

It’s a play on words in this DQ spot starring Tyrod Taylor and D’Andre Swift that’s urging fans to order platters for their own halftime snack breaks.

Backstreet Boys for T-Mobile

A T-Mobile store performance from the Backstreet Boys earns tears from Druski and features cameos from Machine Gun Kelly and The Wrong Paris actor Pierson Fodé.

State Farm teaser livin’ on a prayer

Danny McBride and Keegan-Michael Key rep “Halfway There Insurance” and serenade Hailee Steinfeld in this comedic teaser for State Farm’s ad. The girl group Katseye also makes a brief appearance.

Xfinity’s Wi-Fi saves Jurassic Park?

Xfinity goes the full nostalgia route in its Jurassic Park-themed Super Bowl ad starring Jeff Goldblum, Laura Dern and Sam Neill, where Xfinity stops the dino disaster at the park from ever happening.

Melissa McCarthy in an e.l.f. telenovela

Dramatic. Glamorous. High stakes. Melissa McCarthy. What more can you ask for in a gameday ad/telenovela?

Eos fragrance x Netflix fake-out

In a nod to Netflix’s Is It Cake, this body spray spot from Eos has contestants guessing if the scent is real or if it’s coming from a dessert.

Uber Eats: Matthew McConaughey annoys Bradley Cooper

Uber Eats is back for the Super Bowl LX ad run, and Eagles fan Bradley Cooper isn’t trying to hear what Matthew McConaughey has to say about… food. Will they come to blows? Check out Jerry Rice, Parker Posey and a few other celeb cameos.

Pepsi nabs a polar bear

We’ve seen polar bears working as mascots for years for one particular cola brand, but Pepsi’s blind taste test for this bear has it feeling disloyal.

Super freaky Svedka Vodka

Vodka brand Svedka has used robots in its ads before, but the company hits a couple of firsts with this commercial, which is soundtracked by Rick James’ Super Freak. It’s the first vodka ad to roll out during the Super Bowl in 30 years, and Svedka is the first brand to create its ad mostly with AI.

Pokemon’s big anniversary

Who’s hitting the big 3-0? Pokemon! In honor of the franchise turning 30, the company launched a new Super Bowl ad to jumpstart a year-long celebration. Lady Gaga and Jigglypuff duet, Charles Leclerc expresses his respect for Arcanine and a few other Pokemon favorites are spotlighted.

Be a hero with Ring

Ring reminds us that pets are truly family with its tender new ad that showcases its Search Party feature. AI tech to help find and reunite lost pets? Sounds like a win.

Ken travels solo with Expedia

No, Barbie isn’t tagging along with Ken on his jet-setting travel adventures. But he does have Expedia every step of the way in this gameday spot that may be one of our favorites this year. Go, Ken!

Toyota’s superhero belt

Not all commercials are made to be chaotic or cameo-filled surprises, and Toyota’s spot marries nostalgia, family and charm in this tender ad for the RAV4.

Kinder Bueno brings babies and merciful aliens together

Actor William Fichtner commands a space center in this Kinder Bueno ad with intergalactic travel, astronaut babies and cute little aliens that spare the planet for one reason.

Chris Hemsworth is creeped out by Alexa Plus AI

If you haven’t been able to picture Chris Hemsworth afraid of anything, here’s your chance to see how he reacts to Alexa Plus in his home. Amazon’s AI assistant works hard to prove its worth and trustworthiness in this ad.

Instacart in its disco era

Instacart recruited Ben Stiller and Benson Boone as disco-loving performers for a series of Super Bowl commercials to introduce its new app feature: Pick bananas the way you like them. Look out for these harmonizing brothers to drop another fresh ad during the big game.

Liquid I.V. and EJae of KPop Demon Hunters

Singer EJae goes a cappella with a version of Against All Odds in this Liquid I.V. teaser dubbed a Tiny Vanity Concert. Who can’t relate to singing in front of a mirror? The full ad will go live for game week.

Squarespace doesn’t want you to lose it

A laptop doesn’t stand a chance against Emma Stone in the Squarespace spot titled Unavailable. Stone teams up with director Yorgos Lanthimos for this black-and-white ad, in which the actor destroys a few laptops because the domain name emmastone.com is unavailable.

Pringles x Sabrina Carpenter

Sabrina’s new man is constructed entirely out of Pringles in the full Super Bowl spot for the stackable chip brand. He’s tall and mustached, but is he too much of a snack? You’ll have to watch for yourself to see if the pop star and her edible lover last.

Budweiser rings in a milestone

The beer brand gets sentimental in celebration of its 150th anniversary this year, and this ad features a Budweiser horse mascot taking flight. (No, that’s not Pegasus.)

Tree Hut

Tree Hut is known for its sugar scrubs and other body care products, and this goopy ad redefines what a smear campaign can look like.

Hims on rich people

Opening with a few lines and images about rich people and health care, this Super Bowl ad from Hims — narrated by Common — asks you to consider your own wellness.

Grubhub teases money… and food

Grubhub has delivered on its promise to “put their mouth where their money is” — and it’s not just about the food. Listen to what George Clooney has to say.

Fanatics Sportsbook and Kardashian Kurse

OK, technically Kendall Jenner isn’t a Kardashian, but you get the drift — and the rumors — with this cheeky Super Bowl ad from Fanatics Sportsbook, a sports betting platform.

Oikos powers you up

Kathryn Hahn impressively pushes a trolley uphill in this Oikos ad that also features Derrick Henry.

Michelob Ultra and Kurt Russell’s wisdom

Ante up for Kurt Russell and Lewis Pullman hitting the slopes in the newest Michelob Ultra big game spot, which also features two Olympians: T.J. Oshie and Chloe Kim.

Nerds hang with Andy Cohen

It might be weird seeing Andy Cohen outside of his Bravo hosting duties and trading banter with Real Housewives of any city, but here he is. Nerds Candy suits up and hits the red carpet with Cohen in this SB spot.

Bud Light keg roll

In this commercial, wedding attendees go after a Bud Light keg in a slow-motion scramble set to Whitney Houston’s I Will Always Love You. It’s Post Malone, Shane Gillis and Peyton Manning versus a particularly steep hill.

Universal Orlando Resort wants to change everything

Through the lens of four different visitors (and ads), the theme park is launching a campaign called This Changes Everything to encourage guests to take “transformative” vacations. You can follow one family in this “Lil’ Bro” Super Bowl spot.

Chris Stapleton and Traveller Whiskey make a moment

The singer strikes a chord in this whiskey ad, recalling Stapleton’s past Super Bowl performance when he was tapped to sing the National Anthem.

Too salty to party with Ritz?

This spot transports viewers to Ritz Island, but this isn’t a reference to the popular reality franchise, Love Island (as far as we can tell). Jon Hamm and Bowen Yang observe a party — and tantalizing Ritz crackers — from afar. They end up joining the function with a bit of help from Scarlett Johansson.

YouTube TV: Don’t support what’s ‘Meh’

Jason and Kylie Kelce contemplate the worst aspects of a world filled with meh in this ad for YouTube TV.

Lay’s potato tear-jerker

Who knew a potato chip ad could be so softhearted? It’s a family affair when it comes to farming potatoes, and sweet memories line the way to retirement.

Volkswagen wants you to jump around

You know what? Hell yeah to House of Pain’s Jump Around, no matter the context. In the VW Big Game ad, the auto company beckons you to get out, get up and get around.

TurboTax drama with Adrien Brody

To ease everyone into our least-favorite time of year, Adrien Brody acknowledges that death and taxes are sure things for us, but do they need to be painful?