This story was originally published in The Highlight, Vox’s member-exclusive magazine.

Tech

Samsung Galaxy S25 FE Delivers Flagship-Level Performance Without the Flagship Price Tag

Samsung’s Galaxy S25 FE, priced at $450 (down from $650), delivers the performance of a high-end model at a much lower price. The phone measures 161.3 by 76.6 by 7.4 millimeters and weighs just 190 grams, making it the thinnest and lightest Fan Edition device to date.

The build is extremely sturdy, thanks to an improved Armor Aluminum frame and Corning Gorilla Glass Victus+ on both the front and back, and an IP68 rating protects it against dust and water. Four premium colors are available: Navy, Jetblack, Icyblue, and White, all with a stunning Premium Haze finish.


The design is clean and modern, with minimal bezels and a camera module that adds some lift to the back. The 6.7-inch Dynamic AMOLED 2X display is in a class of its own, with FHD+ resolution, a snappy 120Hz refresh rate that adapts to the scenario (either 60Hz or 120Hz, depending on your needs), and screen brightness that can reach 1,900 nits. You get vibrant colors, deep blacks, and as much HDR10+ awesomeness as you can manage. Oh, and the Vision Booster keeps the screen looking fantastic even when you’re outside in direct sunshine.

Under the hood, you’ll find a top-tier Exynos 2400 processor (made on the same 4nm process as other high-end phones this year) paired with 8GB of memory. That’s a marriage made in heaven, especially for gamers, multitaskers, and anyone who simply wants a phone that doesn’t lag. A larger vapor chamber than before keeps temperatures under control, which matters if you play games for extended periods of time.

The battery capacity is remarkable at 4,900mAh, allowing for up to 28 hours of video playback in ideal conditions. In the real world, you can easily get a full day out of it, and when it runs low, 45W wired charging will quickly get it back up to speed. If you’d rather skip the cable, there’s 15W wireless charging, plus reverse wireless charging through PowerShare for topping up other devices.

The rear camera has three sensors: a 50-megapixel main sensor with optical image stabilization and a lovely f/1.8 aperture, a 12-megapixel ultra-wide sensor with a 123-degree field of view that can capture some amazing views, and a telephoto lens with 3x optical zoom (nice and steady). The front-facing camera gets a welcome bump to 12 megapixels, allowing you to shoot sharper selfies.

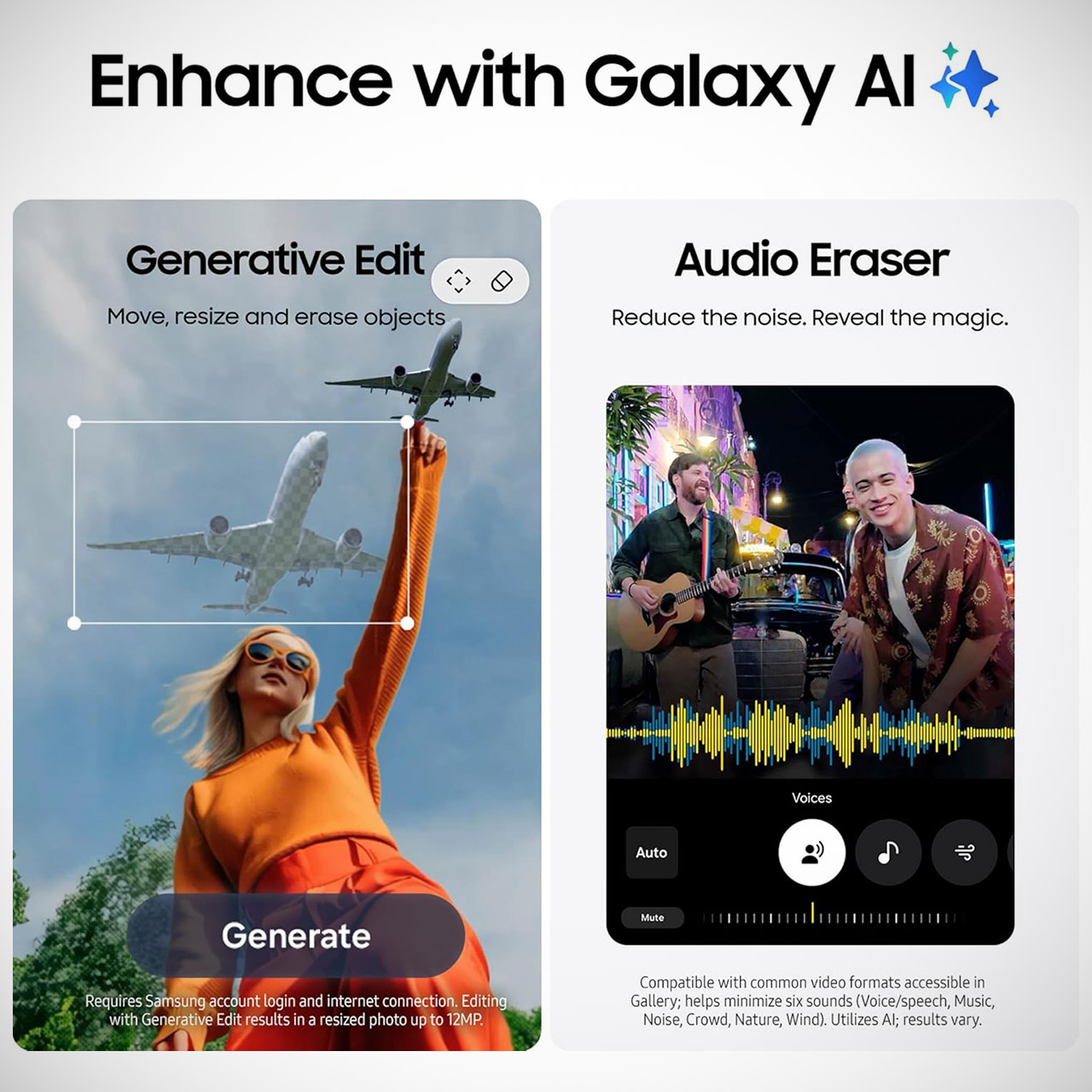

Then there’s Samsung’s ProVisual Engine, which uses some serious AI magic to boost your low-light photographs (Nightography), lets you move or remove objects in photos with Generative Edit, and adds new options for creating slow-motion clips with the Instant Slow-mo feature. Last but not least, the Exynos chip can still produce excellent 8K video footage with superb color and detail across a range of lighting conditions.

Tech

AI Boom Fuels DRAM Shortage and Price Surge

If it feels these days as if everything in technology is about AI, that’s because it is. And nowhere is that more true than in the market for computer memory. Demand for, and profitability of, the type of DRAM used to feed GPUs and other accelerators in AI data centers is so huge that it’s diverting supply away from other uses and causing prices to skyrocket. According to Counterpoint Research, DRAM prices have risen 80 to 90 percent so far this quarter.

The largest AI hardware companies say they have secured their chip supply as far out as 2028, but that leaves everybody else (makers of PCs, consumer gizmos, and everything else that needs to temporarily store a billion bits) scrambling to deal with scarce supply and inflated prices.

How did the electronics industry get into this mess, and more importantly, how will it get out? IEEE Spectrum asked economists and memory experts to explain. They say today’s situation is the result of a collision between the DRAM industry’s historic boom and bust cycle and an AI hardware infrastructure build-out that’s without precedent in its scale. And, barring some major collapse in the AI sector, it will take years for new capacity and new technology to bring supply in line with demand. Prices might stay high even then.

To understand both ends of the tale, you need to know the main culprit in the supply and demand swing, high-bandwidth memory, or HBM.

What is HBM?

HBM is the DRAM industry’s attempt to short-circuit the slowing pace of Moore’s Law by using 3D chip packaging technology. Each HBM chip is made up of as many as 12 thinned-down DRAM chips called dies. Each die contains a number of vertical connections called through-silicon vias (TSVs). The dies are piled atop each other and connected by arrays of microscopic solder balls aligned to the TSVs. This DRAM tower (well, at about 750 micrometers thick, it’s more of a brutalist office block than a tower) is then stacked atop what’s called the base die, which shuttles bits between the memory dies and the processor.

This complex piece of technology is then set within a millimeter of a GPU or other AI accelerator, to which it is linked by as many as 2,048 micrometer-scale connections. HBMs are attached on two sides of the processor, and the GPU and memory are packaged together as a single unit.

The idea behind such a tight, highly connected squeeze with the GPU is to knock down what’s called the memory wall: the energy and time cost of moving the terabytes per second of data needed to run large language models into the GPU. Memory bandwidth is a key limiter of how fast LLMs can run.
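Where do “terabytes per second” come from? Peak bandwidth is roughly the width of the memory interface in bits times the data rate of each pin, divided by 8 to convert bits to bytes. The sketch below is illustrative only: it treats the 2,048 connections mentioned above as a 2,048-bit data bus (an assumption, since real stacks also need power, command, and address connections), picks a hypothetical 8 Gb/s per pin, and assumes eight stacks per GPU. The result is a theoretical peak, not the spec of any shipping product.

```python
def peak_bandwidth_gbytes_per_s(bus_width_bits: int, gbits_per_pin: float) -> float:
    """Theoretical peak bandwidth of one memory stack, in gigabytes per second.

    Each data line moves `gbits_per_pin` gigabits every second, so the whole
    bus moves bus_width_bits * gbits_per_pin gigabits per second; dividing by
    8 converts bits to bytes.
    """
    return bus_width_bits * gbits_per_pin / 8


def package_bandwidth_tbytes_per_s(stacks: int, per_stack_gbytes: float) -> float:
    """Aggregate peak bandwidth of all stacks beside one GPU, in terabytes per second."""
    return stacks * per_stack_gbytes / 1000


# Hypothetical inputs: 2,048-bit bus, 8 Gb/s per pin, eight stacks per package.
per_stack = peak_bandwidth_gbytes_per_s(2048, 8.0)
total = package_bandwidth_tbytes_per_s(8, per_stack)
print(f"per stack: {per_stack:.0f} GB/s, package: {total:.1f} TB/s")
```

Sustained bandwidth in practice is lower than this peak, but the arithmetic shows why a wide, short connection right next to the GPU matters: widening the bus multiplies bandwidth without requiring each pin to run faster.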

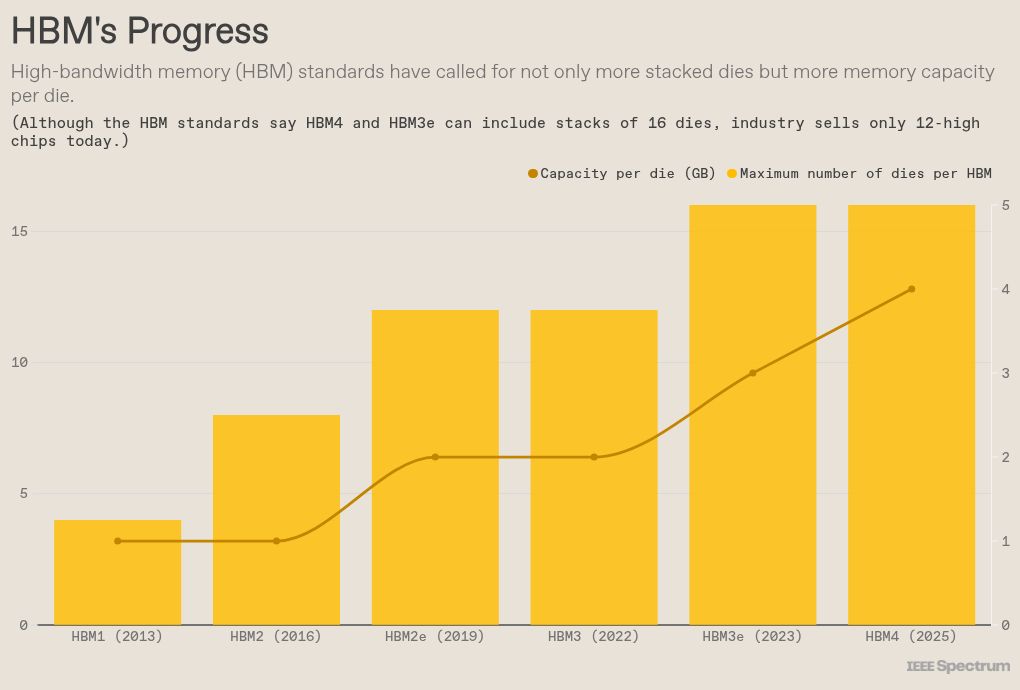

As a technology, HBM has been around for more than 10 years, and DRAM makers have been busy boosting its capability.

As the size of AI models has grown, so has HBM’s importance to the GPU. But that’s come at a cost. SemiAnalysis estimates that HBM generally costs three times as much as other types of memory and constitutes 50 percent or more of the cost of the packaged GPU.

Origins of the memory chip shortage

Memory and storage industry watchers agree that DRAM is a highly cyclical industry with huge booms and devastating busts. With new fabs costing US $15 billion or more, firms are extremely reluctant to expand and may only have the cash to do so during boom times, explains Thomas Coughlin, a storage and memory expert and president of Coughlin Associates. But building such a fab and getting it up and running can take 18 months or more, practically ensuring that new capacity arrives well past the initial surge in demand, flooding the market and depressing prices.
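The lag Coughlin describes is the classic setup for what economists call a cobweb cycle: producers size capacity using the price they saw when they decided to build, and by the time that capacity arrives, it overshoots or undershoots demand. The toy model below is a standard textbook illustration, not an analysis from Coughlin or the article; the linear demand and supply curves and all parameter values are made up, and one “period” stands in for the fab construction lag.

```python
def equilibrium_price(a: float, b: float, c: float, d: float) -> float:
    """Price at which planned supply exactly matches demand.

    Solves P = a - b * (c + d * P) for P.
    """
    return (a - b * c) / (1 + b * d)


def cobweb(a: float, b: float, c: float, d: float, p0: float, periods: int) -> list[float]:
    """Toy cobweb model with a one-period construction lag.

    Demand:  P_t = a - b * Q_t        (price that clears quantity Q_t)
    Supply:  Q_t = c + d * P_{t-1}    (capacity planned at last period's price)
    """
    prices = [p0]
    for _ in range(periods):
        quantity = c + d * prices[-1]    # capacity sized to the *old* price
        prices.append(a - b * quantity)  # price that actually clears that capacity
    return prices


# Hypothetical parameters: a boom-time price of 80 against an equilibrium near 55.6.
eq = equilibrium_price(100, 1, 0, 0.8)
for t, p in enumerate(cobweb(100, 1, 0, 0.8, 80, 6)):
    side = "above" if p > eq else "below"
    print(f"period {t}: price {p:6.2f} ({side} equilibrium {eq:.2f})")
```

In this model each period’s deviation from equilibrium flips sign and is scaled by b * d: below 1 the boom-and-bust swings damp out, above 1 they grow. The real DRAM market has many more moving parts, but the alternating glut and shortage comes from the same lag.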

The origins of today’s cycle, says Coughlin, go all the way back to the chip supply panic surrounding the COVID-19 pandemic. To avoid supply-chain stumbles and support the rapid shift to remote work, hyperscalers (data center giants like Amazon, Google, and Microsoft) bought up huge inventories of memory and storage, boosting prices, he notes.

But then supply became more regular and data center expansion fell off in 2022, causing memory and storage prices to plummet. This recession continued into 2023 and even resulted in big memory and storage companies such as Samsung cutting production by 50 percent to try to keep prices from going below the costs of manufacturing, says Coughlin. It was a rare and fairly desperate move, because companies typically have to run plants at full capacity just to earn back their value.

After a recovery began in late 2023, “all the memory and storage companies were very wary of increasing their production capacity again,” says Coughlin. “Thus there was little or no investment in new production capacity in 2024 and through most of 2025.”

The AI data center boom

That lack of new investment is colliding headlong with a huge boost in demand from new data centers. Globally, there are nearly 2,000 new data centers either planned or under construction right now, according to Data Center Map. If they’re all built, it would represent a 20 percent jump in the global supply, which stands at around 9,000 facilities now.

If the current build-out continues at pace, McKinsey predicts companies will spend $7 trillion by 2030, with the bulk of that ($5.2 trillion) going to AI-focused data centers. Of that chunk, $3.3 trillion will go toward servers, data storage, and network equipment, the firm predicts.

The biggest beneficiary so far of the AI data center boom is unquestionably GPU-maker Nvidia. Revenue for its data center business went from barely $1 billion in the final quarter of 2019 to $51 billion in the quarter that ended in October 2025. Over this period, its server GPUs have demanded not just more and more gigabytes of DRAM but an increasing number of DRAM chips. The recently released B300 uses eight HBM chips, each of which is a stack of 12 DRAM dies. Competitors’ use of HBM has largely mirrored Nvidia’s. AMD’s MI350 GPU, for example, also uses eight 12-die chips.

With so much demand, an increasing fraction of the revenue for DRAM makers comes from HBM. Micron—the number three producer behind SK Hynix and Samsung—reported that HBM and other cloud-related memory went from being 17 percent of its DRAM revenue in 2023 to nearly 50 percent in 2025.

Micron predicts the total market for HBM will grow from $35 billion in 2025 to $100 billion by 2028—a figure larger than the entire DRAM market in 2024, CEO Sanjay Mehrotra told analysts in December. It’s reaching that figure two years earlier than Micron had previously expected. Across the industry, demand will outstrip supply “substantially… for the foreseeable future,” he said.
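To put Micron’s forecast in perspective, the quoted endpoints imply a compound annual growth rate. The check below assumes smooth compounding over the three years from 2025 to 2028; the forecast itself says nothing about the path in between.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Micron's forecast as quoted above: $35 billion (2025) to $100 billion (2028).
rate = implied_cagr(35, 100, 2028 - 2025)
print(f"implied growth: {rate:.1%} per year")  # prints "implied growth: 41.9% per year"
```

In other words, the HBM market would have to grow by roughly 40 percent every year for three years running to hit that target.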

Future DRAM supply and technology

“There are two ways to address supply issues with DRAM: with innovation or with building more fabs,” explains Mina Kim, an economist with Mkecon Insights. “As DRAM scaling has become more difficult, the industry has turned to advanced packaging… which is just using more DRAM.”

Micron, Samsung, and SK Hynix combined make up the vast majority of the memory and storage markets, and all three have new fabs and facilities in the works. However, these are unlikely to contribute meaningfully to bringing down prices.

Micron is in the process of building an HBM fab in Singapore that should be in production in 2027. And it is retooling a fab it purchased from PSMC in Taiwan that will begin production in the second half of 2027. Last month, Micron broke ground on what will be a DRAM fab complex in Onondaga County, N.Y. It will not be in full production until 2030.

Samsung plans to start producing at a new plant in Pyeongtaek, South Korea, in 2028.

SK Hynix is building HBM and packaging facilities in West Lafayette, Indiana, set to begin production by the end of 2028, and an HBM fab it’s building in Cheongju should be complete in 2027.

Speaking of his sense of the DRAM market, Intel CEO Lip-Bu Tan told attendees at the Cisco AI Summit last week: “There’s no relief until 2028.”

With these expansions unable to contribute for several years, other factors will be needed to increase supply. “Relief will come from a combination of incremental capacity expansions by existing DRAM leaders, yield improvements in advanced packaging, and a broader diversification of supply chains,” says Shawn DuBravac, chief economist for the Global Electronics Association (formerly the IPC). “New fabs will help at the margin, but the faster gains will come from process learning, better [DRAM] stacking efficiency, and tighter coordination between memory suppliers and AI chip designers.”

So, will prices come down once some of these new plants come online? Don’t bet on it. “In general, economists find that prices come down much more slowly and reluctantly than they go up. DRAM today is unlikely to be an exception to this general observation, especially given the insatiable demand for compute,” says Kim.

In the meantime, technologies are in the works that could make HBM an even bigger consumer of silicon. The standard for HBM4 can accommodate 16 stacked DRAM dies, even though today’s chips use only 12. Getting to 16 has a lot to do with chip stacking technology. Conducting heat through the HBM “layer cake” of silicon, solder, and support material is a key limit both on stacking higher and on repositioning HBM inside the package to get even more bandwidth.
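A quick die count shows why taller stacks matter for silicon demand. The sketch assumes a GPU package keeps eight stacks (as the B300 and MI350 described above do) and that every stack grows from 12 to the 16 dies HBM4 allows; actual HBM4 products may use different stack counts or heights, and base dies are not counted.

```python
def dram_dies_per_package(stacks: int, dies_per_stack: int) -> int:
    """Total DRAM dies in one GPU package (base dies not counted)."""
    return stacks * dies_per_stack


today = dram_dies_per_package(8, 12)   # eight 12-high stacks, as described above
taller = dram_dies_per_package(8, 16)  # hypothetical: eight stacks at HBM4's 16-die limit
print(today, taller, f"{taller / today - 1:.0%} more dies")  # prints "96 128 33% more dies"
```

Under these assumptions, every GPU shipped would consume a third more DRAM dies than today’s designs.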

SK Hynix claims a heat conduction advantage through a manufacturing process called advanced MR-MUF (mass reflow molded underfill). Further out, an alternative chip stacking technology called hybrid bonding could help heat conduction by reducing the die-to-die vertical distance essentially to zero. In 2024, researchers at Samsung proved they could produce a 16-high stack with hybrid bonding, and they suggested that 20 dies was not out of reach.


Tech

The end of flu is closer than you think, and this bad season shows why

Let’s start with the bad news.

There’s a decent chance, perhaps as high as 11 percent if you’re unvaccinated, that some time over the course of this winter, you’ll be overcome with chills, followed by extreme fatigue, body aches and cough, and culminating in a sudden spike in fever. Congratulations: you have the flu.

Every winter in the US has its share of flu cases, but this season is shaping up to be particularly bad. Early this week the Centers for Disease Control and Prevention put the flu season in the “moderately severe” category, with an estimated 11 million illnesses, 120,000 hospitalizations, and 5,000 deaths so far. Here in New York, where I live, the city kicked off 2026 by setting records for flu-related hospitalizations.

While what we’re experiencing is not a “super flu,” it is a particularly bad one, thanks in part to the emergence of a subgroup of the well-established H3N2 flu virus called subclade K. It carries a bunch of mutations that seem to have rendered the current flu vaccine somewhat less effective. (Though far from completely ineffective; more on that below.) Nor does it help that only around 44 percent of US adults have taken the flu shot so far, well below vaccination rates before the Covid pandemic. The decline has been particularly sharp for children, who are more vulnerable to the flu, and that drop has resulted in higher-than-normal pediatric hospitalizations.

As bad as this season is shaping up to be, chances are most of us will suffer through it and then forget until the next year comes around. After all, it’s just the flu, right? But even normal influenza is far more than just a seasonal nuisance. The World Health Organization estimates that there are around 1 billion flu infections in a given year, which can lead to as many as 5 million severe cases and up to 650,000 flu-related respiratory deaths per year, mostly among the very young and the very old.

The burden of flu goes beyond those numbers: CDC research indicates that flu infections can raise the risk of heart attacks and strokes. Plus all those sick days add up to as much as 111 million lost work days in the US alone, while childhood infections lead to more school absences and a knock-on effect for parents forced to stay home.

Oh, and chances are decent that the (inevitable) next global pandemic will come from a mutant flu virus, just like past pandemics in 2009, 1968, 1957, and the granddaddy of them all, 1918, which killed at least 50 million people around the world.

So that’s the bad news. The good news? There are ways to protect yourself right now — and even more promising, glimmers on the scientific frontier of a world without flu.

What works — and what doesn’t — with the flu shot

The simplest way to keep safe is, of course, to get your flu shot. Like, right now: even though the flu season is well underway, it’s worth getting your shot if you haven’t yet. Early data from the UK found protection rates against hospital admission of 70 to 75 percent for children and 30 to 35 percent for adults. That’s normal: The standard flu vaccine isn’t great at preventing cases, but it is very effective in reducing the severity of illness. Throw in the fact that you can now easily get an at-home flu test and secure the antiviral Tamiflu early in an illness, and you do have the power to make your case milder.

But it is true that flu shots are not our most effective class of vaccine. That largely has to do with the nature of the flu, and how the shots are made.

Influenza is what you might call a “promiscuous” virus. Strains are constantly evolving, and can easily swap genetic material through a process called reassortment to create new, potentially more dangerous viruses. Because of that, international health officials have to select new vaccine strains every year, hoping they will match the strains actually circulating months later when vaccines are available for distribution.

If the dominant strain changes during those months, the vaccine will be less effective. And any vaccine that has to be taken over and over again on an annual basis is going to be a harder sell to the public, even before taking into account rising anti-vax sentiment.

There’s already progress being made to reduce the time between when a vaccine strain is selected and when it can be produced, chiefly by using rapid mRNA platforms rather than growing vaccines in eggs, as has been done for decades. But even better: What if it were possible to create a flu vaccine that was effective against a wide variety of different flu strains?

The dream of a universal flu vaccine

A “universal” flu vaccine is one that would be at least 75 percent effective against influenza A viruses and provide durable protection for at least a year (though ideally longer). In other words, it would be a vaccine that would act more like the almost perfectly protective measles vaccine and less like, well, a flu shot.

Such “universal” flu coverage would not be one single breakthrough, but a portfolio of strategies for outsmarting a virus that mutates faster than our annual vaccine calendar. The first bucket is universal (or universal-ish) vaccines: instead of training antibodies mainly against flu’s fast-changing hemagglutinin (HA) “head,” researchers are trying to steer immunity toward viral targets that mutate less.

One major approach focuses on the HA stem or stalk, a region of the virus that changes more slowly; early human trials of stem-focused designs suggest these vaccines can be safe and elicit broadly reactive immune responses. Another vaccine strategy uses mosaic/nanoparticle displays that present HA antigens from multiple strains at once, aiming to teach the immune system to recognize flu’s common features rather than this year’s exact variant; the government’s FluMos program is an example now in early clinical testing.

A third line leans on broader immune mechanisms: targeting neuraminidase (NA), the N in subtype names like H3N2, or boosting T-cell responses to internal proteins that rarely change, which may not always prevent infection but could make illness far less severe when the virus drifts.

There’s also the “universal without a vaccine” lane: prevention and treatment that don’t depend on your immune system’s memory. Cidara, a San Diego-based biotech company, has developed a long-acting preventive designed to provide season-long protection by chemically linking multiple copies of a neuraminidase inhibitor to a long-lasting antibody. Preclinical work has shown broad activity against influenza A and B, and the approach is promising enough that Cidara is now in the process of being acquired by pharma giant Merck.

Even more sci-fi: using gene editing to create all-purpose flu treatments. Scientists in Australia are working on using the gene editing tool Crispr to develop an antiviral nasal spray that could shut down a wide variety of flu viruses.

We shouldn’t have to live with the flu

Historically, the US hasn’t allocated nearly enough money to universal flu prevention research, though in May the Trump administration surprised scientists with plans to spend $500 million on an approach that relies on older vaccine technology. Except in those rare years when a flu pandemic boils over, we tend to treat flu as something we just have to suffer through.

But hundreds of thousands of people globally each year won’t survive their bouts with the flu, and millions more will suffer because of the viruses. We’ve eradicated smallpox and all but knocked out past killers like measles and mumps (well, provided we agree to take our vaccines). There’s reason to believe that influenza can be next.

A version of this story originally appeared in the Good News newsletter.

Tech

Is agentic AI ready to reshape Global Business Services?

Presented by EdgeVerve

Before addressing Global Business Services (GBS), let’s take a step back. Can agentic AI, the type of AI able to take goal-driven action, transform not just GBS but any kind of enterprise? And has it done so yet?

As with many new technologies, rhetoric has outpaced deployment in this case. While 2025 was “supposed to be the year of agentic AI,” it didn’t turn out that way, according to VentureBeat Contributing Editor Taryn Plumb. Leaning on input from Google Cloud and integrated development environment (IDE) company Replit, Plumb reported in a December 2025 VentureBeat post that what has been missing are the fundamentals required to scale.

Given the experience with large language model (LLM)-based generative AI (GenAI), this outcome is not surprising. In a survey conducted at the February 2025 Shared Services & Outsourcing Network (SSON) summit, 65% of GBS organizations responded that they had yet to complete a GenAI project. One can safely say that enterprise adoption of the more recently arrived agentic AI, including in GBS, is still in its very early stages.

The role of agentic AI in Global Business Services

There are good reasons, nonetheless, to focus on the tremendous potential of agentic AI and its application to the GBS sector.

Stripped of hype, agentic AI unlocks capabilities in the orchestration layer of software workflows that weren’t practical before. It does so through a range of techniques, including (but not requiring) LLMs. While enterprises may indeed be missing certain fundamentals needed to deploy agentic AI at scale, those prerequisites are not out of reach.

As for GBS and Global Capability Centers (GCCs), they have already been undergoing a makeover, from back-office extensions into increasingly strategic enterprise partners. Agentic AI is a natural fit because one of its standard use cases involves IT operations or customer-service agents, functionality already within the existing GBS and GCC wheelhouse.

So yes, agentic AI could potentially transform the GBS sector. Industry leaders can best move toward scaled deployment by taking a methodical approach.

Five steps for deploying agentic AI in GBS

Agentic AI is not the only game in town. As noted, there’s GenAI, used primarily for content creation. But broadening the scope, we can also point to predictive AI and document AI, used respectively for forecasting and data extraction. (Neither requires LLMs.) Exposure to preexisting AI bodes well for the future of agentic AI.

First, these flavors of AI are mutually supportive, stacked (rather than siloed) in modern systems. Agentic AI, in particular, is positioned to draw upon the others. Second, having lived through the hype cycle of GenAI, industry leaders may be inclined to take a more measured – and productive – approach to agentic AI.

Rather than rushing into a pilot, the industry would do well to prep carefully (steps 1-3). When combined with the right test project (step 4), these actions can pave the way for a scaled-up deployment of agentic AI (step 5):

Know thy processes. Business operations can be complicated. Consider a top global shipping and logistics firm, whose thousands of full-time employees at its seven GBS centers supported more than 80 processes involving highly complex, manually intensive workflows with wide regional variations. Only by first understanding existing processes and workflows does an organization like this stand a chance of being able to rethink or rework them.

Know thy data. Closely related are the data that workflows depend upon. How do these data flow from end to end? What do the pipelines look like? Where are the key APIs? Are the data structured or unstructured? Do the resources include data platforms (systems of record) and vector databases (context engines), both of which AI agents need to make good decisions? What kind of data governance and security prevail? How might those change in an agentic AI scenario?

Identify the problem. In the case of the shipping firm mentioned above, the complexity and variation of the workflows, as well as their manual intensity, exposed it to significant costs, lapses in service level agreements (SLAs), poor customer experience and heightened compliance and legal risks. Once named, a problem logically becomes a potential use case with discrete objectives.

Pilot an operating model. Options include consolidating efforts in a Center of Excellence (COE), democratizing development through citizen-led approaches, and partnering through Build-Operate-Transform-Transfer (BOTT) models, among others. Without structural clarity, even promising AI pilots are difficult to extend beyond their initial domain. The model should also reflect reality. Agentic AI, which likely involves multiple parallel agents pursuing coordinated goals, is still constrained by environment, complexity, risks and governance.

Scale up. Successful pilots lead to their own next steps. Take the fragmented experience of a large multinational bank in Australia. After automating several non-core processes through an automation COE, the bank realized it needed to analyze and improve its most complex workflows. It selected an over-the-top software platform that enabled it to complete more than 100 discovery projects in under 14 months. Pilots thus may grow into enterprise-wide initiatives.

What agentic AI looks like at enterprise scale

Only scale can yield real impact. The shipping provider, with its seven GBS centers, ended up with technology capable of building data pipelines, digitizing complex documents, applying rule-based reasoning across country-specific exceptions and orchestrating work across teams. That foundation led to an AI-first transformation of about 16 initiatives, exponential growth in automation and significant efficiency gains.

By unleashing capabilities at the orchestration layer – enabling contextual perception, cross-domain collaboration, and autonomous action aligned with governance – agentic AI can turbo-charge operations, both AI and human.

Consider a procurement process. While document AI can extract data from purchase orders, obviating certain manual checks, an AI agent could also evaluate vendor risk, cross-reference compliance standards, verify budget availability and even initiate negotiation while keeping audit logs for regulatory reporting. In a financial advisory scenario, while predictive AI can analyze trends, an AI agent could take further action, assisting professionals in particular business units on targeted strategic investments.
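The article does not describe an implementation, so the following is only a hypothetical Python sketch of the pattern in the procurement example: an agent runs several checks on a purchase order, writes each step to an audit log, and hands the case to a human reviewer when a check fails rather than deciding on its own. The class names, the risk score, the 0.5 threshold, and the checks themselves are all invented for illustration; negotiation is omitted, and a real agent would query external systems rather than fields on an object. Nothing here reflects any vendor’s product.

```python
from dataclasses import dataclass, field


@dataclass
class PurchaseOrder:
    vendor: str
    amount: float
    vendor_risk: float  # hypothetical score: 0.0 (low risk) to 1.0 (high risk)
    compliant: bool     # stand-in for a compliance cross-reference result


@dataclass
class ProcurementAgent:
    """Hypothetical orchestration sketch: run checks, log each step, escalate on failure."""
    budget_remaining: float
    max_risk: float = 0.5
    audit_log: list[str] = field(default_factory=list)

    def _log(self, po: PurchaseOrder, step: str, ok: bool) -> bool:
        # Record every check so the decision trail is available for reporting.
        self.audit_log.append(f"{po.vendor}: {step} -> {'pass' if ok else 'fail'}")
        return ok

    def process(self, po: PurchaseOrder) -> str:
        checks = [
            ("vendor risk", po.vendor_risk <= self.max_risk),
            ("compliance", po.compliant),
            ("budget", po.amount <= self.budget_remaining),
        ]
        # Log checks in order and stop at the first failure, leaving the call to a human.
        for step, ok in checks:
            if not self._log(po, step, ok):
                return "escalate to human reviewer"
        self.budget_remaining -= po.amount
        self.audit_log.append(f"{po.vendor}: approved")
        return "approved"


agent = ProcurementAgent(budget_remaining=10_000)
print(agent.process(PurchaseOrder("Acme", 4_000, 0.2, True)))    # approved
print(agent.process(PurchaseOrder("Globex", 8_000, 0.1, True)))  # escalated: over budget
```

The escalation path is the design point: the agent handles routine approvals end to end but stops and defers whenever a rule fails.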

Note that the agent isn’t replacing human judgment, but extending it, ensuring decisions are made faster, more consistently and at scale.

From standalone automation to agentic ecosystems in GBS

GBS is uniquely positioned to lead the enterprise into the agentic AI era. By design, GBS sits at the intersection of processes and data across multiple business units. Finance, HR, supply chain and IT all flow through the shared services model. This central vantage point makes GBS an ideal launchpad for creating agentic AI ecosystems.

An ecosystem differs from standalone automation. Agents don’t perform tasks in isolation. Rather, they work as part of an interconnected system. They share insights, learn from one another and coordinate to optimize outcomes at the enterprise level. Deployed within a GBS or GCC, agentic AI can accelerate the center’s ongoing transformation, enabling it to leapfrog incremental automation and operate at the level of end-to-end process orchestration.

N. Shashidar is SVP & Global Head, Product Management at EdgeVerve.

Sponsored articles are content produced by a company that is either paying for the post or has a business relationship with VentureBeat, and they’re always clearly marked. For more information, contact sales@venturebeat.com.

Tech

Can AI pass this test? School districts start projects backed by Microsoft and Gates Foundation

REDMOND, Wash. — For more than three years, much of the focus on AI in education has been on the implications of handing students what amounts to a technological cheat code.

But what if this disruptive force could be used to improve education instead?

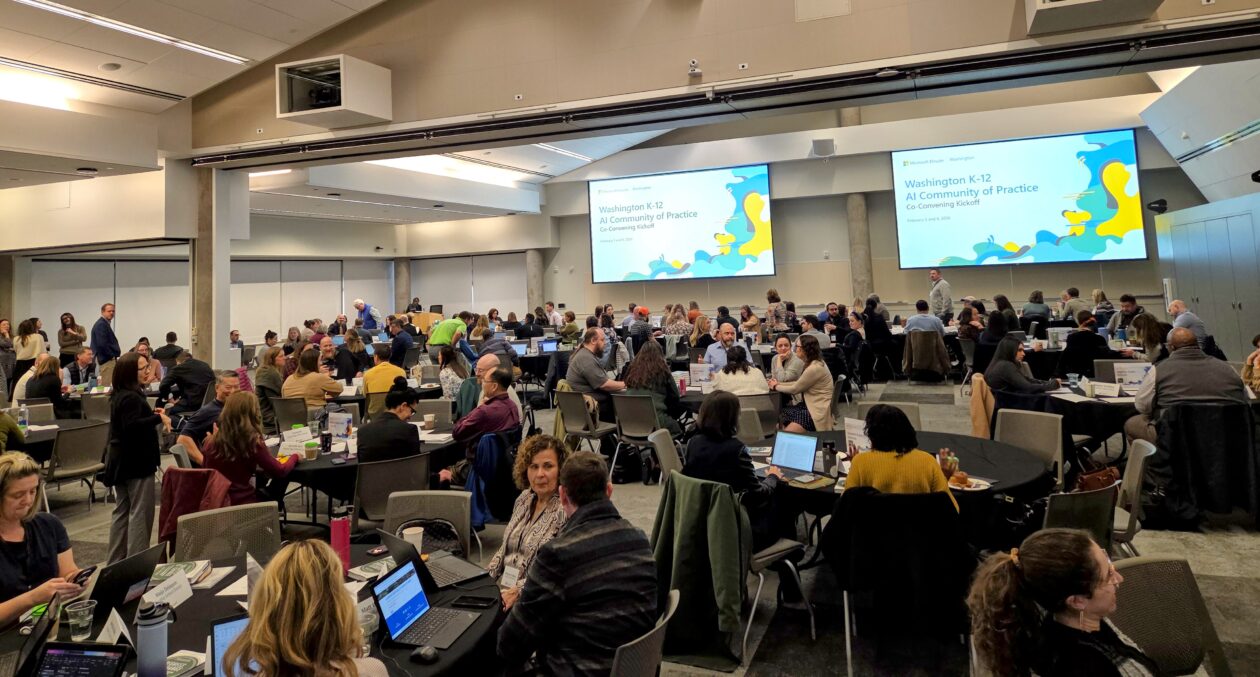

That’s the idea behind an effort now getting under way in Washington state. Last week, school districts gathered at Microsoft in Redmond for the start of a two-year “community of practice” focused on AI in education. More than 150 educators and administrators filled the room.

The event brought together two programs. Microsoft’s Elevate Washington initiative, announced last October, awarded $75,000 grants to 10 districts to plan and implement AI projects. The Gates Foundation is funding a separate cohort of 10 districts focused on AI infrastructure and data systems.

The Microsoft Elevate grants also include up to $25,000 in funding for technology consulting. The districts are expected to share what they learn with each other and across the state.

The focus is on practical applications, such as AI-powered tutoring in Bellevue, K-12 literacy frameworks in Highline and Quincy, and chatbots for students and families in Kennewick.

IEPs in Issaquah

In Issaquah, the goal is to use AI to help special education students manage the psychological burden of moving from teacher to teacher with an individualized education plan (IEP).

The project was inspired by listening sessions with high school students who receive special education services. It can be stressful and burdensome for students to explain their needs to each new teacher and to make sure their accommodations and goals are being met.

Dr. Sharine Carver, the district’s executive director of special services, said the goal is to “empower students, reduce that psychological burden and put them in the driver’s seat of really understanding their IEP and being able to advocate for themselves.”

Diana Eggers, the district’s director of educational technology, said the IEP project is different in that it goes beyond seeking efficiency in existing activities to instead build AI for a new purpose.

“How can we use AI to reshape what we do?” Eggers said. “We’re not there yet, but we need to figure out how we can do that.”

An ‘unreal’ pace of change

All of the districts are navigating the unknown in one way or another. Jane Broom, senior director of Microsoft Philanthropies, who grew up in Washington public schools, told the group that they are on the front lines of an unprecedented transformation.

“This is the fastest change I’ve ever seen, and this company is one that changes constantly,” she said. “These last two or three years have been pretty unreal.”

The 10 Microsoft grantees range from Seattle, the state’s largest district with about 49,000 students, to Manson, a rural district in Chelan County with about 700 students. Collectively, the grantees serve about 17 percent of Washington’s K-12 students.

Broom pointed to a major gap in AI usage across the state, with more than 30% of the working-age population using AI in the Seattle region vs. less than 10% in some rural areas. Microsoft highlighted this divide when it launched the Elevate program last fall.

Early stages of understanding

The opening session on Thursday morning brought an additional reality check. National research presented at the event showed that even the most ambitious districts are still in the early stages and are struggling to answer a basic question: Is any of this actually working?

Bree Dusseault, principal and managing director at the Center on Reinventing Public Education at the University of Washington, presented findings from a national study of early-adopter school districts. Her team surveyed 119 systems (with 45 responses), interviewed leaders at 14, and profiled 79 for a database of districts pushing ahead on AI adoption.

The picture that emerged was mixed. Districts have moved quickly to put infrastructure in place, but significant gaps remain:

Infrastructure is largely in place. More than 80% of districts in the national survey have the technical basics like devices and broadband. About two-thirds have data privacy protocols and dedicated AI staff. Six in 10 have a formal AI policy.

Evaluation is lagging far behind. Only 24% of the surveyed districts have any system for measuring whether their AI efforts are working. Only 9% have updated learning standards to reflect new student competencies.

The work is overwhelmingly focused on teachers. Every early-adopter district in the study trains teachers and approves them to use AI. Fewer than half provide any training to students. Only 16% engage parents or families.

Students are already moving on their own. A separate RAND/CRPE survey from September 2025 found that 54% of students use AI for schoolwork, rising to 61% among high schoolers. But only 19% report getting any guidance on how to use it.

Most early adopters aren’t using AI to rethink education. About two-thirds of these districts are using it to do what they already do more efficiently. Another 30% are using it to support existing reform efforts. Only a handful are trying anything fundamentally new.

Addressing that last point is the idea behind the AI initiatives that got under way last week. The districts in the Microsoft program will work on their projects through the next school year, running through June 2027.

Tech

Tim Ho Wan goes back to basics to continue growing in Singapore

Instead of reinventing the wheel, Hong Kong dim sum chain Tim Ho Wan is doubling down on the basics

Eateries have had a tough couple of years. In 2024, Singapore saw its highest number of F&B business closures in 20 years, with over 3,000 establishments shutting their doors. The trend continued in 2025, with the Ministry of Trade and Industry reporting that 2,431 businesses closed in the first 10 months of the year.

Apart from local operators, international chains have also shrunk and even succumbed to this rapid pace of closures, with the likes of US’ Eggslut, England’s PizzaExpress and Taiwan’s Hollin proving that scale and recognition are no longer a safety net in Singapore’s F&B scene.

So when Vulcan Post received an exclusive invitation to join a media tour and witness the opening of Tim Ho Wan’s tenth store in Hong Kong last week, it was admittedly easy to dismiss the offer as “another store opening.” Through the tour, however, we found out how the brand’s strategy might be the right counter to Singapore’s tough F&B scene, starting with new products that pay homage to its classics.

Vulcan Post caught up with Yeong Sheng Lee, CEO of Tim Ho Wan, in an exclusive interview to understand how the business plans to continue thriving in Singapore’s cutthroat F&B scene, starting with their products.

Why Singapore is a tough nut to crack for international brands

Having managed multiple F&B brands in Southeast Asia for almost a decade, Yeong Sheng Lee knows just how challenging the food scene really is, especially in Singapore.

While Singapore presents itself as an open market for foreign brands, multiple factors can lead to costly miscalculations that leave even the biggest brands struggling. Lee acknowledged that challenges in the market have increased since COVID-19, including rising operational costs, labour shortages and customers with high standards for both food quality and value.

“What I actually mean by value is that not everyone is looking for things that are cheap, and there’s a clear difference. Cheap is when I always want to go for the lowest pricing, but value is where for the price that I’m paying, am I getting more than what I should actually be expecting?”

When asked if the pessimism shared by Singaporean netizens and entrepreneurs is impeding the local market, Lee remained optimistic that the brand would continue to grow its presence in the country for the long term rather than pulling back like many other chains.

“I wouldn’t actually say it’s pessimism. It’s just a phase that we actually go through, like every other market would actually have its highs and lows. No doubt, while it is actually challenging in Singapore for our team, we remain optimistic and confident about the Singapore market.”

But what is the secret to his confidence? It turns out to be simpler than one might expect.

Redefining value for the Singaporean market

Because the food industry is extremely trend-sensitive, Lee emphasised the importance of constant innovation to keep up with short attention spans. That innovation comes from seasonal items, ranging from limited-time-only menus to elevated versions of bestsellers.

For example, the success of Tim Ho Wan’s signature baked pork bun inspired a new item. Lee shared that the chefs set out to create a cousin to the bun and explored ingredients that could give it a black crust. They settled on truffle, following the success of truffle offerings worldwide.

“For the price that I’m paying, I’m getting a black truffle chicken bun, something which would be a strong indication of value to a lot of consumers. So value in this case is what are we actually putting into our products? What are the ingredients we’re using?”

This ethos also translates into their festive menus. For this year’s Chinese New Year menu, Tim Ho Wan introduced limited edition dishes, such as steamed scallops—an ingredient not often found in fast casual chains—deep-fried nian gao (rice cake) and steamed chicken in wine broth. Localised offerings, including Bak Kut Teh dumplings and Musang Wang durian sesame balls, also help to maintain relevance in the local market.

Lee also added that chefs play a major role in menu innovations, and they are constantly experimenting with new seasonal products based on on-hand customer research. This allows them to avoid introducing products that do not resonate with the local crowd, reducing risk in a market where failed launches are costly.

Beyond menu innovations, operations also matter. Lee pointed out that food delivery has been growing in Singapore, and the business has been curating its menu with products, including bento sets, that hold up better for delivery. Tim Ho Wan has also been refreshing its store layouts, as at its Westgate outlet, with more open spaces and brighter lighting.

While these initiatives seem mundane at first, they are also crucial for the brand’s survival in a time when many restaurants have been experiencing declining traffic.

Ensuring consistency even as the business scales up

As the brand continued to elevate its offerings, consistency was another factor that Tim Ho Wan had to get right, especially after the brand was acquired by Jollibee Group in Jan 2025.

Instead of giving Tim Ho Wan a total overhaul, the first thing Lee focused on was building on the brand’s strengths. He worked with the chefs and back-end operations teams to understand how the brand grew and thrived over a decade, from earning its first Michelin star a year after opening to expanding to 11 markets across Asia, Australia and the US.

Leveraging Jollibee’s expertise, Lee helped the company streamline its operations across company-owned establishments, joint ventures and franchises. He also introduced the Experts Chef Program, a training programme in which chefs from all markets come to Hong Kong to learn Tim Ho Wan’s recipes and replicate them to the same standard.

To verify this on the ground, Vulcan Post also spoke to Chef Cheung Yit Sing, Tim Ho Wan’s Global Product Innovation Director, to learn more about the training process and how the brand’s foundations are maintained.

From working at pushcart restaurants to cooking at hotels and conventions, Chef Cheung has been making dim sum since 1982 and is now in charge of teaching Tim Ho Wan’s recipes to other chefs. He shared that, as the creator of a given dish, he starts every training session with a lecture, followed by a demonstration of how the dish is made.

The chefs then taste the dish to understand the standard they need to hit before making it themselves. After learning the recipes, the chefs undergo a scored assessment to ensure they maintain the brand’s operational benchmarks.

“Currently, our passing mark is 90 out of 100, because the standard is really high, to ensure that they are able to match the quality when they leave,” Chef Cheung shared in Mandarin, adding that it takes at least a day for the chefs to learn two recipes.

Don’t fix what doesn’t need to be fixed

Globally, Tim Ho Wan recorded a 5.2% increase in revenue in Q3 2025 compared to the first half of the year, with encouraging results from newer market entries in the United States. Following the opening of its first company-owned outlet in Irvine, California, Lee shared that the US will be a key market for the brand and expressed his ambition to open 20 additional stores by 2028.

In Singapore, Tim Ho Wan saw 7% growth in the same period, and the brand has nine outlets in the country since entering it 13 years ago. While Lee revealed that the company is constantly looking for opportunities to open more locations, he also emphasised that the team is not rushing to open stores to meet timelines, an approach that differs from other big chains that overstretched themselves by expanding aggressively.

“We will continue looking at opportunities, but at the same time, we are actually investing in building our organisation’s capability and strengthening our systems and processes,” he explained. “It’s a combination of these factors that we continue to look at how we accelerate our growth in Singapore sustainably, because we are not in this for the short term.”

In a market where even well-known international names are struggling to stay afloat, Tim Ho Wan’s strategy stands in contrast to the churn. Rather than expanding aggressively or reinventing its identity, the brand has focused on offering both classic and modern versions of dim sum while staying intentional about expansion.

Tech

After Years Reporting on Early Care and Education, I’m Now Living It

In August 2019, I walked into an early learning center in Philadelphia with a blank reporter’s notebook, a camera and a whole lot to learn.

Prior to that, I’d covered K-12 education and a bit of higher ed. The worlds of child care and early childhood education were foreign to me. I didn’t know the lingo or the layout. And, as I’d learn moments later, I didn’t have the slightest idea what it entailed to care for and engage babies and toddlers.

Fast forward six years, and now my favorite part of my work as an education journalist is meeting and writing about young children and their caregivers.

It wasn’t long after that program visit in Philly that I began to feel this way. Back then, few news outlets consistently published stories about the early years. EdSurge won grant funding to cover early childhood, and because no one on staff had covered the field before, we were given a sizable budget for travel. The idea was that I’d learn the beat in context. I went to early care and education programs all over the country — in homes, centers, schools and churches. I saw what an early learning environment looked like. I heard the sounds — oh, the glorious sounds of laughter and squeals of delight, the tears of a toy snagged unjustly or an unwanted nap. I smelled the smells. I noted the physicality of the job. I watched closely enough to realize that, as the children played — whether inside or outside, independently or in groups, structured or unstructured, real or imaginative — they were developing critical life skills.

From Utah to Ohio to New Jersey, I was filled with wonder during those initial months on the early childhood beat. I loved watching the way young children think and move about the world. I could not believe the depths of patience their teachers had. I puzzled over how, despite everything I learned about the significance of the early years for brain development and long-term success, child care was sorely underfunded, leaving families, educators and kids to figure it out for themselves.

It is one thing to write about babies’ brain development and skill acquisition, to cover the backwardness of the U.S. early care and education system, to report on the impossible choices parents are asked to make. It’s another to live it.

When I became pregnant with my first child in 2024, I told my husband that, as soon as we heard the baby’s heartbeat, I wanted to begin our child care search.

We hadn’t even told most friends and family when we began touring early learning centers in Denver. I expected to join long waitlists. I expected it would become our biggest or second-biggest expense, after housing. I’d been writing about these realities for years, after all.

But even I was shocked to be told, by more than one program director, that they likely wouldn’t have a slot for our son — who was due in spring 2025 — until 2027 or 2028.

And when we eventually decided to pursue a nanny share, in which our child and another child receive care from the same nanny in one of the families’ homes, I was prepared for a high-stakes hiring process. But I didn’t realize, until I got into it, just how difficult it would be to find someone to whom I felt I could entrust the single most precious thing in my life. Or how conflicted I would feel to be at my desk, writing about other child care arrangements for other people’s kids, when I could hear my own baby laughing and crying and babbling right upstairs.

Then there is the baby himself.

I think back to what I didn’t know and what I assumed back in 2019, and I shake my head. Little kids don’t just come online one day, around age 4 or 5, even though that’s how the education system in America treats them.

Some weeks into his life, I watched my son discover his hands. And then I watched him use those hands to reach for a bell that hung over his playmat. After he figured out how to touch it, he learned to grasp it, and after he learned to grasp it, he mastered ringing it. Now, at 7 months old, he uses those hands to pick up board books and hold camping mugs and shake rattles and grab my face. He picks up foods like crusty bread and roasted carrots and strips of scrambled egg and brings them to his mouth to eat. I marvel.

I’ve heard experts explain for years that close caregiver relationships are what a child needs most in the first year of life. But in recent months, I’ve come to see firsthand how much comfort and encouragement and joy my husband’s and my presence provides our son. I see him look to us for reactions. Now that he’s crawling, he follows us from room to room. Now that he’s reaching, I know when he wants to be held. Now that he’s been in a nanny share for some time, I know that he’s built a relationship with the nanny because he lights up when she arrives for the day.

I can’t say for certain that early childhood reporting has made me a better mom. Perhaps, in subtle ways, some kernels of knowledge have carried over. But I feel quite sure — at the very least hopeful — that motherhood will make me a more perceptive reporter, keenly aware of the stakes in early childhood and more empathetic to those the field touches.

On that subject, this is my last piece as a senior reporter for EdSurge. It has been a great run, with nearly 300 published stories over more than seven years. I’ve covered K-12 and early childhood education here with enthusiasm and commitment, even amid company mergers, a global pandemic, layoffs, and many seasons of change in my personal life.

The early childhood beat has grown up a bit in that time too, with many major newsrooms now devoting full-time positions to the field.

EdSurge will continue to cover early care and education after my time here is up. And in my next chapter as a journalist, so will I. I expect our paths will cross again and again.

Tech

‘There is varied interest in different crafts at Remedy into investigating these AI tools’, says Remedy interim CEO, but confirms Control Resonant ‘does not use generative AI content at all’

- Control Resonant does not use generative AI content “at all”

- Remedy interim CEO Markus Mäki confirmed that there is “varied interest” in the tech at the company

- He adds that the company is following AI progression to see if anything can be used “ethically” that would “add player value”

Remedy interim CEO Markus Mäki has confirmed that the studio’s next major title, Control Resonant, does not utilize generative AI. However, he admits that there is interest in the tech at the company.

Speaking during Remedy’s most recent earnings Q&A (via Game Developer), Mäki discussed the divisive technology that has been seeping into every facet of the games industry over the past few years.

“I’m a big believer in player value—so doing things that really add something to the gameplay experience and player experience. I’m also a big believer in the creative people on our team and that they know the best ways to add that value. There is varied interest in different crafts at Remedy into investigating these AI tools,” Mäki said.

Despite there being “varied interest” in these tools, Mäki was firm when he confirmed that the Control sequel does not feature any use of Gen-AI, but that the company is following its progression to see if anything can be used “ethically” that would “add player value”.

“I can say that, for example, Control Resonant does not use generative AI content at all. But making far-reaching promises about the future is pretty hard at this point,” the interim CEO said.

“We are actively following the development [of generative AI tools] and seeing if there is anything that is really ethically in the right place, and is something that can add player value and that our teams want to use. Then, of course, that’s an easier decision.”

It’s getting more difficult to ignore the presence of Gen-AI in the video game industry.

The 2026 State of the Game Industry Report (via GameSpot) states that 52% of game developers believe generative AI is a threat to the industry, up from 30% last year, while only 7% said it had a positive impact.

One anonymous game developer even told surveyors of the report, “I’d rather quit the industry than use generative AI.”

Beyond the obvious ethical objections to using AI in creative projects that affect real artists, game developers also face the threat of layoffs as companies shift their focus to the technology.

The technology’s spread has even driven up the cost of RAM, which has had a knock-on effect on hardware stock.

In other related news, Sony has reportedly obtained a new patent for AI-generated podcasts that would be voiced by its PlayStation characters.

Last month, it patented AI technology that would take control of PlayStation games when the player gets stuck, along with a touchscreen controller that doesn’t feature buttons or thumbsticks.

Tech

London's Apple Regent Street to reopen on Valentine's Day

Apple has announced that its central London store refurbishment will be completed and Apple Regent Street is to reopen on Valentine’s Day.

In early January 2025, Apple announced that its Regent Street site was to be closed for unspecified refurbishment. It was part of Apple’s plans to remodel or open around 50 stores worldwide by 2027.

Apple did say that the closure, which began at 6:00 PM GMT on January 11, was temporary. It didn’t give a reopening date at the time, but it has now revealed that the store will open again at 10:00 GMT on Saturday, February 14, 2026.

Continue reading on AppleInsider.

Tech

Former Tesla product manager wants to make luxury goods impossible to fake, starting with a chip

The fake goods crisis cuts two ways. Luxury brands lose more than $30 billion a year to counterfeits, while buyers in the booming $210 billion second-hand market have no reliable way to verify that what they’re purchasing is genuine. Veritas wants to solve both problems with a solution that combines custom hardware and software.

The startup claims that it has developed a “hack-proof” chip that can’t be bypassed by devices like Flipper Zero, a widely available hacking tool that can be used to tamper with wireless systems. These chips are linked with digital certificates to verify the authenticity of the products.

Veritas founder Luci Holland has experienced life as both a technologist and an artist. She has worked in different artistic mediums, including mixed media painting and metal sculpture. She has also worked at Tesla as a technical product manager and has held several business development, community growth, and product management roles at tech companies and venture funds.

Holland noted that traditionally, luxury goods makers use various symbols or physical marks to authenticate their products. However, with the growing demand for these goods, counterfeiters have learned to create convincing copies of these marks along with high-quality fake certificates. These goods are often called “superfakes.”

Holland mentioned that she spoke with maisons — established luxury fashion houses — that said that some of their locations had to stop authenticating goods because fakes were becoming too convincing to reliably detect. She said that drawing on her experience in both the tech and art worlds, she wanted to solve the problem.

“For me, as someone who has a background in being a designer and then also has experience in tech, I saw this problem and thought about the different ways we could solve it. I think what’s truly innovative is we’ve used and combined elements from both hardware and software to create this solution that helps protect brands in this way to convey the information,” she said.

“When I think of counterfeiting and I think of the most iconic and legacy brands,” she added, “a lot of these brands have been around for over 100, 150 years. These brands deserve the most advanced protection to protect these designs.”

Veritas worked with different designers to create a chip that is minimally disruptive to the product creation process. The chip is the size of a small gem and can easily be inserted even after a product is made without compromising its integrity. The chip incorporates NFC, or Near Field Communication — the same short-range wireless technology used in contactless payments. This means you can tap your smartphone on the item to verify its authenticity.

Holland said that for security purposes, the startup developed a custom coil and a bridge structure. If someone attempts to tamper with the product, the chip goes dormant and hides the codes related to the product. On the software side, the product information is linked to the Veritas back end, which monitors scanning behavior to prevent fraud. The company also creates a blockchain-based digital clone of the product for possible digital art gallery shows or metaverse activities.

The company didn’t reveal who it is working with, but said that brands can use its software suite to get information about all the chipped products, add team members to manage items and add product information along with the product story — details that can also be used to connect with their community. The startup said that some partners use this to engage customers through exclusive invitations or early access to new products.

While the counterfeiting market is big, Holland thinks the industry still needs education about why robust tech solutions are necessary.

“It is shocking to see that some of the off-the-shelf solutions, like NFC chips that brands are using, are actually so vulnerable and could easily be bypassed. This is the one thing most people don’t know, and we want to educate the ecosystem to adopt safer solutions,” Holland said.

Veritas said that it raised $1.75 million in pre-seed funding led by Seven Seven Six, along with DoorDash co-founder Stanley Tang, skincare brand Reys’ co-founder Gloria Zhu, and former TechCrunch editor Josh Constine. The company plans to use the funding to expand its two-person team.

Seven Seven Six’s Alexis Ohanian said that he was impressed by Holland’s combination of design taste and technology expertise. He thinks that brands know fake goods are a problem and are constantly looking for robust solutions.

“It’s absolutely an arms race [against fake goods makers], but we’re used to fighting those and consistently winning in tech — and luxury brands need all the help they can get,” Ohanian said.

Tech

Allonic is rebuilding robotics from the inside out

Budapest-based robotics company Allonic has raised $7.2 million in a pre-seed round, marking what investors are calling the largest pre-seed funding round in Hungarian startup history. The raise was led by Visionaries Club with participation from Day One Capital, Prototype, SDAC Ventures, TinyVC, and more than a dozen angels from organisations including OpenAI and Hugging Face. Allonic’s $7.2m pre-seed matters because it breaks a quiet rule in Europe: that truly hard hardware problems are supposed to wait until later rounds, or later continents. This is early money going into the physical layer of robotics, not the AI wrapper around it,…

This story continues at The Next Web

-

Tech6 days ago

Tech6 days agoWikipedia volunteers spent years cataloging AI tells. Now there’s a plugin to avoid them.

-

Politics2 days ago

Politics2 days agoWhy Israel is blocking foreign journalists from entering

-

NewsBeat23 hours ago

NewsBeat23 hours agoMia Brookes misses out on Winter Olympics medal in snowboard big air

-

Sports4 days ago

Sports4 days agoJD Vance booed as Team USA enters Winter Olympics opening ceremony

-

Tech4 days ago

Tech4 days agoFirst multi-coronavirus vaccine enters human testing, built on UW Medicine technology

-

NewsBeat2 days ago

NewsBeat2 days agoWinter Olympics 2026: Team GB’s Mia Brookes through to snowboard big air final, and curling pair beat Italy

-

Business2 days ago

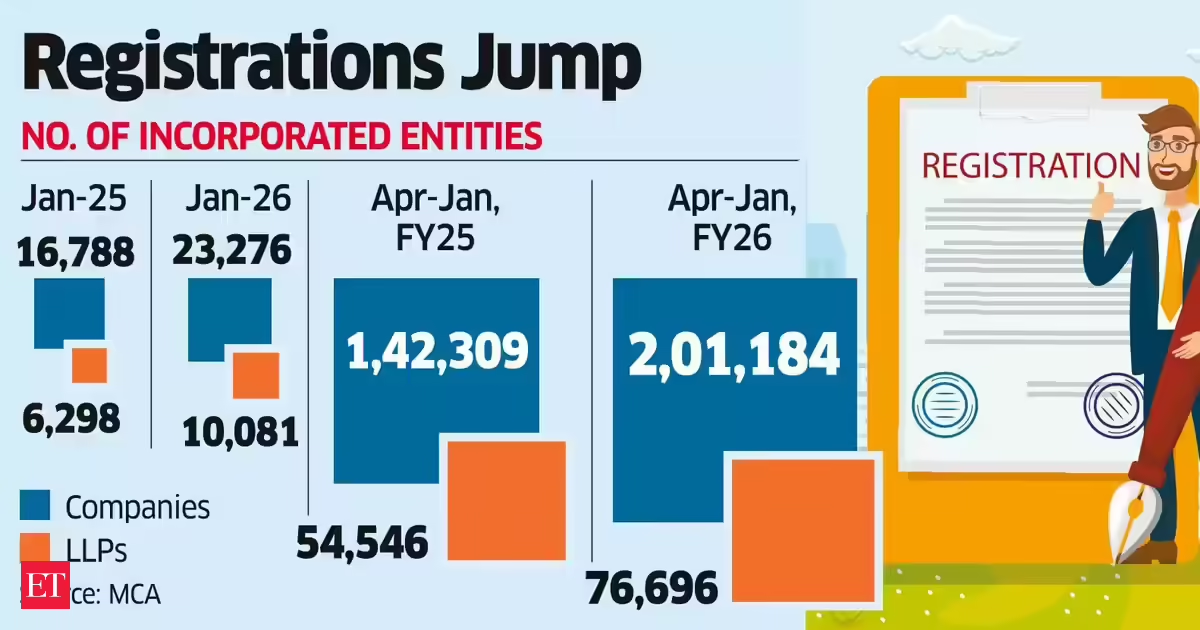

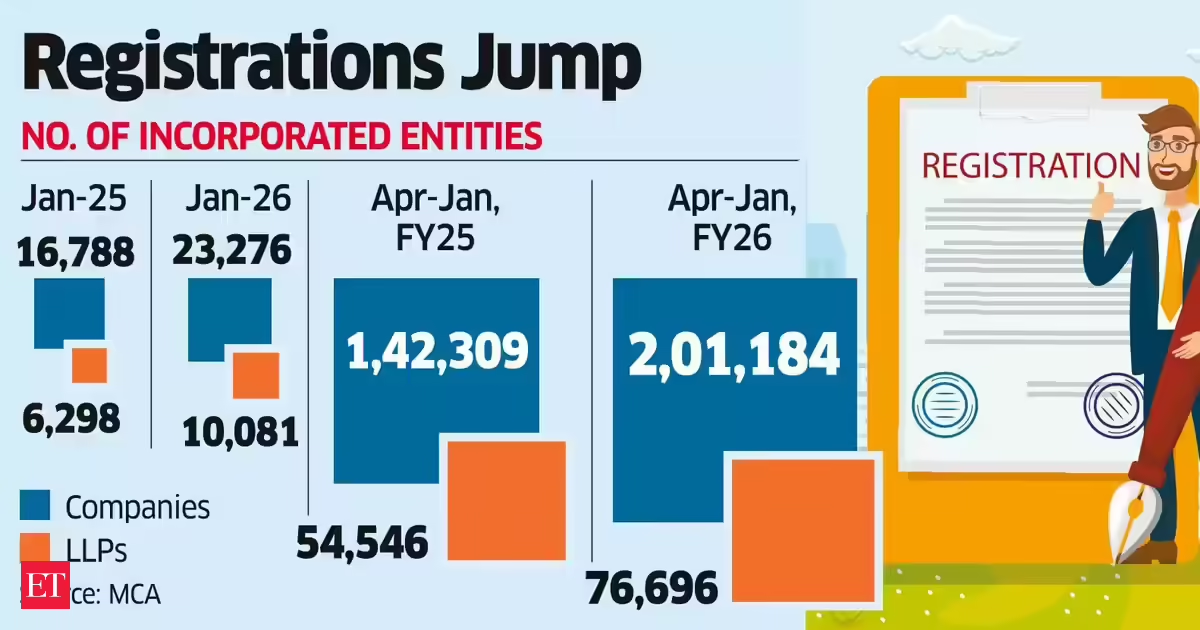

Business2 days agoLLP registrations cross 10,000 mark for first time in Jan

-

Sports2 days ago

Sports2 days agoBenjamin Karl strips clothes celebrating snowboard gold medal at Olympics

-

Sports3 days ago

Former Viking Enters Hall of Fame

-

Politics2 days ago

Politics2 days agoThe Health Dangers Of Browning Your Food

-

Sports4 days ago

New and Huge Defender Enter Vikings’ Mock Draft Orbit

-

Business2 days ago

Business2 days agoJulius Baer CEO calls for Swiss public register of rogue bankers to protect reputation

-

NewsBeat4 days ago

NewsBeat4 days agoSavannah Guthrie’s mother’s blood was found on porch of home, police confirm as search enters sixth day: Live

-

Business5 days ago

Business5 days agoQuiz enters administration for third time

-

Crypto World11 hours ago

Crypto World11 hours agoU.S. BTC ETFs register back-to-back inflows for first time in a month

-

NewsBeat1 day ago

NewsBeat1 day agoResidents say city high street with ‘boarded up’ shops ‘could be better’

-

Sports22 hours ago

Kirk Cousins Officially Enters the Vikings’ Offseason Puzzle

-

Crypto World3 hours ago

Crypto World3 hours agoBlockchain.com wins UK registration nearly four years after abandoning FCA process

-

Crypto World11 hours ago

Crypto World11 hours agoEthereum Enters Capitulation Zone as MVRV Turns Negative: Bottom Near?

-

NewsBeat5 days ago

NewsBeat5 days agoStill time to enter Bolton News’ Best Hairdresser 2026 competition