Flavio Briatore has become the latest F1 chief to question Ferrari’s decision to replace Carlos Sainz with Lewis Hamilton.

The grid for the 2025 F1 season will look very different when Hamilton pulls on the red overalls to drive for Ferrari alongside Charles Leclerc.

An agreement was in place little over a year ago for Hamilton to make the blockbuster switch for the 2025 season.

But last year saw Sainz put in a strong display that led to many questioning whether Ferrari made a mistake in letting him go.

Eddie Jordan called the decision to replace Sainz as ‘suicidal’ after an impressive year for Ferrari.

Lewis Hamilton will join Ferrari next seasonReuters

Lewis Hamilton will join Ferrari next seasonReuters

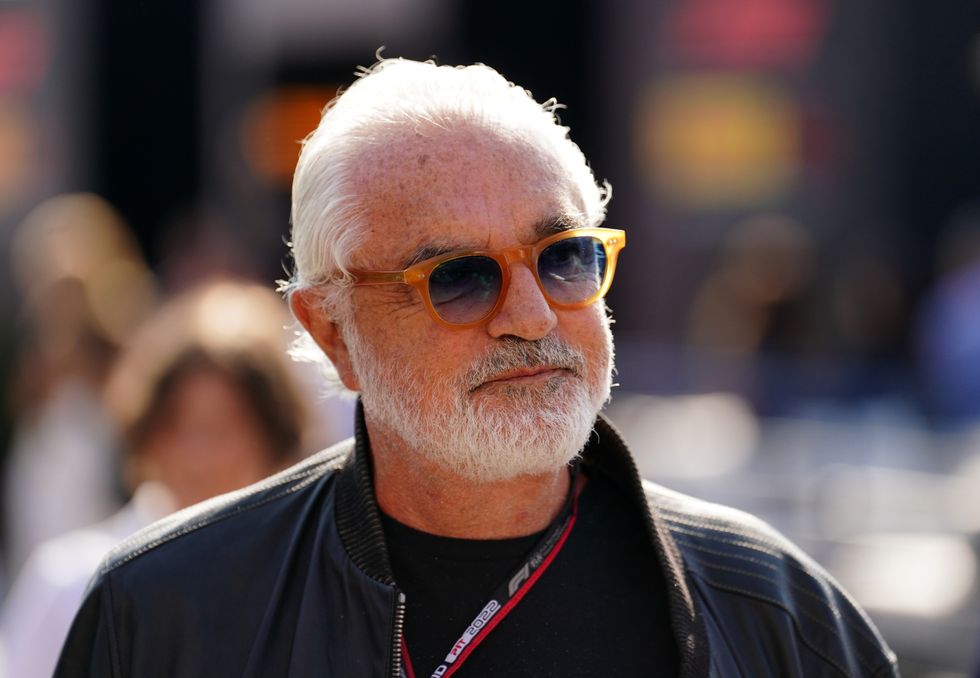

And Briatore, who is executive advisor for Alpine, also can’t understand why Ferrari made the change.

“It will be strange to see Lewis in a Ferrari,” Briatore told Sport Bild.

“Of course I respect such decisions, but I wonder if it makes sense.

“I don’t understand why this great duo [Leclerc and Sainz] has been torn apart.

“It is not up to me to pass judgment on that, but if I was the person in charge at Ferrari, I would not have contracted Hamilton.

“In principle, it’s good for Formula 1 if Lewis drives for Ferrari. And everything that’s good for Formula 1 is good for me too.

“It’s certainly good for television and the ratings too. Let’s wait and see. Time will tell how well he drives in the Ferrari.”

Jordan defended his original comments questioning Hamilton’s switch to Ferrari, pointing to the seven-time world champion’s claim that he doesn’t feel “fast anymore”.

“I got such sh*t for saying that I thought Lewis should not be going to Ferrari because of what he said,” Jordan added.

“When people say things like ‘I don’t think I’m fast enough anymore’, that registers in my head.

“I’m such a person that, yes, I believe in talent; yes, I believe in performance; yes I believe in speed.

Flavio Briatore has questioned Lewis Hamilton’s move to Ferrari

PA

“But I’m a psychological person. I like to know what’s going on inside that brain, because I think fundamentally what’s going on in there very often gets replicated back in the job, whether that’s driving a car, a truck, a train, a crane, it doesn’t matter. It’s how you approach the moment.

“Do I think, this time next year, we will all say: ‘Jesus, Lewis was amazing?’

“I hope I’m able to say that. But at the moment, I have to say Leclerc is quick.

LATEST SPORT NEWS:

Carlos Sainz was axed to make room for Lewis Hamilton

Reuters

“But the way we saw Sainz, who in their right mind [would replace him]? John Elkann must have rocks in his head to have made that decision.

“I’m particularly close to his father, Carlos Sr, and when I spoke to him to say what a great job he’d done, Carlos Sr was in tears.

“Carlos himself was in tears. He still doesn’t quite understand why he was let go.

“And to be quite honest, neither do I.”

+ There are no comments

Add yours