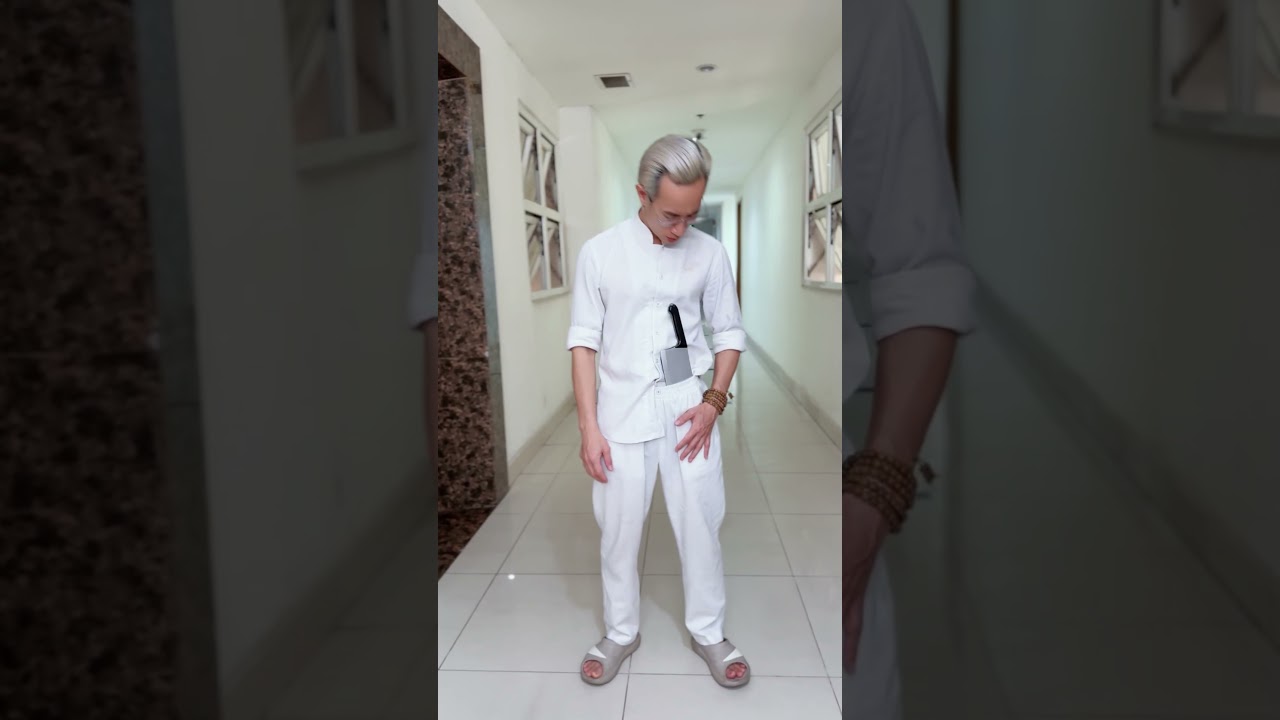

The Unexpected Ending #funny #shorts #comedy #entertainment

Thank you for supporting my channel.

Subscribe to my channel to see interesting and meaningful stories every day.

LIKE + SHARE + COMMENT

Your smile is my happiness.

Love you all!!!

#short #viralshorts #beneagle #kungfu #funny

source