Entertainment

General Hospital TWIST: Forbidden Cousin Romance & Unexpected Rivalries Ignite!

General Hospital has fans upset because the soap just had two cousins locking lips, which is ick. Plus, on top of the expected sizzle between Jason Morgan (Steve Burton) and Britt Westbourne (Kelly Thiebaud) and Nathan West (Ryan Paevey) and Lulu Spencer (Alexa Havins Bruening), we had two pairs of enemies getting much closer as the storm raged.

And this is really intriguing. So, another duo may also be falling apart already. We’re going to dig into the romantic breakups and shakeups as Port Charles keeps riding out this chilly winter weather event.

General Hospital Spoilers: Charlotte Cassadine and Danny Morgan’s Controversial Kiss

So, let’s start with the kissing cousins. I’m not sure if the headwriters forgot their history or just plain don’t care, but yeah, Charlotte Cassadine (Bluesy Burke) and Danny Morgan (Asher Jared Antonyzyn) are cousins, second cousins once removed to be accurate. But why go there at all? Because it’s icky to a lot of people.

If you remember when Sasha Gilmore (Sofia Mattsson) and Cody Bell (Josh Kelly) had feelings and a couple of kisses and then found out they were cousins, they ran in the opposite direction from each other, as they should have done. But Danny and Charlotte are playing kissy face when they would be attending the same family reunions.

Just real quick, in case you forgot or don’t know their lineage, Charlotte’s parents are Lulu and Valentin Cassadine (James Patrick Stuart). And Valentin’s parents are Helena Cassadine (Constance Towers) and Victor Cassadine (Charles Shaughnessy). That was revealed more than a year ago. And well-established General Hospital history should tell us Victor is the brother of Mikkos Cassadine (John Colicos).

General Hospital Spoilers: Family Tree Fun

And Mikkos had an affair and Alexis Davis (Nancy Lee Grahn) was the result of that affair. And she had Sam McCall (Kelly Monaco) who is Danny’s mother. So Danny’s great-granddad and Charlotte’s granddad are brothers. That makes them cousins and is making General Hospital fans both furious and disgusted.

Especially since Elizabeth Korte is known as the historian because she’s supposedly this absolute expert on characters and facts and history of the ABC soap. So, either this is a blind spot she doesn’t know, or Elizabeth thinks it’s okay for Charlotte to be kissing her cousin Danny, or Elizabeth Korte thinks fans are too dumb to notice. So, they decided to go with the cousin loving anyway. Let me know what you guys think.

Cody Bell and Molly Lansing-Davis Face Friction Over a Secret Manuscript on GH

All right, on to a couple that’s about to hit the skids before we get into two surprising enemy situations that are turning into sparks flying. So Outback accidentally dumped out Molly Lansing-Davis’ (Kristen Vaganos) purse and there was her manuscript. So Cody decided to read it.

As a writer myself, I’m not okay with somebody reading something I wrote without my permission, but that’s what we’ve got. Bottom line is that Cody read what Molly wrote and now he’s all salty about it. And we all know she was basing one of the main characters in her romance book on Cody.

Now, if you remember, at one point, I think Molly was writing the Codyesque character as the hero, but then Molly decided she didn’t like Cody during the whole Ava Jerome (Maura West) thing, which of course her sister was paying him to do. And I think Molly at that point was writing him as this idiotic sidekick.

But Molly might have pivoted back. But what’s clear in Cody’s reaction this week is he doesn’t like what Molly wrote that was clearly inspired by him, aka the cowboy in her new novel. So Cody is offended and he was giving Molly a nice warm place to stay to ride out the blizzard.

And Cody was also showing Molly a really good time in his nice warm sheets. But unless she can make things right with Cody, it’s about to get really chilly in the Quartermaine stables. All right, now let’s talk about these two enemies-to-lovers romantic vibes we got happening with two different couples across Port Charles.

Nathan West and Lulu Spencer Spark Romantic Rumblings in Port Charles

And of course, you know that Nathan and Lulu kissed, which we totally expected. She told Nathan that Maxie Jones (Kirsten Storms) is going to hate her for this because Lulu has feelings for Nathan. And they are not the enemies-to-lovers that I’m thinking about, just to be very clear on that, because they’re longtime friends.

Carly Corinthos and Valentin Cassadine on GH: From Deadly Enemies to Sudden Romance?

The first enemies-to-lovers I want to talk about is Carly Corinthos (Laura Wright) and Valentin. I’ve been hoping to see some sizzle between these two and now it might finally be manifesting. They are enemies though, or were. I can see Carly holding a little bit of a grudge, at least before, because Valentin tried to kill Jack Brennan (Chris McKenna) a couple of different times.

One time while they were in Germany, Valentin had Brennan down and would have finished him off, but Carly intervened, saved Jack’s life, and Valentin took off. That was before Carly knew what a lying creep Jack is, and before he recruited Josslyn Jacks (Eden McCoy) into the WSB behind her back.

Then, of course, Valentin tried to kill Jack with polonium in that champagne, but then Carly drank it instead of Brennan, and she nearly died. So, yeah, definitely some history there between Valentin and Carly, but now both of them are seeing each other very differently.

And I would not be surprised if while they’re riding out the storm, we may see Valentin and Carly also share a kiss, maybe more. I’d be totally down for it because I like their chemistry. But also because if Valentin and Carly get romantic, that’s going to be a real bitter pill for Jack Brennan to swallow when he finds out.

And I’m sure both of them would rub it in his face because while he says he loves Carly, I don’t buy it because his actions don’t really show that, at least not all the time.

Tracy Quartermaine and Martin Gray: An Unexpected Love Connection on General Hospital

All right. So, the other enemies-to-lovers possibility is between two people that well and truly despise each other. And that’s Tracy Quartermaine (Jane Elliot) and Martin Gray (Michael E. Knight). Yes, I know Tracy hates him and Martin feels the same way about her, but I feel like something’s there.

And you know that hate can turn to other feelings very fast on a soap. Tracy is lonely and so is Martin. And let’s be real, Tracy fell for Luke Spencer (Anthony Geary) after he conned her. So, it’s not a stretch to think that Tracy could also fall for Martin.

He’s handsome. He’s charming. And he’s a bit of a rogue. He’s a troublemaker. He doesn’t mind coloring in gray areas. And let’s face it, Tracy needs some love and companionship in her life. And now we’ve got Martin and Tracy hanging out during the storm at Drew Cain’s (Cameron Mathison) place.

I think it’d be a whole lot of soapy fun if Tracy winds up in a romance with Martin. If not a relationship, then some sort of situationship. I could see Tracy and Martin sneaking around because she might not want her family to know she’s getting flirty with awful Drew’s lawyer.

And if Tracy does get cozy with Martin, then he might actually go out of his way to help her get back her heirlooms that she wants so badly. Especially with Drew laid up from that stroke his wife Willow Tait (Katelyn MacMullen) gave him. It’s the perfect opportunity to get Tracy’s stuff back and Martin might help her, especially if they start getting cuddly.

General Hospital Recap: Romantic Shakeups for Jason, Britt, and More

So, coming out of this storm, we have Lulu conflicted about kissing Nathan, but she most definitely has feelings for him. And again, I don’t actually think that’s him. We’ll see. Charlotte and Danny need to tap the brakes, get some mouthwash, and never ever play tonsil hockey again with a cousin because they are both Cassadines.

And of course, Britt and Jason are going to get a lot closer as she spills the horror story that her life has become to him. And I expect Britt and Jason to get really steamy. As for Valentin and Carly, I’d be thrilled with a kiss, and Martin and Tracy getting together would be ratings gold.


The 13 best serial killer shows streaming on Netflix


As the streamer corners the market on murder content, here are the serious series worth your time.


Dog the Bounty Hunter’s Stepson Faces Life in Prison After Shooting Son

Duane “Dog the Bounty Hunter” Chapman’s stepson Gregory Zecca is facing life behind bars if convicted of aggravated manslaughter after fatally shooting his teenage son.

Florida’s Collier County Sheriff’s Office posted an update on the July 2025 incident via Facebook on Tuesday, February 3, stating that Zecca accidentally shot his child, Anthony, 13, in the family’s Naples, Florida, apartment while allegedly under the influence of alcohol. Local Southwest Florida news outlet WINK News was first to report on the update.

Per the Sheriff’s Office, Zecca, 39, was taken into custody “on a warrant for aggravated manslaughter of a child with a firearm and using a firearm while under the influence,” which could result in a life sentence.

A Collier County police investigation into Zecca, who is the son of Dog’s wife, Francie Chapman, from a previous marriage, found that Zecca allegedly “consumed alcohol over several hours at a local establishment, purchased more alcohol, and later used both alcohol and marijuana at a friend’s residence.”

Zecca and his son were “watching a UFC fight on TV at the time.”

Law enforcement also reported that Zecca “repeatedly handled a firearm in his son’s presence, practicing drawing it from his waistband and dry-firing.” The firearm had “initially been made safe by removing the magazine and clearing a live round from the chamber.”

Further detail stated that the magazine was put back into the firearm before Zecca “discharged a single shot” that struck Anthony.

Sheriff Kevin Rambosk stated in the update that the event “was a heartbreaking and preventable tragedy.”

Rambosk added, “Our detectives conducted an exhaustive and thorough investigation, examining every element of what happened through witness statements, forensic testing, subpoenas, search warrants, and more.”

Police officials also noted that deputies who arrived at the scene “detected the odor of alcohol on Zecca and observed marijuana in plain view.”

A subsequent toxicology report estimated Zecca’s “blood-alcohol concentration to be approximately 0.116 at the time of the shooting, above Florida’s legal limit of 0.08.”

Zecca was placed on a psychiatric hold following the shooting. A family representative told TMZ one day after the incident occurred that Zecca was “under observation on a 5150 hold,” which meant that he had been involuntarily detained for 72 hours after being deemed “a danger to themselves, to others, or gravely disabled,” per Clear Behavior Health.

The rep also told the outlet at the time that the hold was instigated because Zecca was “overcome with grief” and not because he felt guilty of a crime.

Dog released a statement via the outlet in the shooting’s aftermath. “We are grieving as a family over this incomprehensible tragic accident and would ask for continued prayers as we grieve the loss of our beloved grandson, Anthony,” Dog and Francie both wrote at the time.

Zecca also disabled his Instagram account after the incident. Prior to the account’s disappearance, Zecca had posted photos of himself with Anthony, including one image that showed the pair at a shooting range.

If you or someone you know is struggling or in crisis, help is available. Call or text 988 or chat at 988lifeline.org.


Why Taylor Swift Is Going to ‘Kill’ Travis Kelce for Breaking Item

Travis Kelce may have quite literally landed himself in fiancée Taylor Swift’s bad books.

During a Tuesday, February 3, teaser clip for the following day’s episode of Travis, 36, and older brother Jason Kelce’s podcast, “New Heights,” the Chiefs tight end fell back in his chair, landing with an audible crash on the ground.

A red-faced Travis, who had been enthusiastically laughing at a comment made by Jason, 38, regarding the trotting out of “best in show” dogs, joked after his fall, “Taylor’s going to kill me.”

As both brothers cracked up over the mishap, and Travis regained balance on his chair, Jason laughed even harder at his younger brother’s candid comment regarding Swift, 36.

A caption that accompanied the teaser clip, shared via X, read, “Warning: This week’s episode is dangerously funny. Tomorrow. Super Bowl preview with two special guests.”

Travis’ light-hearted comment comes just two weeks after he joked that the pop star would “kill” him for yet another reason. While sharing fan reactions to a fresh “New Heights” merch drop, a listener commented, “I can’t believe none of the new hoodies are called ‘New Heights of Man-Hoodie,’” which drew blank expressions from Travis and Jason.

When the duo’s intern reminded them that the comment was a reference to Swift’s “The Life of a Showgirl,” as “New Heights” is name-dropped on the album’s “Wood,” Travis offered a tongue-in-cheek response.

“I didn’t understand that,” he said. “Taylor’s gonna kill me for not knowing that.”

Despite the jokes, Travis and Swift, who got engaged in August 2025 after dating for two years, have enjoyed quality time together in recent weeks. Stepping out for a rare public date night in Los Angeles on January 12, the pair were seen heading to dinner at Beverly Hills restaurant Funke. Captured in photos obtained by TMZ at the time, Swift rocked a gray blazer while Travis wore a green, brown and tan bowling shirt as they exited a vehicle and entered the restaurant.

The outing comes amid speculation over the future of Travis’ football career, with the NFL star rumored to be edging towards retirement. While no official announcement has been made, his teammates seemed to kick off farewell celebrations during the January 4 Chiefs game against the Las Vegas Raiders.

According to an article published by local news outlet Kansas City Star after the game, which saw the Raiders win 14-12, third-string quarterback Chris Oladokun extended his hand out to Travis in the locker room. “Man, thank you,” Oladokun, 28, reportedly said to him.

Additionally, the outlet reported that linebacker Cole Christiansen also said to Travis: “Whatever happens, it’s been an honor playing with you.”

Travis said on the January 7 episode of “New Heights” that he’s looking forward to “being a regular human” once he’s done playing football. “Every season ends for me, I just put my feet up and I be a human, because I’ve been putting my body through the wringer for the love of it,” he told Jason during the show.

He continued, “I do enjoy playing football and the physical aspect of it, I think there’s something about feeling the wear and tear of the football season, just getting ready for a game knowing your body’s f***ing beat down. I think there’s something to it, it makes you just feel like a mangy animal that’s out here just finding a way to survive.”


Meghan Markle’s As Ever Products Given To Netflix Staff For Free

Netflix employees in Los Angeles are enjoying free products from Meghan Markle’s lifestyle brand, As Ever, which includes jams, candles, wine, and her signature flower petal sprinkles.

The products, tied to her Netflix series “With Love, Meghan,” reportedly remain at the offices mainly for promotional purposes after most inventory was moved to a warehouse.

Meghan Markle launched the brand in partnership with Netflix, and insiders say it continues to sell out quickly, with plans for global expansion.


Netflix Staff Taking Home Free Products From Meghan Markle’s Lifestyle Brand, As Ever

According to Page Six, Netflix staff in Los Angeles have been enjoying products from Meghan’s lifestyle brand, As Ever.

Two storage rooms at the streamer’s Hollywood campus reportedly hold jars of jam, candles, wine, and Meghan’s signature flower petal sprinkles, and staff are said to be taking the products home freely.

“Apparently, there are two storage rooms packed with As Ever product. They’re literally just giving it away to employees,” a source told the news outlet. “One (staffer) walked out with 10 products for free.”

Despite the giveaways, most of As Ever’s inventory was moved to a separate warehouse long ago. What remains at Netflix is primarily for promotional purposes, including gifting and sampling.


The presence of the products at the offices stems from Meghan’s partnership with Netflix, which helped launch the lifestyle range after she abandoned the original name, American Riviera Orchard, due to a trademark dispute.

The products were tied to her Netflix series, “With Love, Meghan,” which will not return for a third season after ratings dropped.


The Duchess’s Lifestyle Brand Denied Struggling To Sell After Glitch Reveals Massive Unsold Inventory

Last month, Meghan’s team denied claims that the business was struggling after a website glitch briefly revealed hundreds of thousands of unsold items: about 220,000 jars of jam, 30,000 jars of honey, 90,000 candles, 80,000 tins of flower sprinkles, and roughly 70,000 bottles of wine, including rosé, sauvignon blanc, and brut sparkling wine.

As Ever’s products carry premium price tags, too. The duchess’s jams and teas sell for $14, honey is $32 per jar, candles go for $64, rosé costs $35, and sparkling wine is $89 a bottle.

The brand’s current Valentine’s Day set combines chocolate, strawberry, and raspberry spreads, hibiscus tea, a water lotus and sandalwood-scented candle, and flower sprinkles for $185.


Reddit users discovered a glitch allowing them to calculate maximum stock levels by adding large quantities of products to their carts, but sources close to Meghan say the brand is preparing for international expansion, not struggling.

A source told People magazine that “the glitch that led to this data being revealed points to a business that isn’t just successful — it’s flying, literally off the shelf.”


Meghan Markle’s Lifestyle Brand Soars As Debut Rosé Sells Out

Insiders claim the Duchess of Sussex, who retains a first-look deal with Netflix alongside her husband, Prince Harry, is now focusing on expanding her lifestyle brand and may continue releasing occasional holiday specials.

During an interview for Bloomberg Originals’ “The Circuit with Emily Chang,” Meghan said her debut rosé sold out in under an hour, and the brand increased inventory for a second seasonal drop, which also sold quickly.

“When you sell out that quickly, actually, it’s a double-edged sword, because it’s an incredible thing to happen for any small business and any startup, and at the same time, you don’t get the same metrics and learnings about which products are the most coveted, because it’s all gone immediately,” she explained.

The Duchess Of Sussex Plans Global Expansion Of Her Business

Meghan, during her chat with Emily Chang, shared that the initial launch of products prompted a rapid scale-up due to the goods selling out quickly.

“[We] thought for sure that would at least last for a couple weeks,” she noted. “That sold out in a couple hours.”

Meghan continued, “And suddenly, the conversation goes from, at the start of this year, talking about a few thousand jars and lids, to ‘We need to do a purchase order of a million.’ And that’s a huge jump in just a few months of starting a business.”

When asked about global expansion, the royal swiftly confirmed, “Yes, absolutely.”


Meghan Markle’s Netflix Series May Not Return For A Third Season

While Meghan’s lifestyle brand grows and flourishes, her lifestyle series “With Love, Meghan” may not come back for a third season.

“It’s not returning as a series. There have been conversations about holiday specials, but there’s nothing in the works yet,” a source told Page Six.

A second insider stated that the Duchess of Sussex will instead focus on her lifestyle brand As Ever, while another noted that “people will see similar cooking and crafting” on her social media pages, but they will be “more bite-sized.”

Speaking to People about the lifestyle series, a source confirmed that there are “currently no plans for a third season.”


Savannah Guthrie pulls out of hosting 2026 Winter Olympics coverage amid ongoing search for missing mother


The “Today” anchor’s 84-year-old mother was reported missing Feb. 1.


Carrie Underwood Gets Emotional Over Postpartum Depression Song

Carrie Underwood was brought to tears during one particularly moving American Idol audition.

During the Monday, February 2, episode of the reality singing competition, Underwood and her fellow judges, Lionel Richie and Luke Bryan, listened to season 24 hopeful Hannah Harper perform her original song “String Cheese.”

After explaining that the song was written as a reflection of her experience with postpartum depression, Harper drew an emotional response from Underwood, 42.

“That’s about the most relatable song I think I’ve ever heard,” the American Idol season 4 winner, who shares sons Isaiah, 10, and Jacob, 7, with husband Mike Fisher, said. “You might be my favorite person that’s walked through those doors.”

Underwood continued, “You’re one that I’m going to think about when we leave here. I’m going to be rooting for you. I loved everything about everything you just did.”

The judging trio then approved Harper’s progression to the next round of auditions.

Harper’s song’s lyrics read, “Hot Wheels and little people under my feet / Babies crying, it’s pure chaos, but I don’t miss a beat / Always knew that a nine to five wouldn’t suit me very good / But my hours are extended, that’s just motherhood.”

Of her lyricism, Harper, a mother of three, told Underwood, Richie, 76, and Bryan, 49, that her words flowed after sharing a poignant moment at home with one of her children. “I was sitting on my couch wallowing … and you have boys, you know, everybody wants to touch you,” she explained. “I didn’t want to be touched. I was just having a pity party, praying that the Lord would calm my spirit.”

Harper continued, “My son kept coming up to me, ‘Mom, open this. Mom, open my cheese.’ I’m like, ‘Leave me alone with the dadgum cheese.”

Harper added that once she opened her son’s string cheese, she felt significant maternal responsibility. “I realized that God had put me in that place,” she told the judges. “That where I was in my house was the biggest ministry that I could ever have.”

Underwood said during a 2016 interview with Redbook magazine — when the country music singer was tackling toddler life — that working mothers need to “ask for help.” She told the outlet at the time, “Accepting help is hard for me, but I’m learning. Sometimes I feel guilty that this is my son’s life: We live on a bus and we’re in a hotel room and sometimes we’re in the middle of nowhere and it’s not so great. It’s not all glamorous. We have a nanny who helps out, especially when we’re on the road. But I’d feel guilty asking someone to watch him at home while I run to the grocery store.”

Three years later, Underwood took to Instagram to share further honest insight into her parenting journey, admitting that she can be “hard” on herself. A 2019 gym selfie included the caption, “I go into the gym and I can’t run as fast or as far. I can’t lift as much weight or do as many reps as I could a year ago. I just want to feel like myself again … for my body to feel the way that I know it can.”

After coming to the realization that she needed to go easier on herself, Underwood concluded at the time, “I’m going to take it day by day, smile at the girl in the mirror, and work out because I love this body and all it has done and will continue to do.”


“Full House” actress says she tried to 'grow an orange tree' in her stomach as a kid


Whatever happened to predictability? This episode of “How Rude, Tanneritos!” was not that.


Christian Bale 'would scream like crazy' while filming “The Bride!” to keep 'from going insane'


Bale reveals it took six hours to transform into Frankenstein’s monster — named “Frank” in this story — every day on set.


CBS pulls “60 Minutes” episode slated to rerun during Super Bowl after new contributor Peter Attia is named in Epstein files


The longevity influencer has apologized for his correspondence with convicted sex offender Jeffrey Epstein, but said he “never witnessed illegal behavior.”


A Major Romantic Reveal for Faith Leads to an Even More Intense Plot Twist

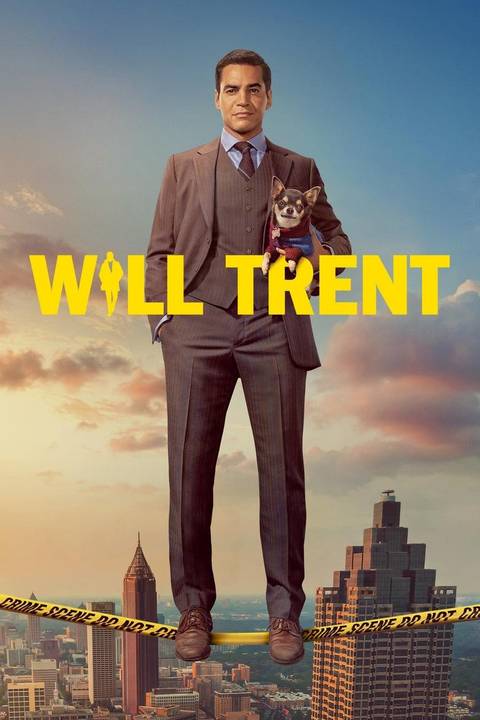

Editor’s Note: The following contains spoilers for Will Trent Season 4, Episode 5.

This week’s episode of Will Trent, Season 4, Episode 5, “Nice to Meet You, Malcolm,” switches things up by putting Faith (Iantha Richardson) front and center instead of Will (Ramón Rodríguez). While investigating the murder of a matchmaker, Faith expresses her recent cynicism due to a string of bad dates, only to then meet her dream guy.

“Nice to Meet You, Malcolm” balances Faith’s whirlwind romance with its two major cases, as well as a hilarious subplot of Heller (Todd Allen Durkin) and Franklin (Kevin Daniels) building a stroller for Angie’s (Erika Christensen) baby, before ending on a wild cliffhanger. The episode is a fun and intense installment that sets up some really exciting storylines to come, both in terms of a major case and in terms of some ongoing character-centered storylines.

In ‘Will Trent’ Season 4, Episode 5, The GBI Investigates the Murder of a Matchmaker

This week, the GBI investigates the murder of a professional matchmaker named Sawyer Jennings, who ran a business called Jennings Luxury. In the hours before Sawyer was killed, he called his business partner, Liv Somerman (Marguerite Moreau), several times. Faith brings Liv in for questioning, but Liv tells her that she and Sawyer had a good partnership and that the business was doing well. The investigation then takes Will and Faith to Anna Martello (Greyson Chadwick), a matchmaking client who’d met with Sawyer at a diner the night he was killed.

Anna tells Will and Faith that she’d recently been matched with a wealthy man named Brody Evans (Logan Michael Smith). Brody drugged and sexually assaulted Anna, and she told Sawyer, who’d confronted him and had planned to expose him. Brody has an alibi for the time that Sawyer was killed, and Will and Faith realize that Liv killed him. Brody wired Liv $100,000 to keep Sawyer from exposing him, so she killed her own business partner. Liv had gone to a lot of trouble to craft a fake alibi, but thanks to a street camera, Will and Faith are able to prove that she’s guilty.

In ‘Will Trent’ Season 4, Episode 5, The APD Investigates a Bank Robbery That Is More Than Meets the Eye

While the GBI looks into Sawyer’s murder, the APD investigates a bank robbery where one person was killed. The only evidence they have are photos of the robbers wearing animal masks, as well as a tattoo on the arm of someone wearing a cheetah mask. Ormewood (Jake McLaughlin) is now done with chemo, and this is his first case since returning to the field. He feels good – almost too good as he notices that his vision is better than it was before his tumor, and his senses are heightened.

The investigation into the tattoo takes Angie and Ormewood to one family, but they’re unable to narrow it down to one of the ten siblings. During the investigation, they have an elevator run-in with Faith and Will. It’s mostly pleasant, but Will doesn’t know how to be around Angie now, even though he’s still been chatting with Ava (Julia Chan). A hurt Will leaves the elevator while Faith and Angie are talking about the baby. Later, Angie and Ormewood finally find the owner of the bank vault, a man named Wyatt Fernsby (Marc Levasseur). Wyatt is attempting to end his life when they find him, but Angie and Ormewood save him.

Wyatt reveals that he works for Biosentia Pharmaceuticals. The company made him shred documents for work, but he kept a copy in his safe deposit box. Someone from the company found out that he had the copy, so they stole it. Afraid of what his former company would do to him, Wyatt tried to end his life. It’s not yet revealed what is in the documents, but it’s bad enough that Wyatt won’t speak about them without a lawyer present. The case is much more than a bank robbery – it was a company trying to hide its illegal activities by hiring an accomplished team of robbers to steal the evidence for them. Because of this, Angie and Ormewood then take this case to the GBI.

‘Will Trent’ Season 4, Episode 5 Gives Faith a New Love Interest – and Delivers a Shocking Twist

Faith goes to a hotel bar for a date with a guy she met on an app, but he gives her the ick before he even gets there. Faith cancels the date, but she is soon swept off her feet by a charming stranger named Malcolm (DeVaughn Nixon). Faith and Malcolm have the perfect night together. The only problem is, she gave him a fake name and job when they first met, and this lie could end up ruining everything between them. Malcolm just gets better the more Faith learns about him. He’s the wealthy owner of the hotel where they met, and he’s enamored with Faith.

Three dates in, things are looking promising for Faith and Malcolm. He puts in a lot of effort and money to make her feel special, and he remembers the little things that she tells him. For a while, it looks like Faith’s lie will be the thing to make this romance collapse. There’s also another exciting obstacle that this episode sets up. In the elevator, Ormewood teases Faith about spending two nights in a row away from home, but there’s a hint of something else beneath his teasing. Sure enough, while on a stakeout with Will, Ormewood and Will have a surprisingly deep talk. Will suggests that Ormewood’s heightened senses are his way of rediscovering who he is after his body failed him. The two joke about Faith’s new romance, but when Will says offhandedly that Faith is happy, Ormewood looks heartbroken when he asks, “She seem happy to you?”

This stakeout scene has two major reveals. Ormewood’s feelings for Faith seem pretty clear at this point, though not outright stated, but they’re not a surprise. This potential romance has been developing since they moved in together last season, and it makes perfect sense. The second reveal of this scene is a major twist. Ormewood found the robber who wore the cheetah mask with the unique tattoo. While investigating this person, Will and Ormewood learn that the robber is Malcolm, and that Faith is with him at that very moment. Will calls Faith to warn her, and the episode ends there, with Faith now having to find a way to keep from blowing her cover, while dealing with this devastating news.

Will Trent airs Tuesdays at 8:00 P.M. EST on ABC.

- Release Date: January 3, 2023

- Directors: Howard Deutch, Eric Dean Seaton, Holly Dale, Lea Thompson, Patricia Cardoso, Sheree Folkson, Bille Woodruff, Erika Christensen, Gail Mancuso, Geary McLeod, Jason Ensler, Mark Tonderai, Paul McGuigan

- Writers: Inda Craig-Galván, Henry ‘Hank’ Jones, Karine Rosenthal, Adam Toltzis, Antoine Perry

- Ramón Rodríguez as Will Trent

- Erika Christensen as Angie Polaski

- This episode sets up a compelling case for both the GBI and the APD, with a major plot twist regarding the central suspect.

- This episode centers the characters and their individual conflicts, delivering some important moments for its best dynamics.
