Business

TSLL Vs. TSLS: Trading TSLA Through The Pricing Cycle

Business

A GeoAlpha Refresh | Seeking Alpha

baona/iStock via Getty Images

By Samuel Rines & Christopher Gannatti, CFA

During the latest monthly rebalance, the WisdomTree GeoAlpha Opportunities Index implemented a set of changes that, taken together, sharpen the portfolio’s exposure to the three realities defining the geopolitical backdrop

Business

Oil prices dip as investors assess trajectory of US-Iran tensions

Brent futures fell 12 cents, or 0.2% to $70.23 a barrel by 0110 GMT, while U.S. West Texas Intermediate (WTI) crude slipped 8 cents, or 0.1%, to trade at $65.11 a barrel.

Both benchmarks settled more than 4% higher on Wednesday, posting their highest settlements since January 30, as traders priced in potential supply disruptions amid concerns of U.S.-Iran conflict.

“Tensions between Washington and Tehran remain high, but the prevailing view is that full-scale armed conflict is unlikely, prompting a wait-and-see approach,” said Hiroyuki Kikukawa, chief strategist of Nissan Securities Investment, a unit of Nissan Securities.

“U.S. President Donald Trump does not want a sharp rise in crude prices, and even if military action occurs, it would likely be limited to short-term air strikes,” Kikukawa added.

A little bit of progress was made during Iran talks in Geneva this week but distance remained on some issues, the White House said on Wednesday, adding that Tehran was expected to come back with more details in a couple of weeks.

Iran issued a notice to airmen (NOTAM) that it plans rocket launches in areas across its south on Thursday from 0330 GMT to 1330 GMT, according to the U.S. Federal Aviation Administration website. At the same time, the U.S. has deployed warships near Iran, with U.S. Vice President JD Vance saying Washington was weighing whether to continue diplomatic engagement with Tehran or pursue “another option”.

Satellite images show that Iran has recently built a concrete shield over a new facility at a sensitive military site and covered it in soil, experts say, advancing work at a location reportedly bombed by Israel in 2024.

Meanwhile, two days of peace talks in Geneva between Ukraine and Russia ended on Wednesday without a breakthrough, with Ukrainian President Volodymyr Zelenskiy accusing Moscow of stalling U.S.-mediated efforts to end the four-year-old war.

U.S. crude and gasoline and distillate inventories fell last week, market sources said, citing American Petroleum Institute figures on Wednesday, contrary to expectations in a Reuters poll that crude stocks would rise by 2.1 million barrels in the week to February 13.

Official U.S. oil inventory reports from the Energy Information Administration are due on Thursday.

Business

Why Olympic Fever Is Driving More Users to Online Gambling Platforms

The Olympic Games are among the few events that capture the attention of a large share of the world’s population. Every four years, billions of viewers around the world tune in to follow their favorite athletes and cheer for their countries. While the excitement of the Olympics does wonders for ratings, it also has a significant effect on the online world.

Major sporting events are known to influence online behavior substantially. During such events, users search for information, look for ways to watch, and join online discussions about sports. Many also look for ways to make the experience more interactive, and this is where online gambling comes in. As Olympic events grow in popularity, so does the gambling that takes place alongside them, contributing to the growth of online gambling globally.

The Global Impact of the Olympic Games on Digital Entertainment

The Olympic Games draw a massive audience, reaching over three billion people around the world. Internet usage rises significantly during this period, as people turn to sports apps and online streaming rather than traditional television. Social media also plays an important part, as conversations about the Games and highlight reels keep people online for longer. As shown in Chart 1, global online traffic increases noticeably during Olympic years compared to non-Olympic periods. This also means heavier usage across segments of the online entertainment business, including gaming and betting sites.

Chart 1: Global Online Traffic Growth During Olympic Seasons (2016–2024), illustrating noticeable traffic spikes during Olympic years compared to non-Olympic years.

Why Sports Fans Are Turning to Online Gambling

The Olympics have produced dramatic finishes and underdog stories that deepen fans’ connection to the Games. Many sports fans want to feel more engaged with the outcome of the events they watch, and placing a bet gives them a stake in the result. As shown in Chart 2, new registrations on sports betting platforms rise sharply during major sporting events, with the Olympics leading.

This can raise the excitement level of even lesser-known events, as people take greater interest in competitions they would not normally follow. As wagering on these sports is introduced, viewership across many different Olympic sports increases.

Mobile usage also plays an important role. Today’s sports betting sites let users place bets within seconds from a mobile phone. Live betting, in which the odds change constantly during an event, adds a fast, interactive experience in keeping with the unpredictable nature of Olympic sports.

Chart 2: Increase in Sports Betting Registrations During Major Sporting Events, showing higher user sign-ups during global tournaments, with Olympic periods leading.

The Psychology Behind Olympic Betting Trends

Several psychological factors explain this rise in betting activity. One is herd mentality: when millions of people watch the same events, individuals are more inclined to join in shared activities, including wagering.

Another factor is the excitement of competition. The Olympic Games heighten that excitement by emphasizing national pride and personal achievement, which produces a strong emotional response. That response carries over naturally from watching the Games to placing bets, reinforcing existing Olympic betting trends.

Fear of Missing Out (FOMO) is another driver. When media coverage and social feeds are full of dramatic finishes and betting stories, some users feel they are missing a unique opportunity if they do not participate. Constant media exposure reinforces the idea that betting is a common and accepted part of the sports-viewing experience during the Olympics.

Technology and Accessibility: Making Gambling Easier Than Ever

Technology has made online gambling more accessible than ever. Users can register, make deposits, and start betting through mobile applications at any moment. This is particularly significant during live Olympic events, when the timing of a bet can affect the odds a user receives.

Additionally, fast and secure payment systems make the experience more seamless. Digital wallets, for instance, simplify transactions, although they may still be used less often than traditional payment methods. Live stats, performance data, and live odds also make decision-making easier for users.

Platforms like Fair Go casino have improved their services, optimizing their systems for heavier usage during significant sporting events and matching the pace of the Olympics with user-friendly interfaces and mobile capabilities in the growing digital betting arena.

Comparison of User Activity Before and During the Olympics

User behavior on gambling platforms changes noticeably during the Olympic period. Compared to regular months, platforms report higher registration rates, longer session times, and increased betting volumes, clearly reflecting broader Olympic sports wagering patterns.

The diversity of Olympic sports also brings in new user segments. Some people who rarely bet on traditional leagues become active during the Games because of the wide range of events available. This leads to a broader user base and higher overall engagement across the digital betting industry.

Deposit volumes typically rise as users place a wider variety of bets. Although activity often declines after the Games end, it usually remains above pre-Olympic levels for some time, showing a lasting impact on user behavior. The table below compares user activity before, during, and after the Olympics, highlighting longer session times and higher betting volumes during the Games.

Table: User Activity Trends Around the Olympic Period

| Period | New Users | Avg. Session Time | Betting Volume | Deposit Growth |
| --- | --- | --- | --- | --- |
| Pre-Olympics | 100,000 | 18 min | $2.1M | +5% |
| During Olympics | 245,000 | 32 min | $6.7M | +28% |
| Post-Olympics | 160,000 | 22 min | $3.4M | +12% |
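To make the size of these shifts easier to compare, the figures in the table can be expressed as percentage changes against the pre-Olympic baseline. The short Python sketch below does only that arithmetic; the dictionary keys and function names are illustrative assumptions, and the numbers are copied from the table rather than drawn from any additional data.

```python
# Figures copied from the table above; key names are illustrative only.
PERIODS = {
    "Pre-Olympics": {"new_users": 100_000, "session_min": 18, "volume_musd": 2.1},
    "During Olympics": {"new_users": 245_000, "session_min": 32, "volume_musd": 6.7},
    "Post-Olympics": {"new_users": 160_000, "session_min": 22, "volume_musd": 3.4},
}


def pct_change(new, old):
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


def changes_vs_baseline(periods, baseline="Pre-Olympics"):
    """Return each metric's percentage change relative to the baseline period."""
    base = periods[baseline]
    return {
        name: {metric: round(pct_change(values[metric], base[metric]), 1) for metric in base}
        for name, values in periods.items()
        if name != baseline
    }


if __name__ == "__main__":
    for period, metrics in changes_vs_baseline(PERIODS).items():
        print(period, metrics)
```

On these figures, new users and betting volume more than double during the Games, and all three metrics stay above the pre-Olympic baseline afterward, which matches the pattern described in the text.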

Risks, Regulations, and Responsible Gambling

Despite its growth, online gambling carries risks that should not be ignored. Increased exposure during major events like the Olympics can lead some users to spend more time and money than they planned. This makes responsible gambling practices essential.

Many countries have introduced regulations to protect users. These include age verification, deposit limits, and self-exclusion options. Reputable platforms also provide tools that help users track their spending and set personal limits.

Education plays an important role as well. Understanding the odds, recognizing warning signs of problem gambling, and knowing when to take a break can reduce potential harm. Responsible use helps ensure that betting remains a form of entertainment rather than a source of financial or emotional stress.

Conclusion

The Olympic Games bring a potent combination of international attention, emotional appeal, and online interaction. Such a setting increases the popularity of online gambling sites, as people look for ways to engage with the events they care about. The development of mobile technology has made it easier for people to get involved, supporting the continued growth of online gambling.

At the same time, the rise of Olympic sports wagering underlines the need for balance. While platforms develop and expand their audiences, responsible gambling practices and regulation remain necessary to ensure that the digital betting market grows responsibly.

Business

Happy Joe’s Pizza reports “milestone” growth year for franchise

Tom Sacco, CEO, president and chief “happiness” officer at Happy Joe’s Pizza says the brand is a “business enterprise” but states the “calling is so much more” for the communities they serve. (Fox News Digital / Olivia Palombo)

For more than 50 years, Happy Joe’s Pizza has been serving up more than just its famed pizza and ice cream. It has also become something more important: a “safe haven” for children and families, according to the company’s Chief “Happiness” Officer, Tom Sacco.

The brand, which is headquartered in Davenport, Iowa, began in 1972 and was founded by Joe Whitty. A “baker by trade,” he ended up working for Shakey’s Pizza.

“From what I understand, he went to Shakey’s and said, ‘Hey, I’ve got an opportunity with my background, I think I can really improve our pizza dough,’” Tom Sacco, current Happy Joe’s Pizza Chief Executive Officer, President and Chief “Happiness” Officer, recalled.


“They said, ‘Well, if you don’t think our pizza dough is so good, why don’t you just leave and do your own pizza restaurant,’” Sacco continued.

To this day, the brand continues to use that same dough recipe, according to Sacco.

“I try to be as true, not knowing him, but understanding some of his characteristics and attributes,” Sacco told FOX Business.

Sacco has spent his entire career in the restaurant business and grew up working in his grandfather’s kitchen at eight years old. After getting a bachelor’s and master’s degree and completing law school, he still chose to pursue a career in the industry.

Happy Joe’s Pizza was the first restaurant company in the world to create a Taco Pizza, according to their website. (Happy Joe’s Pizza / Unknown)

After a career working with other restaurants, he joined Happy Joe’s Pizza in 2020.

“I’m very comfortable in the industry, but what really excited me was in the DNA of Happy Joe’s. It’s always about family and it revolves around children,” Sacco said.

Sacco said during a visit to Iowa before he was set to head up the company, he walked around stores asking people if they were familiar with the brand. He explained that those who he spoke with “would go on and tell [him] all these magical memories that they had.”

“I said to myself, ‘You know what? I’m gonna help them because it’s a good brand, but I’m also gonna be selfish,’” Sacco shared. “I’m going to help them because I want my family, my grandchildren, to grow up with the same memories that I was told by all these people.”


In 2025, the company reported a “milestone year” of growth and impact in the community, according to a release shared with Fox Business.

The brand re-opened a franchise in New Ulm, Minn., with “renewed development efforts” supporting planned growth across Texas and Iowa, among other states. It’s also expanding its West Coast presence, with an opening in Oro Valley, Ariz., in the spring.

“This year showed what is possible when franchisees, team members and guests believe in that mission,” Sacco said in the release. “We expanded into new markets, earned recognition from our industry and created meaningful moments for the communities we love. As we look toward 2026, we are committed to keeping that spirit at the center of everything we do.”

The company debuted a food truck in October 2025, according to reports. (Happy Joe’s Pizza / Unknown)

While the company has reported massive success over the past few years, it has not been immune to routine inflationary pressures. Sacco shared that despite these pressures, he “resurrected” the original products that Whitty was using just prior to the start of the COVID-19 pandemic.

“So when everybody was taking a hit, we saw an uptick in sales,” Sacco said. “That uptick continued, really, up until the end of [2025].”


Sacco said that this effort felt like he was “going to go against the grain.”

“It was almost like I was going to go against the grain, not because I needed to do something different, because I believed that when the long-term franchisees were saying, ‘Tom, if there’s anything we can tell you, [it’s to] follow the recipe [Whitty] had,’” said Sacco.

The brand is also deeply involved in philanthropic work and community involvement. According to their website, the company has won numerous awards and created a variety of programs in order to “give back to the communities who have helped make [them] so successful.”

The restaurant has various locations throughout the Quad Cities area and is looking to expand into Texas, Arizona and more, according to Sacco. (Happy Joe’s Pizza / Unknown)

In an emotional moment, Sacco said that while the company is a business, “the calling [of Happy Joe’s Pizza] is so much more.”

The company hosts an annual event called Happy Joe’s Holiday Parties for Children with Special Needs. This year, the company reported that roughly 2,000 children were in attendance. Sacco said that these moments are what “juice him up” for the future.

“It’s so touching to me to see these kids care that much about a pizza,” Sacco said with tears in his eyes. “It’s not the pizza, it’s the magic that the pizza creates for them.”


As the company continues growth efforts in 2026, Sacco said he plans to continue to be a “servant leader.”

“I try to be the best servant leader I can be. I try to lead by example… If you care, that’s what you do,” Sacco said. “I think bringing back caring to Happy Joe’s was one of the missing elements.”

Business

Global Market Today | Asian stocks rise after tech boosts US equities

The MSCI Asia Pacific Index gained for a second day, as shares rallied in Australia and Japan, and South Korea’s benchmark rose to a record. That was after the S&P 500 gained 0.6% Wednesday and the tech-heavy Nasdaq 100 climbed 0.8%. Financial markets remained shut in mainland China, Hong Kong and Taiwan for the Lunar New Year holiday.

The rebound in tech shares offered a sign that concerns over disruption from artificial intelligence were easing, just as several stock pickers surveyed the wreckage for buying opportunities.

The software stock selloff is likely “overdone” as that was a largely knee-jerk reaction, with investors trying to figure out the winners and losers from AI, said Paul Stanley, managing partner at Granite Bay Wealth Management. “While AI is very promising, investors should not assume that all companies will win on the AI front.”

Oil steadied in Asia after jumping Wednesday following a report that American military intervention in Iran may come sooner than expected.

West Texas Intermediate traded above $65 a barrel after gaining 4.6% on Wednesday, while Brent crude closed above $70 for the first time in more than two weeks. Axios reported that any US military operation would likely be a weeks-long campaign and that Israel’s government is pushing for a scenario targeting regime change in the Islamic Republic.

The dollar edged lower versus most of its Group-of-10 peers after Bloomberg’s gauge of the greenback gained 0.5% on Wednesday. Treasuries were little changed after dropping in the New York session, when a $16 billion sale of 20-year bonds drew lackluster demand. US economic data published Wednesday showing the biggest increase in US industrial production in January bolstered investor sentiment, while orders for business equipment rose in December by more than projected and housing starts hit a five-month high.

Business

First American Financial director buys $4m in FAF stock


Business

Small businesses warn of April ‘perfect storm’ as costs surge

Small businesses are bracing for what they describe as an “unprecedented cost crunch” in April, with more than a third warning they may shut down or scale back operations as a raft of higher expenses take effect.

The Federation of Small Businesses (FSB) has written to Rachel Reeves warning that the cumulative impact of rising energy bills, business rates, higher employment costs and changes to statutory sick pay risks undermining economic growth.

A survey by the FSB found that 35 per cent of small firms plan either to close or reduce output over the coming year in response to increased energy standing charges, a rise in the national living wage and higher dividend tax rates.

Tina McKenzie, the FSB’s policy and advocacy chair, said the burden of new costs would directly affect firms’ ability to invest. “Running a small business is about to get a lot more expensive,” she wrote. “If profits are squeezed by government policy, businesses cannot grow.”

The FSB estimates that an employer with nine staff paid at the national living wage will see annual employment costs increase by £25,850 between January and April 2026, a 12.9 per cent jump.

It also calculates that a typical small shop or restaurant will see business rates rise from £4,790 to £5,590 this year, while changes to dividend tax, a common way for owner-managers to draw income, will cost an additional £578 annually on earnings of £50,000.

The removal of the lower earnings limit for statutory sick pay is expected to add further pressure. The FSB estimates the change will cost a nine-employee firm around £990 a year.
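The FSB figures quoted above can be put on a common footing with some simple arithmetic. The Python sketch below is illustrative only: the implied employment-cost baseline is derived from the quoted £25,850 and 12.9 per cent figures rather than stated by the FSB, and the combined total assumes the employment and sick pay estimates for a nine-employee firm are additive, which the article does not confirm.

```python
def pct_increase(old, new):
    """Percentage increase from old to new."""
    return (new - old) / old * 100.0


# Business rates for a typical small shop or restaurant (figures from the article).
RATES_BEFORE = 4_790
RATES_AFTER = 5_590
rates_rise = RATES_AFTER - RATES_BEFORE
rates_pct = pct_increase(RATES_BEFORE, RATES_AFTER)

# Employment costs for a nine-staff employer: a rise of 25,850 pounds,
# described as a 12.9 per cent jump. The baseline below is DERIVED, not quoted.
EMPLOYMENT_RISE = 25_850
EMPLOYMENT_RISE_PCT = 12.9
implied_baseline = EMPLOYMENT_RISE / (EMPLOYMENT_RISE_PCT / 100.0)

# Statutory sick pay change for a nine-employee firm (figure from the article).
SICK_PAY_COST = 990

# ASSUMPTION: the two nine-employee estimates do not overlap and can be summed.
nine_staff_extra = EMPLOYMENT_RISE + SICK_PAY_COST

if __name__ == "__main__":
    print(f"Business rates rise: {rates_rise} pounds ({rates_pct:.1f}%)")
    print(f"Implied employment-cost baseline: about {implied_baseline:,.0f} pounds")
    print(f"Employment plus sick pay, nine staff: {nine_staff_extra:,} pounds a year")
```

On these numbers the business rates increase works out to roughly 16.7 per cent, a steeper proportional rise than the employment-cost figure, though much smaller in absolute terms.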

Jane Wiest, who runs Initially London, a retailer specialising in monogrammed products, said improving sales had been overshadowed by higher taxes and operating costs.

“We had a strong January, but then these taxes started to hit,” she said. “You’re trying to work out how the money coming in will cover the expenses going out. It makes it hard to hire or invest because you’re carrying this constant burden.”

Sarah Curtis, who operates a historic boatyard in Ipswich, said rising wages and utility bills were making recruitment increasingly difficult.

“There are so many small increases, utilities, wages, rates, and they all add up,” she said. “Small businesses are very reluctant to take on anyone new.”

The FSB argues that the combined effect of cost increases risks deterring hiring and curtailing expansion plans at a time when policymakers are seeking to boost economic growth.

While ministers have defended the measures as necessary to improve worker protections and fund public services, business leaders warn that smaller firms, often operating on tighter margins and with limited access to affordable finance, are particularly exposed.

With April approaching, small employers say they face a stark choice: absorb higher costs, raise prices or pull back on activity, each with potential consequences for jobs and local economies.

Business

OPINION: Baillie Hill refurb is a heritage revelation

OPINION: A new venue in Victoria Park has disproved a long-held assumption about heritage.

Business

Addressing Cybersecurity Challenges for the Underbanked in Southeast Asia

Southeast Asia is facing a significant surge in cybercrime, with an 82% increase reported between 2021 and 2022, primarily driven by the region’s rapid digital economic expansion. The “underbanked” population—comprising approximately 225 million people—is particularly vulnerable to these threats due to limited digital literacy and a reliance on informal financial services.

Key Points

- Heightened Vulnerability: The underbanked are frequently targeted by cybercriminals because they often use less secure financial services and lack the training to identify sophisticated phishing and social engineering tactics.

- Severe Human Impact: Beyond financial loss, cybercrime in the region is linked to “cyber slavery,” where job-seekers are trafficked into “scam farms” to carry out fraudulent operations, particularly in areas with limited regulatory oversight.

- Singapore’s Regulatory Model: Singapore is pioneering a “Shared Responsibility Framework” that holds financial institutions and telecommunication operators liable for scam losses if they fail to fulfill specific security duties.

- Philippine Legislative Efforts: The Philippines has enacted the Anti-Financial Account Scamming Act (AFASA) to allow for the freezing of disputed funds and has launched grassroots programs like Project ACUITY to provide financial literacy training to isolated communities.

Southeast Asia’s rapid digital transformation has driven an alarming 82% increase in cybercrime between 2021 and 2022, disproportionately impacting the underbanked due to limited digital literacy. Scammers exploit these vulnerabilities, resulting in significant financial losses and, in extreme cases, “cyber slavery.”

- Regional Disparities: While countries like Singapore and the Philippines are advancing their defenses, others such as Myanmar, Laos, and Cambodia face challenges due to internal conflict, vague legal frameworks, or limited technological infrastructure.

- Corporate Defense Challenges: Private fintech firms report significant difficulty in shutting down social media impersonators and fraudulent apps, highlighting the need for better cooperation from global platform providers like Meta and Google.

- The Need for Unified Standards: Experts advocate for a centralized regional authority, similar to the European Commission, to standardize cybersecurity laws, facilitate intelligence sharing, and ensure consistent consumer protections across Southeast Asia.

While nations like Singapore and the Philippines have introduced measures such as the Anti-Financial Account Scamming Act to combat these threats, cross-border collaboration is imperative to dismantle international scam networks. Grassroots financial literacy programs are essential to empower consumers, while regional partnerships are critical for establishing standardized defenses to safeguard the expanding digital economy against escalating cyber risks.

The underbanked population in Southeast Asia—which numbered 225 million in 2023—is a primary target for cybercrime, including financial fraud and cyber slavery, due to the following specific vulnerabilities:

- Low Digital Literacy: Low digital literacy is a fundamental vulnerability. A lack of familiarity with digital tools and online safety makes these individuals less capable of identifying phishing attempts and social engineering schemes.

- Reliance on Informal Financial Services: The underbanked often depend on informal financial services that are described as “less secure” than traditional banking. These services typically have “lower barriers to entry,” which, while providing access to funds, also makes the users more susceptible to exploitation by cybercriminals.

- Low Reading and General Financial Literacy: In certain regions, such as the Philippines, low reading and financial literacy rates are specifically highlighted as factors that weaken the “line of defense” against cyber threats. This makes it harder for individuals to safeguard personal information or recognize fraudulent financial products.

Scammers prey on the economic hardships of the underbanked by targeting job-seekers, luring them with false employment opportunities. Many are subsequently trafficked into “cyber slavery” at exploitative “scam farms” across the region. Populations in geographically isolated or disadvantaged areas face heightened risks. These communities are often the focus of initiatives like Project ACUITY, as they are more vulnerable to threats such as human trafficking and personal data theft.

Singapore’s Shared Responsibility Framework

Singapore’s Shared Responsibility Framework redistributes the burden of loss for phishing scams by shifting liability from the consumer to financial institutions and telecommunications providers, provided certain security standards are not met.

The redistribution of the financial burden is structured as follows:

1. Liability of Financial Institutions and Telecommunications Providers

The framework moves the primary responsibility for financial losses away from the consumer under specific conditions:

- Failure to Fulfill Duties: Financial institutions are the first line of accountability, followed by telecommunications operators. If these entities fail to fulfill their “prescribed duties” or security standards, they are required to bear the total loss of the scam.

- Incentive for Due Diligence: By making these institutions liable for losses resulting from security lapses, the framework mandates a higher level of due diligence and accountability for the platforms that facilitate transactions and communications.

2. Role and Responsibility of Consumers

While the framework provides a safety net, it does not offer universal reimbursement:

- Requirement of Institutional Fault: Payouts to consumers are only required if there is a demonstrated fault or failure on the part of the financial institution or telecommunications operator.

- Loss Retention: If the institutions have fulfilled all their prescribed security duties and are found to be without fault, the framework does not require them to make payouts. In such cases, the consumer may still be responsible for the loss.

3. Prescribed Security Measures for Institutions

To avoid liability under this framework, financial institutions in Singapore implement specific security measures:

- App Security: Preventing the installation of banking apps on devices that contain “sideloaded” (unofficial) applications.

- Transaction Cooling Periods: Adding extra steps and wait times to transactions to allow users time to verify the legitimacy of the transfer.

- Communication Protocols: Removing all clickable links from SMS messages and emails sent to customers.

The goal of this framework, as stated in the text, is to ensure that “underbanked” individuals and general consumers are not “always left to foot the bill.” It creates a shared accountability model where the “total loss” is redistributed to the service providers if they fail to maintain the rigorous security standards necessary to prevent phishing.
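The order of accountability described above can be sketched as a simple decision rule. The Python example below is a simplified reading of this summary, not the framework's legal text: the function name, the enum, and the two boolean inputs are assumptions made for illustration, and real cases would turn on the specific prescribed duties.

```python
from enum import Enum


class Bearer(Enum):
    """Party that bears the scam loss in this simplified model."""
    FINANCIAL_INSTITUTION = "financial institution"
    TELECOM_OPERATOR = "telecommunications operator"
    CONSUMER = "consumer"


def loss_bearer(fi_met_duties: bool, telco_met_duties: bool) -> Bearer:
    """Apply the order of accountability described in the summary.

    Financial institutions are checked first, then telecommunications
    operators. If both met their prescribed duties, no payout is required,
    so the loss may remain with the consumer (hypothetical simplification).
    """
    if not fi_met_duties:
        return Bearer.FINANCIAL_INSTITUTION
    if not telco_met_duties:
        return Bearer.TELECOM_OPERATOR
    return Bearer.CONSUMER


if __name__ == "__main__":
    for fi_ok in (False, True):
        for telco_ok in (False, True):
            outcome = loss_bearer(fi_ok, telco_ok)
            print(f"FI met duties={fi_ok}, telco met duties={telco_ok}: {outcome.value}")
```

Checking the financial institution first mirrors the "first line of accountability" wording: a telecommunications operator only bears the loss in this sketch when the financial institution has met its duties.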

To combat these threats, regional stakeholders are moving toward a multistakeholder approach that combines legislative reform, shared corporate responsibility, grassroots educational initiatives, and enhanced cross-border cooperation to dismantle sophisticated scam networks and protect the region’s most at-risk consumers.


Business

Reese’s founder’s grandson slams Hershey over alleged ingredient swap


The grandson of the man who invented the Reese’s Peanut Butter Cup is publicly criticizing The Hershey Company, accusing the candy giant of quietly changing the recipe of certain products sold by the iconic brand.

Brad Reese, grandson of founder H.B. Reese, whose company merged with Hershey in the 1960s, published an open letter on LinkedIn Saturday alleging that Hershey has replaced traditional ingredients like milk chocolate and peanut butter with lower-cost substitutes in parts of the Reese’s product line.

“My grandfather built Reese’s on a simple, enduring architecture: milk chocolate + peanut butter,” Brad Reese wrote.

“But today, Reese’s identity is being rewritten, not by storytellers, but by formulation decisions that replace milk chocolate with compound coatings and peanut butter with peanut‑butter‑style crèmes across multiple Reese’s products.”



Brad Reese told FOX Business he recently purchased Reese’s Unwrapped Chocolate Peanut Butter Creme Mini Hearts candies and immediately noticed a difference.

“I went and bought a bag, and I took a couple bites, and I had to throw the bag in the garbage,” Reese said. “I couldn’t eat it. It was not edible, and I looked at the packaging … and there was no milk chocolate, there was no peanut butter — it was all vegetable oils and fats.”

He also claimed that products such as Reese’s Take 5 and Fast Break are no longer coated in milk chocolate and alleged that, in parts of Europe, Reese’s Peanut Butter Cups no longer contain milk chocolate.

Brad Reese argues that the alleged recipe changes undermine the legacy and integrity of the brand his grandfather built.



“I can’t go on representing being the grandson of Reese’s when the product is total bunk,” Brad Reese told FOX Business. “You have no idea how devastating it is.”

Hershey pushed back on the criticism, maintaining that its flagship product remains unchanged.

“Our iconic Reese’s Peanut Butter Cups are made the same way they always have been; starting with roasting fresh peanuts to make our unique, one-of-a-kind peanut butter that is then combined with milk chocolate,” The Hershey Company told FOX Business in an email.

However, Hershey acknowledged that it has adjusted recipes as it expands the brand into new shapes and variations.

“We make product recipe adjustments that allow us to make new shapes, sizes and innovations that Reese’s fans have come to love and ask for, while always protecting the essence of what makes Reese’s unique and special: the perfect combination of chocolate and peanut butter,” the company said.



The dispute comes as the broader chocolate industry has faced intense cost pressures.

Over the past two years, several chocolate makers have adjusted their recipes after cocoa prices surged to a record high in late 2024, Reuters reported.

In July, Hershey reportedly announced price increases across its candy portfolio, citing an “unprecedented” rise in cocoa costs.


Since then, cocoa prices have dropped, driven by weakening demand and improving supply conditions, according to Reuters.

-

Video2 days ago

Video2 days agoBitcoin: We’re Entering The Most Dangerous Phase

-

Tech4 days ago

Tech4 days agoLuxman Enters Its Second Century with the D-100 SACD Player and L-100 Integrated Amplifier

-

Sports2 days ago

Sports2 days agoGB's semi-final hopes hang by thread after loss to Switzerland

-

Video6 days ago

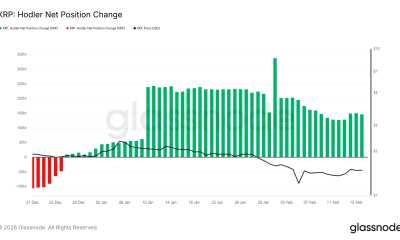

Video6 days agoThe Final Warning: XRP Is Entering The Chaos Zone

-

Crypto World2 days ago

Crypto World2 days agoCan XRP Price Successfully Register a 33% Breakout Past $2?

-

Tech2 days ago

Tech2 days agoThe Music Industry Enters Its Less-Is-More Era

-

Business1 day ago

Business1 day agoInfosys Limited (INFY) Discusses Tech Transitions and the Unique Aspects of the AI Era Transcript

-

Entertainment13 hours ago

Entertainment13 hours agoKunal Nayyar’s Secret Acts Of Kindness Sparks Online Discussion

-

Video2 days ago

Video2 days agoFinancial Statement Analysis | Complete Chapter Revision in 10 Minutes | Class 12 Board exam 2026

-

Tech17 hours ago

Tech17 hours agoRetro Rover: LT6502 Laptop Packs 8-Bit Power On The Go

-

Crypto World5 days ago

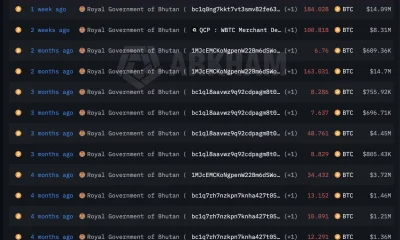

Crypto World5 days agoBhutan’s Bitcoin sales enter third straight week with $6.7M BTC offload

-

Video7 days ago

Video7 days agoPrepare: We Are Entering Phase 3 Of The Investing Cycle

-

Entertainment4 hours ago

Entertainment4 hours agoDolores Catania Blasts Rob Rausch For Turning On ‘Housewives’ On ‘Traitors’

-

NewsBeat3 days ago

NewsBeat3 days agoThe strange Cambridgeshire cemetery that forbade church rectors from entering

-

Business7 days ago

Business7 days agoBarbeques Galore Enters Voluntary Administration

-

Business19 hours ago

Business19 hours agoTesla avoids California suspension after ending ‘autopilot’ marketing

-

Crypto World6 days ago

Crypto World6 days agoKalshi enters $9B sports insurance market with new brokerage deal

-

Crypto World7 hours ago

Crypto World7 hours agoWLFI Crypto Surges Toward $0.12 as Whale Buys $2.75M Before Trump-Linked Forum

-

Crypto World6 days ago

Crypto World6 days agoEthereum Price Struggles Below $2,000 Despite Entering Buy Zone

-

NewsBeat3 days ago

NewsBeat3 days agoMan dies after entering floodwater during police pursuit