- A new rumor suggests the RTX 5090 will use 600W of power

- Comments in a Chinese forum point toward the new GPU being much louder

- PSU requirements are 1000W according to Corsair

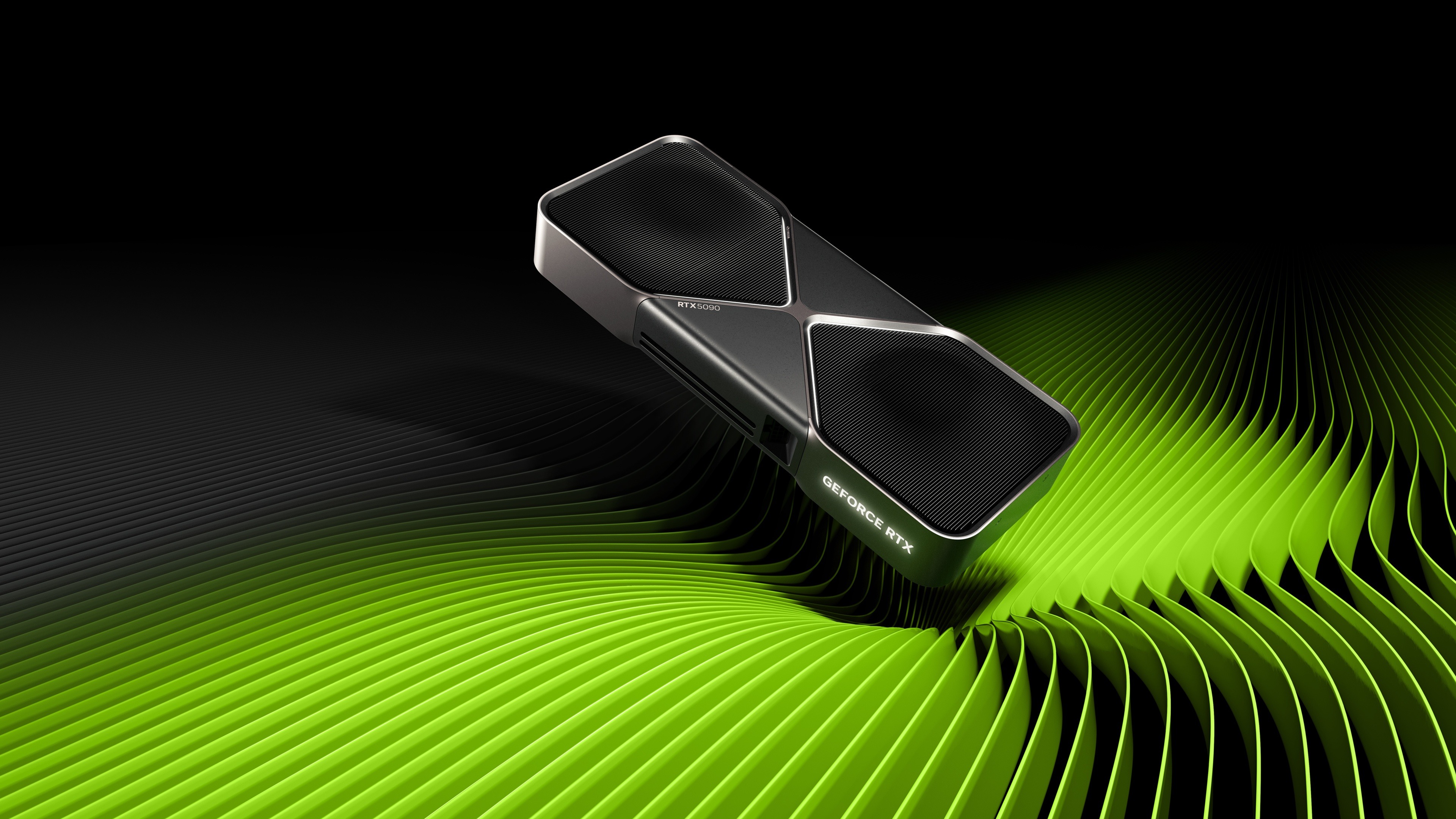

Nvidia’s RTX 5090 promises to provide a step up from the previous generation’s RTX 4090, but that could come at a significant cost according to new rumors – and you might want to invest in a beefy power supply.

As reported by Tomasz Gawronski on X, discussions on Chiphell (a Chinese forum covering the latest PC hardware) suggest that Nvidia’s RTX 5090 Founders Edition GPU will draw 600W of power while being much louder than the RTX 4090. This is based on what appears to be an upcoming review with an embargo set for January 24, with a post translated from Chinese that says “The editor cursed while testing… After all, the power consumption increased, the current increased, and the screaming also increased~”.

Considering the pricing of the RTX 5090 ($1,999 / £1,939 / AU$4,039) and the reported 30% performance increase (according to Blender benchmarks highlighted by VideoCardz), this rumor likely won’t sit well with anyone intent on upgrading to Team Green’s latest flagship GPU. The RTX 4090 already draws a hefty 450W, so a jump to 600W, a third more power, isn’t very appealing.
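To put those two figures side by side, here is a rough back-of-the-envelope sketch in Python. It assumes the rumored 600W figure and the reported 30% Blender uplift both hold, and it treats a single benchmark as representative, which it may well not be. The variable names are purely illustrative.

```python
# Figures quoted in the article; the 600W value is a rumor, not a confirmed spec.
RTX_4090_WATTS = 450
RTX_5090_RUMORED_WATTS = 600
REPORTED_PERF_GAIN = 0.30  # Blender result highlighted by VideoCardz

# Relative increase in power draw from the RTX 4090 to the rumored RTX 5090 figure.
power_increase = RTX_5090_RUMORED_WATTS / RTX_4090_WATTS - 1

# Performance per watt relative to the RTX 4090 (1.0 would mean no change).
perf_per_watt_ratio = (1 + REPORTED_PERF_GAIN) / (1 + power_increase)

print(f"Power increase: {power_increase:.1%}")
print(f"Performance per watt vs RTX 4090: {perf_per_watt_ratio:.3f}x")
```

If both numbers turn out to be accurate, the power draw would rise slightly faster than the performance, leaving performance per watt roughly flat in that one benchmark.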

Increases in both noise and PSU requirements will be costly in more ways than one, but that’s also to be expected if the performance ends up meeting the hype.

What does this mean in terms of PSU requirements?

It’s important to note that this is just a rumor, but if it’s legitimate, then RTX 5090 users will certainly have to shell out more than $1,999 / £1,939 / AU$4,039. If you don’t already own a 1000W PSU, then you’ll more than likely need to invest in one – according to Corsair, the recommended PSU capacity for the RTX 5090 is 1000W.

This is especially the case if you’ve got a high-end CPU installed, as you’ll want to avoid any system instability caused by your PSU not supplying enough power. Once reviews arrive, we’ll be able to measure just how much of a jump the RTX 5000 series flagship GPU is from the previous generation. If I’m honest, even the RTX 4090 is still overkill for gamers, which will also be true of the RTX 5090 – so if you invest in a new GPU and new PSU, you might have to wait a while to really get the most out of your rig.
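For a rough sense of why a 1000W unit is being recommended, the sketch below simply adds up component power draw and compares it to the PSU’s rating. The 600W GPU figure is the rumored value; the CPU and “other components” wattages are hypothetical placeholders rather than measurements, and the `psu_headroom` helper is made up for illustration. It also ignores transient power spikes and PSU efficiency, so treat it as a sanity check, not a sizing tool.

```python
def psu_headroom(psu_watts, gpu_watts, cpu_watts, other_watts=0):
    """Return (total_draw, spare_watts, load_fraction) from a simple sum of component draws."""
    total = gpu_watts + cpu_watts + other_watts
    return total, psu_watts - total, total / psu_watts


# GPU value is the rumored 600W; CPU and "other" values are hypothetical placeholders.
for psu in (850, 1000):
    total, spare, load = psu_headroom(psu_watts=psu, gpu_watts=600, cpu_watts=250, other_watts=100)
    print(f"{psu}W PSU: estimated draw {total}W, spare {spare}W, load {load:.0%}")
```

With these placeholder numbers, an 850W unit would be over its rating while a 1000W unit would have only a small margin, which is consistent with the idea that a high-end CPU makes the PSU upgrade harder to avoid.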
