Saudi Arabia’s SAMA issues updated regulation for finance companies

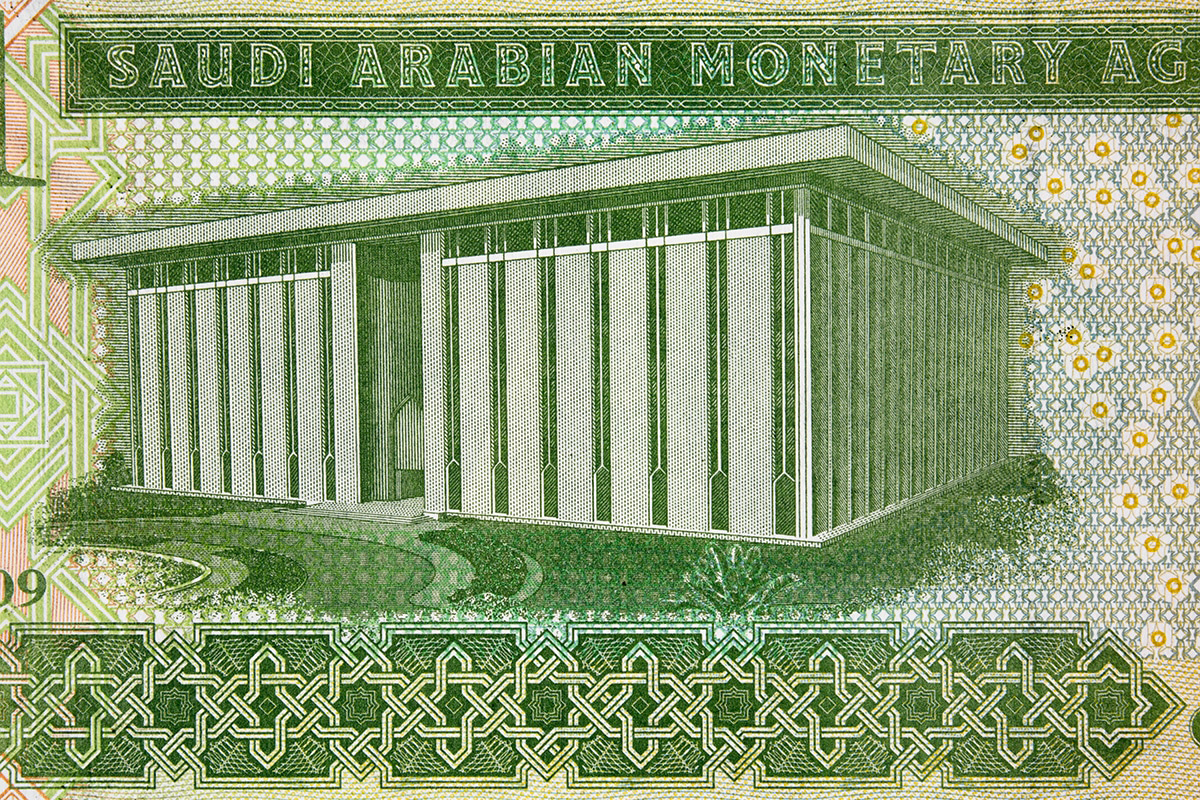

The Saudi Central Bank (SAMA) has issued an updated Implementing Regulation of the Finance Companies Control Law, strengthening oversight of the Kingdom’s finance sector and supporting its stability and growth.

SAMA said the revisions are designed to regulate the requirements for carrying out all financing activities. Key changes include updates to the aggregate financing amounts that finance companies may offer, revised bank guarantee requirements for firms applying for licences, amendments to the provisions governing related-party transactions, and clearer procedures for handling the expiry of licences granted to finance companies.

As part of the update, SAMA has repealed the Rules Regulating Consumer Microfinance Companies and the Rules of Engaging in Microfinance Activity. It has also amended the Rules of Licensing Finance Support Activities, aligning them with the revised regulatory framework.

The central bank noted that the updated regulation forms part of its supervisory and regulatory mandate and reflects its ongoing efforts to enhance transparency, improve compliance, and support sustainable growth across the finance sector.

SAMA added that it had previously published a draft of the updated implementing regulation for public consultation, inviting feedback from stakeholders and subject-matter experts. Comments received during the consultation period were reviewed and incorporated into the final version of the rules.

The updated Implementing Regulation of the Finance Companies Control Law, along with the amended Rules of Licensing Finance Support Activities, is available on SAMA’s website.