News Beat

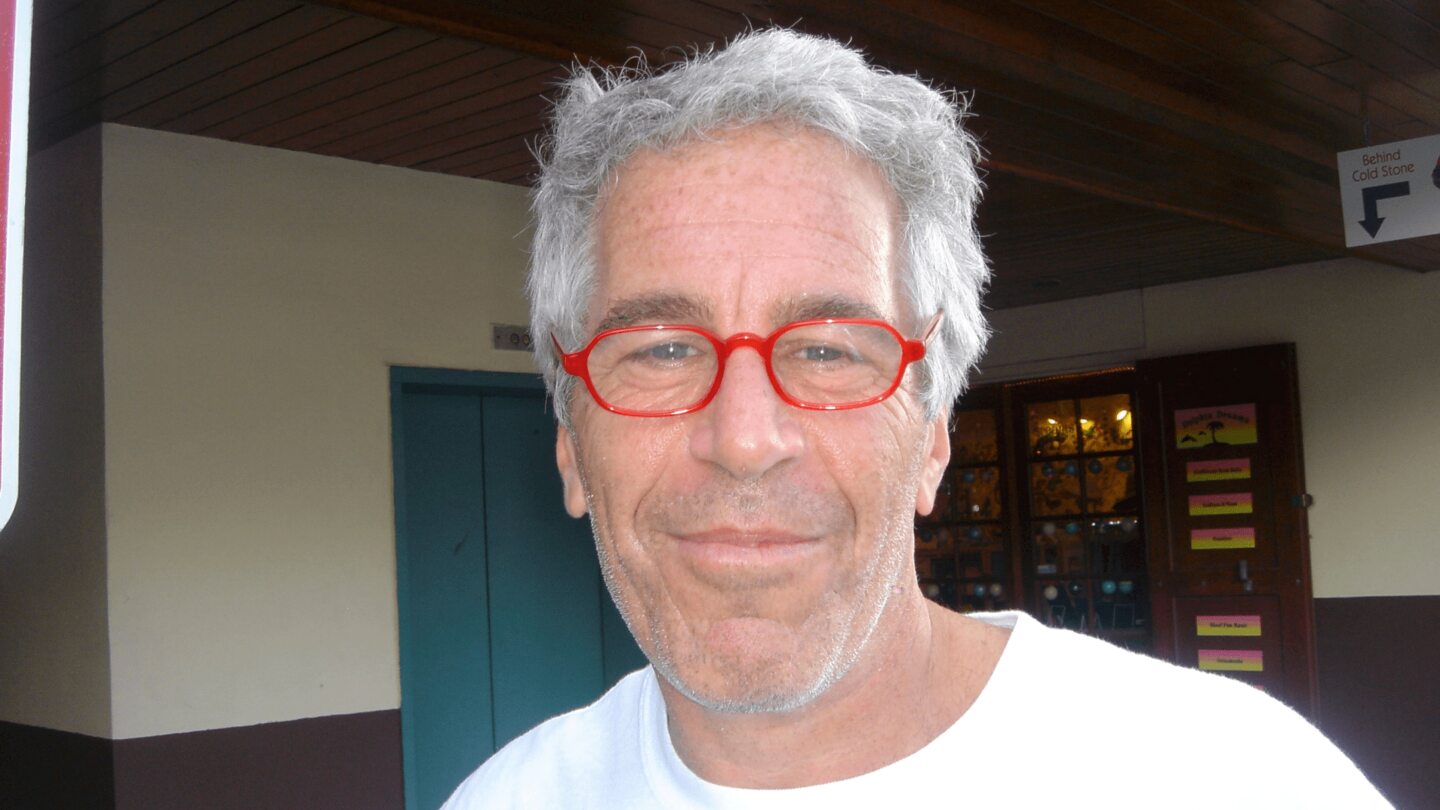

DOJ says it may need a ‘few more weeks’ to finish release of Epstein files despite Dec. 19 deadline

WASHINGTON (AP) — The Justice Department said Wednesday that finishing the release of all of the Jeffrey Epstein files could take a “few more weeks,” further delaying compliance with a Dec. 19 deadline set by Congress.

The department said the U.S. attorney’s office for the Southern District of New York, as well as the FBI, found more than a million additional documents that could be relevant to the Epstein case. DOJ did not say in its statement when it was informed of those new files.

DOJ insisted in its statement that its lawyers are “working around the clock” to review those documents and make the redactions required under the law, passed nearly unanimously by Congress last month.

“We will release the documents as soon as possible,” the department said. “Due to the mass volume of material, this process may take a few more weeks.”