By Nick Bartlett, SuperWest Sports

Sports

Jonathan Greenard Speaks Out Following Rondale Moore’s Death

Minnesota Vikings wide receiver Rondale Moore passed away on Saturday, February 21, in what police described as a suspected suicide. And as the team’s players, coaches, staff, owners, and fans mourned Moore’s passing, Vikings defender Jonathan Greenard used his social media platform to remind the world that players see the same tweets as you.

Greenard urged caution online while Minnesota offered support following the sudden passing of wideout Rondale Moore.

Greenard was sure to emphasize that athletes are humans, too.

A Message Echoes across a Grieving Locker Room

Think before you type and send, Greenard says.

Moore Dead at 25

NFL.com reported Saturday night, “NFL wide receiver Rondale Moore, who played most recently for the Minnesota Vikings, was found dead Saturday night in Indiana, authorities said. He was 25. Police said Moore died of a suspected self-inflicted gunshot wound. Moore was found dead in the garage of a property in his hometown of New Albany, police chief Todd Bailey said. The death remains under investigation.”

“Floyd County Coroner Matthew Tomlin did not share additional details on the circumstances of Moore’s death but said there was no threat to the public and an autopsy would be conducted Sunday. Moore, a receiver and return specialist drafted in the second round out of Purdue University, spent his first three years in the NFL with the Arizona Cardinals.”

Back in the summer of 2024, Vikings rookie cornerback Khyree Jackson died in a Maryland car accident, and the tragedies have not relented for the franchise.

Greenard’s Tweets

For starters, Las Vegas Raiders safety Jamal Adams tweeted, “I’m not jumping to conclusions, but let me say this. Fans and media be quick to label a player ‘injury prone.’ We don’t choose to get hurt… sometimes shit just happens. Y’all don’t see the rehab, the pain, the mental drain it causes. That process can make you lose yourself. This shit is real. No matter how much support you get, you still gotta fight that battle alone. Prayers up for Rondale Moore and his family. He was a baller, no question.”

Greenard retweeted the Adams tweet and commented, “Ppl legit will say the most craziest things tryna be funny on this app. Then turn around wondering why the players mentals are COOKED. Players see ALL the tweets just like yall do bc WE ARE HUMAN JUST LIKE YALL. Algorithms will flood your page w BS that other ppl try to bring you down with.”

“This isn’t all on the media but they play a part. Especially these bot pages. Use that block button and go pray for clarity on your identity fellas. We got too much life to live than to succumb to the negativity in our most vulnerable periods of time.”

It’s a familiar refrain: fans often treat football players like Roman gladiators, but they are human and mortal, just like you.

Other Players Agree

J.J. Watt, a teammate of Moore in Arizona, tweeted, “Can’t even begin to fathom or process this. There’s just no way. Way too soon. Way too special. So much left to give. Rest in Peace Rondale.”

Hollywood Brown: “Bro ain’t no way brotha you just messaged me few hours ago. You wasn’t alone bro.. I told you I know how you feel.”

Kyler Murray posted on Instagram, “Just spoke to you bro. Blessed to have been able to share this life with you. I pray you’re in a better place now Ra.”

The NFL Players Association released this statement: “In moments like this, we are reminded of how much our players carry, on and off the field. To our members: Please know that support is always within reach. Check on your teammates and prioritize your mental health. If you or someone you know is struggling, we encourage you to take advantage of the many confidential resources and services available to you through the NFLPA.”

Statement from the Vikings

The Vikings issued this statement: “We are deeply saddened by the passing of Rondale Moore. While we are working to understand the facts, we have spoken with Rondale’s family to offer our condolences and the full support of the Minnesota Vikings.”

“We have also been in communication with our players, coaches, and staff, and will make counseling and emotional support resources available to anyone in need. Our thoughts are with Rondale’s family and friends during this devastating time.”

The day after Moore’s death, former Vikings defensive back Ronyell Whitaker also passed away at the age of 46. He played for the Vikings in 2006 and 2007.

Moore was the Big Ten Wide Receiver of the Year in 2018.

Borba: Julian Lewis Snubbed in Colorado Spring Draft?

Colorado football turns up the heat this spring as a surprise quarterback draft pick stirs the pot.

With Julian Lewis snubbed as the top QB selection in favor of true freshman Kanel Sweetwine, locker room dynamics and competitive fire reach new heights.

Is this a tactical ploy to motivate Lewis, or the start of a real QB controversy?

I dive deep into the spring practice draft and the leadership pressure mounting on Lewis as Colorado’s new wave of talent acclimates.

Coach Prime’s sky-high salary raises eyebrows nationally. Still, I make what I think is a compelling case for Deion Sanders’ worth, highlighting his transformative impact: quadrupling win totals, sparking record ticket sales, and delivering $3 billion in publicity value for Boulder.

The debate rages—are wins and losses the only metric, or is Prime’s star power the very lifeblood Colorado needs to compete in the Big 12?

Spring ball hasn’t even started, but injuries loom large: eight Buffs—including top runners and receivers—face time on the sidelines, threatening depth and position battles.

Can Colorado’s new faces make the most of these openings, or will persistent injury woes undermine momentum?

I break down the immediate impact, the risk to player development, and what Colorado must do to keep its roster healthy and competitive.

Will Julian Lewis rise to the challenge? Will Coach Prime’s investment pay off, and can Colorado overcome its injury bug to deliver on soaring spring expectations?

This episode covers it all—competition, controversy, and optimism—as the Buffaloes chase a statement season.

Hot Streak Could Keep Matadors Dancing in March

Cal State Northridge is making a run in the Big West, and people might need to start paying attention.

Last week, I focused on UC Irvine and Hawai’i. This week, I zero in on the Matadors ahead of their home game on Thursday against the Anteaters.

I know there are a million things to do in L.A., but attending a CSUN game is becoming one of the better choices for basketball aficionados.

The Run and the Three-Headed Monster

On January 24, CSUN was 11-10 and had lost three in a row after Hawai’i routed them by 21 points.

Their season felt over.

One month later, the Matadors are 18-10, having won seven in a row, including recent victories over two of the league’s top teams to move into a three-way tie at the top of the conference.

What’s the flavor?

The biggest takeaway from their box scores is that multiple players can get jiggy with it.

During the Matadors’ seven-game winning streak, two different players have scored at least 30 points in a game, and three have scored at least 20.

Josiah Davis scored 31 in an overtime victory over Santa Barbara last Thursday, and Larry Hughes II had 32 against Cal Poly on February 5th.

Mahmoud Fofana also had back-to-back 22-point games entering 2026.

This three-headed monster on offense has proven too much for opponents during this streak. Davis, Hughes II, and Fofana have combined for at least 78 points in their last seven contests.

Hughes II leads the team, averaging 18.2 points per game, while shooting 42 percent from beyond the arc.

CSUN’s dramatic run might have been predictable, given that they strung together a seven-game winning streak around the same time last year.

But the Matadors’ bid for an eighth straight win ended against UC San Diego last season, and they faltered down the stretch, losing their opening game in both the Big West tournament and the NIT.

Now, just like last year, they have a chance to host a top team at Premier America Credit Union Arena.

But the outcome remains unwritten.

Head Coach Andy Newman a Proven Winner

Andy Newman has instantly turned California State Northridge into a contender. Before his arrival, the Matadors hadn’t had a winning campaign since 2008-09.

Newman’s fast-paced offense has brought the Matadors to life

He’s also bringing wins to the Valley. CSUN is on pace for 20 dubs this year, having grabbed 22 a season ago, and 19 in his inaugural campaign.

The key to the Matadors’ success this season has been their dynamic offense, which ranks 59th in the nation in points per game.

But this shouldn’t come as a surprise.

Newman promised to bring “an exciting, fast paced, team-oriented style of basketball back to the valley” when he was hired, and to do it “by lighting up the scoreboard and competing for championships.”

He has done just that.

Newman won everywhere he coached before Cal State Northridge, arriving with a 206-98 record.

He was already known within Big West circles after serving as an assistant coach at Cal State Fullerton for 10 years.

Newman also served as interim head coach for the Titans in 2012-13, when CSUF had the best offense in the conference.

He led Cal State Northridge to the First Round of the NIT last year. This season, if they keep winning, the Matadors could find themselves in the NCAA Tournament.

Fonseca and Melo claim Rio Open Doubles Crown

João Fonseca and Marcelo Melo won the doubles title at the Rio Open, delivering a special moment for Brazilian tennis.

Fonseca, 19, is one of the most exciting young players on tour. A former junior world No. 1 and US Open boys’ singles champion, he has been transitioning steadily into the professional ranks, with Rio marking another significant milestone in his early career.

Melo, 42, is a former doubles world No. 1 who has won doubles titles at Roland Garros and Wimbledon. He has spent years competing at the top of the discipline, with multiple Masters 1000 titles and a long-standing presence as one of Brazil’s most successful doubles players.

Benjamin Sesko opens up on not starting under Michael Carrick and work behind the scenes at Man United

Manchester United striker Benjamin Sesko continued his hot streak in front of goal against Everton at the Hill Dickinson Stadium on Monday.

Benjamin Sesko was booed by home supporters as he left the Hill Dickinson Stadium on Monday night, but he did not flinch as he walked towards the team bus. The 22-year-old’s temperament is one of his biggest strengths.

Michael Carrick named Sesko on the bench for a sixth game in a row on Merseyside. Other players of a similar age might have sulked, but the Slovenia international has just got on with his job with no fuss.

Sesko delivered off the bench again to net his sixth goal in seven appearances. He is the most in-form striker in the Premier League, yet hasn’t made a start during Carrick’s interim tenure so far.

The conversation in the build-up to the Everton game was about whether Sesko would get his first start under Carrick, who said, “I get why everyone is making a big deal out of it,” after the 1-0 win.

The calls for Sesko to start this weekend will be deafening. The striker has made himself impossible to ignore, although his response to a question about how it feels not to be starting spoke volumes about his character.

Speaking to reporters after the game, Sesko said: “We are talking, of course, but he [Carrick] believes in me, everyone believes in me. They are getting me ready to start as soon as possible. It’s more about me showing up when it’s important, no matter how many minutes I’m getting, I’m focusing on delivering and trying to help the team secure the wins.”

When asked whether being gradually bedded in had helped him this season, he responded: “Of course. I’m getting settled in the league from game to game. Again, I’m not even thinking about ‘I have to start, I have to start’.

“For me, it’s just whenever the coach decides to put them there or not, I’m just going to be there. If I get the next minutes, five minutes, I’m going to use them and for me it’s just about trying to enjoy and delivering for the team.”

Sesko copped flak earlier in the season from pundits. Gary Neville said he was “miles off it” compared to United’s other summer signings. Ruben Amorim even said he was “struggling a little bit”.

Sources at Carrington believe Sesko might not have seen Neville’s comment, as he is rarely on social media. He prefers reading books at home and watching basketball.

Sesko is embracing the pressure that comes with a £66.4million price tag, and a transfer to the biggest club in England. “For me, the way I look at the pressure, it’s something that, if I want to be a good player, I have to have. I take it as a privilege,” he said.

“It’s something that has [to be there] if you want to play at the highest level, and it’s about accepting it and not really caring about it. For me, it didn’t really affect me [the pressure to score goals this season] and I don’t think for Cunha and Bryan as well.”

Speaking about his relationship with Cunha and Mbeumo, Sesko said: “We are understanding each other. There is a lot on the training field where we are working a lot. It’s also the way Bryan made a pass [for the winning goal] because he saw that I was running, he saw that I wanted that ball.

“And obviously, with his quality to make a perfect pass because it’s also not easy to do that to put it directly there. That’s what it’s about, to have such quality in the team. Obviously, it looks so easy [to finish], but because you have so much time, many things are on your mind, but I chose one corner, and I went fully for that.”

Sesko has made an electric start to 2026, but he was keen to credit Carrick and his coaching staff for the work they have done at Carrington. “Everyone is working for each other and I said many times the coaching staff in general, not just Carrick, but also the others he has beside him are unbelievable,” he said.

“They are working on individuals and you can see that on the pitch. In the end, to win so many games and secure so many points, it’s made out of details and that’s how we get all these points.”

Travis Binnion, formerly the club’s Under-21 manager, has been a big help for Sesko. “After the trainings, it’s work in the box, on the edge of the box, short contacts because in the Premier League you don’t have time,” he explained.

“This is where it’s really helping me, and not just me, but also the other players. I’m really happy that I can work with [Binnion] because he’s helped me a lot.”

Speaking about his mental preparation, he added: “The most you can improve is on the pitch because the ball is, in the end, the thing that has to hit the net, and this is where the most work comes out. Obviously, it’s really important when I arrive home to do some work, which is really important for the mental part.”

A rumour circulated that Sesko had spoken to Dimitar Berbatov about his finishing technique. Sesko said it was not true but that it would be “nice” to speak to him, before crediting the coaching staff again. “They are doing individual work each day, which is really helping me to focus,” he said.

Sesko politely said “thank you so much” when he was congratulated at the beginning of the five-minute chat. “As much as it means to me, it means more to our team to secure the win because it was a really tough game,” he said.

“We were fighting from the start. It was an interesting game, but even though in the end it was quite hard because of the corners, we were fighting and secured the win, which was really, really important.”

Sesko is becoming a key part of this United team – and his best years are ahead of him.

NFL Insider Whispers Vikings QB Could Be Traded

The Minnesota Vikings have publicly stated they will add another quarterback this offseason, likely to pair with 23-year-old J.J. McCarthy in a summer training camp competition, or at least to serve as a top-tier backup. But according to Yahoo Sports’ Charles Robinson, McCarthy could be traded if the right deal presents itself.

It’s rumor territory, yet it connects to Minnesota’s offseason, cap planning, and a desperate QB market.

The report is especially noteworthy as the NFL Combine begins this week, when general managers and head coaches converge on one spot to wheel and deal.

Combine Week Puts the Vikings’ Quarterback Situation under a Microscope

Don’t rule out a McCarthy trade altogether, says Yahoo Sports.

Robinson on McCarthy

Most Vikings fans don’t have McCarthy on the trade block, but Robinson subtly challenged that position on Friday.

With the Combine kicking off Monday, he wrote, “Throw in some young players who might get a call or two just to see if they are available on the trade market, including Buffalo Bills wideout Keon Coleman, Jacksonville Jaguars wideout Brian Thomas Jr., Indianapolis Colts quarterback Anthony Richardson and Minnesota Vikings quarterback J.J. McCarthy. Thomas, Richardson and McCarthy are not expected to be officially on the trade block, but all three could garner some interest and calls.”

“Thomas seems less likely to be dealt with the Jaguars moving Travis Hunter primarily to cornerback. McCarthy won’t be dealt unless the Vikings are presented with a quarterback option that effectively renders any chance of him having a future as moot.”

What’s the Right Deal?

Robinson claimed McCarthy could be jettisoned via trade if his future became “moot.” That’s a high bar, one that suggests the Vikings would be deep-sea fishing for his replacement. It’s rare for a quarterback-whispering head coach like Kevin O’Connell to draft a player in Round 1 and cast him off less than two years later.

Therefore, to trade McCarthy, per the Robinson theory, the incoming quarterback would have to be quite splashy. The list might look like this:

- Joe Burrow

- Justin Herbert

- Lamar Jackson

- Baker Mayfield

And there is no evidence to suggest that any of those teams will trade its quarterback.

Team Control for Up to Three Years

Meanwhile, McCarthy remains under contract with the Vikings through the end of 2028 if Minnesota eventually exercises his fifth-year option. Trading him would bring no major cap relief, so the franchise would only sever ties if it had found something proven and better.

McCarthy struggled in 2025, his second year in the pros, aside from a handful of big moments in clutch spots and a memorable three-game stretch against the Washington Commanders, Dallas Cowboys, and New York Giants, when he played at a Pro Bowl clip against three poor defenses.

It’s too early to give up on McCarthy, unless he’s required to land Burrow, for example.

Aaron Rodgers in Play? Malik Willis?

Robinson was actually full of Vikings quarterback theories.

He noted on Aaron Rodgers’s situation: “The future of Aaron Rodgers will be a pressing question for the Pittsburgh Steelers — at least as it pertains to the organization’s other options and what kind of timeline there would be for a Rodgers decision.”

“It’s possible we exit the combine under the presumption that the Steelers are going to move forward and try to find their future QB, which would shift the Rodgers conversation back to the Vikings, whom he was interested in before landing in Pittsburgh last offseason.”

The rumor mill featured Rodgers to Minnesota front and center last offseason before the 42-year-old signed with the Steelers.

Robinson also mentioned Malik Willis, the league’s top free-agent quarterback, while dropping the Vikings’ name once again.

“The ballpark speculation in the agent community is some kind of two-year deal averaging $30 to $35 million a season with $40 to $45 million guaranteed. That would put Willis in position to go back to the table next offseason and negotiate a longer term deal that tacks on to the end of 2027 and extends his guaranteed money out into a three-year window through the 2028 season,” he wrote.

“There are varying opinions on the numbers and structure, not to mention the potential interest. Willis feels like the first big quarterback domino that has to fall in March to trigger a larger migration. If he were to land in Pittsburgh, that then puts Rodgers — if he still wants to play — onto the market for the Vikings or any other suitors. And once Willis is off the board, the teams that ultimately don’t have him as an option will then have to reassess.”

Willis will turn 27 in May and may be the league’s next big reclamation story, akin to Sam Darnold and Baker Mayfield.

It’s also worth noting that Robinson isn’t a hot-take merchant; if he says McCarthy could be traded for a big fish, that’s a credible assertion.

How Sri Lanka could secure crucial home semi-final advantage

NEW DELHI: Sri Lanka could enjoy a massive home advantage in the ongoing ICC T20 World Cup 2026, with Colombo’s R Premadasa Stadium in line to host their semi-final, but only under specific conditions confirmed by the International Cricket Council.

According to an ESPNcricinfo report on tournament logistics shared with stakeholders after the Super Eight stage was finalised, Semi-final 1 remains a “floating” fixture that could be held in either Colombo or Kolkata. The ICC clarified that Pakistan will automatically play their semi-final in Colombo if they qualify. However, if Pakistan fail to reach the last four and Sri Lanka qualify instead, the island nation will host the semi-final in Colombo, provided their opponent is not India.

This arrangement creates a potentially decisive edge for Sri Lanka, who could play a knockout match in familiar home conditions with crowd support behind them. However, if Sri Lanka end up facing India in the semi-final, the match will not be held in Colombo, as India’s semi-final has been designated for Mumbai unless it is against Pakistan, in which case it shifts to Colombo.

The report further said that if neither Pakistan nor Sri Lanka reach the semi-finals, Kolkata will host Semi-final 1, while Mumbai will stage Semi-final 2. India, if they qualify, will play in Mumbai regardless of opponent, except in the case of a clash with Pakistan.

Also, since Sri Lanka and Pakistan are in the same Super Eight group, they cannot face each other in the semi-finals.

NRR drama peaks: How Team India can still reach T20 World Cup semis

India’s semifinal hopes in the T20 World Cup 2026 now depend not just on winning, but on winning big. After cruising through the group stage unbeaten, including a statement victory over Pakistan, India suffered a major setback in the Super 8s. A crushing 76-run defeat to South Africa has left Suryakumar Yadav’s men under pressure in Group 1. With a net run rate of -3.800, India are well behind West Indies (+5.350) and South Africa (+3.800).

For India, two wins alone may not guarantee qualification. Given their poor NRR, they need emphatic victories to stay in control of their fate.

INDIA QUALIFICATION SCENARIO

Following the heavy loss to South Africa, India face Zimbabwe in Chennai on February 26 before taking on West Indies in Kolkata on March 1.

To remain in serious contention, India must first secure a commanding win over Zimbabwe. A victory by a margin of around 100 runs could play a crucial role in repairing their damaged net run rate.

If India, South Africa and West Indies all finish on four points, a very realistic scenario, NRR will decide the semifinalists. With India currently lagging far behind, they must bridge the gap quickly.

For instance, if India post 220 batting first, they would need to bowl Zimbabwe out for approximately 120 or less to make a significant improvement in NRR. A narrow win could leave them dependent on other results.
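Net run rate is a team’s scoring rate minus its conceding rate, totalled across all its matches. The Python sketch below applies that standard formula under two stated assumptions: overs are entered as decimals, and a side that is bowled out is charged its full 20-over quota, as under ICC playing conditions. The South Africa and India scores are hypothetical placeholders; only the 76-run margin and the 220-versus-120 example come from the article. Because both innings of a completed 20-over match count as 20 overs, any 76-run defeat works out to -3.8, matching India’s quoted -3.800.

```python
# Minimal sketch of the standard net run rate (NRR) formula:
#   NRR = runs scored / overs faced - runs conceded / overs bowled,
# with all four totals summed over every match a team has played.
# Assumptions: overs are decimal (18.3 overs in cricket notation = 18.5),
# and a side that is bowled out is charged its full 20-over quota.
from typing import List, Tuple

# (runs_for, overs_faced, runs_against, overs_bowled) for one match
Match = Tuple[int, float, int, float]


def net_run_rate(matches: List[Match]) -> float:
    """Return a team's NRR across all the given matches."""
    runs_for = sum(m[0] for m in matches)
    overs_faced = sum(m[1] for m in matches)
    runs_against = sum(m[2] for m in matches)
    overs_bowled = sum(m[3] for m in matches)
    return runs_for / overs_faced - runs_against / overs_bowled


# Hypothetical scores: South Africa 188 in 20 overs, India 112 in reply (76-run loss).
vs_south_africa: Match = (112, 20.0, 188, 20.0)
# The article's example: India 220 batting first, Zimbabwe bowled out for 120.
vs_zimbabwe: Match = (220, 20.0, 120, 20.0)

print(round(net_run_rate([vs_south_africa]), 3))               # -3.8
print(round(net_run_rate([vs_south_africa, vs_zimbabwe]), 3))  # 0.6
```

Under these assumptions, a 100-run win of that shape would lift India from -3.8 to +0.6, though South Africa’s and West Indies’ rates will also move with their own results.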

Scenario 1 – India win both matches

If India beat both West Indies and Zimbabwe, they will finish on four points. If South Africa also win all their matches, India and South Africa will both qualify for the semis. If India win both matches and South Africa lose one of theirs, three teams could end up tied on four points, in which case qualification would be decided by net run rate. If India win both their matches and South Africa lose both of theirs, India and West Indies will qualify for the semi-finals.

Scenario 2 – India win one match

If India pull off only one win, they will be eliminated irrespective of the other results in the group.

Remaining Super 8 Fixtures – Group 1

- February 26: South Africa vs West Indies (Ahmedabad)

- February 26: India vs Zimbabwe (Chennai)

- March 1: South Africa vs Zimbabwe (Delhi)

- March 1: India vs West Indies (Kolkata)

For the defending champions, the margin for error has vanished. The road to the semifinals now demands not just victories, but dominance.

California gubernatorial candidate Chad Bianco reveals sports vision for state

A lifelong New York Yankees fan is asking the people of California to make him their next governor.

Riverside County Sheriff Chad Bianco, who grew up in the heyday of the 1970-80s Yankees-Dodgers rivalry, admitted he had mixed emotions when Shohei Ohtani and company beat his childhood team in the 2024 World Series.

“I did [celebrate]. I was sad because I wanted the Yankees to win, but at the time, as a baseball fan I also noticed the Dodgers were a better team. The Dodgers deserved to win and I was very happy to be from the Los Angeles area,” Bianco told Fox News Digital.

Around that same time, Bianco watched a fellow Republican, former Dodgers star Steve Garvey, a staple of the 70s-80s Dodgers-Yankees rivalry, make a run at a U.S. Senate seat in California. Bianco campaigned with Garvey, but Garvey came up well short against Democrat Adam Schiff that year.

Now Bianco, who is currently a frontrunner for the California governorship in many 2026 polls, believes that Democratic leadership since Garvey’s failed run has only strengthened the case for voting Republican in the Golden State.

“We’re in a little bit worse off scenario than we were in 2024,” Bianco said. “Californians are realizing that politics are corrupt, our state government is corrupt, and crime is really out of control.”

And sports in the state haven’t been spared, either.

With the Winter Olympics now over, and the Summer Olympics coming to Los Angeles just over two years from now, anxiety has mounted over whether the city can host the games, due to crime rates, homelessness, damage from the 2025 wildfires, and rising taxes.

And on the youth front, the state faces an ongoing wave of biological male transgender athletes competing in girls’ high school sports, as California leadership has refused to comply with President Donald Trump’s mandate to ensure only female athletes compete in girls’ sports. The state’s refusal has prompted a Department of Justice lawsuit and multiple federal investigations, while dozens of California girls have faced life-changing trauma, with some filing lawsuits of their own.

Bianco thinks he has solutions for both issues.

The 2028 LA Olympics

Speaking as a sheriff, Bianco believes that if Los Angeles were set to host the Olympics this summer, the city in its current state would not be able to pull it off.

“No, I don’t think so,” Bianco said of the city being able to host the games if they occurred this year.

“Everyone’s wondering how they’re going to arrange the Olympics… we don’t have the money to dedicate to this, we don’t have the updated resources to dedicate to this, for transportation or even housing… I think it is absolutely embarrassing… I think the U.S. is going to have an amazing Olympics, but for the city of Los Angeles it’s certainly not a proud moment.”

Bianco pointed to financial mismanagement and alleged fraud in the state government.

Los Angeles continues to have one of the nation’s largest homeless populations, roughly 72,000 people, driven by severe shortages of affordable housing and high rents, per the LA Homeless Services Authority.

Bianco warned of what he expects Democrat leadership to do if it remains in power when the Olympics come around.

“They will go in at the last minute, and they will forcibly remove all of them and it’s not like they remove them, they just force them to the outskirts away from the perimeters of where these events are going to be,” Bianco said. “It’s not good for anyone, it’s not good for those events, it’s not good for those neighborhoods, it’s certainly not good for the people who are homeless.”

Bianco said a more feasible solution would require possibly years of resource reallocation, which he hopes to take on as the state’s next governor.

“We will have a year, possibly a year and a half to two years, to make sure we address the homeless situation, and I guarantee you that’s enough time,” Bianco said.

“It really isn’t homeless, it’s not homes, it’s drug and alcohol addiction, combined with mental health issues. And we have to be honest and we have to start addressing it for what it is. So you eliminate all the money going to the non-profits and NGOs that’s being wasted, abused and funneled back into politics. You stop that immediately.

Riverside County Sheriff Chad Bianco, a Republican candidate for California governor, stops to speak with a woman during a tour of Skid Row in Los Angeles on Tuesday, Jan. 6, 2026. (Sarah Reingewirtz/MediaNews Group/Los Angeles Daily News via Getty Images)

“You put a small portion of that into the drug and alcohol rehab and the mental health rehab, and the centers that treat both. Because right now those don’t exist. I can almost guarantee you that we can address 90% of the homeless issue that we see on the streets within the first year. Within the second year, we can have it all gone.”

There is also the issue of financial strain on athletes who come to compete in the state.

Bianco pointed to the recent Super Bowl in Santa Clara, and the financial burden that hit the players who competed in it simply because they had to pay California taxes.

Seattle Seahawks quarterback Sam Darnold lost approximately $71,000 due to California’s strict “jock tax.” Although he earned a $178,000 winner’s bonus, the week he spent in California for Super Bowl LX triggered roughly $249,000 in state taxes, because the tax applies to prorated earnings from his three-year, $105 million contract.

For the Olympic athletes coming to the city in 2028, especially Americans, many of whom make far less than the average NFL player, Bianco worries that the state’s current tax system could put them at a financial disadvantage.

“It’s going to seriously affect them with the cost of living here,” Bianco said. “They don’t make a lot of money… it’s astronomically more expensive than any place across the country, so it’s going to be detrimental for those people.”

Bianco has proposed eliminating the state income tax, intending to replace lost revenue with income from oil production. He has also stated that as governor, he would eliminate the gas tax and oppose a “mileage tax.”

“Taxes are hurting everyone,” Bianco said.

Trans athletes in girls’ sports

California has been the nation’s biggest hotbed for high school and college scandals involving biological male trans athletes competing in girls’ and women’s sports.

Current California Gov. Gavin Newsom has said he believes males in female sports is “deeply unfair” but hasn’t taken any steps to address it. Newsom’s office provided a statement to Fox News Digital in September, suggesting the issue is beyond his control and responsibility.

“For the law to change, the legislature would need to send the Governor a bill. They have not,” part of the statement read.

Bianco said Newsom’s office is not telling the truth.

“Every time he opens his mouth he’s not telling the truth. He’s telling his version of what he wants you to believe… The reality is the governor is the top executive officer in this entire state and he sets the rules,” Bianco said.

“That’s the governor lying to push the blame onto somebody else because he doesn’t want to be held responsible for what’s happening in our schools and our girls, because he wants to be president, and he knows the majority of the country is never ever ever going to vote for him knowing that he won’t stop this, so he’s blaming someone else.”

Bianco said he would use “force” as governor to ensure that girls’ sports are protected.

“You force people to not,” he said of how to handle schools letting males in girls’ sports. “In our high schools and in our school system, if they are going to allow it, we will not fund that. We will not fund the school, we will not provide them with their money.”

But preventing the issue from persisting is only half the battle. The fact is, the issue has persisted in California now for several years, and the state and many residents are dealing with the aftermath.

Young male children in California have even been transitioned at schools, without their parents’ permission, and later placed on girls’ sports teams and in their locker rooms.


Now, the state faces a lawsuit from the DOJ over its policy, while many schools face individualized lawsuits for related incidents.

Bianco believes he could “easily” settle the DOJ lawsuit simply by complying with Trump’s mandate.

But the individual incidents may require more steps, according to Bianco.

In Bianco’s home county of Riverside, two separate lawsuits have been filed against school districts.

A major state-funded university, San Jose State University, has been found by the U.S. Department of Education to have violated Title IX in its handling of a transgender volleyball player from 2022-24, and faces a lawsuit from former athletes and a former coach over the same issue.

Bianco believes the young women who have been affected deserve financial compensation, both from the schools and directly from the state.

“Some [girls] have been seriously injured, and some were just emotionally traumatized. The schools should be paying for that. The state government should be paying for that,” Bianco said. “Our civil process allows for monetary remedies for situations like this, and they should be getting tons of money, because they have seriously been victimized.

“There certainly has to be those arrangements made for those lawsuits, those girls that are suing… you have to settle it… or you’re going to pay big money. So they are going to get money out of this and they should. They were wronged, they were deeply wronged.”

Under the current system, thousands of California school employees are legally required to be complicit not only in the system that allows trans athletes in girls’ sports, but also in the system that allows males to gender transition without their parents’ consent or knowledge.

Some school employees have been fired for refusing to be complicit.

Bianco has a message for all school employees about how to handle this issue. He encourages them to risk their jobs in the short term by refusing to comply with state laws.

“Stand up and do the right thing,” Bianco said to the state’s school employees. “Thank God we have teachers that are standing up for that, and they’re doing the right thing, and they’re absolutely refusing, and they’re being fired. Take the badge of honor. Because then you sue, like these teachers are doing it, and now we’re finding that they’re winning…

“Your job as an adult is to protect our kids.”

Bianco also warned of consequences for school employees who do comply if he becomes governor.


“Absolutely,” Bianco said when asked if he would support consequences to school employees who are complying with the state law on trans athletes in girls’ sports and gender transitions for minors.

“Elected officials are only afraid of one thing, and that’s not getting elected again, and when they know they’re not going to get elected again because they’re harming our girls or they’re not protecting our kids, they’re going to finally be forced to do the right thing, and we’ll make sure these changes are taken care of.”



Vikings Defender Hires “The Shark” for Free Agency

NFL agent Drew Rosenhaus is known for cashing in big on behalf of his clients, and Minnesota Vikings linebacker Ivan Pace Jr. has hired him, with free agency two weeks away. Rosenhaus’s nickname is “The Shark,” so Pace Jr. has quite the asset on his side for March.

Pace’s move to Rosenhaus adds juice to his RFA outlook and keeps Minnesota’s plans worth monitoring.

No one is sure whether Pace Jr. will be back in Minnesota, but the Rosenhaus hire may signal a new chapter.

Reading the Tea Leaves on Pace’s New Representation

It’s another little hint for free agency.

Pace Jr. Hires Rosenhaus

Rosenhaus’s agency kept it pretty straightforward late last week, tweeting, “Welcome to the Family, Ivan Pace Jr.”


Rosenhaus also notably announced relationships with Vikings free-agent safety Tavierre Thomas and former Vikings defender Reddy Steward, who played for the Dallas Cowboys in 2025.

A Future Totally Up in the Air

Pace Jr.’s diminished role was already apparent before the 2026 offseason.

After promising seasons in 2023 (his rookie year) and 2024, Pace saw his playing time decrease in 2025 as Eric Wilson solidified his position. Pace Jr.’s early-season missed tackles accelerated the shift, and Wilson’s consistent performance kept him atop the depth chart.

The decline came at an unfortunate time for Pace. After starting 27 games over his first two seasons (2023-2024) and earning a strong 77.1 PFF grade as a rookie, his performance dipped in 2025, resulting in a 42.3 grade and hindering his chances of an early contract extension.

As a restricted free agent, Pace remains under the Vikings’ control. However, the team’s defensive success with Wilson in a larger role provides them with options regarding Pace’s future.

Pace will undoubtedly seek to regain a more prominent role. Minnesota keeps the flexibility to bring him back should its linebacker plans change, and the coming weeks should clarify whether his reduced role will persist.

Our Kyle Joudry noted on Pace Jr. last month, “Ivan Pace Jr. is capable of being an attacking ‘backer on defense and a nice part of special teams coverage. He may need to pursue those abilities with a different team. The undrafted talent quickly earned a promotion in the Twin Cities during his rookie season of 2023.”

“Seeing him get sent on blitzes aplenty in Minnesota made sense from within a Brian Flores defense with a deficit of pass rushers (and talent more broadly). The 2024 and 2025 seasons, though, have seen reinforcements arrive for Coach Flores. The tactician calling the shots on defense minimized Pace’s role, seemingly tipping the team’s hand in the process. Look for the RFA to get moved out in a trade. A Day 3 draft selection should be the expectation.”

Possible Pace Jr. Destinations

Two weeks ago, we speculated on potential landing spots for Pace Jr. in 2026, identifying teams that align with his skill set and estimating his market value.

Washington is a strong contender because of its familiarity with him. The Commanders’ new defensive coordinator, Daronte Jones, previously worked with Pace Jr. in Minnesota and understands his capabilities, a connection that could be significant when considering restricted free agents in their prime.

New England is another logical fit, largely because of its coaching staff. Patriots outside linebackers coach Mike Smith held the same position in Minnesota when Pace Jr. was beginning his career, giving him insight into how Pace Jr. integrates into a defense.

Jacksonville, Dallas, and Cincinnati are also potential destinations. Cincinnati has a hometown advantage that could influence negotiations if the Bengals decide to bolster their linebacker depth with a local dude.

VikingsWire‘s Andrew Harbaugh on Pace Jr.’s free agency: “Pace Jr. saw his role diminish in 2025 with the emergence of Eric Wilson in the linebacker unit. Pace still has some juice as a pass rusher if they decide to go that route and bring him back.”

“It is hard to imagine him coming back to be a part of the linebacker group after seeing how he was in coverage and run support, but the pass-rushing juice is certainly there. A one-year deal would make sense to see what he can do, but time will tell on that front.”

Other Rosenhaus Clients

Rosenhaus’s client list is huge; let’s get that out of the way. And among names that Vikings fans might recognize, here’s a peek:

- Abdul Carter

- Jalen Carter

- Nico Collins

- Christian Darrisaw

- Jonathan Greenard

- Javon Hargrave

- Aaron Jones

- Josh Metellus

- Chris Olave

- Josh Sweat

- Andrew Van Ginkel

- Kyren Williams

Rosenhaus also notably represented Rob Gronkowski and Terrell Owens in the past.

Pace Jr.’s free agency will heat up in two weeks when “legal tampering” gets underway on March 9th.


Best free bets UK & betting sign up offers

Free bets are available across UK betting sites for new and existing customers, though value varies widely depending on odds, expiry and restrictions.

At The Independent, our experts have done the hard work to find the best free bet offers through our impartial, detailed analysis, with the aim of providing users with relevant information on the best betting sign up offers that include free bets.

Our evaluations focus on accessibility, flexibility, fairness, key terms and conditions and availability, ensuring our recommendations are reliable and offer low minimum stakes, plenty of options for using free bets and clear T&Cs.

Every site that we recommend is licensed and regulated by the UKGC too, ensuring that users can rest assured that they are using a trusted site when claiming free bet offers.

Free bets can be claimed from betting sites by new and existing customers, allowing bettors to bet without risking their own money.

Terms and conditions are attached to free bets and betting offers. Usually, bettors must deposit or stake a qualifying amount to claim a free bet, which can be used on selected sports and events.

Wagering requirements can be attached to free bet offers, which means winnings must be played through a set number of times before you can withdraw funds as cash, but this is more common on casino sites.

Types of free bets

- Bet & Get: The most common betting sign-up offer: bet a minimum amount, meet the T&Cs and receive a free bet bonus.

- Moneyback specials: Typically an ongoing free bet promo, whereby punters get their money back as a free bet if there’s an underwhelming outcome such as a 0-0 draw.

- Free bet clubs: Loyalty reward schemes aimed at those who bet regularly with one bookmaker in particular.

- Enhanced odds with free bet winnings: A bookmaker offers a wildly inflated price on a popular market, such as 40/1 on Man City to win, with winnings paid out as free bets.

- No deposit free bets: These are rare, but can be obtained via free-to-play prediction games on several online bookmakers.

Free bets are straightforward to use for customers, although the way they can be deployed may differ depending on your chosen bookmaker.

Usually, betting sites will have a box or toggle on your bet slip that users can tick or move to confirm free bets on their bet.

An important note: if your bet wins, you keep only the profit; the free bet stake itself isn’t returned.

Some bookmakers require you to use free bets in precise portions, such as £5 or £10, while others will allow you to bet with amounts of your choosing until you’ve used up your balance.

| Betting Site | Offer Type | Min. Bet | Free Bet Value | Best For |
|---|---|---|---|---|
| Ladbrokes | Bet & Get | £5 | £30 | Low-stake sign up value |
| Betano | Bet & Get | £10 | £50 | Football free bets |
| Tote | Bet & Get | £10 | £30 | Horse racing free bets |
| Bet365 | Moneyback Special | £10 | £10 | Ongoing money-back as free bet offers |
| Virgin Bet | Free Bet Club | £20 | £5 weekly | Ongoing rewards for regular bettors |

Here are the standout betting offers on the market, broken down by category.

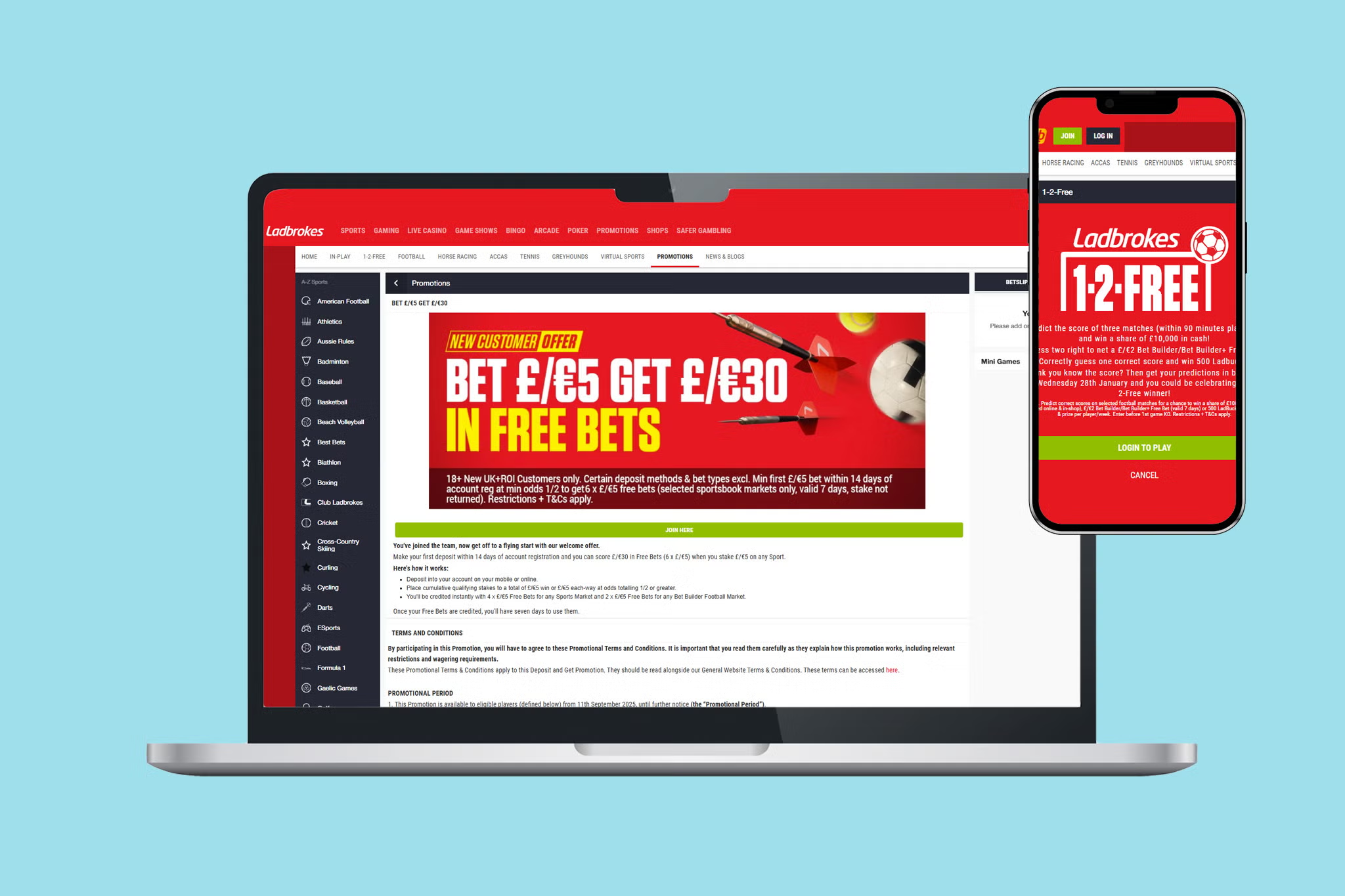

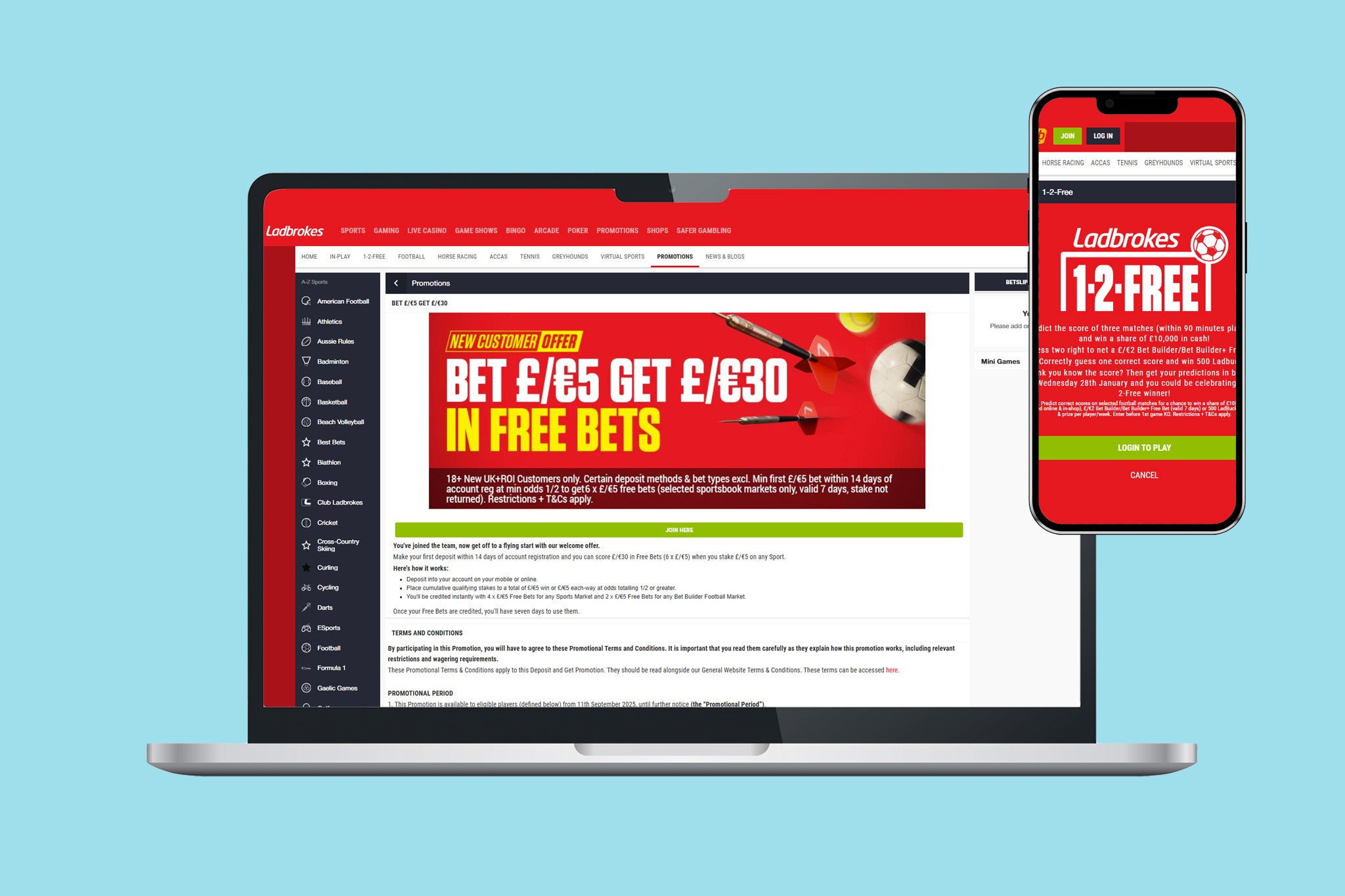

Ladbrokes – Best low-stake free bet offer

The Ladbrokes sign up offer provides the best value betting sign up offer for new customers, with users able to unlock £30 in free bets from just a £5 stake.

Users only need to place a qualifying wager at odds of 1/2 or greater, which is one of the lowest thresholds for welcome offers online, with the added freedom of being able to bet on any sport.

There’s plenty of flexibility in where to place your qualifying bet, on both sports and bet types. There are also 14 days to secure this betting offer after signing up.

Once your qualifying bet has settled, Ladbrokes pay out £30 in free bets in 6 x £5 installments within 24 hours.

Users will also find flexibility regarding these free bets, with 4 x £5 free bets available to use on any sport, while a further 2 x £5 free bets are reserved for football bet builders.

We rate it as the best £5 deposit betting site on the market, with a 600% return on your deposit and bet. For first-time bettors there are few betting sign up offers online that can match this value.

Betano – Best football free bets

Betano is the best option for punters looking for free bets at leading football betting sites, with the Betano sign up offer providing £50 in free bets with an initial wager of just £10 (an excellent return for a low entry stake).

The offer includes a straightforward qualifying bet with minimum odds of evens and no accumulator required, while the 30-day expiry on free bets gives users flexibility, with plenty of time to use bonuses.

This new betting site provides great variety on its football markets – from match odds and BTTS to goalscorers, correct scores and much more – and users will also find regular offers and promotions once signed up to the site.

Tote – Best for horse racing free bets

The Tote sign up offer is an excellent choice for horse racing fans, with a £10 bet returning £30 in racing value – a strong 3x reward for such a low qualifying stake.

The offer provides £20 in Tote Credit for horse racing – which is ideal for pools, exotics, and Tote-only markets – as well as £10 in free bets to use on the sportsbook, offering plenty of flexibility for new customers.

The qualifying bet simply needs to be a £10 wager on any sport (with some exclusions, though win, place, or pool bets all count) at odds of evens or greater, and winnings are fully withdrawable, with Tote Credit profits available to be cashed out, keeping risk low.

Tote also guarantees that payouts are paid at SP or better, adding extra upside for horse racing bettors and making Tote the best choice among horse racing betting sites.

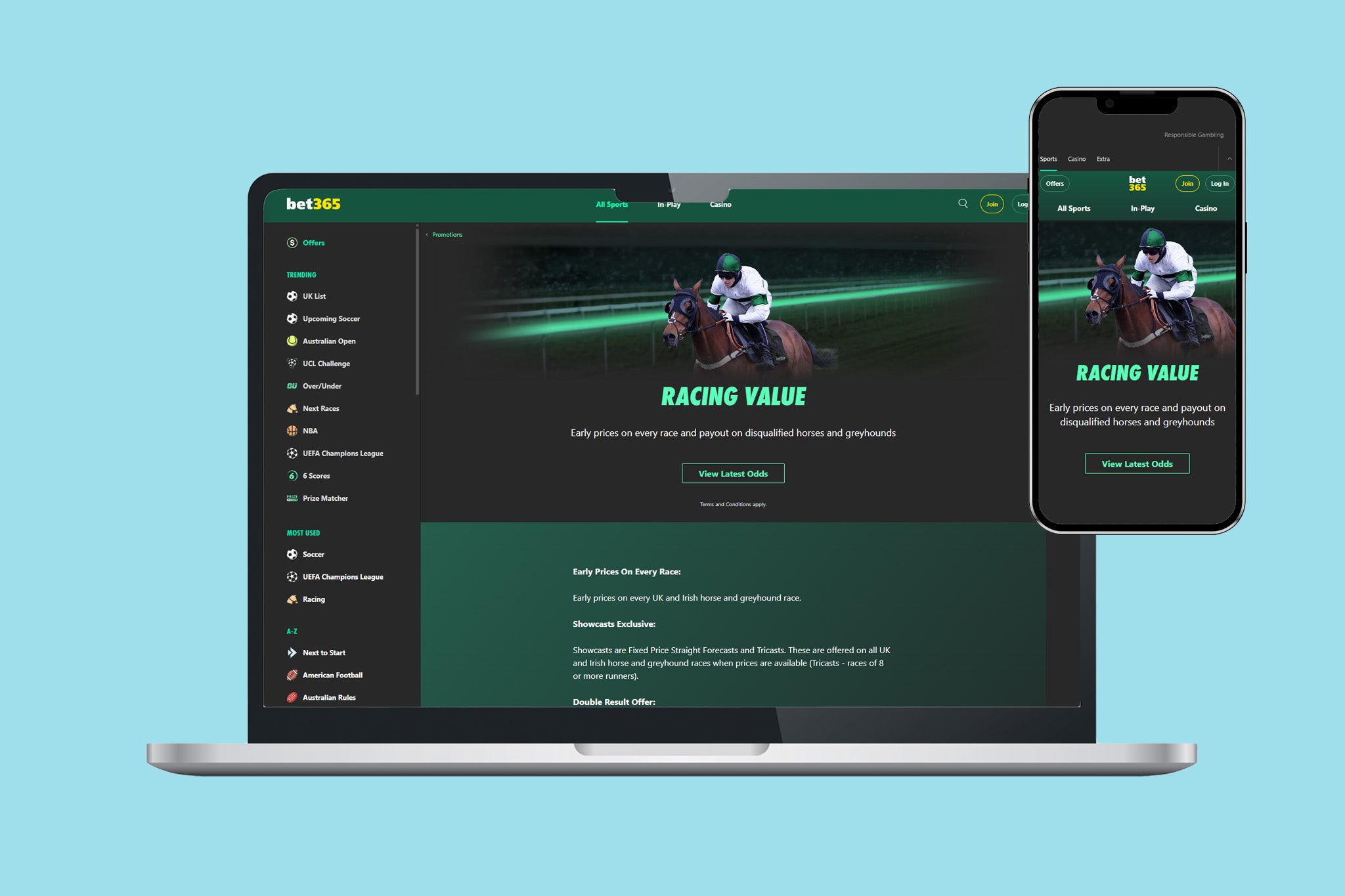

Bet365 – Best for moneyback as a free bet

Bet365 have recently launched their moneyback specials across a number of sports, including football and horse racing, as well as a range of major events.

Customers can wager up to £10 and will receive their stake back in free bets if their bet loses. The system is simple for claiming the betting offer, with users ticking the ‘Money Back As Free Bets’ box on their bet slip to qualify.

Free bets are usually credited within a matter of hours, but it can take up to 24 hours. Free bet credits can be used anywhere on one of the best betting sites in the business with no limit on where and how your credits can be spent.

Bet365 moneyback specials are most common on football, notably the Premier League, where the week’s highlight games, typically on Saturday and Sunday evenings, are covered by a moneyback special, although Champions League and Europa League midweek games have also featured this betting offer.

The nuts and bolts of the requirements are as follows. Customers must place a qualifying bet builder on the eligible game or event, with bet builders needing only evens (2.0) or higher odds.

Bet365 also attaches its Sub Play On feature to football bet builders in conjunction with the moneyback special, which keeps bets alive even if a player is subbed.

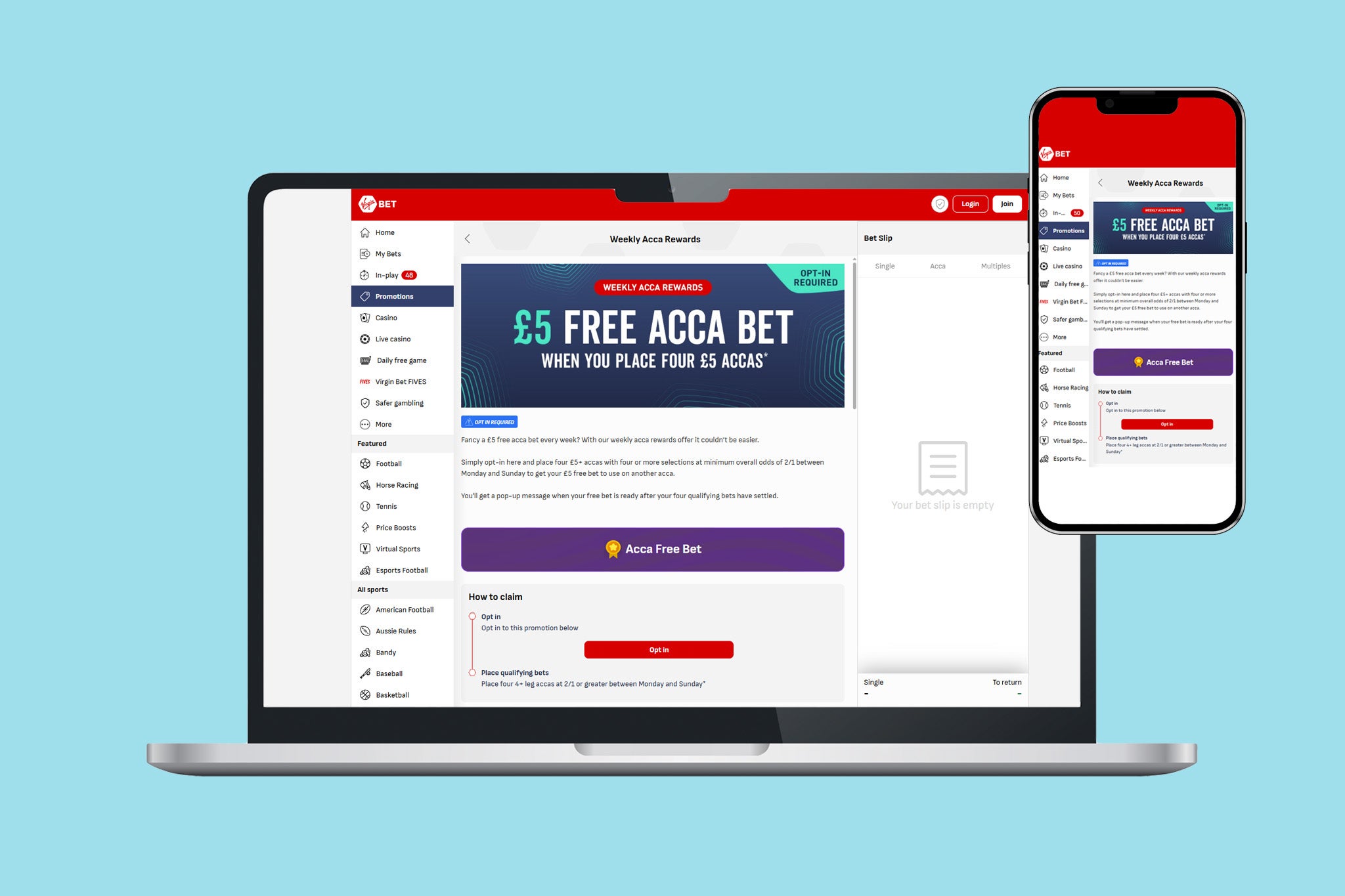

Virgin Bet – Best free bet club

Existing customers can use the weekly Virgin Bet rewards club to claim £5 in free bets for placing qualifying accas.

Users only need to opt in to the promotion before placing four £5 accas with odds of 2/1 or greater between Monday and Sunday, making these qualifying stakes smaller than most rival free bet clubs.

Virgin Bet also have an extremely low minimum odds requirement of 1/100 for using your free bet.

You’re spoiled for choice, and better yet, there are no restrictions on where you can use your bonus.

In addition, the seven-day expiry gives plenty of time to use the bonus, meaning the free bet club offers huge flexibility.

After the qualifying bets have settled, Virgin Bet pays the £5 free acca bet into your account; to activate it, simply use the toggle on your bet slip to use up the credits.

Here are the latest betting sign up offers and free bets available for today’s major sporting events.

| Bet365: Moneyback Special Champions League |
|---|
| Bet365 are offering a moneyback special where customers can claim up to £10 in free bets for losing Champions League bet builders on Tuesday. Customers need only place a bet builder on any Champions League game on Tuesday starting at 8pm GMT, with odds of evens or greater, and tick the ‘Money Back as Free Bets’ box on their bet slip to qualify for the free bet offer. If your bet loses, you’ll receive your stake back, up to £10, in free bets to use on the sportsbook. Free bets are credited within 24 hours and are active for seven days. |

| NetBet: Champions League Free Bet Builder |
|---|
| NetBet are running a free bet offer for customers that place a qualifying Champions League bet builder this week. To qualify, customers must create their own Champions League bet builder worth £10 on any game this week with odds of 3/1 or greater and three or more selections before kick-off. Once your qualifying wager has been confirmed, you’ll receive a £5 free bet to use on the sportsbook. Free bets are active for seven days before expiry. |

| Unibet: NBA Bet Builder Refund |
|---|
| Unibet are offering up to £10 in free bets to customers whose NBA bet builders lose. Bettors must place a minimum £1 bet builder on NBA games with three or more selections and odds of evens or greater, and select the Bet Builder Refund box on the bet slip. If your selection loses, you’ll receive your stake back, up to £10, in free bets within 24 hours of your qualifying wager settling. Free bets can be used across the sportsbook and are active for up to seven days. |

Below, we’ve provided some detail on common traps that users can fall into when claiming free bets:

Stake not returned on free bets

Not all free bet offers return the original stake if your bet wins. For example, a £10 free bet at 3/1 pays £30 profit, not £40; always factor this in when comparing headline free bet amounts.
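To make the stake-not-returned arithmetic concrete, here is a minimal Python sketch comparing what a winning cash bet and a winning free bet pay back at the same fractional odds. The function names are hypothetical, "evens" is treated as 1/1, and the sketch assumes the free bet is stake-not-returned, which is the usual convention but should always be checked against each bookmaker’s T&Cs.

```python
from fractions import Fraction


def parse_fractional(odds: str) -> Fraction:
    """Convert fractional odds such as '3/1', '1/2' or 'evens' into a Fraction."""
    if odds.strip().lower() == "evens":
        return Fraction(1, 1)  # evens is the same as 1/1
    numerator, denominator = odds.split("/")
    return Fraction(int(numerator), int(denominator))


def cash_bet_return(stake: float, odds: str) -> float:
    """Total paid on a winning cash bet: the profit plus the original stake."""
    return float(stake * (1 + parse_fractional(odds)))


def free_bet_return(stake: float, odds: str) -> float:
    """Total paid on a winning stake-not-returned free bet: the profit only."""
    return float(stake * parse_fractional(odds))


if __name__ == "__main__":
    # The example from the text: £10 at 3/1.
    print(f"Cash bet: £{cash_bet_return(10, '3/1'):.2f}")  # Cash bet: £40.00
    print(f"Free bet: £{free_bet_return(10, '3/1'):.2f}")  # Free bet: £30.00
```

The gap between the two figures is always the stake itself, which is worth bearing in mind when comparing headline free bet amounts.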

Short expiry windows

Free bets usually expire within 5-7 days of being credited, and occasionally less. Unused free bets are removed automatically once they expire, so casual or infrequent bettors can lose value this way.

Remember to always check the expiry date as soon as the free bet is added.

Bet builder or market restrictions

Some free bets are limited to certain types of use. For example, some can only be used on football bet builders or accumulators, or on specific sports, leagues or events. These restrictions reduce flexibility and can increase risk.

Bet builder-only free bets often require multiple selections to win, which means long odds, and the same is true of accas. Remember to check eligible markets before placing your qualifying bet.

Minimum odds requirements

Betting offers may require minimum odds on either the qualifying bet or the free bet itself, and sometimes both. Higher odds thresholds can push bettors toward riskier selections, while a smaller free bet with low odds requirements can offer better value.

Cash-Out and In-Play exclusions

Remember that cashing out a qualifying bet often voids eligibility for the offer. Some free bets also cannot be used on in-play markets.

These exclusions are commonly hidden in the T&Cs, so avoid cashing out unless you’re sure it won’t affect the promotion.

Wagering requirements and bonus conditions

Wagering is uncommon on free bets, but it is not unheard of. Some promotions attach extra conditions to winnings or follow-on bonuses instead.

Offers requiring winnings to be wagered multiple times reduce real value, while simpler “bet and get” free bets are usually the safest option.

How The Independent rates and reviews free bets

Before a bookmaker makes our list of free bet offers, it must meet key criteria to ensure a high-quality betting experience.

1. Licensing

Only sites with a valid UK Gambling Commission (UKGC) licence are considered on our list of recommended operators.

The UKGC ensures fair play and consumer protection, working alongside independent testing agencies like eCOGRA. If a bookmaker isn’t regulated, it’s not safe – anyone can verify a licence via the UKGC register.

2. Security

Every bookmaker we recommend must implement high-quality security measures such as SSL encryption and two-factor authentication to protect customer data.

3. Reputation

Reputation also plays a role – established brands like Betfred, William Hill and Bet365 consistently rank highly with us, but we also highlight new, reputable operators such as BetMGM when their free bet offers meet our expectations.

4. Mobile

With most bets now placed on phones and tablets, mobile betting functionality is essential.

Bookmakers with dedicated betting apps that mirror the desktop experience are given preference, and we also consider user app reviews from the Apple and Google Play stores.

5. Experience

The customer experience is equally crucial – we rigorously test bookmaker support channels, favouring those that provide fast, effective resolutions.

Ultimately, our rankings focus on the quality of the free bet offers, but we also take into account matters including odds restrictions, timeframe to both unlock free bet offers and use your bonus funds, wagering requirements and available payment methods.

6. Value

Operators that provide valuable betting sign up offers, competitive terms, and ongoing free bet promotions for returning customers get the highest ratings.

| Why trust us? |
|---|
| Chris Wilson is a betting content producer and sports reporter who has been working at The Independent since 2023. He writes betting tips across a range of sporting events as well as reviewing dozens of betting sites and casino sites across the UK. Chris has extensively tested and reviewed offers from established operators and new betting sites to find the best free bet offers for readers of The Independent. Responsible gambling is always at the forefront of his research, ensuring customers have a fair and secure experience claiming and using betting offers online. |

If you decide to engage with any of the online betting sites highlighted on this page, remember to gamble responsibly, even when using free bets and betting sign up offers.

When betting, always assume you’ll lose and therefore, only bet what you can afford to lose. Even free bets still involve a level of risk.

The same applies if you’re using new casino sites, slot sites, poker sites or any other form of gambling.

Make sure you use the responsible gambling tools offered by betting companies such as deposit limits, reality checks, loss limits and time outs. These can stop gambling from getting out of hand.

If you have gambling-related concerns, then seek independent help. There are several UK charities and institutions that offer support, advice and information, with a few listed below:

Can you withdraw free bets?

No, it is not possible to withdraw a free bet. It must be used according to the terms and conditions of the free bet offer or it will be forfeited.

Can you cash out free bets?

In most cases, bookmakers will not allow punters to cash out a free bet before the bet has run its course, so you will likely have to wait for your bet to settle before receiving any winnings.

Do Bet365 give free bets?

Yes, Bet365 are one of the best betting sites for free bets. They have a bet £10 get £30 welcome offer, and several ongoing free bet promotions under their ‘offers’ tab.

What does money back in free bets mean?

This means you can get a refund on your stake, but not as withdrawable cash, only as a free bet, which you then have to use on a different bet.

What betting sites give free bets without deposit?

Few bookmakers hand out free bets for nothing, but you can earn free bets by entering free-to-play prediction games on Bet365, BetVictor, NetBet, Betway, Betfred, Ladbrokes, Coral and BetMGM.

We may earn commission from some of the links in this article, but we never allow this to influence our content. This revenue helps to fund journalism across The Independent.
