Crypto World

Bitcoin 2026 ETF Sell-Off Purifies the BTC Bull Case, Analysis

Bitcoin (CRYPTO: BTC) stands at a turning point as institutional participation deepens and exchange-traded products reshape the trajectory of the largest crypto asset. Eric Jackson, founder of EMJ Capital, describes a coming wave of “purification” in which long-horizon capital becomes a more persistent buyer, even as price momentum remains tethered to ETF flows. Recent weeks have featured persistent net outflows from U.S. spot BTC ETFs, reinforcing a bearish tilt in the near term, yet Jackson argues that Bitcoin is not failing as an asset so much as changing its owners and its catalysts. The market’s attention has shifted to the way Bitcoin interacts with broader markets, particularly through the lens of large equity ETFs and the evolving holdings of institutional investors.

Key takeaways

- Bitcoin has evolved into a high-beta tech position driven by ETF structures and institutional participation, with price dynamics increasingly echoing tech equities.

- Despite ongoing net outflows from U.S. spot BTC ETFs, the prevailing view is that the flow pattern may shift as longer-term institutional buyers re-emerge as meaningful holders.

- Stablecoin supply on exchanges needs to recover to counter prevailing bearish momentum and inject fresh liquidity into the market.

- Bitcoin’s price moves are closely tied to the performance of large ETFs like IGV (EXCHANGE: IGV), complicating the narrative that BTC is merely a store of value.

- The next wave of buyers could come from sovereign wealth funds, corporate treasuries, and other patient capital that plans to hold BTC for decades instead of quarters.

Tickers mentioned: $BTC, $IGV, $IBIT

Sentiment: Neutral

Price impact: Negative. BTC dipped below $63,000 amid ETF outflows.

Market context: The story sits at the intersection of ETF-driven liquidity, the risk-on attitude of macro markets, and the pursuit of longer-term capital that could redefine Bitcoin’s role beyond a short-term driver of price action.

Why it matters

The core argument explored by Jackson is that the current ETF environment is not a repudiation of Bitcoin’s thesis but a reconfiguration of who owns BTC and why. He notes that Bitcoin’s recent price action has been highly reactive to the behavior of large tech-focused baskets rather than gold-like stability, underscoring a shift toward a “high-beta tech position.” This is not a condemnation of Bitcoin as an asset; it highlights how ETF architecture can amplify or dampen moves depending on the flow dynamics of large holders.

In contrast to 2021’s retail-driven exuberance, this cycle has institutions acting as the marginal buyers, with retail money gravitating toward other tech equities. The outcome, Jackson argues, could be a new equilibrium in which long-duration capital, less prone to rapid rebalancing, steps in as a stabilizing influence over time. This shift is underscored by the fact that BlackRock, the largest spot BTC ETF provider, operates IBIT (EXCHANGE: IBIT), a vehicle that reframes who actually owns BTC and how its supply is interpreted in the broader market. In his words, “IBIT changed who owns Bitcoin.”

“BTC didn’t fail as an asset. It succeeded as an ETF. And that’s the problem.”

The analysis also points to a broader ecosystem dynamic: as exchange-traded products accumulate assets, their flows can become a dominant price driver, even if the asset itself remains in a longer-term growth trajectory. Jackson emphasizes that the true test is not immediate price action but the durability of new ownership patterns—whether sovereign wealth funds, corporate treasuries, and patient capital will embrace BTC as a decades-long holding rather than a quarterly rebalancing instrument. The evolution toward such ownership could act as a counterweight to cyclical pressures and help Bitcoin resist the pull of any single macro narrative.

Market data cited in the commentary show a continued pattern of ETF outflows in the U.S. spot market, with sector-wide momentum often tied to the fate of the IGV (EXCHANGE: IGV), the BlackRock-run tech software ETF that remains a barometer for Bitcoin’s near-term price direction. Jackson notes a stark relationship: when IGV sells off, BTC tends to slide in tandem. This linkage reinforces the view that Bitcoin, for now, functions more as a risk-on tech proxy than as a pure store of value, a reality that could persist until a broader base of durable, long-horizon buyers emerges.
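One way to check the IGV-to-BTC linkage described above is to compare daily returns of the two series. The sketch below is a minimal illustration, not Jackson's method: the function names are hypothetical, and the two price lists are made-up placeholders that a reader would replace with real closing prices.

```python
from math import sqrt


def daily_returns(prices):
    """Simple day-over-day returns: (today - yesterday) / yesterday."""
    return [(b - a) / a for a, b in zip(prices, prices[1:])]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Placeholder closes, NOT market data: substitute real IGV and BTC prices.
igv_closes = [100.0, 98.0, 99.0, 95.0, 96.0]
btc_closes = [65000.0, 63000.0, 63500.0, 60000.0, 61000.0]

corr = pearson(daily_returns(igv_closes), daily_returns(btc_closes))
print(f"return correlation: {corr:.2f}")
```

A value near 1 over a recent window would be consistent with BTC trading as a tech proxy; a sustained drop toward 0 would be one sign of the decoupling the article says to watch for.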

On the bearish side, data from Farside Investors indicate net outflows from US spot BTC ETFs topping the $200 million mark on a single day, reinforcing the delicate balance between supply and demand in the current environment. This outflow backdrop coincides with BTC/USD trading beneath recent support zones and with the market contemplating a potential macro bottom near the $50,000–$60,000 range. Yet the rhetoric around purification—an upgrade in the quality and durability of BTC ownership—offers a counter-narrative: the next phase could bring steadier demand from capital that does not chase quarterly returns but seeks a multi-year thesis aligned with the future of digital assets in institutional portfolios.

For observers, the key question remains: will the bears be proven right in the near term, or will the emergence of longer-duration capital push BTC toward new, steadier footing? Jackson’s framing suggests the latter, arguing that every cycle clears weak hands and paves the way for a more durable, patient class of buyers that can compress volatility over time. The bear case focuses on current price behavior and ETF outflow metrics; the bull case centers on a structural shift in ownership that could re-anchor Bitcoin to a longer horizon rather than a shorter trading horizon.

As the market absorbs this tension, the role of stablecoins and liquidity in exchange ecosystems will be crucial. Jackson highlights a potential bullish trigger in the stabilization and expansion of stablecoin supply on venues where BTC trades, arguing that liquidity depth and cross-asset flows will better support a longer-duration investment thesis. The broader takeaway is not a single catalyst but a sequence of developments: improved ownership dispersion, more patient capital, and a liquidity backdrop capable of supporting larger, more durable bets on BTC’s future.

Ultimately, the narrative is not about abandoning the Bitcoin thesis but about reframing it in the language of institutions and ETFs. If “purification” proves to be a meaningful transition rather than a temporary lull, BTC could transition from a speculative cycle-driven asset to a more mature component of diversified institutional portfolios. That is the arc Jackson envisions: a gradual reweighting of the BTC thesis as the market benefits from a new class of owners who cross asset boundaries and commit to holdings that endure beyond quarterly reporting cycles.

For readers, the implications extend beyond price action. If the trend toward long-horizon ownership takes hold, Bitcoin could see more predictable demand patterns, reduced reliance on fickle retail speculation, and a broader acceptance within traditional investment portfolios. The coming months will be telling as ETF flows, stablecoin dynamics, and the behavior of IGV and IBIT converge to shape Bitcoin’s role in the institutional narrative.

What to watch next

- Watch for the end of IGV-driven selling pressure and any decoupling of BTC price from tech-equities movements.

- Observe whether stablecoin supply resumes growth on major exchanges, potentially altering liquidity dynamics.

- Track net flows into IBIT and other spot BTC ETFs as a gauge of increasing long-term institutional interest.

- Monitor commentary from sovereign wealth funds and corporate treasuries regarding BTC allocations and long-horizon positioning.

- Pay attention to price levels around the $50k–$63k range and any signals from volume that could precede a new phase of demand.

Sources & verification

- Eric Jackson’s X post discussing BTC price strength and the ongoing institutional exodus.

- Spot Bitcoin ETF net flows coverage detailing five weeks of net outflows.

- BlackRock’s IGV tech-software ETF as a proxy for BTC price direction, and the role of IBIT, the iShares Bitcoin Trust.

- Farside Investors’ data on net flows for Bitcoin ETFs.

- Historical references to BTC price behavior on macro timelines and timeline-based targets mentioned in market commentary.

Market reaction and the next phase for Bitcoin

Bitcoin (CRYPTO: BTC) is navigating a landscape where ETF mechanics and institutional involvement increasingly dictate price action, even as longer-horizon capital begins to align with a more durable ownership thesis. From Jackson’s perspective, the current environment is not a failure of Bitcoin’s core premise but a maturation of its ownership structure. He points to the fact that Bitcoin’s popularity as an ETF instrument has transformed who holds it and why, a transformation that could ultimately stabilize demand and reduce the volatility that has characterized the asset in previous cycles. In his framing, the “purification” process refines the Bitcoin thesis by pushing it toward a cohort of buyers capable of maintaining positions across a variety of market regimes.

IGV’s behavior—an influential proxy for tech-sector risk appetite—has underscored the degree to which BTC’s macro environment remains tethered to broader equity flows. The relationship is not a perfect one, but it has become a meaningful driver in days of outsized ETF activity. The linked commentary suggests that if IGV ceases its selling pressure, BTC could benefit from a re-tightening correlation and a broader base of liquidity that supports more stable trading ranges. IBIT, as a cornerstone of BTC exposure within a regulated ETF framework, represents a structural shift in ownership that could cement a longer-term, institutional footprint in the Bitcoin ecosystem.

Despite near-term headwinds, the long arc of this narrative remains optimistic for holders who are patient and disciplined. The prospect of sovereign wealth funds and corporate treasuries adopting BTC as a dedicated, multi-year allocation is the biggest potential inflection point described by Jackson. If realized, this shift would move Bitcoin beyond episodic cycles of price strength tied to fundraising or speculative sentiment, toward a steadier, more resilient accumulation that could redefine Bitcoin’s role in the global financial system over the coming decade. In the near term, traders will watch for liquidity signals, ETF flow trends, and the evolving interaction between BTC and large tech-equity benchmarks as the market slowly prices in a longer horizon reality.

Smarter Web Secures $30M Coinbase Credit to Speed BTC Buys After Fund

The Smarter Web Company PLC, a United Kingdom-listed Bitcoin treasury holder, has secured a $30 million Bitcoin-backed credit facility with Coinbase Credit. The move is designed to provide liquidity to accelerate Bitcoin purchases immediately after equity raises, reducing settlement timing risk in volatile markets. The company underscored that the facility is not intended to be a long-term debt instrument for ongoing BTC purchases. Smarter Web is publicly traded on the London Stock Exchange’s Main Market and also trades on the OTCQB Venture Market in the United States, with Bitcoin described as a core pillar of its treasury strategy and a stated goal to expand its digital asset holdings. The arrangement leverages Bitcoin held in custody with Coinbase as collateral, per a February 24, 2026 filing. See the attached document here: PDF.

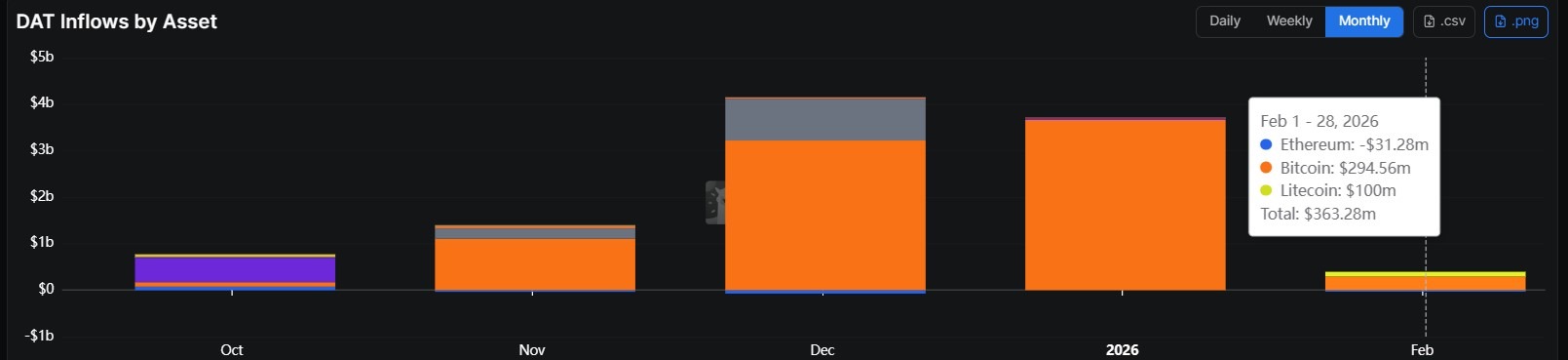

The latest development sits within a broader context of digital asset treasuries (DATs), which posted multi-billion dollar net inflows late in 2025 and into January 2026 before cooling in February. Data tracked by DefiLlama show inflows of $4 billion in December, followed by $3.7 billion in January, and then a marked slowdown to $363 million by February 24, 2026, as risk sentiment evolved. This pattern reflects a climate in which corporate balance sheets continue to scrutinize liquidity tools tied to Bitcoin exposure, even as overall demand for DATs moderates in the short term. See DefiLlama’s digital asset treasuries page for the latest inflow readings: DefiLlama.
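Because the February figure covers only part of the month, comparing monthly totals overstates the slowdown somewhat. A rough sketch that normalizes the DefiLlama figures quoted above to a daily pace follows; it assumes the $363 million total spans February 1 through February 24 (24 days), and the function name is hypothetical.

```python
def daily_pace(total_usd: float, days: int) -> float:
    """Average net inflow per day over the period, in USD."""
    if days <= 0:
        raise ValueError("days must be positive")
    return total_usd / days


# Totals as quoted in the article; day counts are calendar days.
periods = {
    "December 2025": (4.0e9, 31),
    "January 2026": (3.7e9, 31),
    "February 2026 (through Feb. 24)": (363e6, 24),  # assumed 24-day window
}

for label, (total, days) in periods.items():
    print(f"{label}: ${daily_pace(total, days) / 1e6:.1f}M per day")

jan = daily_pace(*periods["January 2026"])
feb = daily_pace(*periods["February 2026 (through Feb. 24)"])
print(f"February pace vs. January: {feb / jan - 1:.0%}")
```

Even on a per-day basis, the February pace is a small fraction of the January pace, so the cooling described in the article is not just an artifact of the partial month.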

According to BitcoinTreasuries.net, Smarter Web’s Bitcoin holdings stood at 2,689 BTC, purchased at an average cost of $112,865 per coin. At current price levels, those holdings are valued at roughly $170 million, implying an unrealized loss of about 44% against the reported cost basis. The company’s disclosures note that, as of September 12, 2025, Smarter Web owned 2,470 BTC and described itself as the UK’s largest corporate Bitcoin holder at that time, signaling ongoing intent to grow its digital asset position. The firm also signaled interest in acquiring competitors to broaden its treasury and to pursue a spot on the FTSE 100 index. The latest holdings data suggest continued accumulation since the September 2025 update. For reference, see BitcoinTreasuries.net’s entry on Smarter Web: Smarter Web Bitcoin treasury.
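The 44% figure can be reproduced from the numbers quoted above. The sketch below is a simple check, assuming the rounded $170 million valuation; the function name is hypothetical, and the implied per-coin price is derived from that rounded value rather than taken from a price feed.

```python
def unrealized_pnl(btc_held, avg_cost_usd, market_value_usd):
    """Return (cost_basis_usd, pnl_fraction) for a treasury position."""
    cost_basis = btc_held * avg_cost_usd
    pnl_fraction = (market_value_usd - cost_basis) / cost_basis
    return cost_basis, pnl_fraction


# Inputs as reported in the article; the market value is a rounded figure.
cost_basis, pnl = unrealized_pnl(2_689, 112_865, 170_000_000)

print(f"cost basis: ${cost_basis / 1e6:.1f}M")       # cost basis: $303.5M
print(f"unrealized P&L: {pnl:.0%}")                   # unrealized P&L: -44%
print(f"implied BTC price: ${170_000_000 / 2_689:,.0f}")
```

The cost basis works out to about $303.5 million, so a $170 million valuation is a loss of roughly 44%, matching the article.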

The financing arrangement is designed to enable Smarter Web to borrow against its existing Bitcoin holdings to move more rapidly after equity raises, with repayment tied to the successful settlement of fundraising proceeds. The structure highlights a trend toward liquidity-centric use of BTC-backed facilities among corporate treasuries, as opposed to financing the ongoing purchase of BTC with new debt. The broader market context includes examples of divergent corporate Bitcoin strategies, where some firms are expanding exposure while others are reducing or liquidating holdings in response to capital needs and strategic shifts. For instance, a recent article discusses Strategy’s continued accumulation, with a 100th BTC purchase bringing its total to 717,722 BTC, while Bitdeer announced the liquidation of its entire Bitcoin treasury in a separate move to raise capital via a convertible debt offering. See: Strategy’s BTC purchases and Bitdeer’s treasury sale.

Diverging corporate Bitcoin strategies

The Smarter Web facility arrives amid a spectrum of corporate approaches to Bitcoin exposure. Some companies continue to add BTC to their treasuries, while others take liquidity-focused steps that involve selling or retooling holdings to support capital raises or strategic initiatives. The broader narrative underscores how treasury management is evolving as firms weigh balance-sheet resilience against market volatility and regulatory considerations.

Why it matters

The move by Smarter Web underscores a practical use-case for BTC-backed debt facilities beyond mere investment. By tying a credit facility to Bitcoin held in custody, the company can fast-track deployment of capital in the wake of equity raises, potentially capturing favorable entry prices and decoupling settlement timing from volatile market conditions. This kind of liquidity tool can help a corporate treasury bridge the gap between fundraising and asset deployment, reducing the risk of price slippage or missed opportunities during short windows after a financing round.

From a market-wide perspective, the development reflects ongoing experimentation with Bitcoin as a corporate treasury instrument. The inflow data from DATs suggests sustained interest in BTC-backed liquidity strategies through late 2025 and early 2026, even as overall momentum moderated in February. As BTC remains a volatile asset class, facilities that offer rapid access to liquidity while preserving long-term exposure can alter how companies plan capital allocation, M&A, and strategic initiatives, especially for firms with large Bitcoin holdings and ambitious growth agendas.

For investors tracking corporate exposure to Bitcoin, Smarter Web’s approach adds to the evidence that Bitcoin is being treated less as a speculative bet and more as a strategic balance-sheet asset. The company’s stated intent to avoid long-term debt financing for BTC purchases aligns with an emphasis on risk management and disciplined capital structure. As more issuers experiment with credit facilities secured by Bitcoin, market participants will watch for how these tools affect debt covenants, impacts on earnings volatility, and the potential signaling effect on other treasuries considering similar structures.

What to watch next

- Smarter Web’s upcoming earnings updates or capital-raising rounds to disclose how the facility is used to accelerate BTC deployments.

- Any changes to the company’s BTC holdings, including new acquisitions or rebalancing that would adjust the cost basis and unrealized gains/losses.

- Regulatory or market developments that could influence the viability or cost of BTC-backed facilities for corporates.

- Further DAT inflow/outflow signals from DefiLlama to gauge ongoing demand for Bitcoin treasury strategies.

- Announcements from related corporate treasuries (e.g., additional purchases, sales, or new liquidity facilities) that could provide context for Smarter Web’s strategy.

Sources & verification

- The strategic credit facility document: https://www.smarterwebcompany.co.uk/smarterweb-co-uk/_img/pdf/news/2026-02-24-strategic-credit-facility.pdf

- Smarter Web Bitcoin treasury data on BitcoinTreasuries.net: https://bitcointreasuries.net/public-companies/the-smarter-web-company-plc

- DefiLlama digital asset treasuries inflow data: https://defillama.com/digital-asset-treasuries

- Strategy BTC purchases article: https://cointelegraph.com/news/strategy-100th-bitcoin-purchase-592-btc

- Bitdeer Bitcoin treasury sale article: https://cointelegraph.com/news/bitdeer-sells-bitcoin-treasury-zero-holdings

Smarter Web taps Coinbase-backed facility to accelerate BTC deployment

In a strategic move to bolster liquidity after equity raises, The Smarter Web Company PLC has secured a $30 million Bitcoin-backed credit facility with Coinbase Credit. The facility is secured against Bitcoin held in custody with Coinbase and enables the company to move capital into Bitcoin (CRYPTO: BTC) immediately when fundraising closes, while reducing settlement timing risk during volatile markets. The company reiterates that the facility is not intended to finance ongoing, long-term BTC purchases, but rather to bridge liquidity between fundraising and deployment. Smarter Web is listed on the London Stock Exchange’s Main Market and trades on the OTCQB Venture Market in the United States; the firm emphasizes Bitcoin as a core component of its treasury strategy and has signaled an ambition to grow its digital asset holdings. The facility is designed to allow borrowing against existing holdings to accelerate post-raise deployment and to repay when fundraising proceeds settle. The filing and related documentation are available here: PDF.

The broader context for this move includes a pattern of positive net inflows into DATs through late 2025 and early 2026, followed by a cooling period in February. DefiLlama’s chart of inflows shows $4 billion in December, $3.7 billion in January, and roughly $363 million through February 24, 2026, indicating a deceleration after a burst of interest. This backdrop helps explain why Smarter Web would pursue a credit facility that unlocks faster deployment in response to equity raises while preserving long-term capital discipline. See DefiLlama’s DAT data for the latest series on inflows: DefiLlama.

Smarter Web’s Bitcoin holdings, tracked by BitcoinTreasuries.net, stood at 2,689 BTC with an average cost of $112,865 per coin, placing the current implied value near $170 million and an approximate unrealized loss of 44%. The company had previously disclosed a September 12, 2025 position of 2,470 BTC and described itself as the UK’s largest corporate Bitcoin holder at the time, with ambitions to acquire rivals to expand its treasury and potentially join the FTSE 100. The latest data suggest continued accumulation since that update, reinforcing the narrative of an aggressively managed digital-asset treasury. See Smarter Web’s BTC page for reference: Smarter Web BTC.

The rationale behind the facility is straightforward: borrow against existing BTC to accelerate deployment after fundraising, and repay once cash from the equity raise settles. It reflects how public companies are testing liquidity rails that preserve Bitcoin exposure while managing timing risk and balance-sheet constraints. The broader corporate landscape shows a mix of strategies, with some firms continuing to add BTC to their treasuries while others pivot to capitalize on capital-raising opportunities or to de-risk their holdings in a dynamically shifting market environment.

Coinbase Stablecoin Revenue Hits $1.35B: Bloomberg Sees 7x Growth Potential

Bloomberg Intelligence forecasts that Coinbase’s stablecoin revenue could jump sevenfold from its current $1.35 billion annual run rate.

Analysts point to a structural shift where stablecoins move beyond crypto trading collateral to become a primary rail for mainstream global payments.

Key Takeaways

- Coinbase generated approximately $1.35 billion in stablecoin revenue last year, accounting for 19% of its total revenue.

- Bloomberg Intelligence projects a potential 7x surge in this figure as regulatory frameworks drive payment adoption.

- The expansion hinges on the codified GENIUS Act, merchant integration via Stripe, and volume growth on the Base network.

Why Bloomberg Sees a Sevenfold Surge in Coinbase Stablecoin Revenue

Bloomberg Intelligence analysts, including Paul Gulberg, argue that the market is underestimating the utility phase of the stablecoin lifecycle.

While Coinbase reported $1.35 billion in stablecoin revenue for 2025, roughly 19% of its total top line, Bloomberg models suggest this figure is merely a baseline.

The forecast arrives despite Coinbase reporting a net loss of $667 million in Q4 2025. The exchange’s revenue share agreement with Circle, the issuer of USDC, remains a bright spot, generating $364 million in the fourth quarter alone.

Bloomberg’s 7x multiple assumes that as interest rates stabilize, the sheer velocity of payment transactions will eclipse interest income as the primary revenue driver.

This thesis aligns with broader market data showing stablecoin transaction volumes hitting $33 trillion in 2025.

With USDC accounting for $18.3 trillion of that flow, the asset has already begun to decouple from pure crypto trading volumes.

The scale is big enough that the traditional finance sector can no longer ignore the fee generation potential.
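The figures in this section imply a few numbers the article does not state directly. The following back-of-the-envelope sketch derives them, treating the quoted 19% share, the 7x multiple, and the volume totals as exact; variable names are hypothetical and the results are only as precise as those rounded inputs.

```python
# Inputs quoted in the article (USD).
stablecoin_revenue = 1.35e9        # 2025 stablecoin revenue
share_of_total = 0.19              # stablecoin share of total revenue
growth_multiple = 7                # Bloomberg Intelligence scenario
total_stablecoin_volume = 33e12    # 2025 stablecoin transaction volume
usdc_volume = 18.3e12              # portion attributed to USDC

# Total revenue implied by "$1.35B is roughly 19% of the top line".
implied_total_revenue = stablecoin_revenue / share_of_total

# Stablecoin revenue if the sevenfold scenario plays out.
scenario_revenue = stablecoin_revenue * growth_multiple

# USDC's share of overall stablecoin transaction volume.
usdc_share = usdc_volume / total_stablecoin_volume

print(f"implied 2025 total revenue: ${implied_total_revenue / 1e9:.2f}B")
print(f"7x stablecoin revenue:      ${scenario_revenue / 1e9:.2f}B")
print(f"USDC share of volume:       {usdc_share:.1%}")
```

Under these inputs, the 7x scenario puts stablecoin revenue alone above the company's implied 2025 total revenue, which helps explain why the article frames the scenario as one that would re-rate the business.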

How the GENIUS Act Is Accelerating Stablecoin Mainstream Adoption

The regulatory landscape shifted dramatically with the signing of the Guiding and Establishing National Innovation for US Stablecoins (GENIUS) Act in July 2025.

By creating a federal regime for payment stablecoins, the legislation provided the legal certainty required for large-scale institutional participation.

The Act explicitly bars issuers like Circle from paying interest to holders, a move backed by the banking lobby to protect traditional deposits.

While the regulatory framework for digital assets remains complex, the GENIUS Act has effectively greenlit stablecoins for commercial usage.

This clarity allows Coinbase to market USDC settlements to Fortune 500 companies without the overhang of legal ambiguity that plagued the sector in previous years.

Stripe Integration and Base Network Expansion Drive Payment Ambitions

Operational catalysts are already live, fueling the Bloomberg projection. The integration of USDC into Stripe’s global payment rails has reopened crypto acceptance for millions of merchants, creating a direct funnel for transaction volume.

Simultaneously, Coinbase’s own Layer-2 blockchain, the Base network, is lowering the barrier to entry for micro-transactions.

Much like other scaling solutions, the Base network reduces gas fees to fractions of a cent, making dollar-denominated transfers economically viable for daily coffee purchases.

High-throughput networks are critical here: as the Bitcoin Lightning Network demonstrated with its $1 billion monthly volume milestones, low-fee environments rapidly attract payment liquidity.

By routing these payments through Base, Coinbase captures value twice: once through the underlying sequencer fees and again through its revenue share on the growing supply of USDC required to service this commerce.

What a 7x Revenue Jump Would Mean for the Stablecoin Market

If Bloomberg’s 7x scenario plays out, stablecoin revenue would arguably become Coinbase’s most valuable business line, overshadowing its volatile trading fees.

This shift would fundamentally re-rate the stock, moving it from a cyclical crypto exchange play to a steady fintech payments processor. However, risks remain substantial.

The banking lobby is currently pushing the CLARITY Act in the Senate to close loopholes that allow exchanges like Coinbase to pass rewards to customers.

If new language bars these rewards, consumer adoption could slow.

Analysts at Monness Crespi maintain a sell rating, warning that optimistic projections effectively ignore the political target painted on stablecoin yields.

So, for Bloomberg’s 7x to be possible, Coinbase must defend its rewards program while successfully migrating user activity from holding USDC to spending it.

The post Coinbase Stablecoin Revenue Hits $1.35B: Bloomberg Sees 7x Growth Potential appeared first on Cryptonews.

$80 Floor fails, whales track this new crypto protocol

Disclosure: This article does not represent investment advice. The content and materials featured on this page are for educational purposes only.

Solana slides below key levels as investors shift focus to emerging DeFi protocol Mutuum Finance.

Summary

- Mutuum Finance rolls out dual P2C and P2P lending model with automated APY and LTV risk controls.

- V1 launches on Sepolia testnet, letting users trial WBTC, ETH, USDT, and LINK lending before mainnet.

- Health factor scoring, mtTokens, and real-time dashboards are powering Mutuum’s collateralized DeFi lending system.

Solana (SOL) is facing a difficult period as its price drops below key levels. The popular altcoin recently failed to hold its ground, causing a shift in market sentiment.

While many traders watch the charts with concern, a new crypto protocol, Mutuum Finance (MUTM), is gaining attention. Many large investors are now exploring this project as they look for fresh utility in the decentralized finance space.

Solana

Solana is currently trading at approximately $79, with its total market capitalization sitting near $45 billion. The critical $80 support level recently failed due to institutional sell-offs and global economic uncertainty.

This breakdown has led many analysts to predict a further slide toward the $67 range unless buyers return quickly. Most investors now expect a period of consolidation as the network waits for a potential recovery in broader market confidence.

Despite the current price volatility, Solana continues to show significant resilience and remains a top-tier Layer-1 asset. On-chain data reveals that large wallet addresses, often called whales, have actually increased their holdings by over 2% in the last week, suggesting that major players are accumulating during this dip.

Furthermore, the ecosystem is preparing for the “Alpenglow” upgrade in early 2026, which aims to provide near-instant transaction finality and improve network stability. This combination of strong institutional interest in spot ETFs and ongoing technical improvements helps maintain long-term optimism even while the short-term market remains volatile.

Mutuum Finance

As the market searches for stability, Mutuum Finance is preparing a new decentralized lending platform. The project is developing a dual-market system that includes Peer-to-Contract (P2C) and Peer-to-Peer (P2P) lending.

According to the official project whitepaper, these markets aim to use automated mechanisms like Annual Percentage Yield (APY) and Loan-to-Value (LTV) ratios to manage rewards and risks. This setup would allow users to lend their assets for interest or borrow against them without needing a bank.

V1 protocol launch and features

The Mutuum Finance V1 protocol is now live on the Sepolia testnet. This allows users to test the system in a risk-free environment before the official mainnet launch. The platform supports major assets like WBTC, USDT, ETH, and LINK.

When users supply funds, they receive mtTokens as interest-bearing receipts. These tokens grow in value automatically as borrowers pay back their loans with interest. If users choose to borrow, they receive debt tokens to track their total balance including interest.

The entire system uses a health factor to ensure every loan stays stable and safe. This score tells users exactly how much collateral they have compared to their debt. Users can monitor their positions through a dedicated portfolio dashboard with real-time data. The dashboard also shows how pool usage affects interest rates as they change based on demand.
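The article does not give Mutuum Finance's formula for the health factor. As an illustration only, the sketch below uses a convention common in collateralized lending protocols such as Aave: collateral value weighted by a per-asset liquidation threshold, divided by total debt, with values below 1 making a position eligible for liquidation. All names, thresholds, and amounts here are hypothetical and are not taken from the Mutuum whitepaper.

```python
from dataclasses import dataclass


@dataclass
class Collateral:
    symbol: str
    value_usd: float
    liquidation_threshold: float  # hypothetical per-asset parameter in (0, 1]


def health_factor(collateral, debt_usd):
    """Threshold-weighted collateral value divided by debt.

    Assumed convention (not confirmed for Mutuum): a result below 1.0
    means the position can be liquidated.
    """
    if debt_usd <= 0:
        return float("inf")  # no debt, nothing to liquidate
    weighted = sum(c.value_usd * c.liquidation_threshold for c in collateral)
    return weighted / debt_usd


# Illustrative position with made-up values and thresholds.
position = [
    Collateral("ETH", 10_000.0, 0.80),
    Collateral("LINK", 2_000.0, 0.65),
]
hf = health_factor(position, debt_usd=6_000.0)
print(f"health factor: {hf:.2f}")  # health factor: 1.55
```

Under this convention, a fall in collateral prices or accrued interest on the debt pushes the score toward 1, which is why accurate oracle prices matter for when liquidations trigger.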

To ensure all asset valuations remain accurate, the protocol integrates decentralized oracles like Chainlink. These oracles provide real-time price feeds that prevent data manipulation and ensure that liquidation triggers are always fair and precise.

Why whales are tracking MUTM

Large-scale investors are moving toward Mutuum Finance as Solana’s momentum slows. The MUTM token is currently in the sale phase at a price of $0.04, having already raised over $20.6 million. With a growing base of 19,000 holders, the project has built strong community trust. This confidence is supported by a manual security audit from Halborn, which verified the safety of the protocol code.

Mutuum Finance offers a clear roadmap and a working protocol on testnet that shows its technology is taking shape. By combining high security with a transparent pricing structure, the project provides a steady alternative when navigating the current volatility of the crypto market.

Disclosure: This content is provided by a third party. Neither crypto.news nor the author of this article endorses any product mentioned on this page. Users should conduct their own research before taking any action related to the company.

Smarter Web Secures $30M Bitcoin Credit from Coinbase

United Kingdom-listed Bitcoin treasury firm The Smarter Web Company has secured a $30 million Bitcoin-backed credit facility from Coinbase Credit. The facility is secured against Bitcoin held in custody with Coinbase.

The company said Tuesday the facility is designed to help it deploy capital into Bitcoin (BTC) immediately after equity raises, reducing settlement timing risk during volatile markets. Smarter Web said it does not intend to use the facility as long-term debt to finance Bitcoin purchases.

Smarter Web is listed on the London Stock Exchange’s Main Market and also trades on the OTCQB Venture Market in the United States. The company describes Bitcoin as a core component of its treasury strategy and has previously said it aims to expand its digital asset holdings.

The move comes as digital asset treasuries (DATs) recorded billions in net inflows from late 2025 through January 2026 before cooling in February.

Data from DefiLlama shows DAT inflows reached $4 billion in December and $3.7 billion in January, before totalling just $363 million through Feb. 24.

While inflows remain positive in early 2026, February totals are tracking well below late-2025 peaks.

Smarter Web’s Bitcoin treasury holdings

According to data from BitcoinTreasuries.net, Smarter Web holds 2,689 Bitcoin, acquired at an average cost of $112,865 per coin.

At current prices, the company’s holdings are valued at roughly $170 million, reflecting an unrealized loss of about 44% based on the reported cost basis.
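The valuation and loss figures above follow from simple arithmetic on the reported holdings and cost basis. The sketch below reproduces it, assuming a spot price of $63,000 per BTC; that price is an assumption for illustration, since the article only says "current prices", and the function name is hypothetical.

```python
def unrealized_pnl(coins: float, avg_cost: float, spot_price: float) -> dict:
    """Return cost basis, market value and unrealized P&L as a percentage of cost."""
    cost_basis = coins * avg_cost
    market_value = coins * spot_price
    return {
        "cost_basis": cost_basis,
        "market_value": market_value,
        "pnl_pct": (market_value - cost_basis) / cost_basis * 100,
    }


# Holdings and average cost come from the article; the spot price is assumed.
result = unrealized_pnl(coins=2_689, avg_cost=112_865, spot_price=63_000)
print(f"cost basis   ${result['cost_basis'] / 1e6:,.1f}M")
print(f"market value ${result['market_value'] / 1e6:,.1f}M")
print(f"unrealized   {result['pnl_pct']:.1f}%")
```

At the assumed price, the sketch gives a market value near $169 million and a loss of about 44%, in line with the figures reported above.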

On Sept. 12, 2025, Smarter Web reported holding 2,470 BTC and described itself as the UK’s largest corporate Bitcoin holder at the time.

The company also signaled interest in acquiring competitors to expand its treasury and said it aspired to join the FTSE 100 index.

The latest tracker data suggests the company continued accumulating since then.

Smarter Web’s new facility would allow it to borrow against existing Bitcoin holdings to move more quickly following equity raises, then repay once fundraising proceeds settle.


Diverging corporate Bitcoin strategies

Smarter Web’s move comes as public companies take varied approaches to managing Bitcoin exposure.

On Monday, Strategy added 592 BTC to its balance sheet, bringing its total holdings to 717,722 BTC and marking its 100th BTC purchase since 2020.

By contrast, Bitdeer announced on Saturday that it had liquidated its entire Bitcoin treasury, reducing corporate holdings to zero while raising capital through a convertible debt offering.


Crypto World

IBM Stock Just Had Its Worst Day Since 2000 – Jefferies Says Buy the Dip

TLDR

- IBM stock has dropped 28.6% in under a month, falling from $312.95 to $223.35

- The selloff was triggered by Anthropic highlighting COBOL functionality in Claude Code, raising fears AI could erode IBM’s legacy business

- Jefferies analyst Brent Thill maintained a Buy rating with a $370 price target, calling the dip a buying opportunity

- IBM’s watsonx Code Assistant for Z has been available since Q4 2023 and already converts COBOL to Java using generative AI

- IBM announced a new partnership with Deepgram, making it the first voice partner integrated into watsonx Orchestrate

IBM stock has had a rough few weeks. It has fallen 28.6% in less than a month, dropping from $312.95 on February 2 to $223.35, putting it near its 52-week low.

The single biggest blow came when the stock dropped 13% in one day — its largest single-day decline since 2000.

The catalyst was a blog post from Anthropic. The AI company highlighted COBOL functionality in its Claude Code platform, pointing out that hundreds of billions of COBOL lines remain active across finance, airlines, and government sectors.


That spooked investors. IBM has long been a key player in COBOL-dependent systems like payment processing and financial infrastructure. The fear: AI could reduce demand for IBM’s legacy COBOL services.

The broader selloff also reflects a market-wide shift away from legacy tech, with investors moving toward quantum computing startups and high-yield bonds.

Jefferies Holds Its Ground

Not everyone is running. Jefferies analyst Brent Thill pushed back on the panic, arguing IBM is “already disrupting itself.”

Thill pointed to IBM’s watsonx Code Assistant for Z, which has been generally available since Q4 2023. The tool uses generative AI to convert COBOL into Java, interpret production code, and update legacy applications.

He argues this gives IBM a structural edge over general-purpose AI coding tools, which lack native access to mainframe data and operational context.

Thill also noted that IBM is building for a “multi-model, agentic world” by partnering with Anthropic, OpenAI, and others — meaning the very companies seen as threats are also partners.

He called the selloff a “near-term sentiment overhang on legacy services rather than an existential or structural risk” and maintained his Buy rating with a $370 price target, implying 66% upside from current levels.

Eleven other analysts share his bullish view. Five analysts have a Hold rating and one has a Sell, giving IBM a Moderate Buy consensus. The average price target sits at $337.53, pointing to roughly 51% upside over 12 months.
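The drawdown and upside percentages quoted in this article can be checked with a single percentage-change helper. This is a minimal sketch using only the prices and targets stated above; the function and constant names are illustrative.

```python
def pct_change(start: float, end: float) -> float:
    """Percentage change when moving from start to end."""
    return (end - start) / start * 100


# All four inputs are taken from the article.
PRICE_FEB_2 = 312.95
PRICE_NOW = 223.35
JEFFERIES_TARGET = 370.00
CONSENSUS_TARGET = 337.53

drawdown = pct_change(PRICE_FEB_2, PRICE_NOW)
jefferies_upside = pct_change(PRICE_NOW, JEFFERIES_TARGET)
consensus_upside = pct_change(PRICE_NOW, CONSENSUS_TARGET)

print(f"drawdown since Feb 2: {drawdown:.1f}%")
print(f"upside to Jefferies target: {jefferies_upside:.1f}%")
print(f"upside to average target: {consensus_upside:.1f}%")
```

Note that upside is measured from the current price, not from the February 2 level, which is why a target of $370 implies a gain much larger than the size of the recent decline.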

IBM Adds Voice AI Partner

On the same day, IBM announced a collaboration with Deepgram, making it IBM’s first voice AI partner.

Deepgram’s speech-to-text and text-to-speech technology will be embedded into IBM’s watsonx Orchestrate platform, allowing users to interact with AI agents using natural speech.

The integration supports multiple languages and dialects, including Arabic and Indian variants, and targets use cases in customer care, call analysis, and voice-driven data entry in healthcare and finance.

IBM’s P/E ratio currently sits at 20.3, and at least one analysis flags the stock as undervalued relative to its fair value.

Historically, IBM has only seen one comparable dip of 30% or more in under 30 days since 2010. Following that event, the stock posted a peak recovery of 42% within 12 months.

Crypto World

The ‘Digital Gold’ Narrative Fails Bitcoin (Again)

The correlation between the two assets has fallen hard recently.

Bitcoin is not in its ‘digital gold’ period, asserted the CEO and founder of the analytics company CryptoQuant. He based his conclusion on the sharp drop in correlation between the largest cryptocurrency and the biggest precious metal over the past several months.

Bitcoin is in a “not digital gold” period. pic.twitter.com/ka90HG8zmx

— Ki Young Ju (@ki_young_ju) February 24, 2026

When we examine the price performance of bitcoin and gold more closely, we can clearly see where this difference comes from. The correlation between the two was mostly in the green between 2022 and mid-2024.

Then the two assets decoupled, and the correlation moved into red territory for the first time in years during and after the US presidential elections at the end of 2024. BTC skyrocketed to new peaks, while gold trailed behind.

Once the precious metal started to catch up, the correlation jumped to and over 0.5 by Q3 and early Q4 of 2025. However, that’s when the entire landscape in crypto broke, while the precious metal market continued to blossom.

Bitcoin experienced one of its most painful daily corrections on October 10 that altered the industry’s fabric. In a 24-hour period, the entire market collapsed, leaving more than $19 billion in liquidations.

Since then, the asset has not only failed to recover to its previous heights but has continued to decline, dropping to $63,000 as of press time. In other words, it sits roughly 50% below its peak.


In contrast, gold’s price tapped a new all-time high at $5,600 at the end of January, and, besides its instant and untypical crash to $4,400, has been mostly sitting around and above $5,000. It now trades 30% above its October 10 price of $4,000, and its market cap is north of $36.1 trillion. This means the difference between the two is roughly 30x in terms of market cap.


Crypto World

Can Bhutan’s Solana-Backed Visa Revive Weak SOL Demand?

Solana price has slipped below a recent consolidation range, signaling weakening short-term momentum. SOL had been trading sideways for weeks before breaking lower.

The decline reflects muted investor demand. This cautious sentiment persists even as Solana expands real-world blockchain adoption.

Solana and Bhutan Expand Collaboration

Bhutan recently launched the world’s first Solana-backed visa tailored for digital nomads. The initiative builds on the government’s earlier launch of a gold-backed token, TER, on the Solana blockchain. These developments highlight Solana’s expanding role in sovereign-backed digital infrastructure.

Government-level adoption strengthens Solana’s credibility as a scalable blockchain platform. However, adoption alone has not yet translated into immediate bullish price momentum for SOL.


Solana Holders Exhibit Concern

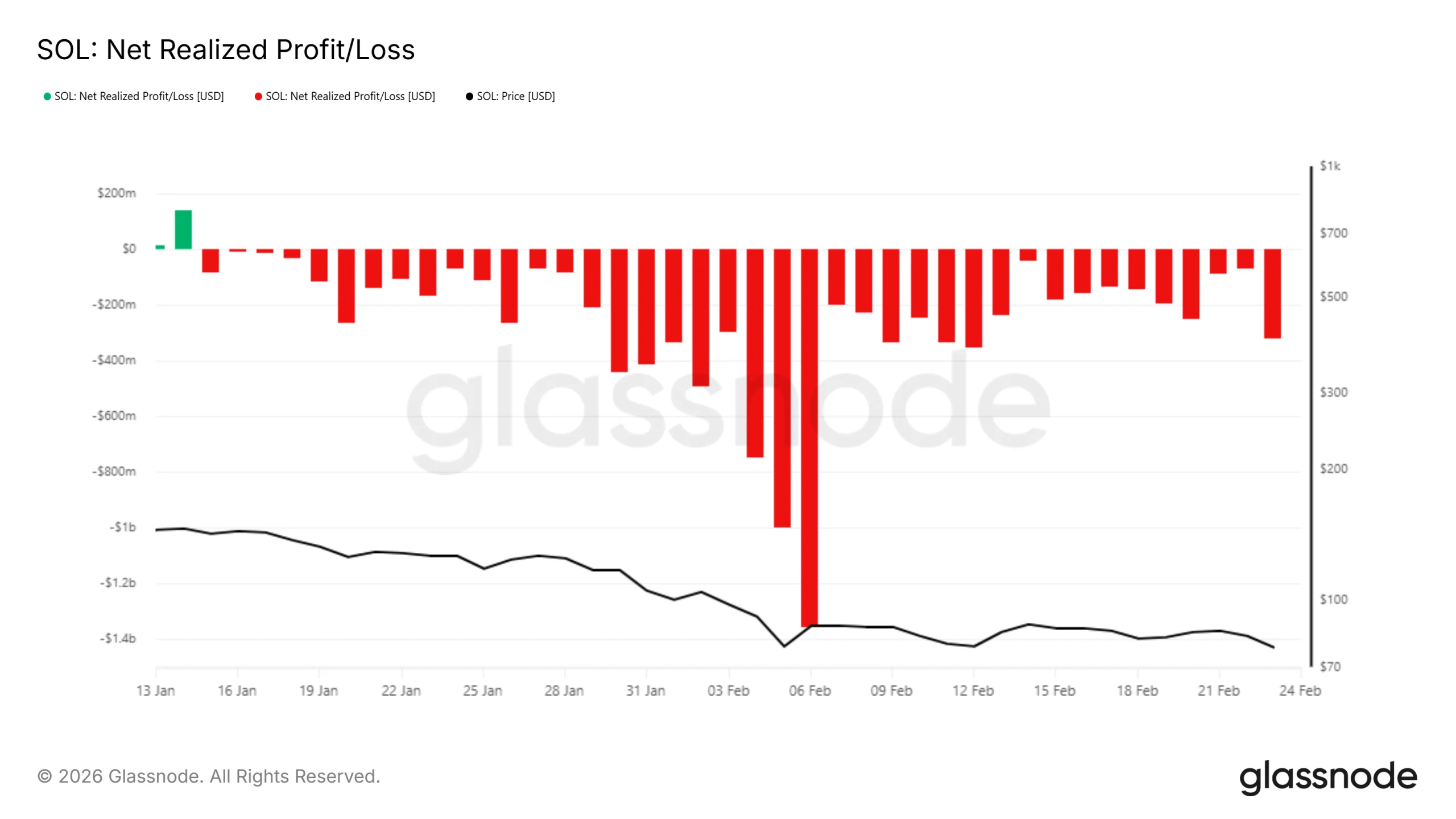

On-chain metrics show that SOL holders remain cautious. Realized net profit and loss data indicate investors continue selling at a loss. This pattern reflects fading confidence in a near-term rebound. Market participants appear focused on capital preservation rather than accumulation.

During the past 24 hours, as the broader crypto market declined, realized losses jumped by $68 million to $317 million. Elevated realized losses signal sustained bearish sentiment. Persistent selling pressure reduces recovery strength and reinforces short-term downside risks for the Solana price.

Bearishness has extended into the derivatives market. Liquidation data shows short positions currently dominate long exposure. Traders appear positioned for further downside. This imbalance suggests that speculative sentiment remains defensive despite ecosystem growth.

The liquidation map reveals $1.15 billion in potential short liquidations if SOL climbs to $89. By comparison, only $242 million in long liquidations would trigger if the price falls to $67. This skew indicates greater pressure on bearish positions during sharp upward moves.

SOL Price Is Looking At Volatility

Solana price is trading at $76 at the time of writing. Bollinger Bands are converging, signaling an impending volatility squeeze. Such setups often precede sharp price movements. Based on prevailing bearish indicators, downside risk currently appears elevated.
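For readers unfamiliar with the indicator, the "squeeze" mentioned above refers to the gap between the upper and lower Bollinger Bands narrowing relative to the moving average. The sketch below shows one common way to compute the bands and their relative width. The 20-period window, the 2-standard-deviation multiplier, and the use of population standard deviation are conventional defaults assumed here, not settings confirmed by the article, and both price series are made up for illustration.

```python
from statistics import mean, pstdev


def bollinger(closes, window=20, num_std=2.0):
    """Return (middle, upper, lower, bandwidth) for the latest `window` closes."""
    if len(closes) < window:
        raise ValueError("need at least `window` closing prices")
    recent = closes[-window:]
    middle = mean(recent)                  # simple moving average
    spread = num_std * pstdev(recent)      # band distance from the average
    upper, lower = middle + spread, middle - spread
    bandwidth = (upper - lower) / middle   # relative width; small means squeeze
    return middle, upper, lower, bandwidth


# Hypothetical closes: a choppy period followed by a tight range.
volatile = [70 + (i % 2) * 10 for i in range(20)]   # alternates 70 and 80
quiet = [76 + (i % 2) * 1 for i in range(20)]       # alternates 76 and 77

print(f"volatile bandwidth: {bollinger(volatile)[3]:.3f}")
print(f"quiet bandwidth:    {bollinger(quiet)[3]:.3f}")
```

A falling bandwidth only says that recent volatility is low. It does not indicate the direction of the next move, which is why the article pairs it with other indicators.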

If SOL loses the $73 support level, the next downside target stands near $64. A drop to this zone could trigger long liquidations. Increased forced selling may intensify volatility and deepen short-term losses for holders.

Conversely, a shift in sentiment could support recovery. If bulls regain control, Solana price may reenter consolidation between $78 and $87. Sustained stability within this range would improve structure. A breakout above $89 could trigger $1.15 billion in short liquidations, accelerating upside momentum.

Crypto World

Crypto Execs Push Back on Viral Claim

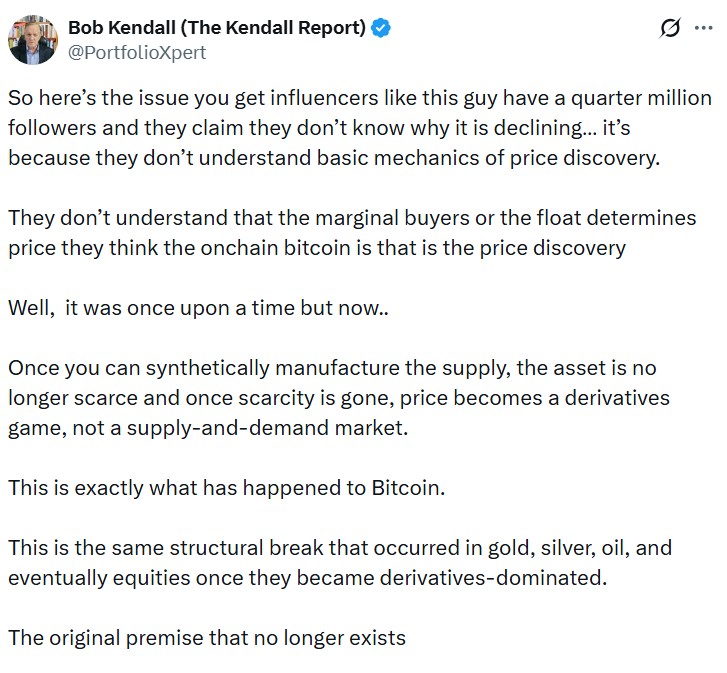

A market analysis viewed almost 5 million times on X states that Bitcoin derivatives have turned the cryptocurrency’s 21-million-supply cap into a “theoretically infinite” one.

Past Bitcoin (BTC) falls had a clear catalyst, but sharp drops in the opening months of 2026 have sparked several theories, ranging from digital asset treasuries (DATs) blowing up under pressure to a lingering hangover from October’s mass liquidation cascade.

Robert Kendall, author of “The Kendall Report,” claimed he cracked it in his viral X post. He argued that Bitcoin’s valuation logic based on fixed supply “died” once cash-settled futures, exchange-traded funds (ETFs) and other financial instruments were layered on top of the asset.

However, executives and researchers across the digital asset industry rejected Kendall’s analysis. Several told Cointelegraph that leverage affects price dynamics without changing Bitcoin’s underlying supply.

Harriet Browning, vice president of sales at institutional staking company Twinstake, told Cointelegraph, “When institutions allocate via ETFs and DATs, they are not diluting scarcity, as there will still only ever be 21 million. They are not minting new Bitcoin.”

“Instead, they are putting Bitcoin into the hands of long-term institutional holders who deeply understand its value proposition, not speculative traders looking for a quick exit,” she added.

Scarcity, lost coins and the question of effective float

When Bitcoin was first introduced to the world, the only way to acquire it was to buy it from other enthusiasts, mine it or trade it for pizza. Soon, crypto exchanges became available and opened retail access to the spot market.

In 2026, investors can also gain exposure through financial products built on spot crypto. To put it simply, Bitcoin now has a paper market of its own. However, skeptics of Kendall’s analysis said that a paper market does not damage Bitcoin’s scarcity.

“Gold has a massive paper market in futures, ETFs and unallocated accounts that dwarfs physical supply, yet nobody argues gold isn’t scarce. Paper claims don’t change the amount of gold in the ground, and the same logic applies to Bitcoin,” Luke Nolan, a senior research associate at CoinShares, told Cointelegraph.

Bitcoin is often compared to gold for similarities like headlining the internet generation’s own gold rush, being a store of value and being a hedge against currency debasement. It is also programmed to a hard supply cap that doesn’t fluctuate even when investment products are built on top of it, much like a gold bar wouldn’t magically sprout out of its own derivatives.

Like precious metals, new Bitcoin enters the market through a process called mining. Instead of digging the earth, the system rewards those who verify transactions on the blockchain about every 10 minutes. Those rewards are sliced in half every four years, so Bitcoin’s supply growth slows over time, along with the amount of virgin Bitcoin entering the economy.
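The link between the halving schedule and the 21-million cap can be shown with a short calculation. The sketch below sums the block rewards across every halving era, using the protocol's 210,000-block halving interval (roughly four years at one block every 10 minutes) and an initial reward of 50 BTC. Amounts are tracked in satoshis, the smallest unit, with integer halving.

```python
SATS_PER_BTC = 100_000_000
BLOCKS_PER_HALVING = 210_000              # about four years of 10-minute blocks
INITIAL_SUBSIDY_SATS = 50 * SATS_PER_BTC  # 50 BTC reward in the first era


def total_supply_sats() -> int:
    """Sum the block rewards over all halving eras until the reward hits zero."""
    total = 0
    subsidy = INITIAL_SUBSIDY_SATS
    while subsidy > 0:
        total += BLOCKS_PER_HALVING * subsidy
        subsidy //= 2   # integer division: rewards are whole satoshis
    return total


supply = total_supply_sats()
print(f"maximum supply: {supply / SATS_PER_BTC:,.4f} BTC")
```

Because each era issues half as much as the one before, the total converges just under 21 million BTC. Nothing in this calculation depends on futures, ETFs or other products built on top of the asset.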

As of February, about 19.99 million BTC has been mined, though Nolan calls this metric misleading, as not all of these coins are available for investors. Users can lose their passwords or take them to their graves. Up to 4 million coins are estimated to be permanently lost.

With more spot Bitcoin becoming inaccessible, Nolan claimed that the institutional access layer actually reinforces Bitcoin’s scarcity.

“Spot ETFs require physical BTC to be held in custody, and in 2025 alone, combined ETF and corporate treasury holdings grew significantly. That is real supply being pulled off the market,” he said.


Bitcoin’s shift to derivatives-led price formation

Even critics of Kendall’s supply argument acknowledge that Bitcoin’s short-term price discovery now leans heavily on instruments tied to institutional markets.

Derivative activity has increasingly shifted to traditional finance venues. CME futures overtook Binance in BTC futures open interest in late 2023, although Binance recently regained the lead.

“Derivatives markets have become the primary venue for expressing institutional views on Bitcoin, and as a result, they now play a central role in spot price discovery,” said Browning.

Browning added that derivatives and ETFs influence Bitcoin’s spot price through three main transmission channels.

First, markets like CME influence short-term price discovery because institutional traders express their bullish or bearish views in futures before the spot market. When futures prices diverge from spot prices, traders opt for arbitrage strategies, such as basis trades, to close the gap. According to Browning, hedge funds routinely buy spot Bitcoin or its ETFs while shorting CME futures to capture the premium between the two.

Second, when banks sell Bitcoin-linked notes to clients, they typically hedge their exposure by buying Bitcoin through ETFs, effectively creating more spot demand.


Third, crypto-native perpetual futures can spill over into the spot market through funding-rate arbitrage. When funding rates are positive, heavy long positioning encourages traders to buy spot Bitcoin and short futures to earn funding payments, adding spot demand. When funding turns negative, that flow can reverse and pressure the price.
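The basis trade and the funding-rate arbitrage described above both come down to a carry calculation: how much a trader earns per year for holding spot and shorting the derivative. The sketch below uses simple, non-compounded annualization. All prices and rates are hypothetical, and the three funding payments per day (one every eight hours) is an assumption that varies by venue.

```python
def annualized_basis(spot: float, futures: float, days_to_expiry: int) -> float:
    """Annualized premium of a dated future over spot, in percent."""
    return (futures - spot) / spot * 365 / days_to_expiry * 100


def annualized_funding(rate_per_interval: float, intervals_per_day: int = 3) -> float:
    """Annualized perpetual funding in percent; positive means longs pay shorts."""
    return rate_per_interval * intervals_per_day * 365 * 100


# Hypothetical inputs: a future trading 1% above spot with 30 days to expiry,
# and a perpetual contract paying 0.01% funding every eight hours.
basis = annualized_basis(spot=63_000, futures=63_630, days_to_expiry=30)
funding = annualized_funding(rate_per_interval=0.0001)

print(f"basis trade carry:   {basis:.2f}% per year")
print(f"funding trade carry: {funding:.2f}% per year")
```

When either figure is comfortably above a trader's cost of capital, buying spot and shorting the derivative becomes attractive, which is the spot demand the article describes. When the figure turns negative, the incentive flips and the positions tend to be unwound.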

“Today, derivatives volumes frequently exceed spot volumes, and many institutional participants prefer derivatives, alongside ETFs, for capital efficiency, hedging and short exposure,” Browning said.

“Spot markets increasingly serve as the settlement and inventory layer, while derivatives increasingly influence marginal price discovery, and new price levels are negotiated.”

Derivatives don’t delete Bitcoin’s scarcity from the blockchain

The rise of Bitcoin’s paper market means investors no longer have to directly hold BTC to gain exposure.

Futures and perpetual contracts allow investors to express bullish or bearish views, hedge risk or deploy leverage. Similar derivatives have long existed in commodities markets without altering the physical amount of gold, oil or other assets in circulation.

Nima Beni, founder of crypto leasing platform BitLease, told Cointelegraph:

“The premise that synthetic exposure destroys scarcity is as flawed as a misapplied commodity-market analogy used about paper gold. It was wrong then; it’s wrong now.”

Kendall defended his position after Bitcoiners equipped with their own arguments flooded his viral post.

“I’m not arguing [derivatives] ‘delete’ scarcity from the blockchain. What I’m saying is they shift where marginal price is set,” he said.

Bitcoin’s 21-million cap remains unchanged in code. No derivative contract, ETF or structured product can mint new coins beyond that limit. But what has evolved around Bitcoin is price discovery.

Derivatives increasingly shape marginal price formation before flows filter back into spot. That alters how and where Bitcoin’s value is negotiated.

Both Kendall and his critics ultimately agree on that point.



Crypto World

MoonPay unveils AI onramp for brave new agent economy

Cryptocurrency payments firm MoonPay has introduced a non-custodial financial layer that gives AI agents access to wallets, funds, and the ability to transact autonomously, the company said on Tuesday.

MoonPay Agents, as the new service is called, requires a user to verify and fund their agent’s wallet through MoonPay, and thereafter the agent can take over, trading, swapping, and moving money on its own.

AI agents are primed and ready to trade, allocate capital and execute strategies, but they cannot participate in the economy without access to money, MoonPay said in an emailed press release. The idea of MoonPay Agents is to unlock that financial layer, from funding to execution to off-ramping back to fiat.

The AI service generates a MoonPay link to fund a wallet. The user completes a one-time KYC check and connects a payment method through MoonPay’s checkout, after which the agent can transact autonomously.

“AI agents can reason, but they cannot act economically without capital infrastructure,” said Ivan Soto-Wright, CEO and Founder of MoonPay. “MoonPay is the bridge between AI and money. The fastest way to move money is crypto, and we’ve built the infrastructure to let agents do exactly that: non-custodial, permissionless, and ready to use in minutes.”

Crypto World

Bitcoin Realized Losses Have Hit Bear Market Levels

Data from Glassnode shows loss-taking now outweighs profits, a shift rarely seen outside deep bear phases.

Bitcoin’s on-chain data has flashed a signal that has historically come before prolonged bear market conditions, with the Realized Profit/Loss Ratio confirming a regime shift toward loss-dominant selling.

The move suggests that liquidity is evaporating from the market, forcing investors to realize losses rather than book profits, a dynamic last seen during the deepest crypto winter periods of 2018 and 2022.

Key Metric Flips Below 1 Signaling Capitulation Risk

According to data from on-chain analytics firm Glassnode, the 90-day simple moving average of the Realized Profit/Loss Ratio has officially fallen below 1. The metric, which compares the total value of BTC sold at a profit versus those sold at a loss, indicates that loss-taking now outweighs profit-taking across the network.
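To make the metric concrete, the sketch below computes a daily profit/loss ratio and smooths it with a simple moving average. It is an illustration only: the daily figures are invented, a 3-day window stands in for the 90-day window to keep the example short, and averaging the daily ratios (rather than taking the ratio of averaged profits and losses) is an assumption about the method, not Glassnode's documented calculation.

```python
def realized_pl_ratio(profit_usd: float, loss_usd: float) -> float:
    """Value of coins sold at a profit divided by value sold at a loss."""
    if loss_usd <= 0:
        raise ValueError("realized loss must be positive")
    return profit_usd / loss_usd


def sma(values, window):
    """Simple moving average; returns one value per full window."""
    if len(values) < window:
        raise ValueError("need at least `window` values")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


# Hypothetical daily realized profits and losses, in millions of USD.
profits = [300, 250, 200, 150, 120]
losses = [200, 200, 200, 200, 200]

ratios = [realized_pl_ratio(p, l) for p, l in zip(profits, losses)]
smoothed = sma(ratios, window=3)   # the article's metric uses a 90-day window

for value in smoothed:
    regime = "loss-dominant" if value < 1 else "profit-dominant"
    print(f"{value:.2f} -> {regime}")
```

A smoothed reading below 1 means that, averaged over the window, more value is being sold at a loss than at a profit, which is the regime shift the article describes.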

“This confirms a full transition into an excess loss-realization regime,” Glassnode analysts noted in a February 24 update on X.

The firm highlighted that historically, breaks below this threshold have persisted for six months or more before reclaiming the 1 level, a recovery that typically signals a “constructive return of liquidity to the market.”

The reading is the culmination of a trend tracked since late January, when the ratio stood around 1.32, and early February, when it was hovering near 1.5.

Furthermore, the current on-chain structure shows confluence with previous bear market bottoms. CryptoQuant contributor _OnChain observed that indicators tied to whale activity, particularly Unspent Profitability Ratios (UPR) for various holder cohorts, have reached levels similar to May-June 2022, a period that preceded significant downside before the ultimate bottom formed later that year.

Market Context and Historical Parallels

The current sell-side pressure follows a dramatic cooldown in profit-taking that occurred in December 2025. Glassnode’s earlier data showed that 7-day average realized profits crashed from over $1 billion earlier in Q4 2025 to just $183.8 million by December, which temporarily allowed Bitcoin to stabilize and rally above $96,000 in early January.


However, that stabilization proved short-lived as macroeconomic headwinds intensified, with Bitcoin trading at approximately $63,200 at the time of writing, down 3.6% in 24 hours and almost 29% over the past month. The asset is also nearly 50% below its all-time high reached in October 2025.

Analysts have attributed the continued weakness to a combination of macro factors rather than a structural breakdown in Bitcoin’s fundamentals. U.S. President Donald Trump’s recent tariff announcements, including a proposed increase in taxes on global imports, have rattled risk assets across traditional and crypto markets.

Despite the bearish signals, some analysts maintain that Bitcoin’s long-term cycle remains intact. Bitwise CIO Matt Hougan recently framed current volatility as a necessary “teenage state” of monetary evolution, arguing that maturing assets must pass through speculative gradients before achieving institutional stability.

However, chartist Ali Martinez warned that a three-day “death cross” could be confirmed in late February. The same signal foreshadowed final downside moves in 2014, 2018, and 2022, historically leading to additional declines of 30% to 50%.
