Strands is the NYT’s latest word game after the likes of Wordle, Spelling Bee and Connections – and it’s great fun. It can be difficult, though, so read on for my Strands hints.

Want more word-based fun? Then check out my Wordle today, NYT Connections today and Quordle today pages for hints and answers for those games.

SPOILER WARNING: Information about NYT Strands today is below, so don’t read on if you don’t want to know the answers.

NYT Strands today (game #244) – hint #1 – today’s theme

What is the theme of today’s NYT Strands?

• Today’s NYT Strands theme is… Good on paper

NYT Strands today (game #244) – hint #2 – clue words

Play any of these words to unlock the in-game hints system.

- LATE

- LAST

- STALE

- STARE

- PUFF

- CLIP

NYT Strands today (game #244) – hint #3 – spangram

What is a hint for today’s spangram?

• Stationery cupboard

NYT Strands today (game #244) – hint #4 – spangram position

What are two sides of the board that today’s spangram touches?

First: left, 4th row

Last: right, 4th row

Right, the answers are below, so DO NOT SCROLL ANY FURTHER IF YOU DON’T WANT TO SEE THEM.

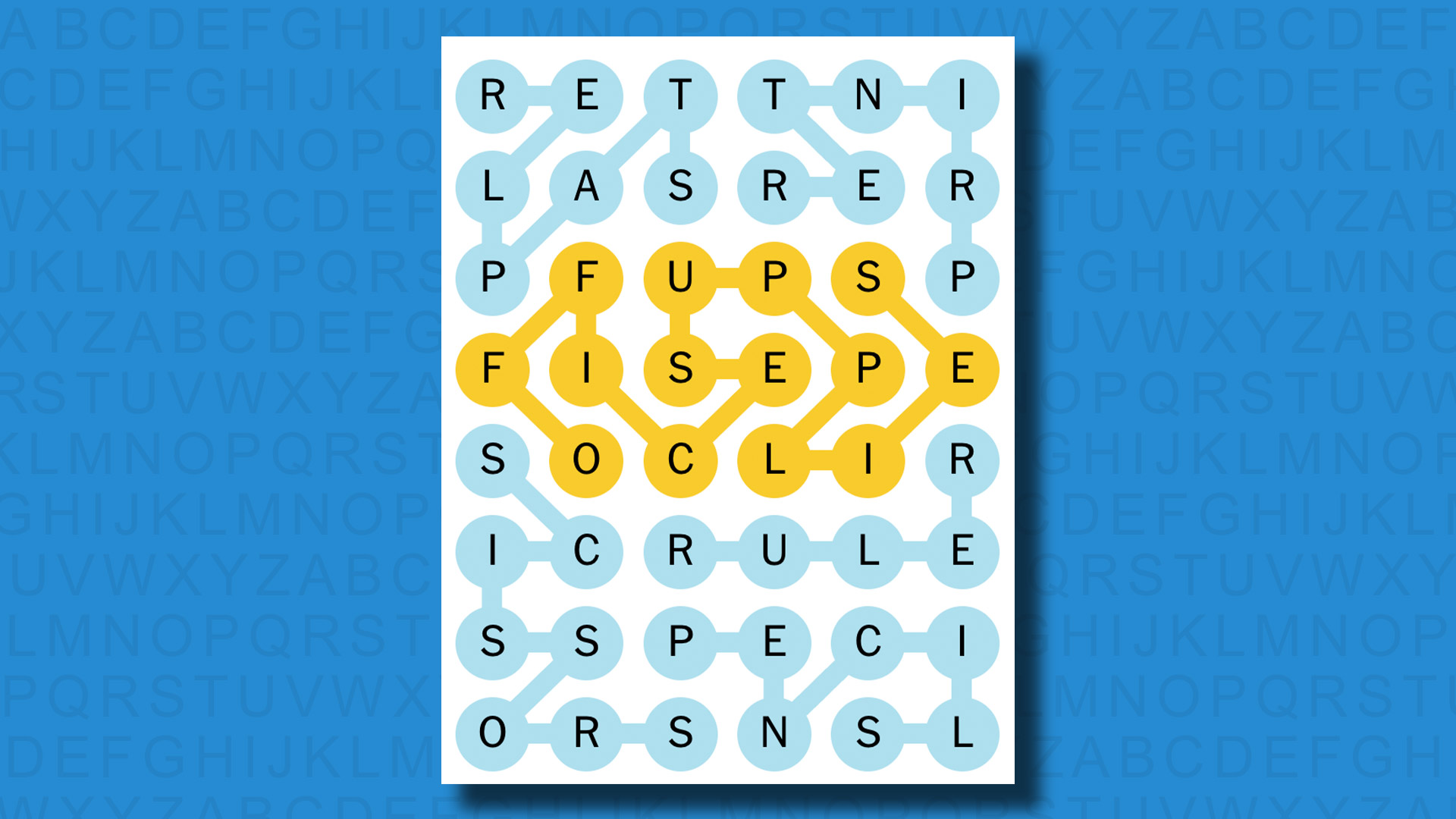

NYT Strands today (game #244) – the answers

The answers to today’s Strands, game #244, are…

- PRINTER

- SCISSORS

- PENCILS

- STAPLER

- RULER

- SPANGRAM: OFFICESUPPLIES

- My rating: Easy

- My score: Perfect

As the father of teenage daughters I am well aware of all of the OFFICESUPPLIES in today’s Strands. Not because they work in an office, obviously, but because they are at school and seem to get through about 20 RULERs and 50 PENCILS a year, constantly need me to help them use the PRINTER and still seem a little clueless about how to use SCISSORS or a STAPLER. Kids today, eh? Too much time spent in front of a screen, clearly.

My own parental issues aside, this was an easy Strands puzzle to solve. The theme clue provided a good push in the right direction, and when I found PRINTER by accident my course was duly charted. None of the words were hard to think of, and only the rather long and complex spangram provided any real challenge.

How did you do today? Send me an email and let me know.

Yesterday’s NYT Strands answers (Friday, 1 November, game #243)

- QUEEN

- KING

- ROOK

- TIMER

- BISHOP

- PAWN

- KNIGHT

- BOARD

- SPANGRAM: CHECKMATE

What is NYT Strands?

Strands is the NYT’s new word game, following Wordle and Connections. It’s now out of beta, so it is a fully fledged member of the NYT’s games stable and can be played on the NYT Games site on desktop or mobile.

I’ve got a full guide to how to play NYT Strands, complete with tips for solving it, so check that out if you’re struggling to beat it each day.

You must be logged in to post a comment Login