Good morning! Let’s play Connections, the NYT’s clever word game that challenges you to group answers in various categories. It can be tough, so read on if you need clues.

What should you do once you’ve finished? Why, play some more word games, of course. I’ve also got daily Wordle, Strands and Quordle hints and answers articles if you need help with those too.

SPOILER WARNING: Information about NYT Connections today is below, so don’t read on if you don’t want to know the answers.

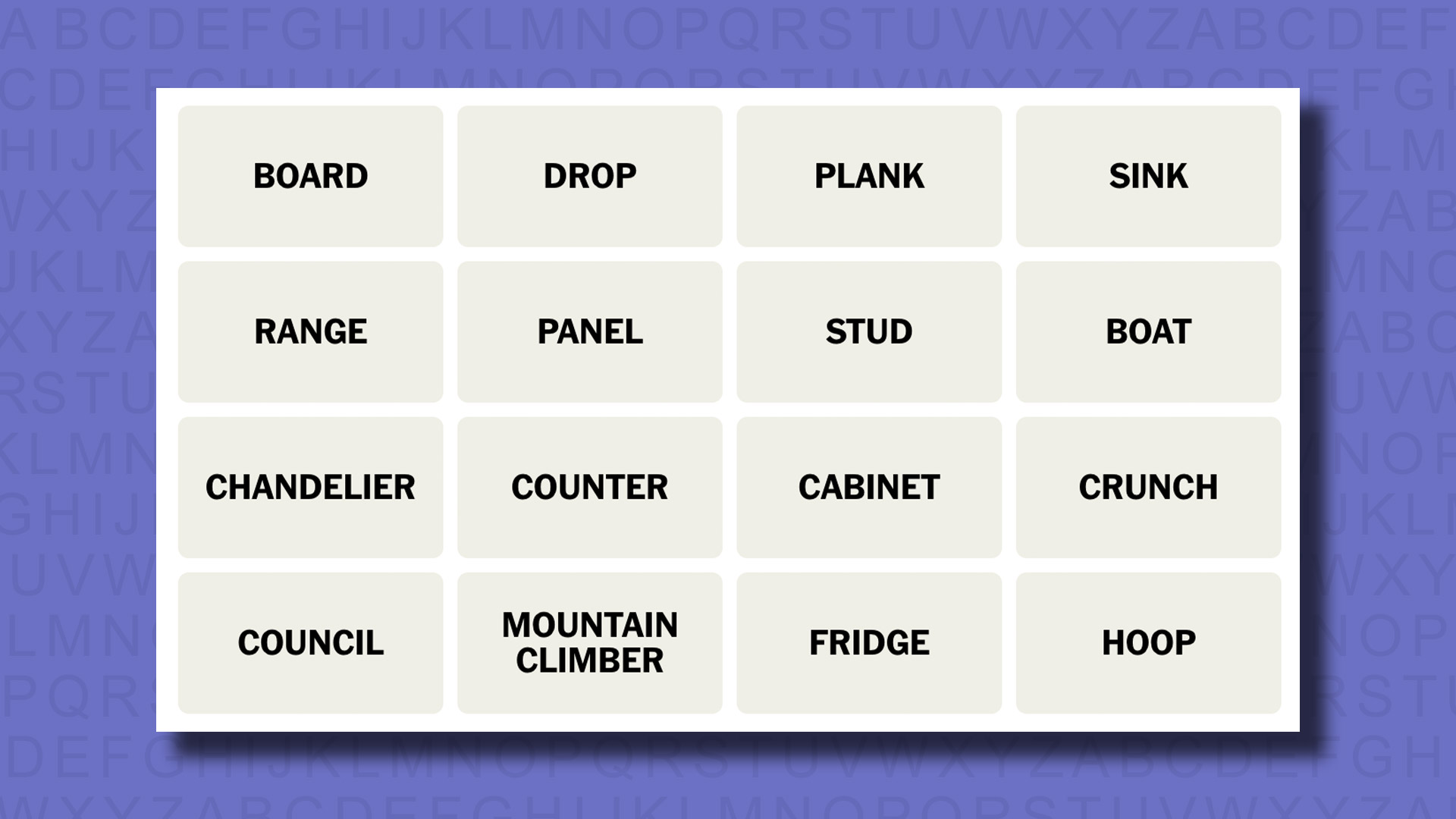

NYT Connections today (game #510) – today’s words

Today’s NYT Connections words are…

- BOARD

- DROP

- PLANK

- SINK

- RANGE

- PANEL

- STUD

- BOAT

- CHANDELIER

- COUNTER

- CABINET

- CRUNCH

- COUNCIL

- MOUNTAIN CLIMBER

- FRIDGE

- HOOP

NYT Connections today (game #510) – hint #1 – group hints

What are some clues for today’s NYT Connections groups?

- Yellow: Maybe dishwasher too

- Green: Decision makers, collectively

- Blue: Work those abs

- Purple: Body decoration types

Need more clues?

We’re firmly in spoiler territory now, but read on if you want to know what the four theme answers are for today’s NYT Connections puzzles…

NYT Connections today (game #510) – hint #2 – group answers

What are the answers for today’s NYT Connections groups?

- YELLOW: SEEN IN A KITCHEN

- GREEN: GROUP OF ADVISORS

- BLUE: CORE EXERCISES

- PURPLE: KINDS OF EARRINGS

Right, the answers are below, so DO NOT SCROLL ANY FURTHER IF YOU DON’T WANT TO SEE THEM.

NYT Connections today (game #510) – the answers

The answers to today’s Connections, game #510, are…

- YELLOW (SEEN IN A KITCHEN): COUNTER, FRIDGE, RANGE, SINK

- GREEN (GROUP OF ADVISORS): BOARD, CABINET, COUNCIL, PANEL

- BLUE (CORE EXERCISES): BOAT, CRUNCH, MOUNTAIN CLIMBER, PLANK

- PURPLE (KINDS OF EARRINGS): CHANDELIER, DROP, HOOP, STUD

- My rating: Moderate

- My score: Perfect

This was another day where I solved purple relatively early – and it’s just as well that I did, as the chance of my completing blue was only slightly above zero. That’s because blue was CORE EXERCISES, featuring BOAT, CRUNCH, MOUNTAIN CLIMBER and PLANK, a subject about which I have almost no knowledge and absolutely no interest. I do know what a CRUNCH and a PLANK are, and did consider that one answer might involve those exercises, but had no idea what the other two would be, and even once I saw them I was still none the wiser as to what a BOAT or a MOUNTAIN CLIMBER actually involves.

I initially thought MOUNTAIN CLIMBER might go with CHANDELIER as part of a ‘things that hang’ group, but I was on the wrong track there. Instead, CHANDELIER went with HOOP, DROP and STUD to form the purple KINDS OF EARRINGS group, which I was pleased to solve.

Yellow and green were theoretically easier, as you’d expect them to be, but it took me a while to separate them. I figured that SEEN IN A KITCHEN would be one, but as well as COUNTER, FRIDGE, RANGE and SINK – the eventual answers – I could have included CABINET, so I waited until I’d solved a few more before tackling it. My patience paid off and I completed today’s Connections with no mistakes (for once).

How did you do today? Send me an email and let me know.

Yesterday’s NYT Connections answers (Friday, 1 November, game #509)

- YELLOW (PROGRESS SLOWLY): CRAWL, CREEP, DRAG, INCH

- GREEN (WAYS TO ORDER A BEER): BOTTLE, CAN, DRAFT, TAP

- BLUE (CHEESY CORN SNACK UNIT): BALL, CURL, DOODLE, PUFF

- PURPLE (___ EFFECT): BUTTERFLY, DOMINO, HALO, PLACEBO

What is NYT Connections?

NYT Connections is one of several increasingly popular word games made by the New York Times. It challenges you to find groups of four items that share something in common, and each group has a different difficulty level: yellow is easy, green a little harder, blue often quite tough and purple usually very difficult.

On the plus side, you don’t technically need to solve the final one, as you’ll be able to answer that one by a process of elimination. What’s more, you can make up to four mistakes, which gives you a little bit of breathing room.

It’s a little more involved than something like Wordle, however, and there are plenty of opportunities for the game to trip you up with tricks. For instance, watch out for homophones and other wordplay that could disguise the answers.

It’s playable for free via the NYT Games site on desktop or mobile.
