Crypto World

Will Polygon price retest January highs as stablecoin activity and app revenue surge?

Polygon has fallen nearly 40% from its yearly high in tandem with a market-wide weakness. Can it recover from its losses now as its stablecoin market and app revenues surge?

Summary

- Polygon price is eyeing a rebound amid strengthening fundamentals, including a surge in stablecoin activity and app revenue.

- A potential bullish crossover is forming on the daily chart.

According to data from crypto.news, the Polygon (POL) price fell over 50% from its January high to a yearly low of $0.088 on Feb. 11. This occurred amid a broader market pullback triggered by massive liquidations across leveraged markets as Bitcoin fell below multiple key support zones due to macroeconomic and geopolitical stress.

POL has since bounced back and remained in consolidation between $0.100 and $0.115.

The Polygon network is showing signs of strength, which may position it for a breakout

First, its on-chain stats have grown significantly stronger over recent weeks. Data from DeFiLlama shows that the total supply of stablecoins on the network has surged to $3.26 billion from the $2.4 billion seen at the beginning of February.

At the same time, the weekly revenue generated by DeFi apps on the network has also soared by nearly 70% over the same period.

A larger stablecoin supply and higher weekly revenue suggest rising activity and liquidity, which is a healthy sign for a network and could attract more institutional capital.

Second, Polygon’s aggressive token burn strategy is also helping support its price gains. It has recently completed burning over 100 million POL tokens. As tokens are burnt, they are permanently removed from the circulating supply, driving scarcity and providing an accessible bullish narrative for short-term traders.

Third, the daily chart shows that the Polygon price is close to confirming a bullish crossover, with the 50-day moving average moving above the 100-day moving average. Traders often read a confirmed bullish crossover as a sign that a sustained rally may follow.
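For readers who want to see how such a crossover is detected, here is a minimal sketch. It assumes simple moving averages over daily closing prices; the article does not say which averaging method or data feed the chart uses, and the function names `sma` and `bullish_crossovers` are illustrative only.

```python
from typing import List, Optional


def sma(prices: List[float], window: int) -> List[Optional[float]]:
    """Simple moving average; None until a full window of prices is available."""
    out: List[Optional[float]] = []
    running = 0.0
    for i, price in enumerate(prices):
        running += price
        if i >= window:
            running -= prices[i - window]  # drop the price that left the window
        out.append(running / window if i >= window - 1 else None)
    return out


def bullish_crossovers(prices: List[float], fast: int = 50, slow: int = 100) -> List[int]:
    """Indexes where the fast average moves from at-or-below to above the slow average."""
    fast_ma = sma(prices, fast)
    slow_ma = sma(prices, slow)
    hits: List[int] = []
    for i in range(1, len(prices)):
        values = (fast_ma[i - 1], slow_ma[i - 1], fast_ma[i], slow_ma[i])
        if any(v is None for v in values):
            continue  # not enough history yet for both averages
        if fast_ma[i - 1] <= slow_ma[i - 1] and fast_ma[i] > slow_ma[i]:
            hits.append(i)
    return hits


# Toy series with short windows so the behaviour is easy to follow by hand.
toy_prices = [5.0, 4.0, 3.0, 2.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
toy_hits = bullish_crossovers(toy_prices, fast=2, slow=4)
print(toy_hits)
```

With real data, the same function would be called with the default 50-day and 100-day windows on a list of daily closes.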

Key levels to watch

For now, the next overhead resistance level lies at $0.122, which is the strong pivot reverse level of the Murrey Math lines. Bulls must reclaim this level to confirm a trend reversal.

Subsequently, bulls can then try to push the token all the way up to its January high at $0.184, which would mark a roughly 64% rally from its current price of $0.112.

Conversely, failure to hold the ultimate support level of the Murrey Math lines at $0.097 could send the token back to its yearly low of $0.088.
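The percentage moves quoted in this piece can be checked with a few lines of arithmetic. The sketch below uses only the prices cited above; the helper name `pct_change` is illustrative.

```python
def pct_change(start: float, end: float) -> float:
    """Percentage change when moving from start to end."""
    return (end - start) / start * 100.0


january_high = 0.184   # January high cited in the article
yearly_low = 0.088     # Feb. 11 low
current_price = 0.112  # price at the time of writing

drop_to_low = pct_change(january_high, yearly_low)      # fall from the high to the low
drawdown_now = pct_change(january_high, current_price)  # where the price sits versus the high
rally_needed = pct_change(current_price, january_high)  # gain needed to retest the high

print(f"High to low:      {drop_to_low:.1f}%")
print(f"High to current:  {drawdown_now:.1f}%")
print(f"Current to high: +{rally_needed:.1f}%")
```

The asymmetry is worth noting: a fall of roughly 39% from the high requires a gain of roughly 64% to undo, because the rally is measured from a smaller base.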

Disclosure: This article does not represent investment advice. The content and materials featured on this page are for educational purposes only.

What Are Whales Doing Now?

Ethereum (ETH) is seeing renewed buying interest amid its latest recovery rally, which has pushed the price back above $2,000.

On-chain data shows whales accumulating the second-largest cryptocurrency. At the same time, the Coinbase Premium Index has moved above zero for the first time since early January.

Ethereum Reclaims $2,000 as Crypto Market Extends Midweek Rally

The crypto market extended its gains today, continuing the upward trajectory that began on Wednesday. Ethereum’s price has risen about 8% during the same period. At press time, the altcoin was trading at $2,054.

On-chain analytics platform Santiment noted that the 30-day Market Value to Realized Value (MVRV) ratio for large-cap cryptocurrencies shifted significantly following the rally, suggesting the market is rebalancing after a period of undervaluation.

The MVRV metric compares market capitalization with realized capitalization, providing insight into average holder profitability. Ethereum, in particular, has moved from a strongly undervalued position to a mildly undervalued zone, with its MVRV ratio currently at -5.5%.
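As a rough illustration of how an MVRV reading turns into a percentage, the sketch below expresses the ratio as the gap between market value and realized value. The input figures are hypothetical and chosen only to reproduce a reading of -5.5%; Santiment's exact 30-day methodology is not described in the article and is not reproduced here.

```python
def mvrv_percent(market_value: float, realized_value: float) -> float:
    """MVRV as a percentage: how far market value sits above or below realized value."""
    return (market_value / realized_value - 1.0) * 100.0


# Hypothetical inputs: holders' aggregate cost basis of 100 units
# against a current market value of 94.5 units.
example_reading = mvrv_percent(market_value=94.5, realized_value=100.0)
print(f"{example_reading:.1f}%")
```

A negative reading means the average holder in the measured cohort is sitting on an unrealized loss, which is why such readings are described as undervalued territory.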

ETH Surge Triggers Major Whale Buys

The recovery has been accompanied by notable whale activity. Blockchain analytics firm Lookonchain documented that whale address 0xAb59 invested $14.57 million to acquire 7,008 ETH at an average price of $2,079.

In addition, whale address 0x166f withdrew 20,000 ETH worth $38.25 million from Binance and Deribit within a two-hour window. The large-scale exchange withdrawal involved five transfers, with the largest being 8,000 ETH from a Binance hot wallet.

US investor demand is also showing signs of improvement. According to CryptoQuant data, the Ethereum Coinbase Premium Index has moved above zero.

Throughout much of January and early February 2026, the index, which measures the price difference between Ethereum on Coinbase and Binance, remained firmly negative. This typically signals weaker buying pressure from US-based investors during periods of price softness.

The current reading shows ETH trading at a slight premium on Coinbase. This typically reflects stronger buying pressure from US-based investors, including institutional participants, compared to offshore markets.
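A minimal sketch of the premium calculation follows, assuming the index is the percentage gap between the Coinbase and Binance prices as the description above implies. The prices are hypothetical, and CryptoQuant's exact trading pairs and smoothing are not specified in the article.

```python
def coinbase_premium_percent(coinbase_price: float, binance_price: float) -> float:
    """Percentage by which the Coinbase price exceeds (or trails) the Binance price."""
    return (coinbase_price - binance_price) / binance_price * 100.0


# Hypothetical quotes for illustration only.
positive_case = coinbase_premium_percent(coinbase_price=2055.0, binance_price=2054.0)
negative_case = coinbase_premium_percent(coinbase_price=1998.0, binance_price=2000.0)

print(f"Premium:  {positive_case:+.3f}%")  # Coinbase trades slightly higher
print(f"Discount: {negative_case:+.3f}%")  # Coinbase trades slightly lower
```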

“Most of the moments when the ETH Coinbase premium turned positive were followed by an upward trend. And now, the Coinbase premium has risen to 0. We’ve reached a critical turning point,” an analyst wrote.

Meanwhile, some derivatives traders have also benefited from ETH’s rise. Data shared by OnchainLens shows that trader Machi’s leveraged 25x long on ETH has swung back into profit, now up more than $760,000.

Separately, the whale known as “pension-usdt.eth” has closed both ETH and BTC long positions, realizing approximately $1.16 million in gains.

For now, momentum appears positive, but whether it can be sustained in the coming weeks remains to be seen.

Sam Bankman-Fried’s social media campaign fails to sway Trump on pardon

The White House has reaffirmed that former FTX CEO Sam Bankman-Fried will not receive a presidential pardon, even as the disgraced crypto founder publicly courts President Donald Trump through a sustained social media campaign.

Summary

- The White House reaffirmed that Sam Bankman-Fried will not receive a presidential pardon, despite his recent public appeals directed at Donald Trump.

- Bankman-Fried has used social media to criticize the Justice Department and align himself with Trump’s rhetoric, in what observers see as a bid for clemency.

- Trump has previously stated he has no intention of pardoning the former FTX CEO, even as he has shown clemency toward other high-profile figures.

Sam Bankman-Fried’s Trump appeals fall flat

A White House spokesperson reiterated to the media that Trump has no plans to grant clemency to Bankman-Fried.

Bankman-Fried, serving a 25-year sentence for fraud and conspiracy related to the collapse of his cryptocurrency exchange FTX, has in recent weeks taken to platforms like X to align himself with Trump’s policies, criticize the judge who sentenced him, and lash out at his legal foes.

The messaging, widely interpreted as an attempt to influence Trump’s pardon calculus, also includes praise for conservative causes and disparagement of the Biden administration.

Despite these efforts, Trump’s position remains firm. In January, the president declared that Bankman-Fried was not among the individuals he intended to pardon — a list that also excludes other high-profile figures such as former New Jersey senator Robert Menendez and Venezuela’s Nicolás Maduro.

The White House statement reiterated this stance and suggested that the FTX founder’s public overtures have not altered Trump’s approach to clemency.

Bankman-Fried’s pivot toward Trump contrasts sharply with his earlier role as a major Democratic donor before FTX’s collapse. The shift in tone has been accompanied by amplification across various accounts on social media, with critics dismissing the campaign as ineffective “sock-puppet” activity.

The president has granted pardons to several figures associated with the cryptocurrency world, including Binance founder Changpeng Zhao and BitMEX’s leadership. Still, advisers and political observers view Bankman-Fried’s bid as unlikely to succeed, given his controversial reputation and the severity of his crimes.

Buterin outlines 4-year roadmap to faster, quantum-resistant Ethereum

Ethereum (CRYPTO: ETH) co-founder Vitalik Buterin has expanded on a four-year roadmap designed to dramatically accelerate block production and transaction confirmations. The Strawmap, a visual plan released by the Ethereum Foundation’s Protocol team, frames the network’s next phase as a sequence of incremental steps intended to make the blockchain feel more live and responsive rather than a system where users wait for each new block to arrive.

In a Thursday update, Buterin added detail to the Strawmap, noting that “fast slots” sit in their own lane within the plan and do not connect directly to the rest of the roadmap, which remains largely independent of the slot time. The core objective is to shrink the current 12-second block cadence toward as low as 2 seconds over time, enabling swifter confirmations and a more immediate user experience.

The roadmap outlines a measured path: 12 seconds down to 8, then 6, 4, and ultimately 2 seconds per slot, with each step pursued incrementally to minimize disruption while preserving security and network reliability. This approach is designed to avoid the complexity and risk of implementing sweeping changes all at once, favoring controlled, bite-sized upgrades that can be deployed with fewer unintended consequences.
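To put the slot-time steps in perspective, the sketch below converts each listed slot time into blocks per minute. It assumes one block per slot with no missed slots, which is a simplification made for illustration.

```python
# Slot times in seconds, as listed in the roadmap: today's cadence first, target last.
SLOT_SECONDS = [12, 8, 6, 4, 2]


def blocks_per_minute(slot_seconds: float) -> float:
    """Blocks produced per minute if every slot yields exactly one block."""
    return 60.0 / slot_seconds


cadence = {s: blocks_per_minute(s) for s in SLOT_SECONDS}
speedup_vs_today = {s: SLOT_SECONDS[0] / s for s in SLOT_SECONDS}

for s in SLOT_SECONDS:
    print(f"{s:>2}s slots: {cadence[s]:4.1f} blocks/min, {speedup_vs_today[s]:.1f}x today's cadence")
```

On these assumptions, the final step to 2-second slots would mean six times as many blocks per minute as the current 12-second cadence.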

The Strawmap also highlights improvements to peer-to-peer communication among Ethereum nodes. By refining how blocks and data are shared—reducing duplicated data transfers and accelerating how quickly nodes achieve consensus—the network can sustain shorter slot times without compromising security. Buterin described these P2P enhancements as essential to making shorter slots viable while preserving the network’s integrity.

Finality from minutes to seconds

The second major thrust in the Strawmap is finality—the point at which a transaction is mathematically irreversible. Today, finality sits around 16 minutes, but the roadmap envisages a target window of roughly 6 to 16 seconds, achieved by replacing the current, more complex confirmation regime with a simpler, cleaner model that is also designed to be quantum-resistant.

“The goal is to decouple slots and finality, to allow us to reason about both separately,” Buterin explained. He described this as an invasive set of changes, prompting the team to bundle the most significant upgrade with a cryptographic switch—specifically a move to post-quantum hash-based signatures—to minimize risk and complexity across forks.

The push toward quantum resistance is anchored in a staged approach: slots would become quantum-resistant earlier than finality, a decision that could see the chain continue to function even if distant quantum threats emerged before full post-quantum finality is achieved. “One interesting consequence of the incremental approach is that there is a pathway to making the slots quantum-resistant much sooner than making the finality quantum-resistant,” Buterin noted. In practical terms, the network might quickly reach a regime where, if quantum computers materialize, the finality guarantee could be suspended temporarily, yet the chain would continue to operate.

Guardrails aside, the overarching plan is to pursue a component-by-component replacement of Ethereum’s slot structure and consensus, yielding a cleaner, simpler, quantum-resistant, prover-friendly, end-to-end formally verified framework. The four-year horizon envisages seven forks, roughly every six months, with Glamsterdam and Hegotá already confirmed for later this year.

The Strawmap is the Ethereum Foundation’s attempt to visualize a long view for Ethereum’s evolution beyond today’s constraints, balancing speed, security, and future-proof cryptography.

Key takeaways

- Current block time sits around 12 seconds, with the roadmap aiming for a path down to 2 seconds per slot in incremental steps.

- Improvements to peer-to-peer data sharing are designed to reduce block propagation time without sacrificing security.

- Finality is targeted to move from minutes (roughly 16) toward seconds (6–16) through a simpler, quantum-resistant approach to confirmations.

- The plan calls for seven forks over four years, with Glamsterdam and Hegotá already confirmed for later this year.

- Cryptography changes are paired with the upgrade path, including a shift to post-quantum hash-based signatures to support long-term security.

Tickers mentioned: $ETH

Sentiment: Neutral

Market context: The drive to accelerate Ethereum’s block production and simplify finality sits within broader industry efforts to improve L1 throughput while preparing for future cryptographic threats, all against a backdrop of growing demand for faster, more scalable blockchain services and ongoing debates about post-quantum readiness.

Why it matters

The Strawmap represents a fundamental rethinking of how Ethereum validates transactions and finalizes states. By decoupling slot timing from finality, the network aims to create a more modular upgrade path. This modularity could allow developers to test and deploy changes in smaller, safer increments, reducing the risk of destabilizing the network during major upgrades.

From a user and developer perspective, shorter slot times could translate into faster inclusion of transactions and more responsive DeFi and smart contract interactions. For validators and node operators, the proposed P2P optimizations and cryptographic shifts are expected to lessen the burden of processing large data loads and maintaining security in the face of emerging quantum-era threats, respectively.

Yet the changes are not trivial. The shift to a new cryptographic regime and the introduction of a simplified finality mechanism will require careful implementation across forks, with substantial testing to prevent disruption. The four-year horizon and seven forks underscore the breadth of coordination required among developers, researchers, and the wider ecosystem to ensure a smooth transition.

What to watch next

- The first planned forks under the Strawmap timeline, Glamsterdam and Hegotá, which are slated for later this year, and their specific upgrade goals.

- Ongoing work on node communication protocols and data sharing improvements to reduce block propagation times.

- The cryptography switch to post-quantum signatures and the associated testing cycles across testnets and mainnet participants.

- Public updates from the Ethereum Foundation’s Protocol team on fork schedules and implementation milestones.

What Strawmap changes for Ethereum’s block production and finality

Ethereum’s roadmap, as articulated by Vitalik Buterin and the Ethereum Foundation, centers on a deliberate, phased approach to transforming how blocks are produced and how state changes become final. At the heart of the plan is the intent to shrink the slot time—a metric that dictates how quickly new blocks are produced—from the current roughly 12 seconds toward a target as low as 2 seconds. The progression is designed to be gradual: 12 → 8 → 6 → 4 → 2 seconds, with each step evaluated for security and performance before advancing. This geometric, square-root-inspired trajectory is intended to preserve the network’s integrity while delivering tangible increases in transaction throughput and responsiveness.

Parallel to slot-time optimization, the Strawmap emphasizes improvements to how Ethereum nodes communicate with one another. By enhancing the efficiency of block propagation—reducing redundant data, and optimizing the sharing of new blocks and related information—it’s possible to support shorter slots without broadening attack surfaces or creating bottlenecks. Buterin has underscored that these improvements should not come at the expense of security, arguing that better messaging and data handling can unlock faster consensus without inviting new risks.

The roadmap’s second major thrust—finality—targets a dramatic reduction in the time required to irreversibly confirm a transaction. Where today finality hinges on a multi-layer, often lengthy confirmation process, the plan envisions a streamlined mechanism that can achieve finality within a window of about 6 to 16 seconds. A key part of this redesign is the switch to a more straightforward cryptographic architecture designed to be post-quantum resistant. This aligns with Ethereum Foundation materials that stress quantum readiness and the need to secure long-term security guarantees as the ecosystem scales.

To manage the scope and risk of such a sweeping overhaul, the strategy involves a decoupled approach to slots and finality. By treating these components as separable concerns, the network can be reasoned about more clearly, with targeted upgrades deployed in discrete forks. Buterin described the changes as highly invasive, which is why the team plans to bundle the most significant upgrade with the cryptographic switch to post-quantum hash-based signatures. This pairing aims to minimize disruption while laying the groundwork for future-proof security in a post-quantum era.

A notable implication of this incremental path is a staged advancement toward quantum resistance for slots ahead of finality. If quantum hardware were suddenly to arrive, there could be a temporary loss of finality guarantees; however, the chain would continue to operate, preserving usability and security in parallel. The overall trajectory anticipates ongoing, progressive reductions in both slot time and finality time, with a long horizon that envisions seven forks over four years and periodic, well-communicated upgrades designed to minimize risk for users and operators alike.

China holiday spending sends a strong signal on consumer stimulus plans

BEIJING — China’s consumer market is recovering — just enough that policymakers likely won’t need to roll out the large-scale stimulus that investors have long hoped for.

The nine-day Lunar New Year holiday, which ended Monday, saw a steady rise in spending across the country, from hotel bookings to duty-free shopping. Rail travel hit a record of over 18.7 million passengers in a single day.

The better-than-expected data suggest that Beijing’s recent support measures are effective, while underscoring a broader consumer trend: spending on experiences such as travel and entertainment is still picking up faster than traditional goods, CCB International Securities said in a report Tuesday.

China’s retail sales have remained sluggish since the pandemic. Unlike the U.S., which handed out cash to consumers, Beijing has instead offered trade-in programs and vouchers. Chinese authorities have increasingly emphasized the need to boost consumers’ incomes, but have yet to release details.

That’s not likely to change soon.

“Policymakers are likely to build on the positive [holiday] momentum and introduce targeted, incremental easing around the March Two Sessions to stabilize expectations and sustain the recovery,” the CCB analysts said, referring to the annual parliamentary meetings that kick off next week.

Chinese Premier Li Qiang is set to announce the year’s economic targets and policy priorities on March 5.

Still price-conscious

Despite the travel rebound, consumers remained price sensitive. Nationwide, tourism trips per day grew by 5.7% on average from a year ago, in line with 2025, according to official holiday figures released late Tuesday. Even though spending climbed by 5.5%, it slowed from 7% in 2025.

“Such trends reflect better sentiment from a longer holiday, but consumers remained budget cautious in general,” Morgan Stanley Equity Analyst Lillian Lou said in a report Wednesday.

In a sign of persistent deflationary pressure, the holiday recorded a 0.2% drop in average spend per tourist trip compared with a year ago, according to CNBC’s analysis of official data.
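The per-trip figure follows from the two growth rates reported above. The sketch below is one way to reproduce it, assuming trips and spending are measured on the same daily-average basis; it is not necessarily the exact method behind the cited analysis.

```python
trips_growth = 0.057     # tourism trips per day, year over year
spending_growth = 0.055  # tourism spending, year over year

# Spend per trip = total spending / number of trips, so its growth is the ratio of the two.
per_trip_change = (1.0 + spending_growth) / (1.0 + trips_growth) - 1.0

print(f"Change in average spend per trip: {per_trip_change * 100:.2f}%")
```

Because trips grew slightly faster than spending, the average outlay per trip slipped by about 0.2%.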

To boost consumer spending, China extended the official holiday period by one day compared with last year. Many people also took personal leave around the holiday, suggesting the official figures may not capture the entire spending picture.

“The extended holiday encouraged families to travel together,” Jihong He, chief strategy officer at H World Group, one of China’s largest hotel operators, said in a statement.

“That shift is driving demand for larger rooms and family-friendly configurations designed for shared experiences,” He said.

H World operates more than 12,000 hotels across over 30 brands in mainland China. For the Lunar New Year, the company said the top 10 destinations, with hotel occupancy rates of 90% or higher, were all located in southern or coastal cities, including Sanya in the tropical island province of Hainan.

China in December expanded a zero-tariff policy for the island to encourage duty-free luxury goods purchases within the mainland. Official figures showed Hainan’s holiday-period duty-free sales rose 30.8% from a year ago to 2.72 billion yuan ($400 million).

Alibaba-owned travel booking platform Fliggy said bookings for hotel and theme park packages during the holiday season more than doubled from last year. More remote, scenic destinations such as Altay in Xinjiang and Pu’er in Yunnan also saw bookings more than double, the company said.

Government support

China has sought to promote its growing services sector. This month, the National Bureau of Statistics disclosed that it was giving more weight to services in its consumer price index than in the previous base period in 2020.

Even consumer goods in China are increasingly oriented towards dining and social activities, Bruce Pang, adjunct associate professor at CUHK Business School, said in Chinese remarks translated by CNBC.

The key to consumption recovery is confidence in income and employment prospects, he said, rather than shopping promotions. Policymakers should place greater emphasis on those long-term issues, Pang added.

In the fall, China’s top leaders pledged to boost consumption over the next five years, and have subsequently said the country will prioritize domestic demand.

Local governments in China issued more than 2.05 billion yuan in consumption vouchers and subsidies ahead of the holiday, CCB analysts said, “effectively putting a floor under demand.”

However, prioritizing consumption does not necessarily signal sweeping stimulus, said Liqian Ren, director of Modern Alpha at U.S.-based fund manager WisdomTree.

Instead, Beijing appears focused on preventing consumption growth from slipping below a certain level, Ren noted, indicating sector growth of roughly 2% to 3%.

Can bulls break $2 as Bitcoin reclaims $65K?

XRP price is back in focus as Bitcoin stages a sharp 24-hour rebound, reclaiming the $65,000 level after dipping to roughly $62,800 earlier this week.

Summary

- Bitcoin has rebounded to $65,000 after defending the $62,800 support zone, shifting short-term momentum back to buyers.

- XRP is consolidating near $1.36, with resistance at $1.45 and $1.60, while $2 remains a distant macro target.

- The XRP/BTC pair remains in a broader downtrend, suggesting XRP is still underperforming Bitcoin despite improving momentum indicators.

Can XRP price follow Bitcoin’s $65K rebound?

The Bitcoin (BTC) price chart shows a strong impulsive bounce, with BTC climbing back above short-term consolidation levels and attempting to stabilize after the heavy sell-off on Feb. 23–24.

The recovery suggests buyers are defending the mid-$62K region, turning it into near-term support, while $66,000–$67,000 now stands as immediate resistance.

Against this backdrop, the Ripple token (XRP) is trading near $1.36 on the daily chart, consolidating after a prolonged downtrend from above $2.20 in January. Price action shows XRP holding above the $1.30 support zone, with stronger structural support sitting near $1.20, the level that triggered the early-February bounce.

On the upside, XRP faces layered resistance at $1.45 and $1.60. A break above $1.60 would open the path toward $1.80, but bulls would still need a sustained breakout above that level before $2.00 comes into focus. At present, the $2 mark remains a distant macro resistance rather than an immediate target.

Indicators show tentative improvement. Balance of Power has flipped positive at 0.28, suggesting buyers are regaining short-term control, while the Chaikin Money Flow (CMF) has turned slightly positive at 0.03 — signaling mild capital inflows.

However, neither indicator reflects strong bullish momentum yet.

Meanwhile, the XRP/BTC pair remains in a broader downtrend, hovering around 0.0000209 BTC, indicating XRP is still underperforming Bitcoin. For a credible move toward $2, XRP would likely need not just Bitcoin stability above $65K, but also renewed relative strength against BTC.
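The relationship between the two charts is simple division, and a short sketch shows why relative strength matters. It uses the approximate prices quoted in this article; the scenario figures are illustrative arithmetic, not forecasts.

```python
xrp_usd = 1.36       # approximate XRP price cited above
btc_usd = 65_000.0   # approximate BTC price cited above
target_xrp_usd = 2.0

# The XRP/BTC pair is the XRP dollar price divided by the BTC dollar price.
xrp_btc = xrp_usd / btc_usd

# Scenario A: the pair stays flat, so XRP only reaches $2 if BTC rises proportionally.
btc_needed_at_flat_ratio = target_xrp_usd / xrp_btc

# Scenario B: BTC holds at $65K, so the pair itself has to rise.
ratio_needed_at_flat_btc = target_xrp_usd / btc_usd
ratio_gain_needed = ratio_needed_at_flat_btc / xrp_btc - 1.0

print(f"XRP/BTC now: {xrp_btc:.7f}")
print(f"BTC needed with a flat pair: ${btc_needed_at_flat_ratio:,.0f}")
print(f"Pair gain needed with BTC flat: {ratio_gain_needed * 100:.0f}%")
```

On these inputs, a flat pair would require Bitcoin well above $90,000 for XRP to trade at $2, while a flat Bitcoin would require the pair to gain roughly 47%.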

For now, XRP’s outlook improves if $1.30 holds, but a decisive breakout above $1.60 is the real trigger bulls must clear before $2 enters the conversation. At current momentum, a move to $2 would likely require a broader market breakout led by Bitcoin clearing $67K.

Bitcoin’s 200-Week Trend Line Is Next on the Horizon for Bulls

Bitcoin began an assault on the 200-week exponential moving average from below in fresh signs of upward BTC price momentum at the start of the US session.

Bitcoin (BTC) hit $67,000 at Wednesday’s Wall Street open as bulls shook off fresh US tariff pledges.

Key points:

- Bitcoin enjoys a sustained rebound as BTC price action rises above $67,000.

- A key long-term trend line now comes back into view, with the weekly close in focus.

- Gold analysis reveals a developing RSI divergence with Bitcoin.

BTC price sets up rematch with 200-week trend

Data from TradingView showed daily BTC price gains hitting 4.5% as a local rebound continued.

Bitcoin appeared unfazed by an announcement from U.S. Trade Representative Jamieson Greer over 15% tariffs, which may become reality “within the coming days.”

“So right now, as we talked about, 10% is in place. There will be a proclamation raising it to 15% where appropriate,” he told Bloomberg.

Tariff headlines often spark volatility in crypto markets, although their impact has cooled in recent months.

Already enjoying respite from sustained selling pressure, BTC/USD thus approached a key long-term level in the form of the 200-week exponential moving average (EMA).

As Cointelegraph reported, BTC price losing the level as support has become a classic bear market signal.

Commenting, trader and analyst Rekt Capital repeated analysis from earlier in February, suggesting that the upcoming weekly close should be above the 200-week EMA, now at $68,330.

Trader Castillo Trading also eyed weekly time frames, with a potential upside target near $74,500 — Bitcoin’s 2025 yearly lows.

“Still watching the same Weekly structure on $BTC. Nice start on the bounce, and if this continues to hold as a support level a good re-test and potential short could be that 2025 Yearly Lows level around $74,492,” Castillo Trading wrote in an X post on Feb. 25, 2026.

Bitcoin teases RSI bullish divergence versus gold

As gold ranged above the $5,000 per ounce mark, meanwhile, crypto trader, analyst and entrepreneur Michaël van de Poppe saw reason for Bitcoin bulls to stay optimistic.

“Interesting enough; There’s a strong bullish divergence on the daily chart of $BTC vs. Gold,” he told X followers on the day, referring to the relative strength index (RSI).

“It’s not confirmed, but given the recent strength (today and yesterday) in Bitcoin, I think a slight rotation is starting. It’s about time.”

Such a turnaround in capital flows would upend market opinions from earlier in the year.

As Cointelegraph reported, analysis even concluded that Bitcoin had lost its quest to be “digital gold” with its comedown from October 2025 all-time highs.

This article does not contain investment advice or recommendations. Every investment and trading move involves risk, and readers should conduct their own research when making a decision. While we strive to provide accurate and timely information, Cointelegraph does not guarantee the accuracy, completeness, or reliability of any information in this article. This article may contain forward-looking statements that are subject to risks and uncertainties. Cointelegraph will not be liable for any loss or damage arising from your reliance on this information.

Circle Beats Earnings as USDC Circulation Hits $75B

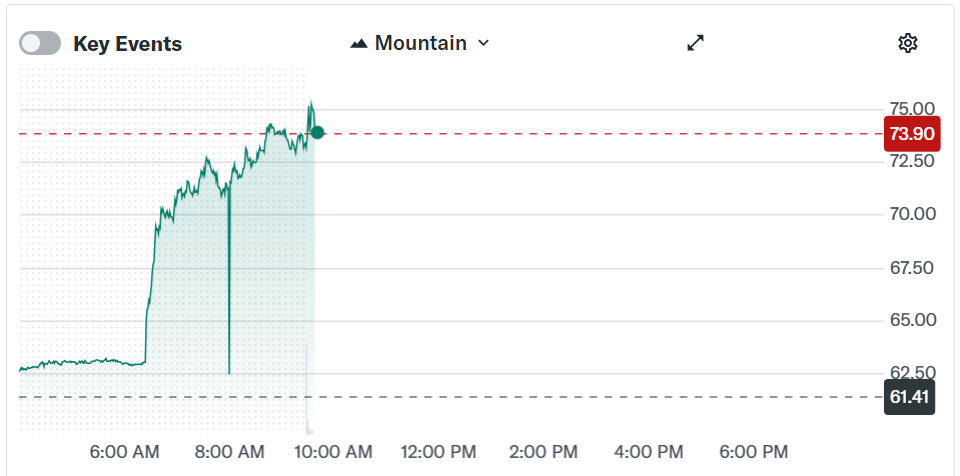

Stablecoin issuer Circle Internet Group reported stronger-than-expected fourth-quarter earnings on Wednesday, driven by rapid growth in its USDC stablecoin business and expanding payments operations, underscoring continued momentum in an otherwise challenging crypto market.

For the quarter ending Dec. 31, 2025, Circle posted revenue of $770 million, a 77% increase from a year earlier, and reported net income of $133.4 million, or 43 cents per share. Analysts expected per-share earnings of 16 cents on revenue of $747 million.

The strong quarter was fueled in part by a 72% year-on-year increase in the circulation of Circle’s US dollar-pegged stablecoin, USDC (USDC), which reached about $75.3 billion by year-end.

For the full year 2025, Circle reported revenue of $2.7 billion, up 64% from the prior year. The company recorded a net loss of $70 million for the year, largely due to $424 million in stock-based compensation tied to its 2025 initial public offering (IPO).

Despite the annual loss, operating income was positive at about $157 million, reflecting solid underlying performance.
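The growth rates above imply year-ago baselines that can be backed out with simple division. The sketch below does so using only the figures reported in this article; the derived prior-period values are rounded estimates, not numbers disclosed by Circle.

```python
def implied_prior(current: float, growth_rate: float) -> float:
    """Back out the year-ago value from a current value and its year-over-year growth."""
    return current / (1.0 + growth_rate)


q4_revenue_prior = implied_prior(770.0, 0.77)      # $ millions, Q4 a year earlier
usdc_supply_prior = implied_prior(75.3, 0.72)      # $ billions, USDC a year earlier
full_year_revenue_prior = implied_prior(2.7, 0.64) # $ billions, prior full year

eps_beat_cents = 43 - 16           # reported EPS minus the analyst estimate
revenue_beat_musd = 770.0 - 747.0  # reported revenue minus the estimate, $ millions

print(f"Implied Q4 revenue a year earlier:  ${q4_revenue_prior:.0f}M")
print(f"Implied USDC supply a year earlier: ${usdc_supply_prior:.1f}B")
print(f"Implied prior full-year revenue:    ${full_year_revenue_prior:.2f}B")
print(f"EPS beat: {eps_beat_cents} cents; revenue beat: ${revenue_beat_musd:.0f}M")
```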

Circle’s shares surged on the news, rising more than 20% in early trading Wednesday morning, to nearly $74.

Arc rollout and policy tailwinds bolster Circle’s expansion

Circle highlighted several operational milestones during the quarter, including the public testnet launch of Arc, its new blockchain infrastructure platform designed to help institutions build tokenized financial applications. More than 100 institutional participants have joined the testnet, the company said.

The Circle Payments Network, a cross-border payments coordination layer enabling banks to settle transactions using stablecoins, expanded to 55 financial institutions, with additional companies undergoing eligibility review and onboarding.

While Circle is best known for issuing USDC, the world’s second-largest stablecoin by market capitalization, its euro-denominated stablecoin, EURC, also posted strong growth. EURC circulation reached 310 million euros ($365 million), up 284% year over year.

Circle has also benefited from a more favorable regulatory backdrop in the United States under President Donald Trump’s administration, including the passage of the GENIUS Act, which establishes a federal framework for payment stablecoins and issuer oversight.

However, broader industry momentum has faced hurdles. As The Wall Street Journal reported, progress on a separate market structure bill known as the CLARITY Act has stalled amid ongoing tensions between crypto industry advocates and banking groups over issues including stablecoin yield and reward mechanisms.

Mutuum Finance (MUTM) V1 Protocol: Feature Expansion & DeFi

DeFi cryptocurrency Mutuum Finance has launched its V1 protocol on the Sepolia testnet, introducing the core mechanics of its lending and borrowing system. The team also stated that an additional feature is scheduled to be rolled out next week as development continues.

Mutuum Finance Protocol Upgrade

In a recent statement published on X, the team confirmed that it is working on several upcoming features while refining key components of the codebase, including optimizations to the Stability Factor. According to the update, a new protocol feature is expected to be released in the coming week.

The project has reported more than $20.6 million raised to date, with over 19,000 holders of its MUTM token, currently priced at $0.04. In the same update, the team noted that the Sepolia testnet version of its lending and borrowing protocol has surpassed $90 million in testnet total value locked (TVL), reflecting simulated liquidity activity during beta testing.

Lending and Borrowing with Mutuum Finance

In the current beta version, users can interact with the protocol’s core functionality. The interface displays a protocol overview including total liquidity, available liquidity, and total variable debt. Four assets are currently supported for minting and interaction on testnet: ETH, USDT, LINK, and WBTC. The portfolio section provides data on net worth, net APY, Stability Factor, and total supplied and borrowed balances, with mtTokens also integrated into the current version of the platform.

When users supply assets to the platform, they receive corresponding mtTokens as proof of deposit. For example, supplying WBTC results in the issuance of mtWBTC. These tokens accrue value over time based on the applicable APY, which is determined by pool utilization.

For example, a user who deposits $10,000 worth of USDT into the protocol receives mtUSDT in return. If the annual percentage yield (APY) averages around 4–5% over a one-year period, the position could generate approximately $400 to $500 in passive income, depending on pool utilization and borrowing demand. In addition, users can stake their mtTokens within the safety module, where eligible participants receive dividends denominated in MUTM tokens.
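The yield figures in the USDT example can be checked with a few lines of arithmetic. The sketch below is illustrative only: the function name `projected_yield` is hypothetical, and it assumes the APY stays fixed for the whole period, which a utilization-driven rate would not do in practice.

```python
def projected_yield(principal_usd: float, apy: float, years: float = 1.0) -> float:
    """Interest earned on a deposit, assuming a constant APY (an assumption;
    actual rates vary with pool utilization and borrowing demand)."""
    # APY already includes compounding, so growth over `years` is (1 + apy) ** years.
    return principal_usd * ((1 + apy) ** years - 1)


# The article's example: $10,000 of USDT at an average APY of 4% to 5% for one year.
low = projected_yield(10_000, 0.04)
high = projected_yield(10_000, 0.05)
print(f"Estimated one-year yield: ${low:,.2f} to ${high:,.2f}")
```

Under that fixed-rate assumption, the result matches the $400 to $500 range quoted above.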

On the borrowing side, collateral is required to secure loans and protect the protocol against default risk. Rather than selling assets, users can post them as collateral and borrow against their value. For example, an investor holding $1,000 worth of ETH who does not wish to liquidate the position can deposit that ETH as collateral and borrow USDT. The borrowed stablecoins can then be used for expenses or deployed into other investments, while the user retains exposure to potential upside in ETH. Once the borrowed amount and accrued interest are repaid, the full collateral can be withdrawn.

Audited Protocol

Mutuum Finance has undergone a security audit of its lending and borrowing protocol conducted by Halborn, a blockchain security firm that has also performed audits for major projects such as Solana. In addition, the MUTM token smart contract was reviewed by CertiK, receiving a Token Scan score of 90 out of 100.

In partnership with CertiK, Mutuum Finance has established a bug bounty program with a reward pool of up to $50,000, aimed at identifying potential vulnerabilities and strengthening protocol security.

The total supply of MUTM is capped at 4 billion tokens. A portion of this allocation is designated for incentives, including giveaways, leaderboard bonuses, and other community reward programs.

Mutuum Finance continues to advance development of its lending and borrowing protocol as testing progresses on the Sepolia network. With additional features scheduled for rollout and security reviews completed, the project remains focused on refining its infrastructure ahead of full deployment.


U.S. Treasury sanctions Operation Zero over stolen cyber tools

The U.S. Department of the Treasury has sanctioned a Russia-based cyber “exploit broker” and its affiliates in a high-profile national security action targeting the theft and sale of proprietary U.S. government cyber tools, officials announced Tuesday.

Summary

- The U.S. Treasury sanctioned Russian exploit broker Operation Zero and associates for trafficking stolen U.S. cyber tools, using the Protecting American Intellectual Property Act.

- The action adds individuals and entities to the SDN list, blocking their U.S. assets and barring U.S. persons from dealings with them.

- The sanctions coincide with a DOJ and FBI investigation into a former defense contractor employee who sold proprietary cyber tools for cryptocurrency.

Operation Zero blacklisted by U.S.

The designation marks the first use of the Protecting American Intellectual Property Act (PAIPA) in a sanctions case aimed at combatting digital trade-secret theft.

The Treasury’s Office of Foreign Assets Control (OFAC) placed Russian national Sergey Sergeyevich Zelenyuk and his St. Petersburg-based company Matrix LLC, also known as Operation Zero, on the Specially Designated Nationals (SDN) list, along with five associated individuals and entities.

The sanctions target the acquisition and redistribution of “exploits,” specialized computer code that can be used to take advantage of vulnerabilities in widely used software.

According to the Treasury, at least eight U.S. government cyber tools developed for defense and intelligence use were stolen from a U.S. company and allegedly sold by Operation Zero to unauthorized actors.

In its announcement, the Treasury said that Zelenyuk and his network offered substantial bounties to obtain exploits and then monetized the tech with buyers in Russia and elsewhere. Federal officials have expressed concern that such tools could be used for criminal activity or espionage, including ransomware and other destabilizing cyber operations.

The sanctions also encompass individuals linked to the group’s operations, including an affiliate company based in the United Arab Emirates and suspected members of the Trickbot cybercrime gang, previously sanctioned in other actions.

Under U.S. sanctions law, the property and interests of SDN-designated persons within U.S. jurisdiction are blocked, and U.S. persons are generally prohibited from engaging in transactions with them.

The action works in tandem with an ongoing criminal investigation by the Department of Justice and FBI into a former U.S. defense contractor employee who pleaded guilty last year to stealing the cyber tools and selling them for cryptocurrency.

Treasury officials said the sanctions aim to deter future theft of American intellectual property that could threaten national security, underscoring Washington’s broader strategy to hold foreign cyber actors accountable through economic and financial tools.


Kalshi Bans US Politician Over Insider Trading

A regulatory spotlight has intensified around prediction markets after Kalshi, a Commodity Futures Trading Commission-regulated platform, banned a high-profile political candidate for trading on his own candidacy. The case underscores how even modest bets on real-world outcomes can trigger fast discipline when they intersect with insider-trading rules, and it comes as lawmakers and agencies sharpen their focus on the speculative-use cases that have quietly grown alongside the crypto ecosystem.

Key takeaways

- Kalshi issued a five-year ban plus a $2,000 penalty after a former California gubernatorial candidate wagered on his own bid and publicized the action on social media, violating platform rules.

- Reports indicate that the description matches Kyle Langford, who has switched from running as a Republican to running as a Democrat and is now campaigning for California’s 26th Congressional District; Kalshi noted he is no longer seeking the governorship and is pursuing Congress instead.

- In a May 25, 2025 X post, Langford showed a Kalshi bet of $98.76 on the odds of his victory, a detail Kalshi disclosed as part of the enforcement case and the public record surrounding the incident.

- Separately, a YouTube editor, widely reported to be Artem Kaptur of MrBeast’s team, traded roughly $4,000 on YouTube stream markets between August and September 2025, resulting in a two-year penalty and about $20,000 in fines for insider-trading violations.

- Kalshi has signaled a broader crackdown, stating it has investigated around 200 cases, frozen several flagged accounts, and now operates with a surveillance audit committee and a partnership with Solidus Labs to detect market abuse as prediction markets scale.

Market context: Kalshi’s enforcement actions occur as prediction markets move toward greater mainstream participation and face intensified regulatory scrutiny. The company has pointed to internal surveillance capabilities and industry collaboration to curb abuse, while lawmakers have floated bills to curb insider trading among government insiders on these venues. The evolving framework aims to balance innovation with investor protection in markets that resemble, in some respects, both traditional trading and decentralized crypto ecosystems.

Why it matters

For traders and ordinary users, the Kalshi cases emphasize a core truth of prediction markets: information asymmetry and improper access carry legal risk. When a participant leverages privileged information—whether real-time, non-public data or an enhanced awareness of an opponent’s strategy—the odds of a fair outcome are eroded. Kalshi’s enforcement actions demonstrate that even seemingly modest bets can become substantial violations if they breach platform rules or disclosures, and they highlight the tension between the novelty of prediction markets and established securities-like expectations of fairness and compliance.

The enforcement framework also signals to other platforms that regulators and market monitors will pursue insider-trading and market-manipulation cases with visible penalties. Kalshi’s public disclosures about the Langford case and the YouTube-creator episode reveal a broader ambition: to deter participants from exploiting private information or unusual access to information channels, whether through social media disclosures, behind-the-scenes connections, or content-driven data streams. The platform’s stance can be read as a commitment to strict governance as prediction markets integrate with mainstream media, political events, and high-profile personalities.

From a policy perspective, the incidents sit at an intersection of financial-market integrity and digital-age governance. The industry has long argued that prediction markets offer useful foresight on real-world events, yet skeptics warn about the potential for manipulation and the overhang of regulatory risk. The Kalshi actions echo broader conversations in Washington about how to supervise new betting formats that blend real-world outcomes with digital platforms, while ensuring that insiders do not gain unfair advantage or profit from information unavailable to the broader public.

Beyond Kalshi, the regulatory mood has grown louder. Congressional discussions and CFTC-led efforts point to a growing taxonomy of enforcement priorities—insider trading, information leakage, and market abuse—that now extend to online prediction platforms with real-money stakes. In parallel, related coverage around Polymarket and other venues has amplified calls for clear guardrails, while public officials outline steps to harmonize the rules with ongoing crypto-market developments. The tension between innovation and accountability remains central to the evolving narrative around prediction markets and crypto-linked financial ecosystems.

In this environment, enforcement actions that surface publicly, such as the Langford-related ban and the YouTube-creator incident, serve as high-profile reminders for participants to treat prediction markets with the seriousness they deserve. Kalshi’s leadership has framed these cases as part of a broader discipline strategy, noting that its surveillance apparatus, governance enhancements, and third-party partnerships are designed to identify, investigate, and address market abuse before it becomes systemic.

What to watch next

- Follow Kalshi’s ongoing enforcement docket for new cases and the status of active investigations, including any additional penalties or account suspensions.

- Monitor the CFTC’s predicted shift toward formal advisory collaboration with industry players on prediction-market integrity and insider-trading enforcement.

- Watch for any legislative developments in the United States that would constrain or guide insider trading in prediction markets, especially in relation to government insiders.

- Track updates on Kalshi’s surveillance partnership with Solidus Labs and how their joint framework shapes market abuse detection across listings and events.

- Observe related coverage around high-profile figures and content creators involved in prediction-market activities, including how platforms handle disclosures and potential material non-public information (MNPI) issues.

Sources & verification

- Kalshi’s enforcement case page documenting the governance action and penalties tied to the California candidate case.

- Public X posts by Kyle Langford referencing his Kalshi bet and candidacy status.

- Reports surrounding Artem Kaptur and the YouTube-stream-market enforcement action, including Kalshi’s disclosures and penalties.

- Kalshi’s statements on expanding surveillance and partnering with Solidus Labs to address market abuse.

- CFTC leadership statements and the establishment of a prediction markets advisory group to coordinate enforcement efforts.

Kalshi enforcement actions highlight insider-trading risk in prediction markets

A political candidacy became the focal point for a broader discussion about market integrity after Kalshi announced a five-year ban and a $2,000 penalty on a former California gubernatorial hopeful who bet on his own bid and publicized it on X. The company said the individual placed a wager of about $200 on his candidacy, and Kalshi emphasized that the account did not generate profits from the trade. The public references tied to this case align with a broader pattern in which prediction-market platforms maintain strict prohibitions against insider trading, and violations are met with tangible penalties and disqualification from the platform.

The narrative quickly shifted to a widely discussed possible match with Kyle Langford, who has since pivoted to a bid for California’s 26th Congressional District. Kalshi confirmed that the description fits Langford, noting he is no longer pursuing the governorship and has turned his ambitions toward Congress. A May 25, 2025 post on X shows Langford sharing a video of himself placing the Kalshi bet, specifically $98.76 on the odds of victory. Kalshi stated that this account did not withdraw profits, and the case was reported to the CFTC for further review. The company’s decision to publicize the enforcement action underscores its commitment to transparency in maintaining a level playing field for all users.

In a separate enforcement action that drew public attention, Kalshi flagged a YouTube editor for insider-trading-like activity across YouTube stream markets during August and September 2025. The editor traded approximately $4,000 on Kalshi markets in ways that violated Kalshi’s internal rules, resulting in a two-year penalty and roughly $20,000 in fines. The platform described the trading as statistically anomalous, pointing to an unusually high success rate on markets with low odds. Kalshi’s investigators concluded that the individual likely had access to material non-public information, though the specific identity was not disclosed in the company’s public release. The coverage in mainstream media has widely identified the implicated party as Artem Kaptur, a member of MrBeast’s team, highlighting how public content creators can intersect with financial-market activity in novel ways.
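To illustrate why an unusually high success rate on low-odds markets stands out statistically, the sketch below computes a simple binomial tail probability. It is not Kalshi’s surveillance method, which the company has not detailed; the trade counts, the 10% implied odds, and the flagging threshold are all hypothetical values chosen for illustration.

```python
from math import comb


def binomial_tail(n: int, k: int, p: float) -> float:
    """Probability of at least k wins in n independent bets,
    each with win probability p (the market-implied odds)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical trader: 20 bets on markets priced at 10% implied odds, 12 of them won.
p_value = binomial_tail(n=20, k=12, p=0.10)

# Hypothetical threshold; a real surveillance system would calibrate this carefully.
is_anomalous = p_value < 1e-6
print(f"Chance of this record with no informational edge: {p_value:.2e}")
print(f"Flag for review: {is_anomalous}")
```

A very small tail probability does not prove access to non-public information, but it indicates that luck alone is an unlikely explanation and that the account merits closer review.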

Kalshi’s broader enforcement program is not limited to these cases; the platform has publicly disclosed investigations into around 200 cases and has frozen several flagged accounts. Earlier in the month, Kalshi announced the creation of a surveillance-audit committee and a collaboration with Solidus Labs to bolster its ability to detect market abuse and insider trading across its prediction markets. The aim is to raise the bar for governance, promote integrity, and deter would-be insiders from exploiting information asymmetries for personal gain as these markets continue to attract participation from a broader audience, including institutions and highly visible public figures.

The intensified regulatory posture surrounding prediction markets is also reflected in political developments. US lawmakers introduced a bill aimed at curbing trading by government insiders after a Polymarket user earned more than $400,000 on bets tied to Venezuelan President Nicolás Maduro—trades executed hours before U.S. authorities captured Maduro in Caracas. In response, the CFTC chair signaled that the agency would not hesitate to pursue violators, stating that a new advisory group would work with industry participants to identify and address insider trading in prediction markets. The combined signal from Kalshi, policymakers, and regulators suggests a turning point for how these markets are policed as they move from niche experiments to potential mainstream financial instruments.

As this environment evolves, the line between innovation and enforcement becomes more pronounced. Kalshi’s actions, the high-profile cases, and the regulatory dialogue reflect a broader industry shift toward more robust surveillance, clearer governance, and stricter penalties for those who undermine market integrity. For users, developers, and participants in the growing ecosystem around event-based markets, these developments serve as a reminder to prioritize compliance, transparency, and responsible trading practices—an essential framework if prediction markets are to achieve scalable trust and sustainable growth.
