Technology

Why algorithms show violence to boys

It was 2022 and Cai, then 16, was scrolling on his phone. He says one of the first videos he saw on his social media feeds was of a cute dog. But then, it all took a turn.

He says “out of nowhere” he was recommended videos of someone being hit by a car, a monologue from an influencer sharing misogynistic views, and clips of violent fights. He found himself asking – why me?

Over in Dublin, Andrew Kaung was working as an analyst on user safety at TikTok, a role he held for 19 months from December 2020 to June 2022.

He says he and a colleague decided to examine what users in the UK were being recommended by the app’s algorithms, including some 16-year-olds. Not long before, he had worked for rival company Meta, which owns Instagram – another of the sites Cai uses.

When Andrew looked at the TikTok content, he was alarmed to find that some teenage boys were being shown posts featuring violence and pornography, and promoting misogynistic views, he tells BBC Panorama. He says that, in general, teenage girls were recommended very different content based on their interests.

TikTok and other social media companies use AI tools to remove the vast majority of harmful content and to flag other content for review by human moderators, regardless of the number of views they have had. But the AI tools cannot identify everything.

Andrew Kaung says that during the time he worked at TikTok, all videos that were not removed or flagged to human moderators by AI – or reported by other users to moderators – would only then be reviewed again manually if they reached a certain threshold.

He says at one point this was set to 10,000 views or more. He feared this meant some younger users were being exposed to harmful videos. Most major social media companies allow people aged 13 or above to sign up.
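The review rule Kaung describes can be sketched as a simple decision function. This is a hypothetical illustration built only from his account: the `Video` fields, the function name `reaches_human_moderator` and the fixed 10,000-view threshold are assumptions for the example, not TikTok’s actual code or configuration. It shows the gap he worried about: a video that slips past the AI and is never reported gets no human review until it crosses the threshold.

```python
from dataclasses import dataclass

# Hypothetical value taken from the 10,000-view figure Kaung recalls;
# not a real TikTok configuration setting.
REVIEW_THRESHOLD_VIEWS = 10_000


@dataclass
class Video:
    video_id: str
    views: int
    removed_by_ai: bool = False      # taken down automatically
    flagged_by_ai: bool = False      # sent to a human moderator by the AI
    reported_by_users: bool = False  # sent to a human moderator by a user report


def reaches_human_moderator(video: Video, threshold: int = REVIEW_THRESHOLD_VIEWS) -> bool:
    """Return True if, under the described rule, a human moderator would look at this video."""
    if video.removed_by_ai:
        return False  # already gone, nothing left to review
    if video.flagged_by_ai or video.reported_by_users:
        return True   # routed to moderators straight away
    # Everything else is reviewed manually only once it is popular enough.
    return video.views >= threshold


if __name__ == "__main__":
    unflagged = Video("clip-1", views=9_999)
    print(reaches_human_moderator(unflagged))  # False: 9,999 views, no human check yet
```

TikTok says it also carries out proactive investigations on videos below this number of views, which this sketch does not model.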

TikTok says 99% of content it removes for violating its rules is taken down by AI or human moderators before it reaches 10,000 views. It also says it undertakes proactive investigations on videos with fewer than this number of views.

When he worked at Meta between 2019 and December 2020, Andrew Kaung says there was a different problem. He says that, while the majority of videos were removed or flagged to moderators by AI tools, the site relied on users to report other videos once they had already seen them.

He says he raised concerns while at both companies, but was met mainly with inaction because, he says, of fears about the amount of work involved or the cost. He says subsequently some improvements were made at TikTok and Meta, but he says younger users, such as Cai, were left at risk in the meantime.

Several former employees from the social media companies have told the BBC Andrew Kaung’s concerns were consistent with their own knowledge and experience.

Algorithms from all the major social media companies have been recommending harmful content to children, even if unintentionally, UK regulator Ofcom tells the BBC.

“Companies have been turning a blind eye and have been treating children as they treat adults,” says Almudena Lara, Ofcom’s online safety policy development director.

‘My friend needed a reality check’

TikTok told the BBC it has “industry-leading” safety settings for teens and employs more than 40,000 people working to keep users safe. It said that this year alone it expects to invest “more than $2bn (£1.5bn) on safety”, and that it finds 98% of the content it removes for breaking its rules proactively.

Meta, which owns Instagram and Facebook, says it has more than 50 different tools, resources and features to give teens “positive and age-appropriate experiences”.

Cai told the BBC he tried to use one of Instagram’s tools and a similar one on TikTok to say he was not interested in violent or misogynistic content – but he says he continued to be recommended it.

He is interested in UFC – the Ultimate Fighting Championship. He also found himself watching videos from controversial influencers when they were sent his way, but he says he did not want to be recommended this more extreme content.

“You get the picture in your head and you can’t get it out. [It] stains your brain. And so you think about it for the rest of the day,” he says.

Girls he knows who are the same age have been recommended videos about topics such as music and make-up rather than violence, he says.

Meanwhile Cai, now 18, says he is still being pushed violent and misogynistic content on both Instagram and TikTok.

When we scroll through his Instagram Reels, they include an image making light of domestic violence. It shows two characters side by side, one of whom has bruises, with the caption: “My Love Language”. Another shows a person being run over by a lorry.

Cai says he has noticed that videos with millions of likes can be persuasive to other young men his age.

For example, he says one of his friends became drawn into content from a controversial influencer – and started to adopt misogynistic views.

His friend “took it too far”, Cai says. “He started saying things about women. It’s like you have to give your friend a reality check.”

Cai says he has commented on posts to say that he doesn’t like them, and when he has accidentally liked videos, he has tried to undo it, hoping it will reset the algorithms. But he says he has ended up with more videos taking over his feeds.

So, how do TikTok’s algorithms actually work?

According to Andrew Kaung, the algorithms’ fuel is engagement, regardless of whether the engagement is positive or negative. That could explain in part why Cai’s efforts to manipulate the algorithms weren’t working.

The first step for users is to specify some likes and interests when they sign up. Andrew says some of the content initially served up by the algorithms to, say, a 16-year-old, is based on the preferences they give and the preferences of other users of a similar age in a similar location.

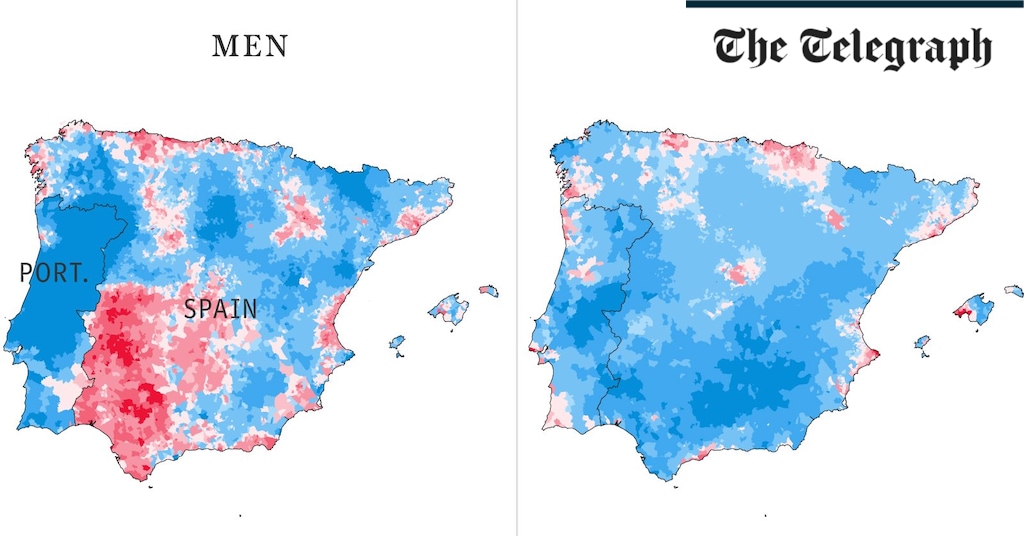

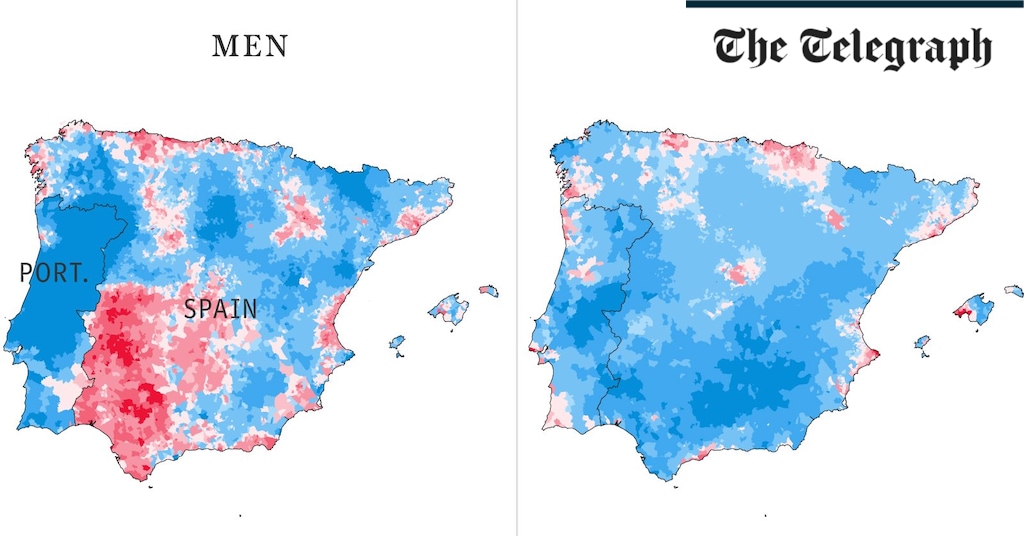

According to TikTok, the algorithms are not informed by a user’s gender. But Andrew says the interests teenagers express when they sign up often have the effect of dividing them up along gender lines.

The former TikTok employee says some 16-year-old boys could be exposed to violent content “right away”, because other teenage users with similar preferences have expressed an interest in this type of content – even if that just means spending more time on a video that grabs their attention for that little bit longer.

The interests indicated by many teenage girls in profiles he examined – “pop singers, songs, make-up” – meant they were not recommended this violent content, he says.
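The cold-start behaviour Kaung describes can be illustrated with a toy form of collaborative filtering. Everything here is hypothetical: the `User` fields, the one-year age window, the use of seconds watched as the engagement signal and the function `cold_start_topics` are assumptions for illustration, not TikTok’s system. Gender never appears as an input, yet a new user’s declared interests can still pull in topics that similar peers lingered on.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class User:
    user_id: str
    age: int
    location: str
    interests: set[str]  # declared at sign-up
    # Hypothetical engagement log: topic -> total seconds watched
    watch_seconds: Counter = field(default_factory=Counter)


def cold_start_topics(new_user: User, population: list[User], k: int = 3, age_window: int = 1) -> list[str]:
    """Rank topics for a brand-new user from their own interests plus what similar peers watched."""
    # Start from the user's own declared interests with a small baseline score.
    scores: Counter = Counter({topic: 1.0 for topic in new_user.interests})
    for peer in population:
        # Only peers of a similar age in the same location count as "similar".
        if peer.location != new_user.location or abs(peer.age - new_user.age) > age_window:
            continue
        overlap = len(new_user.interests & peer.interests)
        if overlap == 0:
            continue
        # Peers who share more interests get more weight; longer viewing counts for more.
        for topic, seconds in peer.watch_seconds.items():
            scores[topic] += overlap * seconds
    return [topic for topic, _ in scores.most_common(k)]


if __name__ == "__main__":
    peers = [
        User("p1", 16, "UK", {"ufc"}, Counter({"fight_clips": 120, "ufc": 30})),
        User("p2", 17, "UK", {"make-up", "pop"}, Counter({"make-up": 500})),
    ]
    print(cold_start_topics(User("new_boy", 16, "UK", {"ufc", "gaming"}), peers, k=2))
    # ['fight_clips', 'ufc']
```

In this toy model, one peer who spent longer on fight clips is enough to put them at the top of a new user’s feed, even though the new user only said they liked UFC.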

He says the algorithms use “reinforcement learning” – a method where AI systems learn by trial and error – and train themselves to detect behaviour towards different videos.

Andrew Kaung says they are designed to maximise engagement by showing you videos they expect you to spend longer watching, comment on, or like – all to keep you coming back for more.
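Trial-and-error learning aimed at engagement can be illustrated with an epsilon-greedy multi-armed bandit, one of the simplest reinforcement-learning setups. This is an assumption-laden sketch, not TikTok’s algorithm, whose details are not public: the class name, the categories, the 10% exploration rate and the reward weights are all invented for illustration. Because the reward counts any engagement, a user who lingers on videos they dislike and comments to object (as Cai describes doing) teaches the sketch to show more of them.

```python
import random
from collections import defaultdict
from typing import Optional


class EngagementBandit:
    """Epsilon-greedy multi-armed bandit over content categories (hypothetical illustration).

    The reward is raw engagement: seconds watched plus a bonus for a like or a comment.
    Nothing in the reward says whether the engagement was positive or negative.
    """

    def __init__(self, categories: list[str], epsilon: float = 0.1, seed: Optional[int] = None):
        self.categories = list(categories)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts: dict[str, int] = defaultdict(int)
        self.values: dict[str, float] = defaultdict(float)  # running mean reward per category

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.categories)  # explore: try something at random
        return max(self.categories, key=lambda c: self.values[c])  # exploit: best so far

    def update(self, category: str, watch_seconds: float, liked: bool = False, commented: bool = False) -> None:
        # Hypothetical weights; an angry comment earns the same reward as a friendly one.
        reward = watch_seconds + 5.0 * liked + 5.0 * commented
        self.counts[category] += 1
        # Incremental mean: v <- v + (r - v) / n
        self.values[category] += (reward - self.values[category]) / self.counts[category]


if __name__ == "__main__":
    bandit = EngagementBandit(["dogs", "fight_clips"], epsilon=0.1, seed=0)
    for _ in range(500):
        shown = bandit.choose()
        if shown == "fight_clips":
            # The user dislikes these but lingers and leaves a critical comment.
            bandit.update(shown, watch_seconds=20, commented=True)
        else:
            bandit.update(shown, watch_seconds=8)
    print(dict(bandit.counts))
```

In the simulated run, the bandit should end up choosing `fight_clips` far more often than `dogs`: 20 seconds plus a comment outscores 8 seconds of watching, whatever the comment said.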

The algorithm recommending content to TikTok’s “For You Page”, he says, does not always differentiate between harmful and non-harmful content.

According to Andrew, one of the problems he identified when he worked at TikTok was that the teams involved in training and coding that algorithm did not always know the exact nature of the videos it was recommending.

“They see the number of viewers, the age, the trend, that sort of very abstract data. They wouldn’t necessarily be actually exposed to the content,” the former TikTok analyst tells me.

That was why, in 2022, he and a colleague decided to take a look at what kinds of videos were being recommended to a range of users, including some 16-year-olds.

He says they were concerned about violent and harmful content being served to some teenagers, and proposed to TikTok that it should update its moderation system.

They wanted TikTok to clearly label videos so everyone working there could see why they were harmful – extreme violence, abuse, pornography and so on – and to hire more moderators who specialised in these different areas. Andrew says their suggestions were rejected at that time.

TikTok says it had specialist moderators at the time and, as the platform has grown, it has continued to hire more. It also said it separated out different types of harmful content – into what it calls queues – for moderators.
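The labelling proposal, and the queue system TikTok says it already had, can be sketched as a router that files each labelled video in a queue handled by specialist moderators. The label names mirror the examples in the text (extreme violence, abuse, pornography); the class and method names are hypothetical, and this is not a description of TikTok’s internal tooling.

```python
from collections import deque
from enum import Enum
from typing import Deque, Dict, Optional


class HarmLabel(Enum):
    EXTREME_VIOLENCE = "extreme violence"
    ABUSE = "abuse"
    PORNOGRAPHY = "pornography"
    OTHER = "other"


class ModerationRouter:
    """Keeps one first-in, first-out queue per harm label (hypothetical sketch)."""

    def __init__(self) -> None:
        self.queues: Dict[HarmLabel, Deque[str]] = {label: deque() for label in HarmLabel}

    def route(self, video_id: str, label: HarmLabel) -> None:
        # Filing the video under its label records why it was flagged.
        self.queues[label].append(video_id)

    def next_for(self, specialism: HarmLabel) -> Optional[str]:
        """Hand the oldest waiting video to a moderator who specialises in this label."""
        queue = self.queues[specialism]
        return queue.popleft() if queue else None


if __name__ == "__main__":
    router = ModerationRouter()
    router.route("clip-17", HarmLabel.EXTREME_VIOLENCE)
    router.route("clip-42", HarmLabel.ABUSE)
    print(router.next_for(HarmLabel.EXTREME_VIOLENCE))  # clip-17
    print(router.next_for(HarmLabel.PORNOGRAPHY))       # None
```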

Panorama: Can We Live Without Our Phones?

What happens when smartphones are taken away from kids for a week? With the help of two families and lots of remote cameras, Panorama finds out. And with calls for smartphones to be banned for children, Marianna Spring speaks to parents, teenagers and social media company insiders to investigate whether the content pushed to their feeds is harming them.

Watch on Monday on BBC One at 20:00 BST (20:30 in Scotland) or on BBC iPlayer (UK only)

‘Asking a tiger not to eat you’

Andrew Kaung says that from the inside of TikTok and Meta it felt really difficult to make the changes he thought were necessary.

“We are asking a private company whose interest is to promote their products to moderate themselves, which is like asking a tiger not to eat you,” he says.

He also says he thinks children’s and teenagers’ lives would be better if they stopped using their smartphones.

But for Cai, banning phones or social media for teenagers is not the solution. His phone is integral to his life – a really important way of chatting to friends, navigating when he is out and about, and paying for stuff.

Instead, he wants the social media companies to listen more to what teenagers don’t want to see. He wants the firms to make the tools that let users indicate their preferences more effective.

“I feel like social media companies don’t respect your opinion, as long as it makes them money,” Cai tells me.

In the UK, a new law will force social media firms to verify children’s ages and stop the sites recommending porn or other harmful content to young people. UK media regulator Ofcom is in charge of enforcing it.

Almudena Lara, Ofcom’s online safety policy development director, says that while harmful content that predominantly affects young women, such as videos promoting eating disorders and self-harm, has rightly been in the spotlight, the algorithmic pathways driving hate and violence to mainly teenage boys and young men have received less attention.

“It tends to be a minority of [children] that get exposed to the most harmful content. But we know, however, that once you are exposed to that harmful content, it becomes unavoidable,” says Ms Lara.

Ofcom says it can fine companies and could bring criminal prosecutions if they do not do enough, but the measures will not come into force until 2025.

TikTok says it uses “innovative technology” and provides “industry-leading” safety and privacy settings for teens, including systems to block content that may not be suitable, and that it does not allow extreme violence or misogyny.

Meta, which owns Instagram and Facebook, says it has more than “50 different tools, resources and features” to give teens “positive and age-appropriate experiences”. According to Meta, it seeks feedback from its own teams and potential policy changes go through a robust process.

Science & Environment

“Dark oxygen” created in the ocean without photosynthesis, researchers say

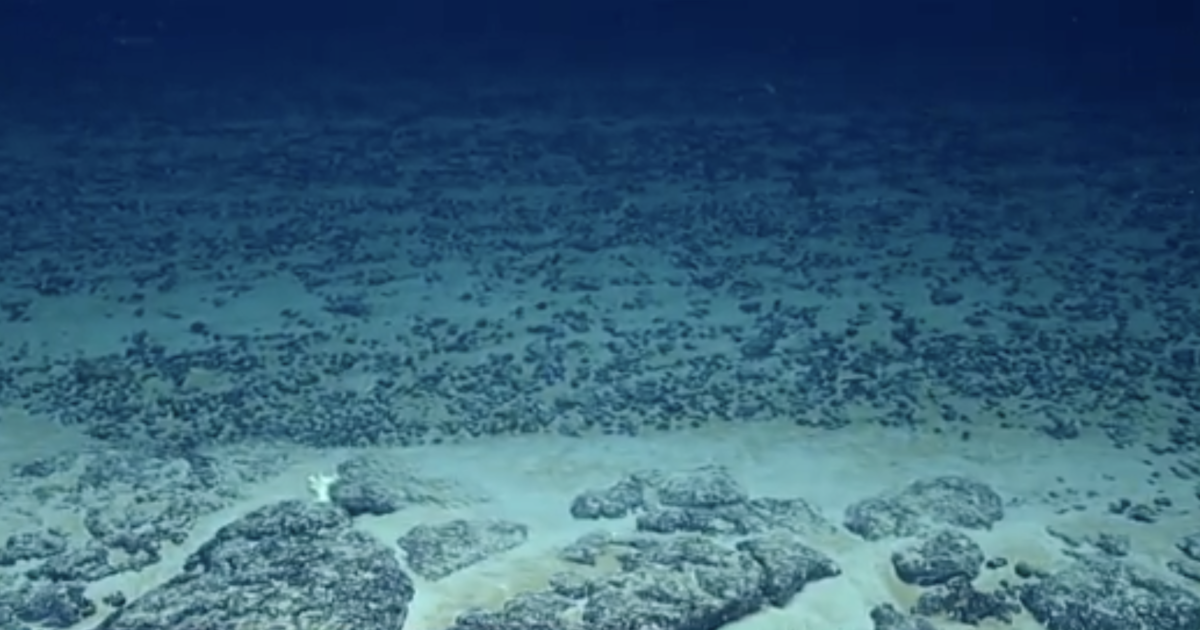

Researchers have discovered bundles of “dark oxygen” being formed on the ocean floor.

In a new study, over a dozen scientists from across Europe and the United States studied “polymetallic nodules,” or chunks of metal, that cover large swaths of the sea floor. Those nodules and other items found on the ocean floor in the deep sea between Hawaii and Mexico were subjected to a range of experiments, including injection with other chemicals or cold seawater.

The experiments showed that more oxygen — which is necessary for all life on Earth — was being created by the nodules than was being consumed. Scientists dubbed this output “dark oxygen.”

About half of the world’s oxygen comes from the ocean, but scientists previously believed it was entirely made by marine plants using sunlight for photosynthesis. Plants on land use the same process, where they absorb carbon dioxide and produce oxygen. But scientists for this study examined nodules about three miles underwater, where no sunlight can reach.

This isn’t the first time attention has been drawn to the nodules. The chunks of metal are made of minerals like cobalt, nickel, manganese and copper that are necessary to make batteries. Those materials may be what causes the production of dark oxygen.

“If you put a battery into seawater, it starts fizzing,” lead researcher Andrew Sweetman, a professor from the Scottish Association for Marine Science, told CBS News partner BBC News. “That’s because the electric current is actually splitting seawater into oxygen and hydrogen [which are the bubbles]. We think that’s happening with these nodules in their natural state.”
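The battery analogy refers to the electrolysis of water. For reference, the textbook half-reactions and the overall reaction are shown below, written in acidic form for simplicity (in alkaline water, such as seawater, the half-reactions are written with OH⁻ instead, but the overall reaction is the same). Whether the nodules drive exactly this chemistry on the sea floor is the researchers’ hypothesis; the equations only show what “splitting seawater into oxygen and hydrogen” means. At standard conditions, it takes an applied voltage of at least about 1.23 V.

```latex
\begin{aligned}
\text{Anode (oxidation):}\quad & 2\,\mathrm{H_2O} \rightarrow \mathrm{O_2} + 4\,\mathrm{H^+} + 4e^- \\
\text{Cathode (reduction):}\quad & 4\,\mathrm{H^+} + 4e^- \rightarrow 2\,\mathrm{H_2} \\
\text{Overall:}\quad & 2\,\mathrm{H_2O} \rightarrow 2\,\mathrm{H_2} + \mathrm{O_2}, \qquad E_{\text{applied}} \gtrsim 1.23\ \mathrm{V}
\end{aligned}
```

Adding the two half-reactions cancels the four protons and four electrons, leaving two molecules of hydrogen for every molecule of oxygen.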

The metals in the nodules are valued in the trillions of dollars, setting off a race to pull the nodules up from the ocean’s depths in a process known as deep-sea or seabed mining. Environmental activists have decried the practice.

Sweetman and other marine scientists worry that deep-sea mining could disrupt the production of dark oxygen and pose a threat to marine life that may depend on it.

“I don’t see this study as something that will put an end to mining,” Sweetman told the BBC. “[But] we need to explore it in greater detail and we need to use this information and the data we gather in future if we are going to go into the deep ocean and mine it in the most environmentally friendly way possible.”

Technology

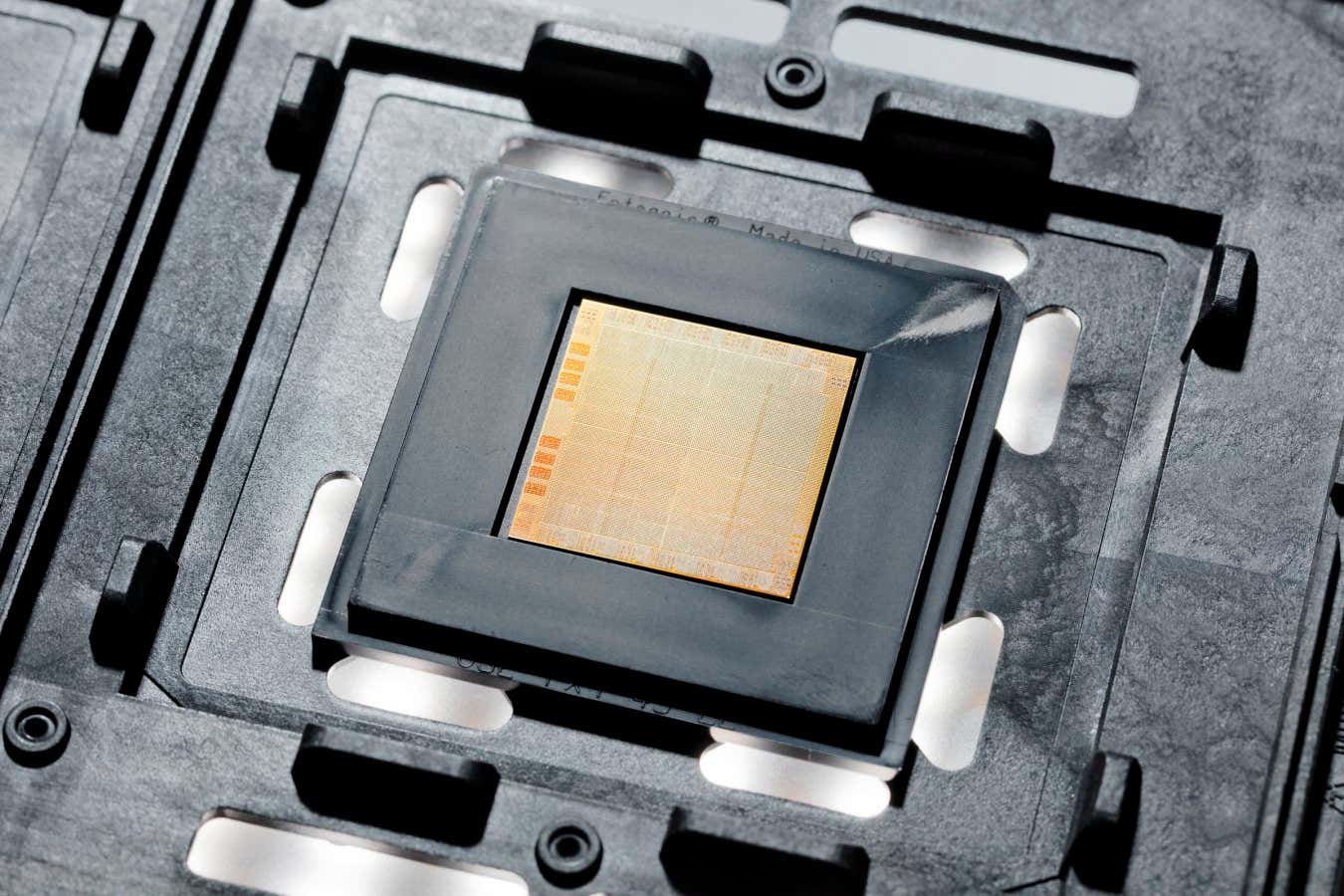

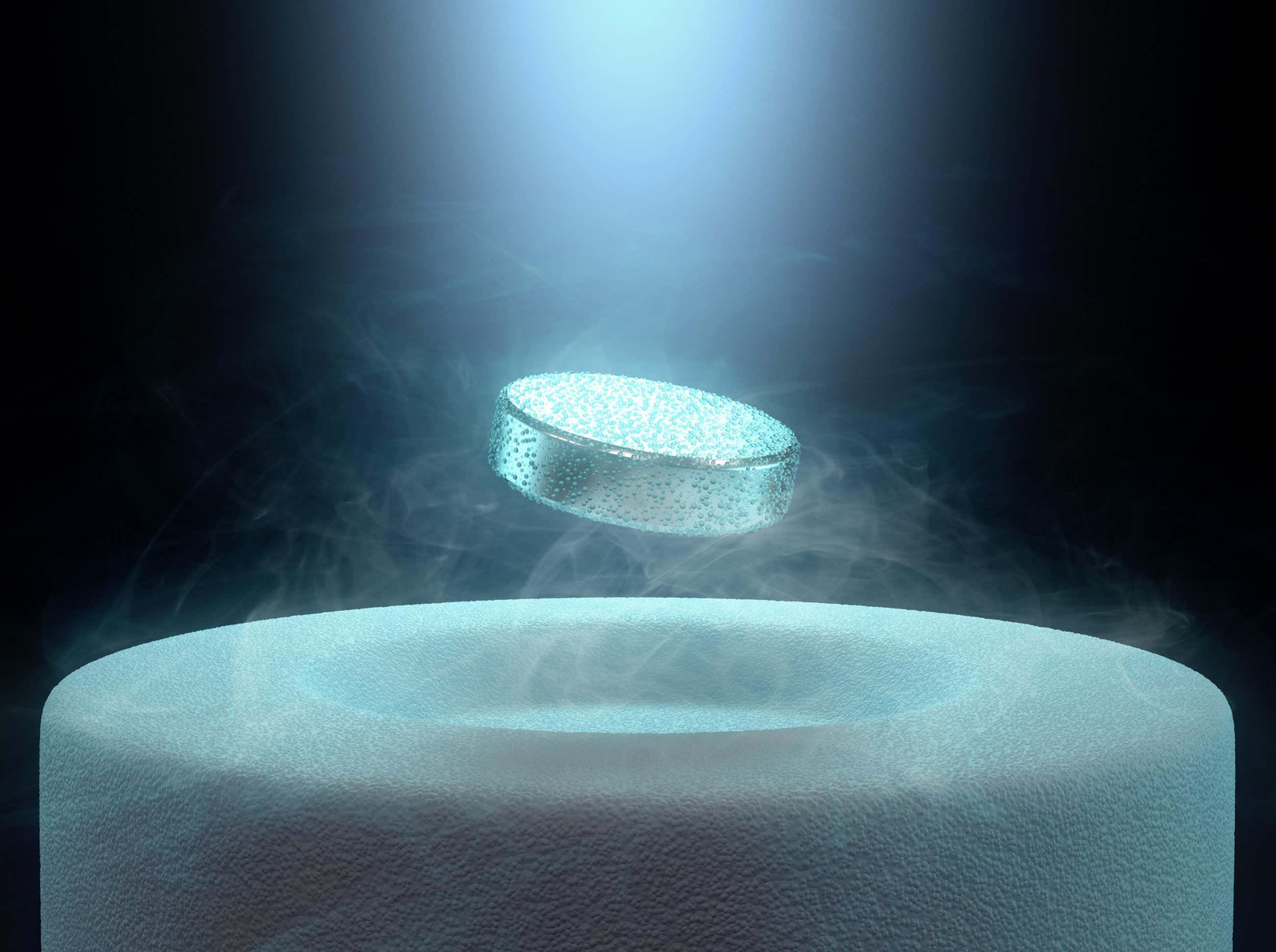

Quantum computers teleport and store energy harvested from empty space

A quantum computing chip (image: IBM)

Energy cannot be created from nothing, but physicists found a way to do the next best thing: extract energy from seemingly empty space, teleport it elsewhere and store it for later use. The researchers successfully tested their protocol using a quantum computer.

The laws of quantum physics reveal that perfectly empty space cannot exist – even places fully devoid of atoms still contain tiny flickers of quantum fields. In 2008, Masahiro Hotta at Tohoku University in Japan proposed that those flickers, together with the …

Science & Environment

What caused the hydrothermal explosion at Yellowstone National Park? A meteorologist explains

Yellowstone National Park visitors were sent running and screaming Tuesday when a hydrothermal explosion spewed boiling hot water and rocks into the air. No one was injured, but it has left some wondering: How does this happen and why wasn’t there any warning?

The Weather Channel’s Stephanie Abrams said explosions like this are caused by underground channels of hot water, which also create Yellowstone’s iconic geysers and hot springs.

“When the pressure rapidly drops in a localized spot, it actually forces the hot water to quickly turn to steam, triggering a hydrothermal explosion since gas takes up more space than liquid,” Abrams said Wednesday on “CBS Mornings.” “And this explosion can rupture the surface, sending mud and debris thousands of feet up and more than half a mile out in the most extreme cases.”
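The point that gas takes up more space than liquid can be put into numbers with a back-of-the-envelope estimate. The sketch treats steam as an ideal gas at 100 °C and sea-level pressure and uses a liquid-water density of about 958 kg/m³ at that temperature; both are simplifying assumptions (Yellowstone sits well above sea level, and real steam tables give a slightly smaller figure of roughly 1.67 m³ per kilogram). Even so, the order of magnitude is clear: water flashing to steam expands well over a thousandfold.

```python
R = 8.314                # molar gas constant, J/(mol*K)
M_WATER = 0.018015       # molar mass of water, kg/mol
RHO_LIQUID_100C = 958.4  # density of liquid water near 100 C, kg/m^3


def ideal_gas_volume_m3(mass_kg: float, temp_k: float, pressure_pa: float) -> float:
    """Volume of an ideal gas from PV = nRT, with n = mass / molar mass."""
    moles = mass_kg / M_WATER
    return moles * R * temp_k / pressure_pa


def steam_expansion_ratio(temp_k: float = 373.15, pressure_pa: float = 101_325.0) -> float:
    """How many times larger 1 kg of steam is than 1 kg of liquid water (ideal-gas estimate)."""
    liquid_m3 = 1.0 / RHO_LIQUID_100C
    steam_m3 = ideal_gas_volume_m3(1.0, temp_k, pressure_pa)
    return steam_m3 / liquid_m3


if __name__ == "__main__":
    print(f"{steam_expansion_ratio():.0f}x")  # 1629x
```

In this model the steam volume is inversely proportional to pressure, so the same mass of water occupies more space the lower the pressure at which it flashes.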

Tuesday’s explosion was not that big, Abrams said, “but a massive amount of rocks and dirt buried the Biscuit Basin,” where the explosion occurred.

A nearby boardwalk was left with a broken fence and was covered in debris. Nearby trees were also killed, with the U.S. Geological Survey saying the plants “can’t stand thermal activity.”

“Because areas heat up and cool down over time, trees will sometimes die out when an area heats up, regrow as it cools down, but then die again when it heats up,” the agency said on X.

The USGS said it considers this explosion small, and that similar explosions happen in the national park “perhaps a couple times a year.” Often, though, they happen in the backcountry and aren’t noticed.

“It was small compared to what Yellowstone is capable of,” USGS Volcanoes said on X. “That’s not to say it was not dramatic or very hazardous — obviously it was. But the big ones leave craters hundreds of feet across.”

The agency also said that hydrothermal explosions, “being episodes of water suddenly flashing to steam, are notoriously hard to predict” and “may not give warning signs at all.” It likened the eruptions to a pressure cooker.

While Yellowstone sits on a dormant volcano, officials said the explosion was not related to volcanic activity.

“This was an isolated incident in the shallow hot-water system beneath Biscuit Basin,” the USGS said. “It was not triggered by any volcanic activity.”

Technology

What happened to the Metaverse?

Season 6, Episode 135

Host Andrew Davidson is joined by technology experts Brian Benway and Jan Urbanek in a discussion about the Metaverse. Our experts shed light on the latest technological and hardware advancements and marketing strategies from Big Tech. What will it take for the Metaverse to gain mainstream popularity? Listen now to find out!

Head over to Mintel’s LinkedIn to let us know what you think of today’s episode, and visit mintel.com to become a member of our free Spotlight community.

Visit the Mintel Store to explore all our technology research and buy a report today.

Meet the Host

Andrew Davidson

SVP/Chief Insights Officer, Mintel Comperemedia.

Meet the Guests

Brian Benway

Senior Analyst, Gaming and Entertainment, Mintel Reports US.

Jan Urbanek

Senior Analyst, Consumer Technology, Mintel Reports Germany.

Podcast, published 15 March 2024

Science & Environment

Archaeologists make stunning underwater discovery of ancient mosaic in sea off Italy

Researchers studying an underwater city in Italy say they have found an ancient mosaic floor that was once the base of a Roman villa, a discovery that the local mayor called “stupendous.”

The discovery was made in Baia Sommersa, a marine-protected area and UNESCO World Heritage Site off the northern coast of the Gulf of Naples. The area was once the Roman city of Baia, but it has become submerged over the centuries because of volcanic activity in the area. The underwater structures remain somewhat intact, allowing researchers to make discoveries like the mosaic floor.

The Campi Flegrei Archaeological Park announced the discovery on social media. It includes “thousands of marble slabs” in “hundreds of different shapes.”

“This marble floor has been at the center of the largest underwater restoration work,” the park said, calling the research “a new challenge” made “very complicated due to the extreme fragmentation of the remains and their large expansion.”

The marble floor is made of recovered, second-hand marble that had previously been used to decorate other floors or walls, the park said. Each piece of marble was sharpened into a square and inscribed with circles. The floor is likely from the third century A.D., the park said in another post, citing the style of the room and the repurposing of the materials as practices that were common during that time.

Researchers are working carefully to extract the marble pieces from the site, the park said. The recovery work will require careful digging around collapsed walls and other fragmented slabs, but researchers hope to “be able to save some of the geometries.”

Once recovered, the slabs are being brought to land and cleaned in freshwater tanks. The marble pieces are then being studied “slab by slab” to try to recreate the former mosaic, the park said.

“The work is still long and complex, but we are sure that it will offer many prompts and great satisfactions,” the park said.

Technology

SpaceX fires up Starship engines ahead of fifth test flight

SpaceX has just performed a static fire of the six engines on its Starship spacecraft as it awaits permission from the Federal Aviation Administration (FAA) for the fifth test flight of the world’s most powerful rocket.

The Elon Musk-led spaceflight company shared footage and an image of the test fire on X (formerly Twitter) on Thursday. It shows the engines firing up while the vehicle remained on the ground.

Six engine static fire of Flight 6 Starship pic.twitter.com/fzJz9BWBn6

— SpaceX (@SpaceX) September 19, 2024

For flights, the Starship spacecraft is carried to orbit by the first-stage Super Heavy booster, which pumps out 17 million pounds of thrust at launch, making it the most powerful rocket ever built.

The Super Heavy booster and Starship spacecraft, collectively known as Starship, have launched four times to date, with each test flight showing improvements over the previous one.

The first one, for example, exploded shortly after liftoff from SpaceX’s Starbase facility in Boca Chica, Texas, in April last year, while the second, which took place seven months later, achieved stage separation before an explosion occurred, an incident captured in dramatic footage. The third and fourth flights lasted much longer and achieved many of the mission objectives, including getting the Starship spacecraft to space.

The fifth test flight isn’t likely to take place until November at the earliest, according to a recent report. It will involve the first attempt to use giant mechanical arms to “catch” the Super Heavy booster as it returns to the launch area. SpaceX recently expressed extreme disappointment at the length of time the FAA is taking to complete an investigation that will pave the way for the fifth Starship test, and has said it will be ready to launch the vehicle within days of getting permission from the FAA.

Once testing is complete, NASA wants to use Starship, along with its own Space Launch System rocket, to launch crew and cargo to the moon and possibly to destinations much further into space, such as Mars. NASA is already planning to use a modified version of the Starship spacecraft to land astronauts on the lunar surface for the first time in five decades in the Artemis III mission, currently set for 2026.

-

Sport11 hours ago

Sport11 hours agoJoshua vs Dubois: Chris Eubank Jr says ‘AJ’ could beat Tyson Fury and any other heavyweight in the world

-

News1 day ago

News1 day agoYou’re a Hypocrite, And So Am I

-

News12 hours ago

News12 hours agoIsrael strikes Lebanese targets as Hizbollah chief warns of ‘red lines’ crossed

-

Sport10 hours ago

Sport10 hours agoUFC Edmonton fight card revealed, including Brandon Moreno vs. Amir Albazi headliner

-

Technology9 hours ago

Technology9 hours agoiPhone 15 Pro Max Camera Review: Depth and Reach

-

Science & Environment13 hours ago

Science & Environment13 hours agoHow one theory ties together everything we know about the universe

-

Science & Environment21 hours ago

Science & Environment21 hours agoSunlight-trapping device can generate temperatures over 1000°C

-

News8 hours ago

News8 hours agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

Science & Environment1 day ago

Science & Environment1 day agoQuantum time travel: The experiment to ‘send a particle into the past’

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours ago2 auditors miss $27M Penpie flaw, Pythia’s ‘claim rewards’ bug: Crypto-Sec

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoBitcoin miners steamrolled after electricity thefts, exchange ‘closure’ scam: Asia Express

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoCardano founder to meet Argentina president Javier Milei

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoDorsey’s ‘marketplace of algorithms’ could fix social media… so why hasn’t it?

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoLow users, sex predators kill Korean metaverses, 3AC sues Terra: Asia Express

-

Business9 hours ago

How Labour donor’s largesse tarnished government’s squeaky clean image

-

Science & Environment13 hours ago

Science & Environment13 hours ago‘Running of the bulls’ festival crowds move like charged particles

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoEthereum is a 'contrarian bet' into 2025, says Bitwise exec

-

Science & Environment13 hours ago

Science & Environment13 hours agoRethinking space and time could let us do away with dark matter

-

Science & Environment10 hours ago

Science & Environment10 hours agoWe may have spotted a parallel universe going backwards in time

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoArthur Hayes’ ‘sub $50K’ Bitcoin call, Mt. Gox CEO’s new exchange, and more: Hodler’s Digest, Sept. 1 – 7

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoTreason in Taiwan paid in Tether, East’s crypto exchange resurgence: Asia Express

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoLeaked Chainalysis video suggests Monero transactions may be traceable

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoJourneys: Robby Yung on Animoca’s Web3 investments, TON and the Mocaverse

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoLouisiana takes first crypto payment over Bitcoin Lightning

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoAre there ‘too many’ blockchains for gaming? Sui’s randomness feature: Web3 Gamer

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoCrypto whales like Humpy are gaming DAO votes — but there are solutions

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoHelp! My parents are addicted to Pi Network crypto tapper

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours ago$12.1M fraud suspect with ‘new face’ arrested, crypto scam boiler rooms busted: Asia Express

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours ago‘Everything feels like it’s going to shit’: Peter McCormack reveals new podcast

-

Science & Environment13 hours ago

Science & Environment13 hours agoFuture of fusion: How the UK’s JET reactor paved the way for ITER

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoSEC sues ‘fake’ crypto exchanges in first action on pig butchering scams

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoFed rate cut may be politically motivated, will increase inflation: Arthur Hayes

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoDecentraland X account hacked, phishing scam targets MANA airdrop

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoCZ and Binance face new lawsuit, RFK Jr suspends campaign, and more: Hodler’s Digest Aug. 18 – 24

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoCertiK Ventures discloses $45M investment plan to boost Web3

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoMemecoins not the ‘right move’ for celebs, but DApps might be — Skale Labs CMO

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoTelegram bot Banana Gun’s users drained of over $1.9M

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoDZ Bank partners with Boerse Stuttgart for crypto trading

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoRedStone integrates first oracle price feeds on TON blockchain

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoBitcoin bulls target $64K BTC price hurdle as US stocks eye new record

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoSEC asks court for four months to produce documents for Coinbase

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours ago‘No matter how bad it gets, there’s a lot going on with NFTs’: 24 Hours of Art, NFT Creator

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoBlockdaemon mulls 2026 IPO: Report

-

Business10 hours ago

Thames Water seeks extension on debt terms to avoid renationalisation

-

Politics10 hours ago

Politics10 hours agoI’m in control, says Keir Starmer after Sue Gray pay leaks

-

Politics9 hours ago

‘Appalling’ rows over Sue Gray must stop, senior ministers say | Sue Gray

-

Business8 hours ago

Axel Springer top team close to making eight times their money in KKR deal

-

News8 hours ago

News8 hours ago“Beast Games” contestants sue MrBeast’s production company over “chronic mistreatment”

-

News8 hours ago

News8 hours agoSean “Diddy” Combs denied bail again in federal sex trafficking case in New York

-

News8 hours ago

News8 hours agoBrian Tyree Henry on his love for playing villains ahead of “Transformers One” release

-

News8 hours ago

News8 hours agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoCoinbase’s cbBTC surges to third-largest wrapped BTC token in just one week

-

Technology3 days ago

Technology3 days agoYouTube restricts teenager access to fitness videos

-

News12 hours ago

News12 hours agoChurch same-sex split affecting bishop appointments

-

Politics2 days ago

Politics2 days agoTrump says he will meet with Indian Prime Minister Narendra Modi next week

-

Politics1 day ago

Politics1 day agoWhat is the House of Lords, how does it work and how is it changing?

-

Politics1 day ago

Politics1 day agoKeir Starmer facing flashpoints with the trade unions

-

Health & fitness2 days ago

Health & fitness2 days agoWhy you should take a cheat day from your diet, and how many calories to eat

-

Technology12 hours ago

Technology12 hours agoFivetran targets data security by adding Hybrid Deployment

-

Science & Environment1 day ago

Science & Environment1 day agoElon Musk’s SpaceX contracted to destroy retired space station

-

News11 hours ago

Freed Between the Lines: Banned Books Week

-

MMA10 hours ago

MMA10 hours agoUFC’s Cory Sandhagen says Deiveson Figueiredo turned down fight offer

-

MMA10 hours ago

MMA10 hours agoDiego Lopes declines Movsar Evloev’s request to step in at UFC 307

-

Football10 hours ago

Football10 hours agoNiamh Charles: Chelsea defender has successful shoulder surgery

-

Football10 hours ago

Football10 hours agoSlot's midfield tweak key to Liverpool victory in Milan

-

Science & Environment14 hours ago

Science & Environment14 hours agoHyperelastic gel is one of the stretchiest materials known to science

-

Science & Environment13 hours ago

Science & Environment13 hours agoHow to wrap your head around the most mind-bending theories of reality

-

Technology2 days ago

Technology2 days agoCan technology fix the ‘broken’ concert ticketing system?

-

Fashion Models9 hours ago

Fashion Models9 hours agoMiranda Kerr nude

-

Fashion Models9 hours ago

Fashion Models9 hours ago“Playmate of the Year” magazine covers of Playboy from 1971–1980

-

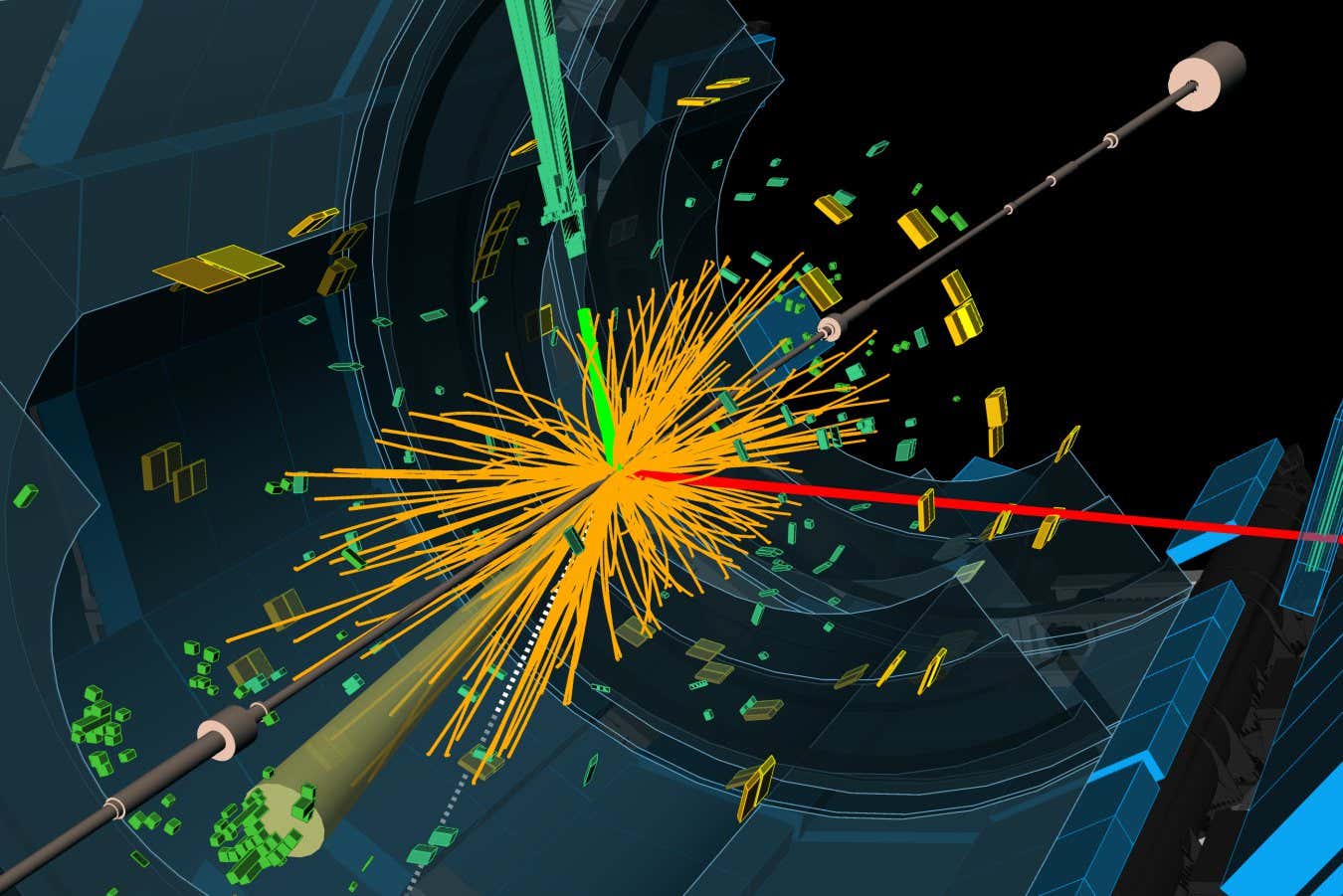

Science & Environment18 hours ago

Science & Environment18 hours agoA new kind of experiment at the Large Hadron Collider could unravel quantum reality

-

Health & fitness2 days ago

Health & fitness2 days ago11 reasons why you should stop your fizzy drink habit in 2022

-

Politics9 hours ago

Politics9 hours agoLabour MP urges UK government to nationalise Grangemouth refinery

-

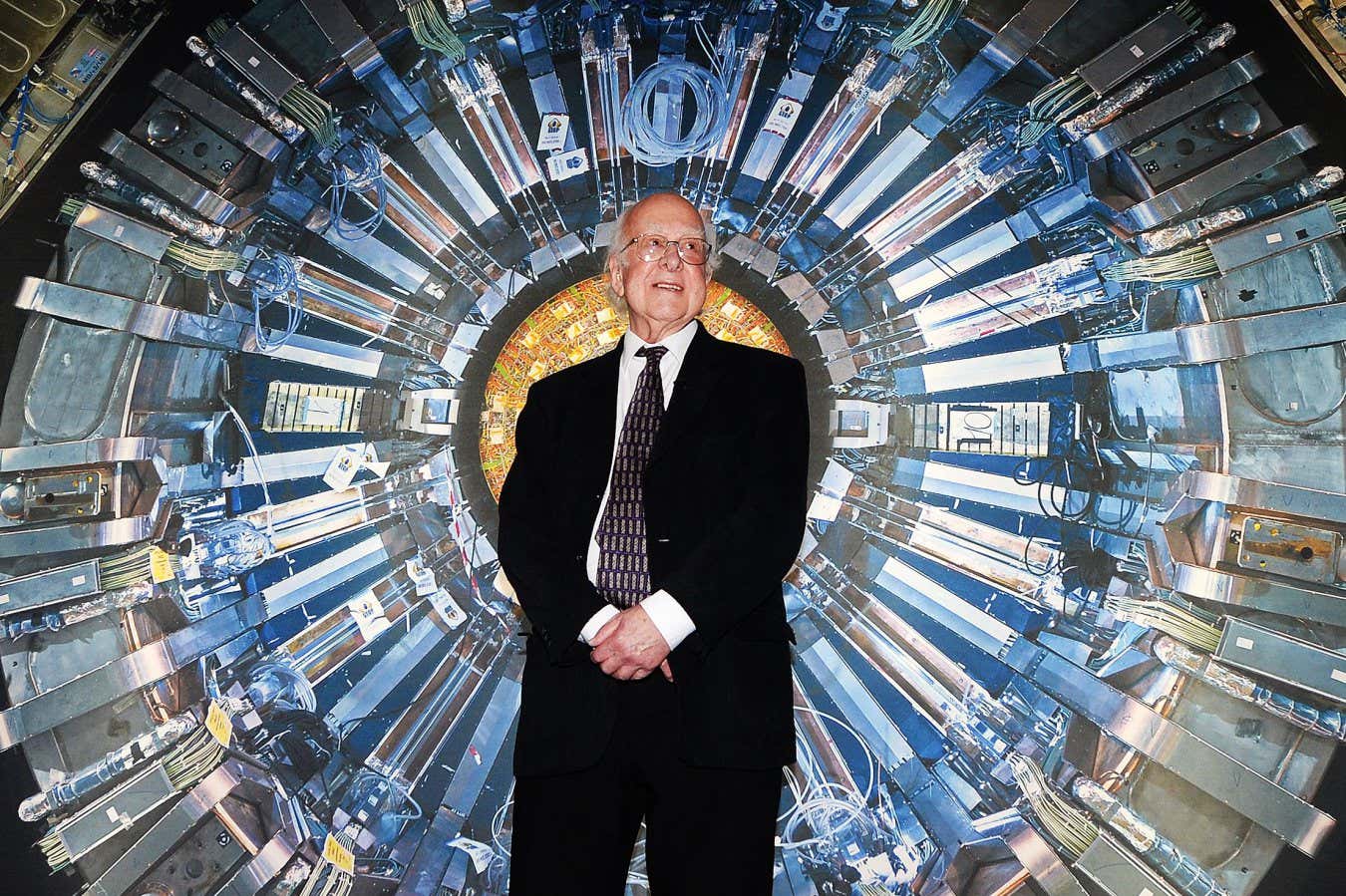

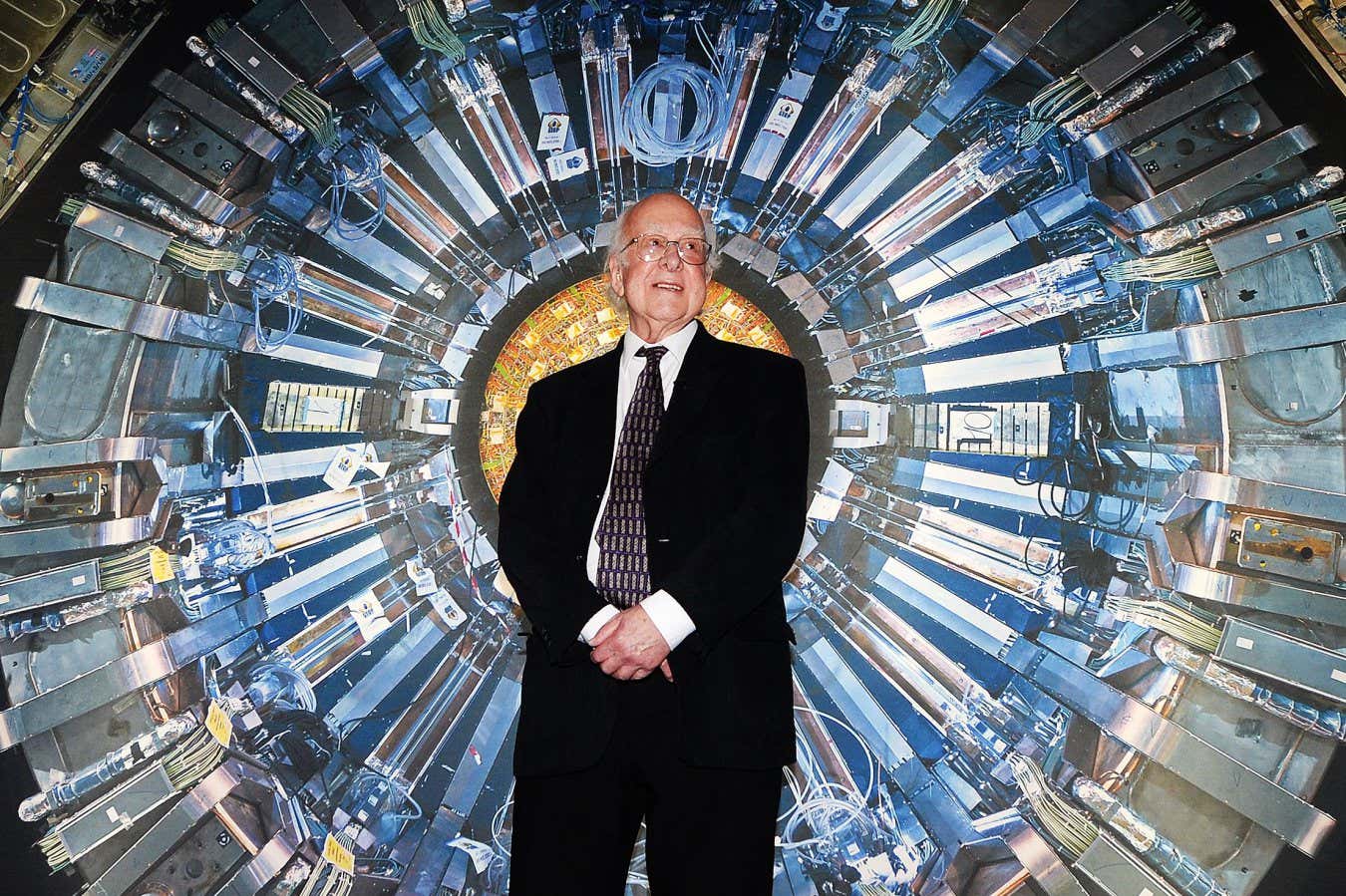

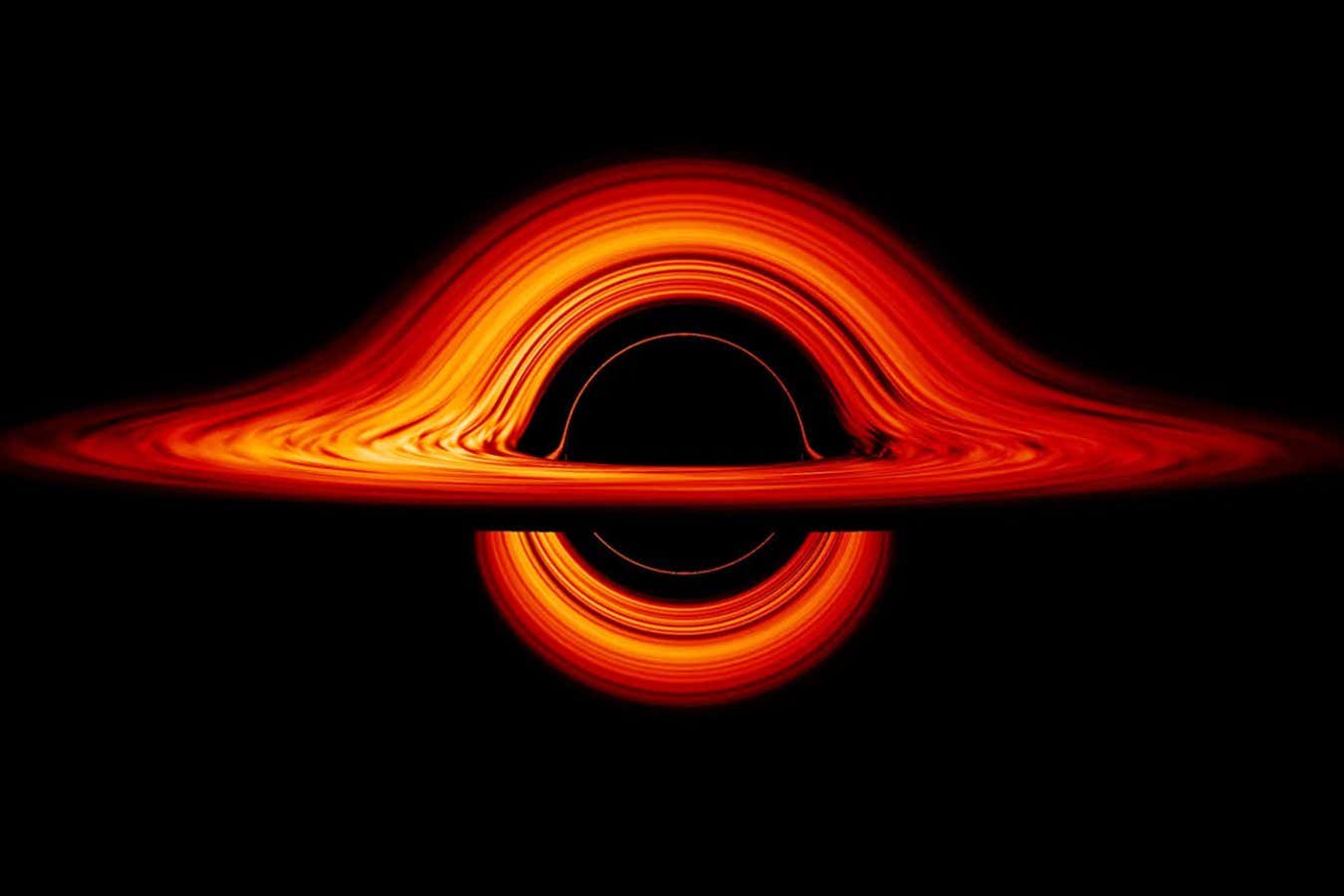

Science & Environment17 hours ago

Science & Environment17 hours agoHow Peter Higgs revealed the forces that hold the universe together

-

Technology2 days ago

Technology2 days agoWhat will future aerial dogfights look like?

-

Science & Environment12 hours ago

Science & Environment12 hours agoOdd quantum property may let us chill things closer to absolute zero

-

Science & Environment19 hours ago

Science & Environment19 hours agoQuantum forces used to automatically assemble tiny device

-

Entertainment8 hours ago

Entertainment8 hours ago“Jimmy Carter 100” concert celebrates former president’s 100th birthday

-

CryptoCurrency10 hours ago

CryptoCurrency10 hours agoSEC settles with Rari Capital over DeFi pools, unregistered broker activity

-

News8 hours ago

News8 hours agoJoe Posnanski revisits iconic football moments in new book, “Why We Love Football”

-

Health & fitness2 days ago

Health & fitness2 days agoHow to adopt mindful drinking in 2022

-

Health & fitness2 days ago

Health & fitness2 days agoWhat 10 days of a clean eating plan actually does to your body and why to adopt this diet in 2022

-

Health & fitness2 days ago

Health & fitness2 days agoWhen Britons need GoFundMe to pay for surgery, it’s clear the NHS backlog is a political time bomb

-

Health & fitness2 days ago

Health & fitness2 days agoThe maps that could hold the secret to curing cancer

-

Health & fitness2 days ago

Covid v flu v cold and how to tell the difference between symptoms this winter

-

Science & Environment24 hours ago

Science & Environment24 hours agoQuantum to cosmos: Why scale is vital to our understanding of reality

-

Business2 days ago

Business2 days agoBillionaire investor Ray Dalio warns of threat to democracy

-

Science & Environment1 day ago

Science & Environment1 day agoHow to wrap your mind around the real multiverse

-

Technology3 days ago

Technology3 days agoTrump says Musk could head ‘government efficiency’ force

-

Technology2 days ago

Technology2 days agoTech Life: Athletes using technology to improve performance

-

Science & Environment2 days ago

Science & Environment2 days agoParticle physicists may have solved a strange mystery about the muon

-

Science & Environment1 day ago

Science & Environment1 day agoTime may be an illusion created by quantum entanglement

-

Science & Environment1 day ago

Science & Environment1 day agoHow the weird and powerful pull of black holes made me a physicist

-

Politics23 hours ago

Politics23 hours agoIs there a £22bn ‘black hole’ in the UK’s public finances?

-

Science & Environment23 hours ago

Science & Environment23 hours agoX-ray laser fires most powerful pulse ever recorded

-

Science & Environment22 hours ago

Science & Environment22 hours agoWhat are fractals and how can they help us understand the world?

-

Science & Environment22 hours ago

Science & Environment22 hours agoHow indefinite causality could lead us to a theory of quantum gravity

-

Science & Environment21 hours ago

Science & Environment21 hours agoDoughnut-shaped swirls of laser light can be used to transmit images

-

Science & Environment21 hours ago

Science & Environment21 hours agoBeing in two places at once could make a quantum battery charge faster

-

Science & Environment20 hours ago

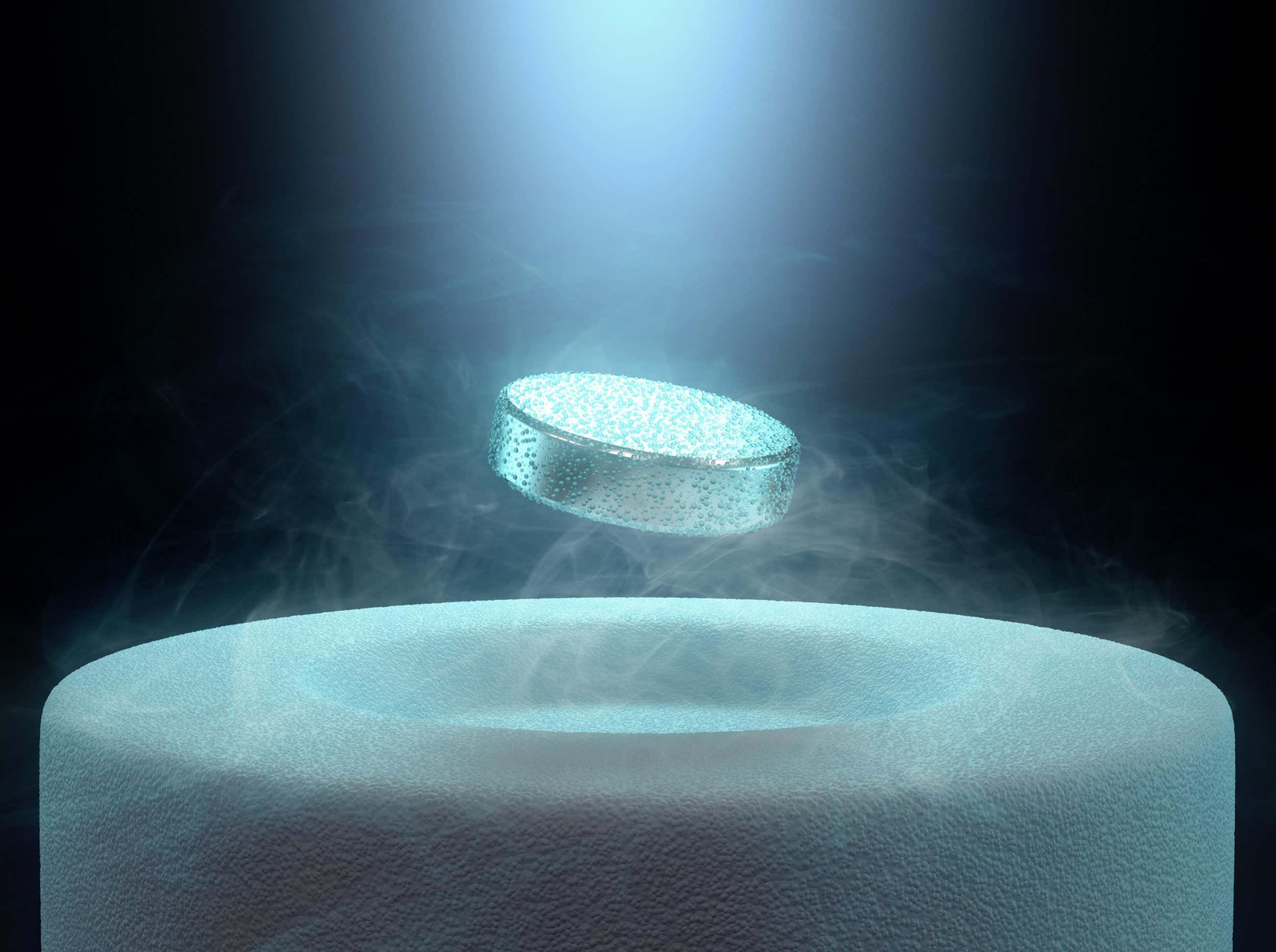

Science & Environment20 hours agoWhy we are finally within reach of a room-temperature superconductor

You must be logged in to post a comment Login