2024 has been a stressful year. Other people might pick more relaxing ways to de-stress — a spa day, a nice cocktail, or maybe a social media detox. Not me, baby. I’ve decided to cope the same way I always do: ramping up training instead of stress eating my weight in mini-muffins. Except it’s unreasonable to abandon my desk and run a 5K every time the news cycle spikes my anxiety. Which is why, for the last three months, I’ve taken to logging miles on a desk treadmill. Specifically, the $240 Mobvoi Home Walking Treadmill.

Technology

Mobvoi Home Walking Treadmill review: the smart features stressed me out

As an overly self-quantified wearables reviewer, I picked Mobvoi’s walking treadmill for one reason and one reason only: it pairs with a smartwatch so all your steps are properly counted.

My beef with treadmills — especially ones you stick under a standing desk — is that you can walk 500 miles on them but your smartwatch will record maybe 100 steps. Your legs could be working overtime, but smartwatches rely on arm swings to count steps. I know because anytime I write and walk at the same time, my Apple Watch says I’ve done diddly squat. And that’s even when I record an indoor walking session. No one needs to record every little step, but it helps me keep track of my workout volume and intensity.

Many folks get around that by strapping a smartwatch to their ankle. I refuse. Not only because I’ve tried it and found it uncomfortable but also because fitness tracking algorithms and sensors are all programmed and tested for your arm. Treadmill walk data becomes useless to me, a wearables reviewer, if I can’t trust that data to be accurate.

That’s where Mobvoi’s treadmill comes in. You can download the Mobvoi Treadmill app from the Google Play Store onto any Android smartwatch. (I used it with Mobvoi’s TicWatch Atlas and Samsung’s Galaxy Watches.) It connects to the walking pad when you turn it on. And voila. Your metrics are right there on your wrist, even if your arms are limp as you’re typing an email. It’s pretty accurate, too! There aren’t any extra sensors; once connected, the app simply lets the walking pad share its data with your watch. That meant my Android watches correctly registered my subtler movements as steps. My Oura Ring and Apple Watch didn’t.

Problem solved! Or, it would’ve been if I were a devoted Mobvoi user. But alas, even this simple walking pad can’t escape gadget ecosystems.

For whatever reason, Apple Watch users are out of luck, as the Mobvoi Treadmill app isn’t available in Apple’s App Store. To view your live stats via the wrist, you must have an Android smartwatch. I don’t love that, and it’s a little baffling considering all this does is connect the walking pad and your watch over Bluetooth. Fortunately, I spend a good chunk of the year testing Wear OS watches and don’t care about having two phones and wearing two smartwatches at all times. But that’s not most people, and for regular iPhone users, this is a nonstarter. I asked Mobvoi if an iOS version would ever arrive but didn’t hear back.

Somehow, getting your phone to actually save that data is even more of a headache. If you have a Mobvoi watch, there’s no problem. Workouts recorded in the Mobvoi Treadmill app automatically pop up in the separate Mobvoi Health app on your phone. But the Mobvoi Health app only works with Mobvoi watches. If you use any other kind of Android smartwatch, you can’t actually log the treadmill data into whatever health app you keep on your phone. The data is just stuck on your wrist.

Things like this are why people pick one ecosystem and stick with it. During testing, I fixated on how to get all my walking pad data onto my iPhone — the device where most of my health data is stored. Thinking through all the ways to get my data off Android and into Apple’s Health and Fitness apps was so exhausting, it made me not want to use the walking pad at all. There were weeks when I let it collect dust in my office because I didn’t want to use it if I wasn’t getting credit for it. And getting that credit was too much work.

At this point, I had to take a good hard look in the mirror. The whole point was to use this device to relieve stress. Instead, all I’d done was overcomplicate a walking pad. I ended up anxious and dreading my imperfect, messy metrics. I was so concerned about doing something “the right way” that I ended up not doing it at all. Looking back, I’ll be the first person to tell you that’s absurd. And yet, I’ve also been part of enough running and fitness communities to know that this is a common trap that even the best of us fall into.

My experience improved once I chucked the smartwatches into a drawer. I accepted my step counts wouldn’t be accurate and that my training algorithms across a dozen wearable platforms would be slightly off. I actually stopped recording my walks on every single platform altogether. As a result, my mental health improved, and I take far more walks now. My life isn’t any less stressful — I just have more endorphins, but that’s enough to make me more resilient.

Once I stopped caring about the data, I was free to figure out how to use the walking pad meaningfully. A lifelong overachiever, I started out trying to walk and work at 2.5mph to make it “worth it.” Imagine my surprised Pikachu face learning it’s quite difficult to walk at a brisk pace and write emails or even read because you’re bobbing up and down. And sweaty. Eventually, I accepted that my desk walks don’t have to be fast and found a turtle-like speed that works. (I’ve written most of this review at a 0.6mph pace.)

On mornings when the caffeine just isn’t hitting, a 20-minute walk usually jogs my shriveled brain cells while I catch up on the news. When something just isn’t working in a draft, walking while reading my sentences helps enormously. I’ve also noticed how my body becomes so stiff when I’m frustrated, anxious, angry, or full of dread. Hopping on a walking pad for a ploddingly slow 10 minutes is always enough to loosen me up — even if my step count isn’t impressive.

I highly recommend a walking pad if you, too, often experience existential dread and anxiety. Just maybe not this exact one. It’s funny. I picked Mobvoi’s treadmill precisely because it had the bells and whistles. But at the end of the day, all the extra connectivity, the data, and the “smarts” got in my way. Sometimes, the best thing you can do is remember why you’re doing something, zero in on it, and cut out the extra noise.


Google’s new AI model is here to help you learn

Google’s Gemini is useful as an educational tool to help you study for that exam. However, Gemini is sort of the “everything chatbot,” useful for just about anything. Now, Google has something new for people looking for a more robust educational tool. Google calls it Learn About, and it could give other tools a run for their money.

Say what you want about Google’s AI, but the company has been hard at work making AI tools centered on teaching rather than cheating. For example, it has tools in Android Studio that guide programmers and help them learn to code. Then there’s NotebookLM, which takes your uploaded educational content and helps you digest it. Its Audio Overviews feature even turns your uploaded material into a podcast-style educational discussion.

So, Google has a strong focus on education with its AI tools. Let’s just hope that other companies will follow suit.

Google’s new AI tool is called Learn About

This tool is pretty self-explanatory, as it focuses on giving you more textbook-style explanations for your questions. Rather than simply giving you answers, it goes the extra mile to be more descriptive in its explanations. Learn About also provides extra context on the subject and points you to other educational material on it.

Google achieved this by using a totally different model to power this tool. Rather than the Gemini model, Learn About uses a model called LearnLM. At this point, we don’t know much about this model, but we know that Google steered it toward providing academic answers.

Gemini’s answer vs. Learn About’s answer

We tested it by asking what pulsars are and compared the answer to what Gemini gave us for the same question. Gemini delivered a pretty fleshed-out explanation in the form of a few paragraphs. It also pulled a few pictures from the internet and linked to a page at the bottom. That is good for someone casually looking up a definition who isn’t looking to learn the ins and outs of what a pulsar is.

There was one issue with Gemini’s answer: one of the images it pulled was of a motorcycle, the Bajaj Pulsar 150. So, while it technically IS a Pulsar, a motorcycle shares very few similarities with a massive, rapidly spinning neutron star light-years away from Earth.

What about Learn About?

Learn About also gave an explanation in the form of a few paragraphs; however, its explanation was shorter. It makes up for that with more supplementary material. Along with images, it provided three links (one of which was a YouTube video) and chips with commands like Simplify, Go deeper, and Get images (more on the chips below).

Under the chips, you’ll see suggestions of other queries that you can put in for additional context. Lastly, in textbook style, you’ll see colored blocks with additional content. For example, there’s a Why it matters block and a Stop & think block.

Chips

Going back to the aforementioned chips, Simplify and Get images are self-explanatory. Tapping or clicking the Go deeper chip is a bit more interesting. It brought up an interactive list of additional queries that provide extra information about pulsars, and each query you select brings up even more information.

Textbook blocks

Think about the textbooks you used in school, and you’ll be familiar with these blocks. These blocks come in different colors. The Why it matters block tells you why this information is important. Next, the Stop & think block seems to give you a little bit of tangential information. It asks a question and has a button to reveal the answer. It’s a way to get you to think outside of the box a bit.

There’s a Build your vocab box that introduces a relevant term, likely one the reader doesn’t know, and shows a dictionary-style definition of it.

The next block we encountered was the Test your knowledge block. This one poses a quiz-style question with two options. Other subjects might offer more choices, but this is what we got in our testing.

We also saw a Common misconception block. This one pretty much explains itself.

Bottom bar

At the very bottom of the screen, you’ll see a bar with some additional chips. One chip shows the title of the current subject, and tapping or clicking on it brings up a floating window with additional topic suggestions. In our case, the bar also offered the interactive list we saw previously, shown here in a floating window.

One issue

So, remember when Gemini gave us the image of the motorcycle? Well, while most of Learn About’s images were relevant to the subject, it still retrieved two images of the motorcycle. As comical as that is, it shows that Google’s AI still has a ways to go before it’s perfect. Barring that little mishap, though, Learn About runs as smoothly as the motorcycle it’s surfacing pictures of.

Use it today!

You can use Learn About today if you want to try it out; just go to the Learn About website. As with most Google services, availability depends on your region. We were able to access it in the U.S. in English, but it may not be available in regions that Google typically overlooks.

You can use it whether you’re a free or paid user. Note that Learn About is technically an experiment, meaning Google released it for testing. Google could potentially lock it behind a paywall after the testing phase, or the feature could disappear down the line. So, you’ll want to get in and use it while you can.


GOG’s preservation label highlights classic games it’s maintaining for modern hardware

GOG is launching an effort to help make older video games playable on modern hardware. The initiative will label the classic titles that the platform has taken steps to adapt so they are compatible with contemporary computer systems, controllers and screen resolutions, all while adhering to its DRM-free policy. The move could bring new life to games of decades past, just as GOG did two years ago with a refresh of . So far, 92 games have received the preservation treatment.

“Our guarantee is that they work and they will keep working,” the company says in the video announcing the initiative.

Preservation has been a hot topic as more games go digital only. Not only are some platforms disk drives by default, but ownership over your library is more ephemeral than it seems. After all, most game purchases are , and licenses can be revoked (as The Crew players know ).


How Microsoft’s next-gen BitNet architecture is turbocharging LLM efficiency


One-bit large language models (LLMs) have emerged as a promising approach to making generative AI more accessible and affordable. By representing model weights with a very limited number of bits, 1-bit LLMs dramatically reduce the memory and computational resources required to run them.

Microsoft Research has been pushing the boundaries of 1-bit LLMs with its BitNet architecture. In a new paper, the researchers introduce BitNet a4.8, a new technique that further improves the efficiency of 1-bit LLMs without sacrificing their performance.

The rise of 1-bit LLMs

Traditional LLMs use 16-bit floating-point numbers (FP16) to represent their parameters. This requires a lot of memory and compute resources, which limits the accessibility and deployment options for LLMs. One-bit LLMs address this challenge by drastically reducing the precision of model weights while matching the performance of full-precision models.

Previous BitNet models used 1.58-bit values (-1, 0, 1) to represent model weights and 8-bit values for activations. This approach significantly reduced memory and I/O costs, but the computational cost of matrix multiplications remained a bottleneck, and optimizing neural networks with extremely low-bit parameters is challenging.
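To make the "1.58-bit" figure concrete: a weight restricted to three values carries log2(3) ≈ 1.58 bits of information. The sketch below shows one way to map full-precision weights onto {-1, 0, +1} with a single shared scale, modeled on the absmean scheme described in the BitNet b1.58 paper (divide by the mean absolute weight, round, clip). It is a plain-Python illustration under that assumption; the function names are hypothetical and this is not Microsoft's implementation.

```python
import math


def absmean_ternary(weights, eps=1e-8):
    """Map float weights to {-1, 0, +1} plus one shared scale.

    Divide by the mean absolute weight, round to the nearest integer,
    then clip to [-1, 1] (an absmean-style scheme; names are hypothetical).
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    codes = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Approximate the original weights from the ternary codes."""
    return [c * scale for c in codes]


# Three possible states carry log2(3) bits of information each.
BITS_PER_TERNARY_WEIGHT = math.log2(3)  # ≈ 1.585

w = [0.9, -0.05, 0.4, -1.2, 0.02, 0.3]
codes, scale = absmean_ternary(w)
print(codes)  # [1, 0, 1, -1, 0, 1]
print(dequantize(codes, scale))
```

Small weights collapse to 0 and large ones saturate at ±1, so each weight needs only one of three codes plus the single per-matrix scale.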

Two techniques help to address this problem. Sparsification reduces the number of computations by pruning activations with smaller magnitudes. This is particularly useful in LLMs because activation values tend to have a long-tailed distribution, with a few very large values and many small ones.
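A minimal sketch of magnitude-based sparsification, assuming a simple top-k rule over a single activation vector (the function names and example values are hypothetical). With a long-tailed vector, keeping just the two largest entries out of ten preserves almost all of the vector's energy (sum of squares), which is why pruning small activations costs relatively little.

```python
def topk_sparsify(activations, keep_ratio):
    """Zero every activation except the largest-magnitude fraction."""
    k = max(1, round(keep_ratio * len(activations)))
    # Indices of the k entries with the largest absolute values.
    ranked = sorted(range(len(activations)), key=lambda i: abs(activations[i]), reverse=True)
    keep = set(ranked[:k])
    return [a if i in keep else 0.0 for i, a in enumerate(activations)]


def energy_kept(original, sparse):
    """Fraction of the sum of squares that survives sparsification."""
    return sum(v * v for v in sparse) / sum(v * v for v in original)


# A hypothetical long-tailed activation vector: two large values, many small ones.
x = [12.0, -0.3, 0.1, -9.5, 0.2, 0.05, -0.4, 0.15, 0.25, 0.1]
sparse = topk_sparsify(x, keep_ratio=0.2)
print(sparse)  # [12.0, 0.0, 0.0, -9.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(energy_kept(x, sparse))  # ≈ 0.998
```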

Quantization, on the other hand, uses a smaller number of bits to represent activations, reducing the computational and memory cost of processing them. However, simply lowering the precision of activations can lead to significant quantization errors and performance degradation.
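The sketch below illustrates that degradation with symmetric absmax quantization, a common scheme assumed here for illustration (not necessarily the one BitNet uses): the largest magnitude in the vector sets the scale, and every value is rounded to a signed integer. On a long-tailed vector, 8 bits keep every small value, while 4 bits leave only 7 positive levels, so the outliers stretch the scale and every small value collapses to zero.

```python
def absmax_quantize(x, bits):
    """Symmetric absmax quantization of a vector to signed `bits`-bit integers."""
    qmax = 2 ** (bits - 1) - 1  # codes run from -127..127 for 8-bit, -7..7 for 4-bit
    peak = max(abs(v) for v in x)
    scale = peak / qmax if peak > 0 else 1.0  # guard against an all-zero vector
    q = [max(-qmax, min(qmax, round(v / scale))) for v in x]
    return q, scale


def mean_abs_error(x, bits):
    """Average absolute difference between x and its dequantized version."""
    q, scale = absmax_quantize(x, bits)
    return sum(abs(v - qi * scale) for v, qi in zip(x, q)) / len(x)


x = [12.0, -0.3, 0.1, -9.5, 0.2, 0.05, -0.4, 0.15, 0.25, 0.1]
q4, _ = absmax_quantize(x, bits=4)
print(q4)  # [7, 0, 0, -6, 0, 0, 0, 0, 0, 0]: every small value is lost
print(mean_abs_error(x, 8), mean_abs_error(x, 4))
```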

Furthermore, combining sparsification and quantization is challenging, and presents special problems when training 1-bit LLMs.

“Both quantization and sparsification introduce non-differentiable operations, making gradient computation during training particularly challenging,” Furu Wei, Partner Research Manager at Microsoft Research, told VentureBeat.

Gradient computation is essential for calculating errors and updating parameters when training neural networks. The researchers also had to ensure that their techniques could be implemented efficiently on existing hardware while maintaining the benefits of both sparsification and quantization.
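The standard workaround for non-differentiable rounding is the straight-through estimator (STE): in the backward pass, the quantizer is treated as if it were the identity. The toy sketch below, a one-weight "model" with a squared-error loss, shows why this matters. With the true derivative of rounding (zero almost everywhere), the latent weight never moves; with the STE, it moves until its quantized value fits the target. STE is a widely used technique that we assume here for illustration; the article does not say exactly how BitNet a4.8 computes gradients, and all names are hypothetical.

```python
def quantize(w):
    """Round a weight to {-1, 0, +1}; its true derivative is 0 almost everywhere."""
    return max(-1, min(1, round(w)))


def grad_wrt_w(w, x, target, straight_through):
    """Gradient of the loss (quantize(w) * x - target) ** 2 with respect to w."""
    y = quantize(w) * x
    dloss_dy = 2 * (y - target)
    dy_dq = x
    # True derivative of rounding is 0; the straight-through estimator
    # pretends quantize() is the identity, so its derivative becomes 1.
    dq_dw = 1.0 if straight_through else 0.0
    return dloss_dy * dy_dq * dq_dw


def train(w, x, target, lr=0.1, steps=10, straight_through=True):
    """Plain gradient descent on the latent full-precision weight w."""
    for _ in range(steps):
        w -= lr * grad_wrt_w(w, x, target, straight_through)
    return w


w_plain = train(0.2, x=1.0, target=1.0, straight_through=False)
w_ste = train(0.2, x=1.0, target=1.0, straight_through=True)
print(quantize(w_plain), quantize(w_ste))  # 0 1
```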

BitNet a4.8

BitNet a4.8 addresses the challenges of optimizing 1-bit LLMs through what the researchers describe as “hybrid quantization and sparsification.” They achieved this by designing an architecture that selectively applies quantization or sparsification to different components of the model based on the specific distribution pattern of activations. The architecture uses 4-bit activations for inputs to attention and feed-forward network (FFN) layers. It uses sparsification with 8 bits for intermediate states, keeping only the top 55% of the parameters. The architecture is also optimized to take advantage of existing hardware.
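A rough sketch of that split, assuming per-vector absmax quantization and a top-k magnitude rule (both simplifications; the helper names are hypothetical, and real kernels operate on packed GPU tensors rather than Python lists): layer inputs go straight to 4 bits, while intermediate states are first sparsified to their largest 55% of entries and then quantized to 8 bits.

```python
def absmax_quantize(x, bits):
    """Symmetric absmax quantization of a vector to signed `bits`-bit integers."""
    qmax = 2 ** (bits - 1) - 1
    peak = max(abs(v) for v in x)
    scale = peak / qmax if peak > 0 else 1.0
    return [max(-qmax, min(qmax, round(v / scale))) for v in x], scale


def topk_sparsify(x, keep_ratio):
    """Keep the largest-magnitude fraction of entries and zero the rest."""
    k = max(1, round(keep_ratio * len(x)))
    ranked = sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)
    keep = set(ranked[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(x)]


def quantize_layer_input(x):
    """Inputs to attention and FFN layers: 4-bit activations."""
    return absmax_quantize(x, bits=4)


def quantize_intermediate(x, keep_ratio=0.55):
    """Intermediate states: keep the top 55% by magnitude, then quantize to 8 bits."""
    return absmax_quantize(topk_sparsify(x, keep_ratio), bits=8)


h = [float(i - 10) for i in range(20)]  # hypothetical activations: -10.0 ... 9.0
q_in, _ = quantize_layer_input(h)
q_mid, _ = quantize_intermediate(h)
print(max(abs(v) for v in q_in))  # 7, the 4-bit limit
print(sum(1 for v in q_mid if v != 0))  # 11 of 20 entries survive
```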

“With BitNet b1.58, the inference bottleneck of 1-bit LLMs switches from memory/IO to computation, which is constrained by the activation bits (i.e., 8-bit in BitNet b1.58),” Wei said. “In BitNet a4.8, we push the activation bits to 4-bit so that we can leverage 4-bit kernels (e.g., INT4/FP4) to bring 2x speed up for LLM inference on the GPU devices. The combination of 1-bit model weights from BitNet b1.58 and 4-bit activations from BitNet a4.8 effectively addresses both memory/IO and computational constraints in LLM inference.”

BitNet a4.8 also uses 3-bit values to represent the key (K) and value (V) states in the attention mechanism. The KV cache is a crucial component of transformer models. It stores the representations of previous tokens in the sequence. By lowering the precision of KV cache values, BitNet a4.8 further reduces memory requirements, especially when dealing with long sequences.
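Back-of-the-envelope arithmetic shows why the KV cache matters for long sequences: its size grows linearly with sequence length. The function below uses the standard count (keys plus values, per layer, per head, per head dimension, per token); the model shape is hypothetical, chosen only for illustration. For that shape at a 32,768-token context, the cache drops from 16 GiB at FP16 to 3 GiB at 3 bits, a 16/3 ≈ 5.3x reduction.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bits):
    """Bytes needed to cache keys and values for one sequence.

    The factor of 2 covers both K and V; each cached element uses `bits` bits.
    """
    elements = 2 * layers * kv_heads * head_dim * seq_len
    return elements * bits / 8


# Hypothetical model shape, chosen only for illustration.
shape = dict(layers=32, kv_heads=32, head_dim=128, seq_len=32_768)
GIB = 2 ** 30

fp16 = kv_cache_bytes(**shape, bits=16)
three_bit = kv_cache_bytes(**shape, bits=3)
print(fp16 / GIB, three_bit / GIB)  # 16.0 3.0
print(fp16 / three_bit)  # ≈ 5.33
```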

The promise of BitNet a4.8

Experimental results show that BitNet a4.8 delivers performance comparable to its predecessor BitNet b1.58 while using less compute and memory.

Compared to full-precision Llama models, BitNet a4.8 reduces memory usage by a factor of 10 and achieves 4x speedup. Compared to BitNet b1.58, it achieves a 2x speedup through 4-bit activation kernels. But the design can deliver much more.
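Weight storage alone goes a long way toward explaining that memory gap. As a consistency check (our own arithmetic, not the researchers' methodology), 16-bit weights versus ternary weights at log2(3) ≈ 1.58 bits each gives a ratio of about 10, regardless of model size. The 7-billion-parameter count below is hypothetical; for it, weights shrink from roughly 13 GiB to roughly 1.3 GiB. Note that 1.58 bits is the information-theoretic minimum for three states; actual storage formats may use more bits per weight.

```python
import math

GIB = 2 ** 30


def weight_gib(num_params, bits_per_weight):
    """Memory for the weights alone, in GiB (ignores activations and KV cache)."""
    return num_params * bits_per_weight / 8 / GIB


params = 7e9  # hypothetical 7-billion-parameter model
fp16 = weight_gib(params, 16)
ternary = weight_gib(params, math.log2(3))  # ≈ 1.58 bits per weight
print(round(fp16, 1), round(ternary, 1))  # 13.0 1.3
print(fp16 / ternary)  # ≈ 10.1, i.e. 16 / log2(3)
```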

“The estimated computation improvement is based on the existing hardware (GPU),” Wei said. “With hardware specifically optimized for 1-bit LLMs, the computation improvements can be significantly enhanced. BitNet introduces a new computation paradigm that minimizes the need for matrix multiplication, a primary focus in current hardware design optimization.”
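Wei's point about matrix multiplication follows from the ternary weights: multiplying by +1, 0 or -1 needs no multiplier at all. The sketch below computes a matrix-vector product using only additions and subtractions and checks it against an ordinary dense product. It is a minimal illustration with hypothetical names; it leaves out the per-tensor weight scale, which an implementation would still apply once per output.

```python
def ternary_matvec(weights, x):
    """Multiply a ternary matrix (entries in {-1, 0, 1}) by x using only adds and subtracts."""
    out = []
    for row in weights:
        acc = 0.0
        for w, v in zip(row, x):
            if w == 1:
                acc += v
            elif w == -1:
                acc -= v
            # w == 0 contributes nothing, so it is skipped entirely.
        out.append(acc)
    return out


def dense_matvec(weights, x):
    """Ordinary multiply-accumulate product, for comparison."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


W = [[1, 0, -1], [-1, 1, 1]]
x = [0.5, 2.0, -1.5]
print(ternary_matvec(W, x))  # [2.0, 0.0]
print(dense_matvec(W, x))  # [2.0, 0.0]
```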

The efficiency of BitNet a4.8 makes it particularly suited for deploying LLMs at the edge and on resource-constrained devices. This can have important implications for privacy and security: on-device LLMs let users benefit from the power of these models without sending their data to the cloud.

Wei and his team are continuing their work on 1-bit LLMs.

“We continue to advance our research and vision for the era of 1-bit LLMs,” Wei said. “While our current focus is on model architecture and software support (i.e., bitnet.cpp), we aim to explore the co-design and co-evolution of model architecture and hardware to fully unlock the potential of 1-bit LLMs.”



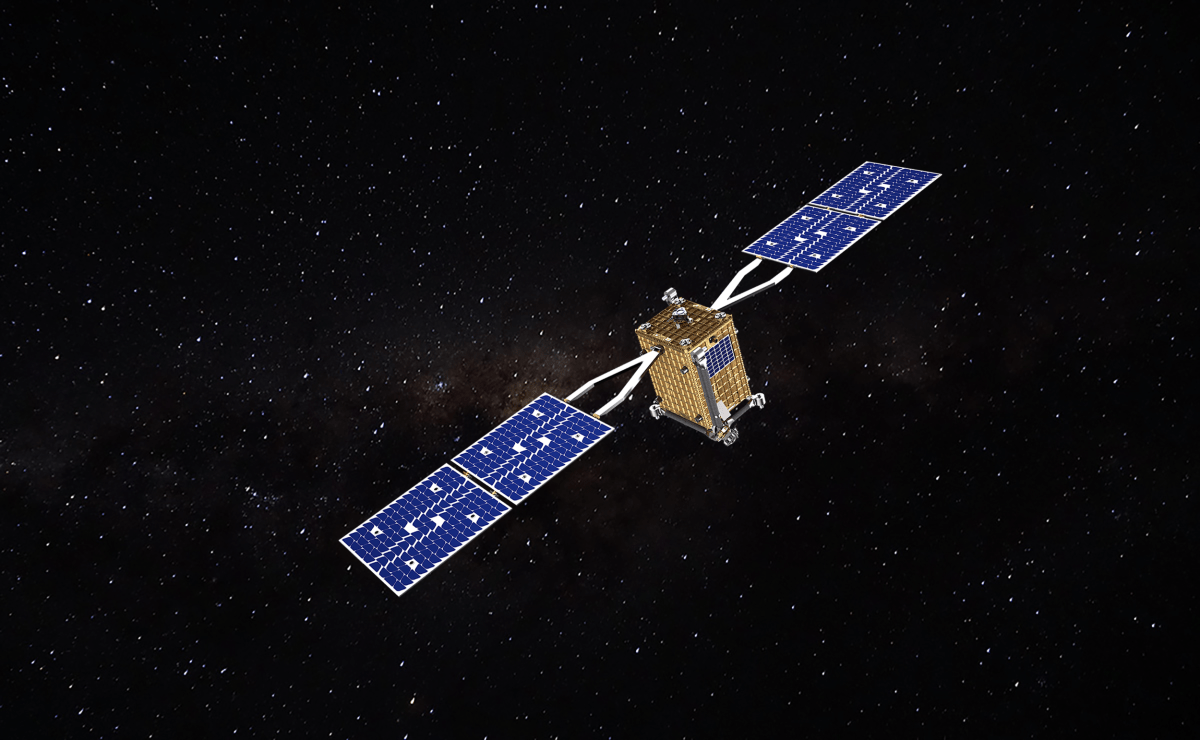

Starfish Space raises $29M to launch satellite-servicing spacecraft missions

Starfish Space has closed a new tranche of funding led by a major defense tech investor as it looks to launch three full-size satellite servicing and inspection spacecraft in 2026.

The Washington-based startup’s Otter spacecraft is designed for two primary missions: extending the operational life of expensive satellites in geostationary orbit (GEO) and disposing of defunct satellites in low Earth orbit (LEO). It’s a series of capabilities that have never been available for satellite operators, who launch their satellites with the expectation that they’ll only have a limited span of useful life.

The aim, as Starfish CEO and co-founder Austin Link put it in a recent interview, is to “make it affordable enough that the benefits of having your satellite serviced outweigh the costs.”

The $29 million round was led by Shield Capital, a venture firm focused on funding technologies that will affect U.S. national security. It has participated in just a handful of other deals in the space industry. The round also includes participation from new investors Point72 Ventures, Booz Allen Ventures, Aero X Ventures, Trousdale Ventures, TRAC VC, and existing investors Munich Re Ventures, Toyota Ventures, NFX, and Industrious Ventures.

“You start a company because you want to build satellites, not because you want to fundraise,” Link told TechCrunch. Link founded Starfish in 2019 with Trevor Bennett after the pair worked as flight sciences engineers at Blue Origin. They raised $7 million in 2021 and $14 million two years later. Starfish launched its first demonstration mission, a sub-scale spacecraft fittingly called Otter Pup, last summer.

Although that mission did not quite go according to plan, Starfish has racked up several wins since then, including three separate contracts for full-size Otter spacecraft. That includes a $37.5 million deal with the U.S. Space Force for a first-of-its-kind docking and maneuvering mission with a defense satellite in GEO and a contract with major satellite communications company Intelsat for life extension services. The third contract, a $15 million NASA mission to inspect multiple defunct satellites in LEO, was announced while Starfish was in the middle of fundraising, Link said.

Starfish purposefully set out to find investors that had experience helping their portfolio companies navigate selling to the government, Link said. “The government is a customer that it sometimes can be harder to scale with, so having investors that understood the process a little better … we thought they’d be good additions to our cap table.”

Link added that the company is seeing a “fairly even split” in demand between government and commercial customers.

Satellite servicing, life extension, and satellite disposal are “exciting first steps,” Link said, but they’re stepping stones on the way to developing a broader suite of capabilities for even more ambitious missions on orbit.

“Along the way, we end up with this set of autonomy and robotics technologies and capabilities and datasets that allow us to go eventually do broadly a set of complex robotic or servicing or ISAM-type missions in space that maybe stretch a little beyond what we do with the Otter,” he said. “I think a lot of those are a long ways off, and not necessarily where our focus is right now … but some of the effort that goes into the Otter today and is funded through this funding round, and some of the growth there leads to a longer term where Starfish Space can have a broad impact on the way that humans go out into the universe.”


Apple updates Logic Pro with new sounds and search features

Apple today announced some minor updates to Logic Pro for both the Mac and the iPad, including the ability to search for plug-ins and sources and the addition of more analog-simulating sounds.

In Logic Pro for Mac 11.1 and Logic Pro for iPad 2.1, you can now reorder channel strips and plug-ins in the mixer and plug-in windows to make it easier to organize the layout of an audio mix.

As for the new sounds, Apple added a library of analog synthesizer samples called Modular Melodies, akin to the Modular Rhythms pack already found in Logic.

A more exciting sonic addition is the new Quantec Room Simulator (QRS) plug-in, which emulates the vintage digital reverb hardware of the same name, found in professional recording studios all over the world. Apple has acquired the technology for the classic QRS model and the later YardStick models to integrate into this software.

Specific to Logic Pro for Mac, you can now share a song to the Mac’s Voice Memos app, which could be a great feature once Voice Memos gets its multitrack option on the iPhone in iOS 18.2.

Added to the iPad version of Logic Pro is the ability to add your own local third-party sample folders to the browser window, making it easier to bring external audio files into tracks and sampler plug-ins.

These upgrades are small for current Logic users, but overall they make the digital audio workstation easier to use and add to its plethora of useful tools at no additional cost. Logic Pro for Mac 11.1 and Logic Pro for iPad 2.1 are available to download today.


Today’s Wordle answer is so hard it nearly cost me my 1,045-game streak – and it’s all the NYT’s fault

Having a very, very long Wordle streak is a blessing and a curse. On the one hand, it grants me immense bragging rights over those mere mortals with their streaks in the hundreds. On the other, it turns every game into a must-win ordeal. After all, what would I be without my Wordle streak? A so-called ‘expert’ with no credentials, that’s what. I’d be laughed out of town.

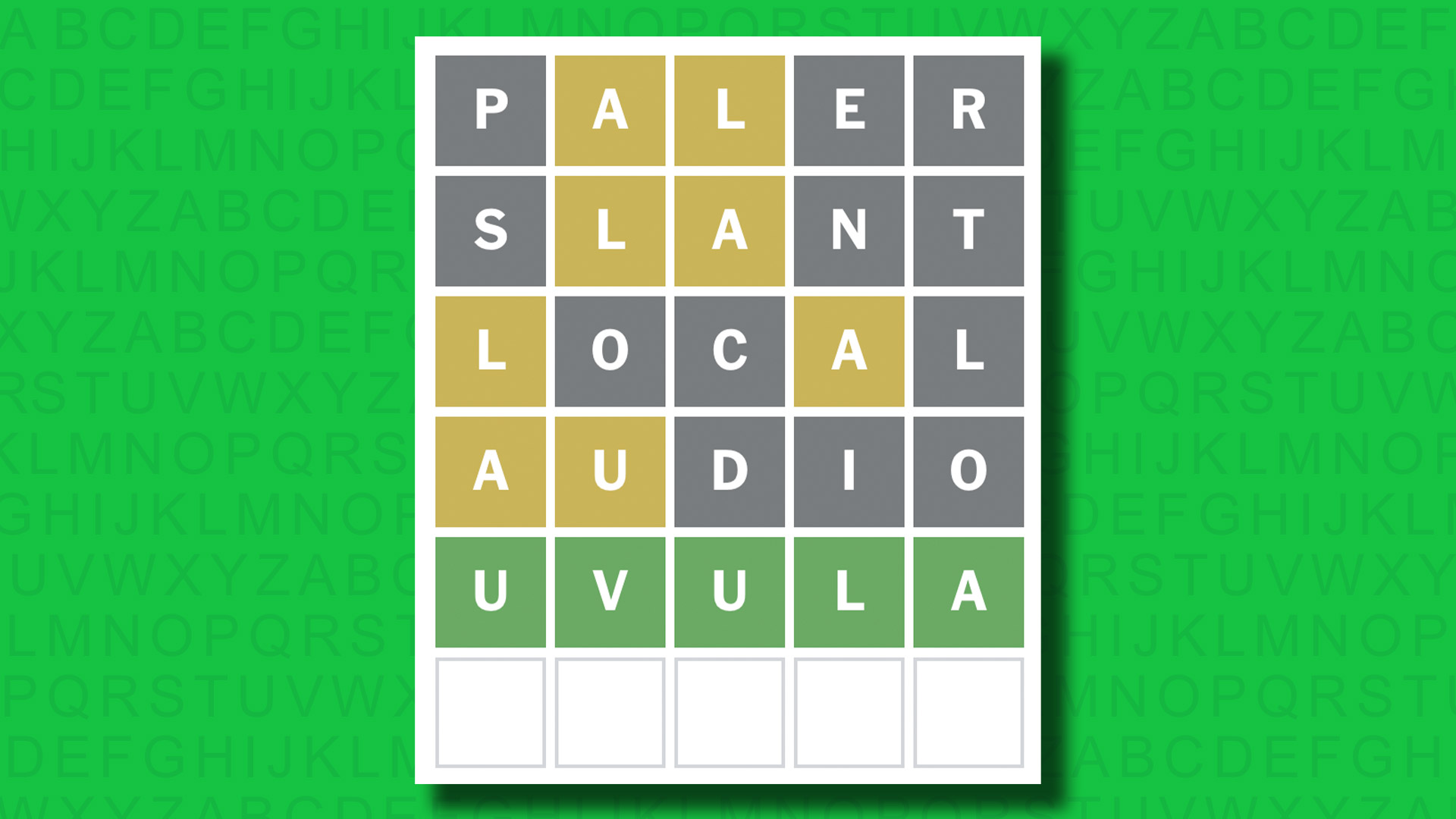

I joke, of course, but having gone 1,046 games without a loss, I would rather not give up my streak all the same. I nearly had to today, though, because game #1,244 (Thursday, 14 November) almost sent me back to ground zero.

I’m pretty sure I won’t be the only one, though – because today’s Wordle answer is undoubtedly a very difficult one. And it’s all the fault of the New York Times’ puzzle setters.

To explain why, I’ll need to reveal the solution, so don’t read past this point if you haven’t played yet, because SPOILERS FOR TODAY’S WORDLE, GAME #1,244, ON THURSDAY, 14 NOVEMBER 2024 will follow. You have been warned.

Wordle hall of shame

Let’s start with a question: what’s the hardest Wordle ever? Is it CAULK, one of the first games to upset thousands of avid Wordlers soon after the game’s meteoric rise to prominence? Or maybe BORAX, a word that many players outside of the United States had almost no knowledge of? Or JAZZY, with its repeated Zs and very-uncommon J at the start?

None of those, actually – the toughest ever is PARER, game #454 in September 2022. That’s based on its average score of 6.3, which, to put it in context, is half a guess more than its closest competitor, MUMMY (#491, 5.8).

Those average scores come from WordleBot, the in-game AI helper tool that analyzes your Wordle after you’ve played. As well as doing that, it records the average across everyone who plays, and in turn I note down those averages to keep a sort of league table. I have 956 of them listed now, giving me a pretty good idea of which Wordles people have found most difficult.

By that measure, today’s game is some way down the list of the hardest ever, with an average of 4.9. High, but not ridiculously so. However, that only tells half of the story.

Right, let’s get into the specifics now, which means revealing today’s answer. This is your last chance to go away and play if you haven’t done so yet.

**FINAL SPOILER ALERT**

Sorry, what?

Today’s answer is UVULA.

No, me neither.

I do sort of know what it means, actually. Or at least I knew before I played it that it was a real word, albeit a fairly obscure one with a very strange spelling. For the unaware, it’s the soft dangly bit between your tonsils at the back of your throat. I thought it was the ridge at the top of your mouth, maybe, but at least I was in the right area.

Others will not be so lucky. Twitter is already alight with people complaining that either they had no idea what it means or that it was just too obscure.

You have got to be kidding me
Wordle 1,244 6/6
⬜⬜🟨⬜⬜
⬜🟨⬜⬜⬜
🟨🟨⬜🟨⬜
⬜⬜🟩🟨🟨
🟨🟨🟩⬜⬜
🟩🟩🟩🟩🟩
November 13, 2024

Wordle 1.244 X/6
⬜🟨⬜🟨⬜
🟨🟨⬜⬜⬜
⬜🟨🟨⬜⬜
🟨⬜⬜⬜⬜
⬜🟨⬜⬜🟩
🟨🟨🟨⬜🟩
How am I supposed to know THAT ???????? #nyt #wordle
November 13, 2024

As well as its relative obscurity as a word, UVULA suffers from having an incredibly unusual format, with UVU at the start; that’s not found in any other answer. Plus, it contains two letter Us – and as I show in my analysis of every Wordle answer, that’s a very rare occurrence too, with only 10 games among the original 2,309 solutions having that format.

All of this added up to make it a very tough game. Its average of 4.9 puts it just outside of the top 20, but I think that’s misleading – people are probably solving it through brute force in the end, because there are no other words that have that format. With a word like PARER, in contrast, there are lots of alternatives and it’s therefore easy to keep guessing until you eventually fail. Here, you will ultimately reach a point where nothing else fits!

That’s what happened to me, anyway. I usually solve Wordle in about 10-20 minutes, sometimes 30-40 if it’s a difficult one and I’m playing carefully. Here, I must have stared at the board and played around with various letter combinations for two hours. That’s genuinely no exaggeration. My family thought I’d gone mad.

I nearly gave up – I was completely stumped. However, I have a daily column to write, so I kept going and eventually scored a five. My streak was genuinely in doubt, though – I could easily have wasted a couple of guesses on similarly obscure words that weren’t correct.

NYT blues

So why is this the NYT’s fault and not mine?

Well, it’s worth noting that this is – for the second day in a row – a non-canon Wordle answer. By that I mean that it was not among the 2,309 answers originally dreamed up by the game’s creator Josh Wardle and his partner, but has instead been added by the NYT.

Yesterday’s PRIMP was also one of these. In total we’ve now had 10 of them: UVULA, PRIMP, GUANO (game #646), SNAFU (#659), BALSA (#720), KAZOO (#730), LASER (#1038), PIOUS (#1054), BEAUT (#1186) and MOMMY (#1208).

Notice anything about those words? Yes – they include some of the toughest in recent memory.

I can easily work out the average for Wordle as a whole, and right now it stands at 3.964 across those 956 games that I have a score for. However, if you look at the average for those 10 games added by the NYT you’ll see that it’s a mighty 4.35. It’s official: the NYT is making Wordle harder!

There is a reason for this, of course: Josh Wardle used up most of the obvious answers. LASER (average: 3.3) and PIOUS (3.8) are the only two below 4.0, and, MOMMY aside, they are also the most common words among them. MOMMY is an outlier because it contains three Ms, an incredibly unusual format, and it had an average of 5.0 as a result.

Elsewhere, KAZOO was a 5.1, PRIMP yesterday was 4.5, BALSA 4.4 and SNAFU 4.3, so it certainly appears as though the NYT’s editors are choosing tougher words when given the chance.

There’s nothing wrong with this, really – and I like a challenge as much as anyone. UVULA is a perfectly fair word, albeit an undeniably difficult one to solve in Wordle. But I wasn’t feeling anywhere near as charitable when I was sat staring at a seemingly impossible game, and I doubt you will be if you just lost your streak today, either.

You might also like

-

Science & Environment2 months ago

Science & Environment2 months agoHow to unsnarl a tangle of threads, according to physics

-

Technology2 months ago

Technology2 months agoWould-be reality TV contestants ‘not looking real’

-

Technology2 months ago

Technology2 months agoIs sharing your smartphone PIN part of a healthy relationship?

-

Science & Environment2 months ago

Science & Environment2 months agoHyperelastic gel is one of the stretchiest materials known to science

-

Science & Environment2 months ago

Science & Environment2 months ago‘Running of the bulls’ festival crowds move like charged particles

-

Science & Environment2 months ago

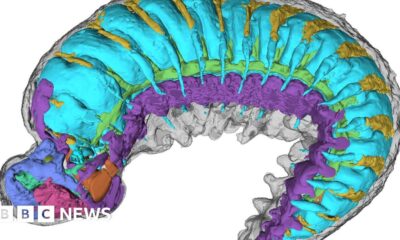

Science & Environment2 months agoX-rays reveal half-billion-year-old insect ancestor

-

Science & Environment2 months ago

Science & Environment2 months agoPhysicists have worked out how to melt any material

-

News1 month ago

News1 month ago‘Blacks for Trump’ and Pennsylvania progressives play for undecided voters

-

MMA1 month ago

MMA1 month ago‘Dirt decision’: Conor McGregor, pros react to Jose Aldo’s razor-thin loss at UFC 307

-

News1 month ago

News1 month agoWoman who died of cancer ‘was misdiagnosed on phone call with GP’

-

Money1 month ago

Money1 month agoWetherspoons issues update on closures – see the full list of five still at risk and 26 gone for good

-

Sport1 month ago

Sport1 month agoAaron Ramsdale: Southampton goalkeeper left Arsenal for more game time

-

Football1 month ago

Football1 month agoRangers & Celtic ready for first SWPL derby showdown

-

Sport1 month ago

Sport1 month ago2024 ICC Women’s T20 World Cup: Pakistan beat Sri Lanka

-

Business1 month ago

how UniCredit built its Commerzbank stake

-

Science & Environment2 months ago

Science & Environment2 months agoA new kind of experiment at the Large Hadron Collider could unravel quantum reality

-

Science & Environment2 months ago

Science & Environment2 months agoMaxwell’s demon charges quantum batteries inside of a quantum computer

-

Science & Environment2 months ago

Science & Environment2 months agoSunlight-trapping device can generate temperatures over 1000°C

-

Science & Environment2 months ago

Science & Environment2 months agoLiquid crystals could improve quantum communication devices

-

Technology1 month ago

Technology1 month agoUkraine is using AI to manage the removal of Russian landmines

-

Technology1 month ago

Technology1 month agoSamsung Passkeys will work with Samsung’s smart home devices

-

Business1 month ago

Top shale boss says US ‘unusually vulnerable’ to Middle East oil shock

-

Science & Environment2 months ago

Science & Environment2 months agoQuantum forces used to automatically assemble tiny device

-

Science & Environment2 months ago

Science & Environment2 months agoLaser helps turn an electron into a coil of mass and charge

-

MMA1 month ago

MMA1 month agoPereira vs. Rountree prediction: Champ chases legend status

-

News1 month ago

News1 month agoMassive blasts in Beirut after renewed Israeli air strikes

-

News1 month ago

News1 month agoNavigating the News Void: Opportunities for Revitalization

-

Science & Environment2 months ago

Science & Environment2 months agoWhy this is a golden age for life to thrive across the universe

-

Technology2 months ago

Technology2 months agoRussia is building ground-based kamikaze robots out of old hoverboards

-

Technology1 month ago

Technology1 month agoGmail gets redesigned summary cards with more data & features

-

News1 month ago

News1 month agoCornell is about to deport a student over Palestine activism

-

Technology1 month ago

Technology1 month agoSingleStore’s BryteFlow acquisition targets data integration

-

Science & Environment2 months ago

Science & Environment2 months agoQuantum ‘supersolid’ matter stirred using magnets

-

Technology1 month ago

Technology1 month agoMicrophone made of atom-thick graphene could be used in smartphones

-

Sport1 month ago

Sport1 month agoBoxing: World champion Nick Ball set for Liverpool homecoming against Ronny Rios

-

Entertainment1 month ago

Entertainment1 month agoBruce Springsteen endorses Harris, calls Trump “most dangerous candidate for president in my lifetime”

-

Technology1 month ago

Technology1 month agoEpic Games CEO Tim Sweeney renews blast at ‘gatekeeper’ platform owners

-

Sport1 month ago

Sport1 month agoShanghai Masters: Jannik Sinner and Carlos Alcaraz win openers

-

Money1 month ago

Money1 month agoTiny clue on edge of £1 coin that makes it worth 2500 times its face value – do you have one lurking in your change?

-

Business1 month ago

Business1 month agoWater companies ‘failing to address customers’ concerns’

-

MMA1 month ago

MMA1 month agoPennington vs. Peña pick: Can ex-champ recapture title?

-

Technology2 months ago

Technology2 months agoMeta has a major opportunity to win the AI hardware race

-

MMA1 month ago

MMA1 month agoDana White’s Contender Series 74 recap, analysis, winner grades

-

MMA1 month ago

MMA1 month agoKayla Harrison gets involved in nasty war of words with Julianna Pena and Ketlen Vieira

-

Sport1 month ago

Sport1 month agoAmerica’s Cup: Great Britain qualify for first time since 1964

-

Technology1 month ago

Technology1 month agoMicrosoft just dropped Drasi, and it could change how we handle big data

-

Technology1 month ago

Technology1 month agoLG C4 OLED smart TVs hit record-low prices ahead of Prime Day

-

Science & Environment2 months ago

Science & Environment2 months agoITER: Is the world’s biggest fusion experiment dead after new delay to 2035?

-

News2 months ago

News2 months ago▶️ Hamas in the West Bank: Rising Support and Deadly Attacks You Might Not Know About

-

News1 month ago

News1 month agoHarry vs Sun publisher: ‘Two obdurate but well-resourced armies’

-

Sport1 month ago

Sport1 month agoWXV1: Canada 21-8 Ireland – Hosts make it two wins from two

-

MMA1 month ago

MMA1 month ago‘Uncrowned queen’ Kayla Harrison tastes blood, wants UFC title run

-

Football1 month ago

Football1 month ago'Rangers outclassed and outplayed as Hearts stop rot'

-

Science & Environment2 months ago

Science & Environment2 months agoNerve fibres in the brain could generate quantum entanglement

-

Science & Environment2 months ago

Science & Environment2 months agoNuclear fusion experiment overcomes two key operating hurdles

-

Technology2 months ago

Technology2 months agoWhy Machines Learn: A clever primer makes sense of what makes AI possible

-

Technology2 months ago

Technology2 months agoUniversity examiners fail to spot ChatGPT answers in real-world test

-

Travel1 month ago

World of Hyatt welcomes iconic lifestyle brand in latest partnership

-

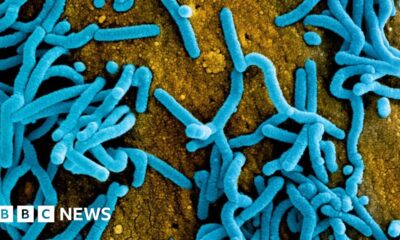

News1 month ago

News1 month agoRwanda restricts funeral sizes following outbreak

-

Technology1 month ago

Technology1 month agoCheck, Remote, and Gusto discuss the future of work at Disrupt 2024

-

Sport1 month ago

Sport1 month agoURC: Munster 23-0 Ospreys – hosts enjoy second win of season

-

Sport1 month ago

Sport1 month agoNew Zealand v England in WXV: Black Ferns not ‘invincible’ before game

-

TV1 month ago

TV1 month agoসারাদেশে দিনব্যাপী বৃষ্টির পূর্বাভাস; সমুদ্রবন্দরে ৩ নম্বর সংকেত | Weather Today | Jamuna TV

-

Business1 month ago

It feels nothing like ‘fine dining’, but Copenhagen’s Kadeau is a true gift

-

Business1 month ago

Italy seeks to raise more windfall taxes from companies

-

Business1 month ago

The search for Japan’s ‘lost’ art

-

Business1 month ago

Business1 month agoWhen to tip and when not to tip

-

News1 month ago

News1 month agoHull KR 10-8 Warrington Wolves – Robins reach first Super League Grand Final

-

Sport1 month ago

Sport1 month agoPremiership Women’s Rugby: Exeter Chiefs boss unhappy with WXV clash

-

Politics1 month ago

‘The night of the living dead’: denial-fuelled Tory conference ends without direction | Conservative conference

-

Science & Environment2 months ago

Science & Environment2 months agoA tale of two mysteries: ghostly neutrinos and the proton decay puzzle

-

MMA1 month ago

MMA1 month agoHow to watch Salt Lake City title fights, lineup, odds, more

-

Sport1 month ago

Sport1 month agoSnooker star Shaun Murphy now hits out at Kyren Wilson after war of words with Mark Allen

-

MMA1 month ago

MMA1 month agoStephen Thompson expects Joaquin Buckley to wrestle him at UFC 307

-

Sport1 month ago

Sport1 month agoHow India became a Test cricket powerhouse

-

Sport1 month ago

Sport1 month agoFans say ‘Moyes is joking, right?’ after his bizarre interview about under-fire Man Utd manager Erik ten Hag goes viral

-

Science & Environment2 months ago

Science & Environment2 months agoA slight curve helps rocks make the biggest splash

-

Technology1 month ago

Technology1 month agoNintendo’s latest hardware is not the Switch 2

-

News1 month ago

News1 month agoCrisis in Congo and Capsizing Boats Mediterranean

-

Money1 month ago

Money1 month agoThe four errors that can stop you getting £300 winter fuel payment as 880,000 miss out – how to avoid them

-

TV1 month ago

TV1 month agoTV Patrol Express September 26, 2024

-

Football1 month ago

Football1 month agoFifa to investigate alleged rule breaches by Israel Football Association

-

Football1 month ago

Football1 month agoWhy does Prince William support Aston Villa?

-

News1 month ago

News1 month ago▶ Hamas Spent $1B on Tunnels Instead of Investing in a Future for Gaza’s People

-

Technology1 month ago

Technology1 month agoSamsung Galaxy Tab S10 won’t get monthly security updates

-

News2 months ago

News2 months ago▶️ Media Bias: How They Spin Attack on Hezbollah and Ignore the Reality

-

Science & Environment2 months ago

Science & Environment2 months agoHow to wrap your mind around the real multiverse

-

News1 month ago

News1 month agoUK forces involved in response to Iran attacks on Israel

-

Technology1 month ago

Technology1 month agoMusk faces SEC questions over X takeover

-

Sport1 month ago

Sport1 month agoChina Open: Carlos Alcaraz recovers to beat Jannik Sinner in dramatic final

-

Business1 month ago

Bank of England warns of ‘future stress’ from hedge fund bets against US Treasuries

-

Technology1 month ago

Technology1 month agoJ.B. Hunt and UP.Labs launch venture lab to build logistics startups

-

Sport1 month ago

Sport1 month agoSturm Graz: How Austrians ended Red Bull’s title dominance

-

Sport1 month ago

Sport1 month agoBukayo Saka left looking ‘so helpless’ in bizarre moment Conor McGregor tries UFC moves on Arsenal star

-

Sport1 month ago

Sport1 month agoCoco Gauff stages superb comeback to reach China Open final

-

Sport1 month ago

Sport1 month agoMan Utd fans prepare for ‘unholy conversations’ as Scott McTominay takes just 25 seconds to score for Napoli again

-

Sport1 month ago

Sport1 month agoPhil Jones: ‘I had to strip everything back – now management is my focus’

-

Sport4 weeks ago

Sport4 weeks agoSunderland boss Regis Le Bris provides Jordan Henderson transfer update 13 years after £20m departure to Liverpool

-

Womens Workouts2 months ago

Womens Workouts2 months ago3 Day Full Body Women’s Dumbbell Only Workout

-

Science & Environment2 months ago

Science & Environment2 months agoTime travel sci-fi novel is a rip-roaringly good thought experiment

You must be logged in to post a comment Login