Technology

DOJ sues Visa for locking out rival payment platforms

The Department of Justice has filed an antitrust lawsuit against Visa, alleging that the financial services firm has an illegal monopoly over debit network markets and has attempted to unlawfully crush competitors, including fintech companies like PayPal and Square. The lawsuit, which was first rumored by Bloomberg, follows a multiyear investigation of Visa which the company disclosed in 2021.

“We allege that Visa has unlawfully amassed the power to extract fees that far exceed what it could charge in a competitive market,” Attorney General Merrick Garland said in a statement. “Merchants and banks pass along those costs to consumers, either by raising prices or reducing quality or service. As a result, Visa’s unlawful conduct affects not just the price of one thing — but the price of nearly everything.”


Visa makes more than $7 billion a year in payment processing fees alone, and more than 60 percent of debit transactions in the United States run on Visa’s network, the complaint claims. The government alleges that Visa’s market dominance is partly due to the “web of exclusionary agreements” it imposes on businesses and banks. The complaint also alleges that Visa has attempted to “smother” competitors, including smaller debit networks and newer fintech companies. According to the DOJ, Visa executives feel particularly threatened by Apple, which the company has described as an “existential threat.”

According to the complaint, Visa entered into paid agreements with potential competitors as part of an effort to fend off competition from newer entrants into the payment processing industry. These practices have allowed Visa to build an “enormous moat” around its business, the complaint alleges.

Regulators have had their eyes on Visa for a while. In 2020, the DOJ filed a civil antitrust lawsuit to stop Visa’s $5.3 billion acquisition of Plaid, a fintech company, arguing that Visa was attempting to snuff out a “payments platform that would challenge Visa’s monopoly.” Acquiring Plaid, the DOJ’s complaint claimed, was Visa’s “insurance policy” to protect against a “threat to our important US debit business.” Visa and Plaid scrapped their plans for a merger in 2021 as a result of the DOJ’s lawsuit.

Payment processors are a ubiquitous part of Americans’ lives, and they’re increasingly powerful internet gatekeepers. In 2020, Visa and Mastercard stopped processing payments on Pornhub after reports of illegal content on the site. The following year, OnlyFans announced (before ultimately abandoning) a ban on “explicit sexual content,” citing payment processors’ reticence to work with the website because of its reputation as a porn platform. But the DOJ argues that Visa didn’t come by this dominance fairly — and it’s taking steps to stop it.

Technology

Fierce new Monster Hunter Wilds trailer reveals release date

Capcom has treated us to another long look at Monster Hunter Wilds, including that all-important release date. The hunt is on beginning February 28, 2025, on PlayStation 5, Xbox Series X/S, and PC.

The latest trailer for the next entry in the massively popular Monster Hunter franchise showed off a more personal side to the story, opening with a child fleeing the wrath of the White Wraith and introducing many of the characters we can look forward to bonding with while slaying giant beasts. The adorable Palicos are back in full force, helping with cooking and on the battlefield as they have in prior games. In one instance, a hunter was knocked out and saved by a Palico dropping a health potion on them.

Speaking of monsters, a number of impressive beasts appeared here, though none that haven’t been shown in prior trailers. They include a massive water-borne creature that leaps and dives through the water and a large, hairy beast that a hunter crushes with debris from the environment using their grappling hook. However, the star of the show remains the White Wraith, Arkveld: the game’s premier monster and the “big bad” the plot centers on hunting. It is described as a species that was believed to be extinct, yet has reappeared to wreak havoc on the world and its people.

Weather has been a major focus for Monster Hunter Wilds, and this trailer shows a few more instances of how the landscape and ecology can shift based on the current weather. Minor examples show how rain can cause a river to become a flood, while sandstorms can cut visibility down to nearly nothing and cause deadly lightning strikes.

Monster Hunter Wilds will come out on February 28, 2025, on PlayStation 5, Xbox Series X/S, and PC. Preorders are live right now with a special Layered Armor Guild Knight Set and Hope Charm Talisman offered as bonuses.

Servers computers

The Ultimate Mini Server Rack – Size doesn't matter…

Jeff’s Video – https://www.youtube.com/watch?v=c8-cdA50bpU

Tim’s Ansible Video – https://www.youtube.com/watch?v=CbkEWcUZ7zM

Jeff’s Axe Effect – https://www.craftcomputing.com/product/axe-effect-temperature-sensor-beta-/1

Products:

Rackmate T1 – https://amzn.to/3WaZJh5

Rackmate T1 (Europe) – https://www.amazon.de/dp/B0CS6MHCY8

10″ Screen – https://amzn.to/4bCf3Yx

Vobot Dock – https://link.rdwl.me/66B7l

Sensor Panel – https://amzn.to/3XVqR54

Button – https://amzn.to/3xDD6sh

Netgear switch – https://amzn.to/45SCXhf

ITX Board – https://amzn.to/3xNreUu

Intel 13700k – https://amzn.to/3VYbs12

CPU Cooler – https://amzn.to/3RXHzNc

Power Block – https://amzn.to/3Li5B1P

ITX PSU – https://amzn.to/3LccMbI

Folding Keyboard – https://amzn.to/4bxF1ML

HDMI Splitter – https://amzn.to/3xQpiup

1TB SSD – https://amzn.to/4cRSYGp


0:00 Intro

0:31 Rackmate T1 Mini Rack

1:32 Let’s check out the hardware

1:42 Screens!

4:06 Networking

4:43 Mini ITX PC

5:50 There’s a GPU?

6:51 Raspberry Pi 5s

7:37 I’m calling out Techno Tim

8:07 K3s/Docker/Ansible

9:28 Axe Effect temperature monitor

9:55 Why no PoE?

10:07 Peripherals

10:30 Powering everything

11:48 Overall thoughts


Technology

Spotify’s ‘AI Playlist’ is rolling out in Beta in the US & other regions

Generative AI, aka GenAI, has taken the world by storm, and GenAI features now turn up in apps, smartphones, PCs, and other tech products. Spotify is one such app: it recently announced a GenAI-powered feature called “AI Playlist.” Today, Spotify announced it is expanding the AI Playlist beta to Premium users in more countries.

Spotify’s AI Playlist is now available in the US as part of the Beta rollout

Initially, Spotify launched the AI Playlist feature back in April this year. Back then, it was exclusively available to Premium users from the UK and Australia. Now, the feature is available to Premium users in the U.S., Canada, Ireland, and New Zealand as part of the beta rollout. To catch you up, Spotify’s AI Playlist creates a customized playlist based on the given text prompt.

You can ask the AI to curate a playlist that fits your mood and vibe. For example, you can enter a prompt like “Give me some funky and upbeat songs,” and that’s all you have to do. Spotify then uses large language model (LLM) technology to interpret the prompt and scours your search history in the app to create a playlist that matches it.

The feature can act as your song-recommending friend when Spotify’s music feed doesn’t quite surface what you’re looking for. Each generated playlist includes 30 songs, which you can refine further with additional prompts.

Here’s how you can access the feature on Spotify

If you are a Premium user in one of these countries, you can access the feature in the Spotify app by tapping the “+” button at the top right, next to Your Library. Select the “AI Playlist” option to open a chat box where you can enter text prompts. The feature also provides prompt suggestions.

For now, the AI Playlist beta is available only on Spotify’s Android and iOS apps, which means it is not available on the Spotify desktop app or on the web. However, we expect the audio streaming giant to bring it to all devices down the line. In related news, Spotify recently made some changes to the Family plan to prevent “Kids” playlists from messing with parents’ algorithms and recommendations.

Servers computers

20U and 30U Racks

The 20U and 30U closed racks (W600, D900/1100mm) are a solution for your rack-mount equipment needs.

For reference:

1. 20/30U is the rack height. “U” is the internationally used unit of equipment height and the basis for determining how much rack you need. 1U = 44.45 mm (1.75 in), often rounded to 44 mm.

2. W600 is the rack width, 600 mm / 60 cm, and inside it are 19″ (inch) rails, the international equipment width. If a device is described as “rack mount,” its width MUST be 19″.

3. D900/1100mm is the depth of the rack. There is no fixed standard for depth: some devices are only 300 mm deep, while servers are typically around 700 mm deep.
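To make the sizing rules above concrete, here is a minimal Python sketch. It assumes the standard 1U = 44.45 mm and treats a rack’s U count as usable mounting space (not the cabinet’s external height). The function names and the simple fit check are hypothetical helpers, not part of any product specification.

```python
# 1U = 1.75 in x 25.4 mm/in = 44.45 mm (the "44 mm" figure is a rounded value).
RACK_UNIT_MM = 44.45


def mounting_height_mm(units):
    """Vertical mounting space for a rack of `units` U, rounded to 0.01 mm."""
    return round(units * RACK_UNIT_MM, 2)


def fits(rack_units, rack_depth_mm, devices):
    """Check whether devices, given as (height_u, depth_mm) pairs, fit the rack.

    Simplification (assumption): ignores cable and airflow clearance and rail
    positions; it only compares the total U against the rack's U and the
    deepest device against the rack's depth.
    """
    total_u = sum(height for height, _ in devices)
    deepest = max((depth for _, depth in devices), default=0)
    return total_u <= rack_units and deepest <= rack_depth_mm


print(mounting_height_mm(20), mounting_height_mm(30))  # 889.0 1333.5
print(fits(20, 900, [(2, 700), (1, 300), (4, 750)]))   # True
```

For example, a 20U rack offers about 889 mm of mounting space, and a typical 700 mm deep server fits comfortably in a D900 rack.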

A closed rack is used mainly to secure the electronic equipment we install: not only to prevent theft, but above all to keep the settings that have been configured from being changed by meddling hands.

Feel free to discuss your rack requirements.

WhatsApp: 0812 991 9892 (WILLIAM)

Please LIKE, SUBSCRIBE, SHARE and comment for updates on our other products. Many thanks


Technology

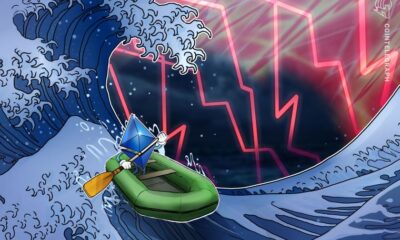

FTX advisor and Alameda CEO Caroline Ellison gets two years in prison

A US district court judge sentenced Caroline Ellison, the former advisor to and ex-girlfriend of convicted crypto fraudster and FTX founder Sam Bankman-Fried, to two years in prison.

The sentence covers Ellison’s role in the $8 billion fraud committed by the FTX crypto exchange, which sent Bankman-Fried to prison for 25 years back in March. Ellison will also have to serve three years of supervised release once she has finished her prison sentence.

Ellison pled guilty at the end of 2022, just as Bankman-Fried was being extradited to the US from the Bahamas. US Securities and Exchange Commission (SEC) Director of Enforcement Sanjay Wadhwa said following Ellison’s plea that she and FTX co-founder Gary Wang “were active participants in a scheme to conceal material information from FTX investors.”

Ellison was also the former chief executive officer of FTX’s sister company Alameda Research. Prosecutors said she diverted FTX customers’ funds onto Alameda’s books to hide risks from their clients. Ellison testified against Bankman-Fried, making her a key witness in his criminal fraud trial.

Prosecutors also got Bankman-Fried’s house arrest and bail revoked after a judge determined that the FTX founder had tried to interfere with Ellison’s testimony last year. In 2023, Bankman-Fried tried to message FTX’s general counsel, identified only as “Witness-1,” over Signal and email in a bid to influence testimony.

Nine months later, Bankman-Fried shared material that prosecutors said was an attempt to damage Ellison’s reputation, especially among prospective jurors. The judge agreed that both instances merited Bankman-Fried’s arrest and jailing while he awaited trial. Bankman-Fried is currently serving his 25-year sentence in a federal prison in Brooklyn while he appeals his conviction.

Ellison issued a statement before her sentence apologizing for her crimes to the people she and her former firm defrauded. Prosecutors did not issue a recommended sentence and characterized her cooperation with investigators as “exemplary” in a memo to the judge.

“Not a day goes by that I don’t think of the people I hurt,” Ellison said in court. “I am deeply ashamed of what I have done.”

Technology

AutoToS makes LLM planning fast, accurate and inexpensive


Large language models (LLMs) have shown promise in solving planning and reasoning tasks by searching through possible solutions. However, existing methods can be slow, computationally expensive and provide unreliable answers.

Researchers from Cornell University and IBM Research have introduced AutoToS, a new technique that combines the planning power of LLMs with the speed and accuracy of rule-based search algorithms. AutoToS eliminates the need for human intervention and significantly reduces the computational cost of solving planning problems. This makes it a promising technique for LLM applications that must reason over large solution spaces.

Thought of Search

There is a growing interest in using LLMs to handle planning problems, and researchers have developed several techniques for this purpose. The more successful techniques, such as Tree of Thoughts, use LLMs as a search algorithm that can validate solutions and propose corrections.

While these approaches have demonstrated impressive results, they face two main challenges. First, they require numerous calls to LLMs, which can be computationally expensive, especially when dealing with complex problems with thousands of possible solutions. Second, they do not guarantee that the LLM-based algorithm is “complete” and “sound.” Completeness ensures that if a solution exists, the algorithm will eventually find it, while soundness guarantees that any solution returned by the algorithm is valid.

Thought of Search (ToS) offers an alternative approach. ToS leverages LLMs to generate code for two key components of search algorithms: the successor function and the goal function. The successor function determines how the search algorithm explores different nodes in the search space, while the goal function checks whether the search algorithm has reached the desired state. These functions can then be used by any offline search algorithm to solve the problem. This approach is much more efficient than keeping the LLM in the loop during the search process.
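In ToS, the LLM writes the successor and goal functions, and the search itself is ordinary code. As a minimal sketch of that division of labor, here is a generic breadth-first search that accepts any successor and goal function. The toy problem (reach 10 from 1 using “add 1” and “double” moves) and the function names are illustrative assumptions, standing in for LLM-generated components; this is not code from the ToS paper.

```python
from collections import deque


def bfs(start, successors, is_goal):
    """Breadth-first search driven entirely by two plug-in functions.

    successors(state) -> iterable of next states (states must be hashable)
    is_goal(state)    -> True when the state solves the problem
    Returns the list of states from start to the first goal found, or None.
    """
    frontier = deque([start])
    parent = {start: None}  # doubles as the visited set
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            # Walk parent links back to the start to recover the path.
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None


# Hand-written stand-ins for LLM-generated components (toy problem).
print(bfs(1, lambda n: [n + 1, n * 2], lambda n: n == 10))  # [1, 2, 4, 5, 10]
```

Because BFS expands states level by level and never revisits one, it finds a solution whenever one exists at a finite depth (with finitely many successors per state), provided the plugged-in successor function itself is correct. That is why ToS concentrates its checking effort on the generated functions rather than on the search.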

“Historically, in the planning community, these search components were either manually coded for each new problem or produced automatically via translation from a description in a planning language such as PDDL, which in turn was either manually coded or learned from data,” Michael Katz, principal research staff member at IBM Research, told VentureBeat. “We proposed to use the large language models to generate the code for the search components from the textual description of the planning problem.”

The original ToS technique showed impressive progress in addressing the soundness and completeness requirements of search algorithms. However, it required a human expert to provide feedback on the generated code and help the model refine its output. This manual review was a bottleneck that reduced the speed of the algorithm.

Automating ToS

“In [ToS], we assumed a human expert in the loop, who could check the code and feedback the model on possible issues with the generated code, to produce a better version of the search components,” Katz said. “We felt that in order to automate the process of solving the planning problems provided in a natural language, the first step must be to take the human out of that loop.”

AutoToS automates the feedback and exception handling process using unit tests and debugging statements, combined with few-shot and chain-of-thought (CoT) prompting techniques.

AutoToS works in multiple steps. First, it provides the LLM with the problem description and prompts it to generate code for the successor and goal functions. Next, it runs unit tests on the goal function and provides feedback to the model if it fails. The model then uses this feedback to correct its code. Once the goal function passes the tests, the algorithm runs a limited breadth-first search to check if the functions are sound and complete. This process is repeated until the generated functions pass all the tests.

Finally, the validated functions are plugged into a classic search algorithm to perform the full search efficiently.
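The article does not spell out AutoToS’s exact prompts or tests, so the following is only a minimal sketch of the feedback loop it describes, under stated assumptions: goal functions are checked against unit tests, successor functions are checked for completeness against a few expected successors and for soundness with a depth-limited BFS using a validity predicate, and any failure is turned into a feedback message for the next round. The LLM is replaced by a hypothetical `fake_llm` that returns pre-written candidates; all function names, messages, and the toy domain are illustrative, and the few-shot and chain-of-thought prompting is not modeled.

```python
from collections import deque


def check_goal(is_goal, goal_tests):
    """Run unit tests on a candidate goal function; return feedback text or None."""
    for state, expected in goal_tests:
        try:
            got = is_goal(state)
        except Exception as exc:  # exceptions are reported back as feedback too
            return f"is_goal({state!r}) raised {exc!r}"
        if got != expected:
            return f"is_goal({state!r}) returned {got!r}, expected {expected!r}"
    return None


def check_successors(successors, successor_tests, is_valid, start, depth_limit):
    """Completeness: each test state must yield all expected successors.
    Soundness: a depth-limited BFS from `start` must only produce valid states."""
    for state, expected in successor_tests:
        missing = set(expected) - set(successors(state))
        if missing:
            return f"successors({state!r}) is missing {sorted(missing)!r}"
    frontier, seen = deque([(start, 0)]), {start}
    while frontier:
        state, depth = frontier.popleft()
        if depth == depth_limit:
            continue
        for nxt in successors(state):
            if not is_valid(nxt):
                return f"successors({state!r}) produced invalid state {nxt!r}"
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, depth + 1))
    return None


def refine(generate, goal_tests, successor_tests, is_valid, start,
           max_rounds=5, depth_limit=3):
    """Request components, test them, and feed failures back until all checks pass."""
    feedback = None
    for _ in range(max_rounds):
        successors, is_goal = generate(feedback)
        feedback = check_goal(is_goal, goal_tests) or check_successors(
            successors, successor_tests, is_valid, start, depth_limit)
        if feedback is None:
            return successors, is_goal
    return None


# Toy domain: non-negative integers, moves "add 1" and "double", goal 10.
candidates = [
    (lambda n: [n + 1, n * 2], lambda n: n >= 10),         # wrong goal function
    (lambda n: [n + 1, n - 1, n * 2], lambda n: n == 10),  # unsound successors
    (lambda n: [n + 1, n * 2], lambda n: n == 10),         # passes every check
]
log = []


def fake_llm(feedback):
    """Stand-in for the LLM: records the feedback, returns the next candidate."""
    log.append(feedback)
    return candidates[len(log) - 1]


result = refine(fake_llm, goal_tests=[(10, True), (11, False), (3, False)],
                successor_tests=[(3, [4, 6])], is_valid=lambda n: n >= 0, start=0)
for message in log:
    print(message)
```

Running it prints `None`, then `is_goal(11) returned True, expected False`, then `successors(0) produced invalid state -1`: the third candidate passes, and its functions could then be handed to a full search such as BFS.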

AutoToS in action

The researchers evaluated AutoToS on several planning and reasoning tasks, including BlocksWorld, Mini Crossword and 24 Game. The 24 Game is a mathematical puzzle where you are given four integers and must use basic arithmetic operations to create a formula that equates to 24. BlocksWorld is a classic AI planning domain where the goal is to rearrange blocks stacked in towers. Mini Crosswords is a simplified crossword puzzle with a 5×5 grid.
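To show what “search components” look like for one of these domains, here is a hand-written sketch for the 24 Game, of the kind AutoToS asks an LLM to produce; it is not the code generated in the study. A state is assumed to be a sorted tuple of exact fractions, the successor function replaces any two numbers with the result of one arithmetic operation, and the goal function checks that a single 24 remains. A plain BFS then decides whether a puzzle is solvable.

```python
from collections import deque
from fractions import Fraction
from itertools import combinations


def successors(state):
    """Replace any two numbers with the result of one of +, -, *, /."""
    children = []
    for i, j in combinations(range(len(state)), 2):
        a, b = state[i], state[j]
        rest = [x for k, x in enumerate(state) if k not in (i, j)]
        values = {a + b, a - b, b - a, a * b}
        if b != 0:
            values.add(a / b)
        if a != 0:
            values.add(b / a)
        for v in values:
            # Sorting gives one canonical form per multiset of numbers.
            children.append(tuple(sorted(rest + [v])))
    return children


def is_goal(state):
    """Goal: exactly one number remains and it equals 24."""
    return len(state) == 1 and state[0] == 24


def solve(numbers):
    """Plain BFS over the components; True if 24 is reachable."""
    start = tuple(sorted(Fraction(n) for n in numbers))
    frontier, seen = deque([start]), {start}
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            return True
        for nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return False


print(solve([3, 3, 8, 8]))  # True: 8 / (3 - 8 / 3) = 24
print(solve([1, 1, 1, 1]))  # False
```

Using `Fraction` instead of floats keeps division exact, so puzzles like 3, 3, 8, 8, whose only solution passes through 1/3, are not missed because of rounding error.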

They tested various LLMs from different families, including GPT-4o, Llama 2 and DeepSeek Coder. They used both the largest and smallest models from each family to evaluate the impact of model size on performance.

Their findings showed that with AutoToS, all models were able to identify and correct errors in their code when given feedback. The larger models generally produced correct goal functions without feedback and required only a few iterations to refine the successor function. GPT-4o-mini performed surprisingly well in terms of accuracy despite its small size.

“With just a few calls to the language model, we demonstrate that we can obtain the search components without any direct human-in-the-loop feedback, ensuring soundness, completeness, accuracy and nearly 100% accuracy across all models and all domains,” the researchers write.

Compared to other LLM-based planning approaches, ToS drastically reduces the number of calls to the LLM. For example, for the 24 Game dataset, which contains 1,362 puzzles, the previous approach would call GPT-4 approximately 100,000 times. AutoToS, on the other hand, needed only 2.2 calls on average to generate sound search components.

“With these components, we can use the standard BFS algorithm to solve all the 1,362 games together in under 2 seconds and get 100% accuracy, neither of which is achievable by the previous approaches,” Katz said.

AutoToS for enterprise applications

AutoToS can have direct implications for enterprise applications that require planning-based solutions. It cuts the cost of using LLMs and reduces the reliance on manual labor, enabling experts to focus on high-level planning and goal specification.

“We hope that AutoToS can help with both the development and deployment of planning-based solutions,” Katz said. “It uses the language models where needed—to come up with verifiable search components, speeding up the development process and bypassing the unnecessary involvement of these models in the deployment, avoiding the many issues with deploying large language models.”

ToS and AutoToS are examples of neuro-symbolic AI, a hybrid approach that combines the strengths of deep learning and rule-based systems to tackle complex problems. Neuro-symbolic AI is gaining traction as a promising direction for addressing some of the limitations of current AI systems.

“I don’t think that there is any doubt about the role of hybrid systems in the future of AI,” Harsha Kokel, research scientist at IBM, told VentureBeat. “The current language models can be viewed as hybrid systems since they perform a search to obtain the next tokens.”

While ToS and AutoToS show great promise, there is still room for further exploration.

“It is exciting to see how the landscape of planning in natural language evolves and how LLMs improve the integration of planning tools in decision-making workflows, opening up opportunities for intelligent agents of the future,” Kokel and Katz said. “We are interested in general questions of how the world knowledge of LLMs can help improve planning and acting in real-world environments.”

Source link

-

Womens Workouts1 day ago

Womens Workouts1 day ago3 Day Full Body Women’s Dumbbell Only Workout

-

News6 days ago

News6 days agoYou’re a Hypocrite, And So Am I

-

Sport5 days ago

Sport5 days agoJoshua vs Dubois: Chris Eubank Jr says ‘AJ’ could beat Tyson Fury and any other heavyweight in the world

-

Technology7 days ago

Technology7 days agoWould-be reality TV contestants ‘not looking real’

-

News2 days ago

News2 days agoOur millionaire neighbour blocks us from using public footpath & screams at us in street.. it’s like living in a WARZONE – WordupNews

-

Science & Environment5 days ago

Science & Environment5 days ago‘Running of the bulls’ festival crowds move like charged particles

-

Science & Environment6 days ago

Science & Environment6 days agoHow to unsnarl a tangle of threads, according to physics

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoEthereum is a 'contrarian bet' into 2025, says Bitwise exec

-

Science & Environment6 days ago

Science & Environment6 days agoLiquid crystals could improve quantum communication devices

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoDZ Bank partners with Boerse Stuttgart for crypto trading

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoBitcoin bulls target $64K BTC price hurdle as US stocks eye new record

-

Science & Environment6 days ago

Science & Environment6 days agoQuantum ‘supersolid’ matter stirred using magnets

-

Science & Environment6 days ago

Science & Environment6 days agoMaxwell’s demon charges quantum batteries inside of a quantum computer

-

Science & Environment6 days ago

Science & Environment6 days agoSunlight-trapping device can generate temperatures over 1000°C

-

Science & Environment6 days ago

Science & Environment6 days agoHow to wrap your mind around the real multiverse

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoDorsey’s ‘marketplace of algorithms’ could fix social media… so why hasn’t it?

-

Science & Environment6 days ago

Science & Environment6 days agoWhy this is a golden age for life to thrive across the universe

-

Health & fitness7 days ago

Health & fitness7 days agoThe secret to a six pack – and how to keep your washboard abs in 2022

-

Science & Environment6 days ago

Science & Environment6 days agoLaser helps turn an electron into a coil of mass and charge

-

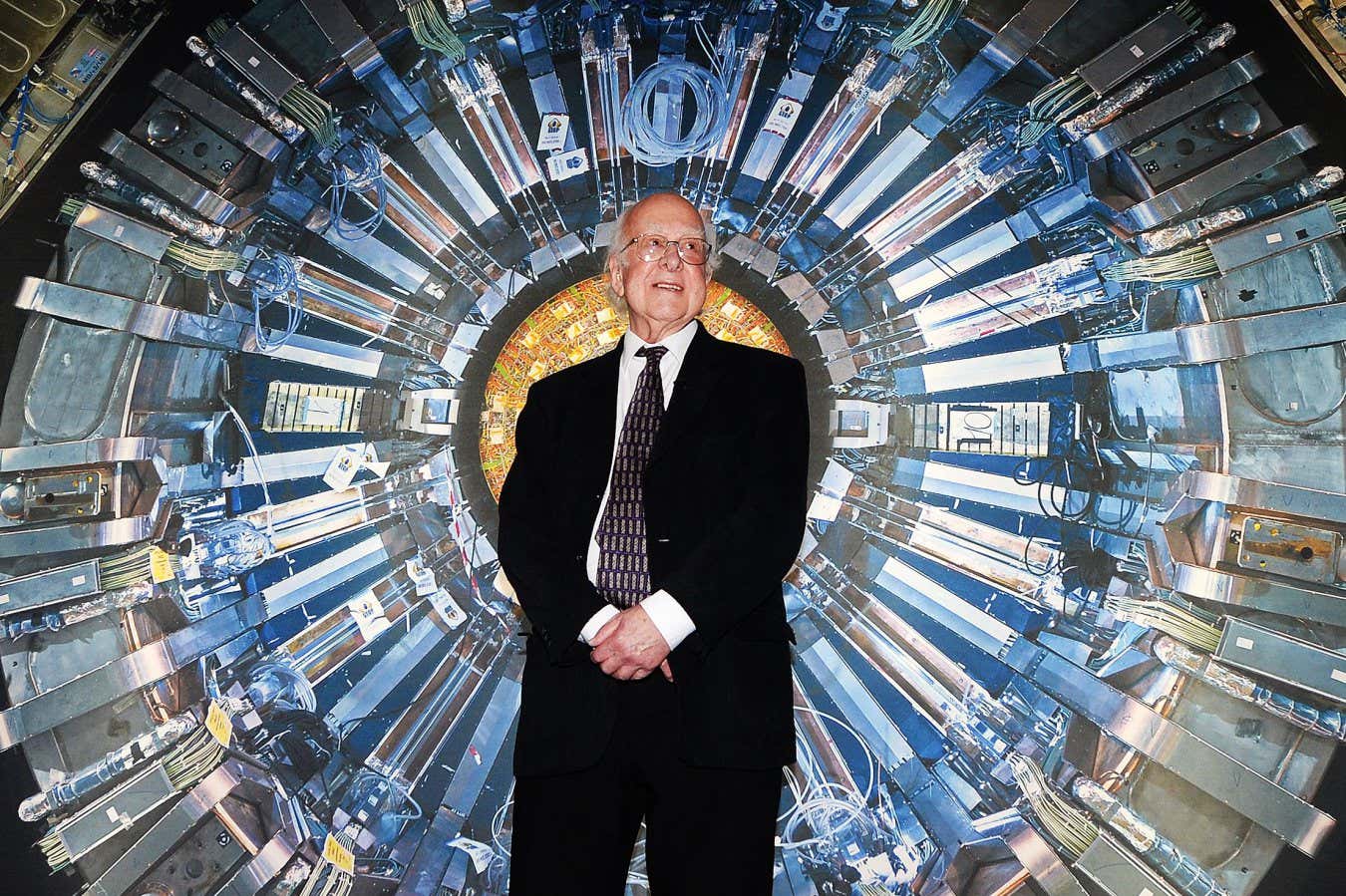

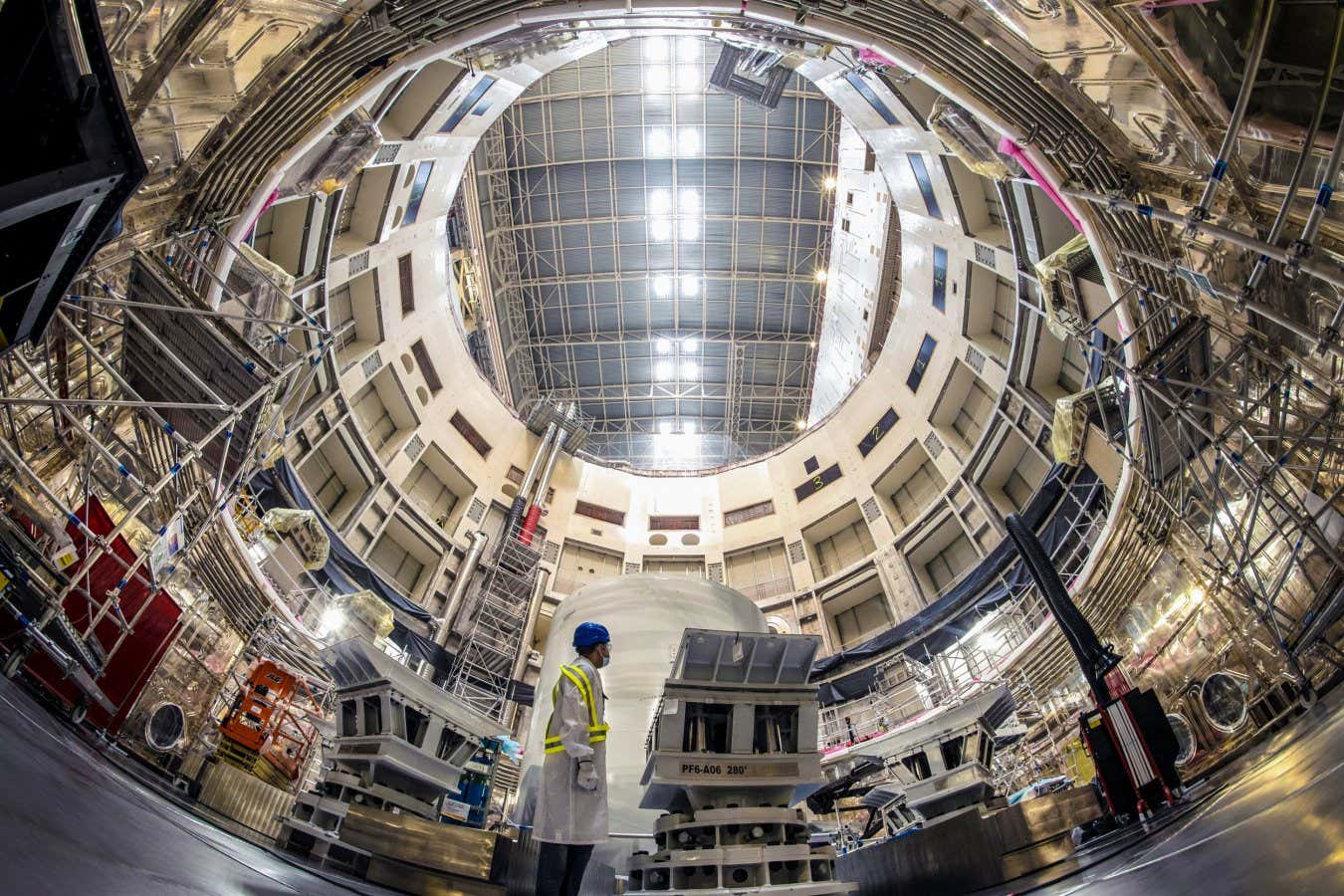

Science & Environment6 days ago

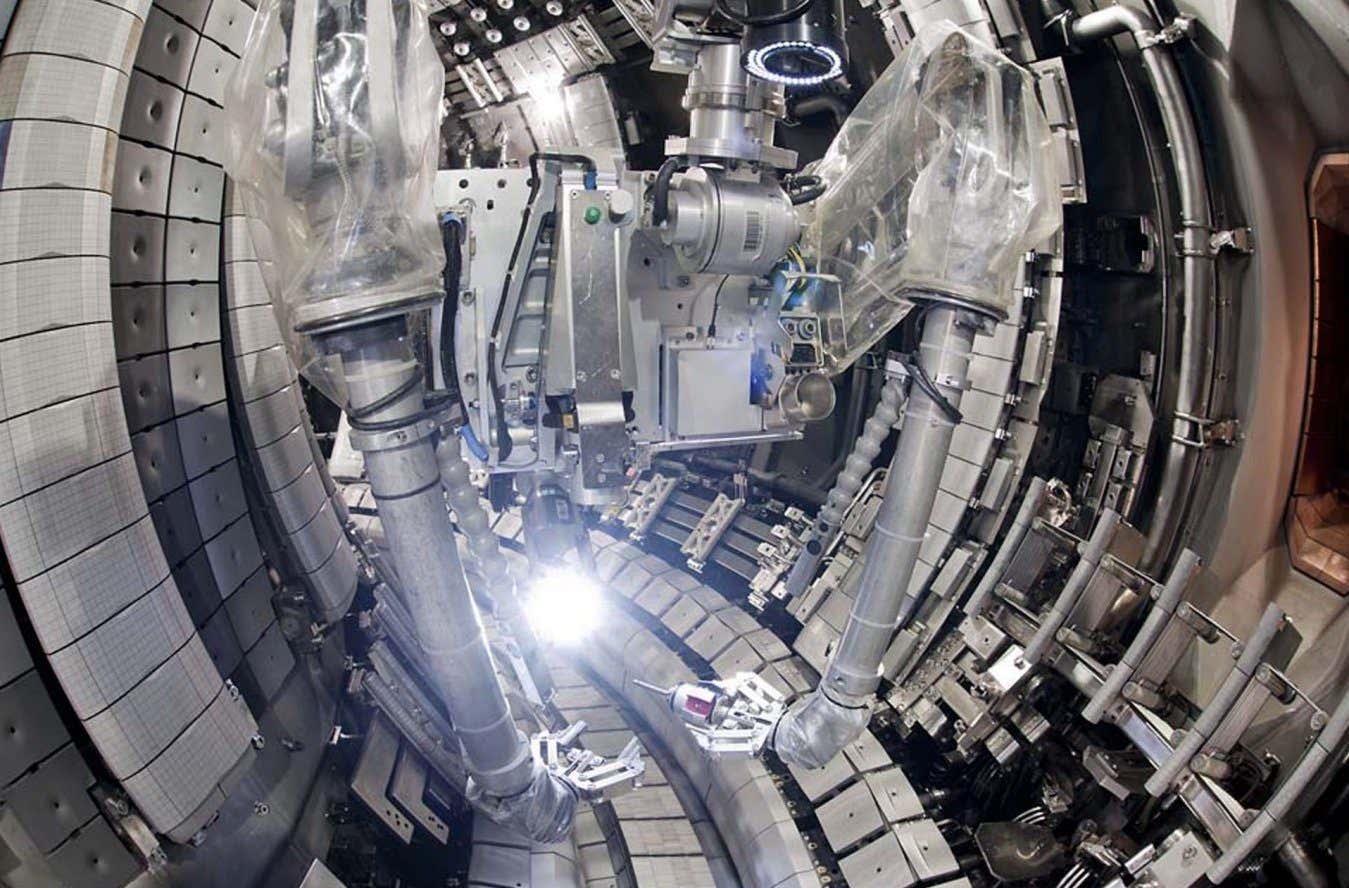

Science & Environment6 days agoITER: Is the world’s biggest fusion experiment dead after new delay to 2035?

-

Science & Environment6 days ago

Science & Environment6 days agoNerve fibres in the brain could generate quantum entanglement

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoBitcoin miners steamrolled after electricity thefts, exchange ‘closure’ scam: Asia Express

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoCardano founder to meet Argentina president Javier Milei

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoLow users, sex predators kill Korean metaverses, 3AC sues Terra: Asia Express

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoSEC asks court for four months to produce documents for Coinbase

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoBlockdaemon mulls 2026 IPO: Report

-

Science & Environment2 days ago

Science & Environment2 days agoMeet the world's first female male model | 7.30

-

News5 days ago

News5 days agoIsrael strikes Lebanese targets as Hizbollah chief warns of ‘red lines’ crossed

-

Sport5 days ago

Sport5 days agoUFC Edmonton fight card revealed, including Brandon Moreno vs. Amir Albazi headliner

-

Science & Environment5 days ago

Science & Environment5 days agoHyperelastic gel is one of the stretchiest materials known to science

-

Technology5 days ago

Technology5 days agoiPhone 15 Pro Max Camera Review: Depth and Reach

-

Science & Environment6 days ago

Science & Environment6 days agoQuantum forces used to automatically assemble tiny device

-

News5 days ago

News5 days agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

Science & Environment6 days ago

Science & Environment6 days agoTime travel sci-fi novel is a rip-roaringly good thought experiment

-

Science & Environment6 days ago

Science & Environment6 days agoQuantum time travel: The experiment to ‘send a particle into the past’

-

Science & Environment6 days ago

Science & Environment6 days agoPhysicists are grappling with their own reproducibility crisis

-

Science & Environment6 days ago

Science & Environment6 days agoNuclear fusion experiment overcomes two key operating hurdles

-

CryptoCurrency5 days ago

CryptoCurrency5 days ago2 auditors miss $27M Penpie flaw, Pythia’s ‘claim rewards’ bug: Crypto-Sec

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoJourneys: Robby Yung on Animoca’s Web3 investments, TON and the Mocaverse

-

CryptoCurrency5 days ago

CryptoCurrency5 days ago$12.1M fraud suspect with ‘new face’ arrested, crypto scam boiler rooms busted: Asia Express

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoRedStone integrates first oracle price feeds on TON blockchain

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoVitalik tells Ethereum L2s ‘Stage 1 or GTFO’ — Who makes the cut?

-

Womens Workouts4 days ago

Womens Workouts4 days agoBest Exercises if You Want to Build a Great Physique

-

Womens Workouts4 days ago

Womens Workouts4 days agoEverything a Beginner Needs to Know About Squatting

-

News5 days ago

News5 days agoChurch same-sex split affecting bishop appointments

-

Politics5 days ago

Politics5 days agoLabour MP urges UK government to nationalise Grangemouth refinery

-

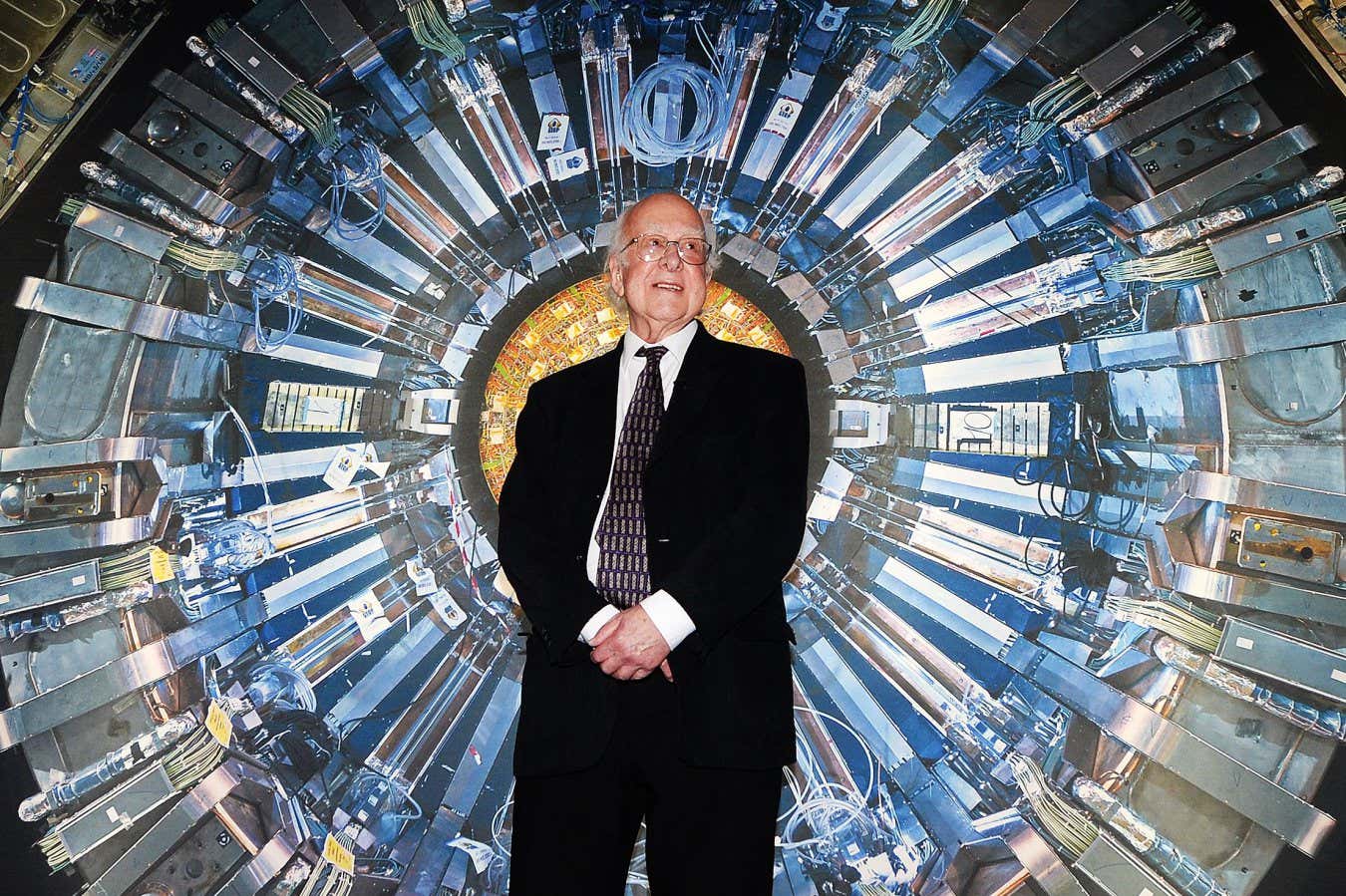

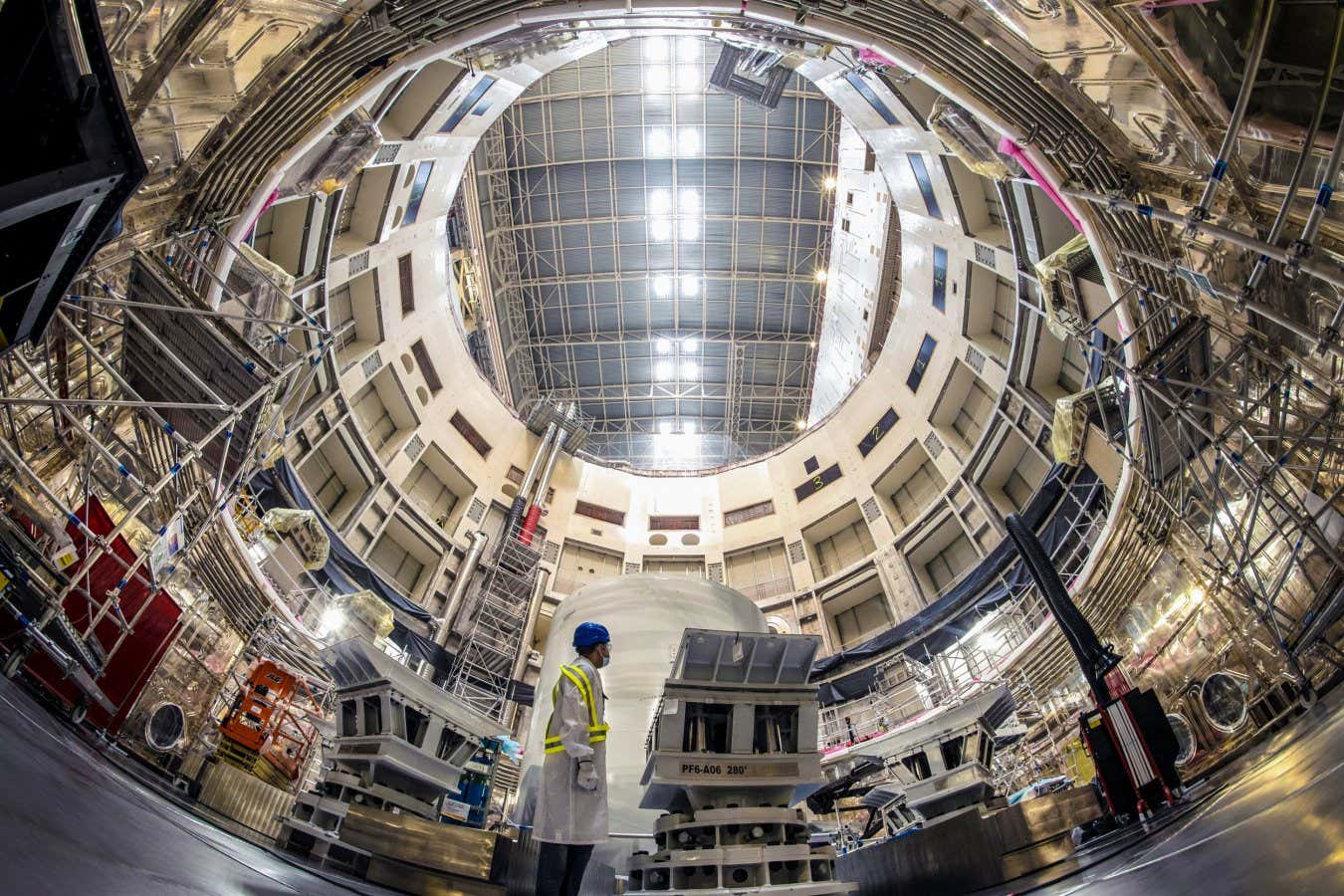

Science & Environment5 days ago

Science & Environment5 days agoHow one theory ties together everything we know about the universe

-

Science & Environment1 week ago

Science & Environment1 week agoCaroline Ellison aims to duck prison sentence for role in FTX collapse

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoHelp! My parents are addicted to Pi Network crypto tapper

-

Science & Environment5 days ago

Science & Environment5 days agoFuture of fusion: How the UK’s JET reactor paved the way for ITER

-

CryptoCurrency5 days ago

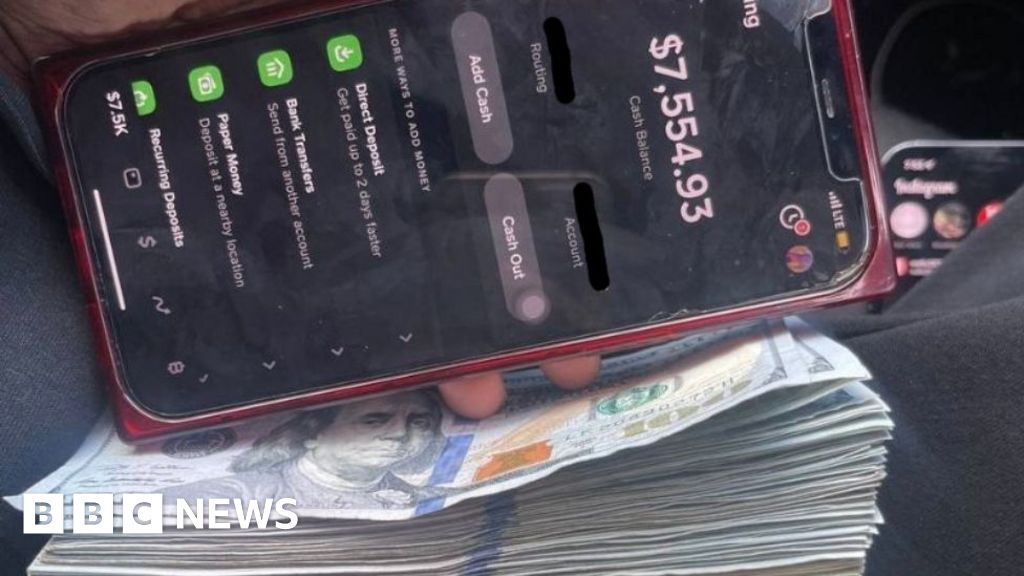

CryptoCurrency5 days agoSEC sues ‘fake’ crypto exchanges in first action on pig butchering scams

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoCertiK Ventures discloses $45M investment plan to boost Web3

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoVonMises bought 60 CryptoPunks in a month before the price spiked: NFT Collector

-

CryptoCurrency5 days ago

CryptoCurrency5 days ago‘Silly’ to shade Ethereum, the ‘Microsoft of blockchains’ — Bitwise exec

-

CryptoCurrency5 days ago

CryptoCurrency5 days ago‘No matter how bad it gets, there’s a lot going on with NFTs’: 24 Hours of Art, NFT Creator

-

Business5 days ago

Thames Water seeks extension on debt terms to avoid renationalisation

-

Business5 days ago

How Labour donor’s largesse tarnished government’s squeaky clean image

-

News5 days ago

News5 days agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoCoinbase’s cbBTC surges to third-largest wrapped BTC token in just one week

-

News4 days ago

News4 days agoBangladesh Holds the World Accountable to Secure Climate Justice

-

News2 days ago

News2 days agoFour dead & 18 injured in horror mass shooting with victims ‘caught in crossfire’ as cops hunt multiple gunmen

-

Politics7 days ago

Politics7 days agoTrump says he will meet with Indian Prime Minister Narendra Modi next week

-

Technology5 days ago

Technology5 days agoFivetran targets data security by adding Hybrid Deployment

-

Money6 days ago

Money6 days agoWhat estate agents get up to in your home – and how they’re being caught

-

Science & Environment6 days ago

Science & Environment6 days agoA new kind of experiment at the Large Hadron Collider could unravel quantum reality

-

Fashion Models5 days ago

Fashion Models5 days agoMixte

-

Science & Environment5 days ago

Science & Environment5 days agoHow Peter Higgs revealed the forces that hold the universe together

-

News6 days ago

News6 days ago▶️ Media Bias: How They Spin Attack on Hezbollah and Ignore the Reality

-

News6 days ago

News6 days agoRoad rage suspects in custody after gunshots, drivers ramming vehicles near Boise

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoCrypto scammers orchestrate massive hack on X but barely made $8K

-

Science & Environment5 days ago

Science & Environment5 days agoUK spurns European invitation to join ITER nuclear fusion project

-

Science & Environment5 days ago

Science & Environment5 days agoWhy we need to invoke philosophy to judge bizarre concepts in science

-

Science & Environment5 days ago

Science & Environment5 days agoHow do you recycle a nuclear fusion reactor? We’re about to find out

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoElon Musk is worth 100K followers: Yat Siu, X Hall of Flame

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoBitcoin price hits $62.6K as Fed 'crisis' move sparks US stocks warning

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoCZ and Binance face new lawsuit, RFK Jr suspends campaign, and more: Hodler’s Digest Aug. 18 – 24

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoBeat crypto airdrop bots, Illuvium’s new features coming, PGA Tour Rise: Web3 Gamer

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoTelegram bot Banana Gun’s users drained of over $1.9M

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoEthereum falls to new 42-month low vs. Bitcoin — Bottom or more pain ahead?

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoETH falls 6% amid Trump assassination attempt, looming rate cuts, ‘FUD’ wave

-

Politics5 days ago

The Guardian view on 10 Downing Street: Labour risks losing the plot | Editorial

-

Politics5 days ago

Politics5 days agoI’m in control, says Keir Starmer after Sue Gray pay leaks

-

Politics5 days ago

‘Appalling’ rows over Sue Gray must stop, senior ministers say | Sue Gray

-

Business5 days ago

UK hospitals with potentially dangerous concrete to be redeveloped

-

Business5 days ago

Axel Springer top team close to making eight times their money in KKR deal

-

News5 days ago

News5 days ago“Beast Games” contestants sue MrBeast’s production company over “chronic mistreatment”

-

News5 days ago

News5 days agoSean “Diddy” Combs denied bail again in federal sex trafficking case

-

CryptoCurrency5 days ago

CryptoCurrency5 days agoBitcoin options markets reduce risk hedges — Are new range highs in sight?

-

Money5 days ago

Money5 days agoBritain’s ultra-wealthy exit ahead of proposed non-dom tax changes

-

Womens Workouts4 days ago

Womens Workouts4 days agoHow Heat Affects Your Body During Exercise

-

Womens Workouts4 days ago

Womens Workouts4 days agoKeep Your Goals on Track This Season

-

Womens Workouts4 days ago

Womens Workouts4 days agoWhich Squat Load Position is Right For You?

-

News2 days ago

News2 days agoWhy Is Everyone Excited About These Smart Insoles?

-

Womens Workouts1 day ago

Womens Workouts1 day ago3 Day Full Body Toning Workout for Women

-

News5 days ago

News5 days agoPolice chief says Daniel Greenwood 'used rank to pursue junior officer'

-

Science & Environment6 days ago

Science & Environment6 days agoElon Musk’s SpaceX contracted to destroy retired space station

-

Politics1 week ago

Starmer ally Hollie Ridley appointed as Labour general secretary | Labour

-

Technology1 week ago

Technology1 week ago‘The dark web in your pocket’

-

Business1 week ago

Business1 week agoGuardian in talks to sell world’s oldest Sunday paper

-

News5 days ago

Freed Between the Lines: Banned Books Week

You must be logged in to post a comment Login