Crypto World

The Next Paradigm Shift Beyond Large Language Models

Artificial Intelligence has made extraordinary progress over the last decade, largely driven by the rise of large language models (LLMs). Systems such as GPT-style models have demonstrated remarkable capabilities in natural language understanding and generation. However, leading AI researchers increasingly argue that we are approaching diminishing returns with purely text-based, token-prediction architectures.

One of the most influential voices in this debate is Yann LeCun, Chief AI Scientist at Meta, who has consistently advocated for a new direction in AI research: World Models. These systems aim to move beyond pattern recognition toward a deeper, more grounded understanding of how the world works.

In this article, we explore what world models are, how they differ from large language models, why they matter, and which open-source world model projects are currently shaping the field.

What Are World Models?

At their core, world models are AI systems that learn internal representations of the environment, allowing them to simulate, predict, and reason about future states of the world.

Rather than mapping inputs directly to outputs, a world model builds a latent model of reality—a kind of internal mental simulation. This enables the system to answer questions such as:

- What is likely to happen next?

- What would happen if I take this action?

- Which outcomes are plausible or impossible?

This approach mirrors how humans and animals learn. We do not simply react to stimuli; we form internal models that let us anticipate consequences, plan actions, and avoid costly mistakes.

Yann LeCun views world models as a foundational component of human-level artificial intelligence, particularly for systems that must interact with the physical world.

Why Large Language Models Are Not Enough

Large language models are fundamentally statistical sequence predictors. They excel at identifying patterns in massive text corpora and predicting the next token given context. While this produces fluent and often impressive outputs, it comes with inherent limitations.

Key Limitations of LLMs

Lack of grounded understanding: LLMs are trained primarily on text rather than on physical experience.

Weak causal reasoning: They capture correlations rather than true cause-and-effect relationships.

No internal physics or common sense model: They cannot reliably reason about space, time, or physical constraints.

Reactive rather than proactive: They respond to prompts but do not plan or act autonomously.

As LeCun has repeatedly stated, predicting words is not the same as understanding the world.

How World Models Differ from Traditional Machine Learning

World models represent a significant departure from both classical supervised learning and modern deep learning pipelines.

Self-Supervised Learning at Scale

World models typically learn in a self-supervised or unsupervised manner. Instead of relying on labelled datasets, they learn by:

- Predicting future states from past observations

- Filling in missing sensory information

- Learning latent representations from raw data such as video, images, or sensor streams

This mirrors biological learning: humans and animals acquire vast amounts of knowledge simply by observing the world, not by receiving explicit labels.

Core Components of a World Model

A practical world model architecture usually consists of three key elements:

1. Perception Module

Encodes raw sensory inputs (e.g. images, video, proprioception) into a compact latent representation.

2. Dynamics Model

Learns how the latent state evolves over time, capturing causality and temporal structure.

3. Planning or Control Module

Uses the learned model to simulate future trajectories and select actions that optimise a goal.

This separation allows the system to think before it acts, dramatically improving efficiency and safety.
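The three-part split above can be illustrated with a toy sketch. Everything here is hypothetical: the environment is a point moving along a line, the "dynamics model" is a hand-written rule standing in for what a real system would learn from data, and the function names `encode`, `predict`, and `plan` are chosen for illustration only.

```python
from itertools import product


def encode(observation):
    """Perception: map a raw observation to a compact latent state."""
    return (float(observation["position"]), float(observation["velocity"]))


def predict(latent, action, dt=1.0):
    """Dynamics: predict the next latent state for an action (an acceleration).

    Hand-written here; a real world model would learn this from experience.
    """
    position, velocity = latent
    velocity = velocity + action * dt
    position = position + velocity * dt
    return (position, velocity)


def plan(latent, goal, actions=(-1.0, 0.0, 1.0), horizon=3):
    """Planning: simulate every action sequence internally and return the
    first action of the sequence that ends closest to the goal."""
    best_cost, best_first = float("inf"), None
    for sequence in product(actions, repeat=horizon):
        state = latent
        for action in sequence:
            state = predict(state, action)  # imagined step, no real-world trial
        cost = abs(state[0] - goal)
        if cost < best_cost:
            best_cost, best_first = cost, sequence[0]
    return best_first


latent = encode({"position": 0.0, "velocity": 0.0})
print(plan(latent, goal=6.0))
```

The planner never touches the real environment while choosing: it evaluates all candidate futures inside the model and only then commits to one action, which is the "think before it acts" behaviour described above.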

Practical Applications of World Models

World models are particularly valuable in domains where real-world experimentation is expensive, slow, or dangerous.

Robotics

Robots equipped with world models can predict the physical consequences of their actions, for example, whether grasping one object will destabilise others nearby.

Autonomous Vehicles

By simulating multiple future driving scenarios internally, world models enable safer planning under uncertainty.

Game Playing and Simulated Environments

World models allow agents to learn strategies without exhaustive trial-and-error in the real environment.

Industrial Automation

Factories and warehouses benefit from AI systems that can anticipate failures, optimise workflows, and adapt to changing conditions.

In all these cases, the ability to simulate outcomes before acting is a decisive advantage.

Open-Source World Model Projects You Should Know

The field of world models is still emerging, but several open-source initiatives are already making a significant impact.

1. World Models (Ha & Schmidhuber)

One of the earliest and most influential projects, introducing the idea of learning a compressed latent world model using VAEs and RNNs. This work demonstrated that agents could learn effective policies almost entirely inside their own simulated worlds.

2. Dreamer / DreamerV2 / DreamerV3 (DeepMind, open research releases)

Dreamer agents learn a latent dynamics model and use it to plan actions in imagination rather than the real environment, achieving strong performance in continuous control tasks.

3. PlaNet

A model-based reinforcement learning system that plans directly in latent space, reducing sample complexity.

4. MuZero (Partially Open)

While not fully open source, MuZero introduced a powerful concept: learning a dynamics model without explicitly modelling environment rules, combining planning with representation learning.

5. Meta’s JEPA (Joint Embedding Predictive Architectures)

Yann LeCun’s preferred paradigm, JEPA focuses on predicting abstract representations rather than raw pixels, forming a key building block for future world models.

These projects collectively signal a shift away from brute-force scaling toward structured, model-based intelligence.

Are We Seeing Diminishing Returns from LLMs?

While LLMs continue to improve, their progress increasingly depends on:

- More data

- Larger models

- Greater computational cost

World models offer an alternative path: learning more efficiently by understanding structure rather than memorising patterns. Many researchers believe the future of AI lies in hybrid systems that combine language models with world models that provide grounding, memory, and planning.

Why World Models May Be the Next Breakthrough

World models address some of the most fundamental weaknesses of current AI systems:

- They enable common-sense reasoning

- They support long-term planning

- They allow safe exploration

- They reduce dependence on labelled data

- They bring AI closer to real-world interaction

For applications such as robotics, autonomous systems, and embodied AI, world models are not optional; they are essential.

Conclusion

World models represent a critical evolution in artificial intelligence, moving beyond language-centric systems toward agents that can truly understand, predict, and interact with the world. As Yann LeCun argues, intelligence is not about generating text, but about building internal models of reality.

With increasing open-source momentum and growing industry interest, world models are likely to play a central role in the next generation of AI systems. Rather than replacing large language models, they may finally give them what they lack most: a grounded understanding of the world they describe.

Judge Dismisses Bancor-Affiliated Patent Case Against Uniswap

A New York federal judge dismissed a patent infringement lawsuit brought by Bancor-affiliated entities against Uniswap, ruling that the asserted patents claim abstract ideas and are not eligible for protection under US patent law.

In a memorandum opinion and order dated Tuesday, Feb. 10, Judge John G. Koeltl of the US District Court for the Southern District of New York granted the defendant’s motion to dismiss the complaint filed by Bprotocol Foundation and LocalCoin Ltd. against Universal Navigation Inc. and the Uniswap Foundation.

The court found that the patents are directed to the abstract idea of calculating crypto exchange rates and therefore fail the two-step test for patent eligibility established by the US Supreme Court.

The ruling marks a procedural win for Uniswap, but it is not final. The case was dismissed without prejudice, giving the plaintiffs 21 days to file an amended complaint. If no amended complaint is filed, the dismissal will convert to one with prejudice.

Shortly after the ruling, Uniswap founder Hayden Adams wrote on X, “A lawyer just told me we won.”

Cointelegraph reached out to representatives of Bprotocol Foundation and Uniswap for comment but had not received a response by publication.

Judge finds that patents claim abstract ideas

As previously reported, Bancor alleged that Uniswap infringed patents related to a “constant product automated market maker” system underpinning decentralized exchanges.

The dispute centered on whether Uniswap’s protocol unlawfully used patented technology for automated token pricing and liquidity pools.

Koeltl said that the patents were directed to “the abstract idea of calculating currency exchange rates to perform transactions.”

He wrote that currency exchange is a “fundamental economic practice” and that calculating pricing information is abstract under established Federal Circuit precedent.

The judge rejected arguments that implementing the pricing formula on blockchain infrastructure made the claims patentable, and said the patents merely use existing blockchain and smart contract technology “in predictable ways to address an economic problem.”

He said limiting an abstract idea to a particular technological environment does not make it patent-eligible. The court also found no “inventive concept” sufficient to transform the abstract idea into a patent-eligible application.

Complaint fails to plead infringement

Beyond patent eligibility, the court found that the amended complaint did not plausibly allege direct infringement.

According to the memorandum, the plaintiffs failed to identify how Uniswap’s publicly available code includes the required reserve ratio constant specified in the patents.

The judge also dismissed claims of induced and willful infringement, finding that the complaint did not plausibly allege that the defendants knew about the patents before the lawsuit was filed.

The dismissal without prejudice leaves open the possibility that Bprotocol Foundation and LocalCoin Ltd. could attempt to refile with revised claims.

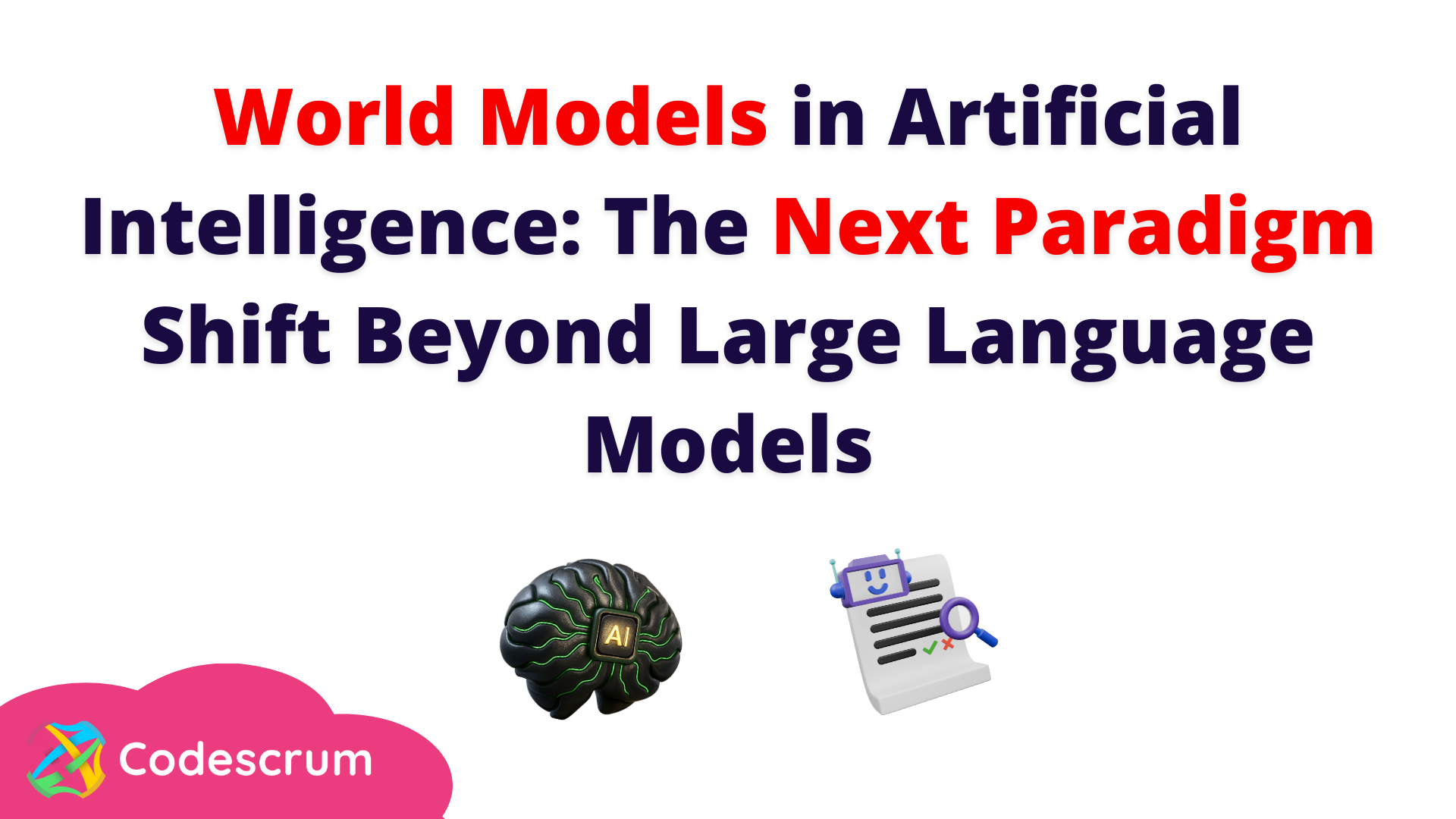

Why Bitcoin OG Erik Voorhees Just Went All-In on Gold

Erik Voorhees, the early Bitcoin advocate and founder of ShapeShift, is making a bold pivot into gold.

The move comes as gold recovers following a 21% crash, with prospects for further gains if analyst projections are any guide.

Erik Voorhees’ Gold Move Signals a Shift Beyond Bitcoin

Lookonchain reports that Voorhees created nine new wallets and spent $6.81 million in USDC. The Bitcoin OG purchased 1,382 ounces of PAXG, a gold-backed token just like Tether Gold, at an average price of $4,926 per ounce.

Voorhees, who entered the Bitcoin ecosystem in 2011 and later founded several of the earliest major crypto companies, has long championed Bitcoin as “digital gold.”

His latest purchases suggest a nuanced strategy to diversify into traditional safe-haven assets even while remaining a vocal advocate for crypto.

Analyst Jacob King notes that Voorhees’ move signals that some of crypto’s earliest adopters are hedging against potential market volatility by holding both physical and tokenized gold.

Gold prices have been holding steady above $5,000 per ounce, supported by strong central bank demand and inflows from gold ETFs. As of this writing, gold was trading at $5,048, up by almost 15% since bottoming out at $4,402 on February 2.
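The purchase size and rebound figures quoted above can be sanity-checked with simple arithmetic. The snippet below is only a rough check using the numbers as reported; the variable names are illustrative.

```python
# Figures as quoted in the article.
ounces = 1_382             # PAXG purchased
avg_price = 4_926          # USD per ounce paid on average
low, latest = 4_402, 5_048  # USD per ounce: February 2 low and price at writing

total_spent = ounces * avg_price
rebound_pct = (latest - low) / low * 100

print(f"Total spent: ${total_spent:,}")
print(f"Rebound from low: {rebound_pct:.1f}%")
```

The total comes to roughly $6.81 million, in line with the reported USDC outlay, and the rebound works out to just under 15%.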

According to Coin Bureau CEO and co-founder Nic Puckrin, the recent dip in gold prices reflects a temporary pause rather than a retreat. Puckrin cites upcoming US jobs and CPI data, which are likely to influence rate-cut expectations.

Gold Set for Breakout as Analysts Forecast $6,300+ Amid Strategic Dollar Shift

Elsewhere, technical analyst Rashad Hajiyev notes that gold is poised for a breakout after testing a critical resistance level, projecting a near-term move to around $5,200 per ounce before entering a range-bound phase.

Meanwhile, Wells Fargo recently characterized the pullback as a healthy correction after a sharp rally, raising its 2026 gold target to $6,100–$6,300 per ounce. The multinational financial services firm cited geopolitical risks, market volatility, and sustained central bank demand.

“Buy the gold dip, Wells Fargo says. The recent pullback in gold is a healthy correction after a sharp rally,” wrote Walter Bloomberg.

Meanwhile, Daniel Oliver, founder of Myrmikan Capital, projects a longer-term surge to $12,595 per ounce, driven by central bank buying and concerns over a potential “government bond death spiral.”

Gold Outpaces Stocks as Macro Shifts and Crypto Moves Highlight Its Safe-Haven Appeal

Gold’s strong performance relative to equities is stark. Historical data shows gold surging 1,658% since 2000, compared to the S&P 500’s 460% gain.

Even after factoring in dividends, the S&P’s total return of roughly 700% trails gold, underscoring the metal’s value as a portfolio diversifier. This is especially true in periods of macroeconomic and geopolitical uncertainty.

According to analysts, broader macroeconomic factors are driving gold’s rise. Sunil Reddy notes that US policy is quietly shifting away from maximizing dollar purchasing power toward reindustrialization and trade rebalancing.

This “softer dollar” approach is boosting demand for hard assets like gold and silver, signaling a strategic pivot rather than purely speculative buying.

Voorhees’ move into gold may reflect an awareness of these dynamics. By deploying millions into PAXG, the Bitcoin pioneer appears to be betting on gold’s continued relevance as a hedge against dollar weakness and a counterbalance to crypto market volatility.

Still, investors should conduct their own research and not rely solely on analysts’ projections.

BTC, ETH, XRP, and SOL Holdings Revealed

The investment bank’s positions are through crypto ETFs, not direct token holdings.

Goldman Sachs, a behemoth in investment banking, published its Q4 2025 Form 13F disclosure, outlining its positions in four of the largest cryptocurrencies by market cap.

Given the recent price declines in the digital asset space, their USD value has declined, but the disclosure still shows an interesting pattern.

Goldman’s Crypto Portfolio

🚨NEW: Wall Street investment bank @GoldmanSachs just revealed it holds $1.1B $BTC, $1B $ETH, $153M $XRP and $108M $SOL.

Goldman has representation at the White House meeting on stablecoin yield today. Its CEO David Solomon is scheduled to speak at @worldlibertyfi Forum in Palm…

— Eleanor Terrett (@EleanorTerrett) February 10, 2026

The filing, which went viral on X yesterday, shows that Goldman has indirect exposure to approximately 13,740 BTC through the US-based spot Bitcoin ETFs. Since the filings reflect the value of the holdings at the end of the quarter, not the current value or the price paid upon purchase, there’s a significant discrepancy between what they are worth now and what they were reported to be then, due to the infamous crypto volatility.

At the end of Q4, the BTC position was valued at around $1.7 billion. Since then, the asset has declined by almost 50%, bringing these holdings’ current value to $920 million. Also, there’s a difference between Terrett’s post and today’s valuation, as BTC tumbled once again this morning to under $67,000.
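As a rough check on these figures, the current valuation follows from multiplying the reported indirect exposure by the spot price. The snippet assumes a round spot price of $67,000 (the article says only "under" that level), so the result is an approximation.

```python
# Figures as quoted in the article; spot_price is an assumed round number.
btc_exposure = 13_740         # BTC held indirectly through spot ETFs
spot_price = 67_000           # USD per BTC, assumed
q4_value = 1_700_000_000      # USD, reported end-of-quarter valuation

current_value = btc_exposure * spot_price
decline_pct = (q4_value - current_value) / q4_value * 100

print(f"Current value: ${current_value:,}")
print(f"Decline since end of Q4: {decline_pct:.1f}%")
```

Under that assumption, the position is worth about $920 million, a drop of roughly 46% from the end-of-quarter figure.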

Nevertheless, it’s worth noting that this doesn’t represent a realized loss. Moreover, the filings indicated that Goldman has not reduced its BTC position.

Additionally, the investment bank now has exposure to three of the largest altcoins, including XRP and SOL, whose ETFs tracking their performance launched in Q4 last year.

Wall Street Warming Up to Crypto?

As mentioned above, the filing was quickly reposted yesterday on social media, and the crypto community embraced it as a definitive sign of Wall Street and institutions putting billions in the digital asset market.

Big moves.

Goldman isn’t just talking crypto — they’re putting billions on the line. BTC, ETH, XRP, SOL all show serious institutional conviction.

With White House access and CEO appearances, crypto is clearly on Wall Street’s radar. 👀

— The Ripple Mo | XRP 🇺🇸 (@IXEIAH) February 10, 2026

The timing is also intriguing as the White House continues to work on a crypto bill, the CLARITY Act, which has faced some resistance from the banking industry. In fact, some commentators believe that Goldman’s filings being published now indicate the bank is “positioning” itself in a power move and should not be regarded as a simple transparency act.

Binance rolls out 5x futures on privacy L2 asset

Privacy-focused Aztec L2 gets a 5x Binance perpetual listing, with second-by-second mark pricing and tight funding bands in pre-market.

Summary

- Binance launches AZTECUSDT perpetuals with up to 5x leverage and USDT settlement.

- Funding starts capped at +0.005% pre-market, moving to a ±2.00% range after launch, with 4-hour settlements.

- Aztec is a privacy-first Ethereum Layer-2 with a 10.35 billion token supply and round-the-clock trading on Binance Futures.

Cryptocurrency exchange Binance announced plans to launch a perpetual futures contract for Aztec on its futures trading platform, according to an official company statement.

Binance to initiate new trading for Aztec

Binance Futures will initiate pre-market trading for the Aztec perpetual futures contract on February 11, 2026, at 07:30 UTC, the exchange stated. The contract will offer leverage of up to 5x during the pre-market period.

The underlying asset of the contract is Aztec, a privacy-focused Layer-2 solution built on the Ethereum blockchain, according to the announcement. The project aims to enable developers to build applications that protect user privacy. A dollar-pegged stablecoin will serve as the settlement unit for the contract.

The total and maximum supply of Aztec tokens stands at 10.35 billion, Binance reported. The contract’s tick size is set at 0.00001, with a minimum transaction amount of 1 Aztec token and a minimum notional value of 5 USD.

The mark price will be recalculated every second based on the average transaction prices over the preceding 10 seconds, the exchange stated. A two-tiered funding rate system will be implemented, with the funding rate capped at +0.005% during the pre-market period. Following the conclusion of pre-market trading, the funding rate limit will expand to a range of +2.00% to -2.00%. Funding fees will be settled every four hours.
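The pricing and funding rules described above can be sketched as follows. This is an illustration under stated assumptions, not the exchange's implementation: it uses a plain unweighted mean of trade prices (the announcement does not say whether trades are weighted), it applies the pre-market cap symmetrically (the announcement quotes only the +0.005% side), and the function names are hypothetical.

```python
def mark_price(trades, now, window=10.0):
    """Mean price of trades in the `window` seconds up to `now`.

    trades: iterable of (timestamp_seconds, price) pairs.
    Assumes an unweighted average; returns None if no trade is in the window.
    """
    recent = [price for ts, price in trades if now - window < ts <= now]
    if not recent:
        return None
    return sum(recent) / len(recent)


def clamp_funding(rate_pct, pre_market):
    """Clamp a funding rate (in percent) to the applicable band.

    The post-launch band of -2.00% to +2.00% is from the announcement;
    the symmetric pre-market floor of -0.005% is an assumption.
    """
    cap = 0.005 if pre_market else 2.00
    return max(-cap, min(cap, rate_pct))


trades = [(0.0, 1.0), (5.0, 2.0), (9.0, 3.0), (12.0, 4.0)]
print(mark_price(trades, now=12.0))           # averages the trades at t=5, 9, 12
print(clamp_funding(3.5, pre_market=False))   # limited by the post-launch band
print(clamp_funding(0.01, pre_market=True))   # limited by the pre-market cap
```

Recomputing the average every second over a short trailing window smooths out single outlier trades, which is the usual motivation for this kind of mark price.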

The Aztec perpetual contract will be available for round-the-clock trading on Binance Futures and will support Multi-Assets Mode, according to the announcement. The exchange cautioned users about potential high volatility in the new product and advised appropriate risk management.

BingX Rolls Out Copy Trading Plaza and Enhanced Lead Trader Homepage in Major Upgrade to Copy Trading Suite

PANAMA CITY, February 11, 2026 – BingX, a leading cryptocurrency exchange and Web3-AI company, announced the upcoming launch of an array of enhancements to its copy trading suite, including the all-new Copy Trading Plaza and an upgraded Lead Trader Homepage. This major overhaul refreshes BingX’s copy trading experience with smarter discovery and deeper data transparency, aimed at significantly increasing visibility and engagement across its copy trading ecosystem.

The new Copy Trading Plaza consolidates discovery, evaluation, and execution into a single, intuitive destination designed to help users identify top strategies faster and with greater confidence:

- Intelligent Discovery: Discover both real traders and AI-driven strategies tailored to your trading preference on the BingX mobile app

- Centralized Copy Trading Hub: One-stop access to curated trader lists and strategy insights to improve overall copy trading efficiency

- Professional-Grade Metrics: Select trading strategies with advanced ranking and filtering powered by professional risk and performance metrics

The revamped Lead Trader Homepage offers a variety of advancements:

- Multidimensional Data: A fully revamped personal page with multidimensional performance data, offering copiers greater transparency and Lead Traders more opportunities

- Enhanced Transparency: Deep dives into trading behavior, risk profile, and historical performance, allowing traders to implement new trading strategies with greater confidence

- New Set of Tools: Integrated tools to help Lead Traders build credibility, grow visibility, and manage copiers more effectively

As the first exchange to offer copy trading in Web3, BingX operates one of the industry’s largest and most active copy trading communities. To date, the platform has recorded over 1.3 billion cumulative copy trading orders and $580 billion in cumulative trading volume, underscoring its scale, liquidity, and long-standing user trust.

“This overhaul is a structural leap forward for copy trading on BingX,” said Vivien Lin, Chief Product Officer at BingX. “By unifying smarter discovery, professional-grade metrics, and enhanced trader profiles, we’re enabling users to make faster, better-informed decisions while empowering Lead Traders to build influence and long-term value.”

To celebrate the launch, BingX is rolling out a limited-time campaign: users who complete their first copy trade, or who apply to become a lead trader and place their first trade via the new homepage, by February 28 will be entered into a lucky draw, with a top prize of up to 9,999 USDT.

About BingX

Founded in 2018, BingX is a leading crypto exchange and Web3-AI company, serving over 40 million users worldwide. Ranked among the top five global crypto derivatives exchanges and a pioneer of crypto copy trading, BingX addresses the evolving needs of users across all experience levels.

Powered by a comprehensive suite of AI-driven products and services, including futures, spot, copy trading, and TradFi offerings, BingX empowers users with innovative tools designed to enhance performance, confidence, and efficiency.

BingX has been the principal partner of Chelsea FC since 2024, and became the first official crypto exchange partner of Scuderia Ferrari HP in 2026.

For media inquiries, please contact: media@bingx.com

For more information, please visit: https://bingx.com/

Disclaimer: This is a Press Release provided by a third party who is responsible for the content. Please conduct your own research before taking any action based on the content.

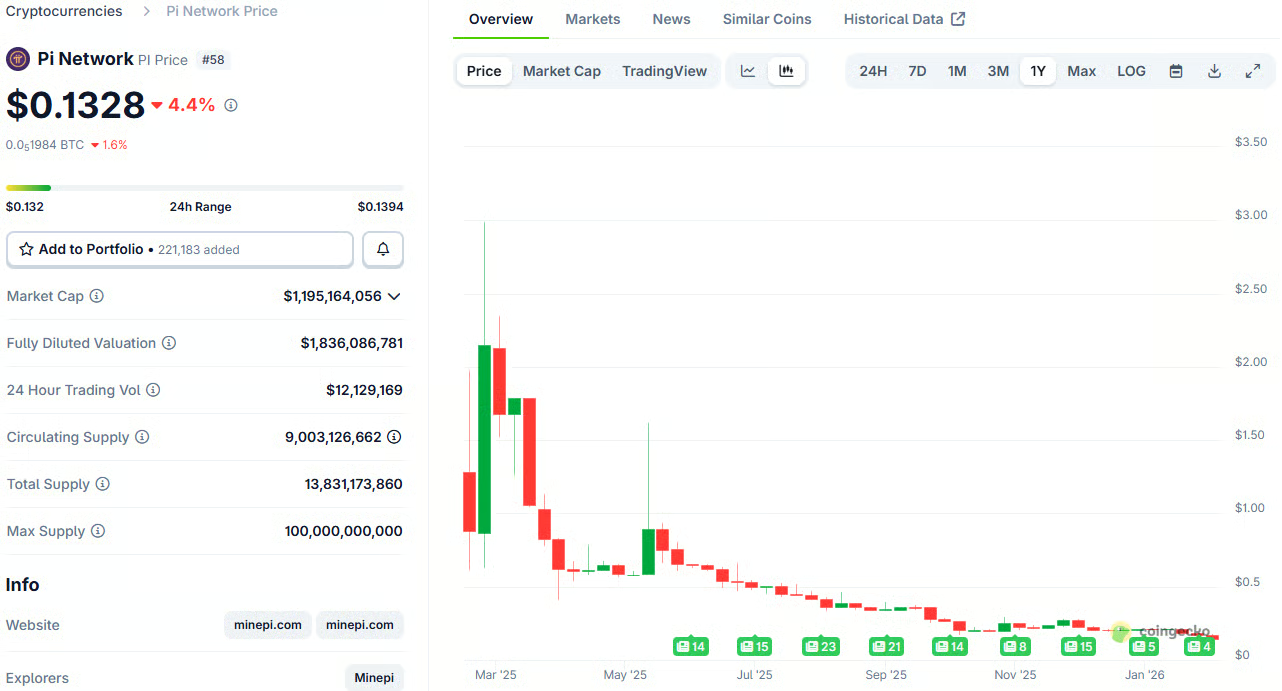

Pi Network’s Price Sees Another All-Time Low, But Next 3 Days Could Be Even Worse: Details

Here’s why PI could continue to chart big losses in the next few days.

The overall market-wide correction that took place in the past 12 hours or so has not been kind to many altcoins, but there’s one that stands out as perhaps the biggest victim of the brutal state of the industry.

Pi Network’s native token, which traded close to $3 less than a year ago, has been on a massive free-fall ride since then. The latest price crash from minutes ago meant a fresh all-time low of $0.132, according to data from CoinGecko. In fact, the chart below demonstrates a clear and painful pattern, showing a 95.6% decline in less than a year.

While this calamity is already bad enough, on-chain data suggests that it might not be the end of PI’s struggles.

PiScan is a website dedicated to increasing the project’s transparency, especially when it comes to the daily (and monthly) schedules for token unlocks. After all, a significant portion of PI has been locked, and investors are gradually receiving access to their holdings.

However, the next few days could intensify the selling pressure because the schedule does not show a “gradual” token unlock. On average, the number of coins to be released in the next month stands at just over 8.5 million, which is already a lot higher than the 4-5 million seen just a couple of months ago.

However, these numbers are significantly higher for February 12, 13, and 14. More precisely, 16.9 million tokens will be released on February 14, while the number for tomorrow will be 18.9 million. February 13, which, coincidentally (or not), is Friday the 13th, will be the record day, with 23.6 million PI unlocked.
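To put the three-day spike in context, the quoted unlock figures can be totalled and compared with the average. The snippet assumes the 8.5 million figure is a daily average and that "tomorrow" refers to February 12, which is inferred from the dates listed rather than stated outright.

```python
# Unlock figures in millions of PI, as quoted; the Feb 12 mapping is inferred.
unlocks = {"Feb 12": 18.9, "Feb 13": 23.6, "Feb 14": 16.9}
daily_average = 8.5  # millions of PI, assumed to be a per-day average

total = sum(unlocks.values())
ratio = total / (daily_average * len(unlocks))
peak_day = max(unlocks, key=unlocks.get)

print(f"Three-day total: {total:.1f}M PI")
print(f"Multiple of the average pace: {ratio:.2f}x")
print(f"Largest single day: {peak_day}")
```

On these assumptions, about 59.4 million PI unlock over the three days, more than twice the average pace for the same period.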

It’s worth noting that once these tokens are released, they will be free for trading. Although this doesn’t guarantee they will be sold off immediately, it certainly raises such concerns given the overall market state, rising FUD, and the latest criticism of Pi Network and its team.

STON.fi Brings Bitcoin and Ethereum to TON DeFi

STON.fi, one of the leading AMM protocols on The Open Network (TON), announced that TON-native representations of Bitcoin (BTC) and Ethereum (ETH) are now available within the ecosystem in a fully non-custodial DeFi format.

The integration gives TON users direct access to the two largest crypto assets, including the ability to swap them and provide liquidity, while maintaining full control over their funds.

BTC and ETH are represented on TON as wrapped assets issued in TON-native format, each fully backed 1:1 by the underlying tokens and managed through smart contracts. Ethereum is available as wrapped ETH (WETH), while Bitcoin is accessible via cbBTC, a Bitcoin-backed token issued by Coinbase and fully collateralized with BTC on a one-to-one basis. This structure allows both assets to be used across decentralized applications within the TON ecosystem without interacting directly with their native blockchains.

Through STON.fi, users can deploy WETH and cbBTC across TON DeFi, including swapping and providing liquidity via WETH/USDt and cbBTC/USDt pools. At the same time, Omniston, STON.fi’s liquidity aggregation protocol, enables swaps to WETH and cbBTC from any TON-native token, routing liquidity across the ecosystem. Applications integrated with Omniston instantly gain access to WETH and cbBTC liquidity, enabling swaps across hundreds of TON-based dApps without additional integrations and expanding the range of available DeFi strategies within the ecosystem.

“Bringing BTC and ETH into TON DeFi is about expanding real utility, not just asset coverage,” said Slavik Baranov, CEO of STON.fi Dev. “This launch enables users to actively use Bitcoin and Ethereum inside TON ecosystem rather than holding them passively. By making these assets usable in TON-native DeFi, we’re strengthening the overall depth of the ecosystem.”

As TON continues to develop as a blockchain closely integrated with Telegram — a messenger used daily by hundreds of millions of people — access to major crypto assets directly within Telegram-native and TON-based applications has become a natural part of the ecosystem’s evolution. Bitcoin and Ethereum sit at the core of the global crypto economy, and their availability on TON allows users to access these assets directly within the apps they already use, without leaving the ecosystem, through decentralized and permissionless infrastructure.

To learn more about how WETH and cbBTC integration works on STON.fi, users can visit: https://ston.fi/eth-ton and https://ston.fi/btc-ton.

About STON.fi

STON.fi is one of the leading non-custodial swap dApps and a suite of swap-enabling protocols within The Open Network (TON) ecosystem, known for its deep liquidity, wide token coverage, and dominance in total value locked (TVL) and trading volume. With over $6.8 billion in total trading volume and more than 31 million operations, STON.fi dominates the TON DeFi ecosystem in token coverage, liquidity depth, and active user participation. Backed by top investors such as CoinFund, Delphi Ventures, The Open Platform, Karatage, TON Ventures, and others, STON.fi continues to advance decentralized finance through open development and innovations such as Omniston, a decentralized liquidity aggregation protocol.

Arkham Intelligence said to be shutting trading platform as crypto bear market bites

Arkham Exchange, the cryptocurrency trading platform built by data analytics company Arkham Intelligence, is closing down, according to a person familiar with the matter.

Arkham, whose backers include OpenAI CEO Sam Altman, did not respond to requests for comment.

The company, which was founded in 2020 and now boasts over 3 million registered users, floated the idea of adding a crypto derivatives exchange back in October 2024. The plan was to compete with giants such as Binance for retail investors.

By early 2025, Arkham Exchange had added spot crypto trading in a number of U.S. states. But volumes appear to have been a challenge, despite the firm adding a mobile trading app in December.

Binance, the largest crypto exchange by volume, had almost $9 billion of daily trading, according to CoinGecko data. Coinbase (COIN), the No. 2, had $2 billion. Arkham recorded just under $620,000 in the past 24 hours.

In addition to Altman, Arkham’s backers include Draper Associates, Binance Labs and Bedrock.

Arkham hosts its own native crypto token, ARKM, which was trading at close to $0.12 at the time of writing.

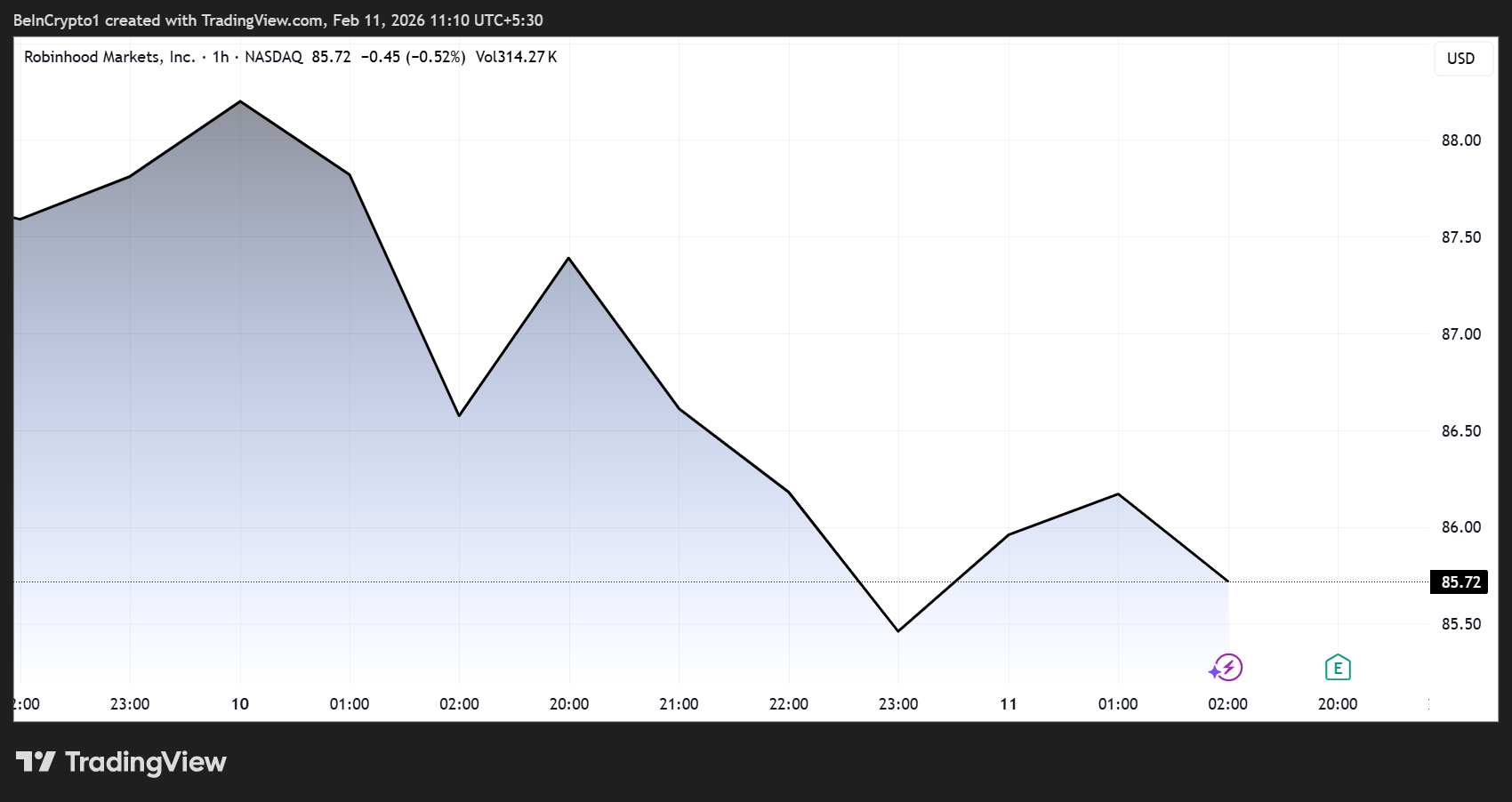

Why Everyone’s Talking About Robinhood Q4 2025 Earnings

Robinhood’s Q4 2025 earnings report triggered a sharp market reaction, with the company’s stock falling roughly 8% after revenue came in below expectations.

Yet the most striking takeaway from the call was not the drop in crypto trading revenue, but the growing prominence of prediction markets and automation as pillars of the platform’s future strategy.

Robinhood Earnings Show Prediction Markets Overtaking Crypto as Key Growth Driver

Nearly one-third of analyst questions during the earnings call focused on prediction markets, reflecting how quickly the sector is moving from experimental feature to potential core business line.

“30% of $HOOD Q&A (6 of 20 questions) concerned prediction markets, by far the #1 topic,” stated Matthew Sigel, Head of Digital Assets Research at VanEck.

According to Sigel, the attention reflects fast-paced growth across the industry, with volumes now above $10 billion per month (approximately $300–400 million per day), roughly comparable to the average daily US sports betting handle.

Revenue Miss and Crypto Slowdown

Robinhood reported Q4 net revenue of $1.28 billion, below expectations of about $1.35 billion. Transaction-based revenue and crypto trading also missed forecasts, with crypto revenue coming in at approximately $221 million versus expectations closer to $248 million.

Analysts see the market reaction as largely tied to high expectations and slowing growth in key metrics rather than structural weakness in the business.

Christian Bolu, senior analyst at Autonomous Research, described the results as disappointing on the surface but constructive in outlook.

“I would say look at an expensive stock, and you know a topline miss is not helpful at all,” Bolu said, noting that some key metrics, including deposit growth, also slowed.

However, he emphasized that the longer-term outlook remains positive:

“The commentary from the management team is pretty constructive in terms of the pipeline for 2026 in terms of new business growth, and actually, transaction volumes have been very strong in January as well. So, the outlook here is actually pretty decent.”

Prediction Markets Move to Center Stage

While crypto remains an important segment, analysts increasingly see prediction markets and event contracts becoming a larger share of the business over time.

“Over time, we think things like event contracts and prediction markets will be a bigger part of the business than crypto,” Bolu added in the interview with Yahoo Finance.

The opportunity is substantial. Despite rising competition from platforms like Kalshi and Polymarket, Robinhood’s distribution advantage could prove decisive.

“The good thing about Robinhood is their value prop from a business perspective is the distribution,” Bolu said. “There aren’t many folks that can distribute or have the distribution that they do.”

Regulation Remains the Key Constraint

Even as interest grows, regulatory uncertainty remains the biggest barrier to expansion. Sigel highlighted that the issue was directly addressed during the earnings call.

“Binary yes/no contracts … can fit under CFTC event contract authority… But contracts with continuous or formula-based payouts tied to a single issuer’s financial performance could be treated as SEC ‘security-based swaps’ under Dodd-Frank.”

However, the VanEck executive acknowledged that the lack of clarity is slowing progress:

“There’s no formal framework clarifying that boundary yet, which is why management referenced needing ‘regulatory relief.’”

AI Automation Quietly Reshaping the Business

Beyond new trading products, Robinhood is also transforming its internal operations through automation and artificial intelligence. Against this backdrop, Sigel shared one of the most striking disclosures from the call:

“AI support is really cranking. Now over 75% of our cases are solved by AI, including the complex cases that previously required licensed brokerage professionals.”

The company is also automating its engineering workflow, optimizing the entire engineering pipeline from code writing through code review to deployment and testing.

Reportedly, this is already turning into real savings and efficiency gains, estimated at over $100 million in 2025 alone.

These cost reductions could help offset cyclical revenue swings in areas like crypto and options trading.

A More Diversified Robinhood

Analysts say Robinhood today looks very different from the trading app that rose to prominence during earlier crypto and meme-stock cycles.

Bolu described the company as “a much more mature company a much more diversified company,” pointing to additional revenue streams:

- Growing net interest income

- Retirement accounts

- Banking products

- Credit cards

This diversification is one reason many analysts remain bullish despite short-term volatility. More than 80% of analysts still rate the stock a buy, according to market commentary following the results.

Robinhood’s latest earnings reinforced a key shift: crypto may no longer be the dominant narrative driving the platform.

Instead, the next phase of growth appears to be forming around prediction markets, options trading, subscriptions, and AI-driven efficiency. These segments could reduce reliance on highly cyclical crypto trading volumes.

If those trends continue, the earnings call may ultimately be remembered less for a revenue miss and more for revealing where the platform is heading next.

Tom Lee sees bitcoin rebound, ether bottoming below $1,800

HONG KONG — Thomas Lee, chief investment officer of Fundstrat and chairman of ether treasury firm BitMine Immersion (BMNR), said in a keynote speech at Consensus Hong Kong 2026 on Wednesday that investors should focus less on timing the exact low and start looking for entries.

“You should be thinking about opportunities here instead of selling,” Lee said.

BTC has suffered a 50% drawdown from its October record highs, its worst correction since 2022.

On Wednesday, bitcoin fell back below $67,000, giving up some of the bounce from last week’s crash lows. After managing a rapid reversal above $72,000 from $60,000 over the weekend, BTC was down 2.8% over the past 24 hours. Ethereum’s ether, meanwhile, slipped to $1,950, also around 3% lower.

‘Perfected bottom’

Lee attributed the recent weakness in crypto prices to the volatility in metals, which rippled across asset classes. In late January, gold’s market capitalization fluctuated by trillions of dollars in a single day, triggering margin calls and weighing on risk assets.

After bitcoin severely underperformed gold in 2025, he argued that the yellow metal likely has topped for this year and bitcoin is poised to outperform through 2026.

On ether, Lee said repeated 50% drawdowns since 2018 have often been followed by sharp rebounds.

Citing market technician Tom DeMark, he said ETH may need to briefly dip below $1,800 to form a “perfected bottom” before a more sustained recovery.

-

Politics3 days ago

Politics3 days agoWhy Israel is blocking foreign journalists from entering

-

NewsBeat2 days ago

NewsBeat2 days agoMia Brookes misses out on Winter Olympics medal in snowboard big air

-

Sports4 days ago

Sports4 days agoJD Vance booed as Team USA enters Winter Olympics opening ceremony

-

Business3 days ago

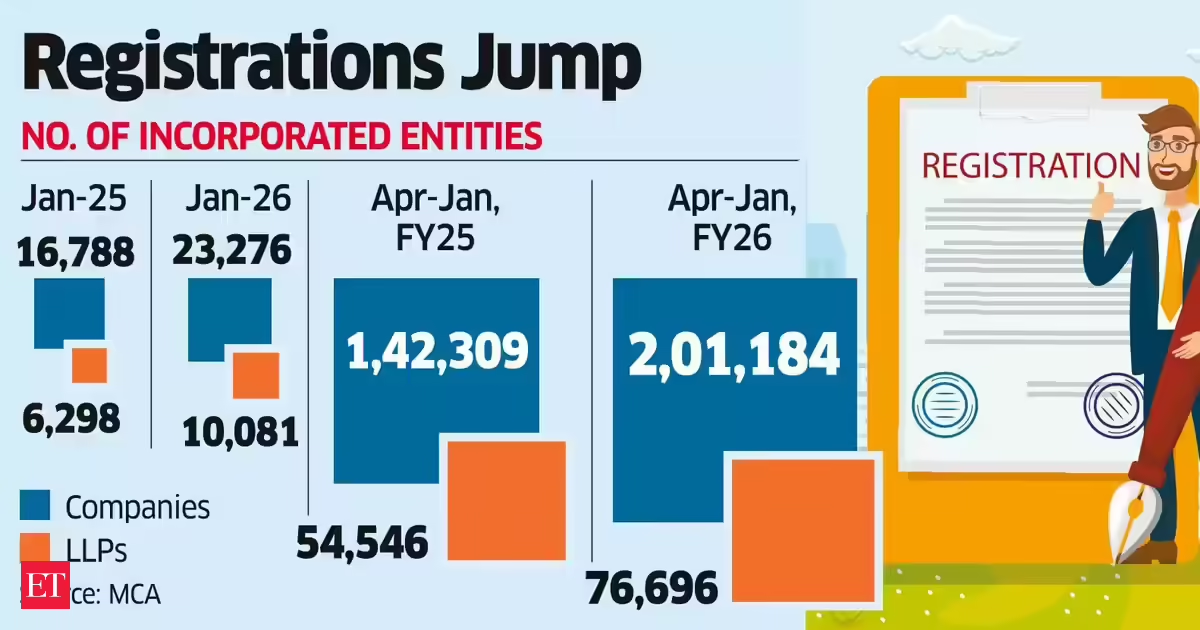

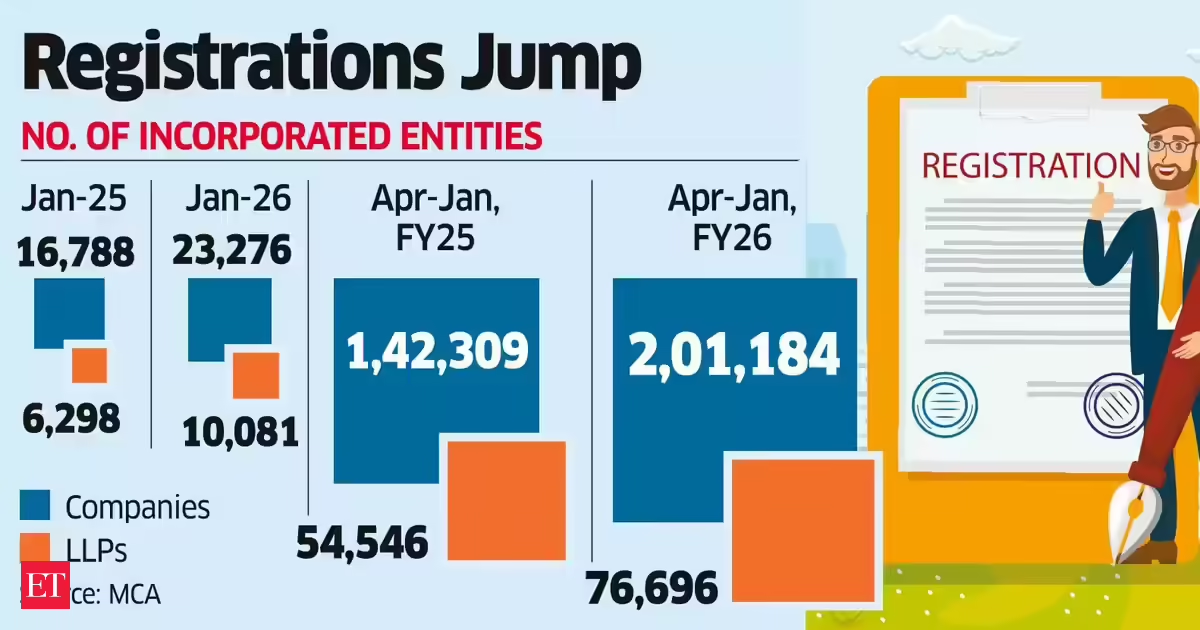

Business3 days agoLLP registrations cross 10,000 mark for first time in Jan

-

Tech5 days ago

Tech5 days agoFirst multi-coronavirus vaccine enters human testing, built on UW Medicine technology

-

Tech7 hours ago

Tech7 hours agoSpaceX’s mighty Starship rocket enters final testing for 12th flight

-

NewsBeat2 days ago

NewsBeat2 days agoWinter Olympics 2026: Team GB’s Mia Brookes through to snowboard big air final, and curling pair beat Italy

-

Sports2 days ago

Sports2 days agoBenjamin Karl strips clothes celebrating snowboard gold medal at Olympics

-

Politics3 days ago

Politics3 days agoThe Health Dangers Of Browning Your Food

-

Sports4 days ago

Former Viking Enters Hall of Fame

-

Sports5 days ago

New and Huge Defender Enter Vikings’ Mock Draft Orbit

-

Business3 days ago

Business3 days agoJulius Baer CEO calls for Swiss public register of rogue bankers to protect reputation

-

NewsBeat5 days ago

NewsBeat5 days agoSavannah Guthrie’s mother’s blood was found on porch of home, police confirm as search enters sixth day: Live

-

Business6 days ago

Business6 days agoQuiz enters administration for third time

-

Crypto World18 hours ago

Crypto World18 hours agoBlockchain.com wins UK registration nearly four years after abandoning FCA process

-

Crypto World1 day ago

Crypto World1 day agoU.S. BTC ETFs register back-to-back inflows for first time in a month

-

NewsBeat2 days ago

NewsBeat2 days agoResidents say city high street with ‘boarded up’ shops ‘could be better’

-

Sports2 days ago

Kirk Cousins Officially Enters the Vikings’ Offseason Puzzle

-

Crypto World1 day ago

Crypto World1 day agoEthereum Enters Capitulation Zone as MVRV Turns Negative: Bottom Near?

-

NewsBeat6 days ago

NewsBeat6 days agoStill time to enter Bolton News’ Best Hairdresser 2026 competition