OpenAI is bringing o1, its “reasoning” AI model, to its API — but only for certain developers, to start.

Starting Tuesday, o1 will begin rolling out to devs in OpenAI’s “tier 5” usage category, the company said. To qualify for tier 5, developers must have spent at least $1,000 with OpenAI, and more than 30 days must have passed since their first successful payment.

O1 replaces the o1-preview model that was already available in the API.

Unlike most AI, reasoning models like o1 effectively fact-check themselves, which helps them avoid some of the pitfalls that normally trip up models. As a drawback, they often take longer to arrive at solutions.

They’re also quite pricey — in part because they require a lot of computing resources to run. OpenAI charges $15 for every ~750,000 words o1 analyzes and $60 for every ~750,000 words the model generates. That’s 6x the cost of OpenAI’s latest “non-reasoning” model, GPT-4o.
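To put those prices in concrete terms, here is a rough back-of-the-envelope sketch that uses only the per-word figures quoted above. The function name and the sample word counts are hypothetical, and real bills depend on how OpenAI actually meters usage, so treat the result as an approximation.

```python
# Prices quoted in the article, in dollars per ~750,000 words.
WORDS_PER_PRICING_UNIT = 750_000
O1_INPUT_PRICE = 15.0   # words the model analyzes
O1_OUTPUT_PRICE = 60.0  # words the model generates


def estimate_o1_cost(words_in: int, words_out: int) -> float:
    """Return an approximate dollar cost for a single o1 request.

    Hypothetical helper: it scales the article's per-word prices
    linearly and ignores any other billing details.
    """
    input_cost = words_in / WORDS_PER_PRICING_UNIT * O1_INPUT_PRICE
    output_cost = words_out / WORDS_PER_PRICING_UNIT * O1_OUTPUT_PRICE
    return input_cost + output_cost


if __name__ == "__main__":
    # A 3,000-word prompt with a 1,500-word answer.
    print(f"${estimate_o1_cost(3_000, 1_500):.2f}")  # prints "$0.18"
```

At these rates, generated text dominates the bill: the 1,500-word answer in the example costs twice as much as the 3,000-word prompt.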

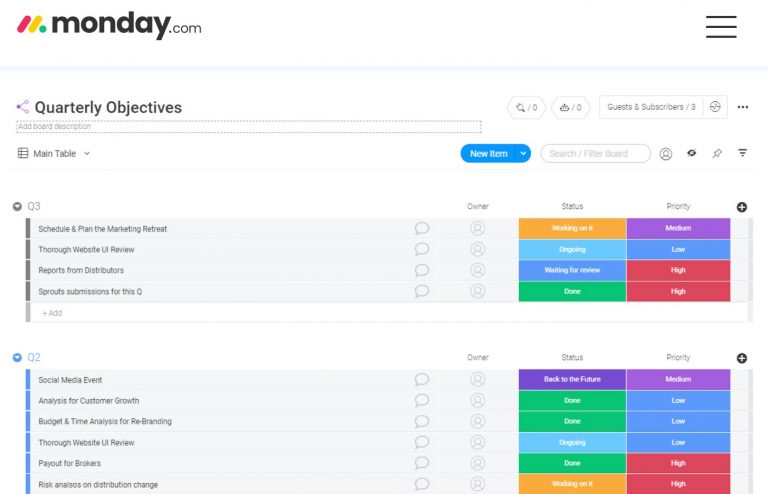

O1 in the OpenAI API is far more customizable than o1-preview, thanks to new features like function calling (which allows the model to be connected to external data), developer messages (which let devs instruct the model on tone and style), and image analysis. In addition to structured outputs, o1 also has an API parameter, “reasoning_effort,” that enables control over how long the model “thinks” before responding to a query.
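As an illustration of how a developer message and “reasoning_effort” might fit together, the sketch below assembles a request body without sending it. The helper name is hypothetical, and the set of accepted effort values (“low,” “medium,” “high”) and the exact payload shape are assumptions that should be checked against OpenAI’s API reference.

```python
import json

# Assumed set of accepted values; verify against OpenAI's documentation.
ALLOWED_EFFORT = {"low", "medium", "high"}


def build_o1_request(instructions: str, question: str,
                     reasoning_effort: str = "medium") -> dict:
    """Assemble a chat-style request body for o1 (hypothetical helper).

    The developer message carries tone and style instructions, and
    reasoning_effort hints at how long the model should "think."
    """
    if reasoning_effort not in ALLOWED_EFFORT:
        raise ValueError(f"unsupported reasoning_effort: {reasoning_effort!r}")
    return {
        "model": "o1-2024-12-17",
        "reasoning_effort": reasoning_effort,
        "messages": [
            {"role": "developer", "content": instructions},
            {"role": "user", "content": question},
        ],
    }


if __name__ == "__main__":
    payload = build_o1_request(
        "Answer briefly and in plain language.",
        "Why is the sky blue?",
        reasoning_effort="low",
    )
    print(json.dumps(payload, indent=2))
```

A lower effort setting would presumably trade some thoroughness for faster, cheaper responses, which matters given how much o1 costs per generated word.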

OpenAI said that the version of o1 in the API — and, soon, the company’s AI chatbot platform, ChatGPT — is a “new post-trained” version of o1. Compared to the o1 model released in ChatGPT two weeks ago, this one, “o1-2024-12-17,” improves on “areas of model behavior based on feedback,” OpenAI vaguely said.

“We are rolling out access incrementally while working to expand access to additional usage tiers and ramping up rate limits,” the company wrote in a blog post.

In a note on its website, OpenAI said that the newest o1 should provide “more comprehensive and accurate responses,” particularly for questions pertaining to programming and business, and is less likely to incorrectly refuse requests.

In other dev-related news Tuesday, OpenAI announced new versions of its GPT-4o and GPT-4o mini models as part of the Realtime API, OpenAI’s API for building apps with low-latency, AI-generated voice responses. The new models (“gpt-4o-realtime-preview-2024-12-17” and “gpt-4o-mini-realtime-preview-2024-12-17”), which boast improved data efficiency and reliability, are also cheaper to use, OpenAI said.

Speaking of the Realtime API (no pun intended), it remains in beta, but it’s gained several new capabilities, like concurrent out-of-band responses, which enable background tasks such as content moderation to run without interrupting interactions. The API also now supports WebRTC, the open standard for building real-time voice applications for browser-based clients, smartphones, and Internet of Things devices.

In what’s certainly no coincidence, OpenAI hired the creator of WebRTC, Justin Uberti, in early December.

“Our WebRTC integration is designed to enable smooth and responsive interactions in real-world conditions, even with variable network quality,” OpenAI wrote in the blog. “It handles audio encoding, streaming, noise suppression, and congestion control.”

In the last of its updates Tuesday, OpenAI brought preference fine-tuning to its fine-tuning API; preference fine-tuning compares pairs of a model’s responses to “teach” a model to distinguish between preferred and “nonpreferred” answers to questions. And the company launched an “early access” beta for official software development kits in Go and Java.
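To make the idea of response pairs concrete, here is a minimal sketch of what one preference training example could look like when serialized as a line of JSONL. The field names (“input,” “preferred_output,” “non_preferred_output”) and the helper functions are assumptions for illustration; OpenAI’s fine-tuning documentation is the authority on the required format.

```python
import json


def make_preference_record(prompt: str, preferred: str,
                           non_preferred: str) -> dict:
    """Pair one prompt with a preferred and a non-preferred answer.

    Hypothetical helper; the field names are assumed, not confirmed
    by the article.
    """
    return {
        "input": {"messages": [{"role": "user", "content": prompt}]},
        "preferred_output": [{"role": "assistant", "content": preferred}],
        "non_preferred_output": [
            {"role": "assistant", "content": non_preferred}
        ],
    }


def to_jsonl(records: list) -> str:
    """Serialize records as one JSON object per line."""
    return "\n".join(json.dumps(record) for record in records)


if __name__ == "__main__":
    record = make_preference_record(
        "Summarize WebRTC in one sentence.",
        "WebRTC is an open standard for real-time audio and video in apps.",
        "WebRTC is a thing for the web.",
    )
    print(to_jsonl([record]))
```

Each record gives the training process one comparison: the same prompt, one answer to favor, and one to steer away from.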
