During its latest Unpacked event, Samsung dished all the details on the Galaxy S25 lineup. The Galaxy S25 and S25 Plus start at $799.99 and $999.99, respectively, while the S25 Ultra runs a cool $1,299.99 in its entry-level configuration. You can preorder the phones ahead of their launch on February 7th, but before you do, you’re probably wondering what’s new.

Technology

Samsung Galaxy S25 vs. S25 Plus vs. S25 Ultra: specs comparison

The phones don’t look or feel much different, save for the slightly curvier Galaxy S25 Ultra. The Snapdragon 8 Elite is perhaps the S25 family’s most notable hardware upgrade: it’s up to 40 percent faster than the Snapdragon 8 Gen 3 chipset and comes with a new neural processing unit to support Samsung’s expanded Galaxy AI experience. The company introduced multimodal and generative AI improvements, after all, and the Galaxy S25 line will be among the first to usher in new Google Gemini features.

Our reviews are still forthcoming, and it’s much too early for us to determine whether any of these phones are actually worth upgrading for. But that doesn’t mean we can’t distill their differences to help you determine which device you’d rather buy. Keep reading for a full breakdown of all of the hardware and software changes, the unique traits of each Galaxy S25 device, and a closer look at their specs — plus their counterparts from last year.

Design

In terms of shape and size, it’s hard to tell the Galaxy S25 and S25 Plus from their last-gen counterparts. But the S25 Ultra looks a bit different than the S24 Ultra with its subtly rounded corners and flat edges, which are more visually aligned with the smaller phones. It’s the thinnest and lightest Ultra yet, even if only by a hair. And the Ultra-exclusive S Pen is back, albeit without gestures and the remote shutter feature.

Samsung says the aluminum frame on the Galaxy S25 and S25 Plus features at least one recycled component. Both sandwich their components between slabs of Corning’s Gorilla Glass Victus 2, but the Ultra uses a titanium frame and a display that’s protected by Corning Gorilla Armor 2. It’s a ceramic-infused material said to be stronger than typical tempered glass with antireflective and scratch-resistant properties. (The rear still uses Victus 2.)

Samsung also tweaked the design of the camera modules on all three phones, adding a thicker bordering hump with a bolder aesthetic. The S25 and S25 Plus come in several new color options, too, including an “icy” blue and a new mint green to help them stand apart, as well as navy and silver for a more traditional aesthetic. Three more colors will be available exclusively from Samsung.com: black, red, and rose gold.

The Ultra has its own set of titanium colors, including black, gray, and silverish hues of blue and white. If you order the Ultra from Samsung.com, you’ll also be able to choose from rose gold, black, and green.

Storage and RAM

The Samsung Galaxy S25 series is available with largely the same memory and storage options as the previous models, except all three models now start with 12GB of RAM. You can get the base Galaxy S25 with 128GB or 256GB of storage, while the Plus starts with 256GB of storage with a 512GB option. The Ultra, meanwhile, offers the same starting configurations as the Plus, along with a 1TB configuration.

Processor

All three Galaxy S25 phones use a Snapdragon 8 Elite chipset — no matter where in the world you’re purchasing from. The processor uses an Oryon CPU similar to the ones you’ll find in newer Qualcomm laptops.

The 3nm chip has two “prime” cores and six performance cores with a dedicated “Hexagon” neural processing unit that supports multimodal AI capabilities with 40 percent faster efficiency compared to the Snapdragon 8 Gen 3. The added headroom allows support for more on-device AI functions, including Generative Edit. Many of these features should generally work faster without the added overhead of server-side processing.

Overall, Samsung claims the Snapdragon 8 Elite offers 37 percent faster CPU performance and 30 percent faster GPU performance for gaming, at least compared to the Snapdragon 8 Gen 3 it’s replacing. That being said, we can’t yet discern how that translates in practice.

Display

The Dynamic AMOLED displays on the Galaxy S25 smartphones are largely unchanged compared to the previous generation. The base Galaxy S25 still has a 6.2-inch Full HD Plus display, while the 6.7-inch display on the Galaxy S25 Plus remains Quad HD Plus.

The S25 Ultra’s display is slightly larger than last year’s at 6.9 inches — a 0.1-inch increase to make up for the slight curve — with the same QHD Plus resolution. All three still support a maximum 120Hz variable refresh rate.

Cameras

The Galaxy S25 and S25 Plus have the same three rear cameras, including a 50-megapixel wide sensor, a 12-megapixel ultrawide option, and a 10-megapixel telephoto sensor. Meanwhile, the Galaxy S25 Ultra offers four total rear cameras, including a main 200-megapixel wide-angle camera, a new 50-megapixel ultrawide camera with macro mode (up from the S24 Ultra’s 12-megapixel), a 50-megapixel telephoto sensor with 5x optical zoom, and a 10-megapixel sensor for 3x zoom. All still use the same 12-megapixel front camera.

Recording options are largely similar across the board, with all three Galaxy S25 models supporting 8K resolution at up to 30 frames per second on their main wide-angle sensors and 4K at up to 60 frames per second for all cameras. However, the Galaxy S25 Ultra supports 4K at up to 120 frames per second.

Samsung now enables 10-bit HDR recording by default on all S25 phones, and they retain the Log color profile option for advanced color grading. The cameras picked up other software-enabled tricks, too, including the Audio Eraser feature first seen in Pixel phones. That feature lets you choose and isolate specific sounds — including voices, music, and wind — with the option to lower the rest or mute them entirely.

There’s also a new Virtual Aperture feature in Expert RAW, allowing you to adjust your footage’s depth of field after recording. There’s a new suite of filters inspired by iconic film looks, too.

Samsung says its new ProScaler feature on the Galaxy S25 Plus and Ultra offers 40 percent better upscaling compared to the Galaxy S24’s based on its signal-to-noise ratio. Since that feature requires QHD Plus resolution, you won’t find it on the base Galaxy S25.

Battery

Like the Galaxy S24 line, the Galaxy S25, S25 Plus, and S25 Ultra use 4,000mAh, 4,900mAh, and 5,000mAh batteries, respectively. That being said, Samsung says they offer the longest battery life of any Galaxy phones to date, largely thanks to hardware and software efficiency improvements.

Fast charging over USB-C returns in all three, of course, but they’re now also “Qi2 Ready.” That means there are no magnets embedded directly in the devices, unlike Apple’s latest handsets, but you will be able to obtain 15W wireless charging speeds when paired with Samsung’s magnetic Qi2 Ready cases. That should effectively enable you to use magnetic Qi2 chargers with Samsung Galaxy S25 devices.

Android 15, One UI 7, and Galaxy AI

The Galaxy S25’s launch is less about the hardware and more an opportunity to introduce One UI 7, Samsung’s AI-heavy take on Android 15. While there are several visual tweaks, the bigger change is in Galaxy AI’s expanded granularity and cohesiveness.

Both Samsung and Google are introducing new multimodal AI features with the Galaxy S25’s launch. Google Gemini Live will launch first on the Galaxy S25, for example, though it will eventually come to the Galaxy S24 and Google Pixel 9. It’s a full-fledged conversational AI companion that’s now the default assistant when long-pressing the home button. (Bixby is still available in its own app.)

Gemini Live supports natural language commands for generative tasks and on-device functions. You can feed it images and files to facilitate requests, and it can dive into multiple apps to help complete them.

You can also get more personalized daily summaries with Now Brief, which is accessible directly from the lockscreen’s new Now Bar (which feels similar to the iPhone’s Dynamic Island). You’ll also notice a redesigned AI Select menu (which you may remember as Smart Select), 20 supported languages for on-device translations, call transcriptions directly within the dialer, and more. Most of these changes should port to older Galaxy flagships, but we’re not yet sure whether all of them will.

By the numbers

No, you’re not experiencing deja vu — the Galaxy S25 smartphones feel largely familiar on paper, as our comparison chart below illustrates. Outside the processor bump, the hardware differences are pretty minor compared to Samsung’s last-gen phones.

The software changes are the most significant upgrades this year, but many of those features will come to older phones, too, thanks to the now-customary seven years of OS updates you’ll get when purchasing a flagship Galaxy phone. Check out the full specs below to see how exactly these devices compare.

Technology

The Royal Shakespeare Company is turning Macbeth into a neo-noir game

Macbeth, William Shakespeare’s iconic play, is being reimagined as an interactive video game with a neo-noir vibe — and it’s being developed in part by the Royal Shakespeare Company. The game, titled Lili, is a “screen life thriller video game” where you’ll have access to a modern-day Lady Macbeth’s personal devices, according to a press release.

“Players will be immersed in a stylized, neo-noir vision of modern Iran, where surveillance and authoritarianism are part of daily life,” the release says. “The gameplay will feature a blend of live-action cinema within an interactive game format, giving players the chance to immerse themselves in the world of Lady Macbeth and make choices that influence her destiny.” It sounds kind of like a version of Macbeth inspired by Sam Barlow’s interactive thrillers.

The Royal Shakespeare Company is making the game in collaboration with iNK Stories, a New York-based indie studio and publisher that also made 1979 Revolution: Black Friday. It stars Zar Amir as “Lady Macbeth (Lili),” per the press release.

Lili is set to release “later in 2025.”

Technology

Someone bought the domain ‘OGOpenAI’ and redirected it to a Chinese AI lab

A software engineer has bought the website “OGOpenAI.com” and redirected it to DeepSeek, a Chinese AI lab that’s been making waves in the open source AI world lately.

Software engineer Ananay Arora tells TechCrunch that he bought the domain name for “less than a Chipotle meal,” and that he plans to sell it for more.

The move was an apparent nod to how DeepSeek releases cutting-edge open AI models, just as OpenAI did in its early years. DeepSeek’s models can be used offline and for free by any developer with the necessary hardware, similar to older OpenAI models like Point-E and Jukebox.

DeepSeek caught the attention of AI enthusiasts last week when it released an open version of its DeepSeek-R1 model, which the company claims performs better than OpenAI’s o1 on certain benchmarks. Outside of models such as Whisper, OpenAI rarely releases its flagship AI in an “open” format these days, drawing criticism from some in the AI industry. In fact, OpenAI’s reluctance to release its most powerful models is cited in a lawsuit from Elon Musk, who claims that the startup isn’t staying true to its original nonprofit mission.

Arora says he was inspired by a now-deleted post on X from Perplexity’s CEO, Aravind Srinivas, comparing DeepSeek to OpenAI in its more “open” days. “I thought, hey, it would be cool to have [the] domain go to DeepSeek for fun,” Arora told TechCrunch via DM.

DeepSeek joins Alibaba’s Qwen in the list of Chinese AI labs releasing open alternatives to OpenAI’s models.

The American government has tried to curb China’s AI labs for years with chip export restrictions, but it may need to do more if the latest AI models coming out of the country are any indication.

Technology

Claude will eventually start speaking up during your chats

- Anthropic’s CEO has promised several updates to Claude, including one that gives it memory.

- This means the AI can remember things and bring them up in future conversations.

- The feature is slated to arrive in the coming months, and it remains to be seen how it performs.

The Claude AI chatbot will receive major upgrades in the months ahead, including the ability to listen and respond by voice alone. Anthropic CEO Dario Amodei explained the plans to the Wall Street Journal at the World Economic Forum in Davos, including the voice mode and an upcoming memory feature.

Essentially, Claude is about to get a personality boost, allowing it to talk back and remember who you are. The two-way voice mode promises to let users speak to Claude and hear it respond, creating a more natural, hands-free conversation. Whether this simply makes Claude more accessible or lets it mimic a human on the phone remains an open question, though.

Either way, Anthropic seems to be aiming for a hybrid between a traditional chatbot and voice assistants like Alexa or Siri, though presumably with all the benefits of its more advanced AI.

Claude’s upcoming memory feature will allow the chatbot to recall past interactions. For example, you could share your favorite book, and Claude will remember it the next time you chat. You could even discuss your passion for knitting sweaters and Claude will pick up the thread in your next conversation. While this memory function could lead to more personalized exchanges, it also raises questions about what happens when Claude mixes those memories with an occasional hallucination.

Claude demand

Still, there’s no lack of interest in what Claude can do. Amodei mentioned that Anthropic has been overwhelmed by the surge in demand for AI over the past year, explaining that the company’s compute capacity has been stretched to its limits in recent months.

Anthropic’s push for Claude’s upgrades is part of its effort to stay competitive in a market dominated by OpenAI and tech giants like Google. With OpenAI and Google integrating ChatGPT and Gemini into everything they can think of, Anthropic needs to find a way to stand out. By adding voice and memory to Claude’s repertoire, it hopes to position Claude as an alternative that might lure away fans of ChatGPT and Gemini.

A voice-enabled, memory-enhanced AI chatbot like Claude may also serve as a leader, or at least a competitor, in the trend toward making AI chatbots seem more human. The aim seems to be to blur the line between a tool and a companion. And if you want people to use Claude to that extent, a voice and a memory are going to be essential.

Technology

Google buys part of HTC’s XR business for $250 million

Google has agreed to acquire a part of HTC’s extended reality (XR) business for $250 million, expanding its push into virtual and augmented reality hardware following the recent launch of its Android XR platform.

The deal involves transferring some of the HTC VIVE engineering staff to Google and granting non-exclusive intellectual property rights, according to the Taiwanese firm. HTC will retain rights to use and develop the technology.

The transaction marks the second major deal between the companies after Google’s $1.1 billion purchase of HTC’s smartphone unit in 2017.

Google said the acquisition will accelerate Android XR platform development across headsets and glasses. The move comes as tech giants race to establish dominance in XR technology, with Apple and Meta maintaining their lead in the virtual reality market.

Google and HTC will explore additional collaboration opportunities, they said.

Technology

Canon’s new RF 16-28mm F2.8 wide-angle zoom lens impressed me, but I’m less convinced we need it

- It’s an ultra-wide zoom lens designed for full-frame cameras like the Canon EOS R8

- Practically identical design to the RF 28-70mm F2.8 IS STM

- A £1,249 list price – we’ll confirm US and Australia pricing asap

Canon has unveiled its latest ultra-wide angle zoom lens for its full-frame mirrorless cameras, the RF 16-28mm F2.8 IS STM, and I got a proper feel for it during a hands-on session hosted by Canon ahead of its launch.

It features a bright maximum F2.8 aperture across its entire 16-28mm range, and is a much more compact and affordable option for enthusiasts than Canon’s pro RF 15-35mm F2.8L IS USM lens. Consider the 16-28mm a sensible match for Canon’s beginner and mid-range full-frame cameras instead, such as the EOS R8.

Design-wise, the 16-28mm is a perfect match with the RF 28-70mm F2.8 IS STM lens – the pair share the same control layout and are almost identical in size, even if the 28-70mm lens is around 10 percent heavier.

The new lens is seemingly part of a move by Canon to deliver more accessible fast aperture zooms that fit better with Canon’s smaller mirrorless bodies – the 16-28mm weighs just 15.7oz / 445g and costs £1,249 – that’s much less than the comparable pro L-series lens.

The right fit for enthusiasts

Despite its lower price tag, the 16-28mm still feels reassuringly solid – the rugged lens is made in Japan and features a secure metal lens mount. You get a customizable control ring, autofocus / manual focus switch plus an optical stabilizer switch, and that’s the extent of the external controls.

When paired with a Canon camera that features in-body image stabilization, such as the EOS R6 Mark II, you get up to 8 stops of stabilization. The cheaper EOS R8 isn’t blessed with that feature, so with that camera you rely on the lens alone, which offers 5.5 stops of stabilization.
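To put those stabilization figures in perspective, each stop doubles the exposure time you can hold by hand. The short Python sketch below is only an illustration, not something Canon supplied: it assumes the common 1/focal-length rule of thumb for the unstabilized baseline, and the function name is hypothetical.

```python
def handheld_exposure_seconds(focal_length_mm: float, stops: float) -> float:
    """Estimate the slowest hand-holdable exposure time, in seconds.

    Assumption (not from the article): the unstabilized baseline follows
    the 1/focal-length rule of thumb, and each stop doubles that time.
    """
    baseline = 1.0 / focal_length_mm  # e.g. 1/16 s at 16mm
    return baseline * (2.0 ** stops)


# Compare the lens-only rating with the combined lens + in-body rating.
for stops in (5.5, 8.0):
    gain = 2.0 ** stops
    seconds = handheld_exposure_seconds(16, stops)
    print(f"{stops} stops: {gain:.0f}x longer exposure, roughly {seconds:.1f} s at 16mm")
```

On paper, 8 stops works out to a 256x longer exposure than the unstabilized baseline. Treat these numbers as best-case ratings, since real-world results depend on technique and subject movement.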

I tested the 16-28mm lens with an EOS R8 and the pair is a perfect match, as is the EOS R6 Mark II which is only a little bit bigger.

I didn’t get too many opportunities to take pictures with the new lens during my brief hands-on, but I have taken enough sample images captured in raw and JPEG format to get a good enough idea of the lens’ optical qualities and deficiencies.

For example, at the extreme wide angle 16mm setting and with the lens aperture wide open at F2.8, raw files demonstrate severe curvilinear distortion and vignetting. Look at the corresponding JPEG, which was captured simultaneously, and you can see just how much lens correction is being applied to get you clean JPEGs out of the camera.

Those lens distortions really are quite severe, but when you look at the JPEG output, all is forgiven – even with such heavy processing taking place to correct curvilinear distortion and vignetting, detail is consistently sharp from the center to the very edges and corners of the frame, while light fall off in the corners is mostly dealt with.

I’ll go out on a limb and suggest the target audience for this lens will be less concerned with these lens distortions, so long as it’s possible to get the end results you like, and my first impressions are that you can certainly do that – I’ve grabbed some sharp selfies and urban landscapes, with decent control over depth of field, plus enjoyed the extra wide perspective that makes vlogging a whole lot easier.

A worthy addition to the Canon RF-mount family?

I expect most photographers and filmmakers will mostly use the extreme ends of the 16-28mm lens’ zoom range: 16mm and 28mm. The former is particularly handy for video work thanks to its ultra-wide perspective, while the range as a whole is versatile for landscape and architecture photography.

That zoom range is hardly extensive, however, and I’m not sure if it’s a lens that particularly excites me, even if it does make a sensible pairing with the RF 28-70mm F2.8 for enthusiasts.

It is much cheaper than a comparable L-series lens, but I’d hardly call a £1,249 lens cheap. Also, why not just pick up the RF 16mm F2.8 STM and the RF 28mm F2.8 prime lenses instead? These are Canon’s smallest lenses for full-frame cameras and the pair combined costs half the price of the 16-28mm F2.8.

As capable as the 16-28mm appears to be on my first impressions – it’s a super sharp lens with a versatile maximum aperture – I’m simply not convinced that it brings much extra to the RF-mount table, or that there’s enough of a case for it for most people.

Technology

Scale AI is facing a third worker lawsuit in about a month

Scale AI is facing its third lawsuit over alleged labor practices in just over a month, this time from workers claiming they suffered psychological trauma from reviewing disturbing content without adequate safeguards.

Scale, which was valued at $13.8 billion last year, relies on workers it categorizes as contractors to do tasks like rating AI model responses.

Earlier this month, a former worker sued alleging she was effectively paid below the minimum wage and misclassified as a contractor. A complaint alleging similar issues was also filed in December 2024.

This latest complaint, filed January 17 in the Northern District of California, is a class action complaint that focuses on the psychological harms allegedly suffered by six people who worked on Scale’s platform Outlier.

The plaintiffs claim they were forced to write disturbing prompts about violence and abuse — including child abuse — without proper psychological support, suffering retaliation when they sought mental health counsel. They say they were misled about the job’s nature during hiring and ended up with mental health issues like PTSD due to their work. They are seeking the creation of a medical monitoring program along with new safety standards, plus unspecified damages and attorney fees.

One of the plaintiffs, Steve McKinney, is the lead plaintiff in that separate December 2024 complaint against Scale. The same law firm, Clarkson Law Firm of Malibu, California, is representing plaintiffs in both complaints.

Clarkson Law Firm previously filed a class action suit against OpenAI and Microsoft over allegedly using stolen data — a suit that was dismissed after being criticized by a district judge for its length and content. Referencing that case, Joe Osborne, a spokesperson for Scale AI, criticized Clarkson Law Firm and said Scale plans “to defend ourselves vigorously.”

“Clarkson Law Firm has previously — and unsuccessfully — gone after innovative tech companies with legal claims that were summarily dismissed in court. A federal court judge found that one of their previous complaints was ‘needlessly long’ and contained ‘largely irrelevant, distracting, or redundant information,’” Osborne told TechCrunch.

Osborne said that Scale complies with all laws and regulations and has “numerous safeguards in place” to protect its contributors, such as the ability to opt out at any time, advance notice of sensitive content, and access to health and wellness programs. Osborne added that Scale does not take on projects that may include child sexual abuse material.

In response, Glenn Danas, partner at Clarkson Law Firm, told TechCrunch that Scale AI has been “forcing workers to view gruesome and violent content to train these AI models” and has failed to ensure a safe workplace.

“We must hold these big tech companies like Scale AI accountable or workers will continue to be exploited to train this unregulated technology for profit,” Danas said.

Technology

Samsung’s clever new ‘Circle to Search’ trick could help you figure out that song that is stuck in your head

- A new ‘Circle to Search’ trick is available on Samsung’s latest Galaxy phones

- It lets you sing or hum songs that you want to identify

- The feature worked well in our early hands-on demos

Sure, Shazam and the Google Assistant, or even Gemini, can help you identify a song that’s playing in a coffee shop or while you’re out and about. But what about that tune you have stuck in your head that you’re desperate to put a name to?

Suffice it to say, that’s not a problem I have for anything by Springsteen, but it does happen for other songs, and Samsung’s latest and greatest – the Galaxy S25, S25 Plus, and S25 Ultra – might just be able to cure this. It’s courtesy of the latest expansion of Google’s Circle to Search on devices.

Circle to Search launched on the Galaxy S24 last year and then expanded to other devices like Google’s own family of Pixel phones. With it, you can long-press at the bottom of the screen and then circle something to figure out what it is or find out more.

For instance, it could be a fun hat within a TikTok or Instagram Reel video, a snazzy button down, or even more info on a concert happening or a location like San Jose – where Samsung’s Galaxy Unpacked took place.

Circle to Search for songs

Now, though, when you long-press the home button – or engage the assistant in another way – you’ll see a music note icon.

From there, you can just start singing, and Google will tell you it is listening. My colleague, TechRadar’s Editor-at-Large Lance Ulanoff, and I then hummed two tracks: “Hot To Go” by Chappell Roan, which the Galaxy S25 Ultra took more than one try to identify properly, and “Fly Me To The Moon” (a classic), which it got on its first try.

While Lance did have to hum a good bit, it did in fact figure out what that song inside our head was, and this could make the latest facet of Circle to Search a pretty handy function. It will, of course, also do the job of Shazam and listen to whatever is playing when you select it via the microphone built into your device as well.

Further, you can use it to circle a video on screen and figure out what was playing – in our hands-on, it was able to do this for a TikTok. That ultimately doesn’t seem quite as helpful given a video on TikTok – or an Instagram Reel – will note the audio it is using. But this could be particularly useful for a long YouTube video that uses a variety of background music or if you’re streaming a title and can’t figure out the song.

Google’s latest tool expansion for Circle to Search will be available from day one on the Galaxy S25, Galaxy S25 Plus, and Galaxy S25 Ultra, but it’s worth pointing out that the search giant – turned AI giant – has been teasing this feature for a bit, and some even found it hiding in existing code. After our demo of it on the S25 Ultra, we had a hunch it would arrive elsewhere and it should be arriving on other devices with Circle to Search.

As for when it will arrive on the Galaxy S24, Z Flip 6, or Z Fold 6, that remains to be seen, and we have the same question about Samsung’s other new Galaxy AI features. And if you’re keen to learn more about the Galaxy S25 family, check out our hands-on and our Galaxy S25 live blog for the event.

Technology

Raymond Tonsing’s Caffeinated Capital seeks $400M for fifth fund

Caffeinated Capital, a San Francisco venture firm started by solo capitalist Raymond Tonsing, is raising a fifth fund of $400 million, according to a regulatory filing.

The firm, an early investor in software company Airtable and defense startup Saronic, has already raised $160 million toward the fund. If Caffeinated hits its target, it will be the 15-year-old firm’s largest capital haul to date. Although the outfit didn’t announce its previous fund, PitchBook data estimates that Caffeinated closed its fourth fund with a total of $209 million in commitments.

Although Tonsing was Caffeinated’s only general partner until four years ago, Varun Gupta, who led data science and machine learning at Affirm, joined him as a second general partner in 2020.

Tonsing was an early investor in Affirm, a buy-now-pay-later platform that went public in 2021. The firm’s other notable exits include A/B testing startup Optimizely, which PitchBook estimates was sold for $600 million in 2020.

Technology

NYT Strands today — my hints, answers and spangram for Thursday, January 23 (game #326)

Strands is the NYT’s latest word game after the likes of Wordle, Spelling Bee and Connections – and it’s great fun. It can be difficult, though, so read on for my Strands hints.

Want more word-based fun? Then check out my NYT Connections today and Quordle today pages for hints and answers for those games, and Marc’s Wordle today page for the original viral word game.

SPOILER WARNING: Information about NYT Strands today is below, so don’t read on if you don’t want to know the answers.

NYT Strands today (game #326) – hint #1 – today’s theme

What is the theme of today’s NYT Strands?

• Today’s NYT Strands theme is… Udderly delicious

NYT Strands today (game #326) – hint #2 – clue words

Play any of these words to unlock the in-game hints system.

- STALE

- BURY

- START

- SIDE

- CRUD

- BUTCH

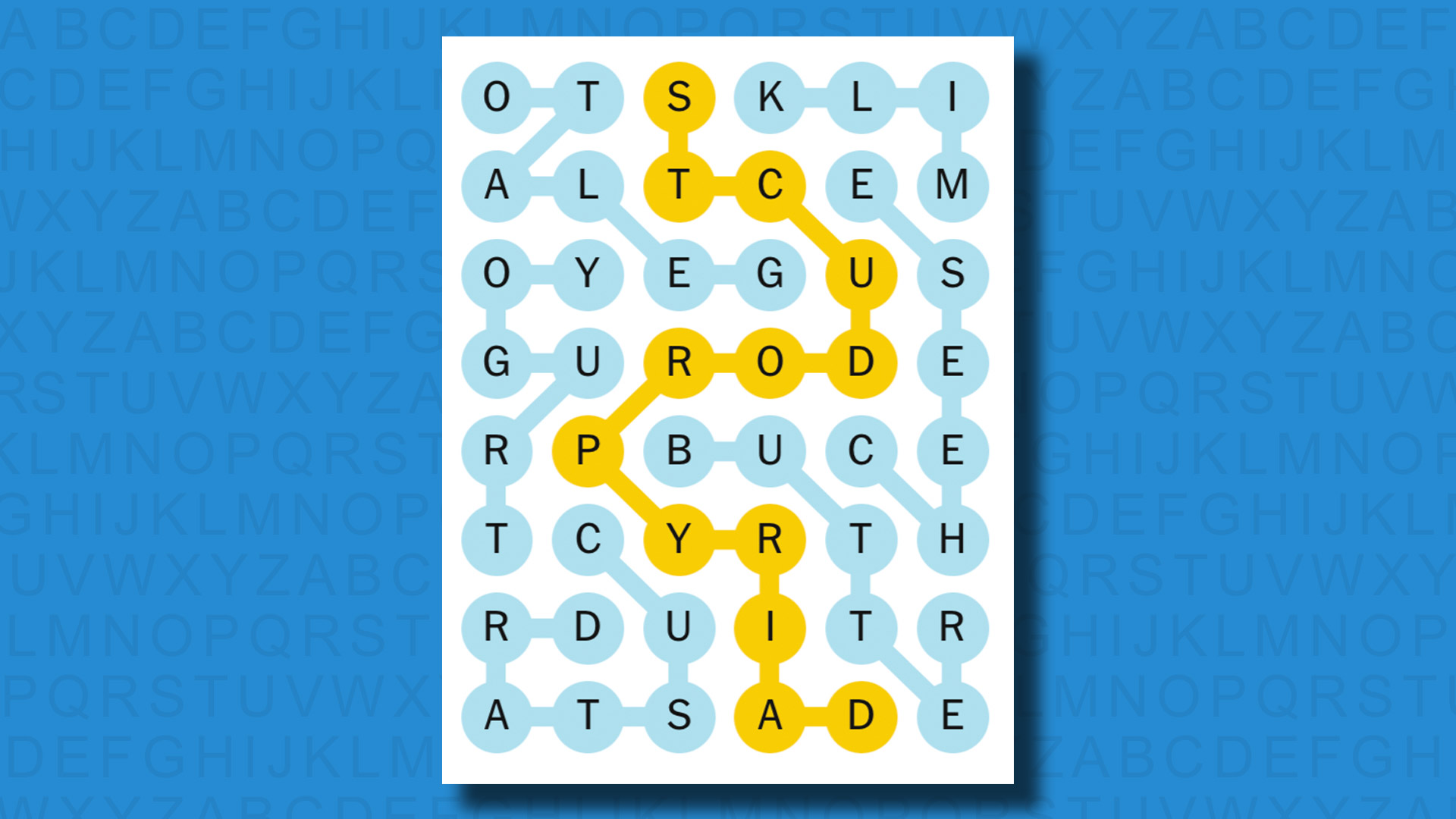

NYT Strands today (game #326) – hint #3 – spangram

What is a hint for today’s spangram?

• Cow classics

NYT Strands today (game #326) – hint #4 – spangram position

What are two sides of the board that today’s spangram touches?

First side: bottom, 5th column

Last side: top, 3rd column

Right, the answers are below, so DO NOT SCROLL ANY FURTHER IF YOU DON’T WANT TO SEE THEM.

NYT Strands today (game #326) – the answers

The answers to today’s Strands, game #326, are…

- MILK

- BUTTER

- CHEESE

- GELATO

- CUSTARD

- YOGURT

- SPANGRAM: DAIRY PRODUCTS

- My rating: Easy

- My score: Perfect

Being lactose intolerant and also, despite this condition, a turophile, I found today’s Strands enjoyable but, much like my beloved cheese, hard to stomach.

I put my diminutive stature down to a dislike of creamy creations, as height and milk protein have been shown to be linked. Researchers have cited an obsession with DAIRY PRODUCTS as the reason why people from the Netherlands are better at reaching things on high shelves than any other nation in the world. In a year the average Dutch person consumes over 25% more CHEESE and other milk-based products than their American or British counterparts and this has resulted in a growth spurt over the past century, taking the Dutch from the shortest people in Europe to the tallest – the average Dutchman is more than 6ft tall and the average Dutch woman about 5ft 7in.

Anyway, a lovely easy Strands with a tasty subject matter.

Yesterday’s NYT Strands answers (Wednesday, 22 January, game #325)

- BRAVE

- CARS

- SOUL

- ONWARD

- ELEMENTAL

- RATATOUILLE

- SPANGRAM: ANIMATION

What is NYT Strands?

Strands is the NYT’s new word game, following Wordle and Connections. It’s now out of beta so is a fully fledged member of the NYT’s games stable and can be played on the NYT Games site on desktop or mobile.

I’ve got a full guide to how to play NYT Strands, complete with tips for solving it, so check that out if you’re struggling to beat it each day.

Technology

A Samsung integration helps make Google’s Gemini the AI assistant to beat

One of the most important changes in Samsung’s new phones is a simple one: when you long-press the side button on your phone, instead of activating Samsung’s own Bixby assistant by default, you’ll get Google Gemini.

This is probably a good thing. Bixby was never a very good virtual assistant — Samsung originally built it primarily as a way to more simply navigate device settings, not to get information from the internet. It has gotten better since and can now do standard assistant things like performing visual searches and setting timers, but it never managed to catch up to the likes of Alexa, Google Assistant, and now, even Siri. So, if you’re a Samsung user, this is good news! Your assistant is probably better now. (And if, for some unknown reason, you really do truly love Bixby, don’t worry: there’s still an app.)

The switch to Gemini is an even bigger deal for Google. Google was caught off guard a couple of years ago when ChatGPT launched but has caught up in a big way. According to recent reporting from The Wall Street Journal, CEO Sundar Pichai now believes Gemini has surpassed ChatGPT, and he wants Gemini to have 500 million users by the end of this year. It might just get there one Samsung phone at a time.

Gemini is now a front-and-center feature on the world’s most popular Android phones, and millions upon millions of people will likely start to use it more — or use it at all — now that it’s so accessible. For Google, which is essentially betting that Gemini is the future of every single one of its products, that brings a hugely important new set of users and interactions. All that data makes Gemini better, which makes it more useful, which makes it more popular. Which makes it better again.

Google appears to be well ahead of its competitors in one important way: Gemini is the most capable virtual assistant on the market right now, and it’s not particularly close. It’s not that Gemini is specifically great; it’s just that it has more access to more information and more users than anyone else. This race is still in its early stages, and no AI product is very good yet, but Google knows better than anyone that if you can be everywhere, you can get good really fast. That worked so well with search that it got Google into antitrust trouble. This time, at least so far, it seems like Google’s going to have an even easier time taking over the market.

For years, there were three meaningful players in the virtual assistant space. Amazon’s Alexa, Google’s Assistant, and Apple’s Siri all offered similar features and were similarly accessible through speakers and phones and wearables. But now? The much-hyped, AI-first “Remarkable Alexa” is, by all accounts, massively delayed and massively underpowered. The latest versions of Siri shipped with a wackier animation and seemingly no new smarts or capabilities.

There are other ascendant AI assistants, of course. ChatGPT, Claude, Grok, and Copilot all have strong underlying models, and some share the same multimodal capabilities as Gemini. There are lots of good reasons to pick them or even something like Perplexity over Gemini. But they’re missing the most important thing: distribution. They’re apps you have to download, log in to, and open every time. Gemini is a button you can press — and that’s a big difference. There’s a reason OpenAI is reportedly working on everything from a web browser to a Jony Ive-designed ChatGPT gadget: the built-in options usually win.

The built-in options are also the ones that tend to have the best integration across the platform, which might be the whole ball game. Gemini can already change settings on your phone and, with new upgrades, can even do things across apps — grabbing information from your email and dumping it into a text message draft, just to name one example. Because of the way iOS and Android are architected, no other assistant has this kind of access — and again, there’s no indication that Siri’s ever going to be as good as it needs to be. If the future of assistants is this kind of agentic, using-your-apps-for-you behavior, Google’s inherent advantage might be insurmountable.

Meanwhile, Google is practically spoiled for places to put Gemini. The company recently announced that all paying Workspace customers will get Gemini access. You can access Gemini with one click from your Gmail inbox or summon it with one keystroke in Docs. And the underlying tech is even more pervasive. You can use Gemini to find stuff on YouTube and in Drive, and practically every time you search, a Gemini-powered AI Overview appears at the top of your results. “Today, all seven of our products and platforms with more than two billion monthly users use Gemini models,” Pichai said on Google’s earnings call last fall. (Fun fact: the word “gemini” appears 29 times in that earnings call transcript, only three fewer than “search.”)

When it comes to how people actually encounter and interact with these models, though, the phone is still the AI device of choice. And that’s where Google has maybe its largest advantage. “Gemini’s deep integration is improving Android,” Pichai said on that earnings call. “For example, Gemini Live lets you have free-flowing conversations with Gemini; people love it.” For now, smartphones are the most compelling AI devices, and Google can integrate its systems unlike any other. Apple, scrambling to play catch-up with the iPhone, had to launch an awkward handoff with ChatGPT just so Siri could answer more questions.

All of these assistants, including Gemini, still have lots of limitations. They lie; they misunderstand; they lack the necessary integrations to do even some of the basic things Alexa and Assistant have been able to do for years. The Gemini models still occasionally do ridiculous, deal-breaking things like tell people to eat rocks and generate diverse founding fathers. But if you believe the AI era is coming, or is maybe even here, then there is nothing more important right now than getting your AI platform in front of users. People are developing new habits, learning new systems, developing new relationships with their virtual assistants. The more entrenched we become, the less likely we will be to dump our AI friend for another one.

ChatGPT had the first-mover advantage and captured the world’s imagination by showing just how compelling an AI chatbot could be. But Google has the distribution. It can put its sparkly icon in front of practically the entire population of the internet every single day, across a huge range of products, and get the kind of data and feedback it needs to eventually do this well. Even as it fights in court over how powerful its default status made it in search, Google is executing the same playbook with AI. And it’s working again.
