Crypto World

How to Reduce Non-Determinism and Hallucinations in Large Language Models (LLMs)

In recent months, two separate pieces of research have shed light on two of the most pressing issues in large language models (LLMs): their non-deterministic nature and their tendency to hallucinate. Both phenomena have a direct impact on the reliability, reproducibility, and practical usefulness of these technologies.

On the one hand, Thinking Machines, led by former OpenAI CTO Mira Murati, has published a paper proposing ways to make LLMs return the exact same answer to the exact same prompt every time, effectively defeating non-determinism. On the other hand, OpenAI has released research identifying the root cause of hallucinations and suggesting how they could be significantly reduced.

Let’s break down both findings and why they matter for the future of AI.

The problem of non-determinism in LLMs

Anyone who has used ChatGPT, Claude, or Gemini will have noticed that when you type in the exact same question multiple times, you don’t always get the same response. This is what’s known as non-determinism: the same input does not consistently lead to the same output.

In some areas, such as creative writing, this variability can actually be a feature; it helps generate fresh ideas. But in domains where consistency, auditability, and reproducibility are critical, such as healthcare, education, or scientific research, it becomes a serious limitation.

Why does non-determinism happen?

The most common explanation so far has been a mix of two technical issues:

- Floating-point numbers: computer systems round decimal numbers, which can introduce tiny variations.

- Concurrent execution on GPUs: calculations are performed in parallel, and the order in which they finish can vary, changing the result.

However, Thinking Machines argues that this doesn’t tell the whole story. According to their research, the real culprit is batch size.

When a model processes multiple prompts at once, it groups them into batches (or “carpools”). If the system is busy, the batch is large; if it’s quiet, the batch is small. These variations in batch size subtly change the order of operations inside the model, which can ultimately influence which word is predicted next. In other words, tiny shifts in the order of addition can completely alter the final response.
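The sensitivity to the order of addition is easy to reproduce with ordinary floating-point numbers. The snippet below is a minimal illustration in plain Python, not the Thinking Machines experiment: it adds the same three values under two different groupings and compares the results.

```python
# Floating-point addition is not associative: regrouping the same
# operands can change the rounded result.
a, b, c = 0.1, 0.2, 0.3

left_grouped = (a + b) + c    # add the first two operands first
right_grouped = a + (b + c)   # add the last two operands first

# The two sums differ in the last digit, so the comparison is False.
print(left_grouped == right_grouped)
print(left_grouped, right_grouped)
```

In a model, billions of such additions feed into the scores for each candidate token, so a last-digit difference can occasionally be enough to change which token ranks first.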

Thinking Machines’ solution

The key, they suggest, is to keep internal processes consistent regardless of batch size. Their paper outlines three core fixes:

- Batch-invariant kernels: ensure operations are processed in the same order, even at the cost of some speed.

- Consistent mixing: use one stable method of combining operations, independent of workload.

- Ordered attention: slice input text uniformly so the attention mechanism processes sequences in the same order each time.
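The idea behind a batch-invariant kernel can be sketched in a few lines of plain Python. This is a toy model under stated assumptions, not the code from the paper: the function names and the rule "one request means chunks of 2, otherwise chunks of 4" are hypothetical stand-ins for a real GPU kernel that picks its reduction strategy based on load.

```python
def reduce_in_chunks(row, chunk_size):
    """Sum a row by adding up fixed-size chunks, then adding the partial sums."""
    partials = []
    for start in range(0, len(row), chunk_size):
        acc = 0.0
        for x in row[start:start + chunk_size]:
            acc += x
        partials.append(acc)
    total = 0.0
    for p in partials:
        total += p
    return total


def batch_dependent_reduce(batch):
    # Hypothetical heuristic: the chunking changes with the batch size,
    # so the order of additions for a given row depends on server load.
    chunk_size = 2 if len(batch) == 1 else 4
    return [reduce_in_chunks(row, chunk_size) for row in batch]


def batch_invariant_reduce(batch, chunk_size=4):
    # Same chunking for every batch size: each row is always reduced
    # in the same order, whatever else is in the batch.
    return [reduce_in_chunks(row, chunk_size) for row in batch]


row = [1e16, 1.0, -1e16, 1.0]      # values chosen so rounding is visible
other = [1.0, 2.0, 3.0, 4.0]       # an unrelated request sharing the batch

print(batch_dependent_reduce([row])[0], batch_dependent_reduce([row, other])[0])
print(batch_invariant_reduce([row])[0], batch_invariant_reduce([row, other])[0])
```

With the batch-dependent version, the same row produces a different sum depending on whether it is processed alone or alongside another request. The batch-invariant version returns the same value in both cases, at the cost of not adapting its strategy to the workload.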

The results are striking: in an experiment with the Qwen 235B model, applying these methods produced 1,000 identical completions to the same prompt, rather than dozens of unique variations.

This matters because determinism makes it possible to audit, debug, and above all, trust model outputs. It also enables stable benchmarks and easier verification, paving the way for reliable applications in mission-critical fields.

The problem of hallucinations in LLMs

The second major limitation of today’s LLMs is hallucination: confidently producing false or misleading answers. For example, inventing a historical date or attributing a theory to the wrong scientist.

Why do models hallucinate?

According to OpenAI’s paper, hallucinations aren’t simply bugs; they are baked into the way we train LLMs. There are two key phases where this happens:

- Pre-training: even with a flawless dataset (which is impossible), the objective of predicting the next word naturally produces errors. Generating the right answer is harder than checking whether an answer is right.

- Post-training (reinforcement learning): models are fine-tuned to be more “helpful” and “decisive”. But current metrics reward correct answers while penalising both mistakes and admissions of ignorance. The result? Models learn that it’s better to bluff with a confident but wrong answer than to say “I don’t know”.

This is much like a student taking a multiple-choice exam: leaving a question blank guarantees zero, while guessing gives at least a chance of scoring. LLMs are currently trained with the same incentive structure.

OpenAI’s solution: behavioural calibration

The proposed solution is surprisingly simple yet powerful: teach models when not to answer. Instead of forcing a response to every question, set a confidence threshold.

- If the model is, for instance, more than 75% confident, it answers.

- If not, it responds: “I don’t know.”

This technique is known as behavioural calibration. It aligns the model’s stated confidence with its actual accuracy.
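As a rough sketch, the threshold rule can be written as a small function. It assumes a numeric confidence score between 0 and 1 is already available for the candidate answer; how such a score would be obtained is outside the scope of this example, and the function name is hypothetical.

```python
def answer_or_abstain(candidate: str, confidence: float, threshold: float = 0.75) -> str:
    """Return the candidate answer only when confidence exceeds the threshold."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    # "More than 75% confident" is read here as a strict comparison.
    if confidence > threshold:
        return candidate
    return "I don't know."


print(answer_or_abstain("Paris", confidence=0.92))
print(answer_or_abstain("Paris", confidence=0.60))
```

The first call returns the answer; the second falls below the threshold and abstains.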

Crucially, this requires rethinking benchmarks. Today’s most popular evaluations only score right and wrong answers. OpenAI suggests a three-tier scoring system:

- +1 for a correct answer

- 0 for “I don’t know”

- –1 for an incorrect answer

This way, honesty is rewarded and overconfident hallucinations are discouraged.
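To see why this changes the incentive, it helps to compute the expected score of guessing. The sketch below uses the +1 / 0 / –1 weights listed above; the function names are illustrative and not taken from the paper.

```python
def score(outcome: str) -> int:
    """Three-tier scoring: reward correct answers, penalise wrong ones."""
    return {"correct": 1, "abstain": 0, "incorrect": -1}[outcome]


def expected_guess_score(p_correct: float) -> float:
    """Average score of answering when the answer is right with probability p_correct."""
    return p_correct * score("correct") + (1 - p_correct) * score("incorrect")


for p in (0.25, 0.5, 0.9):
    print(p, expected_guess_score(p), "vs abstain:", score("abstain"))
```

With these particular weights, a guess that is right only a quarter of the time averages –0.5, which is worse than the 0 earned by saying “I don’t know”. Guessing only pays off once the model is right more than half the time. Under scoring that gives 0 for a wrong answer, by contrast, guessing is never worse than abstaining.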

Signs of progress

Some early users report that GPT-5 already shows signs of this approach: instead of fabricating answers, it sometimes replies, “I don’t know, and I can’t reliably find out.” Even Elon Musk praised this behaviour as an impressive step forward.

The change may seem small, but it has profound implications: a model that admits uncertainty is far more trustworthy than one that invents details.

Two sides of the same coin: reliability and trust

What makes these two breakthroughs especially interesting is how complementary they are:

- Thinking Machines is tackling non-determinism, making outputs consistent and reproducible.

- OpenAI is addressing hallucinations, making outputs more honest and trustworthy.

Together, they target the biggest barrier to wider LLM adoption: confidence. If users — whether researchers, doctors, teachers, or policymakers — can trust that an LLM will both give reproducible answers and know when to admit ignorance, the technology can be deployed with far greater safety.

Conclusion

Large language models have transformed how we work, research, and communicate. But for them to move beyond experimentation and novelty, they need more than just raw power or creativity: they need trustworthiness.

Thinking Machines has shown that non-determinism is not inevitable; with the right adjustments, models can behave consistently. OpenAI has demonstrated that hallucinations are not just random flaws but the direct result of how we train and evaluate models, and that they can be mitigated with behavioural calibration.

Taken together, these advances point towards a future of AI that is more transparent, reproducible, and reliable. If implemented at scale, they could usher in a new era where LLMs become dependable partners in science, education, law, and beyond.

ETH Slides 35% in a Month as ETF Flows Turn Negative

A new report from BestBrokers highlights that ETH ETF assets have been shrinking since the start of the year.

U.S. spot Ethereum ETFs are recording major outflows as demand weakens across the crypto market, according to a new report from BestBrokers.

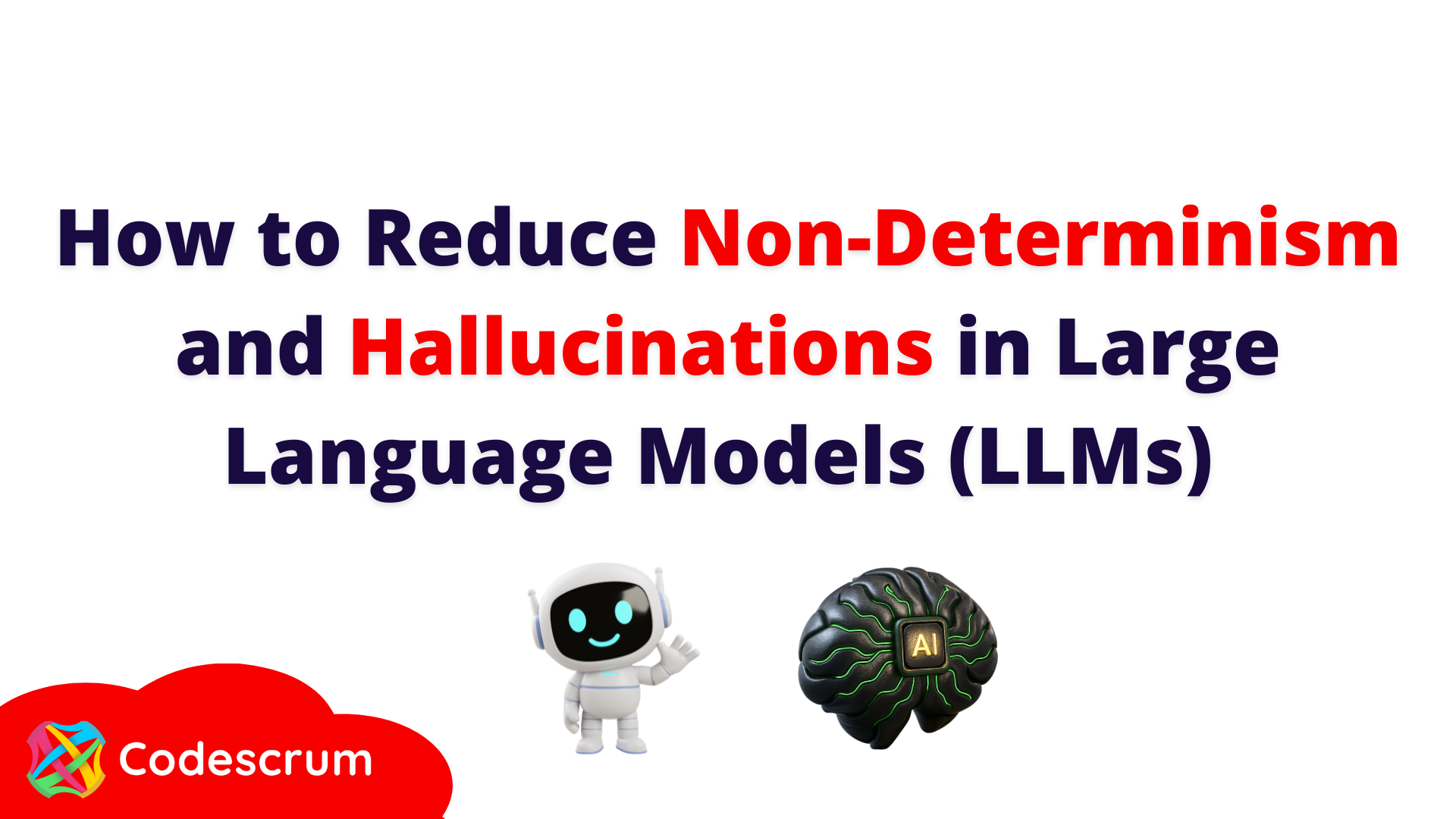

ETH ETF holdings dropped from more than 6.1 million ETH in late January to about 5.8 million by Feb. 23. Total assets in those funds also fell from $18.6 billion to about $11.9 billion. The data also shows that the market is highly concentrated, with BlackRock holding about 57% of all ETH in U.S. ETFs – well ahead of Grayscale and Fidelity.
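For scale, the two declines can be expressed as percentages. The short calculation below uses the rounded figures quoted above, so the results are approximate.

```python
def pct_change(start: float, end: float) -> float:
    """Percentage change from start to end."""
    return (end - start) / start * 100.0


holdings_change = pct_change(6.1e6, 5.8e6)    # ETH held by U.S. spot ETFs
assets_change = pct_change(18.6e9, 11.9e9)    # total assets in U.S. dollars

print(f"Holdings: {holdings_change:.1f}%")
print(f"Assets:   {assets_change:.1f}%")
```

The number of ETH held fell by roughly 5%, while the dollar value of the funds fell by roughly 36%, so most of the drop in assets reflects the lower ETH price rather than redemptions.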

Ether (ETH) has fallen sharply, down about 35% over the past month and nearly 40% over the past three months. Currently, the world’s second-largest cryptocurrency by market capitalization is trading at around $1,850, per CoinGecko.

The findings highlight how quickly sentiment toward crypto has soured over the past few months. They also show how investors continue to pull money from riskier assets amid rising volatility.

Bitcoin Findings

On the Bitcoin side, the report said spot Bitcoin ETFs have also had a weaker start to 2026 after steady inflows in 2024 and 2025. BestBrokers estimates more than $4 billion in net outflows since the start of the year, with total ETF holdings slipping to 1.26 million BTC as of Feb. 23 – the first mid-quarter decline since launch.

BlackRock’s iShares Bitcoin Trust (IBIT) led the pullback, posting outflows of 19,300 BTC in February, while Grayscale and Fidelity also recorded outflows.

BestBrokers’ report said the divergence suggests institutions are treating Bitcoin as longer-term exposure, while Ethereum funds are more sensitive to market sentiment.

BSTR Eyes April Approval for SPAC Public Listing

TLDR

- BSTR plans to go public through a SPAC merger with Cantor Equity Partners I.

- Adam Back said shareholder approval for the listing could come as soon as April.

- The company intends to debut with 30,000 bitcoin on its balance sheet.

- Founding shareholders will contribute 25,000 bitcoin to the new entity.

- Early investors will add 5,000 bitcoin in kind to complete the holdings.

Bitcoin Standard Treasury Company is advancing plans for a public listing through a SPAC merger. Adam Back said shareholders could approve the transaction as soon as April. The company aims to debut with 30,000 bitcoin on its balance sheet despite recent market weakness.

BSTR Plans Public Debut With 30,000 Bitcoin

BSTR will merge with Cantor Equity Partners I, a SPAC led by Brandon Lutnick. The companies announced the proposed transaction in the summer of 2025 during a surge in crypto treasury formations.

Back and other founding shareholders will contribute 25,000 bitcoin to the new entity. Early investors will add 5,000 bitcoin in kind, bringing total holdings to 30,000 coins.

Back confirmed the timeline during an interview with CNBC on Monday. He said shareholder approval for the public listing could arrive as soon as April.

He stated that BSTR intends to launch with a large bitcoin reserve from day one. He added that the company structured the contributions to ensure balance sheet strength at listing.

Market Conditions and Strategy Ahead of Listing

Bitcoin has declined to about $63,000 after trading at higher levels earlier in the year. At the same time, several bitcoin treasury companies have lost large portions of their market value.

Back said a lower bitcoin price could support BSTR before it lists publicly. He explained that a reduced reference price may allow the company to accumulate more bitcoin at discounted levels.

He told CNBC that such positioning could strengthen the balance sheet over time. He said this approach may increase long-term upside if market conditions improve.

Back addressed the recent bitcoin pullback during the interview. He said the decline occurred despite what he described as a favorable regulatory backdrop in the United States.

He attributed the weakness to macroeconomic pressures affecting risk assets. He cited geopolitical tensions and tariff uncertainty as factors weighing on broader markets.

Back also discussed the role of bitcoin treasury companies in the market. He said these firms focus on acquiring and holding bitcoin as a core strategy.

He acknowledged that accumulation often slows during bear markets. However, he said, treasury companies remove bitcoin from circulation, which supports long-term supply dynamics.

Bitwise CEO says AI Is ‘Unstoppable freight train’ for Crypto, Haun’s Monica urges caution

SAN FRANCISCO, CA – As artificial intelligence races ahead, some crypto executives believe it could become the force that finally pushes blockchain infrastructure into widespread use. Others aren’t convinced the leap is so straightforward.

In a recent panel discussion at NEARCON 2026, Bitwise CEO Hunter Horsley described AI as “an unstoppable freight train,” arguing that its pace of development is unlike anything crypto has experienced. “AI is accomplishing a quarter’s worth of roadmap every two weeks right now,” he said, suggesting that projections based on previous crypto adoption cycles may already be outdated. “You have to dump the last six years of data and cut it fresh from the last six months.”

For Horsley, the implication is that public blockchains could benefit disproportionately from AI’s rise. “If there’s one space that will be an unmitigated benefactor of the adoption proliferation of AI, it will be public blockchains and crypto assets,” he said.

As autonomous agents begin to act on behalf of users, he suggested, crypto-native tools may offer practical advantages. “Agents, obviously, you’re not going to want to authorize OpenClaw with your credit card… You’re gonna want to fund them with stablecoins. They’re gonna want to transact confidentially,” Horsley said, pointing to stablecoins and onchain infrastructure as potential guardrails for machine-driven activity.

Diogo Monica, general partner at Haun Ventures and co-founder of Anchorage Digital, pushed back on the assumption that agentic commerce automatically requires new rails.

“There is a chance that the agent payments commerce looks exactly like the current payment commerce for the foreseeable future,” Monica said. “You are telling me that a superhuman intelligence cannot use the current payment rails, the current credit cards, the current instant settlement, to pay for things and to figure it out on their own.”

“You can’t tell me that AGI is coming and agents are going to be super smart… and tell me that they’re not going to be smart enough to figure out different systems,” he added.

Still, Monica acknowledged a deeper alignment between the technologies. “AI creates digital abundance and crypto versus digital scarcity. These are actually complementary technologies,” he said, adding that crypto’s privacy and verification tools could help mitigate some of the risks AI introduces.

Whether blockchains become the default rails for autonomous commerce remains unresolved. But as AI accelerates, the debate over crypto’s role in that future is clearly intensifying.

BNB coin price outlook as Binance stablecoin reserves hit lowest levels

- BNB coin struggles below $600 as regulatory noise clouds short-term sentiment.

- Falling stablecoin reserves point to weaker liquidity and cautious traders.

- A key Binance coin price support sits near $573, while bulls must reclaim $597 to regain momentum.

Binance Coin (BNB) is under pressure as the broader crypto market flashes mixed signals.

As the BNB coin continues to fall, recent exchange data from CryptoQuant shows that stablecoin reserves held on the Binance crypto exchange have fallen to their lowest levels in several months.

Falling stablecoin reserves raise liquidity concerns

Stablecoins are often treated as dry powder in the crypto market.

When reserves decline on major exchanges, it usually means capital is being pulled out rather than positioned for new buys.

The latest drop in Binance’s stablecoin balances suggests traders are either de-risking or waiting on the sidelines.

This reduction in available liquidity can weaken short-term price support across major assets, including Binance Coin.

Lower reserves also reduce the market’s ability to absorb large sell orders, increasing the risk of sharper moves during periods of volatility.

For BNB, this matters because its price tends to be closely linked to activity and confidence on the Binance platform.

Bitcoin inflows and shifting trader sentiment

As the stablecoin reserves on Binance drop, Bitcoin balances on Binance have climbed to their highest levels since late 2024.

An increase in BTC held on exchanges is often interpreted as potential selling pressure or preparation for active trading.

This shift can increase short-term volatility across the market and spill over into altcoins like BNB coin.

Combined with falling stablecoin reserves, it paints a picture of traders repositioning rather than aggressively buying.

Such an environment usually favours range-bound trading instead of strong trend moves.

Market hesitation

Binance Coin has failed to hold above the $600 level, a zone that had acted as support earlier in the year.

Momentum indicators such as the Relative Strength Index (RSI) show the coin in oversold territory, which suggests selling pressure has cooled slightly, but there is not yet enough buying pressure to confirm a trend reversal.

While buyers appear active near lower support zones, follow-through has been limited.

This type of price behaviour often precedes either a consolidation phase or a sharper move once liquidity returns.

BNB coin price forecast

The BNB price forecast now depends heavily on how it reacts around well-defined technical levels.

The first level traders should watch, according to analysts, is $573.49, which has acted as short-term support.

A clean break below that area could open the door for a move toward the next support near $543.03.

On the upside, $597.41 remains the key resistance level that bulls must reclaim.

A decisive move above that zone would likely encourage a push toward $619.48, with $642.11 standing as the next major resistance.

However, as long as stablecoin liquidity remains tight, upside moves may struggle to sustain momentum.

ASTER holds range as traders position for March mainnet launch

ASTER traded flat into mid-February as traders priced in a March mainnet launch.

Summary

- ASTER consolidated in an accumulation zone into Feb. 19, with traders watching a key resistance level that could open upside targets if broken amid broader market weakness.

- Token Terminal showed 6 daily, 44 weekly and 340 monthly active addresses as of Feb. 18, highlighting thin underlying usage versus the bullish technical and positioning setup.

- A fee-to-buyback model directs up to 80% of platform fees to on-chain buybacks, while a Stage 6 airdrop distributing 64m ASTER (0.8% supply) runs through Mar. 29 alongside a March mainnet window.

ASTER token consolidated through mid-February as market participants positioned ahead of the project’s scheduled March mainnet launch, according to trader analysis and project roadmap data.

Trader Don Wedge identified an accumulation zone in a chart posted February 19, highlighting a key resistance level that, if breached, could enable movement toward higher price targets, according to the posted analysis.

The token’s price movement occurred during a broader cryptocurrency market decline, suggesting positioning centered on project-specific developments rather than general market sentiment shifts, according to market observers.

Trader Shuarix stated February 19 that momentum was building ahead of the March mainnet window, citing confirmed mainnet timing, increased on-chain activity, and pre-launch positioning as factors driving price action.

Aster Chain’s official roadmap lists the Layer 1 mainnet launch in the first quarter of 2026, with multiple reports indicating March as the target delivery period. Mainnet launches typically establish token utility through transaction fees, staking mechanisms, and governance functions.

Token Terminal data as of February 18 showed six daily active addresses, 44 weekly active addresses, and 340 monthly active addresses on the network. The usage figures raised questions about whether fundamental network adoption supported the technical price setup.

A whale on Hyperliquid had held a four-times leveraged long position open for 22 days as of February 19, according to on-chain data. Exits from large leveraged positions can trigger selling pressure and cascading liquidations, according to market analysts.

Aster implemented a fee-to-buyback mechanism starting February 4, directing up to 80 percent of daily platform fees toward on-chain token buybacks, according to project documentation. Approximately 40 percent functions as automatic daily buybacks, with 20 to 40 percent allocated to a strategic wallet for discretionary purchases.

The buyback structure creates proportional bid support tied to platform volumes and fees, according to the mechanism’s design. If activity increases ahead of mainnet, buyback flows rise correspondingly; reduced activity diminishes the bid structure.
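The proportional relationship can be sketched with the percentages quoted above. The daily fee figure below is a made-up round number for illustration, not reported platform data, and the function name is hypothetical.

```python
AUTOMATIC_PCT = 40               # share of fees used for automatic daily buybacks
STRATEGIC_PCT_RANGE = (20, 40)   # share sent to the strategic wallet


def buyback_split(daily_fees: float, strategic_pct: int) -> dict:
    """Split one day's fees into automatic and strategic buyback amounts."""
    low, high = STRATEGIC_PCT_RANGE
    if not low <= strategic_pct <= high:
        raise ValueError("strategic_pct must be between 20 and 40")
    automatic = daily_fees * AUTOMATIC_PCT / 100
    strategic = daily_fees * strategic_pct / 100
    return {"automatic": automatic, "strategic": strategic, "total": automatic + strategic}


# Hypothetical day with $1,000,000 in platform fees.
print(buyback_split(1_000_000, strategic_pct=40))
print(buyback_split(1_000_000, strategic_pct=20))
```

At the top of the range the total reaches the 80% ceiling; at the bottom it falls to 60%. In both cases the dollar amount scales linearly with fees, which is why the bid weakens when platform activity drops.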

Aster’s Stage 6 airdrop phase, designated “Convergence,” runs from February 2 through March 29, 2026, allocating approximately 64 million ASTER tokens, representing 0.8 percent of total supply, according to project announcements. The distribution marks the final transaction-activity-based phase before emissions transition to staking-based rewards.

Airdrop completion could reduce selling pressure from participants accumulating points, potentially affecting price volatility post-claim, according to market analysts.

The project roadmap lists fiat on-ramp and off-ramp integration via third-party providers for the first quarter of 2026. Staking and governance features are scheduled for the second quarter of 2026, according to the published timeline.

The mainnet launch window, fee buyback mechanism, and airdrop phase conclusion provide structural developments supporting technical price action, according to market analysis. Token Terminal’s usage metrics indicate fundamental gaps that mainnet delivery may not resolve without sustained adoption growth, according to the data.

Market participants positioned for resistance breakouts face execution risk if large leveraged holders exit before key price levels clear, according to trading analysts monitoring the setup.

Bitwise Acquires Chorus One, Signals More Staked ETFs

Bitwise is expanding its staking services through the strategic acquisition of Chorus One, a staking infrastructure specialist that oversees more than $2.2 billion in actively staked assets. The move underscores how traditional asset-management firms are deepening their on-chain offerings as institutions seek diversified yields and regulated exposure to proof-of-stake ecosystems. Bitcoin (CRYPTO: BTC) and Ethereum (CRYPTO: ETH) have long anchored crypto investment strategies, and Bitwise’s latest deal signals a broader push into staking across multiple networks as demand for yield on locked crypto continues to grow. The integration comes as Bitwise looks to broaden its portfolio of exchange-traded products (ETPs) and staking solutions in a regulatory landscape that has shown appetite for a wider array of crypto investment products.

Bitwise said on Tuesday that 50 Chorus One employees will join Bitwise Onchain Solutions, a segment already handling substantial on-chain activity and staking for thousands of clients. The transfer of talent will bolster Bitwise’s staking operations, enabling the firm to scale its offerings and support a broader set of networks while leveraging Chorus One’s established infrastructure. The deal’s financial terms were not disclosed, but the strategic alignment is clear: a long-tenured staking provider joining a manager with a growing footprint in crypto ETPs and a plan to diversify product structures beyond spot exposure.

The significance of staking in the current market context cannot be overstated. Staking, the process by which holders lock tokens to participate in network consensus and earn rewards, has emerged as a meaningful yield channel alongside potential price appreciation. Industry players typically note annual yields ranging roughly from 2% to 10%, depending on the chain and validator economics. The Bitwise move aligns with broader market activity where investors seek yield-enhancing strategies within regulated product wrappers. A linked industry debate has highlighted interest from the U.S. Securities and Exchange Commission in embracing a wider set of crypto investment vehicles, which could eventually pave the way for more diverse staking-focused ETFs and ETPs.

The acquisition adds a multi-chain dimension to Bitwise’s staking capabilities, extending the reach to more than 30 proof-of-stake networks. In practical terms, Bitwise will be able to offer staking services across major ecosystems such as Solana (CRYPTO: SOL), Avalanche (CRYPTO: AVAX), Tezos (CRYPTO: XTZ), Sui (CRYPTO: SUI), and Aptos (CRYPTO: APT), among others. By integrating Chorus One’s technical backbone with Bitwise’s distribution channels, the combined entity aims to deliver staking services more efficiently, with a focus on security, governance participation, and compliance-ready product structures for institutional clients. The breadth of networks is particularly noteworthy given the fragmented nature of staking across the crypto space, where different chains require bespoke tooling, validator oversight, and risk management frameworks.

Chorus One has carved a niche delivering staking infrastructure since 2018, serving finance firms, family offices, high-net-worth individuals, custodians, funds, exchanges, and decentralized protocols. The firm’s clients benefit from a modular, scalable framework that supports validator operations, node management, and governance participation, all of which dovetail with Bitwise’s core competency in designing, managing, and distributing crypto investment products. The deal also ensures continuity for Chorus One’s existing customers, as the team, including Chorus One CEO Brian Crain, will remain engaged in advisory capacities at Bitwise. The continuity of leadership suggests a smooth transition and a shared emphasis on reliability and risk controls in staking operations.

Bitwise has been building its presence in the exchange-traded space for years, and the Chorus One integration sits squarely within a broader strategy to diversify product lines beyond just spot exposure. Bitwise’s workforce, now nearing 200 employees globally, is already deeply involved in crafting, managing, and distributing crypto ETPs to a growing roster of clients. The company has reported robust flows through its flagship funds—Bitwise Bitcoin ETF (BITB) and Bitwise Ethereum ETF (ETHW)—which have drawn considerable investor attention since their respective launches in January and July 2024, collectively moving billions of dollars of allocations. The broader footprint includes other theme-based and sector-focused ETPs such as the Bitwise Solana Staking ETF (BSOL), along with XRP, Chainlink (CLNK), and Dogecoin (BWOW) variants, illustrating Bitwise’s intent to embed staking and yield across diverse crypto themes while maintaining a strong core exposure to the largest cryptocurrencies.

The strategic logic for Bitwise is clear: staking represents a growth vector that can complement a wide slate of ETPs while leveraging an infrastructure partner with an established track record. By bringing Chorus One’s deep bench of engineers, operators, and governance-minded expertise under the Bitwise umbrella, the company aims to accelerate product development and expand access to staking through a regulated, institution-grade lens. As Bitwise’s leadership has noted, staking is one of the most compelling growth opportunities for the firm’s client base, which spans thousands of spot-asset holders and institutional investors seeking diversified yield alongside potential upside from crypto price dynamics.

From a market-wide perspective, the integration aligns with rising interest in staking-as-a-service despite ongoing regulatory scrutiny. The SEC’s public position on crypto products has shown a willingness to entertain a broader slate of offerings, potentially enabling more ETFs and ETF-like products that incorporate staking mechanics. This regulatory openness, combined with competitive pressure from peers expanding into staking, creates a backdrop in which Bitwise’s expanded capacity could translate into more investor-friendly products, clearer custody and reporting standards, and more transparent risk controls around staking operations. In practice, this means investors who prefer traditional investment vehicles may soon see more choice when it comes to gaining exposure to staking yields on multiple chains, rather than relying solely on direct token holdings.

Bitwise’s leadership underscores staking as a core strategic thrust. Bitwise CEO Hunter Horsley described staking as a growth engine for Bitwise’s global client base, highlighting the potential to unlock on-chain yields across a broader set of networks while maintaining the governance and security standards that institutional investors demand. The Chorus One acquisition thus reads as a signal that Bitwise intends to scale not only its asset base but also its staking ecosystem, transforming how institutions access and manage on-chain yields through a familiar, regulated product framework. The combination of Chorus One’s technical capability and Bitwise’s distribution network could accelerate the adoption of staking across more jurisdictions and investor segments, particularly as the crypto market continues to mature and competition among ETP issuers intensifies.

Market reaction and key details

The deal’s impact on Bitwise’s staking strategy is tangible. With Chorus One’s team on board, Bitwise gains a broader, more scalable staking infrastructure that can support a wider array of networks and staking configurations. The extended network reach means clients can participate in validator governance and earn staking rewards across more ecosystems without the operational burden of self-managing multiple staking setups. The integration also positions Bitwise to accelerate product development for institutional-grade staking solutions, potentially leading to new ETPs that embed staking yields alongside traditional price exposure.

On the investor side, Bitwise’s growing scale—now with nearly 200 employees and a portfolio that includes more than 40 investment products—helps explain why the market has increasingly looked to Bitwise as a bridge between traditional finance and crypto-native strategies. Bitwise’s flagship funds remain a focal point for capital inflows; the Bitwise Bitcoin ETF (BITB) and Bitwise Ethereum ETF (ETHW) have been central to early 2024-2025 performance narratives, drawing billions of dollars in flows since their inception. This real-world performance data, combined with Chorus One’s proven staking framework, could set the stage for additional fundraising, product launches, and potential partnerships as the crypto market evolves toward greater institutional participation.

Chorus One’s CEO, Brian Crain, will join Bitwise in an advisory capacity, emphasizing continuity in the transition and signaling a long-term collaboration rather than a short-term integration. The leadership alignment is notable because it preserves the technical and governance ethos that Chorus One has built since 2018, while infusing Bitwise’s distribution and compliance capabilities with that experience. The resulting synergies may manifest in more efficient onboarding of new staking clients, improved reporting and risk-management tools for staked assets, and a more cohesive approach to custody and regulatory alignment across staking operations.

As Bitwise continues to expand its staking footprint, the broader ecosystem will be watching how the company navigates regulatory developments, product approvals, and the evolving demand for on-chain yield. The market’s current climate—characterized by liquidity dynamics, rising risk appetite in certain segments, and ongoing regulatory soul-searching—could determine the pace at which Bitwise translates this strategic acquisition into tangible product launches and investor uptake. The Chorus One integration is a meaningful data point in a sector that is still maturing, with staking poised to become a more prominent feature of crypto investment vehicles for both retail and institutional stakeholders.

What to watch next

- Timeline for onboarding Chorus One staff into Bitwise Onchain Solutions and any restructuring of staking operations.

- Regulatory updates or product approvals from the SEC related to staking-enabled ETPs and new crypto investment products.

- Subsequent launches or pilots of staking-focused ETFs across additional networks beyond the current portfolio (SOL, AVAX, XTZ, SUI, APT, etc.).

- Rollout of enhanced governance, reporting, and risk-management tooling tied to the expanded staking program.

- Monitoring Bitwise’s asset growth, AUM, and product launches to gauge how the Chorus One integration translates into client acquisitions and inflows.

Sources & verification

- Bitwise press release announcing the acquisition of Chorus One and integration details.

- Chorus One’s staking infrastructure profile and public statements about multi-chain staking capabilities.

- Bitwise communications noting AUM, employee count, and the breadth of crypto ETPs (as cited in the article).

- The referenced discussion by Bitwise leadership on staking growth opportunities and client demand.


Further Losses on the Way?

Is HYPE at risk of falling to $0?

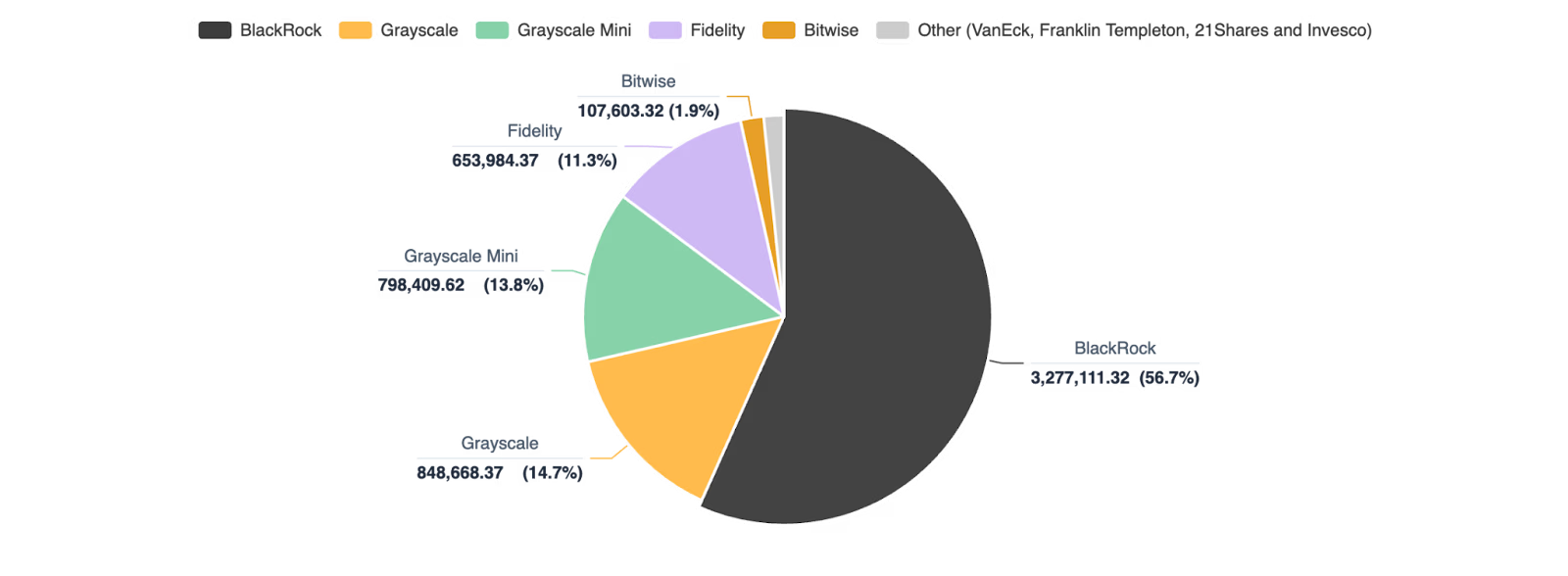

HYPE, the native token of the decentralized exchange Hyperliquid, has performed quite poorly lately, coinciding with the red wave sweeping through the entire crypto sector.

The token has been the subject of numerous price predictions, with some analysts envisioning additional declines in the short term.

Where is the Bottom?

Currently, HYPE is worth roughly $26, representing an 11% weekly loss and a 56% collapse from its all-time high of almost $60 registered in mid-September last year.

The popular market observer Ali Martinez analyzed the asset’s recent performance and concluded that it is breaking out of a certain triangle formation, risking a further plunge to as low as $20. Sjuul | AltCryptoGems also envisioned a deeper pullback ahead.

“As you can see, price action started to slow down and is locally breaking down. Since we have a big cap below, I would not be surprised to see a bigger correction coming,” he added.

Nebraskangooner appears to be the biggest pessimist. He claimed HYPE has been rejected at a key resistance level, forecasting the eventual collapse to zero.

HYPE’s recent exchange netflow reinforces the bearish scenario. Over the last few days, inflows have slightly surpassed outflows, suggesting that some investors have moved away from self-custody and shifted their holdings to centralized platforms. This doesn’t necessarily mean they intend to cash out, but in many cases, such transfers do precede selling activity.

How About a Rebound?

The optimists, who forecast that Hyperliquid’s native token could rally substantially in the near future, are just as vocal. X user HYPEconimst suggested that the possible path ahead is a sweep to $27.5, a reclaim of the $30.5 zone, and a pump to $45.5.


The analyst, who goes by the name ryandcrypto on the social media platform, argued that the asset’s price will not plunge below $20 “easily” and “would probably take BTC going well below $60K.”

For their part, TraderSZ envisioned significant volatility ahead and an eventual ascent above $36 in the coming months.

HYPE’s Relative Strength Index (RSI) also hints that a resurgence might be on the way. The technical analysis tool shows whether the asset is overbought or oversold by measuring the speed and magnitude of recent price changes. It runs from 0 to 100, where readings around or below 30 suggest the asset is oversold and a rally could be incoming, while anything above 70 is considered overbought, bearish territory. As of this writing, the RSI stands just above the oversold zone.
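As a rough illustration of how an RSI reading is derived from closing prices, here is a minimal Python sketch. It uses the simple-average variant of the indicator; the 14-period window, the function name `rsi`, and the handling of a window with no losses are assumptions made for illustration. Charting platforms often use Wilder's smoothed averages instead, so their values can differ.

```python
def rsi(closes, period=14):
    """Simple-average RSI over the last `period` price changes (illustrative)."""
    if len(closes) < period + 1:
        raise ValueError("need at least period + 1 closing prices")

    # Keep only the closes needed for the last `period` changes.
    recent = closes[-(period + 1):]
    changes = [later - earlier for earlier, later in zip(recent, recent[1:])]

    # Average gain and average loss, both expressed as non-negative numbers.
    avg_gain = sum(c for c in changes if c > 0) / period
    avg_loss = sum(-c for c in changes if c < 0) / period

    if avg_loss == 0:
        # Assumed convention: no losses means 100, a completely flat window means 50.
        return 100.0 if avg_gain > 0 else 50.0

    relative_strength = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + relative_strength)
```

Under this sketch, a window made up only of rising closes returns 100, one made up only of falling closes returns 0, and a window with equal average gains and losses returns 50.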



21shares Spot SUI ETF (TSUI) to Begin Trading on Tuesday Feb 24th, Expanding U.S. Access to Sui

[PRESS RELEASE – New York, New York, February 24th, 2026]

U.S. spot ETF significantly expands regulated investor access to the Sui ecosystem in the world’s largest capital market

The Sui Foundation today announced that trading has officially commenced on the Nasdaq for TSUI, a spot SUI ETF issued by 21shares, a global leader in crypto exchange-traded products. The fund provides U.S. investors with a regulated, high-liquidity vehicle to gain direct exposure to Sui’s performance through their existing brokerage accounts following recent SEC approval.

The launch marks another major milestone in Sui’s continued growth as a payments platform and modern global finance layer. Sui is the full stack for a new global economy, founded by the tech leaders who spearheaded Meta’s Diem and Libra initiatives, and is advancing a vision of moving money as freely as messages. 21shares has long been at the forefront of bringing digital asset exposure into traditional financial markets, offering a broad suite of regulated crypto ETPs across Europe and beyond. Its expansion into a U.S. spot SUI ETF reflects accelerating institutional confidence in Sui’s infrastructure and ecosystem.

Spot ETFs provide exposure directly tied to the underlying SUI token, offering a straightforward structure for both institutional and retail investors seeking secure and compliant access to emerging blockchain ecosystems.

Sui’s traction with institutions is rooted in its unique technical design. Built using the Move programming language, Sui’s object-centric model enables parallel execution, sub-second finality, and horizontally scalable throughput. This architecture supports payments, tokenization, stablecoins, BTCfi, and decentralized finance at internet scale, eliminating many of the frictions found on earlier blockchains.

“TSUI marks yet another widely-available access point to Sui, leveraging the industry’s preeminent tech stack to support global payments use cases and financial applications at scale,” said Evan Cheng, Co-Founder and CEO of Mysten Labs, the original contributor to Sui. “In a little more than two years, Sui has made significant inroads into payments and cross-border settlement, which has transformed it into one of the world’s most robust onchain economies and attracted the interest of leading institutions like 21shares as a result.”

The ETF approval arrives amid surging institutional interest in Sui, joining a growing list of institutional-grade products or planned initiatives, including from Bitwise, Canary Capital, Franklin Templeton, Grayscale, and VanEck. In December 2025, 21shares also launched the first leveraged ETF in the U.S. tied to SUI. The introduction of TSUI expands access further through a straightforward, spot-based structure.

“Following our successful launch of a leveraged SUI product, the introduction of TSUI represents the next step in expanding access to Sui through a straightforward, spot-based structure,” said Duncan Moir, President of 21shares. “Sui’s rapid ecosystem growth, technical strength, and institutional relevance were clear to us early on. We are pleased to provide U.S. investors with transparent tools to access this next-generation blockchain.”

As institutional capital continues to enter digital assets and stablecoins gain traction as a global payments layer, Sui’s scalable, low-latency infrastructure is designed to meet the demands of modern finance. To learn more about Sui and explore the ecosystem, visit https://sui.io.

About Sui

Sui, where money moves as freely as messages, is a next-generation Layer 1 blockchain built for scalable finance and global payments. Founded by the core team behind Meta’s stablecoin initiative and powered by an object-centric model, Sui makes assets, permissions, and user data programmable and ownable. Sui’s primitives offer builders everything they need to create high-performance payments and financial applications, including instant agentic payments. Learn more at sui.io.

Contact: media@sui.io

About 21shares

21shares is one of the world’s leading cryptocurrency exchange traded product providers and offers the largest suite of crypto ETPs in the market. The company was founded to make cryptocurrency more accessible to investors, and to bridge the gap between traditional finance and decentralized finance. 21shares listed the world’s first physically-backed crypto ETP in 2018, building a seven-year track record of creating crypto exchange-traded funds that are listed on some of the biggest, most liquid securities exchanges globally. Backed by a specialized research team, proprietary technology, and deep capital markets expertise, 21shares delivers innovative, simple and cost-efficient investment solutions.

21shares is a member of 21.co, a global leader in decentralized finance. For more information, please visit www.21shares.com.

Contact: press@21shares.com

Important Information

Investing involves risk, including the possible loss of principal. There is no assurance that TSUI (“the Fund”) will generate a profit for investors.

There are special risks associated with short selling and margin investing. Please ask your financial advisor for more information about these risks. SUI is a relatively new asset class, and the market for SUI is subject to rapid changes and uncertainty. SUI is largely unregulated and SUI investments may be more susceptible to fraud and manipulation than more regulated investments.

SUI is subject to unique and substantial risks, including significant price volatility and lack of liquidity, and theft. The value of an investment in the Fund could decline significantly and without warning, including to zero. SUI is subject to rapid price swings, including as a result of actions and statements by influencers and the media, changes in the supply of and demand for SUI, and other factors. There is no assurance that SUI will maintain its value over the long-term.

The trading prices of many digital assets, including SUI, have experienced extreme volatility in recent periods and may continue to do so. Extreme volatility in the future, including further declines in the trading prices of SUI, could have a material adverse effect on the value of the Shares and the Shares could lose all or substantially all of their value.

Failure by the Fund’s SUI Custodian to exercise due care in the safekeeping of the Fund’s SUI could result in a loss to the Fund. Shareholders cannot be assured that the SUI Custodian will maintain adequate insurance with respect to the SUI held by the custodian on behalf of the Fund.

The Fund is not actively managed and will not take any actions to take advantage, or mitigate the impacts, of volatility in the price of SUI. An investment in the Fund is not a direct investment in SUI. Investors will also forgo certain rights conferred by owning SUI directly. Shares of the Fund are generally bought and sold at market price (not NAV) and are not individually redeemed from the Fund. Only Authorized Participants may trade directly with the Fund and only large blocks of Shares called “creation units.” Your brokerage commissions will reduce returns.

If an active trading market for the Shares does not develop or continue to exist, the market prices and liquidity of the Shares may be adversely affected.

Shares in the Fund are not FDIC insured and may lose value and have no bank guarantee.

This material must be accompanied or preceded by a prospectus. Carefully consider the Fund’s investment objectives, risk factors, and fees and expenses before investing. For further discussion of the risks associated with an investment in the Fund please read the Fund’s prospectus: https://www.21shares.com/en-us/product/SUI

The Marketing Agent is Foreside Global Services, LLC

21Shares US LLC is the Sponsor to the Fund.

21Shares is not affiliated with Foreside Global Services LLC

© 2026 21Shares US LLC. No part of this material may be reproduced in any form, or referred to in any other publication, without written permission.



CNBC World’s Top Fintech Companies 2026: Apply now


Applications are now open for the fourth edition of CNBC’s World’s Top Fintech Companies list, produced in partnership with market research firm Statista.

Each year, CNBC and Statista chart the top fintech players from around the world, ranging from startups to Big Tech names, across segments including payments, wealth technology, insurance and more.

Last year’s iteration included heavyweights such as Mastercard, Stripe and Visa, as well as many newer scaleups. Credit rewards company Bilt, payments upstart TerraPay and insurance platform Entsia made their debuts on the list.

The World’s Top Fintech Companies has been expanded this year, with regulation tech — companies helping others meet their financial regulatory obligations — becoming its own segment.

Over the years, fintech has progressed from a high-growth challenger segment to a core part of the global financial system, helped by a Covid-fueled race to digitize. Artificial intelligence has spurred the sector further, and has been tipped as a source of transformative change.

The global fintech market attracted $44.7 billion in investment across over 2,200 deals in the first half of 2025, according to the most recent report by KPMG, although this was lower than the $54.2 billion investment seen over the six months prior.

How to apply

Companies can submit their information for consideration by clicking here. Developing innovative, technology-based financial products and services should be the core business of nominees.

The form, hosted by Statista, includes questions about a company’s business model and certain key performance indicators, including revenue growth and employee headcount.

You can read more about the research project and methodology here.

The deadline for submissions is April 24, 2026.

For questions about the list or assistance with the form, please email Statista: topfintechs@statista.com.


ETH Falls To $1.8K As Bearish Data Spooks Investors

Key takeaways:

- ETH futures liquidations reached $224 million after a 9% price drop, while the network’s onchain activity fell to a 12-month low.

- ETH’s high correlation with Bitcoin and massive outflows from exchange-traded funds suggest further downside risk for Ether price.

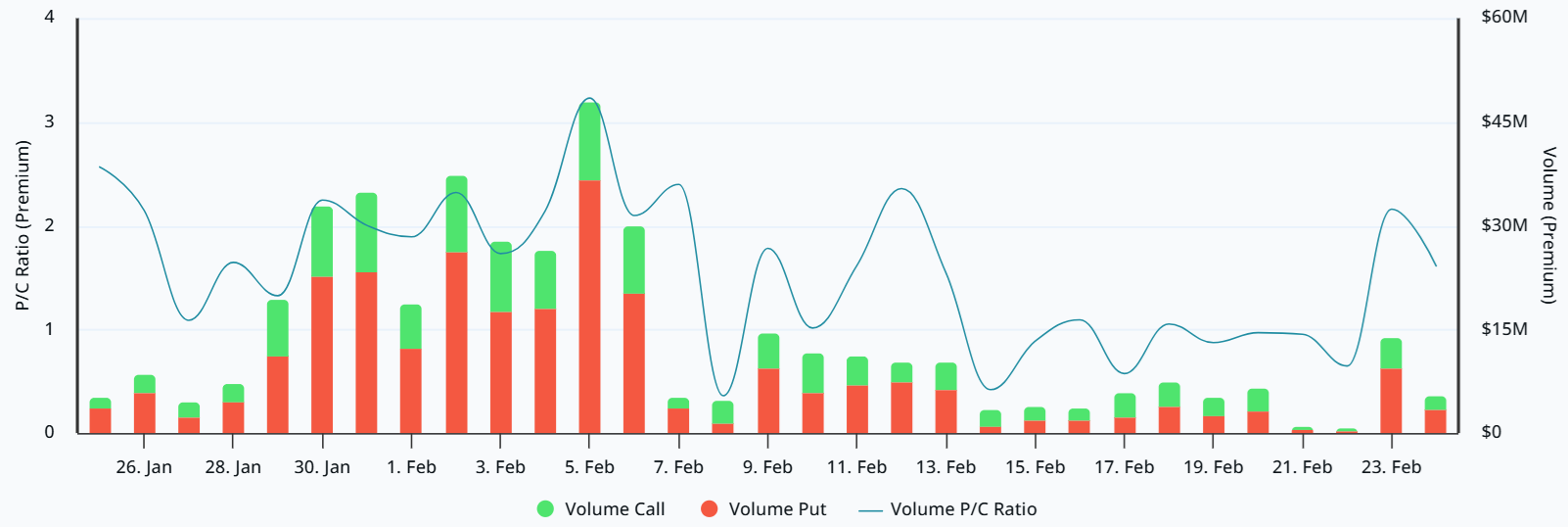

Ether (ETH) plunged to $1,800 on Tuesday, wiping out $224 million in leveraged bullish positions over 48 hours. This 14% price slide over the last 10 days has left top traders defensive. Options and futures data, sluggish onchain activity, and steady outflows from Ether spot exchange-traded funds (ETFs) all point to a shaky floor at $1,800.

After demand for put (sell) and call (buy) options stayed fairly balanced from Monday through Saturday, things shifted quickly on Tuesday. The ETH put-to-call volume premium jumped to 2.2x, showing a sudden scramble for downside protection. While some might have sold puts to bet on a price bounce, the broader market seems to be bracing for more volatility.

The options delta skew (put-call) sat at 18% on Tuesday, meaning puts were trading at a clear premium. This lopsided demand shows that hedging is the priority right now. There is a real lack of confidence here, even with ETH sitting 63% below its all-time high. A lot of this frustration comes down to some pretty weak onchain numbers.

The total value locked (TVL) on Ethereum has slipped to $51 billion, which is the lowest level seen since May 2025. With fewer deposits hitting decentralized applications (DApps), network fees have taken a hit to $13.7 million over the last 30 days. That is a far cry from the $33 million average seen in late 2025. Traders are worried that ETH demand for data processing won’t return anytime soon.

Even though they were expected, the recent $7 million in ETH sales linked to Ethereum co-founder Vitalik Buterin haven’t helped the mood. In January, Buterin earmarked 16,384 ETH of his personal holdings as donations to fund privacy-focused technologies, open source hardware and secure, verifiable software systems. Still, the optics of the move added another layer of bearish pressure to an already shaky week.

Outflows from Ether ETFs have only made things worse for investor sentiment. Usually, this kind of movement means institutional players are losing interest.


The US-listed Ether ETFs have seen $405 million in net outflows since Feb. 11, which has pushed total assets under management down to $12.4 billion. This shift happened right as gold prices climbed above $5,150. In fact, gold ETFs pulled in $822 million in the week ending Feb. 20, according to gold.org.

Ether’s weak onchain and derivatives data is not a guaranteed death sentence. However, the fact that whales and market makers seem to be bracing for more downside definitely fuels the bearish mood. Ether’s price is also stuck to Bitcoin (BTC) right now as the assets’ 20-day correlation has stayed above 95% for the last three weeks.
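For readers who want to reproduce a figure like the 20-day correlation, the sketch below shows one common way to compute it in Python. The article does not say how its number was calculated, so this is an assumption: it takes the Pearson correlation of daily percentage returns over a rolling window. The function names `daily_returns`, `pearson` and `rolling_correlation` are hypothetical helpers, not part of any data provider's API.

```python
from math import sqrt


def daily_returns(closes):
    """Percentage change between consecutive daily closes."""
    return [later / earlier - 1 for earlier, later in zip(closes, closes[1:])]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("series must have equal length of at least 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


def rolling_correlation(closes_a, closes_b, window=20):
    """Correlation of daily returns over each trailing `window`-day span."""
    returns_a, returns_b = daily_returns(closes_a), daily_returns(closes_b)
    if len(returns_a) != len(returns_b):
        raise ValueError("both price series must cover the same days")
    return [
        pearson(returns_a[end - window:end], returns_b[end - window:end])
        for end in range(window, len(returns_a) + 1)
    ]
```

Values near 1 (that is, near 100%) mean the two assets' daily moves have been almost perfectly aligned over the window. Computing the correlation on returns rather than raw price levels is a design choice that avoids overstating the relationship between two series that simply trend in the same direction.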

The ETH drop to $1,800 has created a bit of a loop, where traders are still guessing at what is really driving this crypto bear market. That uncertainty is forcing traders to sell at a loss, and the situation may not change while professional traders display fear. Until those derivatives metrics stabilize, a further slide in ETH remains on the table.

This article does not contain investment advice or recommendations. Every investment and trading move involves risk, and readers should conduct their own research when making a decision. While we strive to provide accurate and timely information, Cointelegraph does not guarantee the accuracy, completeness, or reliability of any information in this article. This article may contain forward-looking statements that are subject to risks and uncertainties. Cointelegraph will not be liable for any loss or damage arising from your reliance on this information.
