Quordle was one of the original Wordle alternatives and is still going strong now more than 1,000 games later. It offers a genuine challenge, though, so read on if you need some Quordle hints today – or scroll down further for the answers.

Enjoy playing word games? You can also check out my Wordle today, NYT Connections today and NYT Strands today pages for hints and answers for those puzzles.

SPOILER WARNING: Information about Quordle today is below, so don’t read on if you don’t want to know the answers.

Quordle today (game #1012) – hint #1 – vowels

How many different vowels are in Quordle today?

• The number of different vowels in Quordle today is 3*.

* Note that by vowel we mean the five standard vowels (A, E, I, O, U), not Y (which is sometimes counted as a vowel too).

Quordle today (game #1012) – hint #2 – repeated letters

Do any of today’s Quordle answers contain repeated letters?

• The number of Quordle answers containing a repeated letter today is 2.

Quordle today (game #1012) – hint #3 – uncommon letters

Do the letters Q, Z, X or J appear in Quordle today?

• No. None of Q, Z, X or J appear among today’s Quordle answers.

Quordle today (game #1012) – hint #4 – starting letters (1)

Do any of today’s Quordle answers start with the same letter?

• The number of today’s Quordle answers starting with the same letter is 0.

If you just want to know the answers at this stage, simply scroll down. If you’re not ready yet, then here’s one more clue to make things a lot easier:

Quordle today (game #1012) – hint #5 – starting letters (2)

What letters do today’s Quordle answers start with?

• F

• G

• R

• T

Right, the answers are below, so DO NOT SCROLL ANY FURTHER IF YOU DON’T WANT TO SEE THEM.

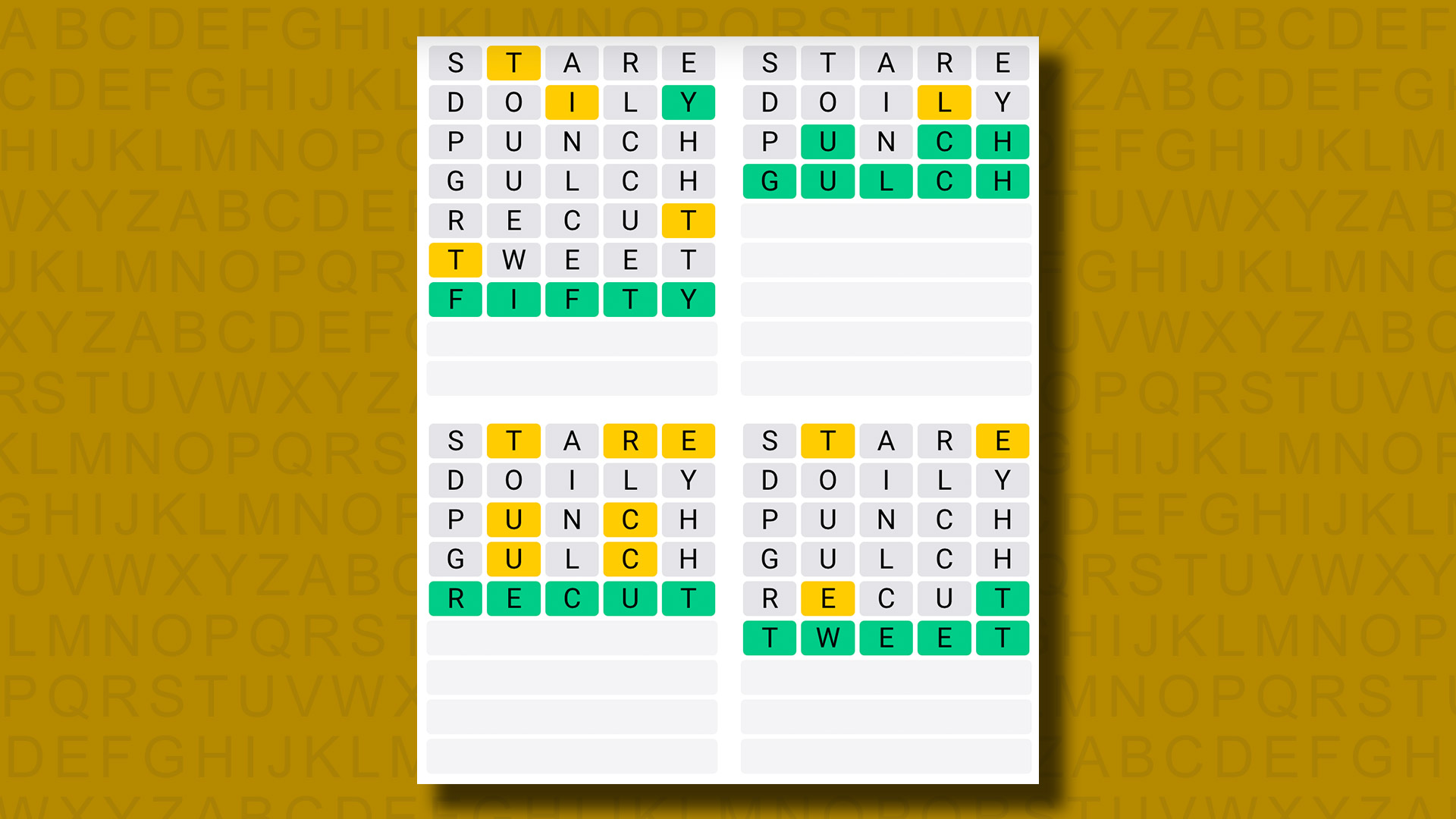

Quordle today (game #1012) – the answers

The answers to today’s Quordle, game #1012, are…

I needed a lucky guess to solve today’s Quordle. I’d completed the other three quadrants without too many problems, but was left with the top left. At that stage the answer could have been BITTY, KITTY or FIFTY, so really I should have used a guess to narrow it down. But I was impatient, played FIFTY, and got it right.

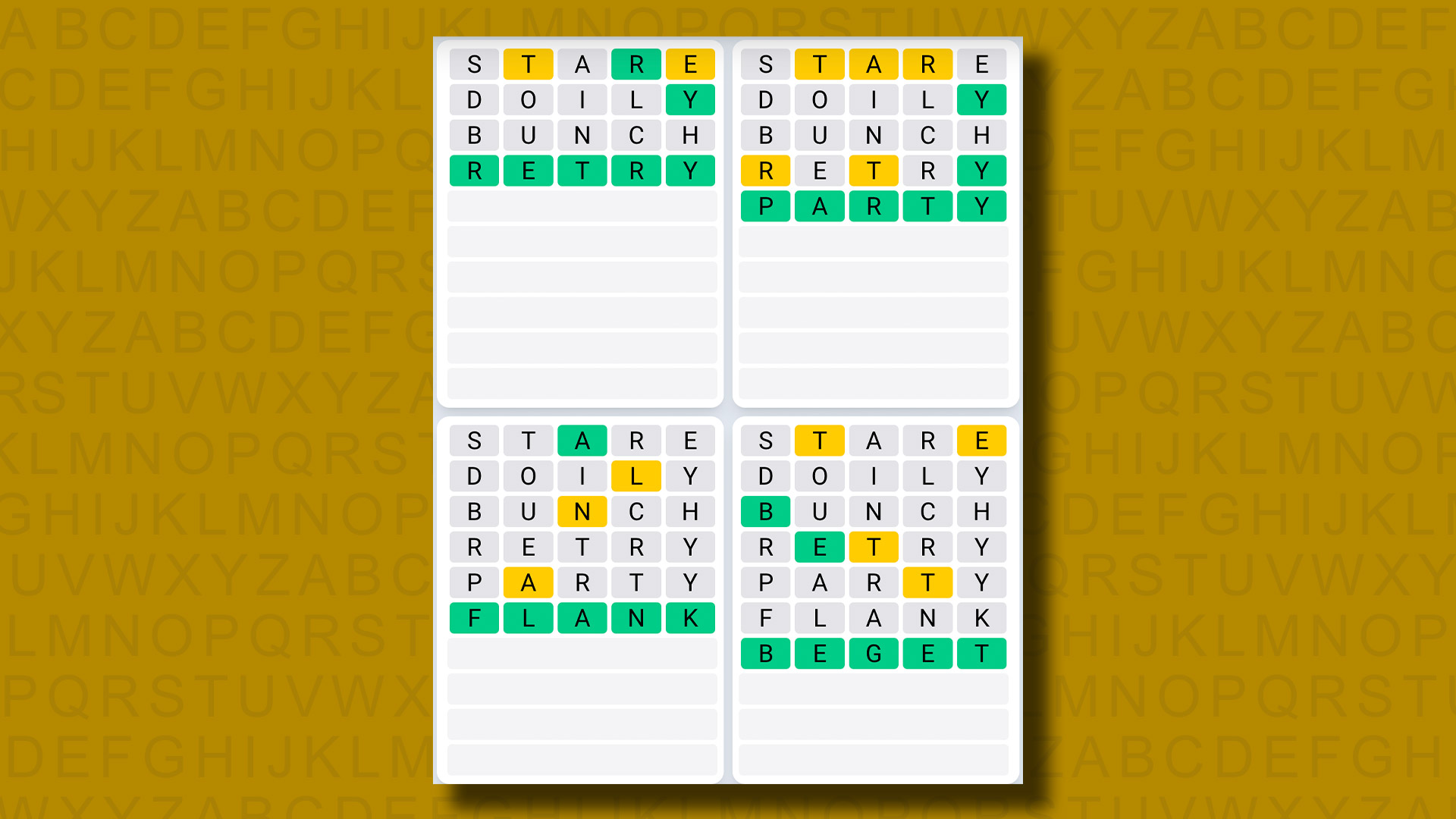

I then repeated the trick on the Daily Sequence, solving each one on the first guess when I probably didn’t deserve to. That kind of attitude will surely come back to haunt me at some point, but sometimes you have to just go for it, eh?

How did you do today? Send me an email and let me know.

Daily Sequence today (game #1012) – the answers

The answers to today’s Quordle Daily Sequence, game #1012, are…

Quordle answers: The past 20

- Quordle #1011, Thursday 31 October: TWINE, RIGID, BELCH, AMEND

- Quordle #1010, Wednesday 30 October: SLOOP, BRINE, BROOD, FLUID

- Quordle #1009, Tuesday 29 October: CLIFF, BURNT, SNAKY, POLYP

- Quordle #1008, Monday 28 October: MACAW, LIEGE, GOUGE, CARGO

- Quordle #1007, Sunday 27 October: STUNG, CLOUT, SOWER, BASIS

- Quordle #1006, Saturday 26 October: DUCHY, CANNY, BLOCK, SMART

- Quordle #1005, Friday 25 October: PRANK, EXIST, RUDDY, PICKY

- Quordle #1004, Thursday 24 October: DAIRY, RALLY, CURLY, LABEL

- Quordle #1003, Wednesday 23 October: DROSS, ANNEX, GRAVE, BROKE

- Quordle #1002, Tuesday 22 October: ADORE, SMITH, AFOOT, LUCID

- Quordle #1001, Monday 21 October: TREAD, NINTH, GRIEF, UNSET

- Quordle #1000, Sunday 20 October: CORAL, WHOSE, HEIST, SOAPY

- Quordle #999, Saturday 19 October: GUSTY, BROKE, ENJOY, HAZEL

- Quordle #998, Friday 18 October: PUPIL, MOCHA, EGRET, NATAL

- Quordle #997, Thursday 17 October: BUILD, BIRTH, LURCH, SASSY

- Quordle #996, Wednesday 16 October: EERIE, SMIRK, HUNCH, EMBED

- Quordle #995, Tuesday 15 October: UMBRA, BRIEF, GRAVY, TORUS

- Quordle #994, Monday 14 October: ROGUE, STORY, EMCEE, AUNTY

- Quordle #993, Sunday 13 October: UNFIT, NYMPH, THUMB, PUREE

- Quordle #992, Saturday 12 October: SAUCY, UNDUE, EGRET, HELLO
