Technology

Artificial intelligence

About AI: A brief introduction

Artificial intelligence (AI) might sound like something from a science fiction movie in which robots are ready to take over the world. While such robots are purely fixtures of science fiction (at least for now), AI is already part of our daily lives, whether we know it or not.

Think of your Google inbox: Some of the emails you receive end up in your spam folder, while others are marked as ‘social’ or ‘promotion’. How does this happen? Google uses AI algorithms to automatically filter and sort emails by category. These algorithms can be seen as small programs that are trained to recognise certain elements within an email that make it likely to be a spam message, for example. When the algorithm identifies one or several of those elements, it marks the email as spam and sends it to your spam folder. Of course, algorithms do not work perfectly, but they are continuously improved. When you find a legitimate email in your spam folder, you can tell Google that it was wrongly marked as spam. Google uses that information to improve how its algorithms work.
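The filter-and-feedback loop described above can be sketched in a few lines of Python. This is only a toy illustration and not how Google's filters actually work: the word-counting rule, the function names `train` and `looks_like_spam`, and the sample messages are all assumptions made for the example.

```python
from collections import Counter

def train(examples):
    """Count how often each word appears in spam and in legitimate mail."""
    spam_words, ham_words = Counter(), Counter()
    for text, is_spam in examples:
        target = spam_words if is_spam else ham_words
        target.update(text.lower().split())
    return spam_words, ham_words

def looks_like_spam(text, spam_words, ham_words):
    """Flag a message whose words were seen more often in spam than in legitimate mail."""
    words = text.lower().split()
    spam_score = sum(spam_words[w] for w in words)
    ham_score = sum(ham_words[w] for w in words)
    return spam_score > ham_score

# Hypothetical training messages: (text, is_spam)
examples = [
    ("win a free prize now", True),
    ("claim your free money", True),
    ("meeting agenda for monday", False),
    ("notes on monday", False),
]
spam_words, ham_words = train(examples)

message = "free lunch for the team"
before = looks_like_spam(message, spam_words, ham_words)

# The user reports the message as wrongly marked, so it is added
# to the training data as legitimate mail and the filter is retrained.
examples.append((message, False))
spam_words, ham_words = train(examples)
after = looks_like_spam(message, spam_words, ham_words)

print(before, after)
```

In this sketch the word ‘free’ initially pushes the message into spam; once the user's correction is included in the training data, the same message is treated as legitimate.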

AI is widely used in internet services: Search engines use AI to provide better search results; social media platforms rely on AI to automatically detect hate speech and other forms of harmful content; and online stores use AI to suggest products you are likely to be interested in based on your previous shopping habits. More complex forms of AI are used in manufacturing, transportation, agriculture, healthcare, and many other areas. Self-driving cars, programs able to recognise certain medical conditions with the accuracy of a doctor, systems developed to track and predict the impact of weather conditions on crops – they all rely on AI technologies.

As the name suggests, AI systems are embedded with some level of ‘intelligence’ which makes them capable of performing certain tasks or replicating certain specific behaviours that normally require human intelligence. What makes them ‘intelligent’ is a combination of data and algorithms. Let’s look at an example which involves a technique called machine learning. Imagine a program able to recognise cars among millions of images. First of all, that program is fed a large number of car images. Algorithms then ‘study’ those images to discover patterns, and in particular the specific elements that characterise the image of a car. Through machine learning, algorithms ‘learn’ what a car looks like. Later on, when they are presented with millions of different images, they are able to identify the images that contain a car. This is, of course, a simplified example – there are far more complex AI systems out there. But basically all of them involve some level of initial training data and an algorithm which learns from that data in order to be able to perform a task.
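To make the ‘training data plus algorithm’ idea concrete, here is a minimal Python sketch of learning from labelled examples. It is a deliberate simplification: real image recognition systems typically learn from raw pixels with neural networks, whereas this example assumes each image has already been reduced to two made-up numbers (a count of wheel-like shapes and a ‘boxiness’ score) and uses a simple nearest-centroid rule. All names and values are hypothetical.

```python
def centroid(vectors):
    """Average a list of equal-length feature vectors."""
    n = len(vectors)
    return tuple(sum(v[i] for v in vectors) / n for i in range(len(vectors[0])))

def train(labelled):
    """'Learn' one typical feature vector (centroid) per label."""
    by_label = {}
    for features, label in labelled:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(vs) for label, vs in by_label.items()}

def predict(model, features):
    """Return the label whose centroid is closest to the new example."""
    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(model, key=lambda label: dist2(model[label], features))

# Hypothetical training data: (wheel-like shapes, boxiness) -> label
training = [
    ((4.0, 0.9), "car"),
    ((4.0, 0.8), "car"),
    ((3.0, 0.7), "car"),      # one wheel hidden from view
    ((0.0, 0.2), "not car"),
    ((2.0, 0.1), "not car"),  # e.g. a bicycle
    ((0.0, 0.4), "not car"),
]
model = train(training)

print(predict(model, (4.0, 0.7)))
print(predict(model, (1.0, 0.3)))
```

The training step corresponds to the algorithm ‘studying’ the example images; the prediction step corresponds to recognising cars in images it has not seen before.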

Some AI systems go beyond this, by being able to learn from themselves and improve themselves. One famous example is DeepMind’s AlphaGo Zero: The program initially only knows the rules of the Go game; however, it then plays the game with itself and learns from its successes and failures to become better and better.

Going back to where we started: Is AI really able to match human intelligence? In specific cases – like playing the game of Go – the answer is ‘yes’. That being said, what has been coined as ‘artificial general intelligence’ (AGI) – advanced AI systems that can replicate human intellectual capabilities in order to perform complex and combined tasks – does not yet exist. Experts have divided opinions on whether AGI is something we will see in the near future, but it is certain that scientists and tech companies will continue to develop more and more complex AI systems.

The policy implications of AI

Applying AI for social good is a principle that many tech companies have adhered to. They see AI as a tool that can help address some of the world’s most pressing problems, in areas such as climate change and disease eradication. The technology and its many applications certainly carry significant potential for good, but there are also risks. Accordingly, the policy implications of AI advancements are far‐reaching. While AI can generate economic growth, there are growing concerns over the significant disruptions it could bring to the labour market. Issues related to privacy, safety, and security are also in focus.

As innovations in the field continue, more and more AI standards and AI governance frameworks are being developed to help ensure that AI applications have minimal unintended consequences.

Social and economic

AI has significant potential to stimulate economic growth and contribute to sustainable development. But it also comes with disruptions and challenges.

Safety and security

AI applications bring into focus issues related to cybersecurity (from cybersecurity risks specific to AI systems to AI applications in cybersecurity), human safety, and national security.

Human rights

The uptake of AI raises profound implications for privacy and data protection, freedom of expression, freedom of assembly, non-discrimination, and other human rights and freedoms.

Ethical concerns

The involvement of AI algorithms in judgments and decision-making gives rise to concerns about ethics, fairness, justice, transparency, and accountability.

Governing AI

When debates on AI governance first emerged, one overarching question was whether AI-related challenges (in areas such as safety, privacy, and ethics) call for new legal and regulatory frameworks, or whether existing ones could be adapted to also cover AI.

Applying and adapting existing regulation was seen by many as the most suitable approach. But as AI innovation accelerated and applications became more and more pervasive, AI-specific governance and regulatory initiatives started emerging at national, regional, and international levels.

US Blueprint for an AI Bill of Rights

The Blueprint for an AI Bill of Rights is a guide for a society that protects people from AI threats and uses technologies in ways that reinforce our highest values. Responding to the experiences of the American public, and informed by insights from researchers, technologists, advocates, journalists, and policymakers, this framework is accompanied by From Principles to Practice—a handbook for anyone seeking to incorporate these protections into policy and practice, including detailed steps toward actualising these principles in the technological design process.

China’s Interim Measures for Generative Artificial Intelligence

Released in July 2023 and applicable starting 15 August 2023, the measures apply to ‘the use of generative AI to provide services for generating text, pictures, audio, video, and other content to the public in the People’s Republic of China’. The regulation covers issues related to intellectual property rights, data protection, transparency, and data labelling, among others.

EU’s AI Act

Proposed by the European Commission in April 2021, the EU AI Act was formally adopted by the Council of the EU on 21 May 2024, and entered into force on 1 August of the same year. The AI regulation introduces a risk-based regulatory approach for AI systems: if an AI system poses unacceptable risks, it is banned; if an AI system comes with high risks (for instance, the use of AI in performing surgeries), it will be strictly regulated; if an AI system only involves limited risks, focus is placed on ensuring transparency for end users.

UNESCO Recommendation on AI Ethics

Adopted by UNESCO member states in November 2021, the recommendation outlines a series of values, principles, and actions to guide states in the formulation of their legislation, policies, and other instruments regarding AI. For instance, the document calls for action to guarantee individuals more privacy and data protection, by ensuring transparency, agency, and control over their personal data. Explicit bans on the use of AI systems for social scoring and mass surveillance are also highlighted, and there are provisions for ensuring that real-world biases are not replicated online.

OECD Recommendation on AI

Adopted by the OECD Council in May 2019, the recommendation encourages countries to promote and implement a series of principles for responsible stewardship of trustworthy AI, from inclusive growth and human-centred values to transparency, security, and accountability. Governments are further encouraged to invest in AI research and development, foster digital ecosystems for AI, shape enabling policy environments, build human capacities, and engage in international cooperation for trustworthy AI.

Council of Europe work on a Convention on AI and human rights

In 2021 the Committee of Ministers of the Council of Europe (CoE) approved the creation of a Committee on Artificial Intelligence (CAI) tasked with elaborating a legal instrument on the development, design, and application of AI systems based on the CoE’s standards on human rights, democracy and the rule of law, and conducive to innovation. On 17 May 2024, the Committee of Ministers adopted the Framework Convention on AI, Human Rights, Democracy and the Rule of Law. The Convention will be opened for signature on 5 September 2024.

Group of Governmental Experts on Lethal Autonomous Weapons Systems

Within the UN System, the High Contracting Parties to the Convention on Certain Conventional Weapons (CCW) established a Group of Governmental Experts on emerging technologies in the area of lethal autonomous weapons systems (LAWS) to explore the technical, military, legal, and ethical implications of LAWS. The group has been convened on an annual basis since its creation. In 2019, it agreed on a series of Guiding principles, which, among other issues, confirmed the application of international humanitarian law to the potential development and use of LAWS, and highlighted that human responsibility must be retained for decisions on the use of weapons systems.

Global Partnership on Artificial Intelligence

Launched in June 2020 and counting 29 members in 2024, the Global Partnership on Artificial Intelligence (GPAI) is a multistakeholder initiative dedicated to ‘sharing multidisciplinary research and identifying key issues among AI practitioners, with the objective of facilitating international collaboration, reducing duplication, acting as a global reference point for specific AI issues, and ultimately promoting trust in and the adoption of trustworthy AI’.

African Union (AU) Continental AI Strategy

Adopted by the African Union Executive Council on 18–19 July 2024, the AI Strategy advocates for unified national approaches among AU member states to navigate the complexities of AI-driven transformation. It seeks to enhance regional and global cooperation, positioning Africa as a leader in inclusive and responsible AI development. The Continental AI Strategy emphasises a people-centric, development-oriented, and inclusive approach, structured around five key focus areas and fifteen policy recommendations.

National AI strategies

As AI technologies continue to evolve at a fast pace and have more and more applications in various areas, countries are increasingly aware that they need to keep up with this evolution and to take advantage of it. Many are developing national AI development strategies, as well as addressing the economic, social, and ethical implications of AI advancements. China, for example, released a national AI development plan in 2017, intended to help make the country the world leader in AI by 2030 and build a national AI industry worth US$150 billion. In the United Arab Emirates (UAE), the adoption of a national AI strategy was complemented by the appointment of a State Minister for AI to work on ‘making the UAE the world’s best prepared [country] for AI and other advanced technologies’. Canada, France, Germany and Mauritius were among the first countries to launch national AI strategies. These are only a few examples; there are many more countries that have adopted or are working on such plans and strategies.

Last updated: June 2024

AI on the international level

The Council of Europe, the EU, the OECD, and UNESCO are not the only international spaces where AI-related issues are discussed; the technology and its policy implications now feature on the agenda of a wide range of international organisations and processes. Technical standards for AI are being developed at ITU, the ISO, the IEC, and other standard-setting bodies. ITU is also hosting an annual AI for Good summit exploring the use of AI to accelerate progress towards sustainable development. UNICEF has begun working on using AI to realise and uphold children’s rights, while the International Labour Organization (ILO) is looking at the impact of AI automation on the world of work. The World Intellectual Property Organization (WIPO) is discussing intellectual property issues related to the development of AI, the World Health Organization (WHO) looks at the applications and implications of AI in healthcare, and the World Meteorological Organization (WMO) has been using AI in weather forecasting, natural hazard management, and disaster risk reduction.

As discussions on digital cooperation have advanced at the UN level, AI has been one of the topics addressed within this framework. The 2019 report of the UN High-Level Panel on Digital Cooperation tackles issues such as the impact of AI on labour markets, AI and human rights, and the impact of the misuse of AI on trust and social cohesion. The UN Secretary-General’s Roadmap on Digital Cooperation, issued in 2020, identifies gaps in international coordination, cooperation, and governance when it comes to AI. The Our Common Agenda report released by the Secretary-General in 2021 proposes the development of a Global Digital Compact (with principles for ‘an open, free and secure digital future for all’) which could, among other elements, promote the regulation of AI ‘to ensure that it is aligned with shared global values’.

AI and its governance dimensions have featured high on the agenda of bilateral and multilateral processes such as the EU-US Trade and Technology Council, G7, G20, and BRICS. Regional organisations such as the African Union (AU), the Association of Southeast Asian Nations (ASEAN), and the Organization of American States (OAS) are also paying increasing attention to leveraging the potential of AI for economic growth and sustainable development.

In recent years, annual meetings of the Internet Governance Forum (IGF) have featured AI among their main themes.

More on the policy implications of AI

The economic and social implications of AI

AI has significant potential to stimulate economic growth. In production processes, AI systems increase automation and make processes smarter, faster, and cheaper, and therefore bring savings and increased efficiency. AI can improve the efficiency and the quality of existing products and services, and can also generate new ones, thus leading to the creation of new markets. It is estimated that the AI industry could contribute up to US$15.7 trillion to the global economy by 2030. Beyond the economic potential, AI can also contribute to achieving the sustainable development goals (SDGs); for instance, AI can be used to detect water service lines containing hazardous substances (SDG 6 – clean water and sanitation), to optimise the supply and consumption of energy (SDG 7 – affordable and clean energy), and to analyse climate change data and generate climate modelling, helping to predict and prepare for disasters (SDG 13 – climate action). Across the private sector, companies have been launching programmes dedicated to fostering the role of AI in achieving sustainable development. Examples include IBM’s Science for Social Good, Google’s AI for Social Good, and Microsoft’s AI for Good projects.

For this potential to be fully realised, there is a need to ensure that the economic benefits of AI are broadly shared at a societal level, and that the possible negative implications are adequately addressed. The 2022 edition of the Government AI Readiness Index warns that ‘care needs to be taken to make sure that AI systems don’t just entrench old inequalities or disenfranchise people. In a global recession, these risks are evermore important.’ One significant risk is that of a new form of global digital divide, in which some countries reap the benefits of AI, while others are left behind. Estimates for 2030 show that North America and China will likely experience the largest economic gains from AI, while developing countries – with lower rates of AI adoption – will register only modest economic increases.

The disruptions that AI systems could bring to the labour market are another source of concern. Many studies estimate that automated systems will make some jobs obsolete and lead to unemployment. Such concerns have led to discussions about the introduction of a ‘universal basic income’ that would compensate individuals for disruptions brought to the labour market by robots and other AI systems. There are, however, also opposing views, according to which AI advancements will generate new jobs, which will compensate for those lost without affecting the overall employment rates. One point on which there is broad agreement is the need to better adapt education and training systems to the new requirements of the jobs market. This entails not only preparing the new generations, but also allowing the current workforce to re-skill and up-skill itself.

AI, safety, and security

AI applications in the physical world (e.g. in transportation) bring into focus issues related to human safety, and the need to design systems that can properly react to unforeseen situations with minimal unintended consequences. Beyond self-driving cars, the (potential) development of other autonomous systems – such as lethal autonomous weapons systems – has sparked additional and intense debates on their implications for human safety.

AI also has implications in the cybersecurity field. In addition to the cybersecurity risks associated with AI systems (e.g. as AI is increasingly embedded in critical systems, these systems need to be secured against potential cyberattacks), the technology has a dual function: it can be used as a tool to both commit and prevent cybercrime and other forms of cyberattacks. As the possibility of using AI to assist in cyberattacks grows, so does the integration of this technology into cybersecurity strategies. The same characteristics that make AI a powerful tool to perpetrate attacks also help to defend against them, raising hopes for levelling the playing field between attackers and cybersecurity experts.

Going a step further, AI is also looked at from the perspective of national security. The US Intelligence Community, for example, has included AI among the areas that could generate national security concerns, especially due to its potential applications in warfare and cyber defense, and its implications for national economic competitiveness.

AI and human rights

AI systems work with enormous amounts of data, and this raises concerns regarding privacy and data protection. Online services such as social media platforms, e-commerce stores, and multimedia content providers collect information about users’ online habits, and use AI techniques such as machine learning to analyse the data and to ‘improve the user’s experience’ (for example, Netflix suggests movies you might want to watch based on movies you have already seen). AI-powered products such as smart speakers also involve the processing of user data, some of it of a personal nature. Facial recognition technologies embedded in public street cameras have direct privacy implications.

How is all of this data processed? Who has access to it and under what conditions? Are users even aware that their data is extensively used? These are only some of the questions generated by the increased use of personal data in the context of AI applications. What solutions are there to ensure that AI advancements do not come at the expense of user privacy? Strong privacy and data protection regulations (including in terms of enforcement), enhanced transparency and accountability for tech companies, and embedding privacy and data protection guarantees into AI applications during the design phase are some possible answers.

Algorithms, which power AI systems, could also have consequences for other human rights. For example, AI tools aimed at automatically detecting and removing hate speech from online platforms could negatively affect freedom of expression: Even when such tools are trained on significant amounts of data, the algorithms could wrongly identify a text as hate speech. Complex algorithms and human-biased big data sets can serve to reinforce and amplify discrimination, especially against those who are disadvantaged.

Ethical concerns

As AI algorithms involve judgements and decision-making – replicating similar human processes – concerns are being raised regarding ethics, fairness, justice, transparency, and accountability. The risk of discrimination and bias in decisions made by or with the help of AI systems is one such concern, as illustrated in the debate over facial recognition technology (FRT). Several studies have shown that FRT programs present racial and gender biases, as the algorithms involved are largely trained on photos of males and white people. If law enforcement agencies rely on such technologies, this could lead to biased and discriminatory decisions, including false arrests.

One way of addressing concerns over AI ethics could be to combine ethical training for technologists (encouraging them to prioritise ethical considerations when creating AI systems) with the development of technical methods for designing AI systems in a way that they can avoid such risks (i.e. fairness, transparency, and accountability by design). The Institute of Electrical and Electronics Engineers’ Global Initiative for Ethical Considerations in Artificial Intelligence and Autonomous Systems is one example of initiatives that are aimed at ensuring that technologists are educated, trained, and empowered to prioritise ethical considerations in the design and development of intelligent systems.

Researchers are carefully exploring the ethical challenges posed by AI and are working, for example, on the development of AI algorithms that can ‘explain themselves’. Being able to better understand how an algorithm makes a certain decision could also help improve that algorithm.

AI and other digital technologies and infrastructures

Telecom infrastructure

AI is used to optimise network performance, conduct predictive maintenance, dynamically allocate network resources, and improve customer experience, among others.

Internet of things

The interplay between AI and IoT can be seen in multiple applications, from smart home devices and vehicle autopilot systems to drones and smart cities applications.

Semiconductors

AI algorithms are used in the design of chips, for improved performance and power efficiency, for instance. And then semiconductors themselves are used in AI hardware and research.

Quantum computing

Although largely still a field of research, quantum computing promises enhanced computational power which, coupled with AI, can help address complex problems.

Other advanced technologies

AI techniques are increasingly used in the research and development of other emerging and advanced technologies, from 3D printing and virtual reality, to biotechnology and synthetic biology.

Science & Environment

Discovery of “hobbit” fossils suggests tiny humans roamed Indonesian islands 700,000 years ago

Twenty years ago on an Indonesian island, scientists discovered fossils of an early human species that stood at about 3 1/2 feet tall, earning them the nickname “hobbits.”

Now a new study suggests ancestors of the hobbits were even slightly shorter.

“We did not expect that we would find smaller individuals from such an old site,” said Yousuke Kaifu, co-author of the study, which was published on Tuesday in the journal Nature.

The original hobbit fossils date back to between 60,000 and 100,000 years ago. The new fossils were excavated at a site called Mata Menge, about 45 miles from the cave where the first hobbit remains were uncovered. The fossils were found on the top of a ribbon-shaped, pebbly sandstone layer in a small stream. They included exceptionally small teeth that possibly came from two individuals, researchers said.

In 2016, researchers suspected the earlier relatives could be shorter than the hobbits after studying a jawbone and teeth collected from the new site. Further analysis of a tiny arm bone fragment and teeth suggests the ancestors were a mere 2.4 inches shorter and existed 700,000 years ago.

“They’ve convincingly shown that these were very small individuals,” said Dean Falk, an evolutionary anthropologist at Florida State University who was not involved with the research.

Researchers have debated how the hobbits — named Homo floresiensis after the remote Indonesian island of Flores — evolved to be so small and where they fall in the human evolutionary story. They’re thought to be among the last early human species to go extinct.

Scientists don’t yet know whether the hobbits shrank from an earlier, taller human species called Homo erectus that lived in the area, or from an even more primitive human predecessor. More research — and fossils — are needed to pin down the hobbits’ place in human evolution, said Matt Tocheri, an anthropologist at Canada’s Lakehead University.

“This question remains unanswered and will continue to be a focus of research for some time to come,” Tocheri, who was not involved with the research, said in an email.

Technology

1X releases generative world models to train robots

Robotics startup 1X Technologies has developed a new generative model that can make it much more efficient to train robotics systems in simulation. The model, which the company announced in a new blog post, addresses one of the important challenges of robotics, which is learning “world models” that can predict how the world changes in response to a robot’s actions.

Given the costs and risks of training robots directly in physical environments, roboticists usually use simulated environments to train their control models before deploying them in the real world. However, the differences between the simulation and the physical environment cause challenges.

“Roboticists typically hand-author scenes that are a ‘digital twin’ of the real world and use rigid body simulators like Mujoco, Bullet, Isaac to simulate their dynamics,” Eric Jang, VP of AI at 1X Technologies, told VentureBeat. “However, the digital twin may have physics and geometric inaccuracies that lead to training on one environment and deploying on a different one, which causes the ‘sim2real gap.’ For example, the door model you download from the Internet is unlikely to have the same spring stiffness in the handle as the actual door you are testing the robot on.”

Generative world models

To bridge this gap, 1X’s new model learns to simulate the real world by being trained on raw sensor data collected directly from the robots. By viewing thousands of hours of video and actuator data collected from the company’s own robots, the model can look at the current observation of the world and predict what will happen if the robot takes certain actions.
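The core idea of a learned world model, predicting the next observation from the current observation and an action, can be illustrated with a heavily simplified Python sketch. This is not 1X's model, which is a generative video model trained on raw sensor data; the example below assumes a one-dimensional "robot position", a made-up log of (state, action, next state) samples, and a linear model fitted by least squares. The names `fit_world_model` and `rollout` are hypothetical.

```python
def fit_world_model(log):
    """Fit next_state ~ a * state + b * action by least squares (normal equations)."""
    sxx = sum(s * s for s, u, y in log)
    sxu = sum(s * u for s, u, y in log)
    suu = sum(u * u for s, u, y in log)
    sxy = sum(s * y for s, u, y in log)
    suy = sum(u * y for s, u, y in log)
    det = sxx * suu - sxu * sxu
    a = (sxy * suu - sxu * suy) / det
    b = (sxx * suy - sxu * sxy) / det
    return a, b

def rollout(model, state, actions):
    """Predict what happens if the robot takes a sequence of actions."""
    a, b = model
    trajectory = []
    for action in actions:
        state = a * state + b * action
        trajectory.append(state)
    return trajectory

# Hypothetical logged interaction data: (state, action, observed next state).
# The data follows a rule the model is never told: next = state + 0.5 * action.
log = [
    (0.0, 1.0, 0.5),
    (0.5, 1.0, 1.0),
    (1.0, -1.0, 0.5),
    (0.5, 2.0, 1.5),
    (1.5, 0.0, 1.5),
]
model = fit_world_model(log)

# Simulate a plan entirely inside the learned model, without touching a real robot.
predicted = rollout(model, 2.0, [1.0, 1.0, -2.0])
print(model, predicted)
```

As in the approach described above, the dynamics are recovered from logged data alone rather than from a hand-written physics simulator, and refitting on fresh logs would update the model.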

The data was collected from EVE humanoid robots doing diverse mobile manipulation tasks in homes and offices and interacting with people.

“We collected all of the data at our various 1X offices, and have a team of Android Operators who help with annotating and filtering the data,” Jang said. “By learning a simulator directly from the real data, the dynamics should more closely match the real world as the amount of interaction data increases.”

The learned world model is especially useful for simulating object interactions. The videos shared by the company show the model successfully predicting video sequences where the robot grasps boxes. The model can also predict “non-trivial object interactions like rigid bodies, effects of dropping objects, partial observability, deformable objects (curtains, laundry), and articulated objects (doors, drawers, curtains, chairs),” according to 1X.

Some of the videos show the model simulating complex long-horizon tasks with deformable objects such as folding shirts. The model also simulates the dynamics of the environment, such as how to avoid obstacles and keep a safe distance from people.

Challenges of generative models

Changes to the environment will remain a challenge. Like all simulators, the generative model will need to be updated as the environments where the robot operates change. The researchers believe that the way the model learns to simulate the world will make it easier to update it.

“The generative model itself might have a sim2real gap if its training data is stale,” Jang said. “But the idea is that because it is a completely learned simulator, feeding fresh data from the real world will fix the model without requiring hand-tuning a physics simulator.”

1X’s new system is inspired by innovations such as OpenAI Sora and Runway, which have shown that with the right training data and techniques, generative models can learn some kind of world model and remain consistent through time.

However, while those models are designed to generate videos from text, 1X’s new model is part of a trend of generative systems that can react to actions during the generation phase. For example, researchers at Google recently used a similar technique to train a generative model that could simulate the game DOOM. Interactive generative models can open up numerous possibilities for training robotics control models and reinforcement learning systems.

However, some of the challenges inherent to generative models are still evident in the system presented by 1X. Since the model is not powered by an explicitly defined world simulator, it can sometimes generate unrealistic situations. In the examples shared by 1X, the model sometimes fails to predict that an object will fall down if it is left hanging in the air. In other cases, an object might disappear from one frame to another. Dealing with these challenges still requires extensive efforts.

One solution is to continue gathering more data and training better models. “We’ve seen dramatic progress in generative video modeling over the last couple of years, and results like OpenAI Sora suggest that scaling data and compute can go quite far,” Jang said.

At the same time, 1X is encouraging the community to get involved in the effort by releasing its models and weights. The company will also be launching competitions to improve the models with monetary prizes going to the winners.

“We’re actively investigating multiple methods for world modeling and video generation,” Jang said.

Science & Environment

NASA outlines backup plan to get Starliner crew back to Earth from International Space Station if Boeing ship can’t bring them home

As NASA debates the safety of Boeing’s Starliner spacecraft in the wake of multiple helium leaks and thruster issues, the agency is “getting more serious” about a backup plan to bring the ship’s two crew members back to Earth aboard a SpaceX Crew Dragon, officials said Wednesday.

In that case — and no final decisions have been made — Starliner commander Barry “Butch” Wilmore and co-pilot Sunita Williams would remain aboard the International Space Station for another six months and come down on a Crew Dragon that’s scheduled for launch Sept. 24 to carry long-duration crew members to the outpost.

Two of the four “Crew 9” astronauts already assigned to the Crew Dragon flight would be bumped from the mission and the ship would be launched with two empty seats. Wilmore and Williams then would return to Earth next February with the two Crew 9 astronauts.

Shortly before the Crew Dragon launch, the Starliner would undock from the station’s forward port and return to Earth under computer control, without any astronauts aboard. The Crew Dragon then would dock at the vacated forward port.

Two earlier Starliner test flights were flown without crews and both landed successfully. The current Starliner’s computer system would need to be updated with fresh data files, and flight controllers would need to brush up on the procedures, but that work can be done in time to support a mid-September return.

If that scenario plays out, Wilmore and Williams would end up spending 268 days — 8.8 months — in space instead of the week or so they planned when they blasted off atop a United Launch Alliance Atlas 5 rocket on June 5.

Based on uncertainty about the precise cause of the thruster problems, “I would say that our chances of an uncrewed Starliner return have increased a little bit based on where things have gone over the last week or two,” said Ken Bowersox, NASA’s director of space operations.

“That’s why we’re looking more closely at that option to make sure that we can handle it.”

But he cautioned that no final decisions will be made on when — or how — to bring the Starliner crew home until the agency completes a top-level flight readiness review.

No date has been set, but it could happen by late next week or the week after.

“Our prime option is to return Butch and Suni on Starliner,” said Steve Stich, manager of NASA’s Commercial Crew Program. “However, we have done the requisite planning to make sure we have other options open. We have been working with SpaceX to ensure that they’re ready to (return) Butch and Suni on Crew 9 if we need that.

“Now, we haven’t approved this plan (yet). We’ve done all the work to make sure this plan is there … but we have not turned that on formally. We wanted to make sure we had all that flexibility in place.”

Before the Starliner was launched, NASA and Boeing engineers knew about a small helium leak in the spacecraft’s propulsion system. After ground tests and analysis, the team concluded the ship could be safely launched as is.

The day after launch, however, four more helium leaks developed and five aft-facing maneuvering thrusters failed to operate as expected. Ever since, NASA and Boeing have been carrying out data reviews and ground tests in an effort to understand exactly what caused both issues.

The Starliner uses pressurized helium to push propellants to the thrusters, which are critical to keeping the spacecraft properly oriented. That’s especially important during the de-orbit braking “burn” using larger rocket engines to slow the ship down for re-entry and an on-target landing.

To clear the Starliner for a piloted return to Earth, engineers must develop acceptable “flight rationale” based on test data and analyses that provides confidence the ship can make it through re-entry and landing with the required level of safety.

“The Boeing team (is) very confident that the vehicle could bring the crew home right now with the uncertainty we’ve got,” said Bowersox. “But we’ve got other folks that are probably a little more conservative. They’re worried that we don’t know for sure, so they estimate the risk higher and they would recommend that we avoid coming home (on Starliner) because we have another option.

“So that’s a part of the discussion that we’re having right now. But again, I think both views are reasonable with the uncertainty band that we’ve got, and so our effort is trying to reduce that uncertainty.”

Boeing adamantly argues the Crew Dragon backup plan isn’t needed and that tests and analyses of helium leaks in the Starliner’s propulsion system and initial trouble with maneuvering thrusters show the spacecraft has more than enough margin to bring Wilmore and Williams safely back to Earth.

The helium leaks are understood, Boeing says: they have not gotten worse, and more than enough of the pressurized gas is on board to push propellants to the thrusters needed to maneuver and stabilize the spacecraft through the critical de-orbit braking burn for re-entry and landing.

Likewise, engineers believe they now understand what caused a handful of aft-facing maneuvering jets to overheat and fire at lower-than-expected thrust during rendezvous with the space station, causing the Starliner’s flight computer to shut them down during approach.

Ground tests of a new Starliner thruster, fired hundreds of times under conditions that mimicked what those aboard the spacecraft experienced, replicated the overheating signature, which was likely caused by multiple firings during tests of the capsule’s manual control system during extended exposure to direct sunlight.

The higher-than-expected heating likely caused small seals in thruster valve “poppets” to deform and expand, the analysis indicates, which reduced the flow of propellant. The thrusters aboard the Starliner were test fired in space under more normal conditions and all operated properly, indicating the seals had returned to a less intrusive shape.

New procedures are in place to prevent the overheating that occurred during the rendezvous. Additional manual test firings have been ruled out, no extended exposure to the sun is planned and less frequent firings are required for station departure compared to rendezvous.

William Harwood/CBS News

In a statement Wednesday, Boeing said, “We still believe in Starliner’s capability and its flight rationale. If NASA decides to change the mission, we will take the actions necessary to configure Starliner for an uncrewed return.”

The helium plumbing and thrusters are housed in the Starliner’s service module, which will be jettisoned to burn up in the atmosphere before the crew capsule re-enters for landing. As such, engineers will never be able to examine the hardware first hand to prove, with certainty, what went wrong.

At this point, that uncertainty appears to support bringing Wilmore and Williams back to Earth aboard the Crew Dragon. But it’s not yet a certainty.

“If we could replicate the physics in some offline testing to understand why this poppet is heating up and extruding and then why it’s contracting, that would give us additional confidence to move forward, to return Butch and Suni on this vehicle,” Stich said.

“That’s what the team is really striving to do, to try to look at all the data and see if we can get a good physical explanation of what’s happening.”

In the meantime, the wait for a decision, one way or the other, drags on.

“In the end, somebody – some one person – designated to be the decision maker, that person has to come to a conclusion,” Wayne Hale, a former shuttle flight director and program manager, wrote in a blog post earlier this week.

“The engineers will always always always ask for more tests, more analysis, more time to get more information to be more certain of their conclusions. The decider also has to decide when enough has been done. The rub in all of this … is that it always involves the risk to human life.”

Hale concluded his post by saying: “I do not envy today’s decision makers, the ones weighing flight rationale. My only advice is to listen thoroughly, question effectively, ask for more data when necessary. But when it is time, a decision must be made.”

Technology

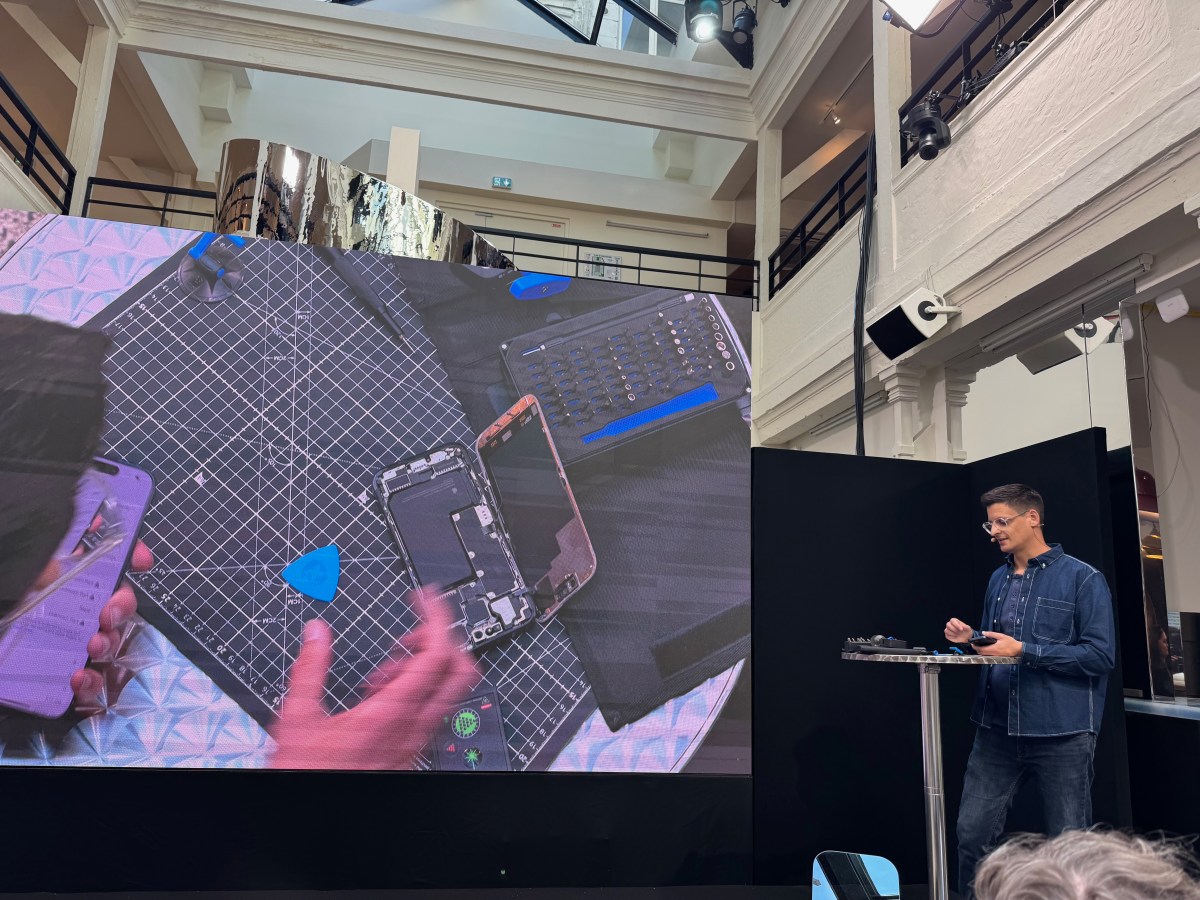

Back Market lays out its plan to make refurbished phones go mainstream

Back Market held a press conference on Thursday morning in Paris to talk about upcoming product launches and give an update on the company’s current situation. If you’re not familiar with the French startup, it operates a marketplace for refurbished electronic devices, mostly smartphones. It has attracted a lot of investor cash in recent years but has also been through tougher times.

In 2021, just like many large tech companies, Back Market rode the wave of zero-interest rate policies around the world and raised an enormous amount of money: a $335 million Series D round was followed by another $510 million Series E round mere months later.

After reaching a valuation of $5.7 billion, Back Market realized that the economy was slowing down. It conducted a small round of layoffs in late 2022, telling French newspaper Les Échos it was “the best way to achieve profitability in the coming years.”

Fast-forward to Thursday’s press conference and the company was keen to demonstrate its focus is back on product launches and new projects. Back Market said it wants to find new distribution channels and go premium so that more people think about buying a refurbished device instead of a new one.

Finding customers where they are already

Over the past 10 years, Back Market hasn’t just captured a decent chunk of the secondhand electronics market, it has expanded the market for refurbished smartphones. The pitch is simple: A refurbished device is cheaper than a new one and it’s also better for the planet. Moreover, when it comes to smartphones, it has become much harder to define why this year’s model is better than last year’s — so why shell out lots of money buying new to get only an incremental upgrade?

The company doesn’t handle smartphones and other electronic devices directly. Instead, it partners with 1,800 companies that repair and resell old devices. So it’s essentially a specialized services marketplace. Since its inception, it has sold 30 million refurbished devices to 15 million customers.

Most Back Market customers buy devices on its website or through its mobile app. But the company has recognized it’s sometimes constrained by its partners’ inventory. This is why it wants to expand supply and demand with strategic partnerships.

For instance, it’s partnering with Sony for PlayStation consoles. “A lot of people are coming to Back Market to try and purchase their PlayStation,” said co-founder and CEO Thibaud Hug de Larauze. But the issue is that Back Market is constrained when it comes to supplies for this type of device.

While many people think about smartphone trade-ins, most people don’t think about selling their old consoles. “With this partnership with PlayStation by Sony, we are the only partner to trade in every PlayStation within Sony’s website, within the Sony PlayStation store,” he noted.

As a result, people buying a new PlayStation get a discount with trade-ins at checkout and Back Market is no longer out of stock for old PlayStation consoles. This is a good example of what Back Market has in mind for future partnerships.

“This is one of the first [partnerships of this kind] but we really want to bring it everywhere where customers are actually shopping new. We want to get them where they are, in order to get their old tech — in order to serve it to people who want access to refurbished tech,” Hug de Larauze added.

On the smartphone front, trade-ins are already quite popular. However, customers visiting a phone store usually end up buying a new device along with a long-term plan.

Back Market is going to partner with telecom companies so that customers can also get a discount on refurbished devices in exchange for a long-term plan. The first two partners for this are Bouygues Telecom in France and Visible, a subsidiary of Verizon Wireless in the U.S.

A new premium tier with official parts

Quality remains the main concern when it comes to buying refurbished devices. In addition to allowing returns, the company is constantly tracking the rate of faulty devices on its platform and trying to bring that number down. Back Market now has a defective rate of 4%, meaning that one in every 25 phones doesn’t work as expected in one way or another.

When customers buy a smartphone on Back Market, they can choose between a device in “fair,” “good,” or “excellent” condition. The company has now rolled out a new top tier — called “premium.”

The main difference between smartphones with no signs of use and premium refurbished devices is that Back Market certifies that premium devices have been repaired with official parts exclusively.

In addition to this new premium tier, Back Market is working on an app update to turn it into a smartphone companion. You can register your smartphone with your Back Market account to receive tips to keep your device in good shape for longer. They are also working on gamification features, including badges and rewards.

Similarly, Back Market will make it easier to check the value of your current phone. “You open the Back Market app, you shake your phone and you’ll find out,” chief product officer, Amandine Durr, explained. This feature will launch around Black Friday.

Finally, Back Market is going to use generative AI to make it easier to browse the catalog. It can be hard to compare two smartphone models to understand which one is better for you. In a few months, you’ll be able to select two phones and get an AI-generated summary of how the two models compare.

Profitability in Europe this year

When thinking about growth potential, instead of focusing on the smartphone industry, Back Market said it draws inspiration from the car industry.

“Nine people out of 10 are purchasing a pre-owned car today,” said Hug de Larauze. “Everything has been created and lined up for that — the availability of spare parts for everyone, you’re not forced to repair your car where you purchased it.”

Similarly, repairability is changing for smartphones and spare parts, starting with the European Union. By June 2025, manufacturers will be forced to sell their spare parts to people and companies who want to fix devices themselves.

The shift to refurbished devices is also already well underway in Europe. “Back Market is going to be profitable for the first time in Europe in 2024,” said Hug de Larauze. “This is a big milestone for us because when we created the company and until very recently… we had that label that said: ‘OK, this is an impact company.’ Impact means good feelings, but the money is not there.

“Well it’s not the case, it’s actually making money,” he added. Now, let’s see if Back Market can become the go-to destination for refurbished devices in more countries, starting with the U.S.

Science & Environment

Drones setting a new standard in ocean rescue technology

Last month, two young paddleboarders found themselves stranded in the ocean, pushed 2,000 feet from the shore by strong winds and currents. Thanks to the deployment of a drone, rescuers kept an eye on them the whole time and safely brought them aboard a rescue boat within minutes.

In North Carolina, the Oak Island Fire Department is one of a few in the country using drone technology for ocean rescues. Firefighter-turned-drone pilot Sean Barry explained the drone’s capabilities as it was demonstrated on a windy day.

“This drone is capable of flying in all types of weather and environments,” Barry said.

The drone is equipped with a camera that can switch between modes, including infrared to spot people in distress, and a speaker through which responders can communicate instructions. It can also carry life-preserving equipment.

The flotation device is activated by a CO2 cartridge when it comes into contact with water. Once triggered, it inflates into a tube approximately 26 inches long, giving distressed swimmers something to hold on to.

In a real-life rescue, after a 911 call from shore, the drone spotted a swimmer in distress. It released two floating tubes, providing the swimmer with buoyancy until help arrived.

Like many coastal communities, Oak Island’s population can swell from about 10,000 to 50,000 during the summer tourist season. Riptides, which are hard to detect on the surface, can happen at any time.

Every year, about 100 people die due to rip currents on U.S. beaches, and more than 80% of beach rescues involve rip currents. If you’re caught in one, rescuers advise not panicking or trying to fight it; instead, float or swim parallel to the coastline to get out of the current.

Oak Island Fire Chief Lee Price noted that many people underestimate the force of rip currents.

“People are, ‘Oh, I’m a good swimmer. I’m gonna go out there,’ and then they get in trouble,” Price said.

For Price, the benefit of drones isn’t just faster response times but also keeping rescuers safe. Through the camera and speaker, they can determine if someone isn’t in distress.

Price said many people might not be aware of the technology.

“It’s like anything as technology advances, it takes a little bit for everybody to catch up and get used to it,” said Price.

In a demonstration, Barry showed how the drone can bring a safety rope to a swimmer while rescuers prepare to pull the swimmer to shore.

“The speed and accuracy that this gives you … rapid deployment, speed, accuracy, and safety overall,” Price said. “Not just safety for the victim, but safety for our responders.”

Technology

Netflix teases its animated Splinter Cell series

It’s been quite some time since we heard anything about Netflix’s animated adaptation of Splinter Cell — but the streamer has finally provided some details on the show. The reveal comes in the form of a very brief teaser trailer, which shows a little bit of the show, but mostly showcases Liev Schreiber’s gravelly take on lead character Sam Fisher. We also have a proper name now: it’s called Splinter Cell: Deathwatch.

-

Sport8 hours ago

Sport8 hours agoJoshua vs Dubois: Chris Eubank Jr says ‘AJ’ could beat Tyson Fury and any other heavyweight in the world

-

News1 day ago

News1 day agoYou’re a Hypocrite, And So Am I

-

News9 hours ago

News9 hours agoIsrael strikes Lebanese targets as Hizbollah chief warns of ‘red lines’ crossed

-

Sport8 hours ago

Sport8 hours agoUFC Edmonton fight card revealed, including Brandon Moreno vs. Amir Albazi headliner

-

CryptoCurrency7 hours ago

CryptoCurrency7 hours agoEthereum is a 'contrarian bet' into 2025, says Bitwise exec

-

Technology7 hours ago

Technology7 hours agoiPhone 15 Pro Max Camera Review: Depth and Reach

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours ago2 auditors miss $27M Penpie flaw, Pythia’s ‘claim rewards’ bug: Crypto-Sec

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoArthur Hayes’ ‘sub $50K’ Bitcoin call, Mt. Gox CEO’s new exchange, and more: Hodler’s Digest, Sept. 1 – 7

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoTreason in Taiwan paid in Tether, East’s crypto exchange resurgence: Asia Express

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoJourneys: Robby Yung on Animoca’s Web3 investments, TON and the Mocaverse

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoAre there ‘too many’ blockchains for gaming? Sui’s randomness feature: Web3 Gamer

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoCrypto whales like Humpy are gaming DAO votes — but there are solutions

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoHelp! My parents are addicted to Pi Network crypto tapper

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours ago$12.1M fraud suspect with ‘new face’ arrested, crypto scam boiler rooms busted: Asia Express

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours ago‘Everything feels like it’s going to shit’: Peter McCormack reveals new podcast

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoSEC sues ‘fake’ crypto exchanges in first action on pig butchering scams

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoFed rate cut may be politically motivated, will increase inflation: Arthur Hayes

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoDecentraland X account hacked, phishing scam targets MANA airdrop

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoBitcoin miners steamrolled after electricity thefts, exchange ‘closure’ scam: Asia Express

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoCardano founder to meet Argentina president Javier Milei

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoCertiK Ventures discloses $45M investment plan to boost Web3

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoMemecoins not the ‘right move’ for celebs, but DApps might be — Skale Labs CMO

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoDorsey’s ‘marketplace of algorithms’ could fix social media… so why hasn’t it?

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoTelegram bot Banana Gun’s users drained of over $1.9M

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoLow users, sex predators kill Korean metaverses, 3AC sues Terra: Asia Express

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoSEC asks court for four months to produce documents for Coinbase

-

Science & Environment11 hours ago

Science & Environment11 hours ago‘Running of the bulls’ festival crowds move like charged particles

-

MMA8 hours ago

MMA8 hours agoUFC’s Cory Sandhagen says Deiveson Figueiredo turned down fight offer

-

MMA8 hours ago

MMA8 hours agoDiego Lopes declines Movsar Evloev’s request to step in at UFC 307

-

Football7 hours ago

Football7 hours agoNiamh Charles: Chelsea defender has successful shoulder surgery

-

Football7 hours ago

Football7 hours agoSlot's midfield tweak key to Liverpool victory in Milan

-

Science & Environment11 hours ago

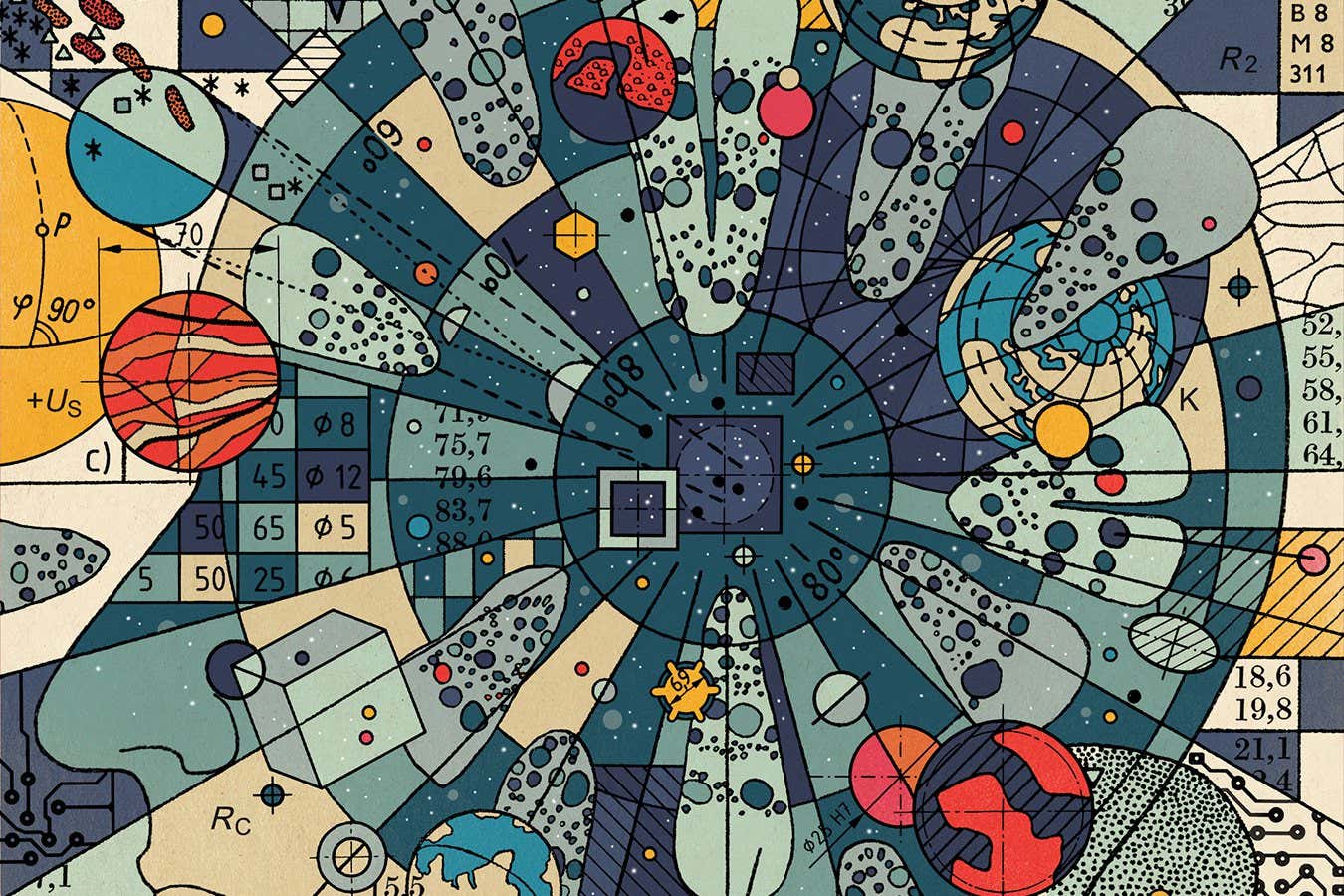

Science & Environment11 hours agoRethinking space and time could let us do away with dark matter

-

Science & Environment11 hours ago

Science & Environment11 hours agoHow one theory ties together everything we know about the universe

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoSEC settles with Rari Capital over DeFi pools, unregistered broker activity

-

News6 hours ago

News6 hours agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

Science & Environment22 hours ago

Science & Environment22 hours agoQuantum time travel: The experiment to ‘send a particle into the past’

-

Science & Environment8 hours ago

Science & Environment8 hours agoWe may have spotted a parallel universe going backwards in time

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoLeaked Chainalysis video suggests Monero transactions may be traceable

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoLouisiana takes first crypto payment over Bitcoin Lightning

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoFive crypto market predictions that haven’t come true — yet

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoSolana unveils new Seeker device, says it’s not just a ‘memecoin phone’

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoCrypto scammers orchestrate massive hack on X but barely made $8K

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoReal-world asset tokenization is the crypto killer app — Polygon exec

-

Science & Environment11 hours ago

Science & Environment11 hours agoFuture of fusion: How the UK’s JET reactor paved the way for ITER

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoBinance CEO says task force is working ‘across the clock’ to free exec in Nigeria

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoElon Musk is worth 100K followers: Yat Siu, X Hall of Flame

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoBitcoin price hits $62.6K as Fed 'crisis' move sparks US stocks warning

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoCZ and Binance face new lawsuit, RFK Jr suspends campaign, and more: Hodler’s Digest Aug. 18 – 24

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoBeat crypto airdrop bots, Illuvium’s new features coming, PGA Tour Rise: Web3 Gamer

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoBitcoin bull rally far from over, MetaMask partners with Mastercard, and more: Hodler’s Digest Aug 11 – 17

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoVonMises bought 60 CryptoPunks in a month before the price spiked: NFT Collector

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoVitalik tells Ethereum L2s ‘Stage 1 or GTFO’ — Who makes the cut?

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoEthereum falls to new 42-month low vs. Bitcoin — Bottom or more pain ahead?

-

CryptoCurrency8 hours ago

CryptoCurrency8 hours agoBlockdaemon mulls 2026 IPO: Report

-

Business7 hours ago

Thames Water seeks extension on debt terms to avoid renationalisation

-

Business7 hours ago

How Labour donor’s largesse tarnished government’s squeaky clean image

-

Business6 hours ago

UK hospitals with potentially dangerous concrete to be redeveloped

-

Business6 hours ago

Axel Springer top team close to making eight times their money in KKR deal

-

News6 hours ago

News6 hours ago“Beast Games” contestants sue MrBeast’s production company over “chronic mistreatment”

-

News6 hours ago

News6 hours agoSean “Diddy” Combs denied bail again in federal sex trafficking case

-

News6 hours ago

News6 hours agoSean “Diddy” Combs denied bail again in federal sex trafficking case in New York

-

News6 hours ago

News6 hours agoBrian Tyree Henry on his love for playing villains ahead of “Transformers One” release

-

News6 hours ago

News6 hours agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

Technology3 days ago

Technology3 days agoYouTube restricts teenager access to fitness videos

-

News10 hours ago

News10 hours agoChurch same-sex split affecting bishop appointments

-

Politics2 days ago

Politics2 days agoTrump says he will meet with Indian Prime Minister Narendra Modi next week

-

Science & Environment11 hours ago

Science & Environment11 hours agoPhysicists have worked out how to melt any material

-

Politics22 hours ago

Politics22 hours agoWhat is the House of Lords, how does it work and how is it changing?

-

Politics23 hours ago

Politics23 hours agoKeir Starmer facing flashpoints with the trade unions

-

Technology9 hours ago

Technology9 hours agoFivetran targets data security by adding Hybrid Deployment

-

Science & Environment1 day ago

Science & Environment1 day agoElon Musk’s SpaceX contracted to destroy retired space station

-

News8 hours ago

Freed Between the Lines: Banned Books Week

-

Sport8 hours ago

Sport8 hours agoUFC’s Dan Ige feels confident after Diego Lopes dominates Brian Ortega

-

Football7 hours ago

Football7 hours agoFootball Daily

-

Science & Environment11 hours ago

Science & Environment11 hours agoHow to wrap your head around the most mind-bending theories of reality

-

Fashion Models7 hours ago

Fashion Models7 hours agoMiranda Kerr nude

-

Fashion Models7 hours ago

Fashion Models7 hours ago“Playmate of the Year” magazine covers of Playboy from 1971–1980

-

Health & fitness2 days ago

Health & fitness2 days ago11 reasons why you should stop your fizzy drink habit in 2022

-

Politics6 hours ago

Politics6 hours agoLabour MP urges UK government to nationalise Grangemouth refinery

-

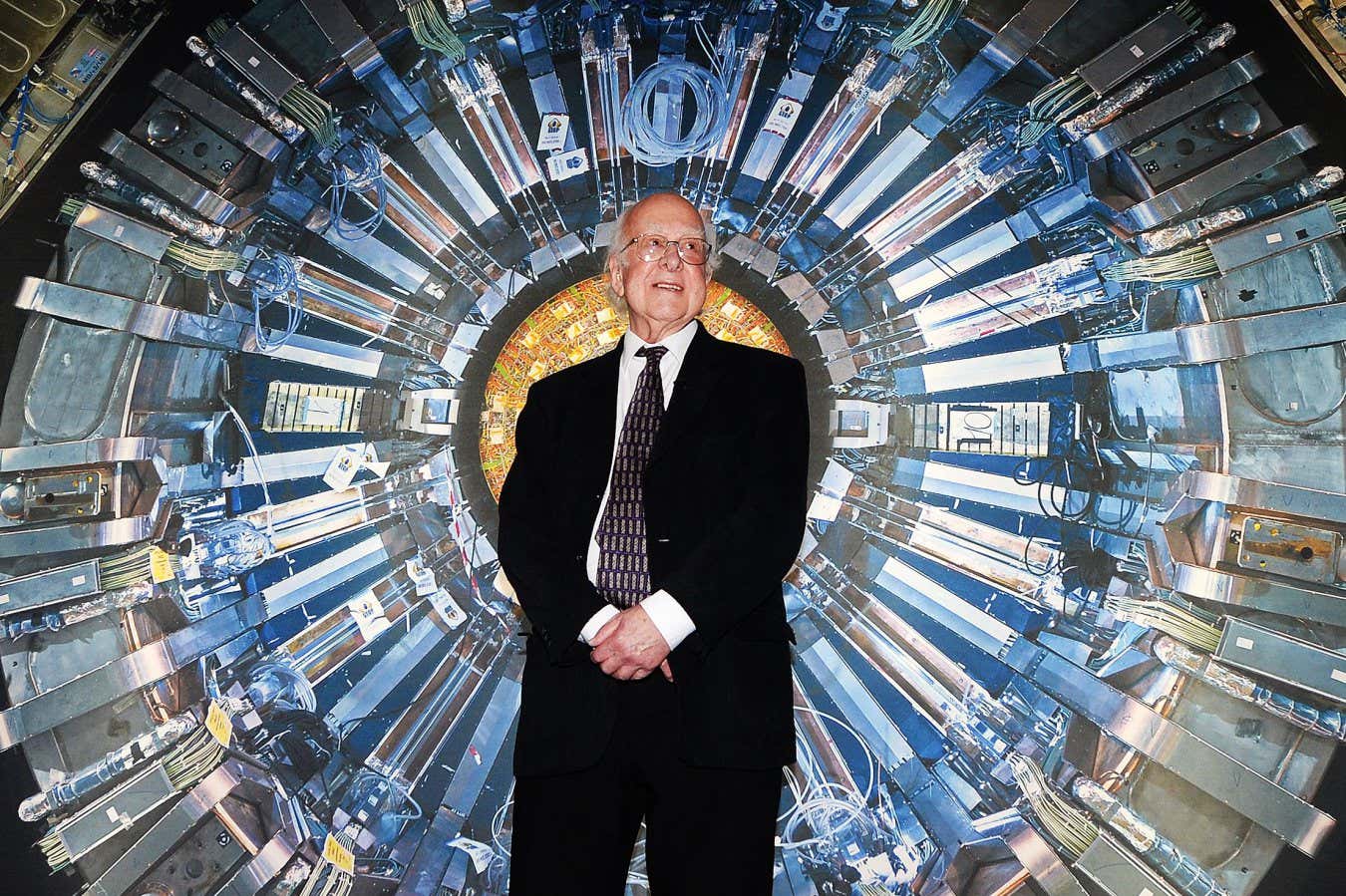

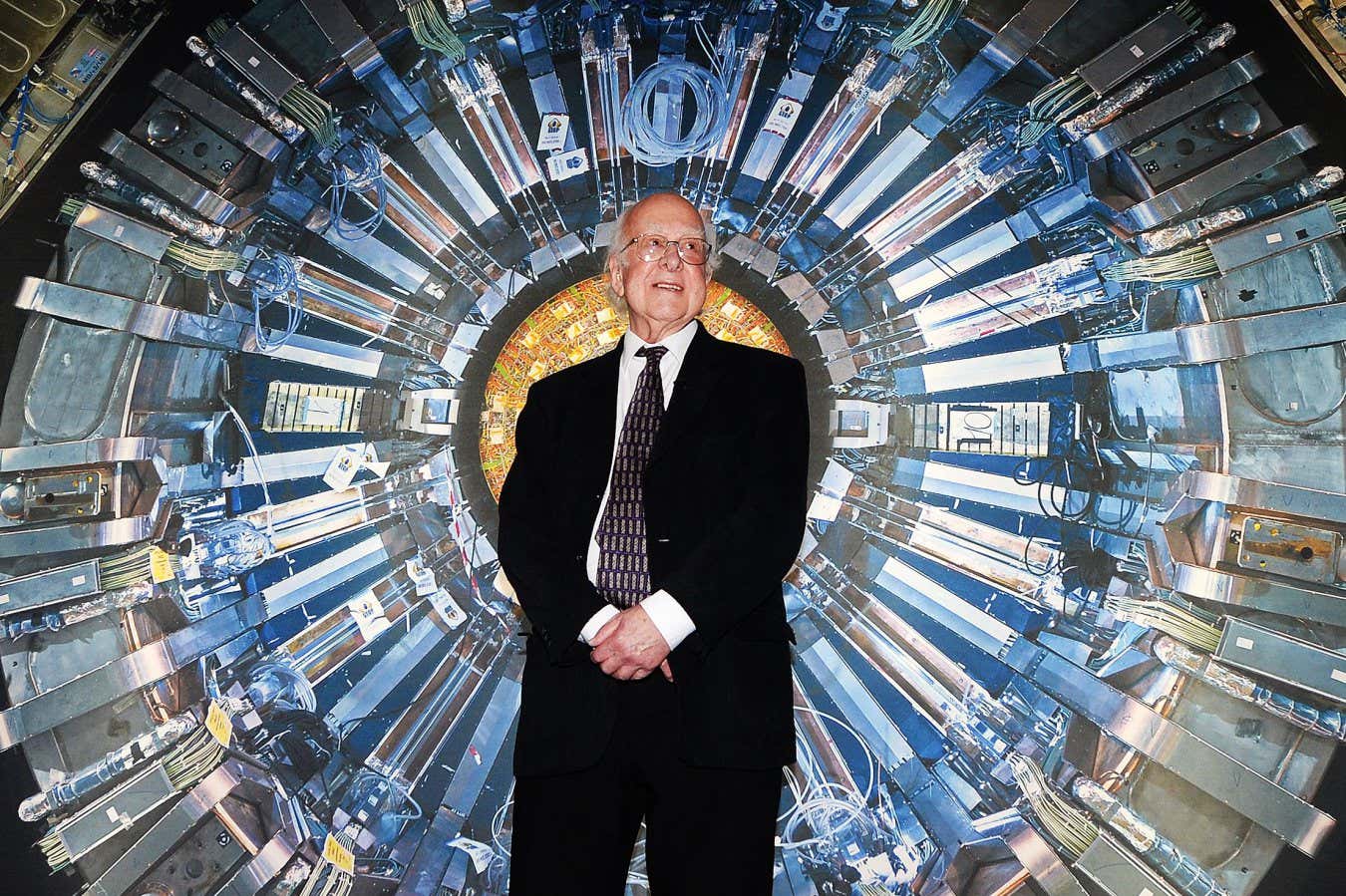

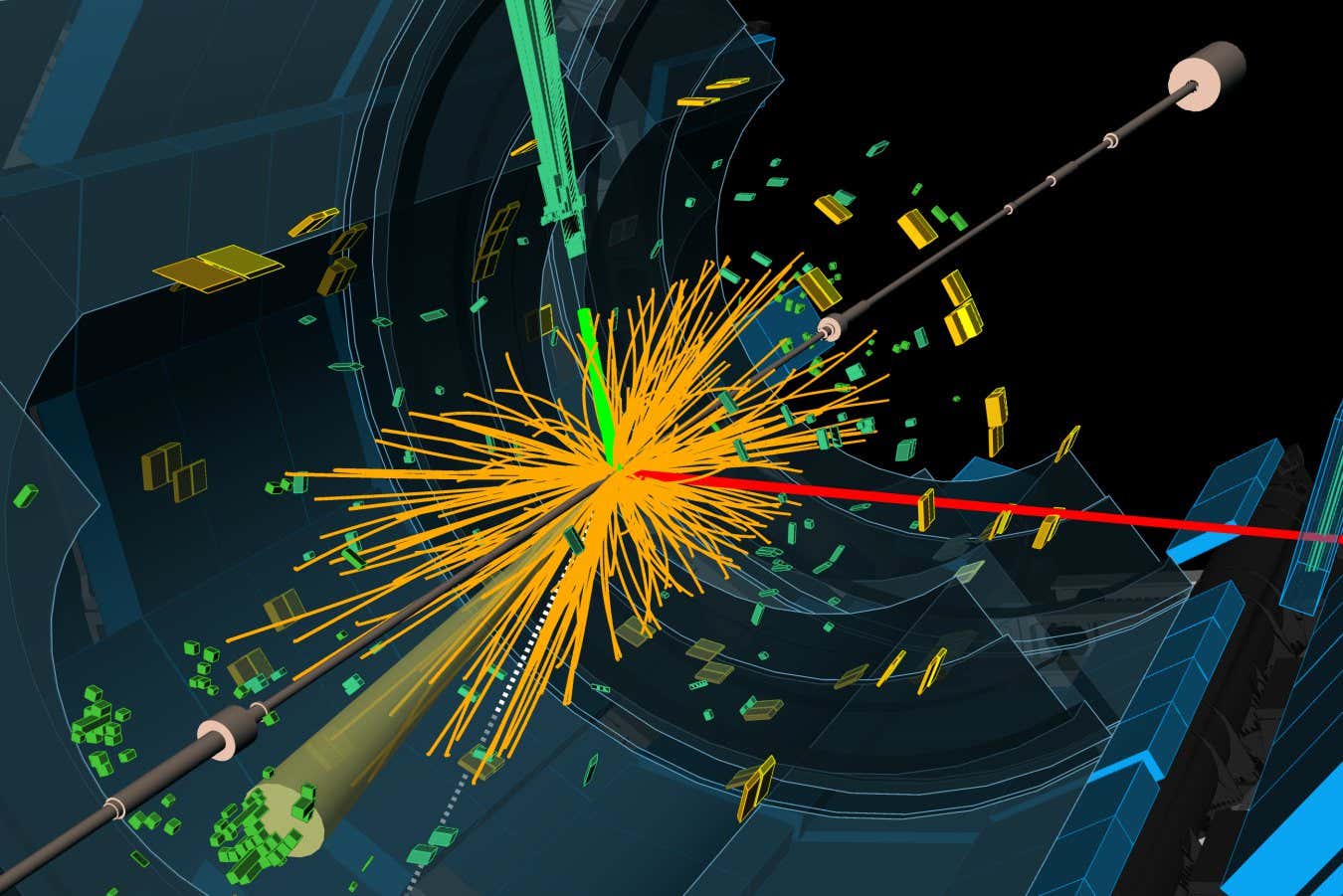

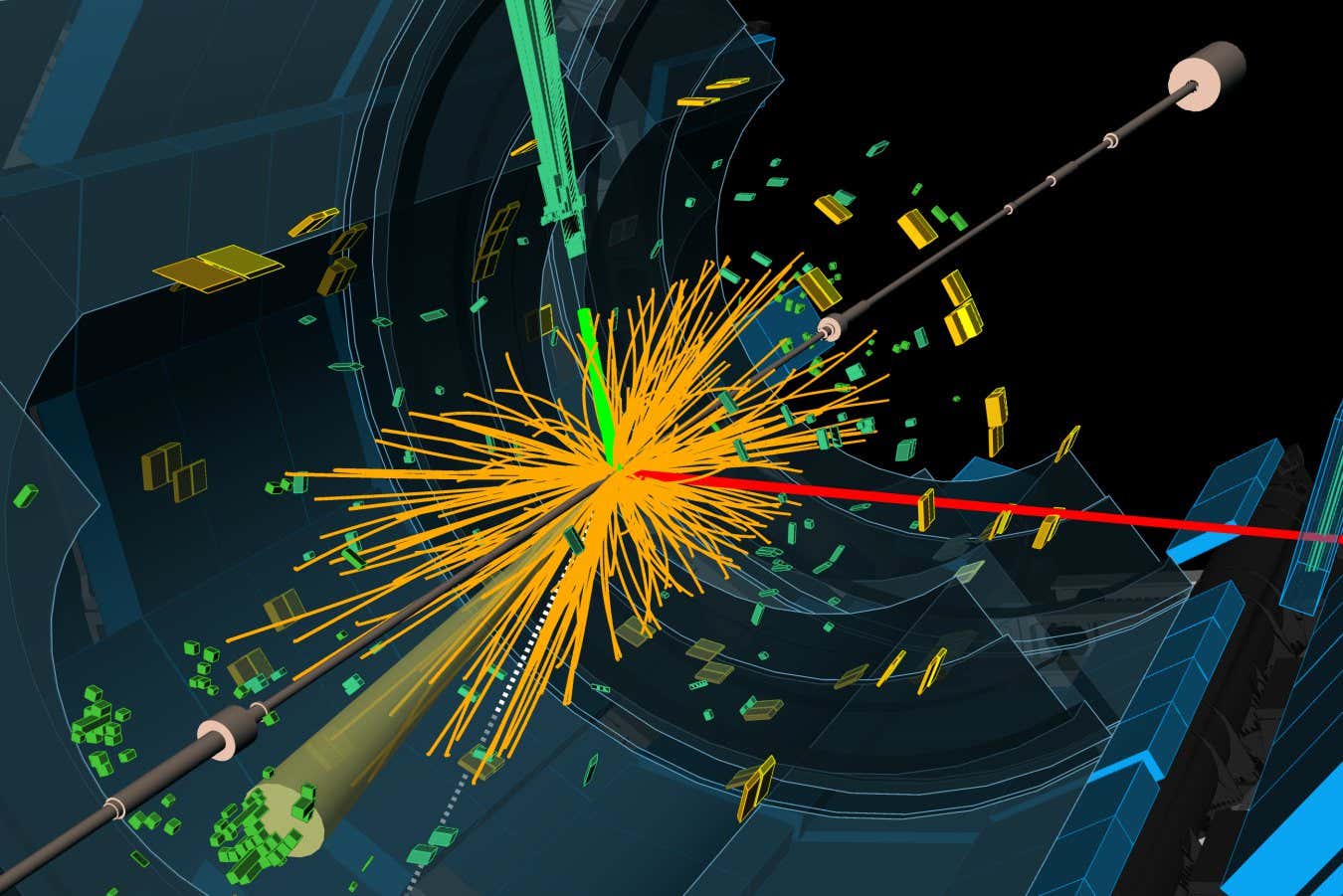

Science & Environment14 hours ago

Science & Environment14 hours agoHow Peter Higgs revealed the forces that hold the universe together

-

News4 days ago

News4 days agoIndia Now Moves from Deliberations to Deliverables on Crimes Against Women

-

Technology2 days ago

Technology2 days agoWhat will future aerial dogfights look like?

-

Science & Environment9 hours ago

Science & Environment9 hours agoOdd quantum property may let us chill things closer to absolute zero

-

Science & Environment16 hours ago

Science & Environment16 hours agoQuantum forces used to automatically assemble tiny device

-

Entertainment6 hours ago

Entertainment6 hours ago“Jimmy Carter 100” concert celebrates former president’s 100th birthday

-

Science & Environment19 hours ago

Science & Environment19 hours agoSunlight-trapping device can generate temperatures over 1000°C

-

News6 hours ago

News6 hours agoJoe Posnanski revisits iconic football moments in new book, “Why We Love Football”

-

Health & fitness2 days ago

Health & fitness2 days agoHow to adopt mindful drinking in 2022

-

Health & fitness2 days ago

Health & fitness2 days agoWhat 10 days of a clean eating plan actually does to your body and why to adopt this diet in 2022

-

Health & fitness2 days ago

Health & fitness2 days agoWhen Britons need GoFundMe to pay for surgery, it’s clear the NHS backlog is a political time bomb

-

Health & fitness2 days ago

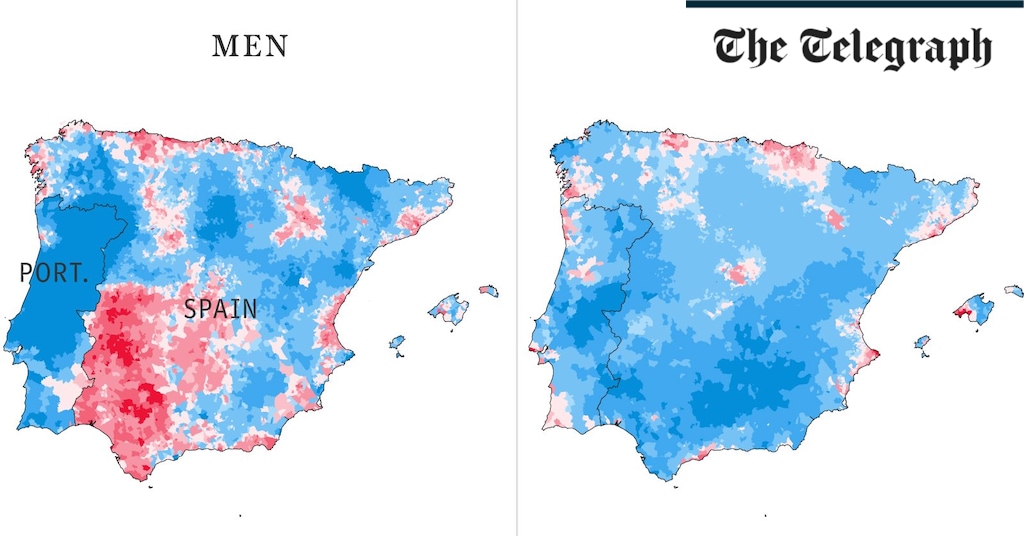

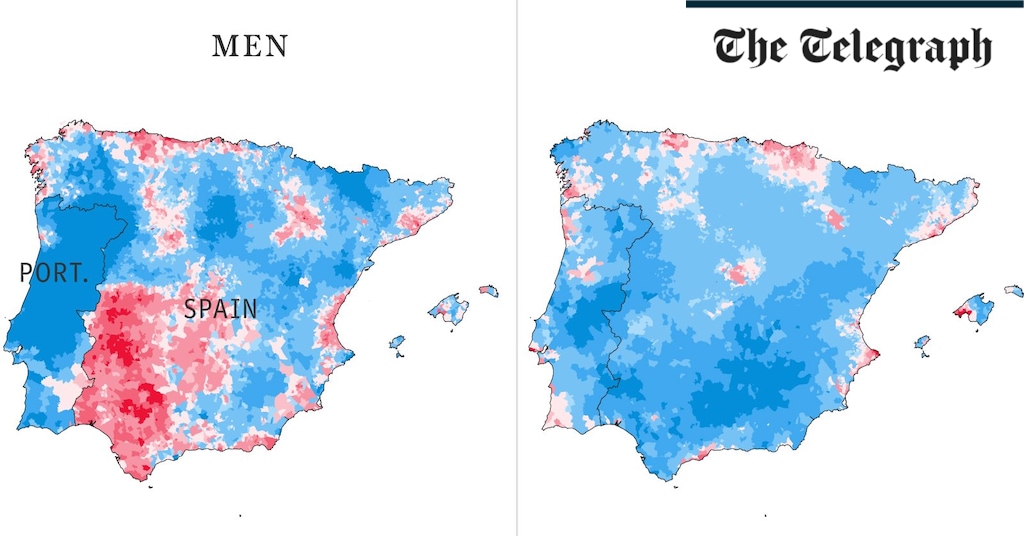

Health & fitness2 days agoThe maps that could hold the secret to curing cancer

-

Health & fitness2 days ago

Covid v flu v cold and how to tell the difference between symptoms this winter

-

Science & Environment21 hours ago

Science & Environment21 hours agoQuantum to cosmos: Why scale is vital to our understanding of reality

-

Technology3 days ago

Technology3 days agoTrump says Musk could head ‘government efficiency’ force

-

Technology1 day ago

Technology1 day agoTech Life: Athletes using technology to improve performance

-

Science & Environment1 day ago

Science & Environment1 day agoParticle physicists may have solved a strange mystery about the muon

-

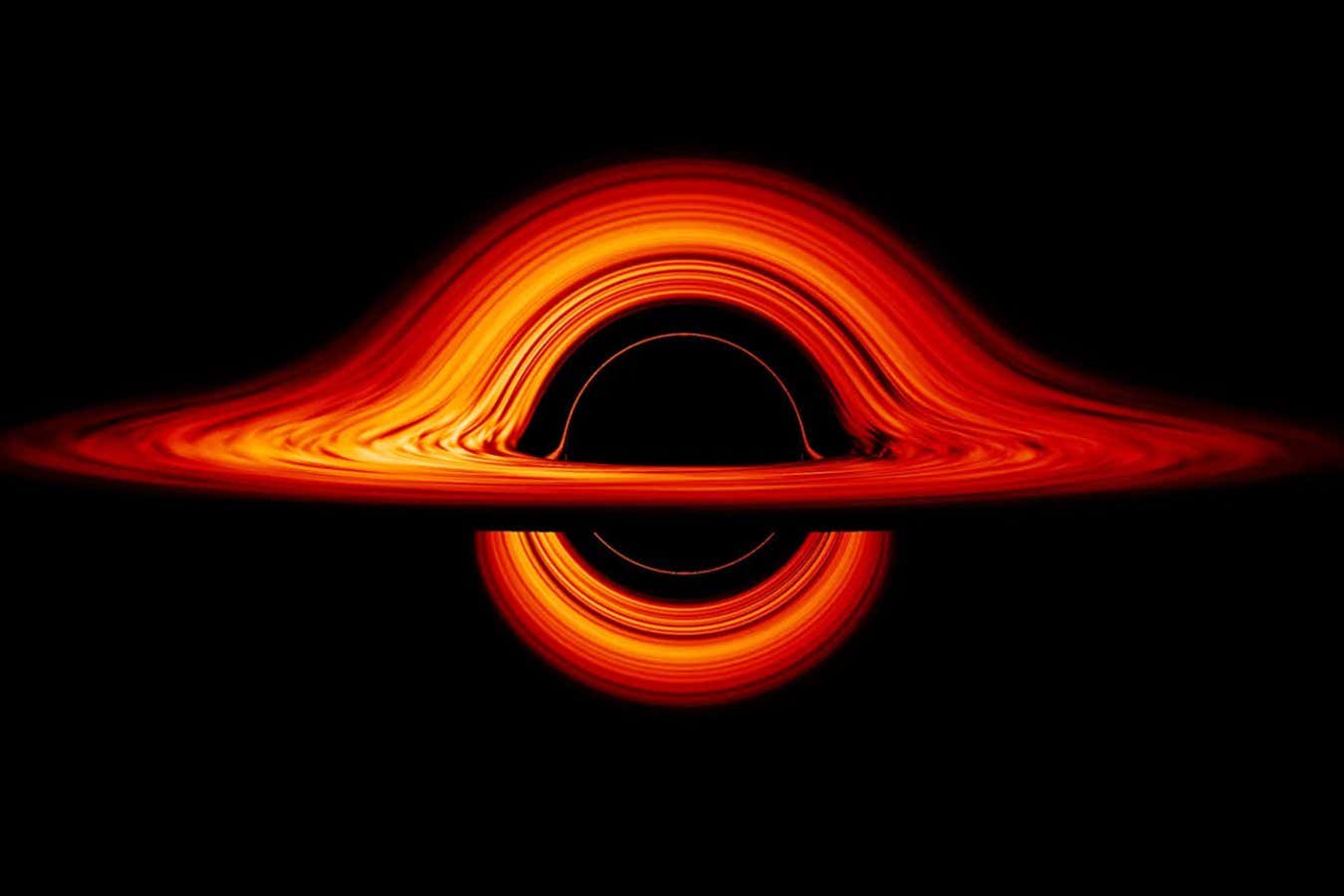

Science & Environment22 hours ago

Science & Environment22 hours agoHow the weird and powerful pull of black holes made me a physicist

-

Politics20 hours ago

Politics20 hours agoIs there a £22bn ‘black hole’ in the UK’s public finances?

-

Science & Environment20 hours ago

Science & Environment20 hours agoX-ray laser fires most powerful pulse ever recorded

-

Science & Environment20 hours ago

Science & Environment20 hours agoHow indefinite causality could lead us to a theory of quantum gravity

You must be logged in to post a comment Login