Just over a year ago, Epic Games laid off around 16 percent of its employees. The problem, Epic said, was its own big ideas for the future and just how expensive they were to build. “For a while now, we’ve been spending way more money than we earn,” Epic CEO Tim Sweeney wrote in an email to staff.

Technology

CleanPlay aims to reduce carbon footprint of gaming | The DeanBeat

Rich Hilleman and David Helgason have helped shape the video game industry. Now they want to offset its environmental impact with CleanPlay.

More than just a startup, CleanPlay is on a mission to rapidly and dramatically reduce the carbon footprint of the video game industry. Hilleman is the former chief creative officer at Electronic Arts, while Helgason is the former CEO and cofounder of Unity, the game engine company.

They said they have seen gaming evolve over 30 years from a geeky hobby into one of the largest communities on the planet. Today the community of gaming is vast, diverse, and a wonderful space where the connections between creators and players extend far beyond entertainment.

In parallel, climate change has emerged as the defining challenge of our era, impacting every person on earth – gamers and non-gamers alike, they said.


“We embarked on a journey to understand the intersection of climate and gaming, delving into the CO2 emissions of the videogames industry. Although gaming is relatively low in carbon intensity compared to revenue, its total emissions are significant and growing,” Hilleman and Helgason said.

Current estimates suggest that the gaming industry is nearing 100 million metric tons of CO2 emissions annually, roughly equivalent to the emissions from 20 million passenger cars or deforestation of 80,000 square miles (nearly Great Britain’s size). Notably, 80% of console emissions come from players’ electricity consumption, with gaming making up 1% of U.S. household energy consumption.
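As a quick sanity check on the comparison above, the two figures quoted in the article imply a per-car emissions number. The sketch below only divides one quoted figure by the other; the function and constant names are illustrative, not part of any CleanPlay tooling.

```python
# Figures quoted in the article (metric tons of CO2 per year, number of cars).
INDUSTRY_TONNES_CO2 = 100_000_000
EQUIVALENT_CARS = 20_000_000

def implied_tonnes_per_car(total_tonnes, cars):
    """Annual emissions per car implied by the article's equivalence."""
    return total_tonnes / cars

per_car = implied_tonnes_per_car(INDUSTRY_TONNES_CO2, EQUIVALENT_CARS)
print(per_car)  # 5.0
```

In other words, the comparison assumes roughly 5 metric tons of CO2 per passenger car per year.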

How CleanPlay works

That’s where CleanPlay comes in. CleanPlay empowers players to take simple and immediate climate action while enjoying their favorite games – and to get rewarded in the process.

Partnering with game developers, publishers, and platform holders, CleanPlay is inviting the majority of gamers who already feel a personal responsibility to combat climate change to join the cause. Through the CleanPlay app – launching first on PlayStation 5 – CleanPlay aims to ensure that every watt of energy used while gaming is matched with clean energy.

The company is using verified tools today and it is also engaging the community to pursue even more impactful solutions for reducing CO2 emissions and driving the clean energy transition in gaming.

As the gaming industry continues to expand, the energy demand will be far greater than most anticipate in the coming years. That’s why ensuring the quality and integrity of the clean energy solution is CleanPlay’s top priority.

The company is working closely with sustainability experts to carefully select clean energy projects that not only meet this growing demand but also make a real impact on driving clean energy adoption.

Transparency and rigorous tracking are embedded in every step the company takes, ensuring that CleanPlay’s contributions to clean energy are both meaningful and measurable, the company said.

Moreover, CleanPlay offers a way to transform sustainability efforts into a revenue-generating opportunity for gaming companies. The platform empowers entire teams – from game designers, developers, and publishers to financial and sustainability leaders – to seamlessly integrate clean energy into their operations, creating an economically rewarding model that offers new monetization opportunities, enhanced player engagement, and increased loyalty.

By incorporating in-game features and reward systems tied to clean energy actions, CleanPlay said it is delivering value that resonates with eco-conscious gamers and helping industry partners realign their strategies, ultimately turning environmental action into a powerful driver of profitability and long-term success.

The gaming community, with its proactive, passionate, and agile members, is ideal to lead the charge in community-driven demand for CO2 reductions, and will create a roadmap for other industries to rapidly improve, CleanPlay said.

Activities

Gamers can engage in up to 1,000 hours of eco-conscious play annually, matched by clean energy. They can also access hand-picked gaming premiums that enhance the playing experience with exceptional content, and connect with like-minded players at exclusive community events designed to unite and inspire.

Gamers can also join influencer sessions led by prominent gaming influencers, offering unique gameplay experiences and insider tips. And they can get exclusive offers on games and content, benefiting from special offers tailored to support sustainable gaming practices.

Right now, only about 20% of electricity generated in the U.S. comes from renewable sources. That’s not enough, CleanPlay said.

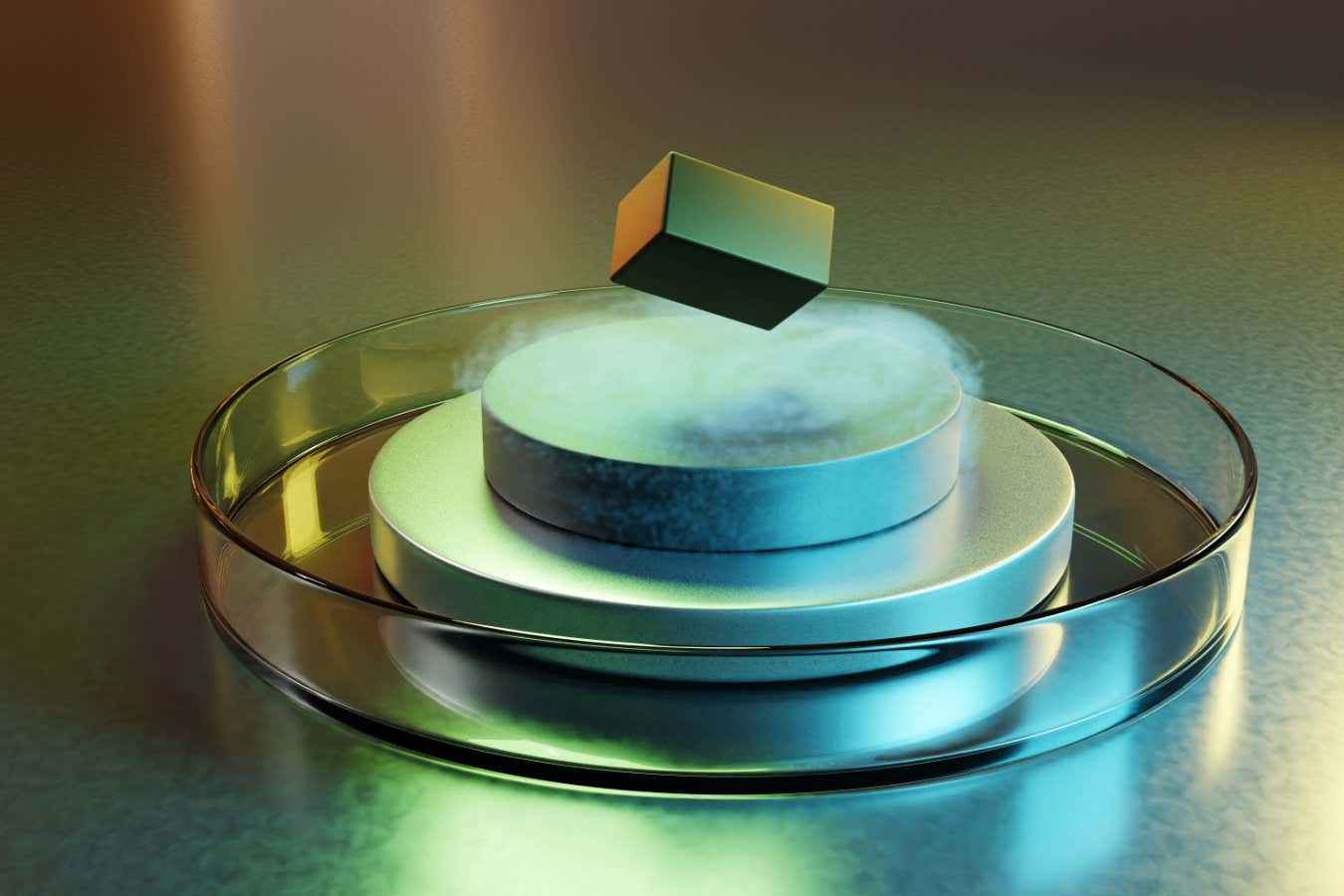

“We are focused on increasing the proportion of clean energy on the grid, and we’re looking to drive real impact through the gaming community,” CleanPlay said. “At launch, we’re starting by purchasing high-integrity Renewable Energy Certificates (RECs) while exploring options like Virtual Power Purchase Agreements (VPPAs), community solar subscriptions, and direct investment into new projects and technologies (we like thinking about tidal energy, hydrogen fuel cells, and nuclear fusion, don’t you?). We also support efforts like 24/7 accounting and granular certificates.”

Origins

Helgason is the former CEO and cofounder of Unity, the game engine company. He started it in 2004 and focused on making a game engine for mobile games, expanding it later on to compete head-on with Epic Games’ Unreal engine. He stepped aside as CEO in 2014.

The firm went public in the go-go days of 2020 at a valuation of $13.7 billion, and Helgason became wealthy. He started a venture fund in 2021.

“As you know, I used to be quite active in the games industry, but then years ago, I decided to spend most of my energy on cleantech,” said Helgason, in an interview with GamesBeat. “I’ve been investing into technology solutions for many years, both software and hardware, into synthetic biology and batteries and all this stuff. That’s really fun.”

But he also saw playing games as part of the problem, as game consoles, PCs, and mobile devices all use energy.

“It occurred to me that we could harness that somehow, to be part of a solution,” Helgason said.

He connected with Hilleman, who had spent decades at Electronic Arts building franchises like Madden NFL Football and EA Sports. Hilleman joined EA in 1982 as employee No. 39. Hilleman eventually became chief creative officer of EA, and he retired in 2016. He took an interest in clean energy and started designing electric cars for racing such as the Blackbird.

“When David gave me the challenge, the part that got me interested was not solving the carbon footprint for the business. That’s a relevant thing, but it was providing leverage to show other businesses how to do it, how to involve their customers in the process, how to use the kind of techniques that we’re going to use to reward them for the things that they do right, have it to inform them when they’re not,” Hilleman said. “The real opportunity here, one of the most powerful things that we have found, is that the clean power industry has an excess of capacity that they’re having a hard time delivering to the extent that they’re paying three and four figure fees to get access to customers with any hope of closing them.”

Helgason caught up with his mentor Hilleman during the spring of 2021. Hilleman was busy designing four different EV race cars. But Helgason waited until the off season and they brainstormed again.

“Rich was a systemic thinker,” Helgason said. “The publishers were starting to talk about having goals” for the environment. One paper written around the time was about how to optimize code for power utilization on an Xbox game console. Microsoft did a similar paper about running the game Halo.

“Once I had those numbers, I could start to really figure out what the real problem was,” Helgason said, at least as far as the consoles go. Given the consumption numbers, Helgason realized that the efforts of the publishers and players weren’t really addressing the real environmental problems. He called a friend at Sony and started thinking about it more.

A big idea

He thought that the platform company should sell carbon mitigation as part of the company’s primary subscription for online play for the game console.

“That’s where the notion came — which is, hey, why don’t we be in that business? And why don’t we be in that business in a way that’s more imaginative than a console player can be, and that we can extend it with other platforms,” Helgason said.

“Our goal is to meet the need, put it on whatever platform, in an appropriate way. We’re starting on the console first because we have three really variable characters,” Hilleman said.

He noted that PCs may run on as low as 35 watts (or up to 400 for a gamer PC) while consoles were running on 200 watts. The team of a few people created an application with the option to purchase carbon-free energy for your game console.

“We purchase carbon free energy in some fashion and add it to the grid. The effect of that is to try to erase the carbon footprint of that particular individual once they’ve made that choice, then we try to share a couple of key pieces of information with them,” Hilleman said. “We have a reasonable model. This is the second positive characteristic of consoles — they’re very homogeneous, meaning they’re all the same, all about the same power profile, which makes all of that stuff easy to model and predict, because we currently do not have on platform metering.”

So CleanPlay can inform a player of their power consumption and how much of it is being offset. Players have options like buying a renewable energy certificate that supports clean energy power plants.
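The modeling Hilleman describes, estimating consumption from a known power profile rather than from on-platform metering, can be sketched as simple arithmetic. The example below is an illustration only: it assumes a constant 200-watt draw (the console figure cited above) and uses the 1,000-hour annual cap mentioned earlier. The function names and the 150 kWh purchase are hypothetical, and CleanPlay has not published its actual model.

```python
CONSOLE_WATTS = 200      # console draw cited in the article
ANNUAL_HOURS_CAP = 1000  # matched play hours per year cited in the article

def energy_kwh(power_watts, hours):
    """Energy in kilowatt-hours for a device drawing constant power."""
    return power_watts * hours / 1000.0

def matched_share(used_kwh, clean_kwh):
    """Fraction of consumption covered by purchased clean energy, capped at 1."""
    if used_kwh <= 0:
        return 0.0
    return min(clean_kwh / used_kwh, 1.0)

annual_kwh = energy_kwh(CONSOLE_WATTS, ANNUAL_HOURS_CAP)
share = matched_share(annual_kwh, clean_kwh=150.0)  # 150 kWh is a made-up purchase
print(annual_kwh, share)  # 200.0 0.75
```

Under these assumptions, a player who hits the full 1,000 hours would need about 200 kWh of clean energy matched per year. Real consumption varies with the game, the console model, and idle time.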

A subscription service

The subscription price isn’t set yet, but Hilleman believes it will be under $20. As for cloud games, much of the computing and electricity is used in internet-connected data centers. Big cloud providers like Amazon, Google and Microsoft are offering aggressive plans to rein in their power requirements.

Hilleman said he wishes every party involved in power usage, from AI companies on down, would do a better job of understanding and disclosing their power utilization. Since that isn’t happening, customers have no idea how much power they’re using. If they did, they might make different choices, Hilleman said.

“That’s the core of what we’re about,” Hilleman said. “We see all of the platforms (for power usage) as an opportunity. The key thing that I think distinguishes us from the other people in the space is that we think customers want to be a part of it, and we think that if we give them that choice, that they’re not only going to take it, but they’re going to make us smarter by it.”

Hilleman hopes he can get 10% of video game players to join the cause, and that could actually mitigate the entire carbon footprint of the game business, he said. Gamers can be part of the solution, but so far they have never been asked to help, he said. Hilleman said he appreciates the efforts on every side where game companies and gamers are trying to go green, but he worries the effort is too small.

“Nobody should be pointing fingers at anyone else,” Hilleman said.

Hilleman thinks that measurement of energy consumption, and showing how it’s changing, will be important in convincing gamers to stay the course, as it will cost them money. But Hilleman also believes it’s important to incentivize people by giving them gifts. Those can come from publishers.

The application for the consoles is done. It’s possible for the company to ship this year. As for Unity, Helgason hopes game engines can be part of the solution in the future by making it easier to do metering of electricity usage while gaming.


Servers computers

15U Wall-Mount Server Rack – RK15WALLO | StarTech.com

This open-frame wall-mount server rack provides 15U of storage, allowing you to save space and stay organized. The equipment rack can hold up to 198 lb. (90 kg).

Easy, hassle-free installation

The network rack is 12 in. (30.4 cm) deep, so it’s perfect for smaller spaces. It’s easy to assemble and features mounting holes that are spaced 16 in. apart, making it suitable for mounting on almost any wall, based on common wall-frame stud spacing. The wall-mount rack ships flat-packed, making it easy to transport and reducing shipping costs.

Free up space

You can mount the open-frame rack where space is limited, such as on a server room wall, office, or above a doorway, to expand your workspace and ensure your equipment is easy to access.

Keep your equipment cool and accessible

The open frame gives your equipment passive cooling. It also makes the equipment easy to access and reconfigure, allowing you to maximize productivity.

The RK15WALLO is backed by a StarTech.com 5-year warranty and free lifetime technical support.

To learn more visit StarTech.com


Technology

Gmail’s Gemini-powered Q&A feature comes to iOS

A few months ago, Google introduced a new way to search Gmail with the help of its Gemini AI. The feature, called Gmail Q&A, lets you find specific emails and information by asking the Gemini chatbot questions. You can ask things like “What time is our dinner reservation on Saturday?” to quickly find the information you need. It was initially available only on Android devices, but now Google has started rolling it out to iPhones.

In addition to being able to ask questions, you can also use the feature to find unread emails from a specific sender simply by telling Gemini to “Find unread emails by [the person’s name].” You can ask the chatbot to summarize a topic you know you can find in your inbox, such as work projects that you’ve been on for months consisting of multiple conversations across several threads. And you can even use Gemini in Gmail to do general search queries without having to leave your inbox. To access Gemini, simply tap on the star icon at the top right corner of your Gmail app.

Google says the feature could take up to 15 days to reach your devices. Take note, however, that you do need to have access to Gemini Business, Enterprise, Education, Education Premium or Google One AI Premium to be able to use it.

Technology

Meta enters AI video wars with powerful Movie Gen model


Meta founder and CEO Mark Zuckerberg, who built the company atop its hit social network Facebook, finished this week strong, posting a video of himself doing a leg press exercise on a machine at the gym on his personal Instagram (a social network Facebook acquired in 2012).

Except, in the video, the leg press machine transforms into a neon cyberpunk version, an Ancient Roman version, and a gold flaming version as well.

As it turned out, Zuck was doing more than just exercising: he was using the video to announce Movie Gen, Meta’s new family of generative multimodal AI models that can make both video and audio from text prompts, and allow users to customize their own videos, adding special effects, props, costumes and changing select elements simply through text guidance, as Zuck did in his video.

The models appear to be extremely powerful, allowing users to change only selected elements of a video clip rather than “re-roll” or regenerate the entire thing, similar to Pika’s spot editing on older models, yet with longer clip generation and sound built in.

Meta’s tests, outlined in a technical paper on the model family released today, show that it outperforms the leading rivals in the space including Runway Gen 3, Luma Dream Machine, OpenAI Sora and Kling 1.5 on many audience ratings of different attributes such as consistency and “naturalness” of motion.

Meta has positioned Movie Gen as a tool for both everyday users looking to enhance their digital storytelling as well as professional video creators and editors, even Hollywood filmmakers.

Movie Gen represents Meta’s latest step forward in generative AI technology, combining video and audio capabilities within a single system.

Specifically, Movie Gen consists of four models:

1. Movie Gen Video – a 30B parameter text-to-video generation model

2. Movie Gen Audio – a 13B parameter video-to-audio generation model

3. Personalized Movie Gen Video – a version of Movie Gen Video post-trained to generate personalized videos based on a person’s face

4. Movie Gen Edit – a model with a novel post-training procedure for precise video editing

These models enable the creation of realistic, personalized HD videos of up to 16 seconds at 16 FPS, along with 48kHz audio, and provide video editing capabilities.
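To put those output specifications in concrete terms, the sketch below works out how many video frames and audio samples a maximum-length clip contains. It uses only the figures quoted above (16 seconds, 16 FPS, 48kHz); the function names are illustrative, and applying the audio rate to a 16-second clip is an assumption made for the example.

```python
def frame_count(seconds, fps):
    """Number of video frames in a clip of the given length."""
    return seconds * fps

def audio_sample_count(seconds, sample_rate_hz):
    """Number of audio samples per channel for a clip of the given length."""
    return seconds * sample_rate_hz

frames = frame_count(16, 16)               # 256 frames
samples = audio_sample_count(16, 48_000)   # 768000 samples per channel
print(frames, samples)
```

So a full-length clip is 256 generated frames, paired with 768,000 audio samples per channel.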

Designed to handle tasks ranging from personalized video creation to sophisticated video editing and high-quality audio generation, Movie Gen leverages powerful AI models to enhance users’ creative options.

Key features of the Movie Gen suite include:

• Video Generation: With Movie Gen, users can produce high-definition (HD) videos by simply entering text prompts. These videos can be rendered at 1080p resolution, up to 16 seconds long, and are supported by a 30 billion-parameter transformer model. The AI’s ability to manage detailed prompts allows it to handle various aspects of video creation, including camera motion, object interactions, and environmental physics.

• Personalized Videos: Movie Gen offers an exciting personalized video feature, where users can upload an image of themselves or others to be featured within AI-generated videos. The model can adapt to various prompts while maintaining the identity of the individual, making it useful for customized content creation.

• Precise Video Editing: The Movie Gen suite also includes advanced video editing capabilities that allow users to modify specific elements within a video. This model can alter localized aspects, like objects or colors, as well as global changes, such as background swaps, all based on simple text instructions.

• Audio Generation: In addition to video capabilities, Movie Gen also incorporates a 13 billion-parameter audio generation model. This feature enables the generation of sound effects, ambient music, and synchronized audio that aligns seamlessly with visual content. Users can create Foley sounds (sound effects that reproduce and heighten everyday noises such as fabric ruffling and footsteps echoing), instrumental music, and other audio elements up to 45 seconds long.

Trained on billions of videos online

Movie Gen is the latest advancement in Meta’s ongoing AI research efforts. To train the models, Meta says it relied upon “internet scale image, video, and audio data,” specifically, 100 million videos and 1 billion images from which it “learns about the visual world by ‘watching’ videos,” according to the technical paper.

However, Meta did not specify in the paper whether the data was licensed, in the public domain, or simply scraped, as many other AI model makers have done. That practice has drawn criticism from artists and video creators such as YouTuber Marques Brownlee (MKBHD) and, in the case of AI video model provider Runway, a class-action copyright infringement suit by creators (still moving through the courts). As such, one can expect Meta to face immediate criticism for its data sources.

The legal and ethical questions about the training aside, Meta is clearly positioning the Movie Gen creation process as novel, using a combination of typical diffusion model training (used commonly in video and audio AI) alongside large language model (LLM) training and a new technique called “Flow Matching,” the latter of which relies on modeling changes in a dataset’s distribution over time.

At each step, the model learns to predict the velocity at which samples should “move” toward the target distribution. Flow Matching differs from standard diffusion-based models in key ways:

• Zero Terminal Signal-to-Noise Ratio (SNR): Unlike conventional diffusion models, which require specific noise schedules to maintain a zero terminal SNR, Flow Matching inherently ensures zero terminal SNR without additional adjustments. This provides robustness against the choice of noise schedules, contributing to more consistent and higher-quality video outputs.

• Efficiency in Training and Inference: Flow Matching is found to be more efficient both in terms of training and inference compared to diffusion models. It offers flexibility in terms of the type of noise schedules used and shows improved performance across a range of model sizes. This approach has also demonstrated better alignment with human evaluation results.
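The velocity-prediction idea can be illustrated with a toy example. The sketch below assumes the simplest straight-line path between a noise sample and a data sample, which is a common textbook formulation and not necessarily the exact one in Meta's paper. All function names are hypothetical, and the "model" is a stand-in that already knows the correct velocity, so nothing is actually trained.

```python
import random

def interpolate(noise, data, t):
    """Point on the straight path from noise (t=0) to data (t=1)."""
    return [(1.0 - t) * n + t * d for n, d in zip(noise, data)]

def target_velocity(noise, data):
    """Velocity of the straight path; it does not depend on t."""
    return [d - n for n, d in zip(noise, data)]

def flow_matching_loss(predict_velocity, noise, data, t):
    """Mean squared error between predicted and target velocity at time t."""
    x_t = interpolate(noise, data, t)
    predicted = predict_velocity(x_t, t)
    target = target_velocity(noise, data)
    return sum((p - q) ** 2 for p, q in zip(predicted, target)) / len(data)

def euler_sample(predict_velocity, noise, steps=8):
    """Generate a sample by integrating dx/dt = v(x, t) from t=0 to t=1."""
    x = list(noise)
    dt = 1.0 / steps
    for i in range(steps):
        v = predict_velocity(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

# Toy usage with a stand-in "model" that already knows the right velocity.
rng = random.Random(0)
data = [2.0, -1.0, 0.5]
noise = [rng.gauss(0.0, 1.0) for _ in data]
oracle = lambda x, t: target_velocity(noise, data)

loss = flow_matching_loss(oracle, noise, data, t=rng.random())
sample = euler_sample(oracle, noise)
```

In this formulation the point at t=0 is pure noise with no data signal mixed in, which is the zero terminal SNR property described above. A real system would replace `oracle` with a neural network trained to minimize this loss over many noise and data pairs.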

The Movie Gen system’s training process focuses on maximizing flexibility and quality for both video and audio generation. It relies on two main models, each with extensive training and fine-tuning procedures:

• Movie Gen Video Model: This model has 30 billion parameters and starts with basic text-to-image generation. It then progresses to text-to-video, producing videos up to 16 seconds long in HD quality. The training process involves a large dataset of videos and images, allowing the model to understand complex visual concepts like motion, interactions, and camera dynamics. To enhance the model’s capabilities, Meta fine-tuned it on a curated set of high-quality videos with text captions, which improved the realism and precision of its outputs. The team further expanded the model’s flexibility by training it to handle personalized content and editing commands.

• Movie Gen Audio Model: With 13 billion parameters, this model generates high-quality audio that syncs with visual elements in the video. The training set included over a million hours of audio, which allowed the model to pick up on both physical and psychological connections between sound and visuals. Meta enhanced this model through supervised fine-tuning, using selected high-quality audio and text pairs. This process helped it generate realistic ambient sounds, synced sound effects, and mood-aligned background music for different video scenes.

Movie Gen follows earlier projects like Make-A-Scene and the Llama Image models, which focused on high-quality image and animation generation.

This release marks the third major milestone in Meta’s generative AI journey and underscores the company’s commitment to pushing the boundaries of media creation tools.

Launching on Insta in 2025

Set to debut on Instagram in 2025, Movie Gen is poised to make advanced video creation more accessible to the platform’s wide range of users.

While the models are currently in a research phase, Meta has expressed optimism that Movie Gen will empower users to produce compelling content with ease.

As the product continues to develop, Meta intends to collaborate with creators and filmmakers to refine Movie Gen’s features and ensure it meets user needs.

Meta’s long-term vision for Movie Gen reflects a broader goal of democratizing access to sophisticated video editing tools. While the suite offers considerable potential, Meta acknowledges that generative AI tools like Movie Gen are meant to enhance, not replace, the work of professional artists and animators.

As Meta prepares to bring Movie Gen to market, the company remains focused on refining the technology and addressing any existing limitations. It plans further optimizations aimed at improving inference time and scaling up the model’s capabilities. Meta has also hinted at potential future applications, such as creating customized animated greetings or short films entirely driven by user input.

The release of Movie Gen could signal a new era for content creation on Meta’s platforms, with Instagram users among the first to experience this innovative tool. As the technology evolves, Movie Gen could become a vital part of Meta’s ecosystem and that of creators — pro and indie alike.


Servers computers

27U Network Server Rack #homelab #network #business #informationtechnology #website #homenetworking

Technology

Epic has a plan for the rest of the decade

On Tuesday, onstage at the Unreal Fest conference in Seattle, Sweeney declared that the company is now “financially sound.” The announcement kicked off a packed two-hour keynote with updates on Unreal Engine, the Unreal Editor for Fortnite, the Epic Games Store, and more.

In an interview with The Verge, Sweeney says that reining in Epic’s spending was part of what brought the company to this point. “Last year, before Unreal Fest, we were spending about a billion dollars a year more than we were making,” Sweeney says. “Now, we’re spending a bit more than we’re making.”


Sweeney says the company is well set up for the future, too, and that it has the ability to make the types of long-term bets he spent the conference describing. “We have a very, very long runway comparing our savings in the bank to our expenditure,” Sweeney says. “We have a very robust amount of funding relative to pretty much any company in the industry and are making forward investments really judiciously that we could throttle up or down as our fortunes change. We feel we’re in a perfect position to execute for the rest of this decade and achieve all of our plans at our size.”

Epic has ambitious plans. Right now, Epic offers both Unreal Engine, its high-end game development tools, and Unreal Editor for Fortnite, which is designed to be simpler to use. What it’s building toward is a new version of Unreal Engine that can tie them together.

“The real power will come when we bring these two worlds together so we have the entire power of our high-end game engine merged with the ease of use that we put together in [Unreal Editor for Fortnite],” Sweeney says. “That’s going to take several years. And when that process is complete, that will be Unreal Engine 6.”

Unreal Engine 6 is meant to let developers “build an app once and then deploy it as a standalone game for any platform,” Sweeney says. Developers will be able to deploy the work that they do into Fortnite or other games that “choose to use this technology base,” which would allow for interoperable content.

The upcoming “persistent universe” Epic is building with Disney is an example of the vision. “We announced that we’re working with Disney to build a Disney ecosystem that’s theirs, but it fully interoperates with the Fortnite ecosystem,” Sweeney says. “And what we’re talking about with Unreal Engine 6 is the technology base that’s going to make that possible for everybody. Triple-A game developers to indie game developers to Fortnite creators achieving that same sort of thing.”

If you read my colleague Andrew Webster’s interview with Sweeney from March 2023, the idea of interoperability to make the metaverse work will seem familiar. At Unreal Fest this week, I got a better picture of how the mechanics of that might work with things like Unreal Engine 6 and the company’s soon-to-open Fab marketplace to shop for digital assets.

Fab will be able to host assets that can work in Minecraft or Roblox, Sweeney says. But the bigger goal is to let Fab creators offer “one logical asset that has different file formats that work in different contexts.” He gave an example of how a user might buy a forest mesh set that has different content optimized for Unreal Engine, Unity, Roblox, and Minecraft. “Having seamless movement of content from place to place is going to be one of the critical things that makes the metaverse work without duplication.”

But for an interoperable metaverse to really be possible, companies like Epic, Roblox, and Microsoft will need to find ways for players to move between those worlds instead of keeping them siloed — and for the most part, that isn’t on the horizon.

Sweeney says Epic hasn’t had “those sorts of discussions” with anyone but Disney yet. “But we will, over time,” he says. He described an ideal where companies, working as peers, would use revenue sharing as a way to create incentives for item shops that people want to buy digital goods from and “sources of engagement” (like Fortnite experiences) that people want to spend time in.

“The whole thesis here is that players are gravitating towards games which they can play together with all their friends, and players are spending more on digital items in games that they trust they’re going to play for a long time,” Sweeney says. “If you’re just dabbling in a game, why would you spend money to buy an item that you’re never going to use again? If we have an interoperable economy, then that will increase player trust that today’s spending on buying digital goods results in things that they’re going to own for a long period of time, and it will work in all the places they go.”


“There’s no reason why we couldn’t have a federated way to flow between Roblox, Minecraft, and Fortnite,” Epic EVP Saxs Persson says. “From our perspective, that would be amazing, because it keeps people together and lets the best ecosystem win.” Epic sees in its surveys that “people are not dogmatic about where they play,” Persson says.

Of course, there’s plenty of opportunity for Epic, which already makes a widely played game and a widely used game engine and is building Fortnite into a game-making tool. (And I haven’t even mentioned how Unreal Engine is increasingly used in filmmaking and other industries.) The end state sounds great for Epic, but Epic also has to make the math make sense for everyone else.

And it has to do that without much of a presence on mobile. The company has spent years in legal battles with Apple and Google over their mobile app store practices, and it just sued Samsung, too. The Epic Games Store recently launched on Android globally and on iOS in the EU, but thanks to restrictions on third-party app stores, the company’s game store boss, Steve Allison, tells The Verge that reaching its end-of-year install goal is “likely impossible.” Any major change could take quite a while, according to Sweeney. “It will be a long battle, and it will likely result in a long series of battles, each of which moves a set of freedoms forward, rather than having a single worldwide moment of victory,” Sweeney says.

There’s one other battle Epic is fighting: Fortnite is still hugely popular, but there is waning interest — or hype, at least — in the metaverse. Sweeney and Persson, however, don’t exactly agree about the term seemingly falling out of popularity.

“It’s like there’s metaverse weather,” Sweeney says. “Some days it’s good, some days it’s bad. Depends on who’s doing the talking about it.”

Servers computers

DevOps & SysAdmins: Are blade servers noisier than regular rack servers? (6 Solutions!!)


With thanks & praise to God, and with thanks to the many people who have made this project possible! | Content (except music & images) licensed under CC BY-SA https://meta.stackexchange.com/help/licensing | Music: https://www.bensound.com/licensing | Images: https://stocksnap.io/license & others | With thanks to user sysadmin1138 (serverfault.com/users/3038), user lweeks (serverfault.com/users/461999), user David Mackintosh (serverfault.com/users/6767), user Dan Carley (serverfault.com/users/7083), user codeulike (serverfault.com/users/1395), user Chopper3 (serverfault.com/users/1435), user Brian Knoblauch (serverfault.com/users/1403), and the Stack Exchange Network (serverfault.com/questions/76767). Trademarks are property of their respective owners. Disclaimer: All information is provided “AS IS” without warranty of any kind. You are responsible for your own actions. Please contact me if anything is amiss at Roel D.OT VandePaar A.T gmail.com .


-

Womens Workouts2 weeks ago

Womens Workouts2 weeks ago3 Day Full Body Women’s Dumbbell Only Workout

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoHow to unsnarl a tangle of threads, according to physics

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoHyperelastic gel is one of the stretchiest materials known to science

-

Technology2 weeks ago

Technology2 weeks agoWould-be reality TV contestants ‘not looking real’

-

Science & Environment2 weeks ago

Science & Environment2 weeks ago‘Running of the bulls’ festival crowds move like charged particles

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoMaxwell’s demon charges quantum batteries inside of a quantum computer

-

News2 weeks ago

News2 weeks agoOur millionaire neighbour blocks us from using public footpath & screams at us in street.. it’s like living in a WARZONE – WordupNews

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoHow to wrap your mind around the real multiverse

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoSunlight-trapping device can generate temperatures over 1000°C

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoLiquid crystals could improve quantum communication devices

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoQuantum ‘supersolid’ matter stirred using magnets

-

Science & Environment2 weeks ago

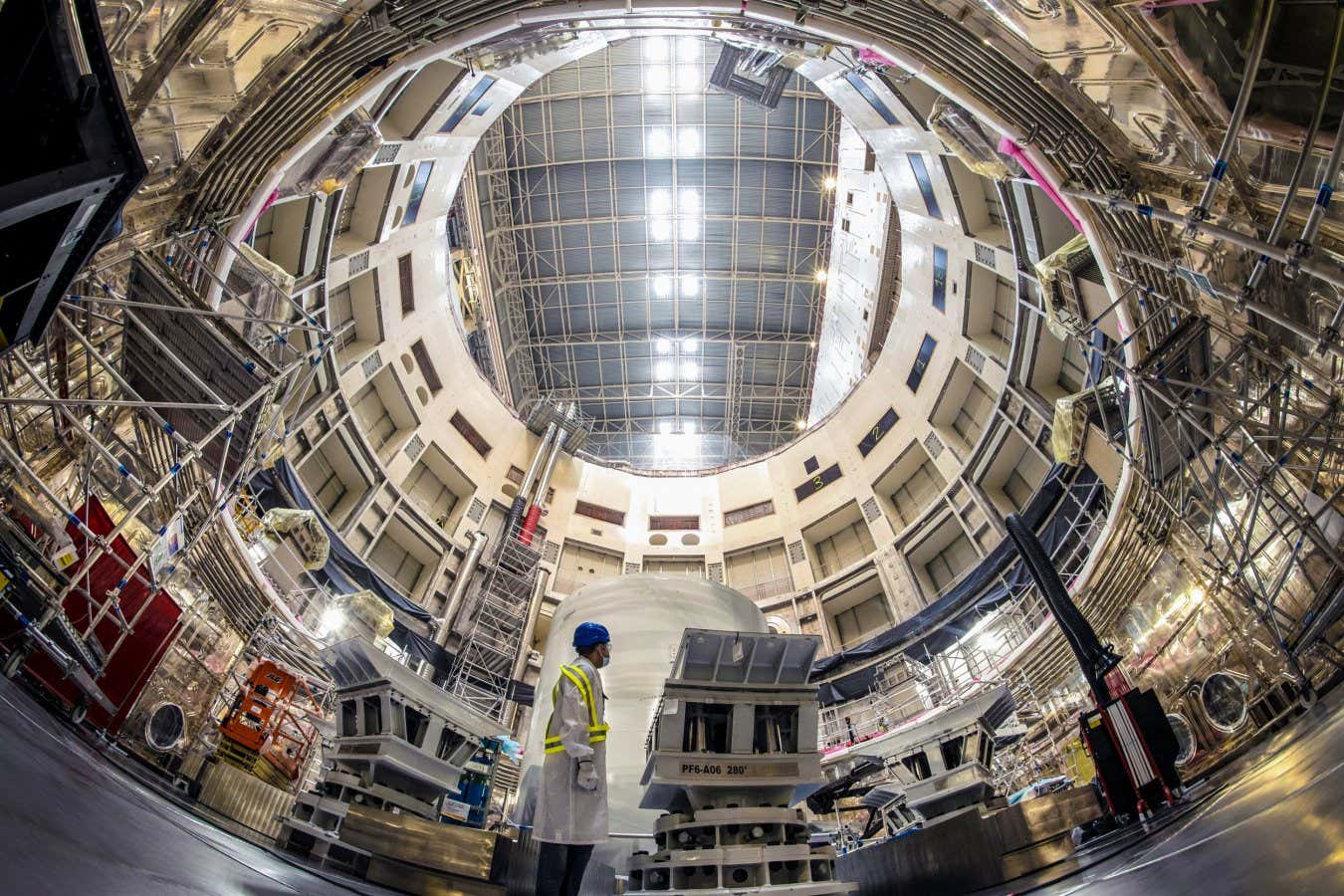

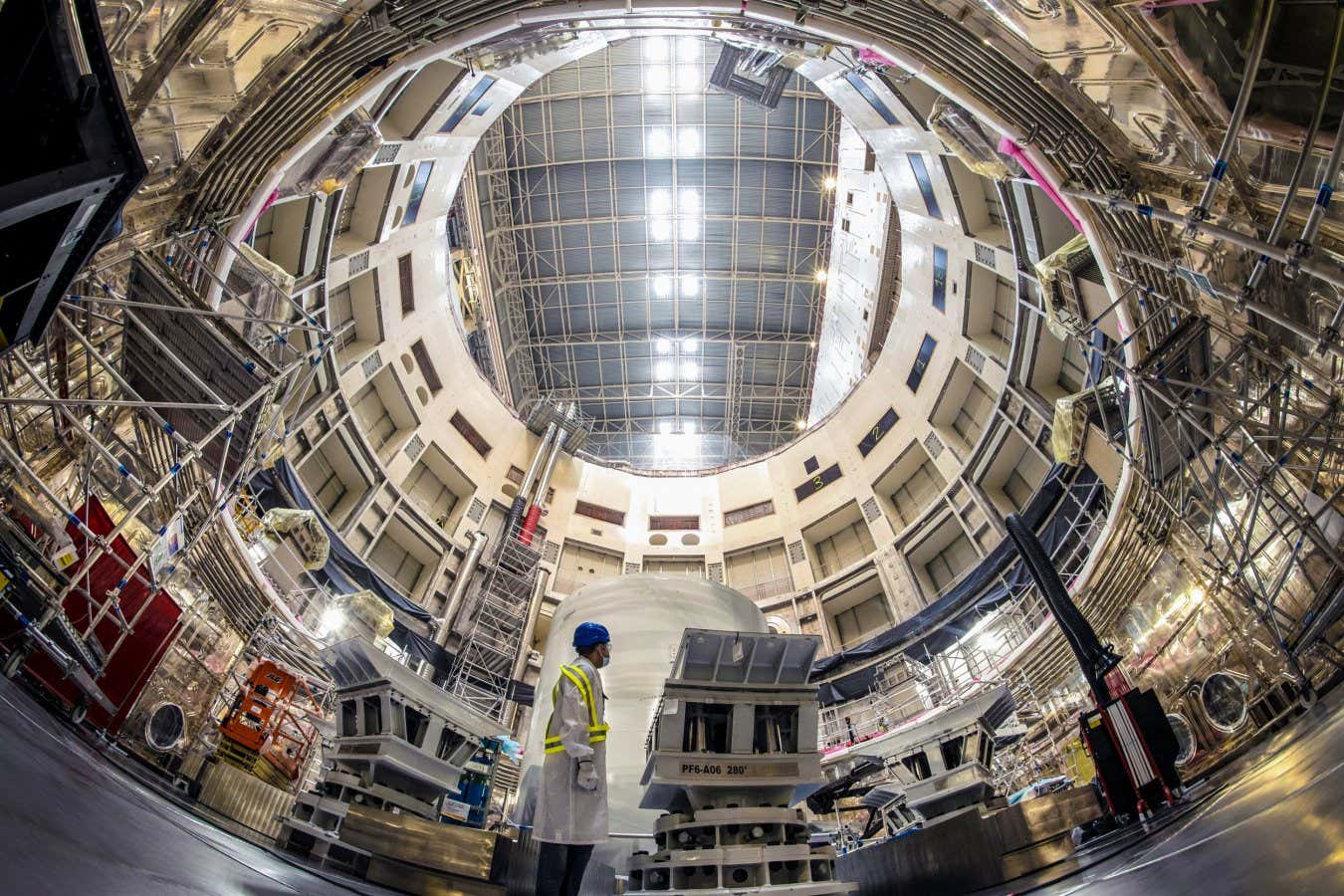

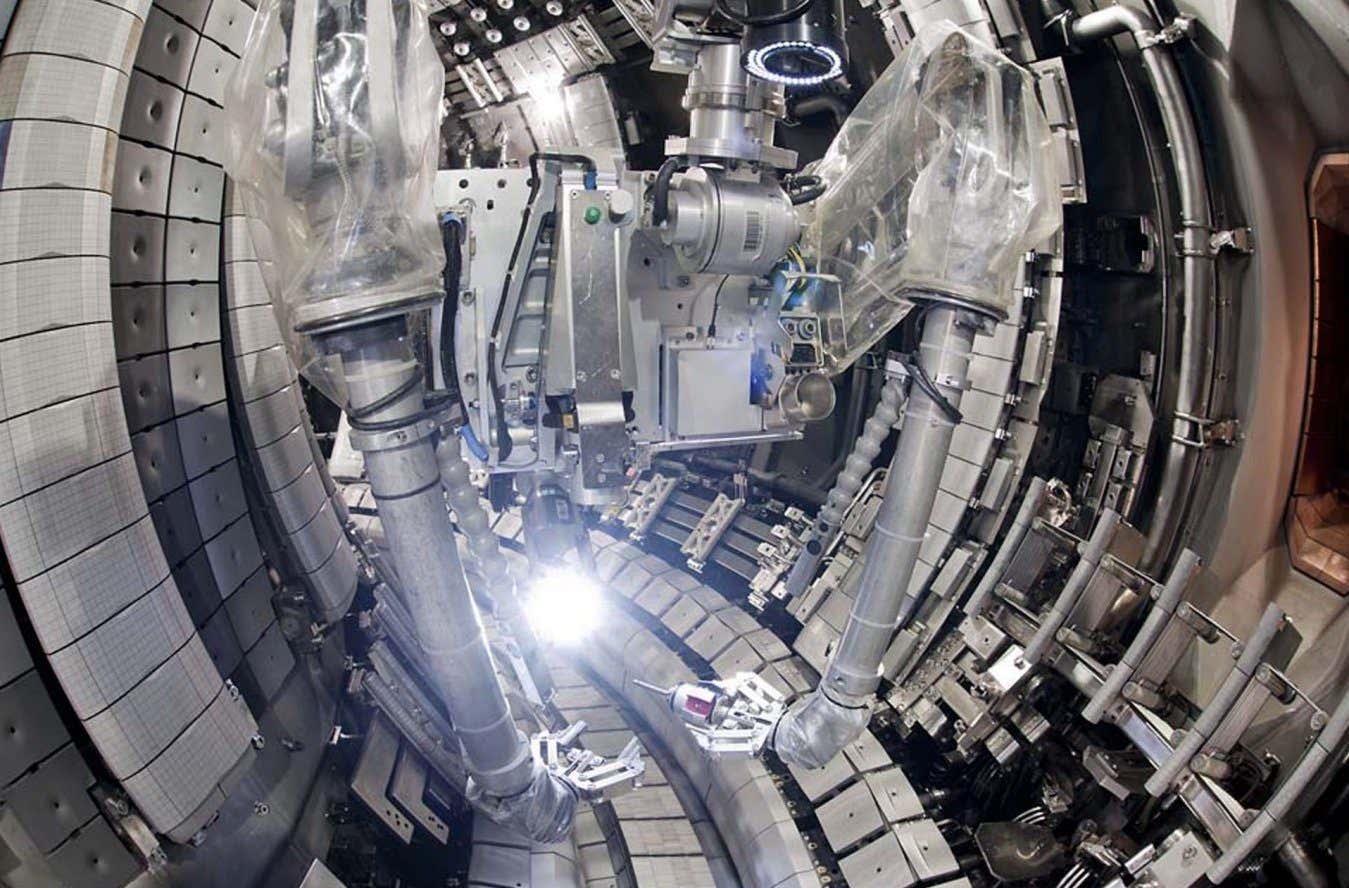

Science & Environment2 weeks agoITER: Is the world’s biggest fusion experiment dead after new delay to 2035?

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoPhysicists are grappling with their own reproducibility crisis

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoQuantum forces used to automatically assemble tiny device

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoWhy this is a golden age for life to thrive across the universe

-

News2 weeks ago

News2 weeks agoYou’re a Hypocrite, And So Am I

-

News3 weeks ago

the pick of new debut fiction

-

Sport2 weeks ago

Sport2 weeks agoJoshua vs Dubois: Chris Eubank Jr says ‘AJ’ could beat Tyson Fury and any other heavyweight in the world

-

Science & Environment3 weeks ago

Science & Environment3 weeks agoCaroline Ellison aims to duck prison sentence for role in FTX collapse

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoNuclear fusion experiment overcomes two key operating hurdles

-

Technology1 week ago

Technology1 week ago‘From a toaster to a server’: UK startup promises 5x ‘speed up without changing a line of code’ as it plans to take on Nvidia, AMD in the generative AI battlefield

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoTime travel sci-fi novel is a rip-roaringly good thought experiment

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoNerve fibres in the brain could generate quantum entanglement

-

MMA1 week ago

MMA1 week agoConor McGregor challenges ‘woeful’ Belal Muhammad, tells Ilia Topuria it’s ‘on sight’

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoLaser helps turn an electron into a coil of mass and charge

-

Business7 days ago

Eurosceptic Andrej Babiš eyes return to power in Czech Republic

-

Football1 week ago

Football1 week agoFootball Focus: Martin Keown on Liverpool’s Alisson Becker

-

News2 weeks ago

News2 weeks agoIsrael strikes Lebanese targets as Hizbollah chief warns of ‘red lines’ crossed

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoRethinking space and time could let us do away with dark matter

-

News2 weeks ago

News2 weeks ago▶️ Media Bias: How They Spin Attack on Hezbollah and Ignore the Reality

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoUK spurns European invitation to join ITER nuclear fusion project

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoCardano founder to meet Argentina president Javier Milei

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoMeet the world's first female male model | 7.30

-

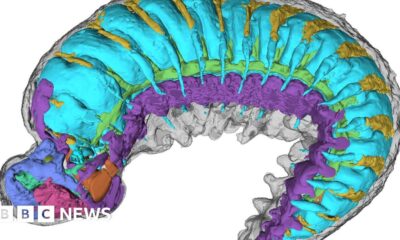

Science & Environment1 week ago

Science & Environment1 week agoX-rays reveal half-billion-year-old insect ancestor

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoA slight curve helps rocks make the biggest splash

-

Business3 weeks ago

JPMorgan in talks to take over Apple credit card from Goldman Sachs

-

News3 weeks ago

News3 weeks ago▶️ Hamas in the West Bank: Rising Support and Deadly Attacks You Might Not Know About

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoFuture of fusion: How the UK’s JET reactor paved the way for ITER

-

Womens Workouts2 weeks ago

Womens Workouts2 weeks agoBest Exercises if You Want to Build a Great Physique

-

News2 weeks ago

News2 weeks agoWhy Is Everyone Excited About These Smart Insoles?

-

News2 weeks ago

News2 weeks agoFour dead & 18 injured in horror mass shooting with victims ‘caught in crossfire’ as cops hunt multiple gunmen

-

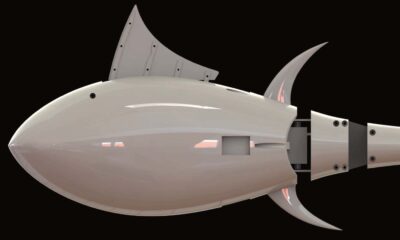

Technology2 weeks ago

Technology2 weeks agoRobo-tuna reveals how foldable fins help the speedy fish manoeuvre

-

Business1 week ago

Should London’s tax exiles head for Spain, Italy . . . or Wales?

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoEthereum is a 'contrarian bet' into 2025, says Bitwise exec

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoA new kind of experiment at the Large Hadron Collider could unravel quantum reality

-

Health & fitness2 weeks ago

Health & fitness2 weeks agoThe secret to a six pack – and how to keep your washboard abs in 2022

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoQuantum time travel: The experiment to ‘send a particle into the past’

-

News3 weeks ago

News3 weeks agoNew investigation ordered into ‘doorstep murder’ of Alistair Wilson

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoWhy we need to invoke philosophy to judge bizarre concepts in science

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoBitcoin miners steamrolled after electricity thefts, exchange ‘closure’ scam: Asia Express

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoDorsey’s ‘marketplace of algorithms’ could fix social media… so why hasn’t it?

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoDZ Bank partners with Boerse Stuttgart for crypto trading

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoLow users, sex predators kill Korean metaverses, 3AC sues Terra: Asia Express

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoBitcoin bulls target $64K BTC price hurdle as US stocks eye new record

-

Womens Workouts2 weeks ago

Womens Workouts2 weeks agoEverything a Beginner Needs to Know About Squatting

-

Womens Workouts2 weeks ago

Womens Workouts2 weeks ago3 Day Full Body Toning Workout for Women

-

Travel2 weeks ago

Travel2 weeks agoDelta signs codeshare agreement with SAS

-

Servers computers1 week ago

Servers computers1 week agoWhat are the benefits of Blade servers compared to rack servers?

-

Politics1 week ago

Politics1 week agoHope, finally? Keir Starmer’s first conference in power – podcast | News

-

Technology1 week ago

Technology1 week agoThe best robot vacuum cleaners of 2024

-

Sport2 weeks ago

Sport2 weeks agoUFC Edmonton fight card revealed, including Brandon Moreno vs. Amir Albazi headliner

-

Technology2 weeks ago

Technology2 weeks agoiPhone 15 Pro Max Camera Review: Depth and Reach

-

News2 weeks ago

News2 weeks agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

Health & fitness2 weeks ago

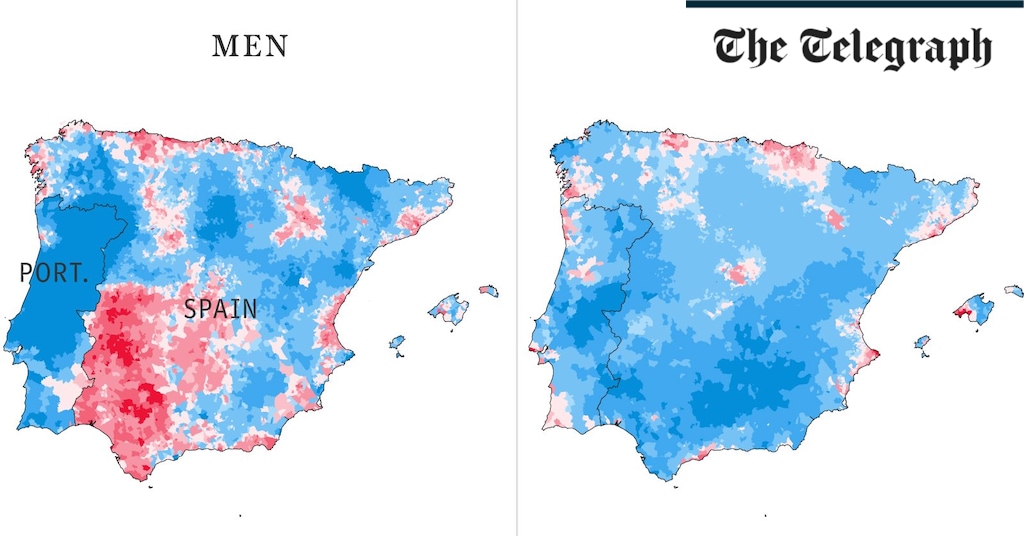

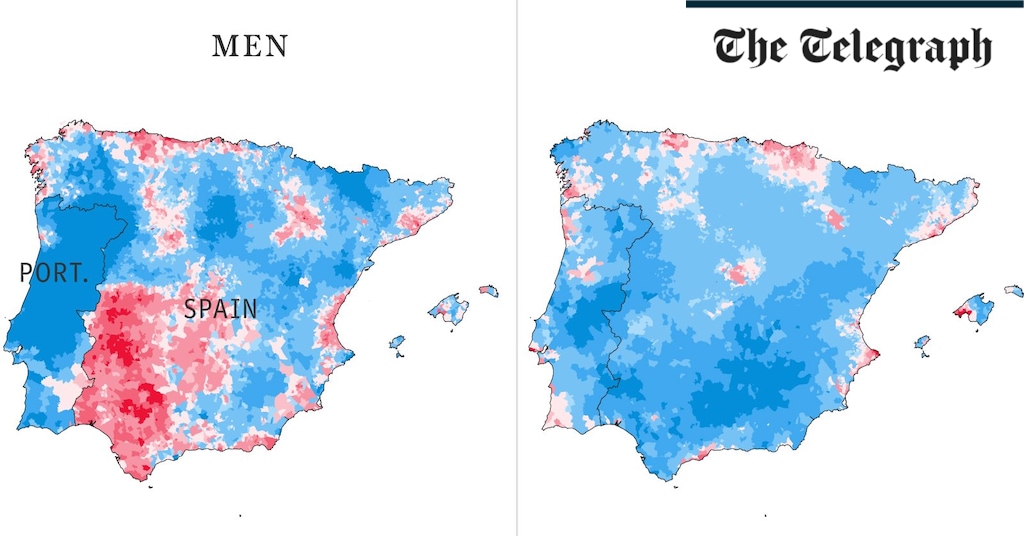

Health & fitness2 weeks agoThe maps that could hold the secret to curing cancer

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoBeing in two places at once could make a quantum battery charge faster

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoRedStone integrates first oracle price feeds on TON blockchain

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoBlockdaemon mulls 2026 IPO: Report

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoCoinbase’s cbBTC surges to third-largest wrapped BTC token in just one week

-

Politics2 weeks ago

UK consumer confidence falls sharply amid fears of ‘painful’ budget | Economics

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoCNN TÜRK – 🔴 Canlı Yayın ᴴᴰ – Canlı TV izle

-

News1 week ago

News1 week agoUS Newspapers Diluting Democratic Discourse with Political Bias

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoHow one theory ties together everything we know about the universe

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoCrypto scammers orchestrate massive hack on X but barely made $8K

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoTiny magnet could help measure gravity on the quantum scale

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoHow do you recycle a nuclear fusion reactor? We’re about to find out

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoDecentraland X account hacked, phishing scam targets MANA airdrop

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoTelegram bot Banana Gun’s users drained of over $1.9M

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoVonMises bought 60 CryptoPunks in a month before the price spiked: NFT Collector

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoSEC asks court for four months to produce documents for Coinbase

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks ago‘Silly’ to shade Ethereum, the ‘Microsoft of blockchains’ — Bitwise exec

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks ago‘No matter how bad it gets, there’s a lot going on with NFTs’: 24 Hours of Art, NFT Creator

-

Business2 weeks ago

Thames Water seeks extension on debt terms to avoid renationalisation

-

Business2 weeks ago

How Labour donor’s largesse tarnished government’s squeaky clean image

-

Politics2 weeks ago

‘Appalling’ rows over Sue Gray must stop, senior ministers say | Sue Gray

-

News2 weeks ago

News2 weeks agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

Womens Workouts2 weeks ago

Womens Workouts2 weeks agoHow Heat Affects Your Body During Exercise

-

Womens Workouts2 weeks ago

Womens Workouts2 weeks agoKeep Your Goals on Track This Season

-

TV2 weeks ago

TV2 weeks agoCNN TÜRK – 🔴 Canlı Yayın ᴴᴰ – Canlı TV izle

-

News2 weeks ago

News2 weeks agoChurch same-sex split affecting bishop appointments

-

Politics3 weeks ago

Politics3 weeks agoTrump says he will meet with Indian Prime Minister Narendra Modi next week

-

Technology2 weeks ago

Technology2 weeks agoFivetran targets data security by adding Hybrid Deployment

-

Science & Environment2 weeks ago

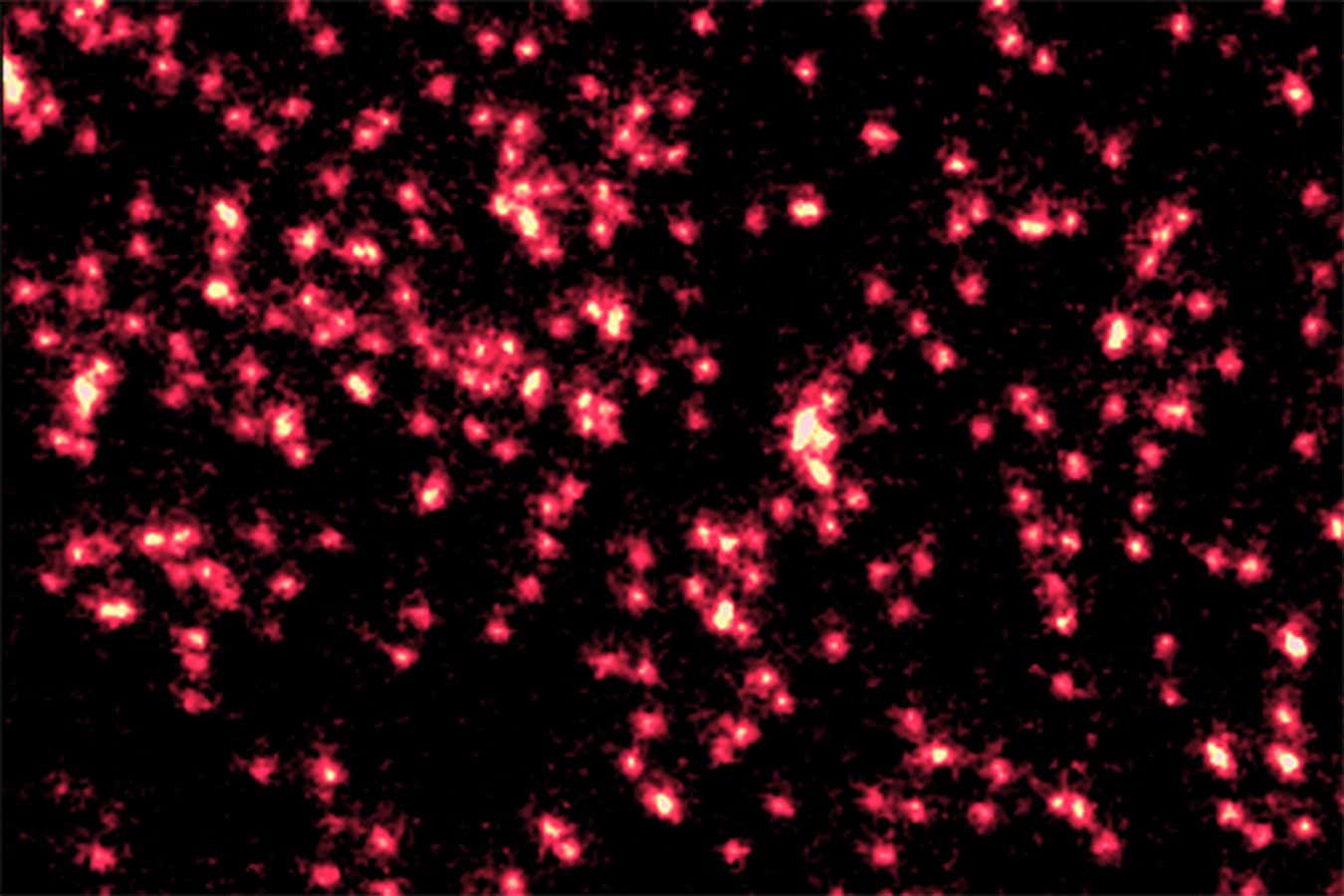

Science & Environment2 weeks agoSingle atoms captured morphing into quantum waves in startling image

-

Politics2 weeks ago

Politics2 weeks agoLabour MP urges UK government to nationalise Grangemouth refinery

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoLouisiana takes first crypto payment over Bitcoin Lightning

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks ago$12.1M fraud suspect with ‘new face’ arrested, crypto scam boiler rooms busted: Asia Express

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoA tale of two mysteries: ghostly neutrinos and the proton decay puzzle

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoBitcoin price hits $62.6K as Fed 'crisis' move sparks US stocks warning

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoCertiK Ventures discloses $45M investment plan to boost Web3

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoBeat crypto airdrop bots, Illuvium’s new features coming, PGA Tour Rise: Web3 Gamer

-

CryptoCurrency2 weeks ago

CryptoCurrency2 weeks agoVitalik tells Ethereum L2s ‘Stage 1 or GTFO’ — Who makes the cut?
