Technology

Everything you need to know about From season 3

MGM+ subscribers are ahead of the curve on From, a sci-fi/horror series that follows people who have been trapped in a small town in Middle America that will literally not let them leave. There are even monsters that come out at night to hunt people for sport, and the human survivors have largely split into two factions: the Township and Colony House. But there’s one thing that almost everyone in the town wants, and that’s a way to escape once and for all.

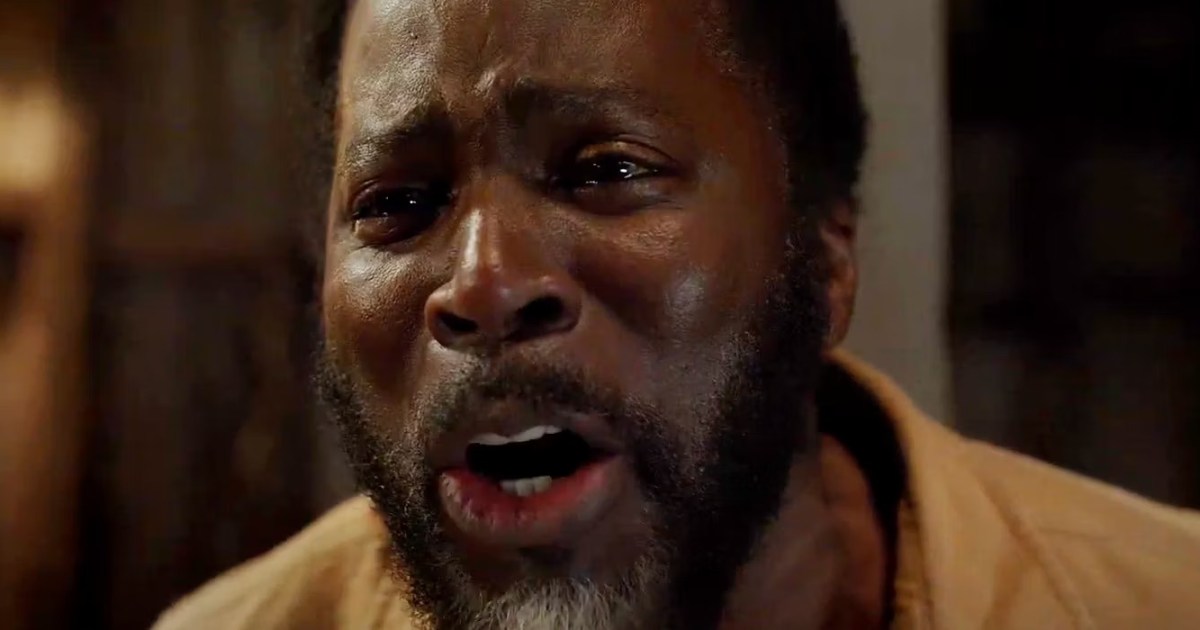

From is headlined by Harold Perrineau, an actor who, after his stint on Lost, knows a thing or two about playing a single father cut off from the rest of the world. On this series, Perrineau plays Boyd Stevens, the father of Ellis Stevens (Corteon Moore), and the closest thing that the town has to both a sheriff and a mayor. Boyd also has plenty of inner demons to contend with in addition to the creatures lurking inside and outside of the town.

Die-hard fans of From have been hyping up the series ahead of its return for season 3. But for newcomers and veterans alike, we’ve put together this guide to everything you need to know about From season 3. And as soon as we know more, we’ll share it here as well.

What’s coming up in the remaining episodes of From season 3?

MGM+ has released the description of From season 3 episode 7, Three Fragile Lives, which will premiere on November 3.

“The edges begin to fray as concerns about Fatima’s pregnancy deepen; Jade follows a clue trail into the forest; Julie and Randall seek a bit of normalcy.”

Episode 8 will be called Thresholds, and it debuts on November 10. The final episodes of the season will be a two-part story, Revelations: Chapter One and Revelations: Chapter Two, on November 17 and November 24, respectively.

Is there a trailer for From season 3?

MGM+ released the From season 3 official trailer during a panel at San Diego Comic-Con 2024. The mystery and creepiness will most certainly continue in the new season. “In the wake of Season 2’s epic cliffhanger, escape will become a tantalizing and very real possibility as the true nature of the town comes into focus, and the townspeople go on offense against the myriad horrors surrounding them,” the trailer’s synopsis reads.

In April, MGM+ debuted a brief 30-second teaser that features Boyd being tortured, but not in terms of physical pain to himself. Instead, he’s forced to watch someone he cares about in obvious distress as he attempts to offer words of comfort. It’s a disturbing scene, and it may be the realization of the warning that Boyd received last season while confronting the entity. This may be the price that Boyd has to pay, even if the actual punishment is inflicted on someone else.

Has From season 3 begun filming?

Yes. Harold Perrineau confirmed at the end of December 2023 that filming on the third season had started.

We started filming Season 3….

So I think you’re good 👍🏾 #FROMily @FROMonMGM https://t.co/sKxkRP4cWa

— Harold Perrineau (@HaroldPerrineau) December 29, 2023

So far, Perrineau hasn’t shared an update about whether filming has been completed. But he has actively promoted the show on social media.

The official From account on the social media service formerly known as Twitter hasn’t had much new in 2024. The account did share this image of one of the protective talismans back in February in response to, of all things, the announcement of a new Taylor Swift album.

👀 https://t.co/Onulvh0HoB pic.twitter.com/oBjXhrLdHN

— FROM on MGM+ (@FROMonMGM) February 5, 2024

What will happen in From season 3?

This show makes it difficult to predict what will happen next, but the season 2 finale did end on a pretty big cliffhanger. Tabitha is back in the outside world, and that opens up a lot of possibilities. Will she try to warn people about the town? Does she feel obligated to rescue the people that she left behind? And more importantly, can she find the town again without getting trapped herself? Having Tabitha outside of the town also offers the show a chance to reveal whether the disappearance of the various characters who are trapped in the town has been noticed or investigated by the people they knew and loved.

Meanwhile, back in the town, Boyd was warned that fighting the entity would just prolong everyone’s suffering, but he did it anyway. Presumably, there will be consequences for disregarding that warning.

Who is in From’s season 3 cast?

Perrineau leads From season 3’s large ensemble cast. Featured performers include:

- Harold Perrineau as Boyd Stevens

- Catalina Sandino Moreno as Tabitha Matthews

- Eion Bailey as Jim Matthews

- Avery Konrad as Sara Myers

- David Alpay as Jade Herrera

- Elizabeth Saunders as Donna Raines

- Scott McCord as Victor

- Ricky He as Kenny Liu

- Corteon Moore as Ellis Stevens

- Chloe Van Landschoot as Kristi Miller

- Pegah Ghafoori as Fatima Hassan

- Hannah Cheramy as Julie Matthews

- Simon Webster as Ethan Matthews

- Elizabeth Moy as Tian-Chen Liu

- Nathan D. Simmons as Elgin

- Kaelen Ohm as Marielle

- Angela Moore as Bakta

- A.J. Simmons as Randall

- Deborah Grover as Tillie

The biggest season 3 additions include Robert Joy as Henry, a curmudgeon to whom the years have not been kind, and Samantha Brown as Acosta, a new-to-the-force police officer who is in over her head.

Technology

What’s new on Apple TV+ this month (November 2024)

Due to its unique model that includes only original content, Apple TV+ tends to have a very slim new release slate. However, just about every Apple TV+ release features A-list talent, and it has set a high bar for quality. Just look at Best Picture winner CODA and Emmy-winning drama Severance (returning in January).

This month is no exception, as there are only four new additions to the library in November. We’ve highlighted the two most anticipated, but don’t overlook Season 2 of the critically acclaimed comedy Bad Sisters or the Malala Yousafzai and Jennifer Lawrence documentary Bread & Roses.

There are only a few new arrivals each month to Apple TV+, but they’re usually all worth at least a glance. Read on for everything coming to Apple TV+ in November 2024.

Looking for more content? Check out our guides on the best new shows to stream, the best shows on Apple TV+, the best shows on Netflix, and the best shows on Hulu.


Technology

Google could add album art to ‘Now Playing’ on Pixel phones

Google may upgrade the “Now Playing” feature by adding the much-needed album art to the history page. Now Playing has been able to identify songs with a high degree of accuracy, but the list only included the name of the song and the artist.

Now Playing is constantly operating in the background, but only for music

Introduced way back in 2017 along with the Pixel 2, the Now Playing feature has remained exclusive to the Google Pixel phones. It essentially identifies songs that are playing nearby and works well even on the latest Pixel 9 devices.

Apps like Shazam have been recognizing music and songs for quite some time. However, Now Playing has some tricks for the Pixel phones. Now Playing works entirely in the background. Pixel users don’t even need to pull out their phones.

While working in the background, Now Playing relies on the low-power efficiency cores to continuously analyze audio through the microphone. If it picks up audio that seems like music or a song, Now Playing requests the performance cores to record a few seconds of the audio.

Now Playing then matches the recorded audio against a database containing tens of thousands of fingerprints of the most popular songs in a particular region. After processing and matching, Now Playing displays the name and artist of the song on the lock screen as well as in a notification.
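The two-stage flow described above, a cheap always-on check followed by a fingerprint lookup, can be illustrated with a toy Python sketch. This is not Google's implementation: the amplitude-based gate, the peak-based fingerprint, and every name here (`looks_like_music`, `fingerprint`, `identify`, `FINGERPRINT_DB`) are assumptions made up for illustration. Real audio fingerprinting is far more robust than this.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence, Tuple

Fingerprint = Tuple[int, ...]


@dataclass(frozen=True)
class Track:
    title: str
    artist: str


# Hypothetical local database mapping fingerprints to tracks.
FINGERPRINT_DB: Dict[Fingerprint, Track] = {
    (3, 1, 4, 1): Track("Example Song", "Example Artist"),
}


def looks_like_music(samples: Sequence[float], threshold: float = 0.1) -> bool:
    """Stage 1, the cheap gate: flag audio whose mean absolute
    amplitude exceeds a threshold (a stand-in for a real detector)."""
    if not samples:
        return False
    return sum(abs(s) for s in samples) / len(samples) > threshold


def fingerprint(samples: Sequence[float], bins: int = 4) -> Fingerprint:
    """Stage 2, toy fingerprint: split the clip into `bins` chunks and
    quantize each chunk's peak amplitude to an integer."""
    chunk = max(1, len(samples) // bins)
    return tuple(
        round(max((abs(s) for s in samples[i * chunk:(i + 1) * chunk]), default=0.0) * 10)
        for i in range(bins)
    )


def identify(samples: Sequence[float]) -> Optional[Track]:
    """Run the gate first; only fingerprint and look up audio that passes."""
    if not looks_like_music(samples):
        return None
    return FINGERPRINT_DB.get(fingerprint(samples))


clip = [0.3] * 4 + [0.1] * 4 + [0.4] * 4 + [0.1] * 4
match = identify(clip)
if match is not None:
    print(f"{match.title} by {match.artist}")
```

The point of the split is that the expensive step runs only when the inexpensive gate fires, which mirrors the efficiency-core and performance-core division the article describes.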

Needless to say, Now Playing is fairly accurate. However, the list of songs it recognizes contains only the name of the song, the artist, and a timestamp.

Google’s Now Playing feature for Pixel devices may get album art

The songs that Now Playing has recognized are listed under Settings > Sound & vibration > Now Playing. The page shows the history of identified songs in reverse chronological order.

Although there’s an icon next to each song, Google has refused to append any album art to the songs Now Playing recognizes. According to Android Authority, this might change in the future.

The hidden system app that downloads the Now Playing database may soon also grab album art. The code change is titled “#AlbumArt Add Now Playing album art downloads to the network usage log”.

Google has yet to assign a dedicated online repository from where Now Playing will download album art for the songs it recognizes. However, Ambient Music Mod, an open-source port of Now Playing by developer Kieron Quinn, already has the feature. The reverse-engineered version essentially replaces the generic music note icon with album art.

Technology

Disney forms dedicated AI and XR group to coordinate company-wide use and adoption

Disney is adding another layer to its AI and extended reality strategies. As first reported by Reuters, the company recently formed a dedicated emerging technologies unit. Dubbed the Office of Technology Enablement, the group will coordinate the company’s exploration, adoption and use of artificial intelligence, AR and VR tech.

It has tapped Jamie Voris, previously the CTO of its Studios Technology division, to oversee the effort. Before joining Disney in 2010, Voris was the chief technology officer at the National Football League. More recently, he led the development of the company’s Apple Vision Pro app. Voris will report to Alan Bergman, the co-chairman of Disney Entertainment. Reuters reports the company eventually plans to grow the group to about 100 employees.

“The pace and scope of advances in AI and XR are profound and will continue to impact consumer experiences, creative endeavors, and our business for years to come — making it critical that Disney explore the exciting opportunities and navigate the potential risks,” Bergman wrote in an email Disney shared with Engadget. “The creation of this new group underscores our dedication to doing that and to being a positive force in shaping responsible use and best practices.”

A Disney spokesperson told Engadget the Office of Technology Enablement won’t take over any existing AI and XR projects at the company. Instead, it will support Disney’s other teams, many of which are already working on products that involve those technologies, to ensure their work fits into the company’s broader strategic goals.

“It is about bringing added focus, alignment, and velocity to those efforts, and about reinforcing our commitment being a positive force in shaping responsible use and best practices,” the spokesperson said.

It’s safe to say Disney has probably navigated the last two decades of technological change better than most of Hollywood. For instance, the company’s use of the Unreal Engine in conjunction with a digital set known as The Volume has streamlined the production of VFX-heavy shows like The Mandalorian. With extended reality and AI in particular promising tidal changes to how humans work and play, it makes sense to add some additional oversight to how those technologies are used at the company.


Technology

Meta unveils AI tools to give robots a human touch in physical world


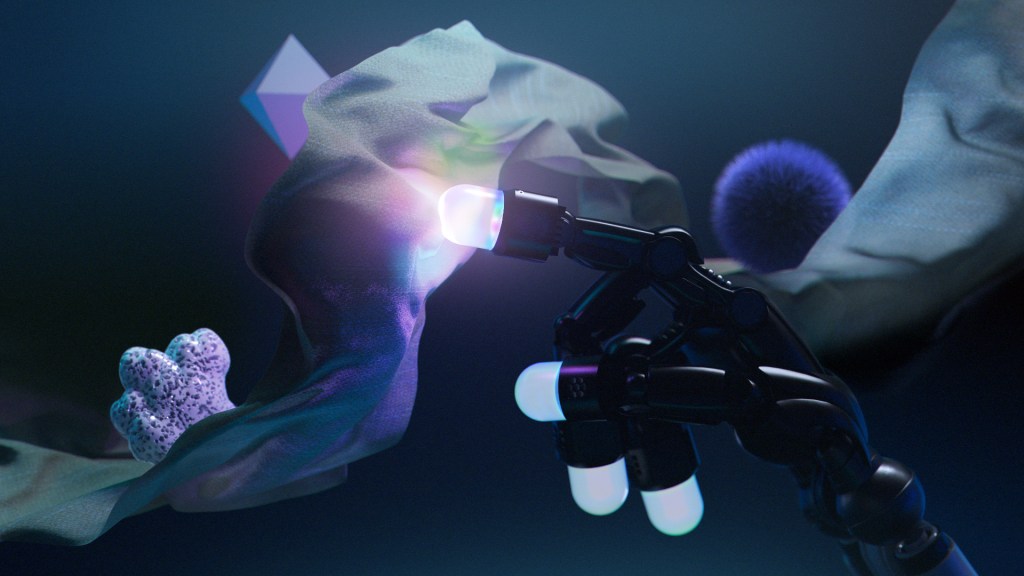

Meta made several major announcements for robotics and embodied AI systems this week. This includes releasing benchmarks and artifacts for better understanding and interacting with the physical world. Sparsh, Digit 360 and Digit Plexus, the three research artifacts released by Meta, focus on touch perception, robot dexterity and human-robot interaction. Meta is also releasing PARTNR, a new benchmark for evaluating planning and reasoning in human-robot collaboration.

The release comes as advances in foundation models have renewed interest in robotics, and AI companies are gradually expanding their race from the digital realm to the physical world.

There is renewed hope in the industry that with the help of foundation models such as large language models (LLMs) and vision-language models (VLMs), robots can accomplish more complex tasks that require reasoning and planning.

Tactile perception

Sparsh, which was created in collaboration with the University of Washington and Carnegie Mellon University, is a family of encoder models for vision-based tactile sensing. It is meant to provide robots with touch perception capabilities. Touch perception is crucial for robotics tasks, such as determining how much pressure can be applied to a certain object to avoid damaging it.

The classic approach to incorporating vision-based tactile sensors in robot tasks is to use labeled data to train custom models that can predict useful states. This approach does not generalize across different sensors and tasks.

Meta describes Sparsh as a general-purpose model that can be applied to different types of vision-based tactile sensors and various tasks. To overcome the challenges faced by previous generations of touch perception models, the researchers trained Sparsh models through self-supervised learning (SSL), which obviates the need for labeled data. The model has been trained on more than 460,000 tactile images, consolidated from different datasets. According to the researchers’ experiments, Sparsh gains an average 95.1% improvement over task- and sensor-specific end-to-end models under a limited labeled data budget. The researchers have created different versions of Sparsh based on various architectures, including Meta’s I-JEPA and DINO models.

Touch sensors

In addition to leveraging existing data, Meta is also releasing hardware to collect rich tactile information from the physical world. Digit 360 is an artificial finger-shaped tactile sensor with more than 18 sensing features. The sensor has over 8 million taxels for capturing omnidirectional and granular deformations on the fingertip surface. Digit 360 captures various sensing modalities to provide a richer understanding of the environment and object interactions.

Digit 360 also has on-device AI models to reduce reliance on cloud-based servers. This enables it to process information locally and respond to touch with minimal latency, similar to the reflex arc in humans and animals.

“Beyond advancing robot dexterity, this breakthrough sensor has significant potential applications from medicine and prosthetics to virtual reality and telepresence,” Meta researchers write.

Meta is publicly releasing the code and designs for Digit 360 to stimulate community-driven research and innovation in touch perception. But as in the release of open-source models, it has much to gain from the potential adoption of its hardware and models. The researchers believe that the information captured by Digit 360 can help in the development of more realistic virtual environments, which can be big for Meta’s metaverse projects in the future.

Meta is also releasing Digit Plexus, a hardware-software platform that aims to facilitate the development of robotic applications. Digit Plexus can integrate various fingertip and skin tactile sensors onto a single robot hand, encode the tactile data collected from the sensors, and transmit them to a host computer through a single cable. Meta is releasing the code and design of Digit Plexus to enable researchers to build on the platform and advance robot dexterity research.

Meta will be manufacturing Digit 360 in partnership with tactile sensor manufacturer GelSight Inc. It will also partner with South Korean robotics company Wonik Robotics to develop a fully integrated robotic hand with tactile sensors on the Digit Plexus platform.

Evaluating human-robot collaboration

Meta is also releasing Planning And Reasoning Tasks in humaN-Robot collaboration (PARTNR), a benchmark for evaluating the effectiveness of AI models when collaborating with humans on household tasks.

PARTNR is built on top of Habitat, Meta’s simulated environment. It includes 100,000 natural language tasks in 60 houses and involves more than 5,800 unique objects. The benchmark is designed to evaluate the performance of LLMs and VLMs in following instructions from humans.

Meta’s new benchmark joins a growing number of projects that are exploring the use of LLMs and VLMs in robotics and embodied AI settings. In the past year, these models have shown great promise to serve as planning and reasoning modules for robots in complex tasks. Startups such as Figure and Covariant have developed prototypes that use foundation models for planning. At the same time, AI labs are working on creating better foundation models for robotics. An example is Google DeepMind’s RT-X project, which brings together datasets from various robots to train a vision-language-action (VLA) model that generalizes to various robotics morphologies and tasks.


Technology

How to build a company that can save the world and generate a profit

For startups that hope to save the world, or at least make it a better place, balancing impact with profit can be tricky.

“Investor and shareholder expectations are often not aligned with how hard and intractable the problems are that we face as a society,” Allison Wolff, co-founder and CEO of Vibrant Planet, said on the Builders Stage at TechCrunch Disrupt 2024. “I think in some ways, we’re a little bit stuck.”

But it’s not impossible.

Wolff’s company develops cloud-based software for utilities, insurers, and land managers like the U.S. Forest Service to model and respond to wildfire risk. To ensure the company keeps its eye on the mission, it has registered as a public benefit corporation, which requires companies to report on impact in addition to the usual financial information.

“That’s an elegant structure to consider if you haven’t already, and it’s easy to convert,” she said. “And it’s a good forcing function to do the reporting side of that, to really think through every year, what impact are we having, and how do we account for it.”

Another approach is to find a technology and business model that tightly couples purpose and profit. That’s what Areeb Malik and his co-founders did when launching Glacier, their robotic recycling company.

“When I was starting my company, I was looking for the right opportunity, and it was really about aligning profitability with impacts,” he said onstage.

“If you can find a place where you can align, for instance, climate impact, the thing that I’m super passionate about, with making money, then I welcome a PE fund to come and take over my business, because they will juice us for money. That money directly correlates with climate impact.”

Holding fast to the mission isn’t necessarily enough, though, Hyuk-Jeen Suh, general partner at SkyRiver Ventures, said at Disrupt. Mission means nothing if a company’s reach remains limited.

“A lot of founders get focused so much on making their widget that that one widget is all they care about. They haven’t figured out how to build the foundation for scale,” he said. “When you’re making a widget, you have to think, how am I going to mass produce this? How am I going to mass market this?”

If that all sounds like too much for startups to juggle, and that maybe the mission part should fall by the wayside while they master the basics, Suh said that sort of ambition is actually a sign that the companies are on the right track. “They almost have to bite more than they can chew, because without that boldness and vision, I think it will be difficult to really make an impact.”

Technology

Some iPhone 14 Plus phones have a camera issue, but Apple may fix it for free

Apple announced a new service program to fix iPhone 14 Plus phones that have rear cameras that won’t show a preview.

Apple has determined that the rear camera on a very small percentage of iPhone 14 Plus devices may exhibit no preview. Affected devices were manufactured between April 10, 2023 and April 28, 2024.

If your iPhone 14 Plus is affected — and you can enter your serial number on the program page to see if yours is — Apple says it or an Authorized Service Provider will service your phone for free. If you’ve already paid to have the camera repaired, Apple says to reach out to ask if you can get a refund.

Eligible phones will be covered by this program for three years after they were originally sold.

-

Science & Environment1 month ago

Science & Environment1 month agoHow to unsnarl a tangle of threads, according to physics

-

Technology1 month ago

Technology1 month agoIs sharing your smartphone PIN part of a healthy relationship?

-

Science & Environment1 month ago

Science & Environment1 month ago‘Running of the bulls’ festival crowds move like charged particles

-

Science & Environment1 month ago

Science & Environment1 month agoHyperelastic gel is one of the stretchiest materials known to science

-

Technology1 month ago

Technology1 month agoWould-be reality TV contestants ‘not looking real’

-

Science & Environment1 month ago

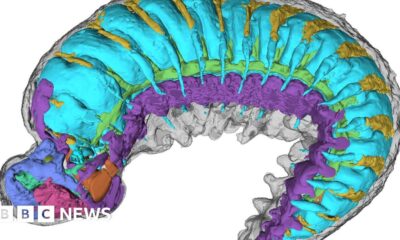

Science & Environment1 month agoX-rays reveal half-billion-year-old insect ancestor

-

Science & Environment1 month ago

Science & Environment1 month agoMaxwell’s demon charges quantum batteries inside of a quantum computer

-

Science & Environment1 month ago

Science & Environment1 month agoSunlight-trapping device can generate temperatures over 1000°C

-

Sport4 weeks ago

Sport4 weeks agoBoxing: World champion Nick Ball set for Liverpool homecoming against Ronny Rios

-

Technology1 month ago

Technology1 month agoUkraine is using AI to manage the removal of Russian landmines

-

Technology4 weeks ago

Technology4 weeks agoGmail gets redesigned summary cards with more data & features

-

Football1 month ago

Football1 month agoRangers & Celtic ready for first SWPL derby showdown

-

Science & Environment1 month ago

Science & Environment1 month agoLaser helps turn an electron into a coil of mass and charge

-

Science & Environment1 month ago

Science & Environment1 month agoPhysicists have worked out how to melt any material

-

Science & Environment1 month ago

Science & Environment1 month agoLiquid crystals could improve quantum communication devices

-

Technology1 month ago

Technology1 month agoRussia is building ground-based kamikaze robots out of old hoverboards

-

Technology4 weeks ago

Technology4 weeks agoSamsung Passkeys will work with Samsung’s smart home devices

-

MMA1 month ago

MMA1 month agoDana White’s Contender Series 74 recap, analysis, winner grades

-

Sport4 weeks ago

Sport4 weeks agoAaron Ramsdale: Southampton goalkeeper left Arsenal for more game time

-

Science & Environment1 month ago

Science & Environment1 month agoQuantum ‘supersolid’ matter stirred using magnets

-

News4 weeks ago

News4 weeks agoWoman who died of cancer ‘was misdiagnosed on phone call with GP’

-

Technology1 month ago

Technology1 month agoEpic Games CEO Tim Sweeney renews blast at ‘gatekeeper’ platform owners

-

TV4 weeks ago

TV4 weeks agoসারাদেশে দিনব্যাপী বৃষ্টির পূর্বাভাস; সমুদ্রবন্দরে ৩ নম্বর সংকেত | Weather Today | Jamuna TV

-

Money4 weeks ago

Money4 weeks agoWetherspoons issues update on closures – see the full list of five still at risk and 26 gone for good

-

MMA4 weeks ago

MMA4 weeks ago‘Uncrowned queen’ Kayla Harrison tastes blood, wants UFC title run

-

Science & Environment1 month ago

Science & Environment1 month agoA new kind of experiment at the Large Hadron Collider could unravel quantum reality

-

News4 weeks ago

News4 weeks ago‘Blacks for Trump’ and Pennsylvania progressives play for undecided voters

-

Technology4 weeks ago

Technology4 weeks agoMicrosoft just dropped Drasi, and it could change how we handle big data

-

Technology1 month ago

Technology1 month agoWhy Machines Learn: A clever primer makes sense of what makes AI possible

-

Science & Environment1 month ago

Science & Environment1 month agoWhy this is a golden age for life to thrive across the universe

-

News4 weeks ago

News4 weeks agoNavigating the News Void: Opportunities for Revitalization

-

News4 weeks ago

News4 weeks agoMassive blasts in Beirut after renewed Israeli air strikes

-

News1 month ago

News1 month agoRwanda restricts funeral sizes following outbreak

-

MMA4 weeks ago

MMA4 weeks agoPereira vs. Rountree prediction: Champ chases legend status

-

MMA4 weeks ago

MMA4 weeks ago‘Dirt decision’: Conor McGregor, pros react to Jose Aldo’s razor-thin loss at UFC 307

-

Technology1 month ago

Technology1 month agoMicrophone made of atom-thick graphene could be used in smartphones

-

Football4 weeks ago

Football4 weeks agoWhy does Prince William support Aston Villa?

-

Technology4 weeks ago

Technology4 weeks agoCheck, Remote, and Gusto discuss the future of work at Disrupt 2024

-

Business4 weeks ago

Business4 weeks agoWhen to tip and when not to tip

-

News4 weeks ago

News4 weeks ago▶ Hamas Spent $1B on Tunnels Instead of Investing in a Future for Gaza’s People

-

Technology4 weeks ago

Technology4 weeks agoMusk faces SEC questions over X takeover

-

News4 weeks ago

News4 weeks agoCornell is about to deport a student over Palestine activism

-

Sport1 month ago

Sport1 month agoChina Open: Carlos Alcaraz recovers to beat Jannik Sinner in dramatic final

-

Science & Environment1 month ago

Science & Environment1 month agoITER: Is the world’s biggest fusion experiment dead after new delay to 2035?

-

Science & Environment1 month ago

Science & Environment1 month agoQuantum forces used to automatically assemble tiny device

-

Science & Environment1 month ago

Science & Environment1 month agoA slight curve helps rocks make the biggest splash

-

News2 months ago

News2 months ago▶️ Hamas in the West Bank: Rising Support and Deadly Attacks You Might Not Know About

-

Business1 month ago

how UniCredit built its Commerzbank stake

-

Business4 weeks ago

Business4 weeks agoWater companies ‘failing to address customers’ concerns’

-

MMA4 weeks ago

MMA4 weeks agoKayla Harrison gets involved in nasty war of words with Julianna Pena and Ketlen Vieira

-

MMA4 weeks ago

MMA4 weeks ago‘I was fighting on automatic pilot’ at UFC 306

-

Sport4 weeks ago

Sport4 weeks ago2024 ICC Women’s T20 World Cup: Pakistan beat Sri Lanka

-

Sport4 weeks ago

Sport4 weeks agoWales fall to second loss of WXV against Italy

-

Science & Environment1 month ago

Science & Environment1 month agoNuclear fusion experiment overcomes two key operating hurdles

-

Womens Workouts1 month ago

Womens Workouts1 month ago3 Day Full Body Women’s Dumbbell Only Workout

-

Technology1 month ago

Technology1 month agoMeta has a major opportunity to win the AI hardware race

-

Business1 month ago

DoJ accuses Donald Trump of ‘private criminal effort’ to overturn 2020 election

-

Sport4 weeks ago

Sport4 weeks agoCoco Gauff stages superb comeback to reach China Open final

-

Business1 month ago

Bank of England warns of ‘future stress’ from hedge fund bets against US Treasuries

-

News4 weeks ago

News4 weeks agoHull KR 10-8 Warrington Wolves – Robins reach first Super League Grand Final

-

Sport4 weeks ago

Sport4 weeks agoWXV1: Canada 21-8 Ireland – Hosts make it two wins from two

-

News4 weeks ago

News4 weeks agoGerman Car Company Declares Bankruptcy – 200 Employees Lose Their Jobs

-

Technology4 weeks ago

Technology4 weeks agoLG C4 OLED smart TVs hit record-low prices ahead of Prime Day

-

MMA4 weeks ago

MMA4 weeks agoKetlen Vieira vs. Kayla Harrison pick, start time, odds: UFC 307

-

Entertainment4 weeks ago

Entertainment4 weeks agoBruce Springsteen endorses Harris, calls Trump “most dangerous candidate for president in my lifetime”

-

Sport4 weeks ago

Sport4 weeks agoMan City ask for Premier League season to be DELAYED as Pep Guardiola escalates fixture pile-up row

-

Football4 weeks ago

Football4 weeks ago'Rangers outclassed and outplayed as Hearts stop rot'

-

Sport1 month ago

Sport1 month agoSturm Graz: How Austrians ended Red Bull’s title dominance

-

Sport4 weeks ago

Sport4 weeks agoPremiership Women’s Rugby: Exeter Chiefs boss unhappy with WXV clash

-

Technology4 weeks ago

Technology4 weeks agoIf you’ve ever considered smart glasses, this Amazon deal is for you

-

Science & Environment1 month ago

Science & Environment1 month agoTime travel sci-fi novel is a rip-roaringly good thought experiment

-

Science & Environment1 month ago

Science & Environment1 month agoNerve fibres in the brain could generate quantum entanglement

-

Technology1 month ago

Technology1 month agoUniversity examiners fail to spot ChatGPT answers in real-world test

-

News1 month ago

News1 month ago▶️ Media Bias: How They Spin Attack on Hezbollah and Ignore the Reality

-

Business1 month ago

Business1 month agoStocks Tumble in Japan After Party’s Election of New Prime Minister

-

Business4 weeks ago

Sterling slides after Bailey says BoE could be ‘a bit more aggressive’ on rates

-

TV4 weeks ago

TV4 weeks agoTV Patrol Express September 26, 2024

-

Technology4 weeks ago

Technology4 weeks agoSingleStore’s BryteFlow acquisition targets data integration

-

Technology4 weeks ago

Technology4 weeks agoTexas is suing TikTok for allegedly violating its new child privacy law

-

Business4 weeks ago

The search for Japan’s ‘lost’ art

-

Money4 weeks ago

Money4 weeks agoPub selling Britain’s ‘CHEAPEST’ pints for just £2.60 – but you’ll have to follow super-strict rules to get in

-

Business1 month ago

Top shale boss says US ‘unusually vulnerable’ to Middle East oil shock

-

Technology4 weeks ago

Technology4 weeks agoJ.B. Hunt and UP.Labs launch venture lab to build logistics startups

-

Technology4 weeks ago

Technology4 weeks agoOpenAI secured more billions, but there’s still capital left for other startups

-

News4 weeks ago

News4 weeks agoFamily plans to honor hurricane victim using logs from fallen tree that killed him

-

Technology4 weeks ago

Technology4 weeks agoThe best shows on Max (formerly HBO Max) right now

-

Sport4 weeks ago

Sport4 weeks agoNew Zealand v England in WXV: Black Ferns not ‘invincible’ before game

-

Money3 weeks ago

Money3 weeks agoTiny clue on edge of £1 coin that makes it worth 2500 times its face value – do you have one lurking in your change?

-

Entertainment4 weeks ago

Entertainment4 weeks agoNew documentary explores actor Christopher Reeve’s life and legacy

-

Science & Environment1 month ago

Science & Environment1 month agoHow to wrap your mind around the real multiverse

-

Sport1 month ago

Sport1 month agoWorld’s sexiest referee Claudia Romani shows off incredible figure in animal print bikini on South Beach

-

News4 weeks ago

News4 weeks agoHarry vs Sun publisher: ‘Two obdurate but well-resourced armies’

-

Technology4 weeks ago

Technology4 weeks agoThe best budget robot vacuums for 2024

-

Business1 month ago

Business1 month agoChancellor Rachel Reeves says she needs to raise £20bn. How might she do it?

-

MMA1 month ago

MMA1 month agoJulianna Peña trashes Raquel Pennington’s behavior as champ

-

Technology1 month ago

Technology1 month agoThis AI video generator can melt, crush, blow up, or turn anything into cake

-

Technology1 month ago

Technology1 month agoAmazon’s Ring just doubled the price of its alarm monitoring service for grandfathered customers

-

Technology4 weeks ago

Technology4 weeks agoBest iPad deals for October 2024

-

Politics4 weeks ago

Rosie Duffield’s savage departure raises difficult questions for Keir Starmer. He’d be foolish to ignore them | Gaby Hinsliff

-

Technology4 weeks ago

Technology4 weeks agoQuoroom acquires Investory to scale up its capital-raising platform for startups

You must be logged in to post a comment Login