Technology

Going beyond GPUs: The evolving landscape of AI chips and accelerators


This article is part of a VB Special Issue called “Fit for Purpose: Tailoring AI Infrastructure.”

Data centers are the backend of the internet we know. Whether it’s Netflix or Google, all major companies use data centers, and the computer systems they host, to deliver digital services to end users. As enterprises shift their focus toward advanced AI workloads, data centers’ traditional CPU-centric servers are being augmented with new specialized chips, or “co-processors.”

At the core, the idea behind these co-processors is to add extra computing capacity to the servers. This enables them to handle the computational demands of workloads like AI training, inference, database acceleration and network functions. Over the last few years, GPUs, led by Nvidia, have been the go-to choice for co-processors due to their ability to process large volumes of data at unmatched speeds. Driven by this demand, GPUs accounted for 74% of the co-processors powering AI use cases within data centers last year, according to a study from Futurum Group.

According to the study, the dominance of GPUs is only expected to grow, with revenues from the category surging 30% annually to $102 billion by 2028. But here’s the thing: while GPUs, with their parallel processing architecture, make a strong companion for accelerating all sorts of large-scale AI workloads (like training and running massive, trillion-parameter language models or genome sequencing), their total cost of ownership can be very high. For example, Nvidia’s flagship GB200 “superchip,” which combines a Grace CPU with two B200 GPUs, is expected to cost between $60,000 and $70,000. A server with 36 of these superchips is estimated to cost around $2 million.
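As a rough sanity check on the GB200 figures, the sketch below multiplies the quoted per-superchip price range by 36. It assumes the $60,000 to $70,000 range is the price of the superchip alone; networking, chassis, cooling and power are not included. On those assumptions, the chips by themselves come to $2.16 million to $2.52 million, so the “around $2 million” server estimate is best read as approximate.

```python
# Back-of-envelope check of the GB200 cost figures quoted in the article.
# Assumption: $60,000-$70,000 is a per-superchip price and excludes
# networking, chassis, cooling, power and software costs.
SUPERCHIPS_PER_SERVER = 36
PRICE_LOW, PRICE_HIGH = 60_000, 70_000


def chip_cost_range(count: int, low: int, high: int) -> tuple:
    """Return (min, max) total spend on the superchips alone."""
    return count * low, count * high


low_total, high_total = chip_cost_range(SUPERCHIPS_PER_SERVER, PRICE_LOW, PRICE_HIGH)
print(f"Superchips alone: ${low_total:,} to ${high_total:,}")
```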

While this may work in some cases, like large-scale projects, it is not for every company. Many enterprise IT managers are looking for technology to support select low- to medium-intensity AI workloads, with a specific focus on total cost of ownership, scalability and integration. After all, most AI models (deep learning networks, neural networks, large language models, etc.) are maturing, and the needs are shifting toward AI inference and improving performance for specific workloads like image recognition, recommender systems or object identification, while staying efficient at the same time.


This is exactly where the emerging landscape of specialized AI processors and accelerators, being built by chipmakers, startups and cloud providers, comes in.

What exactly are AI processors and accelerators?

At the core, AI processors and accelerators are chips that sit within a server’s CPU ecosystem and focus on specific AI functions. They commonly revolve around three key architectures: Application-Specific Integrated Circuits (ASICs), Field-Programmable Gate Arrays (FPGAs) and, most recently, Neural Processing Units (NPUs).

ASICs and FPGAs have been around for quite some time, with programmability being the main difference between the two. ASICs are custom-built from the ground up for a specific task (which may or may not be AI-related), while FPGAs can be reconfigured after manufacturing to implement custom logic. NPUs differ from both in that they are specialized hardware built solely to accelerate AI/ML workloads such as neural network inference and training.

“Accelerators tend to be capable of doing any function individually, and sometimes with wafer-scale or multi-chip ASIC design, they can be capable of handling a few different applications. NPUs are a good example of a specialized chip (usually part of a system) that can handle a number of matrix-math and neural network use cases as well as various inference tasks using less power,” Futurum Group CEO Daniel Newman tells VentureBeat.
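Newman’s reference to “matrix-math” can be made concrete. The sketch below, in plain Python with made-up weights, shows one fully connected neural-network layer at inference time: nearly all of its work is multiply-accumulate (MAC) operations, which is the arithmetic NPUs and similar units are designed to execute in bulk. Real accelerators run optimized, usually low-precision kernels; this only illustrates the shape of the workload, and the function names are hypothetical.

```python
# Illustrative sketch: one dense (fully connected) layer, relu(W @ x + b),
# written with plain loops so every multiply-accumulate (MAC) is visible.
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def dense_relu(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Compute relu(W @ x + b) for a single input vector."""
    out = []
    for row, b in zip(weights, bias):
        acc = b
        for w, xi in zip(row, x):
            acc += w * xi  # one multiply-accumulate
        out.append(max(0.0, acc))  # ReLU activation
    return out


def mac_count(weights: Matrix) -> int:
    """Number of MACs one forward pass through this layer performs."""
    return sum(len(row) for row in weights)


W = [[1.0, -2.0, 0.5],
     [0.0, 1.0, 1.0]]
b = [0.5, -1.0]
x = [2.0, 1.0, 4.0]
print(dense_relu(W, b, x))  # [2.5, 4.0]
print(mac_count(W))         # 6
```

A large language model repeats this pattern across thousands of much larger matrices, which is why hardware that speeds up MACs pays off for inference.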

The best part is that accelerators, especially ASICs and NPUs built for specific applications, can prove more efficient than GPUs in terms of cost and power use.

“GPU designs mostly center on Arithmetic Logic Units (ALUs) so that they can perform thousands of calculations simultaneously, whereas AI accelerator designs mostly center on Tensor Processor Cores (TPCs) or Units. In general, the AI accelerators’ performance versus GPUs performance is based on the fixed function of that design,” Rohit Badlaney, the general manager for IBM’s cloud and industry platforms, tells VentureBeat.

Currently, IBM follows a hybrid cloud approach and uses multiple GPUs and AI accelerators, including offerings from Nvidia and Intel, across its stack to provide enterprises with choices to meet the needs of their unique workloads and applications — with high performance and efficiency.

“Our full-stack solutions are designed to help transform how enterprises, developers and the open-source community build and leverage generative AI. AI accelerators are one of the offerings that we see as very beneficial to clients looking to deploy generative AI,” Badlaney said. He added that while GPU systems are best suited for large model training and fine-tuning, there are many AI tasks that accelerators can handle equally well, and at a lower cost.

For instance, IBM Cloud virtual servers use Intel’s Gaudi 3 accelerator with a custom software stack designed specifically for inferencing and heavy memory demands. The company also plans to use the accelerator for fine-tuning and small training workloads via small clusters of multiple systems.

“AI accelerators and GPUs can be used effectively for some similar workloads, such as LLMs and diffusion models (image generation like Stable Diffusion) to standard object recognition, classification, and voice dubbing. However, the benefits and differences between AI accelerators and GPUs entirely depend on the hardware provider’s design. For instance, the Gaudi 3 AI accelerator was designed to provide significant boosts in compute, memory bandwidth, and architecture-based power efficiency,” Badlaney explained.

This, he said, directly translates to price-performance benefits.

Beyond Intel, other AI accelerators are also drawing attention in the market. This includes not only custom chips built for and by public cloud providers such as Google, AWS and Microsoft but also dedicated products (NPUs in some cases) from startups such as Groq, Graphcore, SambaNova Systems and Cerebras Systems. They all stand out in their own way, challenging GPUs in different areas.

In one case, Tractable, a company developing AI to analyze damage to property and vehicles for insurance claims, was able to use Graphcore’s Intelligence Processing Unit (IPU)-POD system (a specialized NPU offering) to achieve significant performance gains compared with the GPUs it had been using.

“We saw a roughly 5X speed gain,” Razvan Ranca, co-founder and CTO at Tractable, wrote in a blog post. “That means a researcher can now run potentially five times more experiments, which means we accelerate the whole research and development process and ultimately end up with better models in our products.”

AI processors are also powering training workloads in some cases. For instance, the AI supercomputer at Aleph Alpha’s data center is using Cerebras CS-3, the system powered by the startup’s third-generation Wafer Scale Engine with 900,000 AI cores, to build next-gen sovereign AI models. Even Google’s recently introduced custom ASIC, TPU v5p, is driving some AI training workloads for companies like Salesforce and Lightricks.

What should be the approach to picking accelerators?

Now that it’s established that there are many AI processors beyond GPUs for accelerating AI workloads, especially inference, the question is: how does an IT manager pick the best option to invest in? Some of these chips may deliver good performance and efficiency but be limited in the kinds of AI tasks they can handle because of their architecture. Others may handle more, but their TCO advantage over GPUs might not be as large.

Since the answer varies with the design of the chips, all the experts VentureBeat spoke to suggested basing the selection on the scale and type of the workload to be processed, the data involved, the likelihood of continued iteration or change, and cost and availability needs.
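One common way to turn criteria like these into a decision is a weighted scoring matrix. The sketch below is a hypothetical example: the criterion names map loosely onto the factors the experts listed, while the weights, the two candidate labels and their 1-to-5 scores are illustrative placeholders, not benchmark results or vendor data.

```python
# Hypothetical weighted scoring matrix for shortlisting AI accelerators.
# Weights, candidate names and scores are illustrative assumptions only.
from typing import Dict, List, Tuple

CRITERIA_WEIGHTS: Dict[str, float] = {
    "workload_fit": 0.30,   # scale and type of the workload
    "data_fit": 0.15,       # the data to be processed
    "flexibility": 0.20,    # tolerance for continued model iteration/change
    "cost": 0.20,
    "availability": 0.15,
}


def weighted_score(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Sum of score * weight over every criterion (KeyError if one is missing)."""
    return sum(scores[name] * weight for name, weight in weights.items())


def rank(candidates: Dict[str, Dict[str, float]],
         weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (name, score) pairs sorted from best to worst."""
    results = [(name, weighted_score(s, weights)) for name, s in candidates.items()]
    return sorted(results, key=lambda item: item[1], reverse=True)


candidates = {
    "general_purpose_gpu": {"workload_fit": 5, "data_fit": 4, "flexibility": 5,
                            "cost": 2, "availability": 3},
    "inference_asic": {"workload_fit": 4, "data_fit": 4, "flexibility": 2,
                       "cost": 5, "availability": 4},
}

for name, score in rank(candidates, CRITERIA_WEIGHTS):
    print(f"{name}: {score:.2f}")
```

Replacing the placeholder scores with measured price-performance numbers keeps the ranking grounded in data rather than intuition.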

According to Daniel Kearney, the CTO at Sustainable Metal Cloud, which helps companies with AI training and inference, it is also important for enterprises to run benchmarks to test for price-performance benefits and ensure that their teams are familiar with the broader software ecosystem that supports the respective AI accelerators.

“While detailed workload information may not be readily [available] in advance or may be inconclusive to support decision-making, it is recommended to benchmark and test through with representative workloads, real-world testing and available peer-reviewed real-world information where available to provide a data-driven approach to choosing the right AI accelerator for the right workload. This upfront investigation can save significant time and money, particularly for large and costly training jobs,” he suggested.
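Kearney’s advice to benchmark with representative workloads can be sketched as a small timing harness. In the hypothetical example below, `run_batch` stands in for a real inference call on the accelerator being evaluated, and `hourly_price_usd` is an assumed instance or amortized hardware cost; neither comes from the article. The harness discards warm-up runs, measures throughput with `time.perf_counter`, and converts it into a cost per million items so that systems can be compared on price-performance.

```python
# Minimal benchmarking sketch: measure throughput of a representative
# workload, then convert it into a price-performance figure.
# `run_batch` and `hourly_price_usd` are hypothetical placeholders.
import time
from typing import Callable


def measure_throughput(run_batch: Callable[[], int],
                       warmup: int = 2, iters: int = 10) -> float:
    """Return items processed per second, excluding warm-up iterations."""
    for _ in range(warmup):
        run_batch()
    processed = 0
    start = time.perf_counter()
    for _ in range(iters):
        processed += run_batch()  # run_batch returns items processed
    elapsed = time.perf_counter() - start
    return processed / elapsed


def cost_per_million(items_per_second: float, hourly_price_usd: float) -> float:
    """Dollars needed to process one million items at the measured rate."""
    items_per_hour = items_per_second * 3600
    return hourly_price_usd / items_per_hour * 1_000_000


def fake_batch() -> int:
    # Stand-in workload: CPU busy-work for a "batch" of 32 items.
    sum(i * i for i in range(20_000))
    return 32


tput = measure_throughput(fake_batch)
print(f"{tput:,.0f} items/s, ${cost_per_million(tput, 10.0):.2f} per 1M items")
```

Running the same harness, with the same model and batch size, on each candidate system gives a like-for-like comparison.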

Globally, with inference jobs on track to grow, the total market for AI hardware, including AI chips, accelerators and GPUs, is estimated to grow 30% annually to reach $138 billion by 2028.
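The growth projections in this article follow simple compound-growth arithmetic. The sketch below works backward from the 2028 targets ($102 billion for GPUs, $138 billion for all AI hardware) at 30% a year. It assumes annual compounding from a 2023 base, that is, five years; the article does not state the base year, so the implied starting values are only indicative.

```python
# Compound-growth arithmetic behind "grow X% annually to reach $Y by 2028".
# Assumption: growth compounds once a year from a 2023 base (5 years).


def project(base: float, rate: float, years: int) -> float:
    """Value after `years` of compound growth at `rate` (0.30 means 30%)."""
    return base * (1 + rate) ** years


def implied_base(target: float, rate: float, years: int) -> float:
    """Starting value that compounds to `target` after `years`."""
    return target / (1 + rate) ** years


for label, target in [("GPUs", 102.0), ("All AI hardware", 138.0)]:
    base = implied_base(target, 0.30, 5)
    print(f"{label}: implied 2023 base of about ${base:.1f}B")
```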


Servers computers

Dell Sliding Rail installation guideline.

Technology

NYT Mini Crossword today: puzzle answers for Sunday, September 29

The New York Times has introduced the next title coming to its Games catalog following Wordle’s continued success — and it’s all about math. Digits has players adding, subtracting, multiplying, and dividing numbers. You can play its beta for free online right now.

In Digits, players are presented with a target number that they need to match. Players are given six numbers and can add, subtract, multiply or divide them to get as close to the target as they can. Not every number needs to be used, and the game puts your math skills to the test as you combine numbers and try to build the right equations to get as close to the target number as possible.
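Digits is, at heart, a small search problem. The hypothetical `best_reachable` function below tries every way of combining pairs of numbers with the four operations and keeps the result closest to the target; using a single number on its own also counts, since not every number must be used. It assumes intermediate results must stay positive whole numbers (no negatives or fractions); the article does not spell out that rule, so treat it as an assumption. Skipping any multiset of numbers that has already been explored keeps the search manageable for six inputs.

```python
# Brute-force Digits-style solver (illustrative sketch).
# Assumed rule: every intermediate result must be a positive whole number.
from itertools import combinations
from typing import List, Optional, Sequence


def best_reachable(numbers: Sequence[int], target: int) -> int:
    """Return the reachable value closest to `target`."""
    if not numbers:
        raise ValueError("need at least one number")
    best: Optional[int] = None
    seen = set()

    def search(nums: List[int]) -> bool:
        """Explore combinations; return True once the target is hit exactly."""
        nonlocal best
        key = tuple(sorted(nums))
        if key in seen:  # same multiset already explored
            return False
        seen.add(key)
        for n in nums:
            if best is None or abs(n - target) < abs(best - target):
                best = n
        if best == target:
            return True
        for i, j in combinations(range(len(nums)), 2):
            hi, lo = max(nums[i], nums[j]), min(nums[i], nums[j])
            rest = [n for k, n in enumerate(nums) if k not in (i, j)]
            results = {hi + lo, hi * lo}
            if hi > lo:
                results.add(hi - lo)  # keep results positive
            if lo > 0 and hi % lo == 0:
                results.add(hi // lo)  # whole-number division only
            for r in results:
                if search(rest + [r]):
                    return True
        return False

    search(list(numbers))
    return best


print(best_reachable([1, 2, 3, 4, 5, 25], 100))
```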

Players will get a five-star rating if they match the target number exactly, a three-star rating if they get within 10 of the target, and a one-star rating if they get within 25 of the target number. Players can currently access five different puzzles with progressively larger numbers. I solved today’s puzzle and found it to be an enjoyable number-based game that should appeal to inquisitive minds who like puzzle games such as Threes or other New York Times titles like Wordle and Spelling Bee.
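The star thresholds translate directly into a small function. This sketch assumes “within 10” and “within 25” are inclusive bounds and that results more than 25 away earn no stars; the article does not say either way, and `star_rating` is a hypothetical name.

```python
# Star rating per the thresholds described in the article.
# Assumptions: bounds are inclusive; more than 25 away earns 0 stars.


def star_rating(result: int, target: int) -> int:
    """Return the number of stars for a given final result."""
    diff = abs(result - target)
    if diff == 0:
        return 5
    if diff <= 10:
        return 3
    if diff <= 25:
        return 1
    return 0


print(star_rating(95, 100))
```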

In an article unveiling Digits and detailing The New York Times Games team’s approach to game development, The Times says the team will use this free beta to fix bugs and assess whether it’s worth moving into a more active development phase “where the game is coded and the designs are finalized.” So play Digits while you can, as The New York Times may drop the project if it doesn’t get the response it is hoping for.

Digits’ beta is available to play for free now on The New York Times Games’ website.

Technology

Samsung Galaxy A16 4G & A16 5G have been spotted

Samsung appears to be gearing up for the launch of the Galaxy A16, the company’s upcoming entry-level offering. If you keep up with the leaks, you may have already seen the device and probably know some of its key specs. Now, the Samsung Galaxy A16 4G and A16 5G have been reportedly spotted on the FCC and TUV Rheinland certification websites hinting at an imminent launch.

The Galaxy A16 4G and A16 5G were spotted on two certification websites

Today, folks over at 91Mobiles spotted the 4G and 5G models of the Samsung Galaxy A16 smartphone on the USA’s FCC certification website. The “Status Information” page for the 4G model on FCC’s website reportedly details its “Wi-Fi Mac Address,” “Bluetooth address,” and serial number.

The FCC ID for the 4G model of the Galaxy A16 is A3LSMA165M. The listing indicates support for Wi-Fi, NFC, Bluetooth, GPS, and, of course, 4G LTE. It also details the model numbers of the 4G device, charger, and data cable, which are SM-A165M/DS, EP-TA800, and EP-DN980, respectively.

The listing for the Galaxy A16 5G, meanwhile, gives its FCC ID as A3LSMA166M and suggests that the device will support 5G, 4G LTE, Wi-Fi, Bluetooth, and NFC. Its charger and data cable model numbers are EP-TA800 and EP-DN980, respectively, the same as those of the 4G model.

The FCC listing also includes a rough sketch of the device that hints at a triple rear camera setup. That appears to be confirmed by the exclusive image of the Galaxy A16 5G that we previously shared.

The TUV Rheinland listing reveals the battery capacity of the 5G model

That’s not all: both models of the Galaxy A16 were also spotted on the TUV Rheinland certification website. The TUV Rheinland listing suggests that the 5G model could come with a battery rated at 4860mAh, or 5000mAh typical.

The listing also reportedly suggests that both models could support 18W fast wired charging at 9V and 2.77A. Meanwhile, you can expect a 25W charger in the box with both models of the Galaxy A16.
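The charging figures can be cross-checked with the power formula P = V × I. At 9V and 2.77A, the power works out to about 24.9W, which lines up with the 25W charger rather than with 18W, so one of the reported figures may be off. The function name below is illustrative.

```python
# Electrical power in watts is voltage times current: P = V * I.


def charging_watts(volts: float, amps: float) -> float:
    """Return charging power in watts for a given voltage and current."""
    return volts * amps


print(f"{charging_watts(9, 2.77):.1f} W")
```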

Earlier, some leaks hinted that the 5G model could pack Samsung’s Exynos 1330 SoC under the hood. Additionally, the MediaTek Dimensity 6300 might power the device’s 4G LTE model. The best part is that Samsung could offer a massive six years of Android OS updates for both devices.

Previous leaks also hint that the Galaxy A16 4G and A16 5G could come in the same color options, namely Black, Gray, and Light Green. Moreover, both devices could run Android 14-based One UI 6.1 out of the box.

Servers computers

SeArack-brand 20U D800 rack cabinet / network cabinet: high quality at a low price.

Technical specifications of the 20U D800 rack / network cabinet:

* Dimensions: H 1060 × W 600 × D 800 mm.

* All doors have safety locks and are easy to remove and install.

* The front door is aesthetically pleasing and provides ventilation for the equipment inside the cabinet.

* Casters and leveling feet make the cabinet easy to move as well as to fix in place.

* Warranty: 12 months per the manufacturer’s standard.
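For context on the “20U” designation: one rack unit (U) is 1.75 inches, or 44.45 mm, under the EIA-310 standard. The sketch below converts rack units to vertical mounting space; 20U gives about 889 mm, so the cabinet’s 1060 mm external height presumably also covers the frame, top and bottom panels, and casters. The function name is illustrative.

```python
# One rack unit (1U) is 1.75 in = 44.45 mm under the EIA-310 standard.
RACK_UNIT_MM = 44.45


def mounting_height_mm(units: int) -> float:
    """Vertical mounting space, in millimetres, for a number of rack units."""
    return units * RACK_UNIT_MM


print(f"20U = {mounting_height_mm(20):.1f} mm of mounting space")
```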

Call the hotline at 0982626028 for advice and to order at the best price. Website: www.codienhanoi.vn or www.searack.vn

#codienhanoivn #turack #tumang #turacksearack #severrack #hethongmang


Technology

Prime Day deals include this Blink Outdoor 4 and Echo Show 5 bundle for only $60

Although the October edition of Amazon Prime Day isn’t in full swing just yet, there are still plenty of deals to be had, especially on the company’s own products. A bundle of a Blink Outdoor 4 camera and Echo Show 5 smart display is on sale for $60. That’s $130 off the regular price and a record low for this bundle. There is one caveat, though: this deal is only available to Prime members.

This bundle is one that makes a lot of sense. You’ll be able to use your Echo Show 5 to get a live view of whatever the Blink Outdoor 4 camera is capturing with a simple Alexa command.


We recommend both products individually in our guides. We believe the Blink Outdoor 4 is the around. The name is a bit of a misnomer as you can easily place it inside your home too, not least because it runs on two AA batteries. You’ll only need to replace the cells every two years or so.

If you do place the camera outside, you can rest easy knowing that it’s weather resistant. Other features include night vision, motion detection and two-way audio. You will need a Blink Subscription Plan to store clips in the cloud. Otherwise, you can save footage locally with a Sync Module 2 (which is available separately) and USB flash drive.

As for the Echo Show 5, it’s one of the (only beaten out by its larger sibling, the Echo Show 8). It’s a compact, 5.5-inch smart display that works well as an alarm clock on your nightstand. The tap-to-snooze function comes in handy there, while there’s a sunrise alarm that gradually brightens the screen.

The Echo Show 5 does have a built-in camera, which might give you cause for concern if you want to place it by your bed. But the physical camera cover should allay any privacy concerns on that front.


Servers computers

Dell PowerEdge M620 Blade Server and M1000e Blade Enclosure Overview ( IT Creations, Inc )

http://www.itcreations.com/

Phone: 1-800-983-5318

E-Mail: itcreationstv@gmail.com

An all-around efficient, powerful and scalable system, the M620 can handle the most demanding of tasks with ease.

Music:

‘Growing UP’ by Andrea Quarin (www.melodyloops.com/tracks/growing-up/)

Order #: 23615698474

Date: 2013-03-26

Licensed as royalty-free music under Creative Commons License CC BY-ND 3.0 (www.melodyloops.com/support/full-license/)

For more information, contact Melody Loops at support@melodyloops.com.


-

Womens Workouts6 days ago

Womens Workouts6 days ago3 Day Full Body Women’s Dumbbell Only Workout

-

Technology2 weeks ago

Technology2 weeks agoWould-be reality TV contestants ‘not looking real’

-

News7 days ago

News7 days agoOur millionaire neighbour blocks us from using public footpath & screams at us in street.. it’s like living in a WARZONE – WordupNews

-

Science & Environment1 week ago

Science & Environment1 week agoHyperelastic gel is one of the stretchiest materials known to science

-

News2 weeks ago

News2 weeks agoYou’re a Hypocrite, And So Am I

-

Science & Environment1 week ago

Science & Environment1 week ago‘Running of the bulls’ festival crowds move like charged particles

-

Science & Environment1 week ago

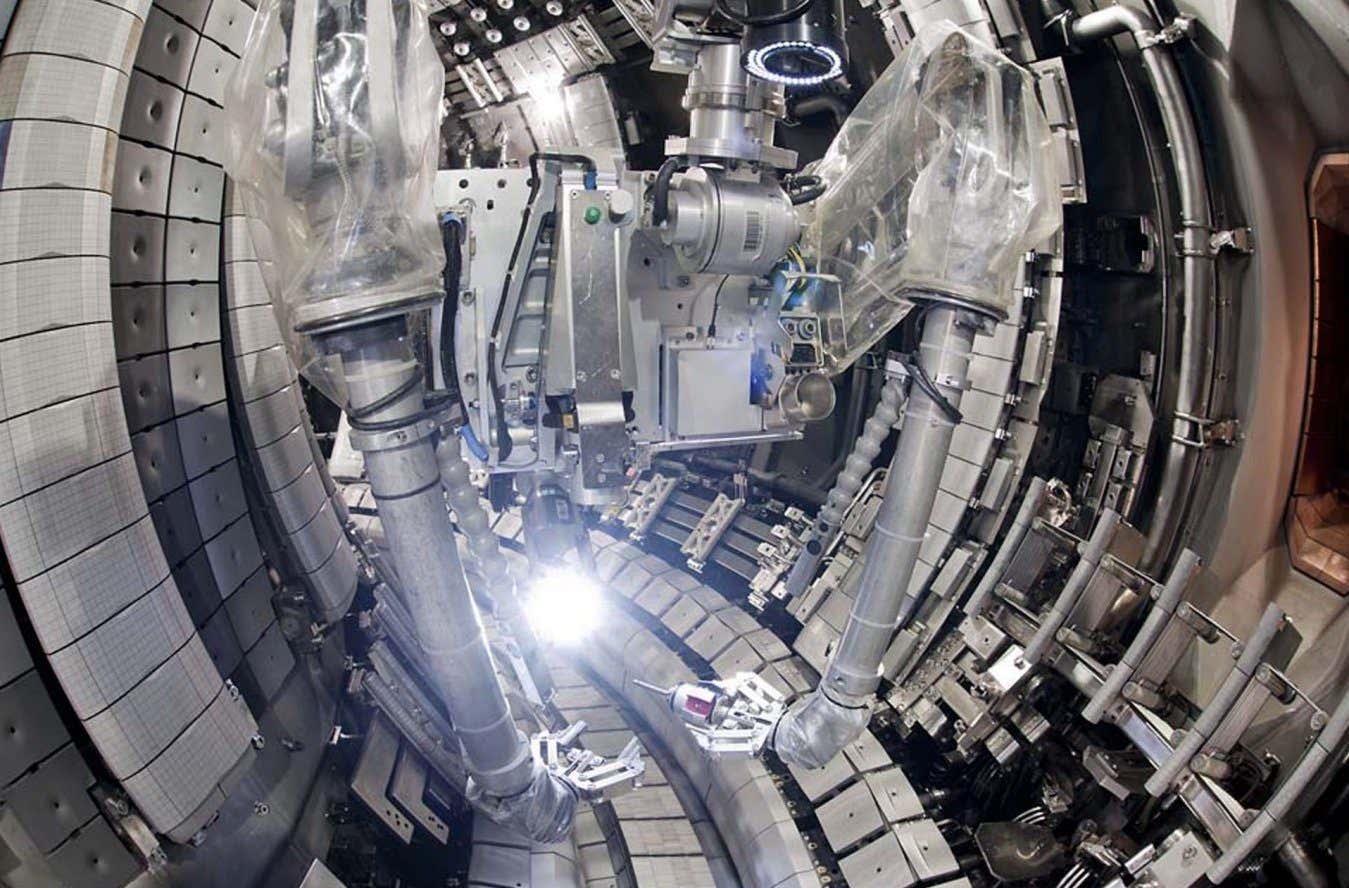

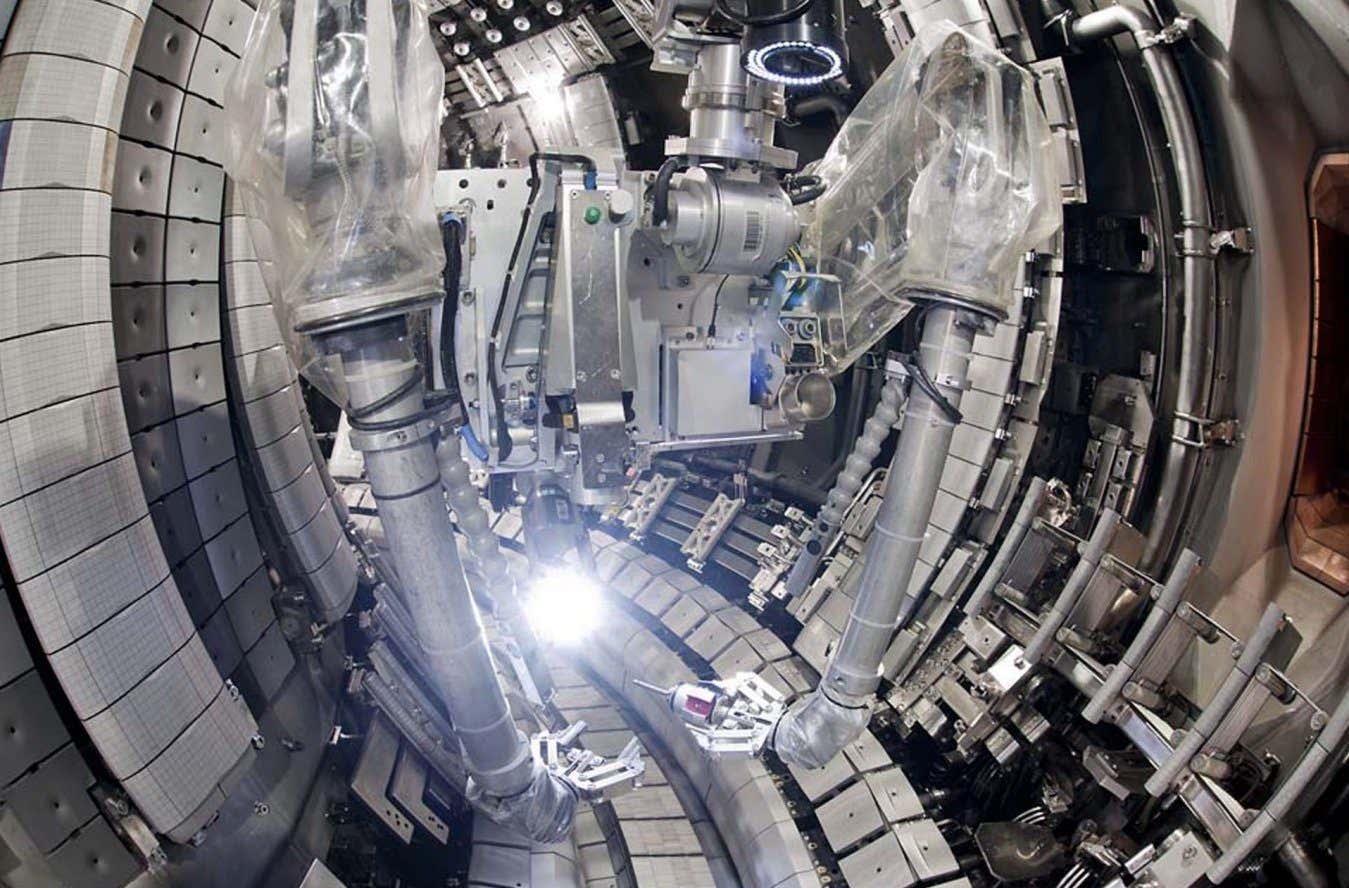

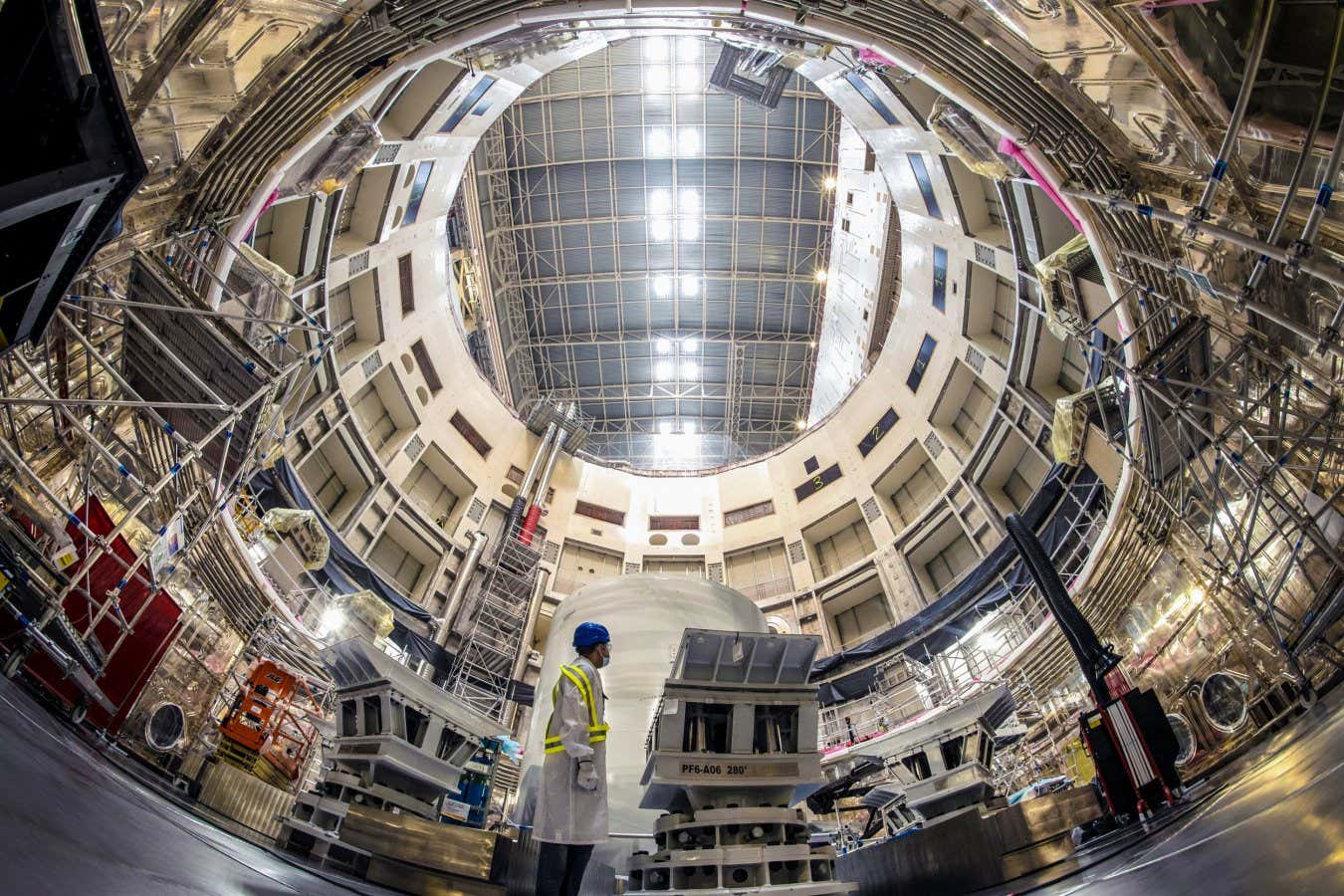

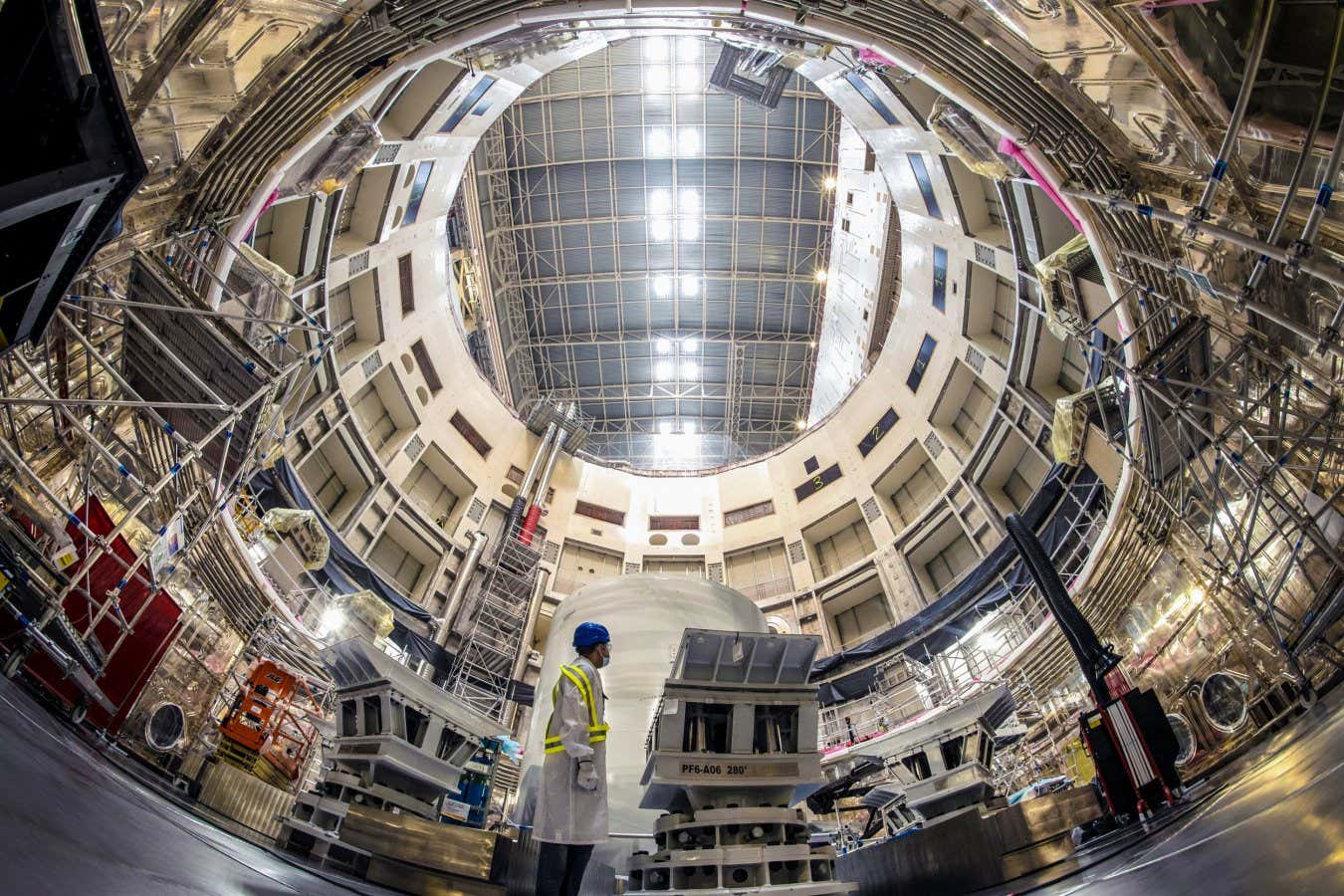

Science & Environment1 week agoITER: Is the world’s biggest fusion experiment dead after new delay to 2035?

-

Science & Environment1 week ago

Science & Environment1 week agoMaxwell’s demon charges quantum batteries inside of a quantum computer

-

Science & Environment1 week ago

Science & Environment1 week agoHow to wrap your mind around the real multiverse

-

Science & Environment1 week ago

Science & Environment1 week agoSunlight-trapping device can generate temperatures over 1000°C

-

Sport1 week ago

Sport1 week agoJoshua vs Dubois: Chris Eubank Jr says ‘AJ’ could beat Tyson Fury and any other heavyweight in the world

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoHow to unsnarl a tangle of threads, according to physics

-

Science & Environment1 week ago

Science & Environment1 week agoPhysicists are grappling with their own reproducibility crisis

-

Science & Environment1 week ago

Science & Environment1 week agoLiquid crystals could improve quantum communication devices

-

Science & Environment1 week ago

Science & Environment1 week agoQuantum ‘supersolid’ matter stirred using magnets

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoCaroline Ellison aims to duck prison sentence for role in FTX collapse

-

Science & Environment1 week ago

Science & Environment1 week agoWhy this is a golden age for life to thrive across the universe

-

Science & Environment1 week ago

Science & Environment1 week agoQuantum forces used to automatically assemble tiny device

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCardano founder to meet Argentina president Javier Milei

-

News1 week ago

News1 week agoIsrael strikes Lebanese targets as Hizbollah chief warns of ‘red lines’ crossed

-

Womens Workouts1 week ago

Womens Workouts1 week agoBest Exercises if You Want to Build a Great Physique

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoNerve fibres in the brain could generate quantum entanglement

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoTime travel sci-fi novel is a rip-roaringly good thought experiment

-

Science & Environment1 week ago

Science & Environment1 week agoLaser helps turn an electron into a coil of mass and charge

-

Science & Environment1 week ago

Science & Environment1 week agoNuclear fusion experiment overcomes two key operating hurdles

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoDZ Bank partners with Boerse Stuttgart for crypto trading

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoEthereum is a 'contrarian bet' into 2025, says Bitwise exec

-

Womens Workouts1 week ago

Womens Workouts1 week agoEverything a Beginner Needs to Know About Squatting

-

Science & Environment7 days ago

Science & Environment7 days agoMeet the world's first female male model | 7.30

-

Science & Environment1 week ago

Science & Environment1 week agoHow do you recycle a nuclear fusion reactor? We’re about to find out

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBitcoin miners steamrolled after electricity thefts, exchange ‘closure’ scam: Asia Express

-

CryptoCurrency1 week ago

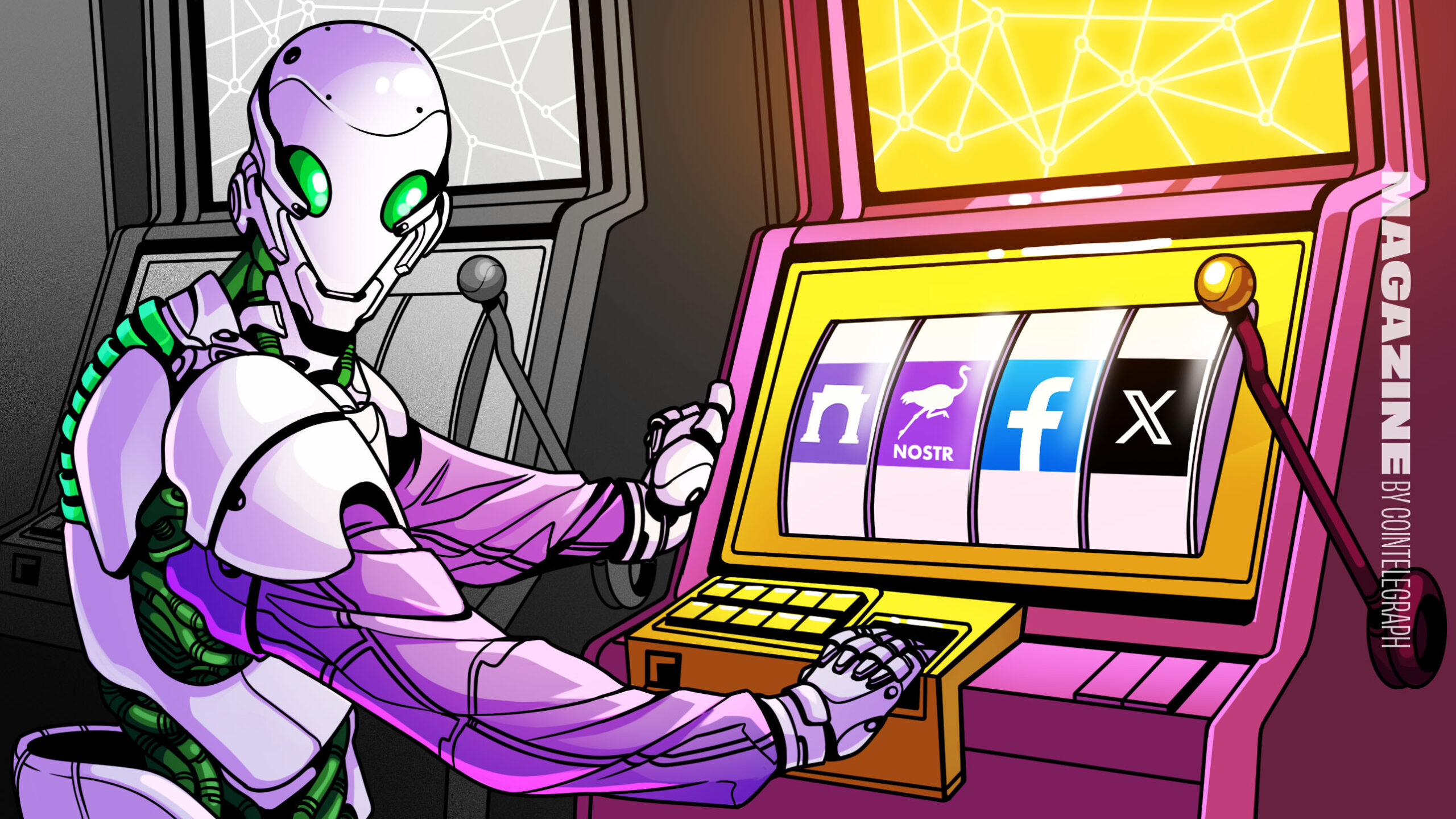

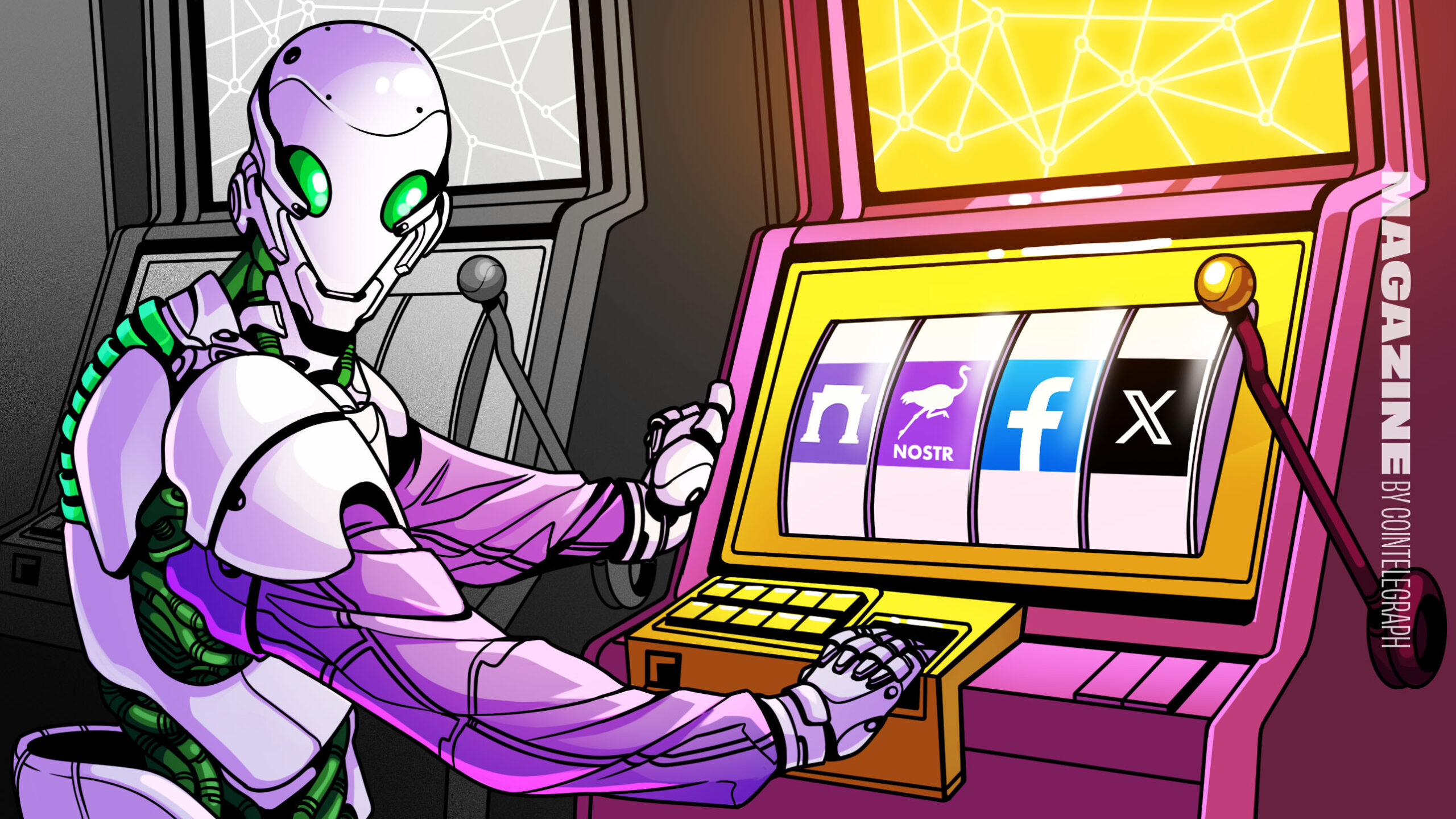

CryptoCurrency1 week agoDorsey’s ‘marketplace of algorithms’ could fix social media… so why hasn’t it?

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoRedStone integrates first oracle price feeds on TON blockchain

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBitcoin bulls target $64K BTC price hurdle as US stocks eye new record

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBlockdaemon mulls 2026 IPO: Report

-

News1 week ago

News1 week agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCoinbase’s cbBTC surges to third-largest wrapped BTC token in just one week

-

News7 days ago

News7 days agoFour dead & 18 injured in horror mass shooting with victims ‘caught in crossfire’ as cops hunt multiple gunmen

-

Womens Workouts5 days ago

Womens Workouts5 days ago3 Day Full Body Toning Workout for Women

-

Travel5 days ago

Travel5 days agoDelta signs codeshare agreement with SAS

-

Politics4 days ago

Politics4 days agoHope, finally? Keir Starmer’s first conference in power – podcast | News

-

News2 weeks ago

News2 weeks ago▶️ Media Bias: How They Spin Attack on Hezbollah and Ignore the Reality

-

Science & Environment1 week ago

Science & Environment1 week agoQuantum time travel: The experiment to ‘send a particle into the past’

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCrypto scammers orchestrate massive hack on X but barely made $8K

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoLow users, sex predators kill Korean metaverses, 3AC sues Terra: Asia Express

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago‘No matter how bad it gets, there’s a lot going on with NFTs’: 24 Hours of Art, NFT Creator

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoSEC asks court for four months to produce documents for Coinbase

-

Sport1 week ago

Sport1 week agoUFC Edmonton fight card revealed, including Brandon Moreno vs. Amir Albazi headliner

-

Business1 week ago

How Labour donor’s largesse tarnished government’s squeaky clean image

-

Technology1 week ago

Technology1 week agoiPhone 15 Pro Max Camera Review: Depth and Reach

-

News1 week ago

News1 week agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

Womens Workouts1 week ago

Womens Workouts1 week agoKeep Your Goals on Track This Season

-

Science & Environment1 week ago

Science & Environment1 week agoWhy we need to invoke philosophy to judge bizarre concepts in science

-

Science & Environment1 week ago

Science & Environment1 week agoFuture of fusion: How the UK’s JET reactor paved the way for ITER

-

News1 week ago

News1 week agoChurch same-sex split affecting bishop appointments

-

Science & Environment1 week ago

Science & Environment1 week agoTiny magnet could help measure gravity on the quantum scale

-

Technology1 week ago

Technology1 week agoFivetran targets data security by adding Hybrid Deployment

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago$12.1M fraud suspect with ‘new face’ arrested, crypto scam boiler rooms busted: Asia Express

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoDecentraland X account hacked, phishing scam targets MANA airdrop

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCertiK Ventures discloses $45M investment plan to boost Web3

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBeat crypto airdrop bots, Illuvium’s new features coming, PGA Tour Rise: Web3 Gamer

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoTelegram bot Banana Gun’s users drained of over $1.9M

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago‘Silly’ to shade Ethereum, the ‘Microsoft of blockchains’ — Bitwise exec

-

Business1 week ago

Thames Water seeks extension on debt terms to avoid renationalisation

-

Politics1 week ago

‘Appalling’ rows over Sue Gray must stop, senior ministers say | Sue Gray

-

Womens Workouts1 week ago

Womens Workouts1 week agoHow Heat Affects Your Body During Exercise

-

News7 days ago

News7 days agoWhy Is Everyone Excited About These Smart Insoles?

-

Politics2 weeks ago

Politics2 weeks agoTrump says he will meet with Indian Prime Minister Narendra Modi next week

-

Technology2 weeks ago

Technology2 weeks agoCan technology fix the ‘broken’ concert ticketing system?

-

Health & fitness2 weeks ago

Health & fitness2 weeks agoThe secret to a six pack – and how to keep your washboard abs in 2022

-

Science & Environment1 week ago

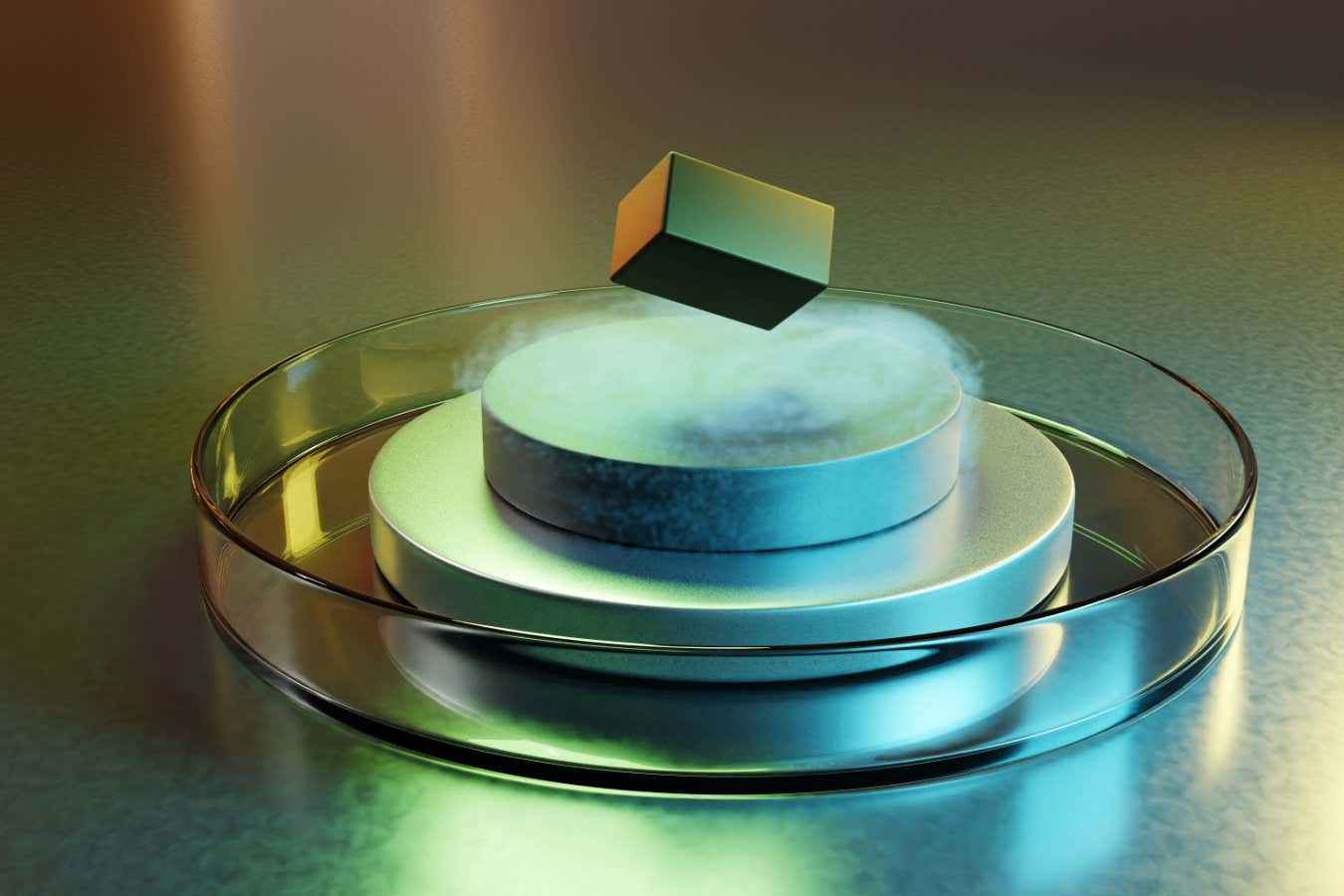

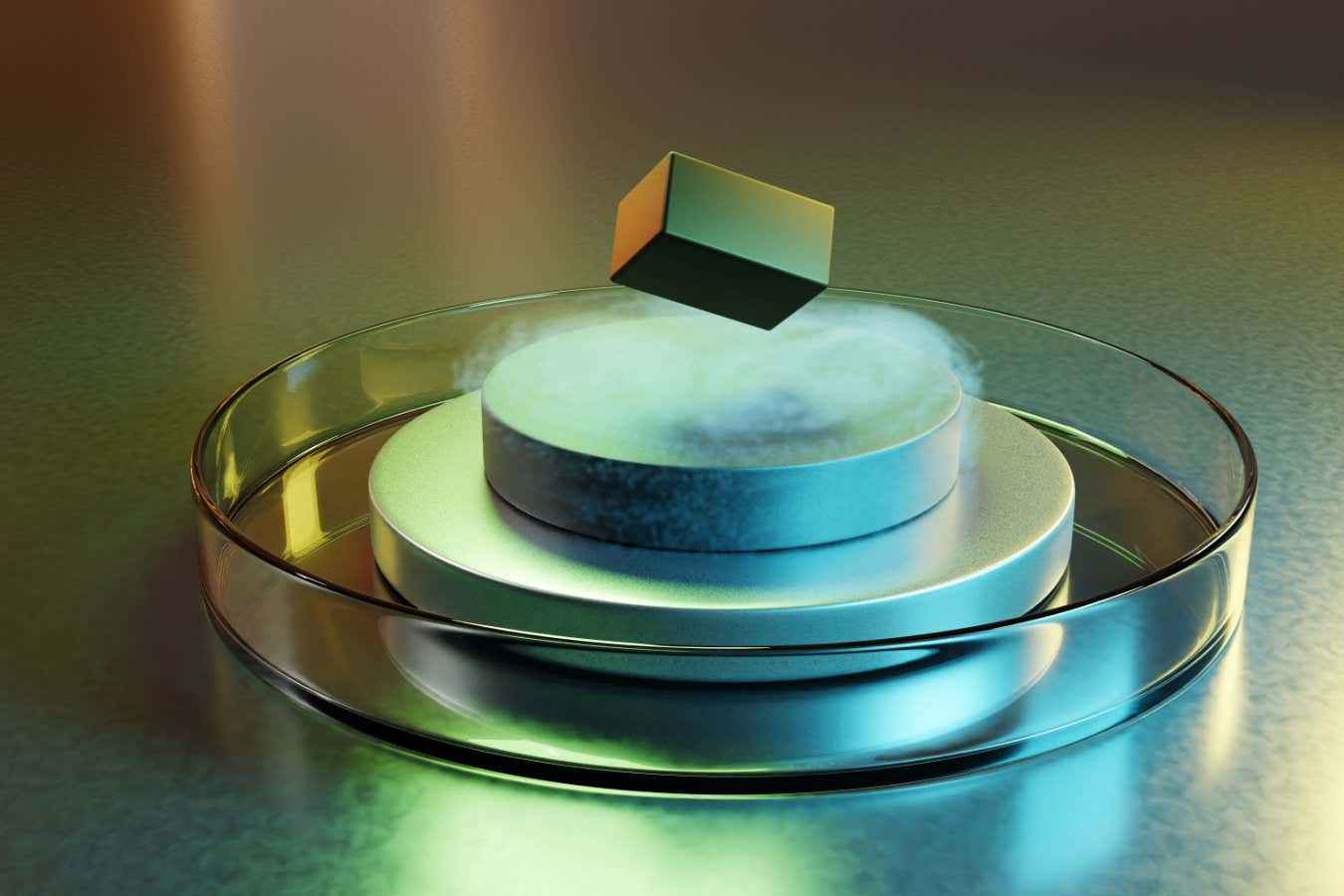

Science & Environment1 week agoBeing in two places at once could make a quantum battery charge faster

-

Science & Environment1 week ago

Science & Environment1 week agoA new kind of experiment at the Large Hadron Collider could unravel quantum reality

-

Science & Environment1 week ago

Science & Environment1 week agoHow one theory ties together everything we know about the universe

-

Science & Environment1 week ago

Science & Environment1 week agoA tale of two mysteries: ghostly neutrinos and the proton decay puzzle

-

Science & Environment1 week ago

Science & Environment1 week agoUK spurns European invitation to join ITER nuclear fusion project

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago2 auditors miss $27M Penpie flaw, Pythia’s ‘claim rewards’ bug: Crypto-Sec

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoLouisiana takes first crypto payment over Bitcoin Lightning

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoJourneys: Robby Yung on Animoca’s Web3 investments, TON and the Mocaverse

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago‘Everything feels like it’s going to shit’: Peter McCormack reveals new podcast

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoSEC sues ‘fake’ crypto exchanges in first action on pig butchering scams

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBitcoin price hits $62.6K as Fed 'crisis' move sparks US stocks warning

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoVonMises bought 60 CryptoPunks in a month before the price spiked: NFT Collector

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoVitalik tells Ethereum L2s ‘Stage 1 or GTFO’ — Who makes the cut?

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoEthereum falls to new 42-month low vs. Bitcoin — Bottom or more pain ahead?

-

News1 week ago

News1 week agoBrian Tyree Henry on his love for playing villains ahead of “Transformers One” release

-

Womens Workouts1 week ago

Womens Workouts1 week agoWhich Squat Load Position is Right For You?

-

News1 week ago

News1 week agoBangladesh Holds the World Accountable to Secure Climate Justice

-

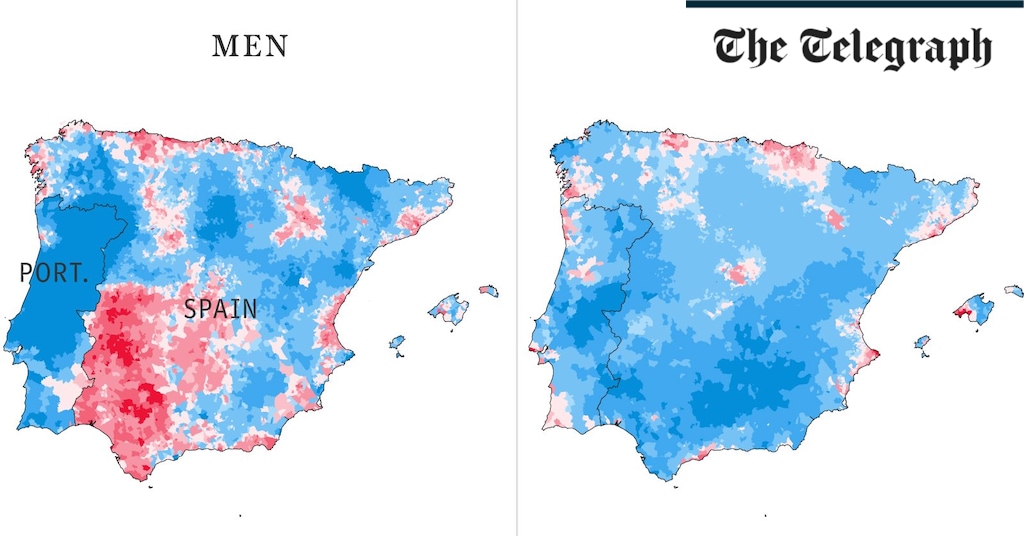

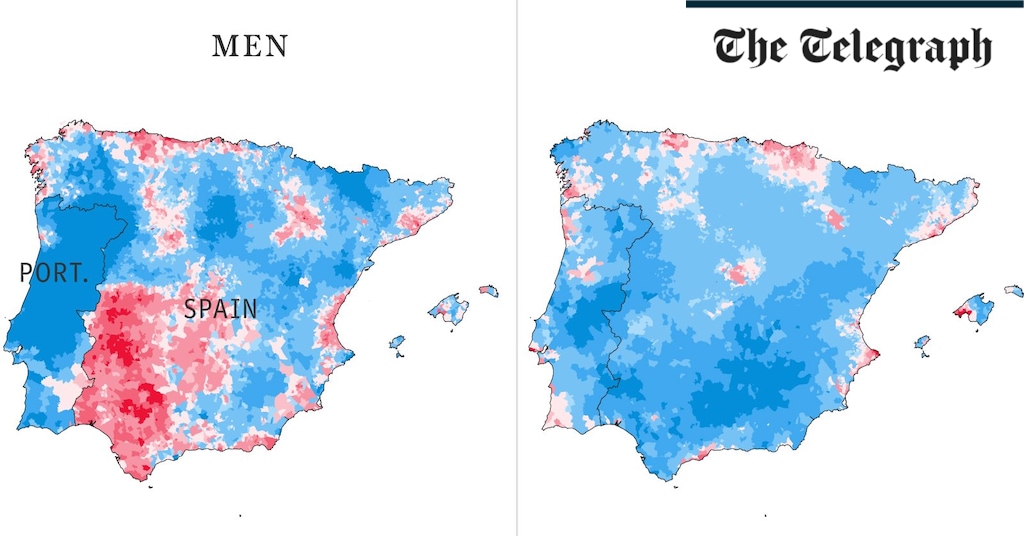

Health & fitness2 weeks ago

Health & fitness2 weeks agoThe maps that could hold the secret to curing cancer

-

Science & Environment2 weeks ago

Science & Environment2 weeks agoA slight curve helps rocks make the biggest splash

-

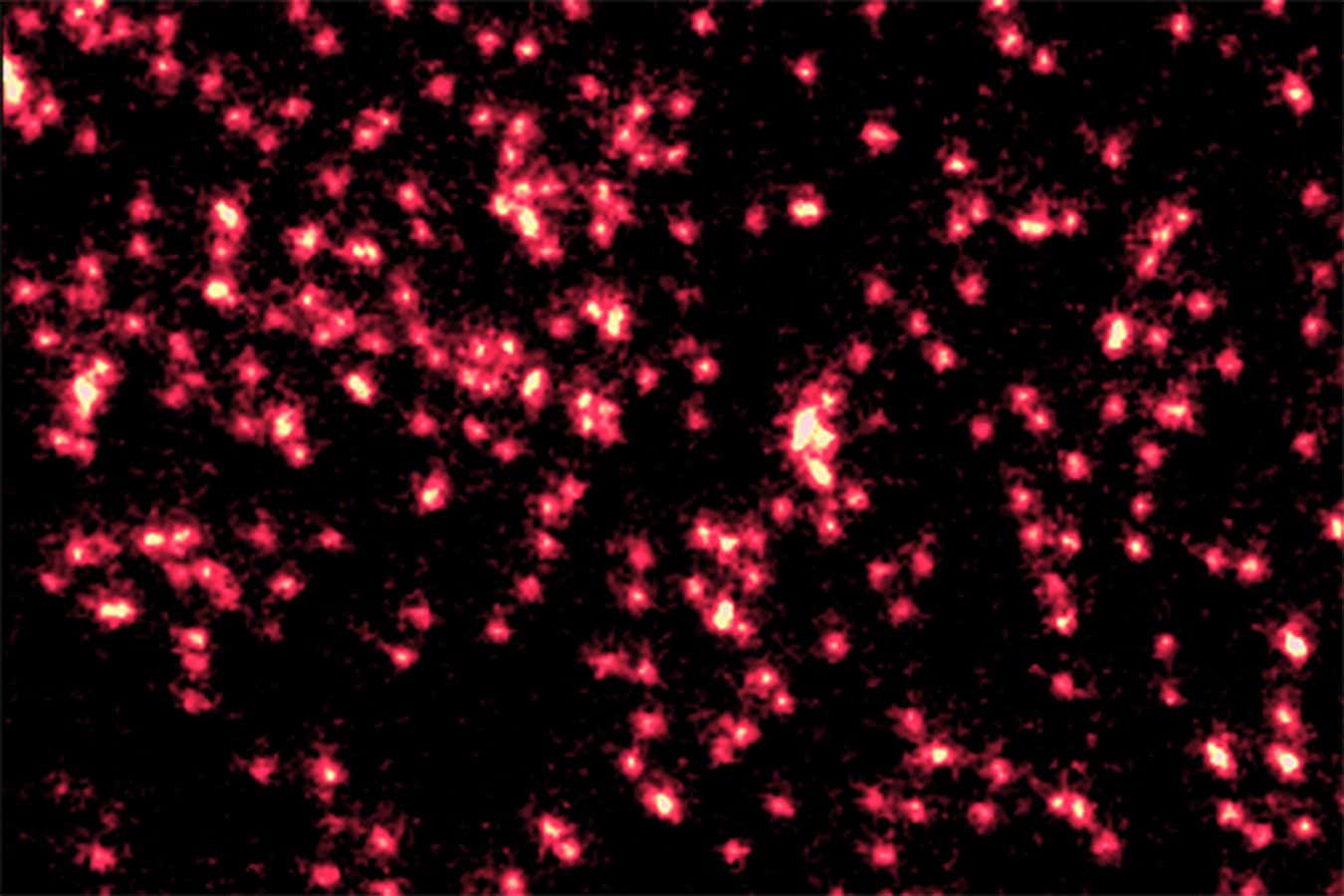

Science & Environment1 week ago

Science & Environment1 week agoSingle atoms captured morphing into quantum waves in startling image

-

Science & Environment1 week ago

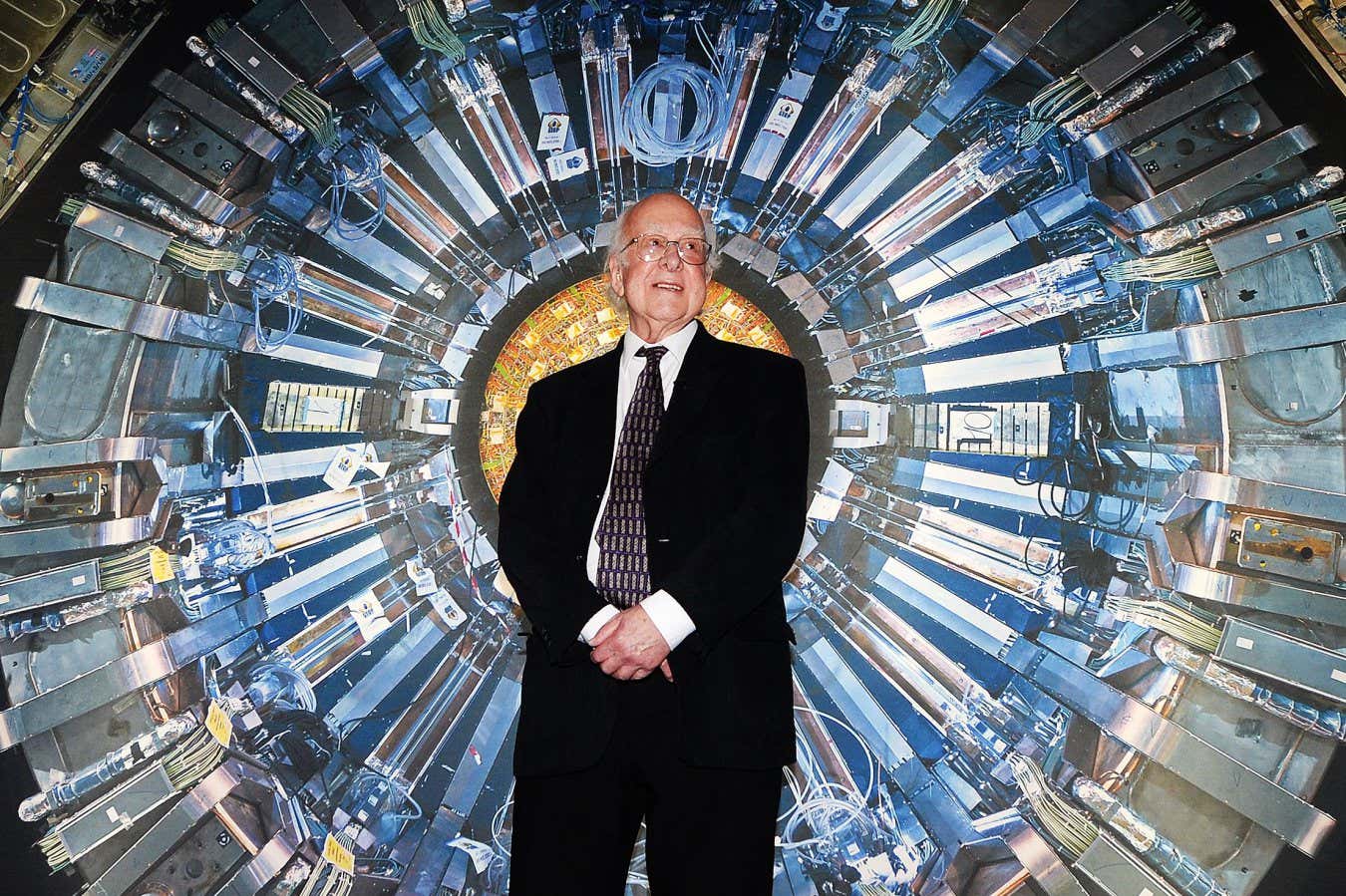

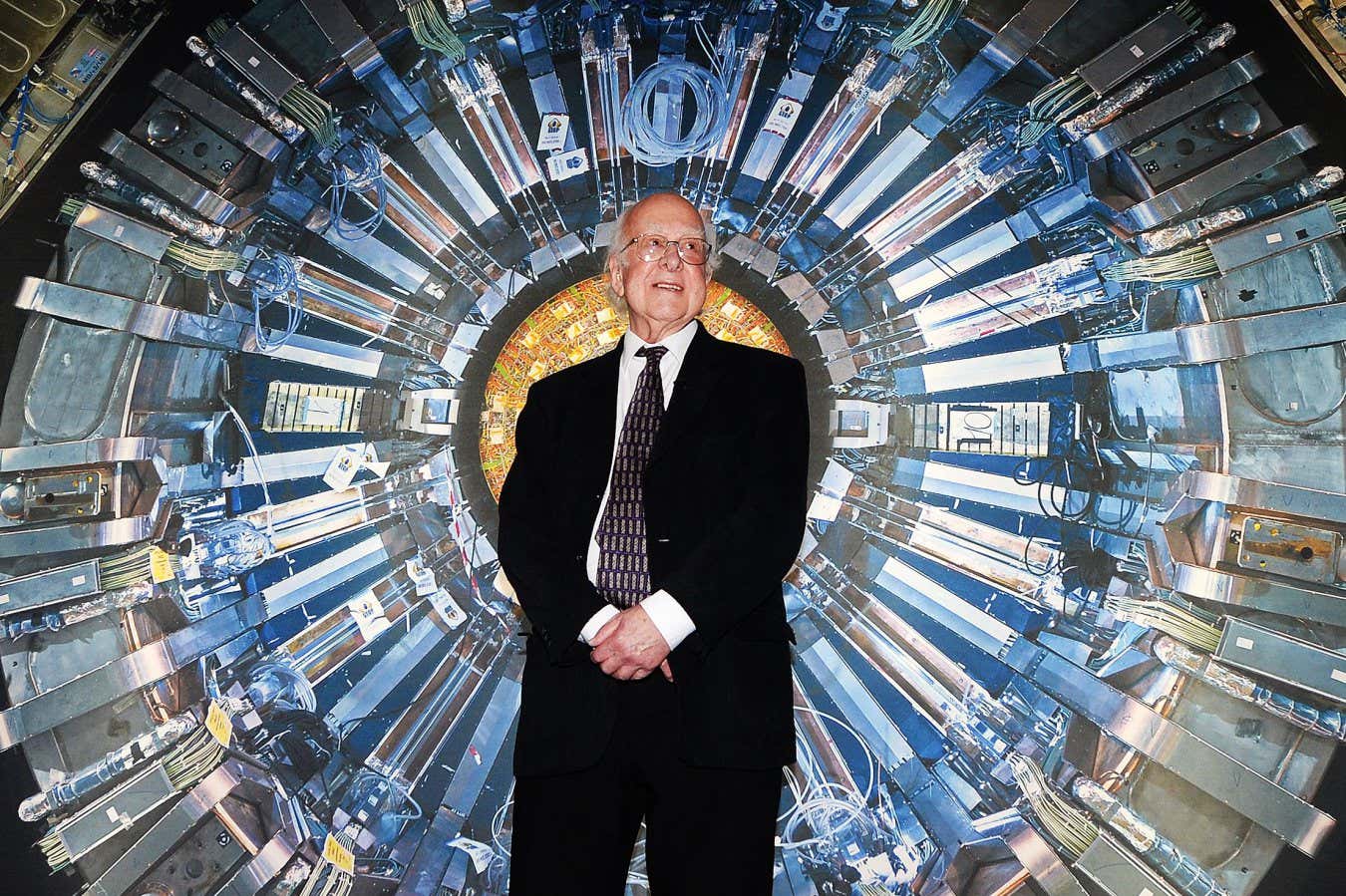

Science & Environment1 week agoHow Peter Higgs revealed the forces that hold the universe together

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoHelp! My parents are addicted to Pi Network crypto tapper

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCZ and Binance face new lawsuit, RFK Jr suspends campaign, and more: Hodler’s Digest Aug. 18 – 24

-

Fashion Models1 week ago

Fashion Models1 week agoMixte

-

Politics1 week ago

Politics1 week agoLabour MP urges UK government to nationalise Grangemouth refinery

-

Money1 week ago

Money1 week agoBritain’s ultra-wealthy exit ahead of proposed non-dom tax changes

-

Womens Workouts1 week ago

Womens Workouts1 week agoWhere is the Science Today?

-

Womens Workouts1 week ago

Womens Workouts1 week agoSwimming into Your Fitness Routine

-

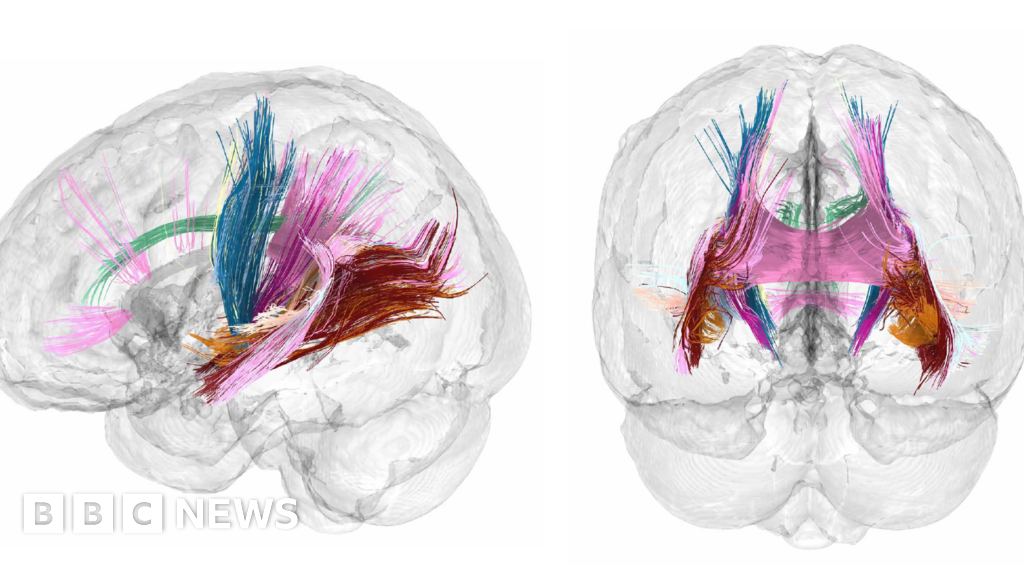

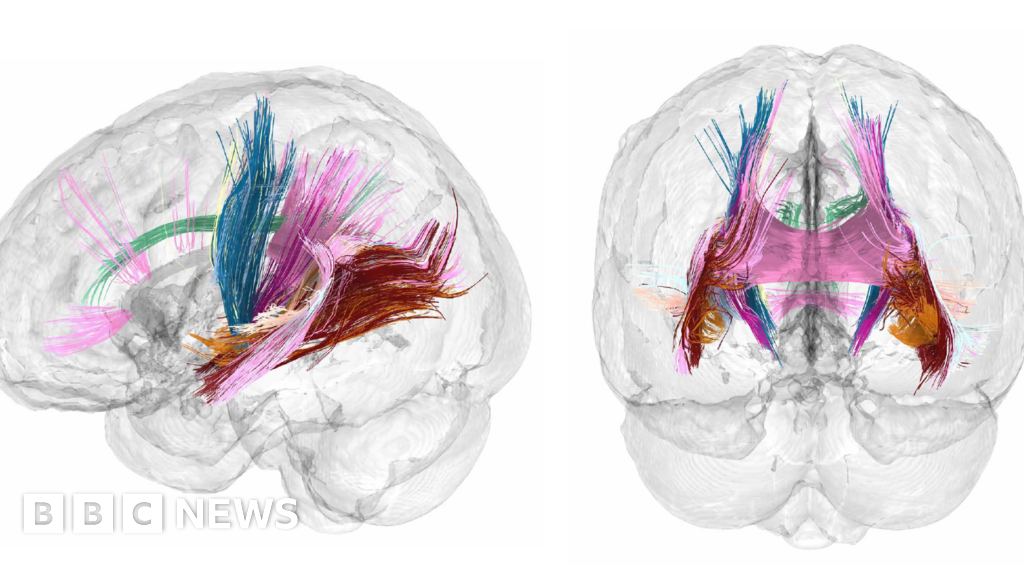

News2 weeks ago

News2 weeks agoBrain changes during pregnancy revealed in detailed map

-

Business2 weeks ago

JPMorgan in talks to take over Apple credit card from Goldman Sachs

You must be logged in to post a comment Login