Chinese manufacturer Beelink has earned a reputation for producing quality mini PCs across a range of price points. The Beelink U59 is a definite standout for those looking for a budget option – in our four and a half star review we said it offered a “good feature set that might appeal to many different customers.”

Beelink also offers higher-end products like the GTi Ultra, which features Intel’s 12th to 14th Gen Core CPUs, with support for Beelink’s exclusive eGPU solution for users requiring extra graphics power. Beelink has been developing its SER series for some time. Its most recent release was the SER8 model powered by an AMD Ryzen 7 8845HS Hawk Point processor, 32GB of DDR5 memory, and 1TB of storage. Priced around $650, the SER8 delivered strong performance in a compact design.

Coming up next is the SER9, the first mini PC to feature AMD’s Ryzen AI 9 HX 370 Strix Point CPU, paired with AMD Radeon 890M graphics. The Strix Point processor includes four Zen 5 cores, eight Zen 5c cores, and AMD’s latest RDNA 3.5 Radeon 890M integrated graphics. It also incorporates a Ryzen NPU capable of up to 50 TOPS of AI performance, making it ideal for AI-driven tasks. If you need extra AI functionality, the SER9 includes a built-in MIC AI chip for seamless voice recognition.

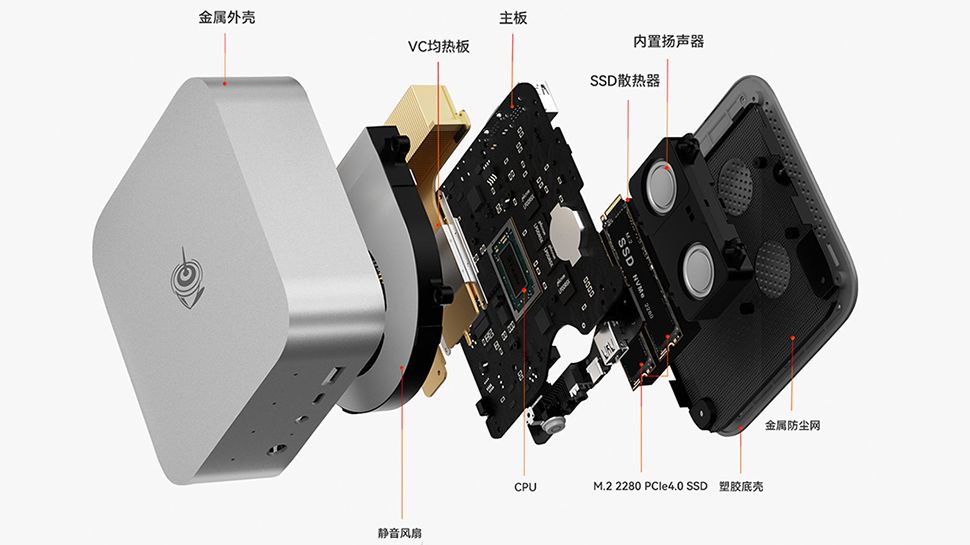

Design-wise, the SER9 closely mirrors its predecessor, the SER8, as well as the GTi Ultra. Although we haven’t had any confirmation, it is expected to come with 32GB of LPDDR5x-7500MHz memory and an M.2 slot for PCIe 4.0 SSD storage. According to information leaked on Weibo, the mini PC will be available in four colors: Frost Silver, Flame Orange, Pine Green, and Deep Gray. As with many mini PCs, the Beelink SER9 offers a wide range of ports. It includes a USB4 Type-C port (40 Gbps), a USB 3.2 Gen 2 Type-C port (10 Gbps), two USB 3.2 Gen 2 Type-A ports (10 Gbps), two USB 2.0 Type-A ports (480 Mbps), an HDMI 2.0 port, and a DisplayPort 1.4. There’s also a 2.5 GbE Ethernet port, with two 3.5mm audio jacks for microphones and speakers. This model also includes integrated speakers.
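To put the quoted link speeds in perspective, here is a rough sketch that estimates the best-case time to move a file over each type of port. It assumes the nominal line rate is fully usable, which real transfers do not reach because of protocol overhead and drive speed. The 100 GB file size and the `transfer_seconds` helper are illustrative choices, not figures from Beelink.

```python
# Nominal link speeds quoted for the SER9's ports, in bits per second.
PORT_SPEEDS_BPS = {
    "USB4 Type-C": 40e9,
    "USB 3.2 Gen 2": 10e9,
    "2.5 GbE Ethernet": 2.5e9,
    "USB 2.0": 480e6,
}


def transfer_seconds(size_bytes: float, link_bps: float) -> float:
    """Best-case transfer time, ignoring protocol overhead and storage limits."""
    return size_bytes * 8 / link_bps


# Hypothetical file size for illustration: 100 GB (decimal gigabytes).
FILE_SIZE_BYTES = 100e9

for port, speed in PORT_SPEEDS_BPS.items():
    print(f"{port}: {transfer_seconds(FILE_SIZE_BYTES, speed):.0f} s")
```

Because the estimate scales inversely with link speed, the gap between the USB4 port and the USB 2.0 ports is roughly a factor of 83 under these assumptions.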

Beelink has stated that the SER9 will be priced at $999, with shipping expected to begin in October. While initially announced for the Chinese market, the mini PC is anticipated to be available globally (eventually) through Beelink.com.

Technology

This is the world’s most powerful Mini PC and I can’t wait to test it: Beelink’s tiny computer packs the Ryzen AI 9 HX 370 CPU and promises to deliver the GPU performance of an RTX 3050 with a whopping 50 TOPS

Science & Environment

Antarctica’s “Doomsday Glacier” is set to retreat “further and faster,” scientists warn

The outlook for “Doomsday Glacier” just got gloomier.

Scientists are warning that the Antarctic glacier, known formally as the Thwaites Glacier, will deteriorate “further and faster” and that the sea level rise triggered by its melting could affect “hundreds of millions” in coastal communities.

“Towards the end of this century, or into the next century, it is very probable that we will see a rapid increase in the amount of ice coming off of Antarctica,” said Dr. Ted Scambos, a glaciologist at the University of Colorado. “The Thwaites is pretty much doomed.”

The findings are the culmination of six years of research conducted by the International Thwaites Glacier Collaboration, a collective of more than 100 scientists.

The “Doomsday Glacier,” roughly the size of the state of Florida, is one of the largest glaciers in the world. Scientists predict that its collapse could contribute 65 centimeters, or roughly 26 inches, of sea level rise.

The sea level rise could be even higher though if you account for the ice the Thwaites will draw in from the large surrounding glacial basins when it collapses. “That total will be closer to three meters of sea level rise,” Scambos said.

According to the researchers, the volume of water flowing into the sea from the Thwaites and its neighboring glaciers has doubled from the 1990s to the 2010s.

Approximately one-third of the front of the Thwaites is currently covered by a thick plate of ice (an ice shelf) floating in the ocean that blocks ice from flowing into the sea. However, Scambos said the melting is accelerating and that the ice sheet is “very near to the point of breakup.”

“Probably within the next two or three years, it will break apart into some large icebergs,” he said. This will eventually leave the front of the glacier exposed. This may not necessarily lead to a sudden acceleration in melting, but it will change how the ocean interacts with the front of the ice shelf, Scambos said.

Deep ridges that prevent ice from flowing into the ocean are on their way out. The ridges, in the bedrock below the ice sheet in Antarctica, provide a “resistive force” against the ice, Scambos said, that slows down its flow into the ocean. As the Thwaites collapses, it will lose contact with these protective ridges, causing more ice to empty into the ocean.

One of the more surprising findings to come from the International Thwaites Glacier Collaboration was how tidal activity around the glacier is pumping warmer sea water into the ice sheet at high speed. That water, which is a couple of degrees above freezing, is getting trapped in parts of the glacier and forced further upstream.

“It goes in every day, it gets squashed up under the glacier. It completely melts whatever freshwater ice it can, and then it gets ejected, and then the whole thing starts again,” said Scambos.

The new findings from the International Thwaites Glacier Collaboration add to a vast body of research on how the deterioration of glaciers worldwide could contribute to sea level rise. In May, a study found that high-pressure ocean water is seeping beneath the “Doomsday Glacier” leading to a “vigorous ice melt.”

Study co-author Christine Dow called the Thwaites the “most unstable place in the Antarctic” and said the speed at which it is melting could prove “devastating for coastal communities around the world.”

Researchers at the University of California, Irvine predicted the ocean could rise by about 60 centimeters, or about 23.6 inches, roughly on par with the predictions from scientists who are part of the International Thwaites Glacier Collaboration.
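The metric-to-imperial figures quoted in this story can be checked with a short conversion sketch. The centimeter values are the ones cited above (65 cm, 60 cm, and Scambos's three meters); the helper names and dictionary labels are illustrative only.

```python
CM_PER_INCH = 2.54    # exact by definition
CM_PER_FOOT = 30.48   # 12 inches


def cm_to_inches(cm: float) -> float:
    """Convert centimeters to inches."""
    return cm / CM_PER_INCH


def cm_to_feet(cm: float) -> float:
    """Convert centimeters to feet."""
    return cm / CM_PER_FOOT


# Sea level rise estimates quoted in the article, in centimeters.
estimates_cm = {
    "Thwaites collapse (ITGC)": 65,
    "UC Irvine estimate": 60,
    "Thwaites plus surrounding basins": 300,
}

for label, cm in estimates_cm.items():
    print(f"{label}: {cm} cm = {cm_to_inches(cm):.1f} in = {cm_to_feet(cm):.1f} ft")
```

The 65-centimeter figure works out to slightly under 26 inches, which is consistent with the article's "roughly 26 inches."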

Scientists have also warned about the potential consequences if the Greenland ice sheet were to melt. Greenland’s melting ice mass is now the No. 1 driver of sea level rise, according to Paul Bierman, a scientist at the University of Vermont. If it melts completely, scientists project it could lead to 20 to 25 feet of sea-level rise.

Rising global temperatures linked to climate change have warmed the oceans and generated new wind patterns that make these glaciers more susceptible to melting.

“It is very likely related to increasing greenhouse gasses in the atmosphere, which changed wind patterns around Antarctica, and therefore changed ocean circulation around Antarctica,” said Scambos. “That’s the main culprit.”

Scientists project that without intervention, the Thwaites could completely disappear by the 23rd century.

Technology

Exploding interest in GenAI makes AI governance a necessity

Enterprises need to heed a warning: Ignore AI governance at your own risk.

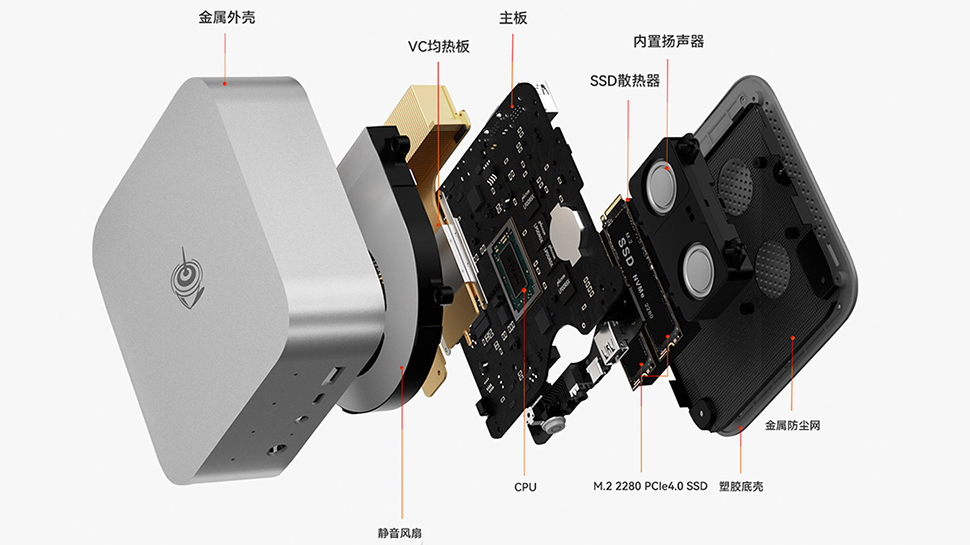

AI governance is essentially a set of policies and standards designed to mitigate the risks associated with using AI — including generative AI — to inform business decisions and automate processes previously carried out by human beings.

The reason it’s now needed, that it cannot be ignored, is that enterprise interest in generative AI is exploding, which has led to more interest in traditional AI as well.

Historically, AI and machine learning models and applications were developed by and used mostly by small data science teams and other experts within organizations, never leaving their narrow purview. The tools were used to do forecasting, scenario planning and other types of predictive analytics, as well as automate certain repetitive processes also overseen by small groups of experts.

Now, however, sparked by OpenAI’s November 2022 launch of ChatGPT, which represented a significant improvement in large language model capabilities, enterprises want to extend their use of AI tools to more employees to drive more rapid growth. LLMs such as ChatGPT and Google Gemini enable true natural language processing that was previously impossible.

When combined with an enterprise’s data, true NLP lets any employee with an internet connection and the requisite clearance query and analyze data in ways that previously required expert knowledge, including coding skills and data literacy training. In addition, when applied to enterprise data, generative AI technology can be trained to relieve experts of repetitive tasks, including coding, documentation and even data pipeline development, thus making developers and data scientists more efficient.

That combination of enabling more employees to make data-driven decisions and improving the efficiency of experts can result in significant growth.

If done properly.

If not, enterprises risk serious consequences, including decisions based on bad AI outputs, data leaks, legal noncompliance, customer dissatisfaction and lack of accountability, all of which could lead to financial losses.

Therefore, as interest in expanding the use of both generative AI and traditional AI transitions to more actual use of AI, and as more employees with less expertise get access to data and AI tools, enterprises need to ensure their AI tools are governed.

Some organizations have heeded the warning, according to Kevin Petrie, an analyst at BARC U.S.

“It is increasing,” he said. “Security and governance are among the top concerns related to AI — especially GenAI — so the demand for AI governance continues to rise.”

However, according to a survey Petrie conducted in 2023, only 25% of respondents said their organization has the proper AI governance controls to support AI and machine learning initiatives, while nearly half said their organization lacks the proper governance controls.

Diby Malakar, vice president of product management at data catalog specialist Alation, similarly said he has noticed a growing emphasis on AI governance.

Alation customers, like those of most data management and analytics vendors, have expressed interest in developing and deploying generative AI-driven tools. And as they build and implement conversational assistants, code translation tools and automated processes, they are concerned with ensuring proper use of the tools.

“In every customer call, they are saying they’re doing more with GenAI, or at least thinking about it,” Malakar said. “And one of the first few things they talk about is how to govern those assets — assets as in AI models, feature stores and anything that could be used as input into the AI or the machine learning lifecycle.”

Governance, however, is hard. Data governance has been a challenge for enterprises for years. Now, AI governance is taking its place alongside data governance as a requirement as well as a challenge.

Data has long been a driver of business decisions.

For decades, however, data stewardship and analysis were the domain of small teams within organizations. Data was kept on premises, and even high-level executives had to request that IT personnel develop charts, graphs, reports, dashboards and other data assets before they could use them to inform decisions.

The process of requesting information, developing an asset to analyze the information and reaching a decision was lengthy, taking at a minimum a few days and — depending on how many requests were made and the size of the data team — even months. With data so controlled, there was little need for data governance. Then, a bit less than 20 years ago, self-service analytics began to emerge. Vendors such as Tableau and Qlik developed visualization-based platforms that enabled business users to view and analyze data on their own, with proper training.

With data no longer the sole domain of experts — and with trained experts no longer the only ones in control of their organization’s data, and business users empowered to take action on their own — organizations needed guidelines.

And with data in the hands of more people within an enterprise — still only about a quarter of all employees, but more than before — more oversight was needed. Otherwise, organizations risked noncompliance with government regulations and data breaches that could reveal sensitive information or cost a company its competitive advantage.

A similar circumstance is now taking place with AI — albeit at a much faster rate, given all that has happened in less than two years — that necessitates AI governance. Just as data was once largely inaccessible, so was AI. And just as self-service analytics enabled more people within organizations to use data, necessitating data governance, generative AI is enabling more people within organizations to use AI, necessitating AI governance.

Donald Farmer, founder and principal of TreeHive Strategy, noted a similarity between the rising need for AI governance and events that necessitated data governance.

“That is a parallel,” he said. “It’s a reasonable one.”

However, what is happening with AI is taking place much more quickly and on a much larger scale than what happened with self-service analytics, Farmer continued. AI has the potential to completely alter how businesses conduct themselves, if properly governed. Farmer compared what AI can do for today’s enterprises to what electricity did for businesses at the turn of the 20th century. At the time, widespread electrical use was dangerous. In response, organizations employed what were then known as CEOs — chief electricity officers — who oversaw the use of electricity and made sure safety was maintained.

“This is a very fundamental shift that we’re just seeing the start of,” Farmer said. “It’s almost as fundamental as [electricity] — everything you do is going to be affected by AI. The comparison with self-service analytics is accurate, but it’s even more fundamental than that.”

Alation’s Malakar similarly noted parallels to be drawn between self-service analytics and the surging interest in AI. Both are rooted in less-technical employees wanting to use technology to help make decisions and take action.

“What we see is that the business analyst who doesn’t know coding wants less and less reliance on IT,” Malakar said. “They want to be empowered to make decisions that are data-related.” First, that was enabled to some degree by self-service analytics. Now, it can be enabled to a much larger degree by generative AI. Every enterprise has questions such as how to reduce expenses, predict churn or implement the most effective marketing campaign. AI can provide the answers.

And with generative AI, it can provide the answers to virtually any employee.

“They’re all AI/ML questions that were not being asked to the same degree 10 years ago,” Malakar said. “So now all these things like privacy, security, explainability [and] accountability become very important — a lot more important than it was in the world of pure data governance.”

At its core, AI governance is a lot like — and connected to — data governance. Data governance frameworks are documented sets of guidelines to ensure the proper use of data, including policies related to data privacy, quality and security. In addition, data governance includes access controls that limit who can do what with their organization’s data.
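As a minimal sketch of what "access controls that limit who can do what" can look like in practice, the example below checks requests against a deny-by-default policy table. The roles, dataset names and the `is_allowed` helper are hypothetical and are not drawn from any vendor or framework mentioned in this article.

```python
from dataclasses import dataclass

# Hypothetical policy table: dataset -> role -> permitted actions.
POLICY = {
    "sales_data": {"analyst": {"read"}, "data_engineer": {"read", "write"}},
    "customer_pii": {"data_engineer": {"read"}},
}


@dataclass(frozen=True)
class Request:
    role: str
    dataset: str
    action: str


def is_allowed(req: Request) -> bool:
    """Deny by default; allow only actions the policy explicitly grants."""
    return req.action in POLICY.get(req.dataset, {}).get(req.role, set())


print(is_allowed(Request("analyst", "sales_data", "read")))    # granted by policy
print(is_allowed(Request("analyst", "customer_pii", "read")))  # not granted
```

Denying anything not explicitly granted is one common design choice; an unknown role or dataset simply falls through to an empty permission set.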

AI governance is linked to data governance and essentially builds on it, according to Petrie.

AI governance applies the same standards as data governance — practices and policies designed to ensure the proper use of AI tools and accuracy of AI models and applications. But without good data governance as a foundation, AI governance loses significance.

Before AI models and applications can be effective and used to inform decisions and automate processes, they need to be trained using good, accurate data. “[Data governance and AI governance] are inextricably linked and overlap quite a bit,” Petrie said. “All the risks of AI have predecessors when it comes to data governance. You should view data governance as the essential foundation of AI governance.”

Most enterprises do have data governance frameworks, he continued. But the same cannot be said for AI governance, as Petrie’s 2023 survey demonstrated.

“That signals a real problem,” he said.

The problem could be one that puts an organization at a competitive disadvantage — that they’re not ready to develop and deploy AI models and applications and reap their benefits, while competitors are doing so. Potentially more damaging, however, is if an enterprise is developing and deploying AI tools, but isn’t properly managing how they’re used. Rather than simply holding back growth, this could lead to negative consequences. But AI governance is about more than just protection from potential problems. It’s also about enabling confident use of AI tools, according to Farmer.

Good data governance frameworks strike a balance between putting limits on data use aimed to protect the enterprise from problems and supporting business users so that they can work with data without fearing that they’re going to unintentionally put their organization in a precarious position.

Good AI governance frameworks need to strike that same balance so that someone asking a question of an AI assistant isn’t afraid that the response they get and subsequent action they take will have a negative effect. Instead, that user needs to feel empowered.

“People are beginning to come around to the idea that a well-governed system gives people more confidence in being able to apply it at scale,” Farmer said. “Good governance isn’t a restricting function. It should be an enabling function. If it’s well governed, you give people freedom.” Specific elements of a good framework for AI governance combine belief in the need for a system to manage the use of AI, guidelines that properly enforce the system and technological tools that assist in its execution, according to Petrie.

“AI governance is defining and enforcing policies, standards and rules to mitigate risks related to AI,” he said. “To do that, you need people and process and technology.”

The people aspect starts with executive support for an AI governance program led by someone such as a chief data officer or chief data and analytics officer. Also involved in developing and implementing the framework are those in supporting roles, such as data scientists, data architects and data engineers.

The process aspect is the AI governance framework itself — the policies that address security, privacy, accuracy and accountability. The technology is the infrastructure. Among other tools, it includes data and machine learning observability platforms that look at data quality and pipeline performance, catalogs that organize data and include governance capabilities, master data management capabilities to ensure consistency, and machine learning lifecycle management platforms to train and monitor models and applications.

Together, the elements of AI governance should lead to confidence, according to Malakar. They should lead to conviction in the outputs of AI models and applications so that end users can confidently act. They should also lead to faith that the organization is protected from misuse.

“AI governance is about being able to use AI applications and foster an environment of trust and integrity in the use of those AI applications,” Malakar said. “It’s best practices and accountability. Not every company will be good at each one of [the aspects of AI governance], but if they at least keep those principles in mind, it will lead to better leverage of AI.”

Confidence is perhaps the most significant outcome of a good AI governance framework, according to Farmer. When the data used to feed and train AI models can be trusted, so can the outputs. And when the outputs can be trusted, users can take the actions that lead to growth. Similarly, when the processes automated and overseen by AI tools can be trusted, data scientists, engineers and other experts can use the time they’ve been given by being relieved of mundane tasks to take on new ones that likewise lead to growth.

“The benefit is confidence,” Farmer said. “There’s confidence to do more with it when you’re well governed.”

More tangibly, good AI governance leads to regulatory compliance and avoiding the financial and reputational harm that comes with regulatory violations, according to Petrie. Europe, in particular, has stringent regulations related to AI, and the U.S. is similarly expected to increase regulatory restrictions on exactly what AI developers and deployers can and cannot do.

Beyond regulatory compliance, good AI governance results in good customer relationships, Petrie continued. AI models and applications can provide enterprises with hyperpersonalized information about customers, efficiently enabling personalized shopping experiences and cross-selling opportunities that can increase profits. “Those benefits are significant,” Petrie said. “[But] if you’re going to take something to customers — GenAI in particular — you better make sure you’re getting it right, because you’re playing with your revenue stream.”

If enterprises get generative AI — or traditional AI, for that matter — wrong, i.e., if the governance framework controlling how AI models and applications are developed and deployed is poor, the consequences can be severe.

“All sorts of bad things can happen,” Petrie said.

Some of them are the polar opposite of what can happen when an organization has a good AI governance framework. Instead of regulatory compliance, organizations can wind up with inquisitive regulators, and instead of strong customer relationships, they can wind up with poor ones. But those are the end results.

First, what leads to inquisitive regulators and poor customer relationships, among other things, includes poor accuracy, biased outputs and mishandling of intellectual property.

“If those risks are not properly controlled and mitigated, you can wind up with … regulatory penalties or costs related to compliance, angry or alienated customers, and you can end up with operational processes that hit bottlenecks because the intended efficiency benefits of AI will not be delivered,” Petrie said.

Lack of data security, explainability and accountability are other results of poor AI governance, according to Malakar. Without the combination of good data governance and AI governance, there can be security breaches as well as improperly prepared data — personally identifiable information that hasn’t been anonymized, for example — that seeps into models and gets exposed. In addition, without good governance, it can be difficult to explain and fix bad outputs in a timely fashion or know whom to hold accountable.

“You don’t want to build a model where it can’t be trusted,” Malakar said. “That’s a risk to the entire culture of the company and can drive morale issues.”

Ultimately, just as good AI governance engenders confidence, bad AI governance leads to a lack of confidence, according to Farmer.

If one competing company trusts its AI models and applications and another doesn’t, the one that can act with confidence will reap the benefits, while the other will be stuck in place and miss out on the growth opportunities enabled by generative AI’s significant potential. “Given that the shift is so fundamental, not being well governed is really going to hold you back,” Farmer said. “Governance is the difference between the ability to move swiftly and with confidence, and being held back and taking dangerous risks.”

Eric Avidon is a senior news writer for TechTarget Editorial and a journalist with more than 25 years of experience. He covers analytics and data management.

Technology

28 Years Later was partially shot on an iPhone 15 Pro Max

Danny Boyle’s zombie sequel 28 Years Later was shot using several iPhone 15 Pro Max smartphones. This makes it the biggest movie ever made using iPhones, as the budget was around $75 million.

There are some major caveats worth going over. First of all, the sourcing on the story is anonymous, as the film’s staff was required to sign an NDA. Also, the entire film wasn’t shot using last year’s high-end Apple smartphone. Engadget has confirmed that Boyle and his team used a bunch of different cameras, with the iPhone 15 Pro Max being just one tool.

Finally, it’s not like the director just plopped the smartphone on a tripod and called it a day. Each iPhone looks to have been adapted to integrate with full-frame DSLR lenses. Speaking of, those professional-grade lenses cost a small fortune. The phones were also nestled in protective cages.

Even if the phones weren’t exclusively used to make this movie, it’s still something of a full-circle moment for Boyle and his team. The original 28 Days Later was shot primarily on a camcorder that cost $4,000 at the time. This camcorder recorded footage to MiniDV tapes.

28 Years Later is the third entry in the franchise and is due to hit theaters in June 2025. The film stars Jodie Comer, Aaron Taylor-Johnson, Ralph Fiennes and Cillian Murphy. This will be the first of three new films. Plot details are non-existent, but all three upcoming movies are being written by Alex Garland. He co-wrote the first one and has since gone on to direct genre fare like Ex Machina and, most recently, Civil War. He also made a truly underrated .

As for the intersection of smartphones and Hollywood, several films have been shot with iPhones. These include Sean Baker’s Tangerine and Steven Soderbergh’s Unsane.

Technology

Netflix reveals new games based on Rebel Moon and other shows

As part of its Geeked Week announcements, Netflix revealed more details about new games coming to its platform. Several of the games are based on the company’s shows, including Rebel Moon and Squid Game, while others such as Monument Valley 3 are well-regarded IPs in their own right. Several of the games are coming in 2025, while others are s…

Technology

M&As and AI are in the spotlight, but there’s still capital left for quick commerce and more

Welcome to Startups Weekly, your weekly recap of everything you can’t miss from the world of startups.

This week brought reassuring signs that dealmaking is still happening on both sides of the table. New unicorns are being minted, and more capital is flowing into AI, but deals are also coming from some unexpected directions.

Most interesting startup stories from the week

AI news was plentiful this week, but also varied, from large and small M&As to new launches.

AI portfolio: Typeface, a generative AI unicorn, purchased two companies to expand its enterprise offering: New York City-based Treat, which uses AI to create personalized photo products, and Narrato, an Australian AI-powered content creation and management platform.

AI again: Global HR company Workday bought AI-powered contract management platform Evisort, adding to its AI-related acquisitions. The companies didn’t disclose the price tag, but Evisort had raised $155.6 million in capital and debt.

FinOps FTW: IBM acquired Kubecost, a Kubernetes cost optimization startup, as its name suggests. This is another sign of the ongoing rise of FinOps, which may also be boosted by the need to lessen the cost and impact of GenAI.

Only you: Recently launched SocialAI is a social network with a big twist — it is filled with bots, and that’s on purpose. Founder Michael Sayman told TechCrunch that his goal was for users to be able to bounce ideas off a diverse community of AIs.

Most interesting fundraises this week

This week was also busy on the dealmaking front, and some of the capital went to sectors and places you might not necessarily expect.

Flying solo: Quick commerce app Flink raised $150 million, including $115 million in equity. The near-unicorn was once an acquisition target of competitors but is now seeking to forge its own path, with a focus on Germany and the Netherlands.

On alert: New York-based startup Intezer raised $33 million to make sure security teams aren’t overwhelmed by alerts. Using AI, the startup helps them with not only triaging, but also with investigation, which it does much faster than a human would, CEO Itai Tevet said.

Getting permits: NYC-based GreenLite raised a $28.5 million Series A round to facilitate construction permitting. Its co-founders don’t come from the construction sector but previously worked at Gopuff, which got its own taste of dealing with permits when it tried to launch a ghost kitchen network across the U.S.

Tailwinds: Armenian B2B SaaS startup EasyDMARC raised a $20 million Series A round of funding to simplify email security and authentication. The company facilitates the adoption of a technical standard that Google and Yahoo will soon make mandatory for bulk email senders.

Most interesting VC and fund news this week

Accelerating: Salesforce Ventures announced at Dreamforce that its San Francisco-based AI fund would once again double in size and reach $1 billion, a significant acceleration compared to the $5 billion total deployed in its first 15 years.

Decacorn fund: Insight Partners is nearing a whopping $10 billion fundraise for its 13th fund, according to the Financial Times, which also noted the recent sales of two Insight portfolio companies, Own and Recorded Future.

Builders: Proptech venture firm Era Ventures raised $88 million for its first fund, which will be deployed in startups from seed to Series B. Its portfolio includes Honey Homes, a subscription service for handymen that has raised $21.35 million in venture funding to date.

Last but not least

In a recent episode of the Equity podcast, J.P. Morgan’s Head of Startup Banking Ashraf Hebela discussed his recent Startup Insights report and what it might take to create a unicorn in 2024. He also touched on the hot topic of “Founder Mode.”

Technology

8BitDo now sells the NES-themed keycaps from its retro keyboard

8BitDo is now selling a set of keycaps featuring the same Nintendo Entertainment System (NES) inspired design as those used on the Retro Mechanical Keyboard it debuted last July. While the keyboard is now available in four styles including Commodore 64 and Famicom designs, only the NES style keycaps are currently available on their own.

The $49.99 8BitDo Retro Keycaps set includes 165 PBT keys with legends printed using dye-sublimation for added durability. The expanded set allows the keys to be used on larger keyboards with a dedicated number pad. 8BitDo’s $99.99 mechanical keyboards are only available in a shorter tenkeyless layout.

The set features alternate designs for some keys like a spacebar with an added health meter in two different lengths, and both the American ANSI and international ISO versions of others, like an Enter key with an inverted L design, and a smaller Shift key. 8BitDo says the set supports 65, 75, 80, 95, and 100-percent layouts, as well as ergonomic split keyboards, but compatibility is limited to MX-style switches.

-

Sport1 day ago

Sport1 day agoJoshua vs Dubois: Chris Eubank Jr says ‘AJ’ could beat Tyson Fury and any other heavyweight in the world

-

News2 days ago

News2 days agoYou’re a Hypocrite, And So Am I

-

Science & Environment1 day ago

Science & Environment1 day ago‘Running of the bulls’ festival crowds move like charged particles

-

News1 day ago

News1 day agoIsrael strikes Lebanese targets as Hizbollah chief warns of ‘red lines’ crossed

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoEthereum is a 'contrarian bet' into 2025, says Bitwise exec

-

Science & Environment2 days ago

Science & Environment2 days agoSunlight-trapping device can generate temperatures over 1000°C

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoBitcoin miners steamrolled after electricity thefts, exchange ‘closure’ scam: Asia Express

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoCardano founder to meet Argentina president Javier Milei

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoDorsey’s ‘marketplace of algorithms’ could fix social media… so why hasn’t it?

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoLow users, sex predators kill Korean metaverses, 3AC sues Terra: Asia Express

-

Science & Environment1 day ago

Science & Environment1 day agoQuantum ‘supersolid’ matter stirred using magnets

-

Sport1 day ago

Sport1 day agoUFC Edmonton fight card revealed, including Brandon Moreno vs. Amir Albazi headliner

-

Technology1 day ago

Technology1 day agoiPhone 15 Pro Max Camera Review: Depth and Reach

-

Science & Environment1 day ago

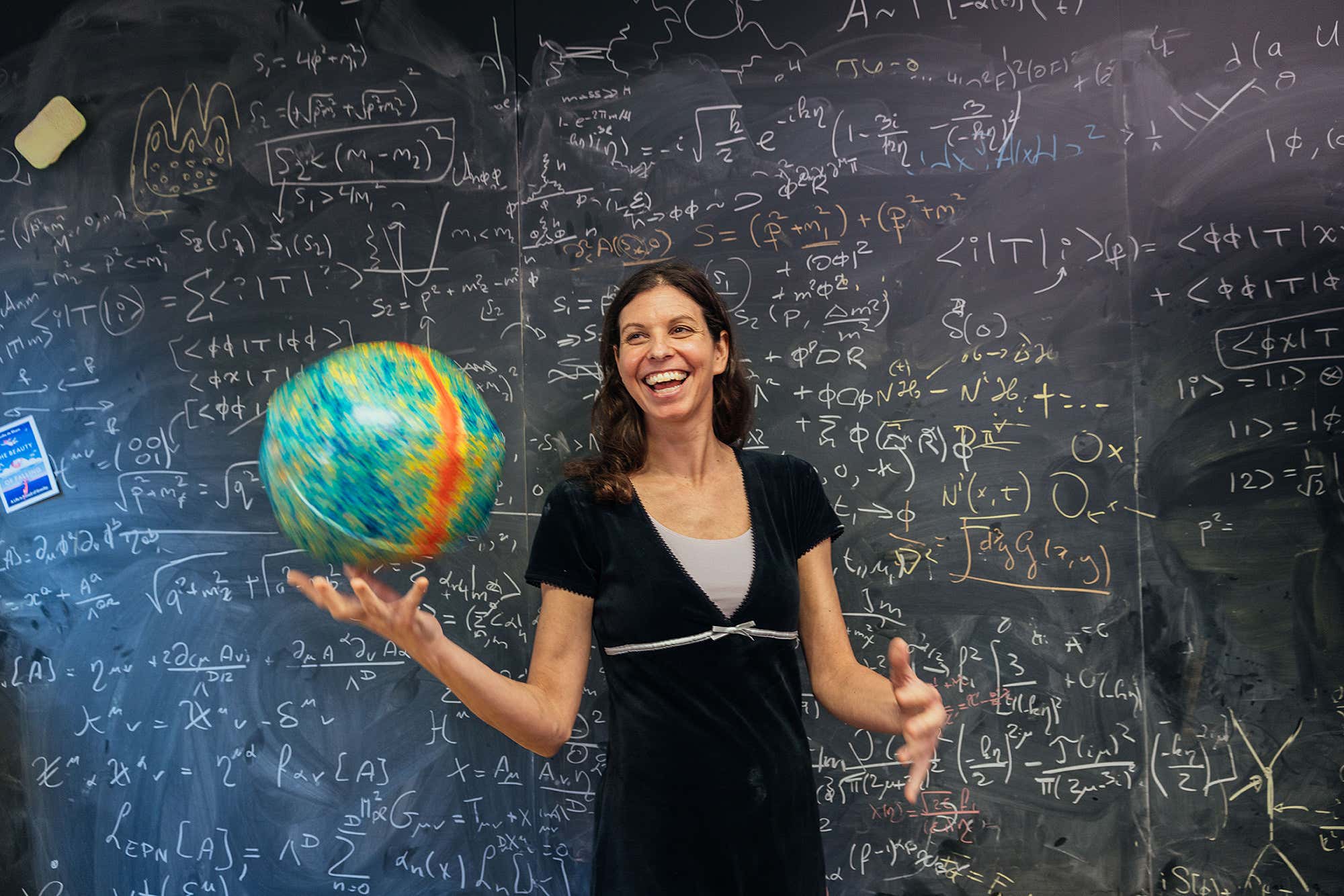

Science & Environment1 day agoHow one theory ties together everything we know about the universe

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoCertiK Ventures discloses $45M investment plan to boost Web3

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoDZ Bank partners with Boerse Stuttgart for crypto trading

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoRedStone integrates first oracle price feeds on TON blockchain

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoBitcoin bulls target $64K BTC price hurdle as US stocks eye new record

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoSEC asks court for four months to produce documents for Coinbase

-

CryptoCurrency1 day ago

CryptoCurrency1 day ago‘No matter how bad it gets, there’s a lot going on with NFTs’: 24 Hours of Art, NFT Creator

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoBlockdaemon mulls 2026 IPO: Report

-

CryptoCurrency23 hours ago

CryptoCurrency23 hours agoCoinbase’s cbBTC surges to third-largest wrapped BTC token in just one week

-

Politics1 day ago

Politics1 day agoLabour MP urges UK government to nationalise Grangemouth refinery

-

News24 hours ago

News24 hours agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

Science & Environment2 days ago

Science & Environment2 days agoHow to wrap your mind around the real multiverse

-

Science & Environment2 days ago

Science & Environment2 days agoQuantum time travel: The experiment to ‘send a particle into the past’

-

Science & Environment1 day ago

Science & Environment1 day agoNuclear fusion experiment overcomes two key operating hurdles

-

CryptoCurrency1 day ago

CryptoCurrency1 day ago2 auditors miss $27M Penpie flaw, Pythia’s ‘claim rewards’ bug: Crypto-Sec

-

CryptoCurrency1 day ago

CryptoCurrency1 day ago$12.1M fraud suspect with ‘new face’ arrested, crypto scam boiler rooms busted: Asia Express

-

CryptoCurrency1 day ago

CryptoCurrency1 day ago‘Everything feels like it’s going to shit’: Peter McCormack reveals new podcast

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoDecentraland X account hacked, phishing scam targets MANA airdrop

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoCZ and Binance face new lawsuit, RFK Jr suspends campaign, and more: Hodler’s Digest Aug. 18 – 24

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoBeat crypto airdrop bots, Illuvium’s new features coming, PGA Tour Rise: Web3 Gamer

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoMemecoins not the ‘right move’ for celebs, but DApps might be — Skale Labs CMO

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoTelegram bot Banana Gun’s users drained of over $1.9M

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoVonMises bought 60 CryptoPunks in a month before the price spiked: NFT Collector

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoVitalik tells Ethereum L2s ‘Stage 1 or GTFO’ — Who makes the cut?

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoEthereum falls to new 42-month low vs. Bitcoin — Bottom or more pain ahead?

-

Business1 day ago

How Labour donor’s largesse tarnished government’s squeaky clean image

-

Politics3 days ago

Politics3 days agoTrump says he will meet with Indian Prime Minister Narendra Modi next week

-

Science & Environment2 days ago

Science & Environment2 days agoWhy this is a golden age for life to thrive across the universe

-

Science & Environment2 days ago

Science & Environment2 days agoElon Musk’s SpaceX contracted to destroy retired space station

-

MMA1 day ago

MMA1 day agoUFC’s Cory Sandhagen says Deiveson Figueiredo turned down fight offer

-

MMA1 day ago

MMA1 day agoDiego Lopes declines Movsar Evloev’s request to step in at UFC 307

-

Football1 day ago

Football1 day agoNiamh Charles: Chelsea defender has successful shoulder surgery

-

Football1 day ago

Football1 day agoSlot's midfield tweak key to Liverpool victory in Milan

-

Science & Environment1 day ago

Science & Environment1 day agoHyperelastic gel is one of the stretchiest materials known to science

-

Technology3 days ago

Technology3 days agoCan technology fix the ‘broken’ concert ticketing system?

-

Fashion Models1 day ago

Fashion Models1 day agoMiranda Kerr nude

-

Science & Environment2 days ago

Science & Environment2 days agoMaxwell’s demon charges quantum batteries inside of a quantum computer

-

Science & Environment1 day ago

Science & Environment1 day agoOdd quantum property may let us chill things closer to absolute zero

-

Science & Environment1 day ago

Science & Environment1 day agoRethinking space and time could let us do away with dark matter

-

Technology2 days ago

Technology2 days agoWould-be reality TV contestants ‘not looking real’

-

Science & Environment2 days ago

Science & Environment2 days agoHow to unsnarl a tangle of threads, according to physics

-

Science & Environment2 days ago

Science & Environment2 days agoX-ray laser fires most powerful pulse ever recorded

-

Science & Environment2 days ago

Science & Environment2 days agoPhysicists are grappling with their own reproducibility crisis

-

Science & Environment2 days ago

Science & Environment2 days agoBeing in two places at once could make a quantum battery charge faster

-

Science & Environment1 day ago

Science & Environment1 day agoWe may have spotted a parallel universe going backwards in time

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoArthur Hayes’ ‘sub $50K’ Bitcoin call, Mt. Gox CEO’s new exchange, and more: Hodler’s Digest, Sept. 1 – 7

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoTreason in Taiwan paid in Tether, East’s crypto exchange resurgence: Asia Express

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoLeaked Chainalysis video suggests Monero transactions may be traceable

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoJourneys: Robby Yung on Animoca’s Web3 investments, TON and the Mocaverse

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoLouisiana takes first crypto payment over Bitcoin Lightning

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoAre there ‘too many’ blockchains for gaming? Sui’s randomness feature: Web3 Gamer

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoCrypto whales like Humpy are gaming DAO votes — but there are solutions

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoHelp! My parents are addicted to Pi Network crypto tapper

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoCrypto scammers orchestrate massive hack on X but barely made $8K

-

Science & Environment1 day ago

Science & Environment1 day agoWhy we need to invoke philosophy to judge bizarre concepts in science

-

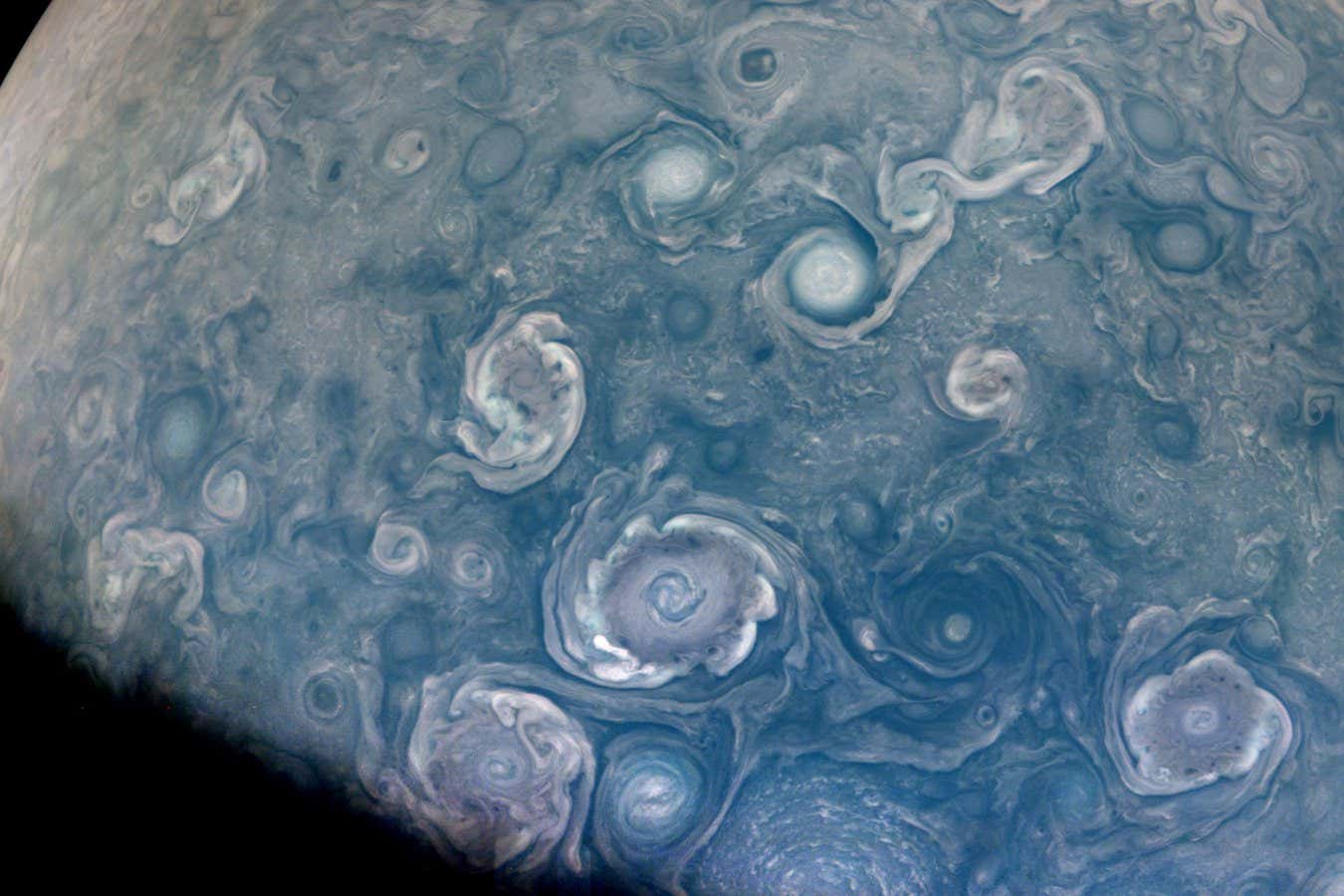

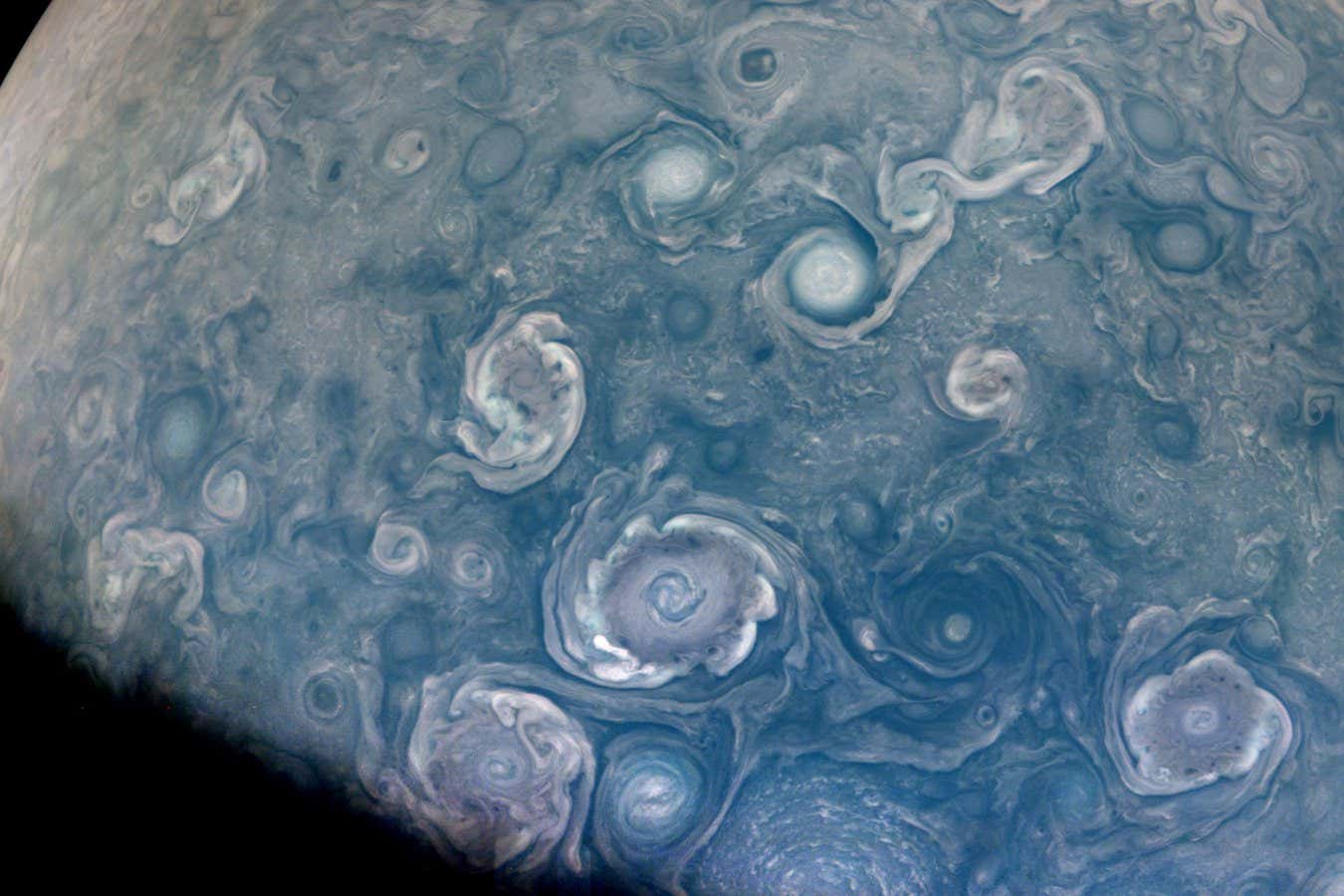

Science & Environment1 day ago

Science & Environment1 day agoJupiter’s stormy surface replicated in lab

-

Science & Environment1 day ago

Science & Environment1 day agoA tale of two mysteries: ghostly neutrinos and the proton decay puzzle

-

Science & Environment1 day ago

Science & Environment1 day agoFuture of fusion: How the UK’s JET reactor paved the way for ITER

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoSEC sues ‘fake’ crypto exchanges in first action on pig butchering scams

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoFed rate cut may be politically motivated, will increase inflation: Arthur Hayes

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoBinance CEO says task force is working ‘across the clock’ to free exec in Nigeria

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoElon Musk is worth 100K followers: Yat Siu, X Hall of Flame

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoBitcoin price hits $62.6K as Fed 'crisis' move sparks US stocks warning

-

CryptoCurrency1 day ago

CryptoCurrency1 day agoBitcoin bull rally far from over, MetaMask partners with Mastercard, and more: Hodler’s Digest Aug 11 – 17

-

CryptoCurrency1 day ago

CryptoCurrency1 day ago‘Silly’ to shade Ethereum, the ‘Microsoft of blockchains’ — Bitwise exec

-

Business1 day ago

Thames Water seeks extension on debt terms to avoid renationalisation

-

Politics1 day ago

The Guardian view on 10 Downing Street: Labour risks losing the plot | Editorial

-

Politics1 day ago

Politics1 day agoI’m in control, says Keir Starmer after Sue Gray pay leaks

-

Politics1 day ago

‘Appalling’ rows over Sue Gray must stop, senior ministers say | Sue Gray

-

News24 hours ago

News24 hours ago“Beast Games” contestants sue MrBeast’s production company over “chronic mistreatment”

-

News24 hours ago

News24 hours agoSean “Diddy” Combs denied bail again in federal sex trafficking case in New York

-

News24 hours ago

News24 hours agoBrian Tyree Henry on his love for playing villains ahead of “Transformers One” release

-

News24 hours ago

News24 hours agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

CryptoCurrency23 hours ago

CryptoCurrency23 hours agoBitcoin options markets reduce risk hedges — Are new range highs in sight?

-

News1 day ago

News1 day agoChurch same-sex split affecting bishop appointments

-

Politics2 days ago

Politics2 days agoKeir Starmer facing flashpoints with the trade unions

-

Health & fitness3 days ago

Health & fitness3 days agoWhy you should take a cheat day from your diet, and how many calories to eat

-

Technology1 day ago

Technology1 day agoFivetran targets data security by adding Hybrid Deployment

-

Technology4 days ago

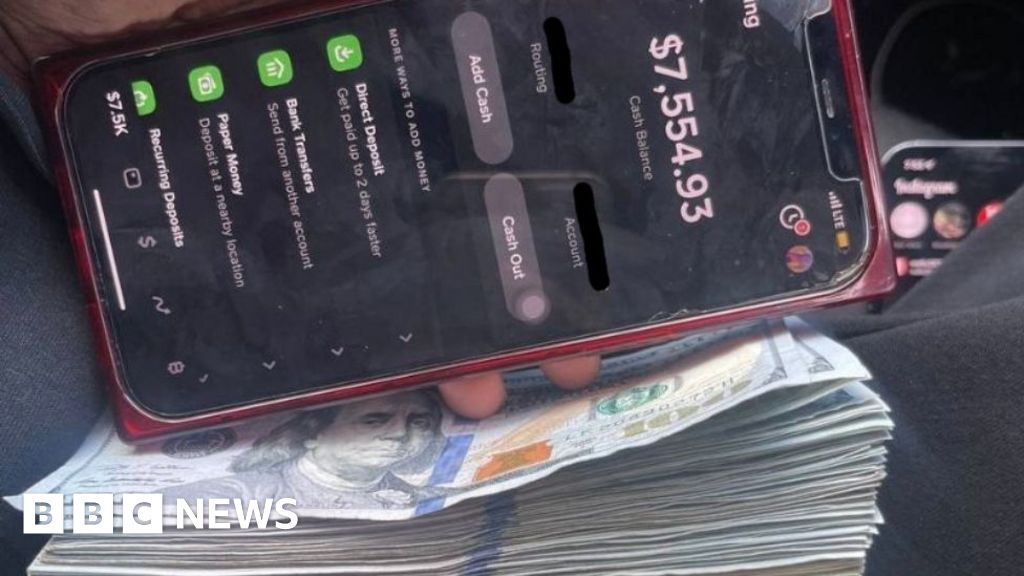

Technology4 days ago‘The dark web in your pocket’

-

News1 day ago

Freed Between the Lines: Banned Books Week

-

Science & Environment1 day ago

Science & Environment1 day agoThe physicist searching for quantum gravity in gravitational rainbows

-

Science & Environment1 day ago

Science & Environment1 day agoHow to wrap your head around the most mind-bending theories of reality

-

Fashion Models1 day ago

Fashion Models1 day ago“Playmate of the Year” magazine covers of Playboy from 1971–1980

-

News4 days ago

News4 days agoDid the Pandemic Break Our Brains?

-

Science & Environment1 day ago

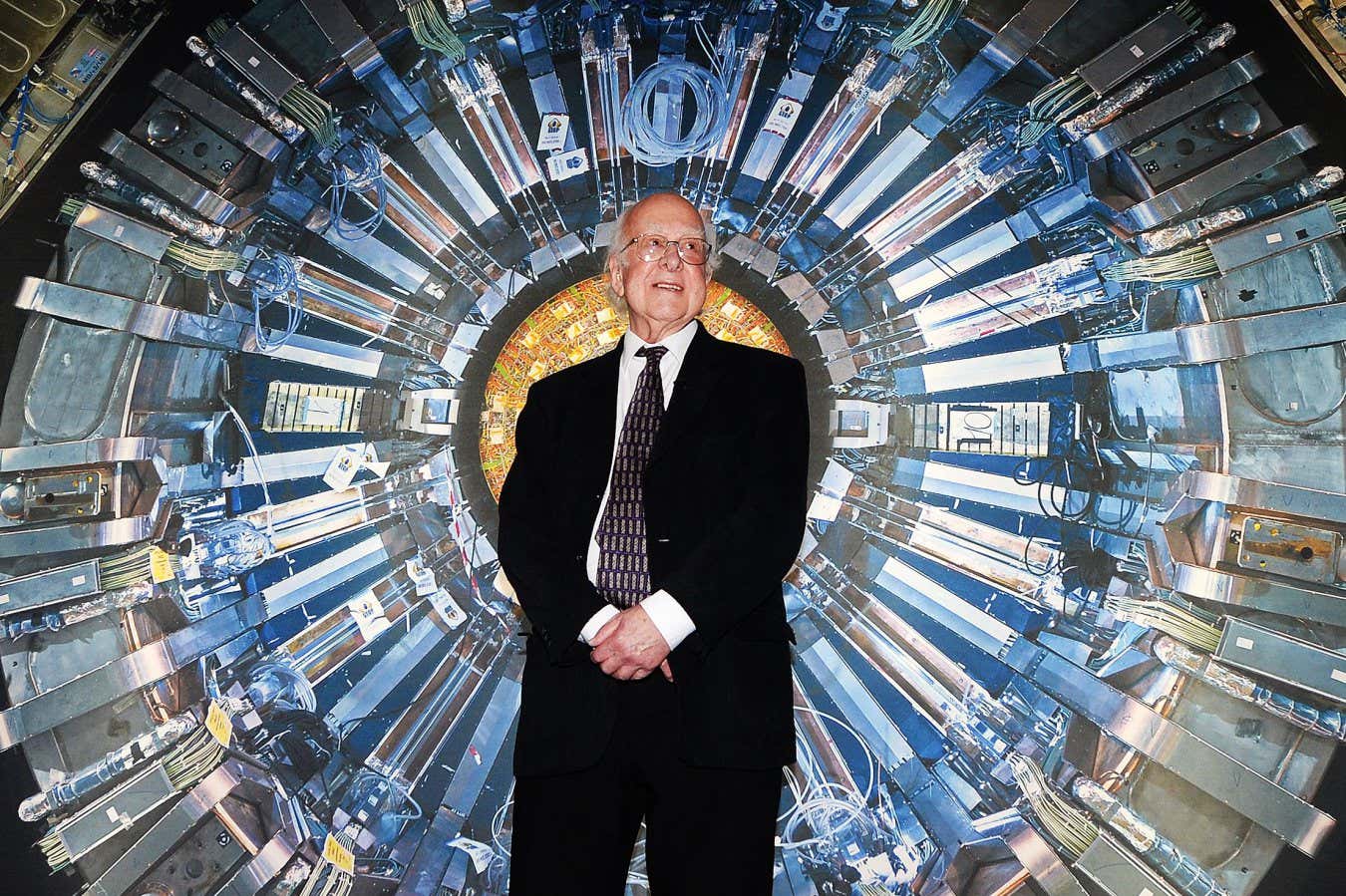

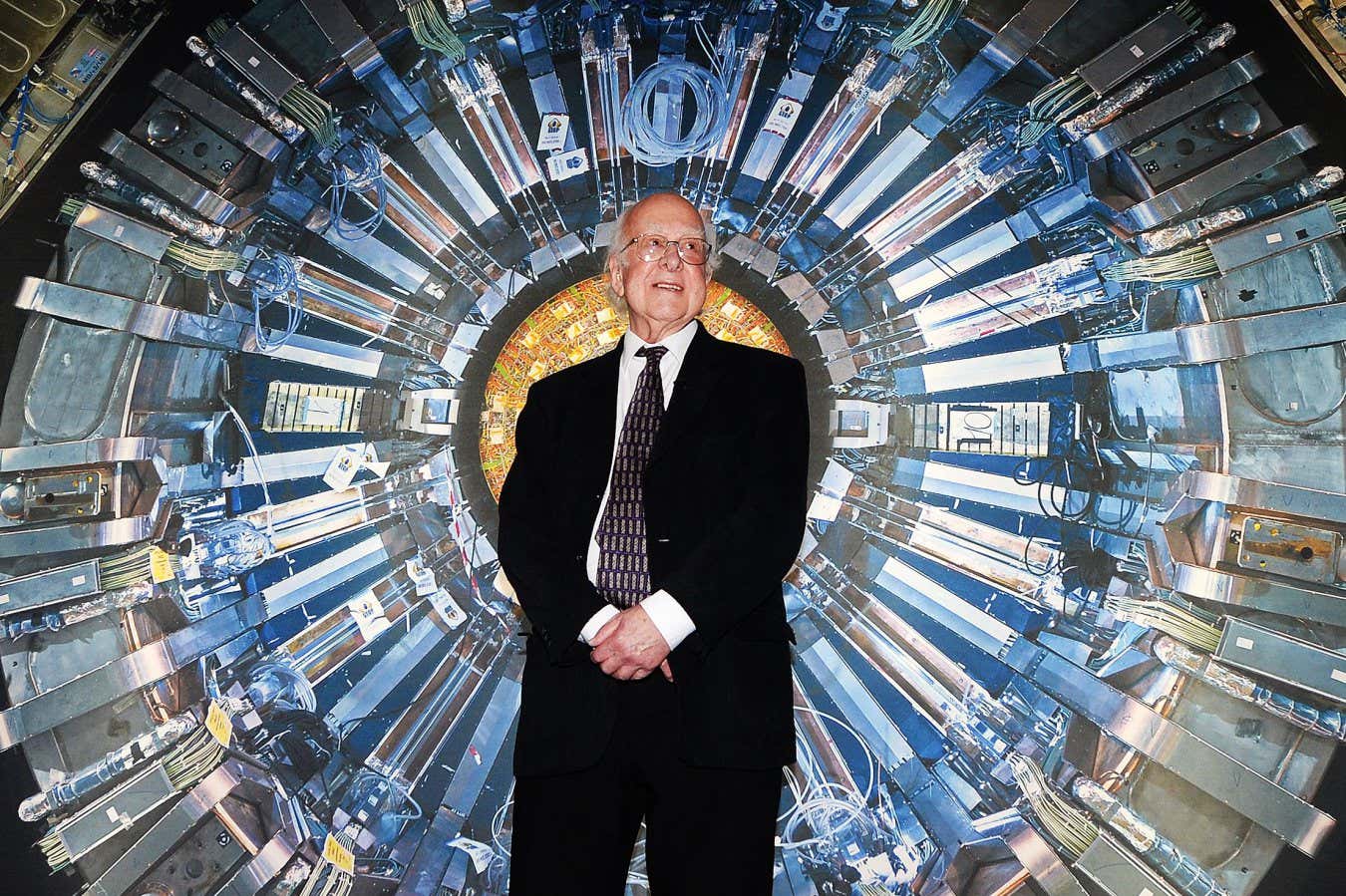

Science & Environment1 day agoA new kind of experiment at the Large Hadron Collider could unravel quantum reality

-

Health & fitness2 days ago

Health & fitness2 days ago11 reasons why you should stop your fizzy drink habit in 2022

-

Science & Environment1 day ago

Science & Environment1 day agoHow Peter Higgs revealed the forces that hold the universe together

You must be logged in to post a comment Login