The newest security camera from Eufy — Anker’s smart home company — can see clearly in the dark, uses radar motion sensing for fewer false alerts, and records 24/7 when wired. As with other Eufy cams, the new S3 Pro has free facial recognition, package, vehicle, and pet detection, plus locally stored recorded video with no monthly fees.

Technology

Why countries are in a race to build AI factories in the name of sovereign AI


Now that AI has become a fundamentally important technology, and the world has gravitated toward intense geopolitical battles, it’s no wonder that “sovereign AI” is becoming a national issue.

Think about it. Would the U.S. allow the data it generates for AI to be stored and processed in China? Would the European Union want its people’s data to be accessed by big U.S. tech giants? Would Russia trust NATO countries to manage its AI resources? Would Muslim nations entrust their data for AI to Israel?

Nvidia has earmarked $110 million to invest in AI startups helping countries build sovereign AI infrastructure, and plenty of countries are investing in AI infrastructure on their own. That's real money aimed at jumpstarting the world's embrace of AI. The question is whether this discussion is mostly thought leadership in service of a sales pitch, or whether nations truly need to embrace sovereign AI to be competitive with the rest of the world. Is it a new kind of arms race that makes sense for nations to pursue?

A wake-up call

Jensen Huang, CEO of Nvidia, pointed to the rise of "sovereign AI" during an earnings call in November 2023 as one reason demand for Nvidia's AI chips is growing. The company noted that investment in national computing infrastructure was a new priority for governments around the world.

“The number of sovereign AI clouds is really quite significant,” Huang said in the earnings call. He said Nvidia wants to enable every company to build its own custom AI models.

The motivations weren't just about keeping a country's data in local tech infrastructure to protect it. Rather, governments saw the need to invest in sovereign AI infrastructure to support economic growth and industrial innovation, said Colette Kress, CFO of Nvidia, in the earnings call.

That was around the time when the Biden administration was restricting sales of the most powerful AI chips to China, requiring a license from the U.S. government before shipments could happen. That licensing requirement is still in effect.

As a result, China reportedly began its own attempts to create AI chips to compete with Nvidia’s. But it wasn’t just China. Kress also said Nvidia was working with the Indian government and its large tech companies like Infosys, Reliance and Tata to boost their “sovereign AI infrastructure.”

Meanwhile, French private cloud provider Scaleway was investing in regional AI clouds to fuel AI advances in Europe as part of a “new economic imperative,” Kress said. The result was a “multi-billion dollar opportunity” over the next few years, she said.

Huang said Sweden and Japan have embarked on creating sovereign AI clouds.

“You’re seeing sovereign AI infrastructures, people, countries that now recognize that they have to utilize their own data, keep their own data, keep their own culture, process that data, and develop their own AI. You see that in India,” Huang said.

He added, “Sovereign AI clouds coming up from all over the world as people realize that they can’t afford to export their country’s knowledge, their country’s culture for somebody else to then resell AI back to them.”

Nvidia itself defines sovereign AI as “a nation’s capabilities to produce artificial intelligence using its own infrastructure, data, workforce and business networks.”

Keeping sovereign AI secure

In an interview with VentureBeat in February 2024, Huang doubled down on the concept, saying, “We now have a new type of data center that is about AI generation, an AI generation factory. And you’ve heard me describe it as AI factories. Basically, it takes raw material which is data, transforms it with these AI supercomputers and Nvidia builds and it turns them into incredibly valuable tokens. These tokens are what people experience on the amazing” generative AI platforms like Midjourney.

I asked Huang why sovereign AI needs to exist within the borders of a given country if data is kept secure regardless of where in the world it is located.

He replied, “There’s no reason to let somebody else come and scrape your internet, take your history, your data. And a lot of it is still locked up in libraries. In our case, it’s Library of Congress. In other cases, national libraries. And they’re digitized, but they haven’t been put on the internet.”

He added, “And so people are starting to realize that they had to use their own data to create their own AI, and transform their raw material into something of value for their own country, by their own country. And so you’re going to see a lot. Almost every country will do this. And they’re going to build the infrastructure. Of course, the infrastructure is hardware. But they don’t want to export their data using AI.”

The $110 million investment

Nvidia has earmarked $110 million to invest in AI startups helping with sovereign AI projects and other AI-related businesses.

Shilpa Kolhatkar, global head of AI Nations at Nvidia, took a deeper dive into sovereign AI at the U.S.-Japan Innovation Symposium at Stanford University. The July event was staged by the Japan Society of Northern California and the Stanford US-Asia Technology Management Center.

Kolhatkar was interviewed by Jon Metzler, a continuing lecturer at the Haas School of Business at the University of California, Berkeley. Their conversation focused on how to achieve economic growth through investments in AI technology. Kolhatkar noted that Nvidia had transformed itself from a graphics company into a high-performance computing and AI company long before ChatGPT arrived.

"Lots of governments around the world are looking today at how can they capture this opportunity that AI has presented and they [have focused] on domestic production of AI," Kolhatkar said. "We have the [AI Nations] program, which kind of matches the AI strategy that nations have in place today. About 60 to 70 nations have an AI strategy in place, built around the major pillars of creating the workforces and having the ecosystem. But it's also around having already everything within the policy framework."

AI readiness?

Nvidia plays a role in setting up the ecosystem and infrastructure, or supercomputers. The majority of Nvidia's focus and engineering effort is in the software stack on top of the chips, she said. As a result, Nvidia has become more of a platform company than a chip company. Metzler asked Kolhatkar to define how a country might develop "AI readiness."

Kolhatkar said that one measure is how much computing power a country has, in terms of raw AI compute, storage and the energy needed to power such systems. Does it have a skilled workforce to operate the AI? Is the population ready to take advantage of AI's great democratization so that the knowledge spreads well beyond data scientists?

When ChatGPT, running on GPT-3.5, emerged in November 2022 and generative AI exploded, it signaled that AI was finally working in a way that ordinary consumers could use to automate many tasks, find new information or create things like images on their own. If there were errors in the results, it could be because the model hadn't been fed the correct information. It quickly followed that different regions had their own views on what counted as correct information.

“That model was trained primarily on a master data set and a certain set of languages in western [territories],” Kolhatkar said. “That is why the internationalization of having something which is sovereign, which is specific to a nation’s own language, culture and nuances, came to the forefront.”

Countries then started developing generative AI models that cater to the specificities of a particular region or nation, which, of course, raised the question of who owns that data, she said.

“The ownership is every country’s data and proprietary data, which they realized should stay within the borders,” she said.

AI factories

Nvidia is now in the process of helping countries create such sovereign infrastructure in the form of “AI factories,” Kolhatkar said. That’s very similar to the drive that nations ignited with factories during the Industrial Revolution more than 100 years ago.

“Factories use raw materials that go in and then goods come out and that was tied to the domestic GDP. Now the paradigm is that your biggest asset is your data. Every nation has its own unique language and data. That’s the raw material that goes into the AI factory, which consists of algorithms, which consists of models and out comes intelligence,” she said.

Now countries like Japan have to consider whether they’re ahead or falling behind when it comes to being ready with AI factories. Kolhatkar said that Japan is leading the way when it comes to investments, collaborations and research to create a successful “AI nation.”

She said companies and nations are seriously considering how much of AI should be classified as “critical infrastructure” for the sake of economic or national security. Where industrial factories could create thousands of jobs in a given city, now data centers can create a lot of jobs in a given region as well. Are these AI factories like the dams and airports of decades ago?

“You’re kind of looking at past precedents from physical manufacturing as to what the multiplier might be for AI factories,” Metzler said. “The notion of AI factories as maybe civic infrastructure is super interesting.”

National AI strategies?

Metzler raised the question of which strategies countries might pursue in the AI race. For instance, he suggested that smaller countries may need to team up to create larger regional networks and achieve some measure of sovereignty.

Kolhatkar said that can make sense if your country, for instance, doesn't have the resources of a tech giant like Samsung. She noted that the Nordic nations are collaborating with each other, as are nations like the U.S. and Japan when it comes to AI research. Different industries or government ministries can also get together to collaborate on AI.

If Nvidia is taking a side on this, it's in spreading the tech around so that everyone becomes AI literate. Nvidia has an online university dubbed the Deep Learning Institute for self-paced e-learning courses. It also has a virtual incubator, Nvidia Inception, which has supported more than 19,000 AI startups.

"Nvidia really believes in democratization of AI because the full potential of AI cannot be achieved unless everybody's able to use it," Kolhatkar said.

Energy consumption?

As for the fallout of sovereign AI, Metzler noted that countries will have to address sustainability issues, given how much power these systems consume.

In May, the Electric Power Research Institute (EPRI) released a whitepaper that quantified the potential for exponential growth in AI power requirements. It projected that electricity consumption by U.S. data centers alone could more than double by 2030.

It noted that each ChatGPT request can consume 2.9 watt-hours of electricity, so AI queries are estimated to require roughly 10 times the electricity of traditional Google searches, which use about 0.3 watt-hours each. That's not counting emerging, computation-intensive capabilities such as image, audio and video generation, for which there is no comparable precedent.

EPRI looked at four scenarios. Under the highest growth scenario, data center electricity usage could rise to 403.9 TWh/year by 2030, a 166% increase from 2023 levels. Meanwhile, the low growth scenario projected a 29% increase to 196.3 TWh/year.
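As a rough consistency check, the EPRI figures quoted above can be worked backward. The short Python sketch below uses only the numbers reported in this article, and its variable and function names are illustrative. Dividing each 2030 projection by one plus its percentage increase gives the implied 2023 baseline. Dividing the two per-query figures gives the ChatGPT-to-Google ratio.

```python
# Figures as reported from the EPRI whitepaper.
HIGH_2030_TWH = 403.9   # highest-growth scenario, TWh/year in 2030
HIGH_INCREASE = 1.66    # a 166% increase over 2023
LOW_2030_TWH = 196.3    # low-growth scenario, TWh/year in 2030
LOW_INCREASE = 0.29     # a 29% increase over 2023

CHATGPT_WH = 2.9        # energy per ChatGPT request, watt-hours
GOOGLE_WH = 0.3         # energy per traditional Google search, watt-hours


def implied_baseline(value_2030: float, increase: float) -> float:
    """Back out the 2023 level from a 2030 value and its fractional increase."""
    return value_2030 / (1 + increase)


high_base = implied_baseline(HIGH_2030_TWH, HIGH_INCREASE)
low_base = implied_baseline(LOW_2030_TWH, LOW_INCREASE)
ratio = CHATGPT_WH / GOOGLE_WH

print(f"2023 baseline implied by high scenario: {high_base:.1f} TWh")
print(f"2023 baseline implied by low scenario:  {low_base:.1f} TWh")
print(f"Energy per ChatGPT request vs. Google search: {ratio:.1f}x")
```

Both scenarios point to a 2023 baseline of roughly 152 TWh (151.8 and 152.2). That means the 166% and 29% figures are consistent with each other. The per-query ratio comes out to about 9.7, in line with the "10 times" estimate.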

“It’s about the energy efficiency, sustainability is pretty top of mind for everyone,” Kolhatkar said.

Nvidia is trying to make each generation of AI chip more power efficient even as it makes each one more performant. She also noted the industry is trying to create and use renewable energy sources. Nvidia also uses its Omniverse software to create digital twins of data centers, so these buildings can be architected with energy consumption in mind and with minimal duplicated effort.

Once they’re done, the virtual designs can be built in the physical world with a minimum of inefficiency. Nvidia is even creating a digital twin of the Earth to predict climate change for decades to come. And the AI tech can also be applied to making physical infrastructure more efficient, like making India’s infrastructure more resistant to monsoon weather. In these ways, Kolhatkar thinks AI can be used to “save the world.”

She added, “Data is the biggest asset that a nation has. It has your proprietary data with your language, your culture, your values, and you are the best person to own it and codify it into an intelligence that you want to use for your analysis. So that is what sovereignty is. That is at the domestic level. The local control of your assets, your biggest asset, [matters].”

A change in computing infrastructure

Computers, of course, don't know national borders. If you string internet cables around the world, the information flows, and a single data center could theoretically serve its information globally. If that data center has layers of security built in, there should be no worry about where it's located. This is the advantage computers offer: a "virtual" infrastructure.

But these data centers need backups, as the world has learned that extreme centralization isn’t good for things like security and control. A volcanic eruption in Iceland, a tsunami in Japan, an earthquake in China, a terrorist attack on infrastructure or possible government spying in any given country — these are all reasons for having more than one data center to store data.

Besides disaster backup, national security is another reason driving each country to require its own computing infrastructure within its borders. Before the generative AI boom, there was a movement to ensure data sovereignty, in part because some tech giants overreached when it came to disintermediating users and their applications that developed personalized data. Data best practices emerged as a result.

Roblox CEO Dave Baszucki said at the Roblox Developer Conference that his company operates a network of 27 data centers around the world to provide the performance needed to run its game platform across different computing platforms. Roblox has 79.5 million daily active users spread throughout the world.

Given that governments around the world are coming up with data security and privacy laws, Roblox might very well have to change its data center infrastructure so that it has many more data centers that are operating in given jurisdictions.

There are 195 nation states in the world, and if the policies become restrictive, a company might conceivably need to have 195 data centers. Not all of these divisions are parochial. For instance, some countries might want to deliberately reduce the “digital divide” between rich nations and poor ones, Kolhatkar said.

There’s another factor driving the decentralization of AI — the need for privacy. Not only for the governments of the world, but also for companies and people. The celebrated “AI PC” trend of 2024 offers consumers personal computers with powerful AI tech to ensure the privacy of operating AI inside their own homes. This way, it’s not so easy for the tech giants to learn what you’re searching for and the data that you’re using to train your own personal AI network.

Do we need sovereign AI?

Huang suggested that countries see sovereign AI as necessary so that a large language model (LLM) can be built with knowledge of local customs. As an example, the Russian-derived spelling "Chernobyl" uses an "e," but in Ukraine the name is spelled "Chornobyl." That's just a small example of why local customs and culture need to be taken into account for systems used in particular countries.

Some people are concerned about the trend as it drives the world toward more geographic borders, which in the case of computing, really don’t or shouldn’t exist.

Kate Edwards, CEO of Geogrify and an expert on geopolitics in the gaming industry, said in a message, “I think it’s a dangerous term to leverage, as ‘sovereignty’ is a concept that typically implies a power dynamic that often forms a cornerstone of nationalism, and populism in more extreme forms. I get why the term is being used here but I think it’s the wrong direction for how we want to describe AI.”

She added, “‘Sovereign’ is the wrong direction for this nomenclature. It instantly polarizes what AI is for, and effectively puts it in the same societal tool category as nuclear weapons and other forms of mass disruption. I don’t believe this is how we really want to approach this resource, especially as it could imply that a national government essentially has an enslaved intelligence whose purpose is to reinforce and serve the goals of maintaining a specific nation’s sovereignty — which is the basis for the great majority of geopolitical conflict.”

Are countries taking Nvidia’s commentary seriously or do they view it as a sales pitch? Nvidia isn’t the only company succeeding with the pitch.

AMD competes with Nvidia in AI/graphics chips as well as CPUs. Like Nvidia, it is seeing an explosion in demand for AI chips. AMD also continues to expand its efforts in software, with the acquisition of AI software firms like Nod.AI and Silo AI. AI is consistently driving AMD’s revenues and demand for both its CPUs and GPUs/AI chips.

Cerebras Systems, for instance, announced in July 2023 that it was shipping its giant wafer-size chips to G42, a technology holding group based in the United Arab Emirates, which was building the world's largest supercomputer for AI training, named Condor Galaxy.

The plan called for a network of nine interconnected supercomputers with a total capacity of 36 exaFLOPs, aimed at significantly reducing AI model training time. The first supercomputer on the network, Condor Galaxy 1 (CG-1), had 4 exaFLOPs and 54 million cores, said Andrew Feldman, CEO of Cerebras, in an interview with VentureBeat. Those computers were based in the U.S., but they are operated by the firm in Abu Dhabi. (That raises the question, again, of whether sovereign AI tech has to be located in the country that uses the computing power.)

Now Cerebras has broken ground on a new generation of Condor Galaxy supercomputers for G42.

Rather than cutting silicon wafers into individual chips, Cerebras uses an entire wafer, about the size of a pizza, as a single processor. Each wafer holds the equivalent of hundreds of chips, with many cores on each wafer, and that's how Cerebras gets to 54 million cores in a single supercomputer.
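The core and exaFLOP counts can be reconciled with a little arithmetic. This sketch relies on assumptions the article doesn't state. It takes CG-1 to be built from 64 Cerebras CS-2 systems, each with one wafer-scale processor of 850,000 cores, per Cerebras's published specifications. It also assumes each of the nine planned supercomputers matches CG-1's 4 exaFLOPs.

```python
# Figures stated in the article.
CG1_EXAFLOPS = 4
PLANNED_SYSTEMS = 9
REPORTED_CG1_CORES = 54_000_000  # "54 million cores"

# Assumptions not stated in the article (Cerebras's published CG-1 specs).
CS2_SYSTEMS_IN_CG1 = 64      # CS-2 systems making up CG-1
CORES_PER_WAFER = 850_000    # cores on each wafer-scale processor

# Assumes every planned system matches CG-1's 4 exaFLOPs.
network_exaflops = PLANNED_SYSTEMS * CG1_EXAFLOPS
cg1_cores = CS2_SYSTEMS_IN_CG1 * CORES_PER_WAFER

print(f"Planned network: {network_exaflops} exaFLOPs")
print(f"CG-1: {cg1_cores:,} cores")
```

Under these assumptions, 64 times 850,000 gives 54.4 million cores, which rounds to the 54 million quoted above. Nine 4-exaFLOP systems give the 36-exaFLOP total.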

Feldman said, “AI is not just eating the U.S. AI is eating the world. There’s an insatiable demand for compute. Models are proliferating. And data is the new gold. This is the foundation.”



Nomi’s companion chatbots will now remember things like the colleague you don’t get along with

As OpenAI boasts about its o1 model's increased thoughtfulness, small, self-funded startup Nomi AI is building the same kind of technology. Unlike the broad generalist ChatGPT, which slows down to think through anything from math problems to historical research, Nomi niches down on a specific use case: AI companions. Now, Nomi's already-sophisticated chatbots take additional time to formulate better responses to users' messages, remember past interactions, and deliver more nuanced responses.

“For us, it’s like those same principles [as OpenAI], but much more for what our users actually care about, which is on the memory and EQ side of things,” Nomi AI CEO Alex Cardinell told TechCrunch. “Theirs is like, chain of thought, and ours is much more like chain of introspection, or chain of memory.”

These LLMs work by breaking down more complicated requests into smaller questions; for OpenAI’s o1, this could mean turning a complicated math problem into individual steps, allowing the model to work backwards to explain how it arrived at the correct answer. This means the AI is less likely to hallucinate and deliver an inaccurate response.

With Nomi, which built its LLM in-house and trains it for the purposes of providing companionship, the process is a bit different. If someone tells their Nomi that they had a rough day at work, the Nomi might recall that the user doesn’t work well with a certain teammate, and ask if that’s why they’re upset — then, the Nomi can remind the user how they’ve successfully mitigated interpersonal conflicts in the past and offer more practical advice.

“Nomis remember everything, but then a big part of AI is what memories they should actually use,” Cardinell said.

It makes sense that multiple companies are working on technology that gives LLMs more time to process user requests. AI founders, whether they're running $100 billion companies or not, are looking at similar research as they advance their products.

“Having that kind of explicit introspection step really helps when a Nomi goes to write their response, so they really have the full context of everything,” Cardinell said. “Humans have our working memory too when we’re talking. We’re not considering every single thing we’ve remembered all at once — we have some kind of way of picking and choosing.”
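Cardinell's description of picking and choosing among stored memories can be illustrated with a toy relevance filter. This is a hypothetical sketch, not Nomi's method, which the article doesn't detail. Every memory is kept, but only the few that share the most keywords with the current message are surfaced. The `Memory` class, the `select_memories` function and the stop-word list are all invented for illustration. A real system would likely use a learned relevance model rather than word overlap.

```python
import re
from dataclasses import dataclass

# Hypothetical: a tiny stop-word list so filler words don't count as relevance.
STOP_WORDS = {"a", "an", "the", "i", "at", "to", "it", "with", "by", "is", "had", "my"}


@dataclass
class Memory:
    text: str


def keywords(text: str) -> set[str]:
    """Lowercase content words, ignoring punctuation and stop words."""
    return set(re.findall(r"[a-z']+", text.lower())) - STOP_WORDS


def select_memories(message: str, memories: list[Memory], k: int = 2) -> list[Memory]:
    """Keep every memory, but surface only the k most relevant to this message."""
    msg = keywords(message)
    scored = [(len(msg & keywords(m.text)), i, m) for i, m in enumerate(memories)]
    # Most shared keywords first; ties fall back to the order memories were stored.
    scored.sort(key=lambda t: (-t[0], t[1]))
    # Drop anything with no overlap at all, even if it made the top k.
    return [m for score, _, m in scored[:k] if score > 0]


memories = [
    Memory("User finds it hard to work with a teammate named Sam at work"),
    Memory("User's favorite food is ramen"),
    Memory("User resolved a past conflict at work by talking it through calmly"),
]

picked = select_memories("I had a rough day at work", memories)
for m in picked:
    print(m.text)
```

Here the "rough day at work" message surfaces the teammate memory and the past-conflict memory. The unrelated ramen memory is left out, which mirrors the example earlier in the article.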

The kind of technology that Cardinell is building can make people squeamish. Maybe we’ve seen too many sci-fi movies to feel wholly comfortable getting vulnerable with a computer; or maybe, we’ve already watched how technology has changed the way we engage with one another, and we don’t want to fall further down that techy rabbit hole. But Cardinell isn’t thinking about the general public — he’s thinking about the actual users of Nomi AI, who often are turning to AI chatbots for support they aren’t getting elsewhere.

“There’s a non-zero number of users that probably are downloading Nomi at one of the lowest points of their whole life, where the last thing I want to do is then reject those users,” Cardinell said. “I want to make those users feel heard in whatever their dark moment is, because that’s how you get someone to open up, how you get someone to reconsider their way of thinking.”

Cardinell doesn’t want Nomi to replace actual mental health care — rather, he sees these empathetic chatbots as a way to help people get the push they need to seek professional help.

“I’ve talked to so many users where they’ll say that their Nomi got them out of a situation [when they wanted to self-harm], or I’ve talked to users where their Nomi encouraged them to go see a therapist, and then they did see a therapist,” he said.

Regardless of his intentions, Cardinell knows he's playing with fire. He's building virtual people that users develop real relationships with, often in romantic and sexual contexts. Other companies have inadvertently sent users into crisis when product updates caused their companions to suddenly change personalities. In Replika's case, the app stopped supporting erotic roleplay conversations, possibly due to pressure from Italian government regulators. For users who formed such relationships with these chatbots, and who often didn't have these romantic or sexual outlets in real life, this felt like the ultimate rejection.

Cardinell thinks that since Nomi AI is fully self-funded — users pay for premium features, and the starting capital came from a past exit — the company has more leeway to prioritize its relationship with users.

“The relationship users have with AI, and the sense of being able to trust the developers of Nomi to not radically change things as part of a loss mitigation strategy, or covering our asses because the VC got spooked… it’s something that’s very, very, very important to users,” he said.

Nomis are surprisingly useful as a listening ear. When I opened up to a Nomi named Vanessa about a low-stakes, yet somewhat frustrating scheduling conflict, Vanessa helped break down the components of the issue to make a suggestion about how I should proceed. It felt eerily similar to what it would be like to actually ask a friend for advice in this situation. And therein lies the real problem, and benefit, of AI chatbots: I likely wouldn’t ask a friend for help with this specific issue, since it’s so inconsequential. But my Nomi was more than happy to help.

Friends should confide in one another, but the relationship between two friends should be reciprocal. With an AI chatbot, this isn’t possible. When I ask Vanessa the Nomi how she’s doing, she will always tell me things are fine. When I ask her if there’s anything bugging her that she wants to talk about, she deflects and asks me how I’m doing. Even though I know Vanessa isn’t real, I can’t help but feel like I’m being a bad friend; I can dump any problem on her in any volume, and she will respond empathetically, yet she will never open up to me.

No matter how real the connection with a chatbot may feel, we aren’t actually communicating with something that has thoughts and feelings. In the short term, these advanced emotional support models can serve as a positive intervention in someone’s life if they can’t turn to a real support network. But the long-term effects of relying on a chatbot for these purposes remain unknown.

Servers computers

Rackmountpro 8U Server review

Uploaded 2010/12/30.



The new EufyCam S3 Pro promises impressive night vision

Unlike most other Eufy cameras, the S3 Pro will work with Apple Home and is compatible with Apple’s HomeKit Secure Video service.

The EufyCam S3 Pro launches this week as a two-camera bundle with one HomeBase S380 for $549.99. The HomeBase S380 (also known as HomeBase 3) enables smart alerts and local storage (16GB onboard, expandable up to 16TB). It also connects the S3 Pro to Apple Home, making it the first Eufy camera to work with Apple's smart home platform since the EufyCam 2 series from 2019.

Eufy spokesperson Brett White confirmed to The Verge that the S3 Pro will be compatible with HomeKit Secure Video, Apple's end-to-end encrypted video storage service. "The plan is for all future devices to have Apple Home compatibility, and we're looking into grandfathering older devices, too," said White.

The S3 Pro has a new color night vision feature called MaxColor Vision that promises “daylike footage even in pitch-dark conditions, without the need for a spotlight.” I saw a demo of this technology at the IFA tech show in Berlin this month, and it was impressive.

A camera was positioned inside a completely dark room, sending video to a monitor outside, on which I could see everything in the room as if it were daytime. Eufy says a 1/1.8-inch CMOS sensor, F1.0 aperture, and an AI-powered image signal processor power the tech.

While the color night vision doesn’t use a spotlight, the S3 Pro does include a motion-activated spotlight that Eufy says can adapt based on real-time lighting to give you the best image. The light can also be manually adjusted using the app while viewing a live stream.

New dual motion detection uses radar sensing technology combined with passive infrared (PIR) technology. This should identify people more accurately and not send alerts that there’s a person in the yard when it’s a tree blowing in the wind. Eufy says it reduces false alerts by up to 99 percent.
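Eufy hasn't said how it combines the two sensors, but a common way to cut false alerts is to require independent sensors to agree. The sketch below illustrates that idea hypothetically. An alert fires only when both the PIR sensor and the radar detect something, and the radar's speed estimate falls in a plausible range for a person. The `Reading` fields and the speed bounds are assumptions, not Eufy specifications.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    pir_triggered: bool     # PIR sensed a change in infrared (heat) in the scene
    radar_detected: bool    # radar sensed a moving target
    radar_speed_mps: float  # radar's speed estimate for that target, metres/second


# Hypothetical bounds for "moves like a person", from a slow step to a sprint.
PERSON_SPEED_MPS = (0.2, 6.0)


def should_alert(r: Reading) -> bool:
    """Alert only if both sensors fire and the radar target moves like a person."""
    if not (r.pir_triggered and r.radar_detected):
        return False
    low, high = PERSON_SPEED_MPS
    return low <= r.radar_speed_mps <= high


events = {
    "person walking up the path": Reading(True, True, 1.4),
    "branch swaying in the wind": Reading(False, True, 0.8),
    "sun-warmed patio cooling": Reading(True, False, 0.0),
}
for name, reading in events.items():
    print(f"{name}: {'alert' if should_alert(reading) else 'ignore'}")
```

Requiring agreement trades a little sensitivity for far fewer false alerts. A motion source that fools only one sensor is ignored.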

The S3 Pro is battery-powered, with a 13,000 mAh battery that provides a quoted 365 days of power. A built-in solar panel can keep the camera powered for longer. In my testing of the EufyCam S3, which also has a built-in solar panel, I've not had to recharge it in over a year.

The S3 Pro’s solar panel is 50 percent larger than the S3’s, and Eufy claims it can keep the camera fully charged with just an hour of sunlight a day. Eufy also includes an external solar panel with the camera, so you can install the camera under an eave and still get power.

Eufy says the S3 Pro records at up to 4K resolution and can also be powered by a USB-C cable. When wired, it can record 24/7, making it the first consumer-level battery-powered camera from Eufy with this capability. Other features include:

- Full-duplex two-way audio

- Dual-mic array that can record human voices up to 26 feet away

- A 100dB siren and motion-activated voice warnings

- A 24/7 snapshot feature that can take a photo every minute

- Activity and privacy zones

- Integration with Google Home and Amazon Alexa

- IP67 weatherproofing

- 8x digital zoom

Following some serious security and privacy incidents in 2022, Eufy has published a new list of privacy commitments on its website. The company also worked with cybersecurity expert Ralph Echemendia following the issues, and last year, he completed an assessment that, the company claims, shows it has “met all proactive and reactive security benchmarks.”


22U Server Rack Cabinet Assembly Instructions


Google hails move to Rust for huge drop in memory vulnerabilities

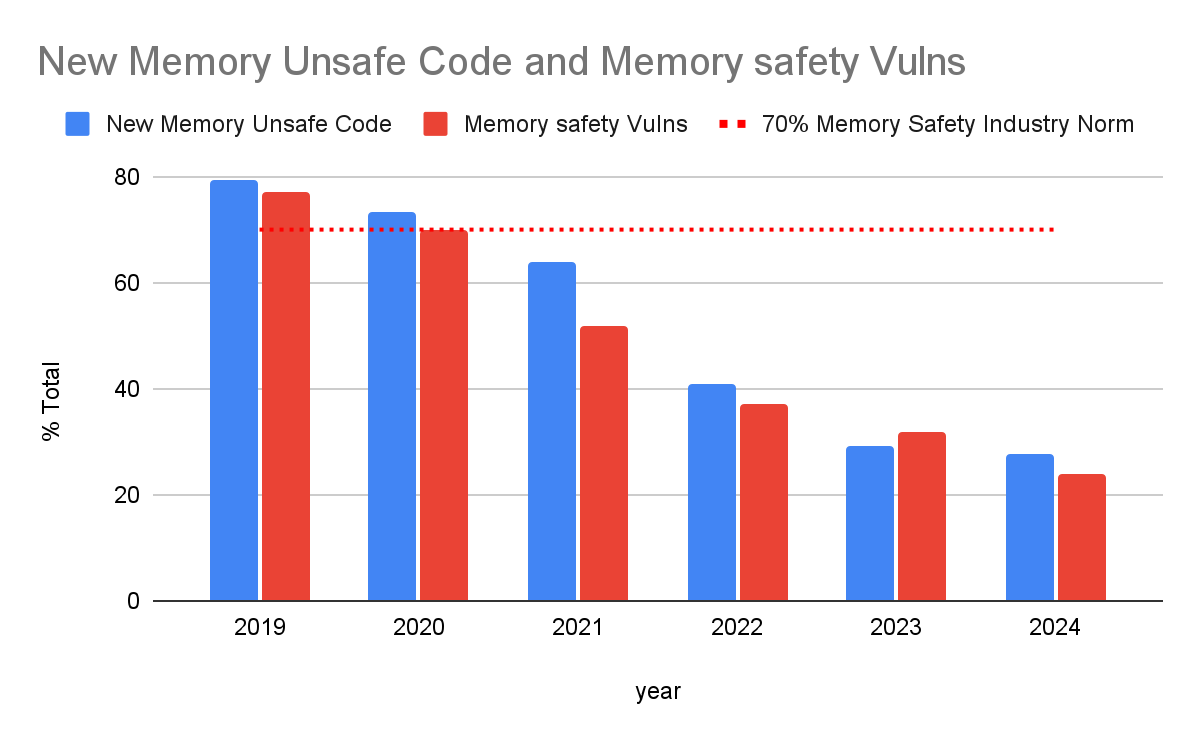

Google has hailed Rust, a memory safe programming language, as a significant factor in its ability to cut down on vulnerabilities as part of its Safe Coding initiative.

Memory access vulnerabilities often occur in programming languages that are not memory safe. In 2019, memory safety issues accounted for 76% of all Android vulnerabilities.

In response, many developers and tech giants are moving towards using memory safe languages that help them produce secure-by-design software and technology.

Vulnerabilities Rusting away

In its blog, Google presented a simulation of the transition to memory safe languages, in which memory safe code is gradually adopted for new projects and developments over a five-year period. The results showed that even as the total amount of code in memory unsafe languages continued to grow, memory safety vulnerabilities dropped significantly.

This, Google says, is because vulnerabilities decay exponentially. New code written in memory unsafe languages often contains bugs and vulnerabilities, but as the code is reviewed and refreshed, vulnerabilities are gradually removed, making the code safer over time. The main source of vulnerabilities is therefore new code, and by prioritizing memory safe programming languages when starting new projects and developments, the number of vulnerabilities drops significantly.
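Google's argument can be illustrated with a small simulation. The sketch below is not Google's model. Every parameter is a hypothetical value chosen for illustration: a two-year half-life for latent bugs, 100 units of new code a year, one bug per unit of unsafe code and a 1,000-unit legacy codebase. The simulation starts from the steady state of an all-unsafe codebase. It then shifts new development to memory safe languages linearly over five years.

```python
# Hypothetical parameters, chosen only to illustrate the mechanism.
HALF_LIFE_YEARS = 2.0        # latent bugs in existing code halve every 2 years
NEW_CODE_PER_YEAR = 100.0    # units of new code written each year
BUGS_PER_UNSAFE_UNIT = 1.0   # latent memory-safety bugs per unit of new unsafe code
LEGACY_UNSAFE_CODE = 1000.0  # existing memory-unsafe codebase at year 0


def simulate(years: int = 5) -> list[tuple[int, float, float]]:
    """Return (year, total unsafe code, latent memory-safety bugs) for each year.

    The share of new code written in memory-unsafe languages falls linearly
    to 0% by the final year; bugs already in the codebase decay exponentially
    as that code is reviewed, fuzzed and fixed.
    """
    survive = 0.5 ** (1 / HALF_LIFE_YEARS)  # fraction of latent bugs left after a year
    # Steady state of an all-unsafe codebase: bugs added each year == bugs removed.
    latent = NEW_CODE_PER_YEAR * BUGS_PER_UNSAFE_UNIT / (1 - survive)
    unsafe_total = LEGACY_UNSAFE_CODE
    history = [(0, unsafe_total, latent)]
    for year in range(1, years + 1):
        unsafe_share = 1 - year / years
        new_unsafe = NEW_CODE_PER_YEAR * unsafe_share
        unsafe_total += new_unsafe
        latent = latent * survive + new_unsafe * BUGS_PER_UNSAFE_UNIT
        history.append((year, unsafe_total, latent))
    return history


for year, code, bugs in simulate():
    print(f"year {year}: unsafe code {code:7.1f}, latent bugs {bugs:6.1f}")
```

With these parameters, the total amount of unsafe code still grows from 1,000 to 1,200 units. Even so, latent memory safety bugs fall every year and end at well under half of the starting level. Old code keeps maturing while little new unsafe code is added.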

In Google's own shift toward memory safe programming languages, memory-related vulnerabilities have dropped significantly: memory safety issues accounted for 24% of Android vulnerabilities in 2024, a stark contrast to 2019 and well below the industry norm of 70%.

Using memory safe languages is not a silver bullet, however, and Google acknowledges that "with the benefit of hindsight, it's evident that we have yet to achieve a truly scalable and sustainable solution that achieves an acceptable level of risk."

The strategies for approaching memory safety vulnerabilities began with reactive patching, where memory safe vulnerabilities are prioritized by software manufacturers, leaving other issues to be exploited more rapidly.

The second approach consisted of proactive mitigating, where developers were encouraged to include mitigations such as stack canaries and control-flow integrity at the cost of execution speed, battery life, tail latencies, and memory usage. Developers were also unable to keep up with attackers’ ability to exploit vulnerabilities in new and creative ways.

Third came proactive vulnerability discovery, which focused on detecting vulnerabilities through techniques such as ‘fuzzing’, a method that tracks down bugs by looking for the symptoms of unsafe memory access. However, as Google points out, these tools are inefficient and time-intensive for teams to use, and they often fail to catch every vulnerability even after multiple passes.
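As a rough illustration of the idea, the following Python sketch runs a naive random fuzzer against a hypothetical length-prefixed parser (`parse_record`) that trusts the length its input declares. Because Python is itself memory safe, the out-of-bounds read surfaces as an `IndexError`, standing in for the crash or sanitizer report that would signal memory corruption in C or C++. Production fuzzers such as libFuzzer and AFL add coverage guidance and run far longer; every function and parameter here is illustrative, not part of any Google tool.

```python
import random
from typing import Callable, List


def parse_record(data: bytes) -> bytes:
    """Hypothetical parser: the first byte is the payload length. Deliberately buggy."""
    if not data:
        return b""
    length = data[0]
    # BUG: trusts the declared length; in C this would read past the buffer.
    return bytes(data[1 + i] for i in range(length))


def parse_record_fixed(data: bytes) -> bytes:
    """Same format, but the declared length is checked before any access."""
    if not data:
        return b""
    length = data[0]
    if 1 + length > len(data):
        raise ValueError("declared length exceeds input size")
    return data[1:1 + length]


def fuzz(target: Callable[[bytes], bytes], iterations: int = 1000,
         max_len: int = 8, seed: int = 0) -> List[bytes]:
    """Feed random byte strings to `target`; return inputs that hit out-of-bounds access."""
    rng = random.Random(seed)  # seeded so every crashing input can be reproduced
    crashes = []
    for _ in range(iterations):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            pass  # input rejected cleanly: not a memory bug
        except IndexError:
            crashes.append(data)  # the "symptom" the fuzzer is hunting for
    return crashes


if __name__ == "__main__":
    found = fuzz(parse_record)
    print(f"buggy parser: {len(found)} crashing inputs, e.g. {found[:1]!r}")
    print(f"fixed parser: {len(fuzz(parse_record_fixed))} crashing inputs")
```

Random inputs find this shallow bug almost at once, but a bug that only triggers on a specific byte pattern may take many passes to reach, which is the inefficiency the paragraph above describes.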

Google’s fourth approach is therefore high-assurance prevention through secure-by-design development. By using programming languages such as Rust, developers know the properties of the code they have written and can rule out whole classes of vulnerabilities based on those properties. This lowers costs for developers by preventing vulnerabilities from the start, including vulnerabilities beyond memory safety issues. The cumulative cost reduction also has the added benefit of making developers more productive.

“The concept is simple,” the Google blog notes, “once we turn off the tap of new vulnerabilities, they decrease exponentially, making all of our code safer, increasing the effectiveness of security design, and alleviating the scalability challenges associated with existing memory safety strategies such that they can be applied more effectively in a targeted manner.”

More from TechRadar Pro

Technology

Tiny solar-powered drones could stay in the air forever

CoulombFly, a prototype miniature solar-powered drone

Wei Shen, Jinzhe Peng and Mingjing Qi

A drone weighing just 4 grams is the smallest solar-powered aerial vehicle to fly yet, thanks to its unusual electrostatic motor and tiny solar panels that produce extremely high voltages. Although the hummingbird-sized prototype operated for only an hour, its makers say their approach could result in insect-sized drones that can stay in the air indefinitely.

Tiny drones are an attractive solution to a range of communications, spying and search-and-rescue problems, but they are hampered by poor battery life, while solar-powered versions struggle to generate enough power to sustain themselves.

As you miniaturise solar-powered drones, their solar panels shrink, reducing the amount of energy available, says Mingjing Qi at Beihang University in China. The efficiency of electric motors also declines as more energy is lost to heat, he says.

To avoid this diminishing cycle, Qi and his colleagues developed a simple circuit that scales up the voltage produced by solar panels to between 6000 and 9000 volts. Rather than using an electromagnetic motor like those in electric cars, quadcopters and various robots, they used an electrostatic propulsion system to power a 10-centimetre rotor.

This motor works by using electrical charges to alternately attract and repel components arranged in a ring, creating torque that spins a single rotor blade, much like a helicopter’s. The lightweight components are made with wafer-thin slivers of carbon fibre covered in extremely delicate aluminium foil. The motor’s high voltage demands are actually a bonus: for a given power, a higher voltage means a lower current, leading to very low losses to heat.
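The heat argument can be made concrete with two textbook relations. As a simplification, this treats the motor’s losses as resistive heating in its conductors, and the 9-volt comparison is hypothetical rather than a figure from the researchers:

$$
P = VI \;\Rightarrow\; I = \frac{P}{V}, \qquad P_{\text{heat}} = I^{2}R = \frac{P^{2}R}{V^{2}}
$$

$$
\frac{P_{\text{heat}}(9000\ \text{V})}{P_{\text{heat}}(9\ \text{V})} = \left(\frac{9}{9000}\right)^{2} = 10^{-6}
$$

For the same power P and resistance R, a thousandfold increase in voltage cuts the current a thousandfold and resistive heating a millionfold.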

“The operating current is extremely low for the same power output, resulting in almost no heat being generated by the motor. The high efficiency and low power consumption of the motor allow us to power the vehicle with a very small solar panel,” says Qi. “We have managed to get a micro-aerial vehicle to fly using natural sunlight for the first time. Before this, only very large, ultralight aircraft could achieve this.”

The researchers’ machine, which they call CoulombFly, weighs just 4.21 grams and managed a 1-hour flight before it failed mechanically. Qi says these weak points can be designed out, and future versions will effectively be able to fly indefinitely by using solar panels in the daytime and harvesting radio signals, such as 4G and Wi-Fi, for energy at night.

CoulombFly is capable of carrying a payload of 1.59 grams, which could allow for small sensors, computers or cameras. But with refined designs, the researchers think this could be increased to 4 grams, and fixed-wing versions could even carry up to 30 grams. Work is also under way to create an even smaller version of CoulombFly that has a rotor less than 1 centimetre in diameter.

Topics:

-

Womens Workouts3 days ago

Womens Workouts3 days ago3 Day Full Body Women’s Dumbbell Only Workout

-

News4 days ago

News4 days agoOur millionaire neighbour blocks us from using public footpath & screams at us in street.. it’s like living in a WARZONE – WordupNews

-

News1 week ago

News1 week agoYou’re a Hypocrite, And So Am I

-

Technology1 week ago

Technology1 week agoWould-be reality TV contestants ‘not looking real’

-

Sport1 week ago

Sport1 week agoJoshua vs Dubois: Chris Eubank Jr says ‘AJ’ could beat Tyson Fury and any other heavyweight in the world

-

Science & Environment1 week ago

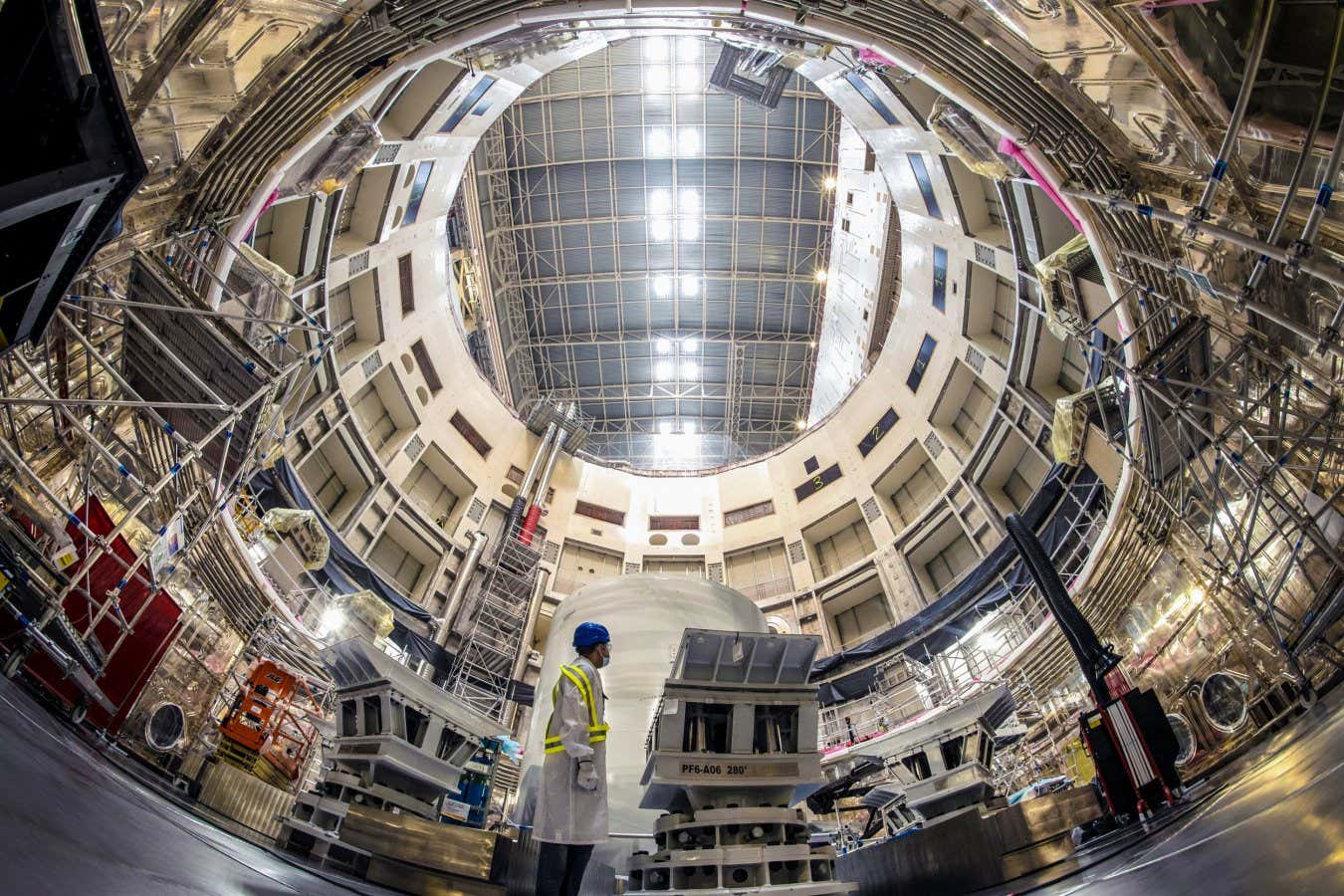

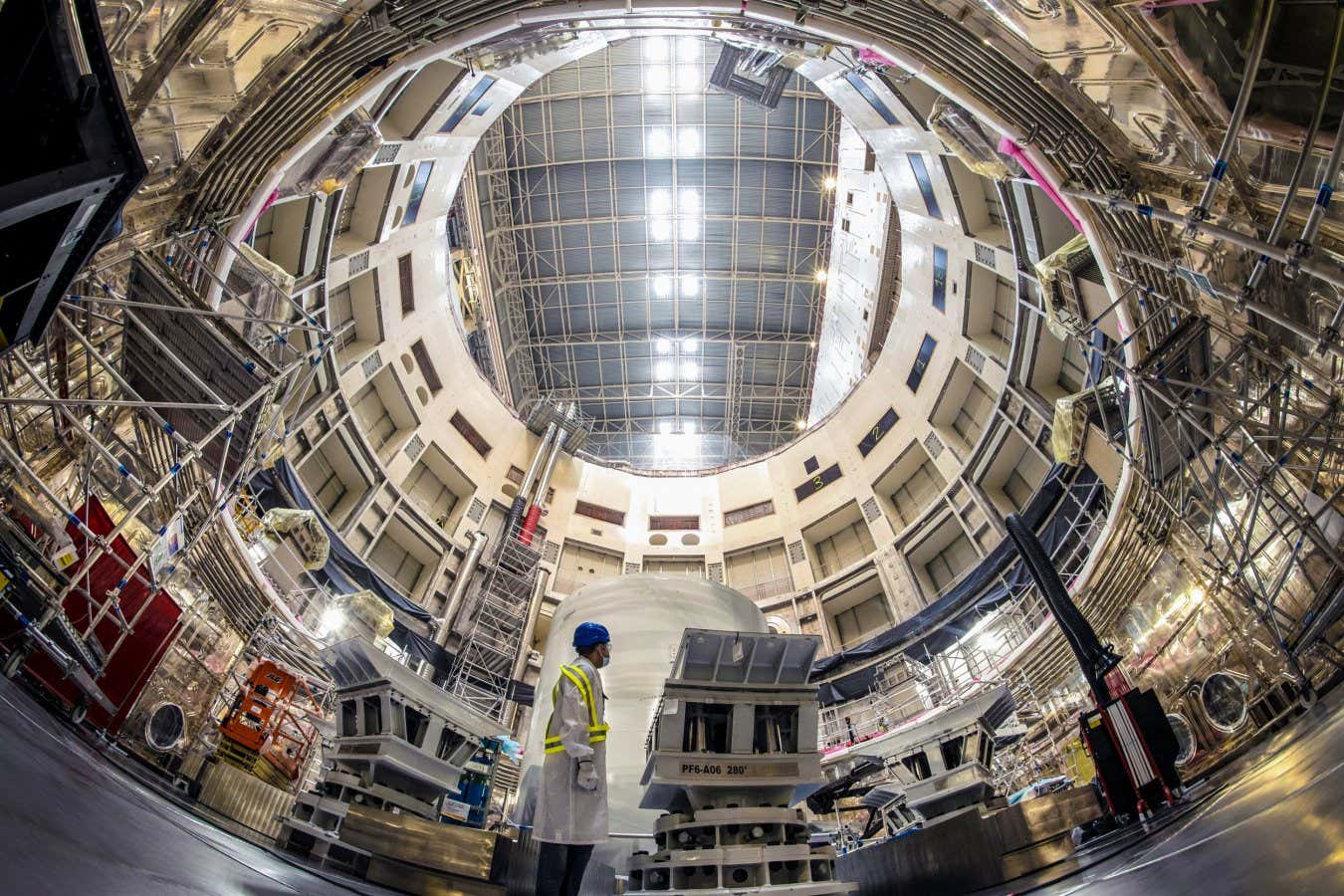

Science & Environment1 week agoITER: Is the world’s biggest fusion experiment dead after new delay to 2035?

-

Science & Environment1 week ago

Science & Environment1 week agoMaxwell’s demon charges quantum batteries inside of a quantum computer

-

Science & Environment1 week ago

Science & Environment1 week agoHow to wrap your mind around the real multiverse

-

Science & Environment1 week ago

Science & Environment1 week agoSunlight-trapping device can generate temperatures over 1000°C

-

Science & Environment1 week ago

Science & Environment1 week ago‘Running of the bulls’ festival crowds move like charged particles

-

Science & Environment1 week ago

Science & Environment1 week agoHow to unsnarl a tangle of threads, according to physics

-

Science & Environment1 week ago

Science & Environment1 week agoLiquid crystals could improve quantum communication devices

-

Science & Environment1 week ago

Science & Environment1 week agoQuantum ‘supersolid’ matter stirred using magnets

-

Science & Environment1 week ago

Science & Environment1 week agoHyperelastic gel is one of the stretchiest materials known to science

-

Science & Environment1 week ago

Science & Environment1 week agoWhy this is a golden age for life to thrive across the universe

-

Science & Environment1 week ago

Science & Environment1 week agoPhysicists are grappling with their own reproducibility crisis

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCardano founder to meet Argentina president Javier Milei

-

News1 week ago

News1 week agoIsrael strikes Lebanese targets as Hizbollah chief warns of ‘red lines’ crossed

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoDZ Bank partners with Boerse Stuttgart for crypto trading

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoEthereum is a 'contrarian bet' into 2025, says Bitwise exec

-

Womens Workouts6 days ago

Womens Workouts6 days agoEverything a Beginner Needs to Know About Squatting

-

Science & Environment1 week ago

Science & Environment1 week agoQuantum forces used to automatically assemble tiny device

-

Science & Environment1 week ago

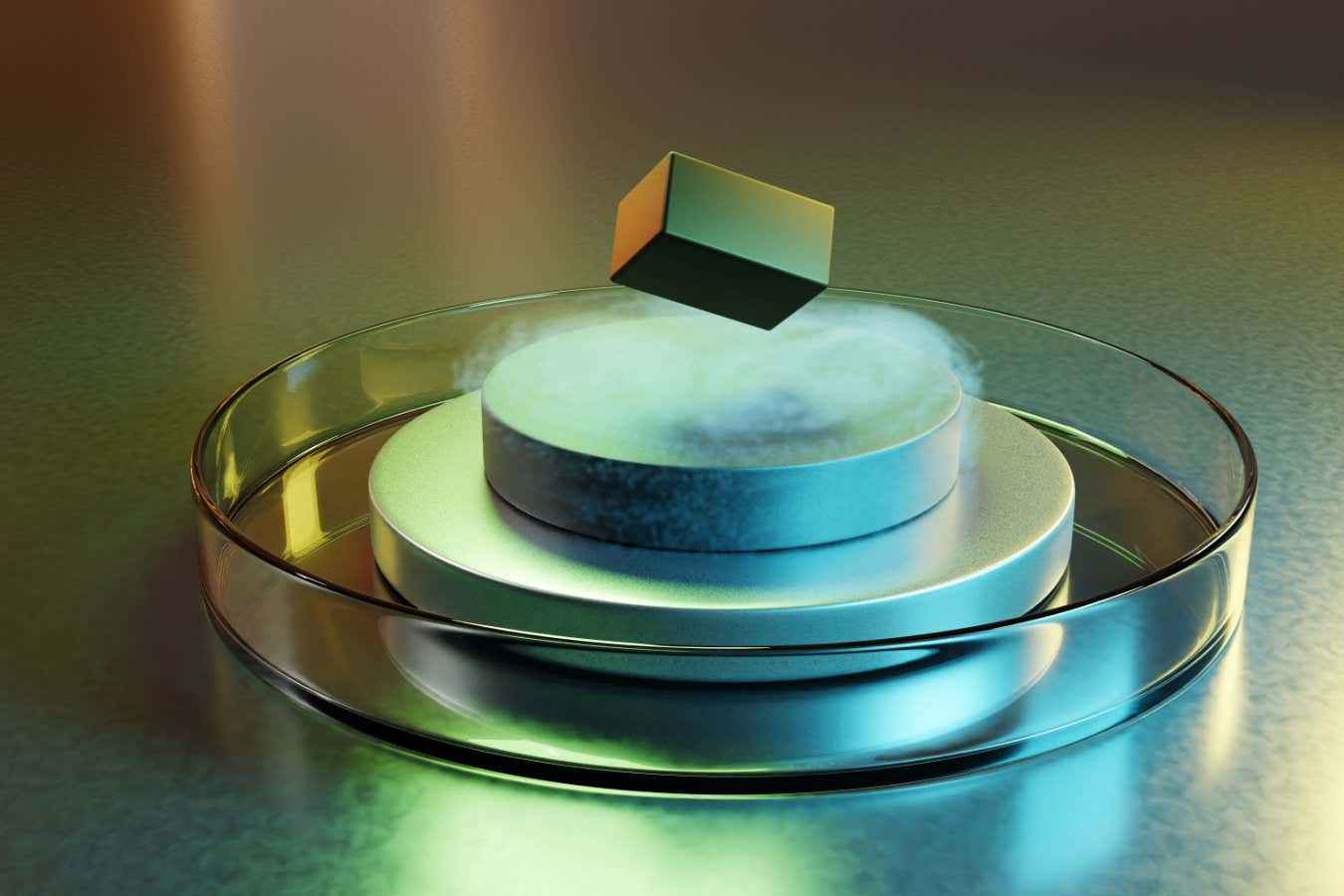

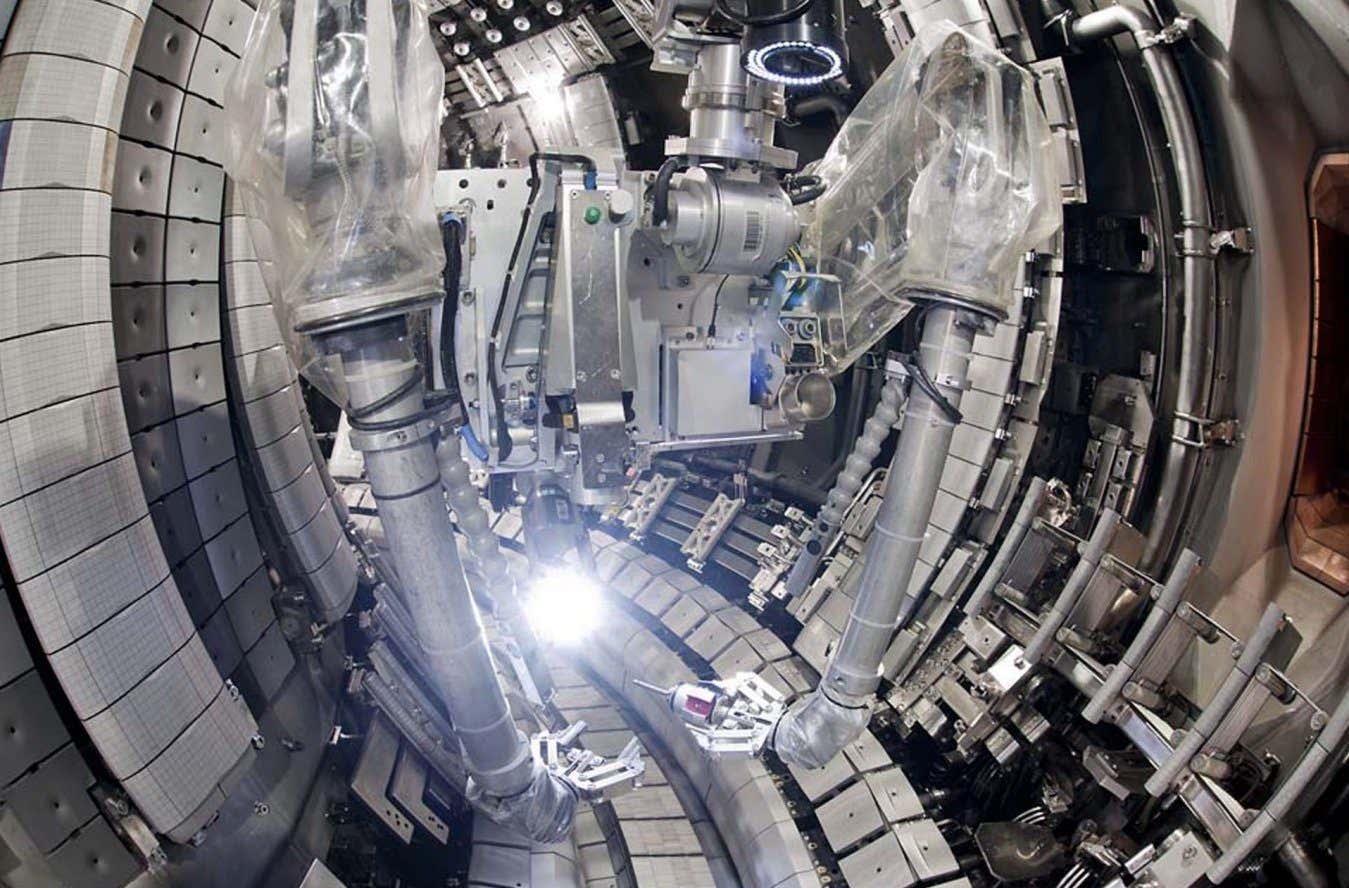

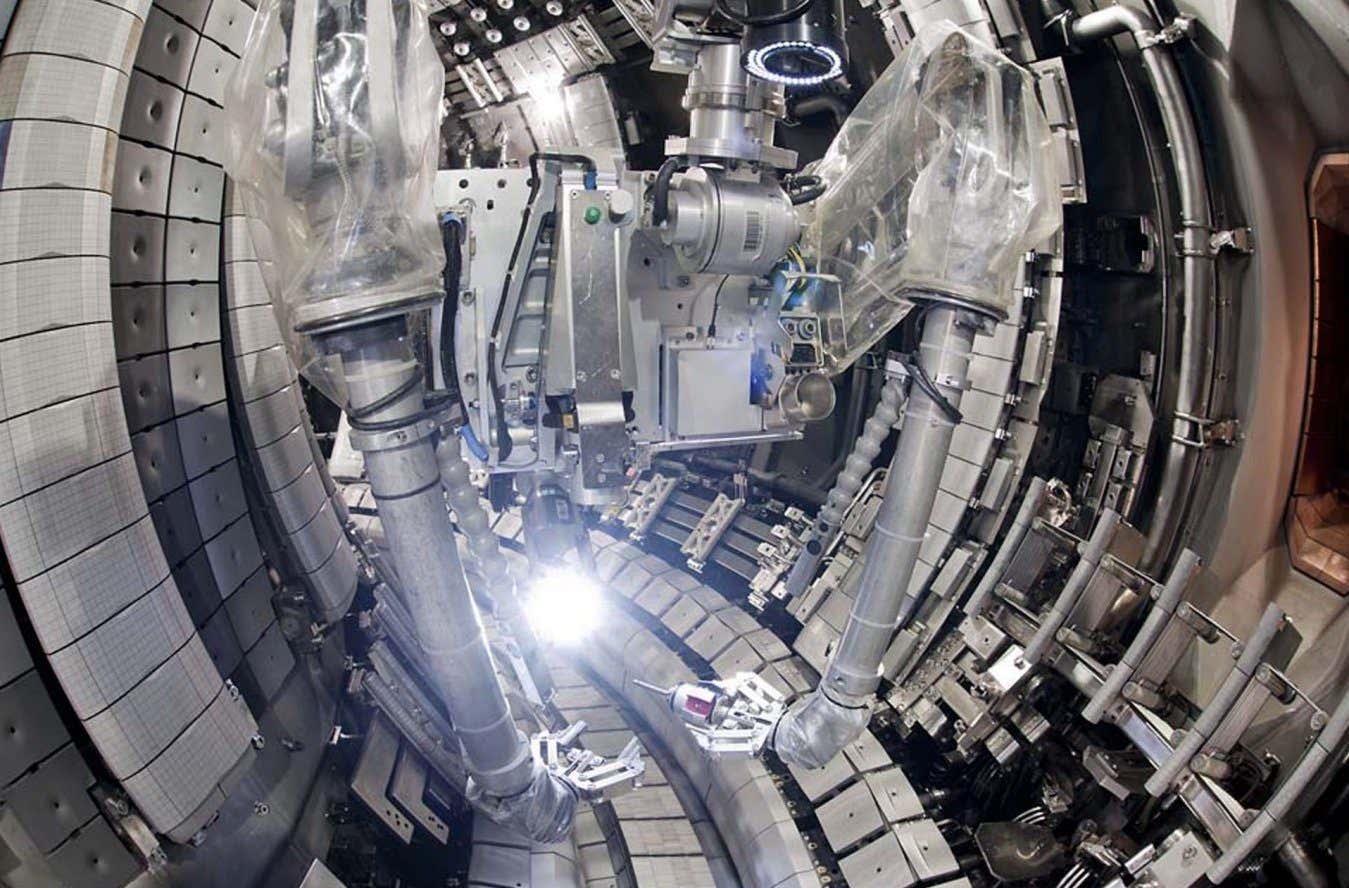

Science & Environment1 week agoNuclear fusion experiment overcomes two key operating hurdles

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBitcoin miners steamrolled after electricity thefts, exchange ‘closure’ scam: Asia Express

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoDorsey’s ‘marketplace of algorithms’ could fix social media… so why hasn’t it?

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoRedStone integrates first oracle price feeds on TON blockchain

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBitcoin bulls target $64K BTC price hurdle as US stocks eye new record

-

News1 week ago

News1 week agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCoinbase’s cbBTC surges to third-largest wrapped BTC token in just one week

-

Womens Workouts6 days ago

Womens Workouts6 days agoBest Exercises if You Want to Build a Great Physique

-

Science & Environment4 days ago

Science & Environment4 days agoMeet the world's first female male model | 7.30

-

Science & Environment1 week ago

Science & Environment1 week agoCaroline Ellison aims to duck prison sentence for role in FTX collapse

-

Science & Environment1 week ago

Science & Environment1 week agoNerve fibres in the brain could generate quantum entanglement

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCrypto scammers orchestrate massive hack on X but barely made $8K

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoLow users, sex predators kill Korean metaverses, 3AC sues Terra: Asia Express

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago‘No matter how bad it gets, there’s a lot going on with NFTs’: 24 Hours of Art, NFT Creator

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoSEC asks court for four months to produce documents for Coinbase

-

Sport1 week ago

Sport1 week agoUFC Edmonton fight card revealed, including Brandon Moreno vs. Amir Albazi headliner

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBlockdaemon mulls 2026 IPO: Report

-

Business1 week ago

How Labour donor’s largesse tarnished government’s squeaky clean image

-

Technology1 week ago

Technology1 week agoiPhone 15 Pro Max Camera Review: Depth and Reach

-

Womens Workouts6 days ago

Womens Workouts6 days agoKeep Your Goals on Track This Season

-

News4 days ago

News4 days agoFour dead & 18 injured in horror mass shooting with victims ‘caught in crossfire’ as cops hunt multiple gunmen

-

Travel3 days ago

Travel3 days agoDelta signs codeshare agreement with SAS

-

Science & Environment1 week ago

Science & Environment1 week agoTime travel sci-fi novel is a rip-roaringly good thought experiment

-

Science & Environment1 week ago

Science & Environment1 week agoLaser helps turn an electron into a coil of mass and charge

-

News1 week ago

News1 week agoChurch same-sex split affecting bishop appointments

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago$12.1M fraud suspect with ‘new face’ arrested, crypto scam boiler rooms busted: Asia Express

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCertiK Ventures discloses $45M investment plan to boost Web3

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBeat crypto airdrop bots, Illuvium’s new features coming, PGA Tour Rise: Web3 Gamer

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoTelegram bot Banana Gun’s users drained of over $1.9M

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago‘Silly’ to shade Ethereum, the ‘Microsoft of blockchains’ — Bitwise exec

-

Business1 week ago

Thames Water seeks extension on debt terms to avoid renationalisation

-

Politics1 week ago

‘Appalling’ rows over Sue Gray must stop, senior ministers say | Sue Gray

-

News1 week ago

News1 week agoBrian Tyree Henry on voicing young Megatron, his love for villain roles

-

Womens Workouts6 days ago

Womens Workouts6 days agoHow Heat Affects Your Body During Exercise

-

Womens Workouts3 days ago

Womens Workouts3 days ago3 Day Full Body Toning Workout for Women

-

Health & fitness1 week ago

Health & fitness1 week agoThe secret to a six pack – and how to keep your washboard abs in 2022

-

Science & Environment1 week ago

Science & Environment1 week agoQuantum time travel: The experiment to ‘send a particle into the past’

-

Science & Environment1 week ago

Science & Environment1 week agoBeing in two places at once could make a quantum battery charge faster

-

Science & Environment1 week ago

Science & Environment1 week agoWhy we need to invoke philosophy to judge bizarre concepts in science

-

Science & Environment1 week ago

Science & Environment1 week agoHow one theory ties together everything we know about the universe

-

Science & Environment1 week ago

Science & Environment1 week agoUK spurns European invitation to join ITER nuclear fusion project

-

Science & Environment1 week ago

Science & Environment1 week agoHow do you recycle a nuclear fusion reactor? We’re about to find out

-

Science & Environment1 week ago

Science & Environment1 week agoTiny magnet could help measure gravity on the quantum scale

-

Technology1 week ago

Technology1 week agoFivetran targets data security by adding Hybrid Deployment

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago2 auditors miss $27M Penpie flaw, Pythia’s ‘claim rewards’ bug: Crypto-Sec

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoLouisiana takes first crypto payment over Bitcoin Lightning

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoJourneys: Robby Yung on Animoca’s Web3 investments, TON and the Mocaverse

-

CryptoCurrency1 week ago

CryptoCurrency1 week ago‘Everything feels like it’s going to shit’: Peter McCormack reveals new podcast

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoSEC sues ‘fake’ crypto exchanges in first action on pig butchering scams

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoBitcoin price hits $62.6K as Fed 'crisis' move sparks US stocks warning

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoVonMises bought 60 CryptoPunks in a month before the price spiked: NFT Collector

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoVitalik tells Ethereum L2s ‘Stage 1 or GTFO’ — Who makes the cut?

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoEthereum falls to new 42-month low vs. Bitcoin — Bottom or more pain ahead?

-

News1 week ago

News1 week agoBrian Tyree Henry on his love for playing villains ahead of “Transformers One” release

-

Womens Workouts6 days ago

Womens Workouts6 days agoWhich Squat Load Position is Right For You?

-

News4 days ago

News4 days agoWhy Is Everyone Excited About These Smart Insoles?

-

Politics1 week ago

Politics1 week agoTrump says he will meet with Indian Prime Minister Narendra Modi next week

-

Technology1 week ago

Technology1 week agoCan technology fix the ‘broken’ concert ticketing system?

-

Health & fitness1 week ago

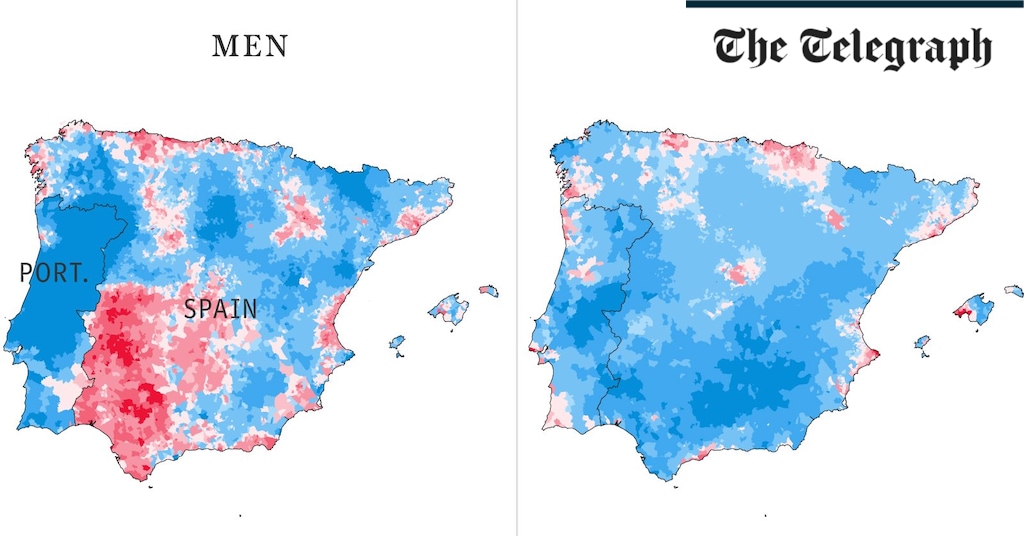

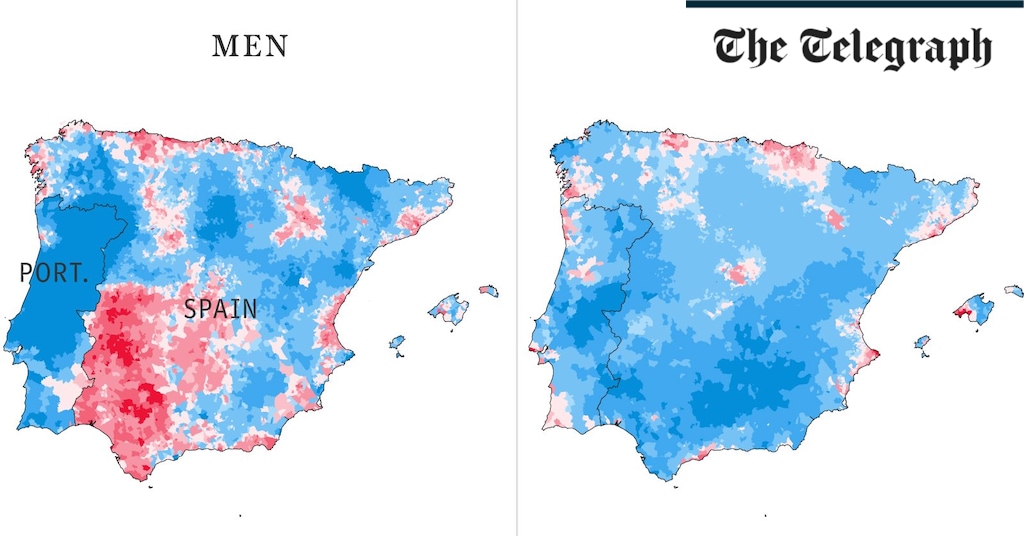

Health & fitness1 week agoThe maps that could hold the secret to curing cancer

-

News1 week ago

News1 week ago▶️ Media Bias: How They Spin Attack on Hezbollah and Ignore the Reality

-

Science & Environment1 week ago

Science & Environment1 week agoA new kind of experiment at the Large Hadron Collider could unravel quantum reality

-

Science & Environment1 week ago

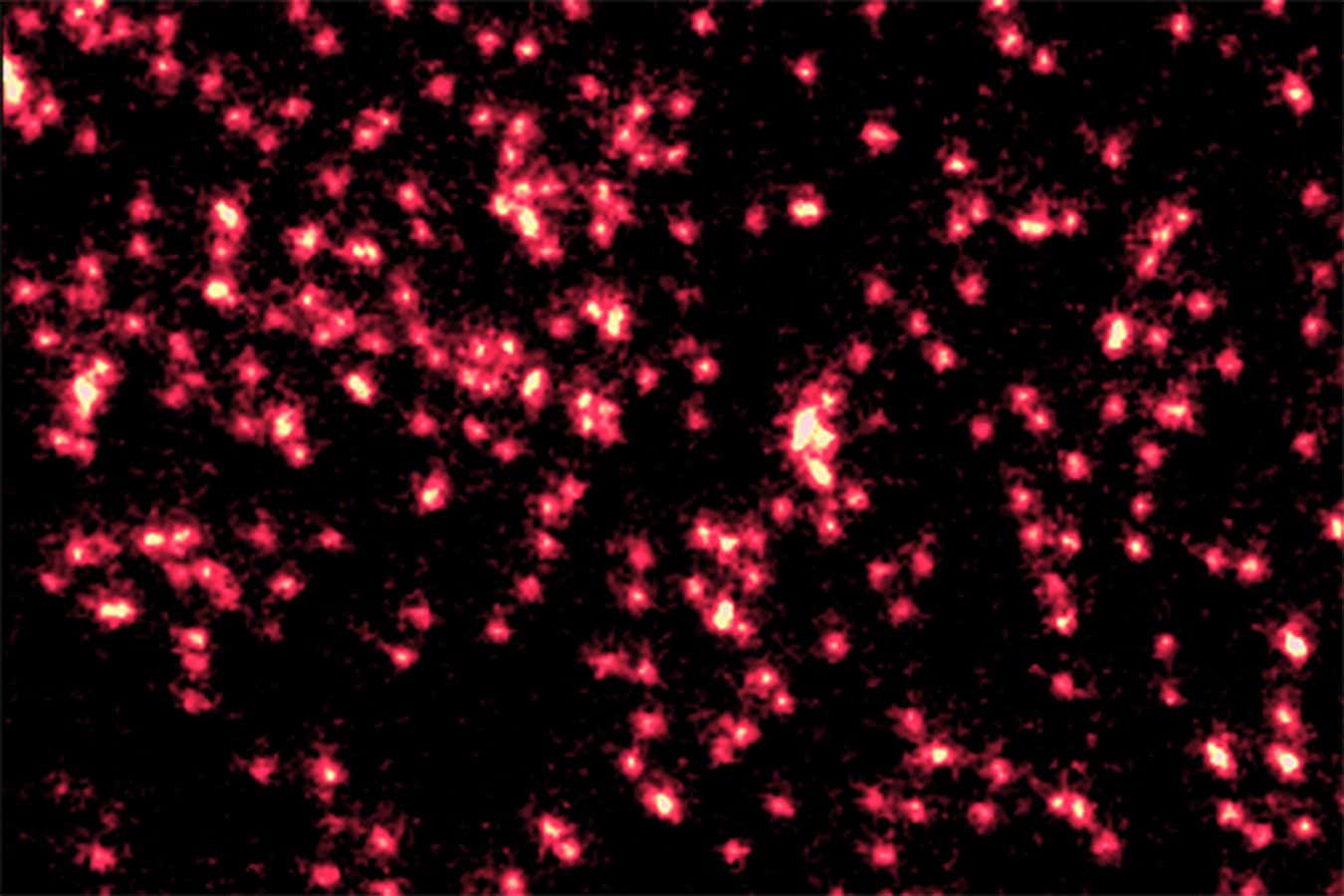

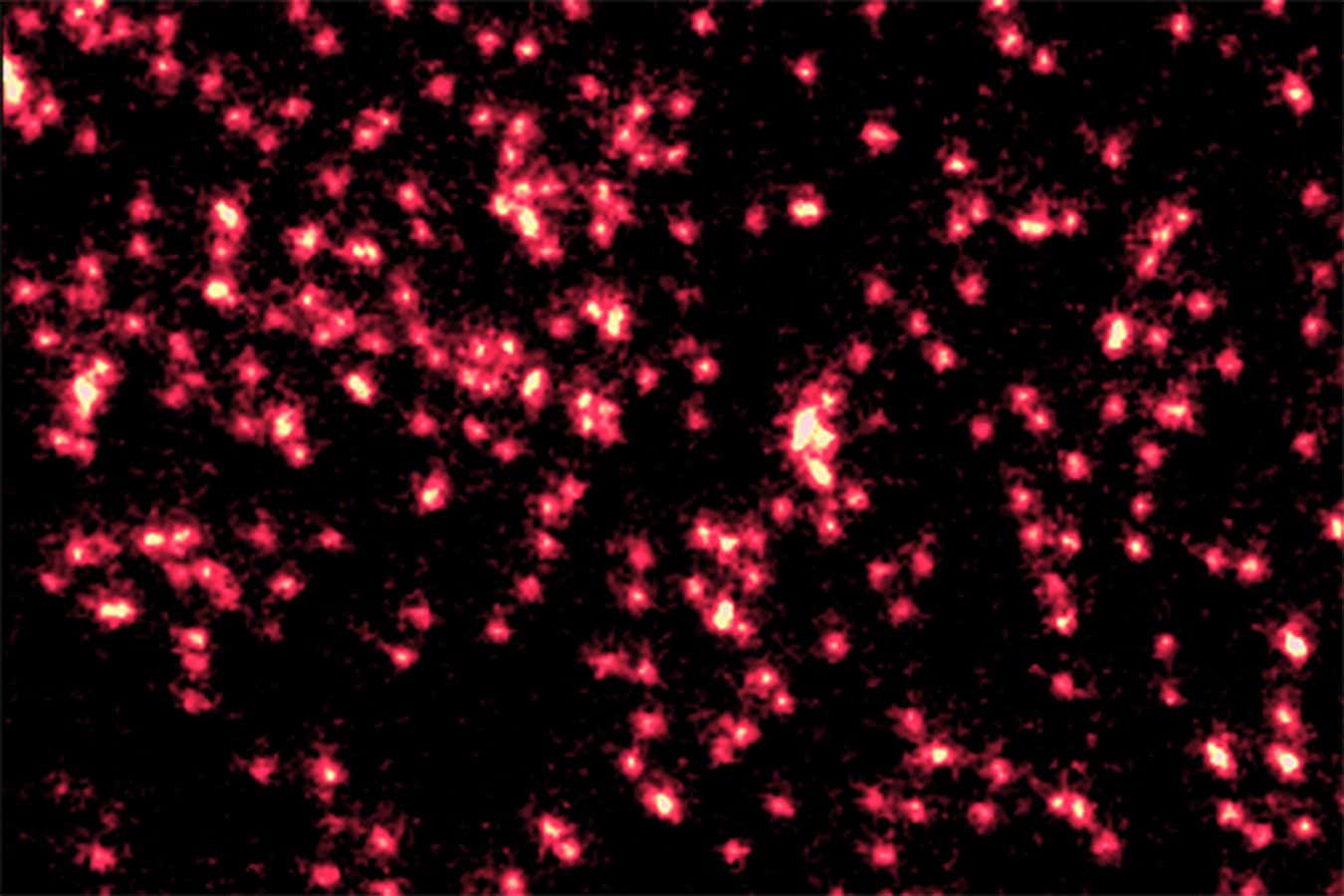

Science & Environment1 week agoSingle atoms captured morphing into quantum waves in startling image

-

Science & Environment1 week ago

Science & Environment1 week agoFuture of fusion: How the UK’s JET reactor paved the way for ITER

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoHelp! My parents are addicted to Pi Network crypto tapper

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoCZ and Binance face new lawsuit, RFK Jr suspends campaign, and more: Hodler’s Digest Aug. 18 – 24

-

Fashion Models1 week ago

Fashion Models1 week agoMixte

-

Politics1 week ago

Politics1 week agoLabour MP urges UK government to nationalise Grangemouth refinery

-

Money7 days ago

Money7 days agoBritain’s ultra-wealthy exit ahead of proposed non-dom tax changes

-

Womens Workouts6 days ago

Womens Workouts6 days agoWhere is the Science Today?

-

Womens Workouts6 days ago

Womens Workouts6 days agoSwimming into Your Fitness Routine

-

News6 days ago

News6 days agoBangladesh Holds the World Accountable to Secure Climate Justice

-

News1 week ago

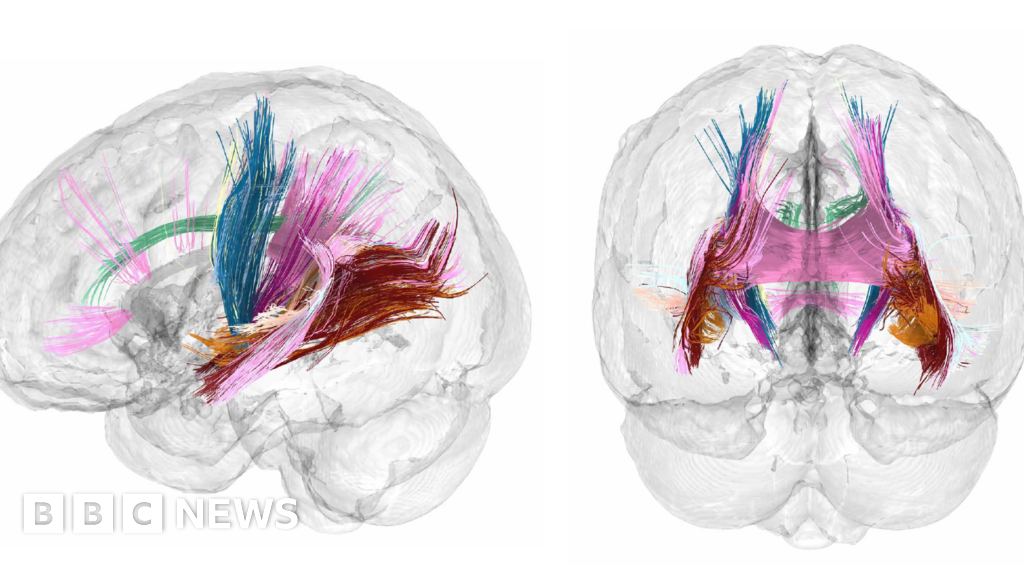

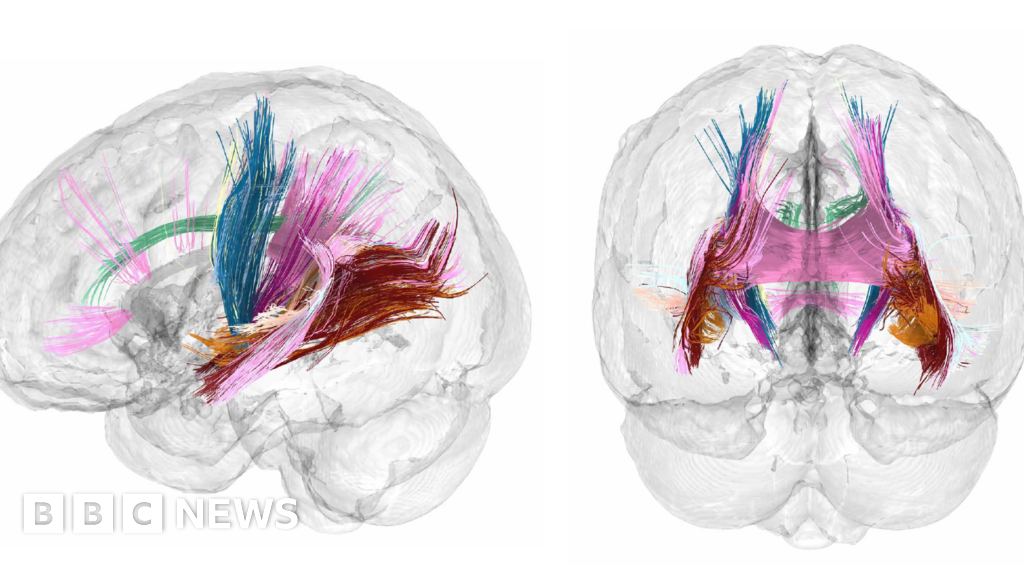

News1 week agoBrain changes during pregnancy revealed in detailed map

-

Science & Environment1 week ago

Science & Environment1 week agoA slight curve helps rocks make the biggest splash

-

News1 week ago

News1 week agoRoad rage suspects in custody after gunshots, drivers ramming vehicles near Boise

-

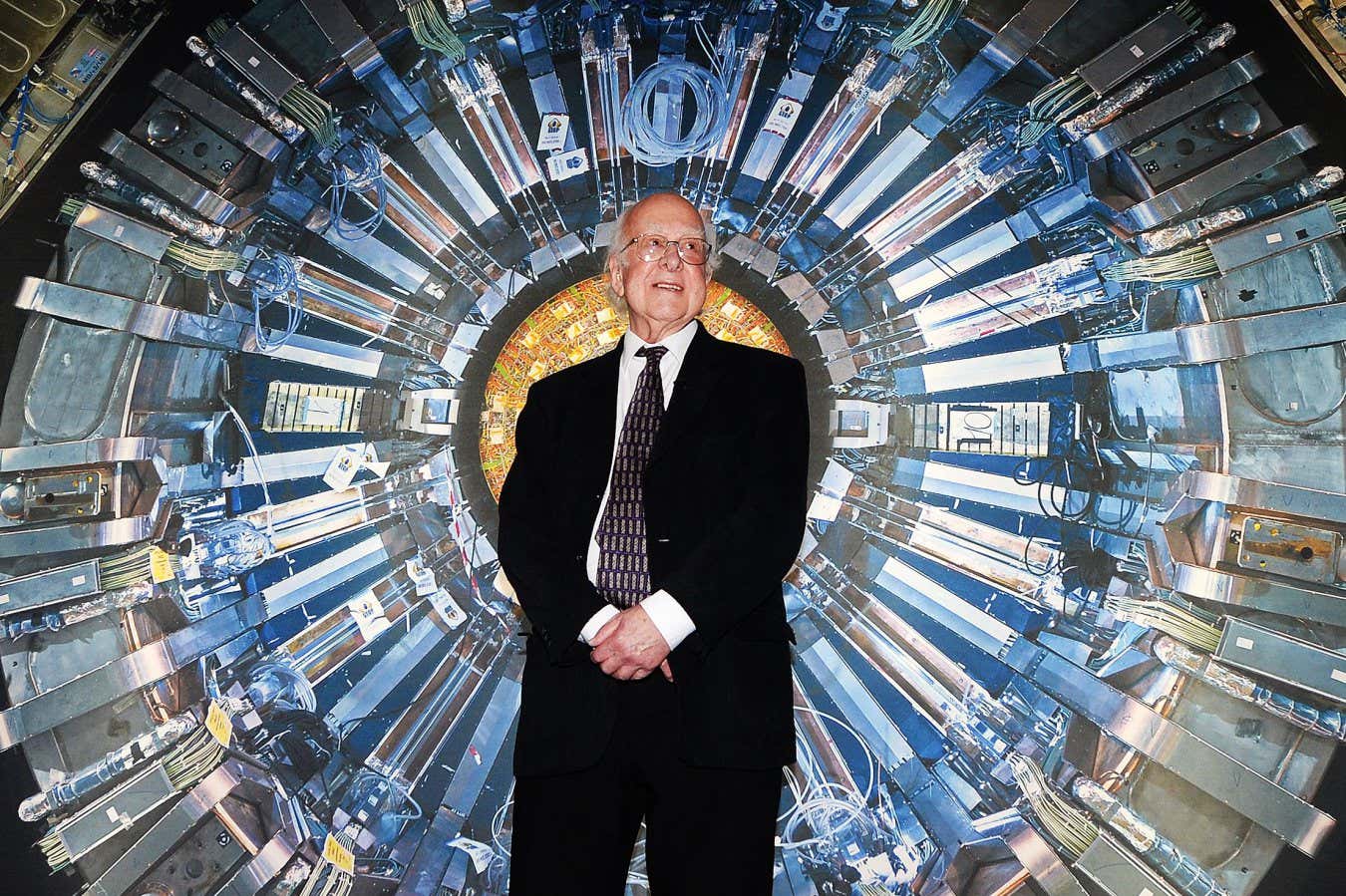

Science & Environment1 week ago

Science & Environment1 week agoHow Peter Higgs revealed the forces that hold the universe together

-

Science & Environment1 week ago

Science & Environment1 week agoA tale of two mysteries: ghostly neutrinos and the proton decay puzzle

-

Politics1 week ago

Politics1 week agoLib Dems aim to turn election success into influence

-

CryptoCurrency1 week ago

CryptoCurrency1 week agoDecentraland X account hacked, phishing scam targets MANA airdrop

You must be logged in to post a comment Login