I’m hoping DJI’s affordable new FPV goggles will be the missing puzzle piece — a way to cheaply buy the “It feels like I’m flying!” experience I had with the DJI Avata for maybe $400 or $500 tops, rather than the current $800 to $1,000 you might have to pay.

Technology

You can now try out Microsoft’s new AI-powered Xbox chatbot

Microsoft has been testing a new AI-powered Xbox chatbot, and now Xbox Insiders can try it out for the first time. I exclusively revealed the existence of this new “Xbox Support Virtual Agent” earlier this year, and Microsoft now says it’s designed “to help Xbox players more efficiently solve their support-related gaming issues.”

Xbox Insiders in the US can start trying out this new Xbox AI chatbot at support.xbox.com, and it will answer questions around Xbox console and game support issues. “We value the feedback from Xbox Insiders for this preview experience and any feedback received will be used to improve the Support Virtual Agent,” says Megha Dudani, senior product manager lead at Xbox.

This Xbox chatbot will appear as an AI character that animates when responding, or as a colorful Xbox orb. It’s part of a larger effort inside Microsoft to apply AI to its Xbox platform and services, ahead of some AI-powered features coming to Xbox consoles soon.

Unlike other parts of Microsoft, Xbox has been cautious in how it approaches AI features — despite a clear mandate from CEO Satya Nadella to focus all of Microsoft’s businesses around AI. Microsoft has largely focused on the developer side of AI tools so far, but that’s clearly changing with the introduction of a support chatbot.

I reported earlier this year that Microsoft is also working on bringing AI features to game content creation, game operations, and its Xbox platform and devices. This includes experimenting with AI-generated art and assets for games, AI game testing, and the generative AI NPCs that Microsoft has already partnered with Inworld to develop.

Technology

AIRIS is a learning AI teaching itself how to play Minecraft


A new learning AI has been left to its own devices within an instance of Minecraft as the artificial intelligence learns how to play the game through doing, say AI development company SingularityNET and the Artificial Superintelligence Alliance (ASI Alliance). The AI, named AIRIS (Autonomous Intelligent Reinforcement Inferred Symbolism), is essentially starting from nothing inside Minecraft to learn how to play the game using nothing but the game’s feedback loop to teach it.

AI has been set loose to learn a game before, but often in more linear 2D spaces. With Minecraft, AIRIS can enter a more complex 3D world and slowly start navigating and exploring to see what it can do and, more importantly, whether the AI can understand game design goals without necessarily being told them. How does it react to changes in the environment? Can it figure out different paths to the same place? Can it play the game with anything resembling the creativity that human players employ in Minecraft?

VentureBeat reached out to SingularityNET and ASI Alliance to ask why they chose Minecraft specifically.

“Early versions of AIRIS were tested in simple 2D grid world puzzle game environments,” a representative from the company replied. “We needed to test the system in a 3D environment that was more complex and open ended. Minecraft fits that description nicely, is a very popular game, and has all of the technical requirements needed to plug an AI into it. Minecraft is also already used as a Reinforcement Learning benchmark. That will allow us to directly compare the results of AIRIS to existing algorithms.”

They also provided a more in-depth explanation of how it works.

“The agent is given two types of input from the environment and a list of actions that it can perform. The first type of input is a 5 x 5 x 5 3D grid of the block names that surround the agent. That’s how the agent “sees” the world. The second type of input is the current coordinates of the agent in the world. That gives us the option to give the agent a location that we want it to reach. The list of actions in this first version are to move or jump in one of 8 directions (the four cardinal directions and diagonally) for a total of 16 actions. Future versions will have many more actions as we expand the agent’s capabilities to include mining, placing blocks, collecting resources, fighting mobs, and crafting.

“The agent begins in ‘Free Roam’ mode and seeks to explore the world around it. Building an internal map of where it has been that can be viewed with the included visualization tool. It learns how to navigate the world and as it encounters obstacles like trees, mountains, caves, etc. it learns and adapts to them. For example, if it falls into a deep cave, it will explore its way out. Its goal is to fill in any empty space in its internal map. So it seeks out ways to get to places it hasn’t yet seen.

“If we give the agent a set of coordinates, it will stop freely exploring and navigate its way to wherever we want it to go. Exploring its way through areas that it has never seen. That could be on top of a mountain, deep in a cave, or in the middle of an ocean. Once it reaches its destination, we can give it another set of coordinates or return it to free roam to explore from there.

“The free exploration and ability to navigate through unknown areas is what sets AIRIS apart from traditional Reinforcement Learning. These are tasks that RL is not capable of doing regardless of how many millions of training episodes or how much compute you give it.”
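The inputs and actions the representative describes can be sketched in a few lines of Python. This is only an illustration of the description above, not AIRIS’s actual code: the `world` dictionary, the function names, and the nearest-unseen-cell rule are all assumptions made for the example.

```python
from itertools import product

# Eight horizontal directions: four cardinal plus four diagonal, as (dx, dz) offsets.
DIRECTIONS = [(dx, dz) for dx, dz in product((-1, 0, 1), repeat=2) if (dx, dz) != (0, 0)]

# Each direction can be taken as a plain move or as a jump: 8 x 2 = 16 actions.
ACTIONS = [(kind, dx, dz) for kind in ("move", "jump") for dx, dz in DIRECTIONS]


def local_view(world, pos, radius=2):
    """Return the 5 x 5 x 5 grid of block names centred on pos.

    `world` is a hypothetical dict mapping (x, y, z) to a block name;
    cells missing from the dict are reported as "air".
    """
    x, y, z = pos
    span = range(-radius, radius + 1)
    return [[[world.get((x + dx, y + dy, z + dz), "air") for dz in span]
             for dy in span]
            for dx in span]


def nearest_unseen(seen, pos, candidates):
    """Pick the unseen candidate cell closest to pos (Manhattan distance), or None.

    This stands in for the "fill in empty space in the internal map" goal;
    the real agent's selection rule is not described in the article.
    """
    unseen = [c for c in candidates if c not in seen]
    if not unseen:
        return None
    return min(unseen, key=lambda c: sum(abs(a - b) for a, b in zip(c, pos)))
```

Calling `local_view(world, (0, 64, 0))` would give the agent’s “sight” at that position, and `nearest_unseen` returns a target that a free-roam loop could hand to the same navigation routine used for user-supplied coordinates.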

For game development, a successful use-case for AIRIS may include automatic bug and stress tests for software. A hypothetical AIRIS that can run across the entirety of Fallout 4 could create bug reports when interacting with NPCs or enemies, for example. While quality assurance testers would still need to check what the AI has documented, it would speed along a laborious and otherwise frustrating process for development.

Moreover, it is the first step in a virtual world for self-directed learning for AI in complex, omni-directional worlds. That should be exciting for AI enthusiasts as a whole.


Technology

MoradaUno wants to make it easier to rent apartments in Mexico

Renting in Latin America is restrictive. Most landlords require three months of rent as a deposit and a guarantor that owns property in the same city to co-sign the lease. Santiago Morales, the co-founder and CEO of proptech MoradaUno, said this dynamic makes 40% of prospective renters ineligible. His company wants to get more tenants into rentals by underwriting their risk.

“That’s the largest pain point in the industry today,” Morales told TechCrunch. “People not being able to rent where they want, or they have to, like, rent with roomies, roommates or basically can’t rent. So we said, let’s go fix that. Let’s go solve that problem.”

The result was MoradaUno, a Mexico City-based company that looks to take on tenant risk up front for landlords. The company works with real estate brokers by screening and underwriting potential tenants and agreeing to take on their rent payments if the tenants stop paying. Morales said the company’s thorough vetting process, which includes background checks and income verification, weeds out a lot of bad actors from the start. MoradaUno also provides additional optional broker services like legal and home insurance.

The company decided to target brokers, as opposed to landlords themselves, due to the fragmented nature of Mexico’s rental market, Morales said. Unlike in U.S. cities where there is a concentration of large landlords that manage a ton of units, in Mexico, it’s the opposite. Most landlords only own one property.

“It’s all mom and pops, like 97% of the market is mom and pops,” Morales said. “They really depend on this income. So they’re like, ‘Oh, who am I renting to? What happens if they don’t pay?’ There’s a lack of trust there. We say, we can help solve that or bridge that lack of trust with technology.”

The MoradaUno founding team knows the LatAm real estate market well. Morales said he moved to Mexico in early 2020, right before the pandemic, because he was working with proptech Loft, the LatAm marketplace for buying and selling real estate. He was supposed to help them expand into the country but when COVID-19 hit, those plans dried up.

The experience gave him a good foot in the door to LatAm’s real estate challenges and introduced him to Ines Gamboa Sorensen and Diego Llano, his now co-founders. MoradaUno was formed in 2020 and formally launched its product in 2021. MoradaUno has since worked with more than 4,500 brokers and helped close more than 20,000 rentals. Morales added that the company is processing about 1,000 leases a month and wants to hit 3,000 leases a month by next summer.

The company just raised a $5.6 million Series A round to help with that. The round was co-led by fintech-focused Flourish Ventures and Cometa, a VC firm focused on backing companies building for Spanish-speaking populations. Clocktower Ventures, Picus Capital and Y Combinator also participated. Morales said the capital will be used to help with expansion.

The proptech startup market has been growing in LatAm. There are a few other startups looking to tackle rentals too. Aptuno, based in Bogota, helps people find and apply for apartments online and has raised $7 million in venture funding. Houm is another that looks to bypass the region’s tough rental market by acting as a digital broker. Houm has raised more than $44 million in VC money.

MoradaUno is currently live in four cities in Mexico, but the company wants to boost that by adding six more cities in the near future. Morales added that underwriting tenants is just the beginning and in the future they’d like to be able to offer fintech services like advanced rent payments or even build an AI model for brokers.

“It’s really cool to be able to give access [to] people that otherwise would have not been able to rent,” Morales said. “Now you’re giving them an option. That’s very powerful and exciting. That kind of fuels us every day. And we’re also making the lives of thousands of real estate agents better because they have better tools and more efficient technology.”

Technology

I flew DJI’s $199 drone with its new $229 Goggles N3

See, the company announced its budget $199 Neo drone in September that works with goggles, and today it’s announcing the $229 DJI Goggles N3. Add a $99 RC Motion 3 controller and you get airplane-like flight, with first-person video that puts you in the virtual cockpit.

But I can’t quite confirm that it’s worth your money yet — because DJI says my unit likely shipped with a defect, and I’ll need a little more testing time. More on that below.

At $229, the Goggles N3 are definitely less expensive than previous pairs, saving you $120 over the company’s $349 Goggles Integra, its previous budget set, and they’re less than half the price of the premium $499 DJI Goggles 3. Yet they’ve got the same one-tap defogging (using an internal fan) and DJI O4 video transmission as the Goggles 3. I flew the Neo just as far with DJI’s cheapest and most expensive goggles before the signal cut out and the drone found its way home. You still get headtracking so you can look up and down while you’re flying forward, too.

On paper, the N3 even seem better than the more premium models in a couple ways: a wider 54-degree field of view (compared to 44 degrees), and an eyebox so big you can put your prescription glasses inside, with no need to twist knobs to make the lenses match your vision, the company claims.

But instead of the crisp, colorful, perfect micro-OLED screens included in all of DJI’s other modern FPV goggles, the N3 has a single, comparatively washed-out LCD screen inside. To aim that panel at your face, there’s a big diagonal optic inside (not unlike a periscope) to bounce the light at a right angle.

In the case of my review unit, the center of my screen seems to be aimed at the center of my nosepiece. With no way to adjust the lenses, I’m stuck with an out-of-focus image all the time. Not being able to properly see anything has got to be a fluke, right? DJI spokesperson Daisy Kong tells me over the phone that I might have a defective unit.

If it is just a fluke, I’d be fine with most of the other corners DJI has cut. I could live with a slightly washed out image. I don’t miss the loss of the Goggles 3’s barely useful passthrough vision or silicone padding — fabric’s good enough for me. I don’t terribly mind that the Goggles N3 has a visible cable sticking out the side instead of weaving it into the headband like previous models.

While it’s heavier, it’s only 2.3 ounces (65g) heavier than the Goggles 3 according to my kitchen scale, and it’s still reasonably balanced out by the integrated battery at the back of the headset. Frankly, even the Goggles N3 has more wireless range and more fancy features than I typically need. But the core of the experience needs to be a crisp, clear look through the eye of the drone. I’ll let you know if that materializes in the next couple of weeks.

I should also note that the DJI Neo isn’t anywhere near as good as the Avata or Avata 2 at being an FPV drone, simply because of its weight and power ratios — it’s not as nimble as those more dedicated models, and the wind can more easily push it around.

Like them or not, DJI doesn’t have a lot of other affordable FPV options on the table. The company recently discontinued its $829 Avata Explorer Combo (though you can find some refurbished deals), and Kong says there are “no current plans” to let you use the $350 Goggles Integra with the DJI Neo, even though DJI now lets you use the Avata 2 and DJI Mini 4 Pro with the Integra. So if you don’t like the Goggles N3, the $500 Goggles 3 is the only other set that currently works with the Neo.

In the US, where DJI is facing some import difficulties, it’ll only sell the Goggles N3 on its own, just as it only sells the DJI Neo on its own. In the UK and Europe, it’s a bundle to start: the new “DJI Neo Motion Fly More Combo” with drone, goggles, controller, three batteries, and a charging hub is available today for £449 or €529, with the standalone $229/£229/€269 Goggles N3 estimated to arrive in late November.

Technology

DJI unveils its cheapest-ever FPV goggles – and that makes the Neo way more affordable for immersive flying sessions

DJI has unveiled the new Goggles N3, its cheapest-ever FPV (First Person View) goggles for immersive drone flight. Coming in at under half the price of the Goggles 3, the cut-price N3 version looks like an excellent pairing with the Neo, DJI’s cheapest-ever 4K drone with multiple flight control options, including FPV.

DJI has set the price of the Goggles N3 at $229 / £229 / AU$359, plus the goggles can be purchased in a DJI Neo Motion Fly More Combo for $449 / £449 / AU$839. For context, that Fly More Combo, which includes the Neo selfie drone, RC Motion Controller 3 (rather than the FPV Remote Controller 3), two extra batteries and charging hub plus the Goggles N3, costs less than the Goggles 3 alone.

In this one move, DJI has provided an affordable route for FPV flight newbies, and a sensible alternative to the BetaFPV Cetus X drone kit, further cementing the Neo’s position as one of the best drones for beginners.

FPV for novices

Serious FPV pilots are more likely to get that adrenaline hit from high-speed FPV flight using the DJI Avata 2 with Goggles 3, which currently costs from $999 at Amazon (US) or £798.95 at Amazon (UK) as a single battery Fly More Combo (with pricier bundles also available). For the rest of us, the new DJI Neo Motion Fly More Combo presents a watered-down and cost-effective entry to FPV flight.

That said, the Goggles N3, which are also compatible with the DJI Avata 2, still look like a serious bit of gear. With the Goggles N3 in position, a tilt of your head or flick of the wrist with the RC Motion 3 controller can perform aerial acrobatics such as 360-degree flips and rolls.

A full HD 1080p screen with a 54-degree field of view immerses you in the drone’s perspective, while single-tap defogging activates fans to remove any condensation build-up to maintain crisp viewing. A headband with an integrated battery for even balance completes a comfortable fit, with enough space for glasses-wearers.

The Goggles N3 also feature the same antenna as the pricier Goggles 2, with DJI’s O4 video transmission providing a range up to 13km (how much you use of this range depends on the legal restrictions in your region), and a negligible latency of 31ms.

A neat augmented reality (AR) feature allows you to adjust camera settings with the motion controller before your drone takes flight, or while it is hovering. And like DJI’s other goggles, the live feed can be shared to a connected smartphone, which can act as a secondary display for a spotter. You’ll get sessions of nearly three hours with the goggles’ battery fully charged.

All in all, the DJI Goggles N3 are a smart move by DJI, opening up FPV flight to a new market of novices that are curious about FPV flight, but unwilling to fork out for the pricier alternatives. The Neo is starting to make more sense.


Science & Environment

Trump’s election victory sparks dismay among climate community

Republican presidential nominee, former U.S. President Donald Trump, listens to a question as he visits Chez What Furniture Store which was damaged during Hurricane Helene on September 30, 2024 in Valdosta, Georgia.

Michael M. Santiago | Getty Images News | Getty Images

Donald Trump’s election victory on Wednesday sparked a palpable sense of dismay among the climate community, with two key architects of the landmark Paris Agreement warning that the result will make it harder to slash planet-heating greenhouse gas emissions.

Trump will defeat his Democratic rival Kamala Harris and return to the White House for a second four-year term, according to an NBC News projection.

It marks a historic and somewhat improbable comeback for one of the most polarizing figures in modern American politics.

The 78-year-old, who has called the climate crisis “one of the great scams,” has pledged to ramp-up fossil fuel production, pare back outgoing President Joe Biden’s emissions-limiting regulations and pull the country out of the Paris climate accord — again.

The 2015 Paris Agreement is a critically important framework designed to reduce global greenhouse gas emissions. It aims to “limit global warming to well below 2, preferably to 1.5 degrees Celsius, compared to pre-industrial levels” over the long term.

Laurence Tubiana, a key architect of the Paris Agreement, said Trump’s election victory “is a setback for global climate action, but the Paris Agreement has proven resilient and is stronger than any single country’s policies.”

Tubiana, a French economist and diplomat who now serves as CEO of the European Climate Foundation, said the context today is “very different” to Trump’s first election victory in 2016.

French Economist Dr. Laurence Tubiana speaks during an event ‘G-20 Event: New Challenges in International Taxation’ at the annual meetings of the International Monetary Fund (IMF) and World Bank in Washington DC, United States on April 17, 2024.

Anadolu | Anadolu | Getty Images

“There is powerful economic momentum behind the global transition, which the US has led and gained from, but now risks forfeiting. The devastating toll of recent hurricanes was a grim reminder that all Americans are affected by worsening climate change,” Tubiana said.

“Responding to the demands of their citizens, cities and states across the US are taking bold action,” she added.

“Europe now has the responsibility and opportunity to step up and lead. By pushing forward with a fair and balanced transition, in close partnership with others, it can show that ambitious climate action protects people, strengthens economies, and builds resilience.”

‘An antidote to doom and despair’

Separately, Christiana Figueres, the former United Nations climate chief who oversaw the 2015 Paris summit, said the U.S. election result will be regarded as a “major blow to global climate action.”

However, Figueres said “it cannot and will not halt the changes underway to decarbonise the economy and meet the goals of the Paris Agreement.”

“Standing with oil and gas is the same as falling behind in a fast moving world,” she continued, predicting that clean energy technologies would continue to outcompete fossil fuels over the coming years.

Dame Christiana Figueres, Chair, The Earthshot Prize speaks at the Earthshot Prize Innovation Summit in partnership with Bloomberg Philanthropies on September 24, 2024 in New York City.

Bryan Bedder | Getty Images Entertainment | Getty Images

“Meanwhile, the vital work happening in communities everywhere to regenerate our planet and societies will continue, imbued with a new, even more determined spirit today,” Figueres said.

“Being here in South Africa for the Earthshot Prize makes clear that there is an antidote to doom and despair. It’s action on the ground, and it’s happening in all corners of the Earth.”

Technology

iPhone 16 Pro Max vs. Galaxy S24 Ultra camera test: it shocked me

The iPhone 16 Pro Max and the Samsung Galaxy S24 Ultra are both big phones with big screens, big power, and big price tags. The cameras are also impressive, but which one of these two archrivals takes better photos?

I’ve been using the Galaxy S24 Ultra again over the past few weeks and have put it against Apple’s latest top iPhone to find out. And the results are pretty shocking.

The cameras

Before we look at the photos, let’s look at the numbers behind the cameras, starting with the iPhone 16 Pro Max. There are three cameras on the back, starting with the main 48-megapixel camera with optical image stabilization, which can also take 2x zoom photos. It’s joined by a 12MP telephoto camera for 5x optical zoom photos and a 48MP ultrawide camera with a 120-degree field of view.

The Galaxy S24 Ultra has four cameras. It’s led by a main 200MP camera, along with a pair of telephoto cameras — a 10MP camera for a 3x optical zoom and a 50MP periscope zoom for 5x optical shots — plus a 12MP ultrawide camera. Unlike previous Galaxy Ultra phones, the S24 Ultra has “optical quality” 10x zoom shots, which have proven to be just as good as optical zoom shots.

For reference, I used the Standard Photographic Style on the iPhone 16 Pro Max for all the photos below, and each phone’s camera was used in auto mode. All the photos were downloaded onto an Apple Mac mini and examined on a color-calibrated monitor. They have all been resized for friendlier online viewing.

Main camera

Photos: 1. Apple iPhone 16 Pro Max main camera; 2. Samsung Galaxy S24 Ultra main camera

When using the main camera, the iPhone 16 Pro Max consistently took more visually pleasing photos than the S24 Ultra, apart from its long-time issue with exposure occasionally rearing its head. In the first photo of the leafy lane, you can see where it causes the camera problems and where the S24 Ultra’s colors are punchier and more vibrant.

Photos: 1. Apple iPhone 16 Pro Max main camera; 2. Samsung Galaxy S24 Ultra main camera

However, as you can see in the second photo of the Aston Martin Valkyrie car, the S24 Ultra’s eagerness results in noise, which is entirely absent from the iPhone’s pin-sharp, more accurately colored image. Although its exposure can cause issues sometimes, here, it gets it exactly right — to the point where I can easily read the Vehicle Identification Number (VIN) on the windscreen, which is blurred and jumbled in Samsung’s photo.

Photos: 1. Apple iPhone 16 Pro Max main camera; 2. Samsung Galaxy S24 Ultra main camera

The Galaxy S24 Ultra often gets a certain type of photo right, such as the example at the top, but everywhere else, the iPhone improves. The photo inside the church shows the wonderful tone and warmth of the iPhone’s camera, rather than the starkness of the S24 Ultra, right down to the color of the padding on the seats and the wooden beams on the ceiling.

Photos: 1. Apple iPhone 16 Pro Max main camera; 2. Samsung Galaxy S24 Ultra main camera

Take a look at our final photo to see how the iPhone’s super-sharp focus and depth of field help it take detailed, emotional shots, even of the simplest subjects. The foam and bubbles on top of the coffee are so sharp, and the depth of field is exactly right, making for a more realistic, attractive photo than the S24 Ultra’s photo, which seems less aware of its subject.

Winner: Apple iPhone 16 Pro Max

Ultrawide camera

Photos: 1. Apple iPhone 16 Pro Max wide-angle; 2. Samsung Galaxy S24 Ultra wide-angle

On paper, the iPhone 16 Pro Max’s ultrawide camera should easily improve on the Galaxy S24 Ultra’s camera, but in reality, it’s the opposite. The S24 Ultra’s ultrawide camera shows up the iPhone’s camera really badly. The first photo of the Ferrari FF was taken indoors in good lighting (the same environment as the Aston Martin Valkyrie photo above), and the iPhone’s photo is full of noise and blur, while the Galaxy S24 Ultra avoids most of the same issues to produce a sharper, less blurred image. It’s not perfect, but it’s far better than the iPhone’s photo.

Photos: 1. Apple iPhone 16 Pro Max wide-angle; 2. Samsung Galaxy S24 Ultra wide-angle

Outside, things don’t improve much for the iPhone 16 Pro Max, but it can still impress with its warm tones. The photo of the fields and sky shows the S24 Ultra’s vibrant colors but overall coldness. However, it has a less noisy foreground and more detail, such as around the fence post. In the distance, the two cameras introduce some blur and noise.

Photos: 1. Apple iPhone 16 Pro Max wide-angle; 2. Samsung Galaxy S24 Ultra wide-angle

In the final photo of the church, you can see how much sharper the S24 Ultra’s ultrawide photos are, with the stonework on the spire far clearer and less muddy than in the iPhone’s image. Yes, the S24 Ultra does use quite a lot of software enhancement, but it’s easier to forgive it when the balance and sharpness is right.

Winner: Samsung Galaxy S24 Ultra

2x zoom and 3x zoom

Photos: 1. Apple iPhone 16 Pro Max 2x zoom; 2. Samsung Galaxy S24 Ultra 2x zoom

The two phones have different “short” zooms, with the iPhone 16 Pro Max offering a 2x optical zoom and the S24 Ultra a 3x optical zoom. You can see examples of them both here, and we’ll be judging this category as one rather than two separate categories. The 2x zoom is fairly consistent across both cameras for balance and focus, but the iPhone can introduce some noise, while the S24 Ultra’s photos have a softer look.

Photos: 1. Apple iPhone 16 Pro Max 3x zoom; 2. Samsung Galaxy S24 Ultra 3x zoom

Move on to the 3x zoom across the two cameras, and the iPhone 16 Pro Max can’t match the S24 Ultra’s performance. Shots are far noisier and less sharp, while the Samsung phone’s 3x zoom produces excellent photos with plenty of life and detail. This is to be expected, given Samsung’s dedicated optical 3x mode.

But because the S24 Ultra’s 2x mode isn’t awful compared to the iPhone 16 Pro Max’s 2x shots, it’s going to win this category for being more versatile, as you could realistically use both zooms on it, but would want to stick with the 2x on the iPhone 16 Pro Max.

Winner: Samsung Galaxy S24 Ultra

5x zoom

Photos: 1. Apple iPhone 16 Pro Max 5x zoom; 2. Samsung Galaxy S24 Ultra 5x zoom

Both cameras have 5x optical zooms, and Samsung continues its run of zoom superiority here too. The iPhone 16 Pro Max has a problem with sharpness and focus, resulting in grainy, blurry, or noisy images that look worse the more you crop them down. These issues are missing from the S24 Ultra’s camera, and its photos look great at 5x zoom.

Take the wooden angel as an example. Not only are the colors and textures far more realistic in the S24 Ultra’s photo, but when you crop it down, there’s detail in the wood that the iPhone struggles to capture. The overall sharpness makes the depth of field pop more, while the iPhone 16 Pro Max’s photo appears flatter.

Photos: 1. Apple iPhone 16 Pro Max 5x zoom; 2. Samsung Galaxy S24 Ultra 5x zoom

Photos taken outside have similar issues, but the iPhone does win a few points with generally more realistic colors. Many will like the S24 Ultra’s fairly saturated colors, which stay consistent across all lenses. The donkey’s fur is sharper and more defined in the S24 Ultra’s photo, and there’s clearly less noise on the wooden fence post and the red strap. Samsung wins the 5x zoom category, so will it make it three-for-three as we move to the 10x category?

Winner: Samsung Galaxy S24 Ultra

10x zoom

Photos: 1. Apple iPhone 16 Pro Max 10x zoom; 2. Samsung Galaxy S24 Ultra 10x zoom

Neither phone has a 10x optical zoom, but Samsung does make a point of highlighting its optical quality photos at 10x and does include a shortcut in its camera app. The iPhone does not, yet things start out quite well for it.

The horse photo reveals a similar level of detail, but when you get very close, there’s far more evidence of software enhancements in the S24 Ultra’s photo, with some haloing visible along the horse’s back and ears. This glow is not in the iPhone’s photo at all, giving it a more natural appearance.

Photos: 1. Apple iPhone 16 Pro Max 10x zoom; 2. Samsung Galaxy S24 Ultra 10x zoom

But the iPhone does not always win here, as seen in the next photo of the wooden pub sign. The S24 Ultra’s photo has less noise than the iPhone’s photo, particularly on the green board, and its more dynamic coloring means the fall leaves glow more attractively.

Photos: 1. Apple iPhone 16 Pro Max 10x zoom; 2. Samsung Galaxy S24 Ultra 10x zoom

The Galaxy S24 Ultra is far more consistent when shooting 10x photos, as the final photo of the car proves. The differences are clear — accurate colors, spot-on white balance, and no obvious noise. The iPhone can’t keep up at 10x zoom.

Winner: Samsung Galaxy S24 Ultra

Night mode

Photos: 1. Apple iPhone 16 Pro Max night mode; 2. Samsung Galaxy S24 Ultra night mode

When it came to shooting photos in low light, the Galaxy S24 Ultra’s preview on the phone made me expect the worst, as it did not show a truly representative image straight after taking it. The iPhone didn’t have any such problem, so when I was collecting photos, I feared for the S24 Ultra’s performance. However, the images looked totally different in the gallery, and at first, the two appeared quite evenly matched.

The outside of the brightly lit pub is a great example. The iPhone overexposes in many places while showing more detail than the S24 Ultra’s photos in others. However, in most other areas — the seating and foliage, for example — both cope with the low light well. The iPhone’s photo does have a little more blur, though, and the overexposure hides details, such as in the pub’s signage.

Photos: 1. Apple iPhone 16 Pro Max night mode; 2. Samsung Galaxy S24 Ultra night mode

In the photo of the village shop, the iPhone’s more accurate colors shine through, and it’s also less blurry than the S24 Ultra, where the software smooths out a lot of detail, showing both are very sensitive to lighting conditions when taking photos in the dark. The two trade blows like this in all the lowlight images I took, and while the iPhone’s overexposure often caused problems, the S24 Ultra’s smoothing often did the same.

Photos: 1. Apple iPhone 16 Pro Max; 2. Samsung Galaxy S24 Ultra

However, the S24 Ultra was great in difficult, harsh lighting conditions. Shooting into the sunset, the S24 Ultra captured the golden glow in the sky, the blue sky, the green of the grass, and the scene as a whole. The iPhone 16 Pro Max’s photo contains so much shadow that it robs it of emotion and detail. The real-world environment was somewhere in between the two, but the S24 Ultra’s photo is the one I’d keep or share.

Winner: Samsung Galaxy S24 Ultra

Samsung’s flagship comes out on top

It may come as a shock to some, but the Samsung Galaxy S24 Ultra — a phone released at the beginning of the year — has taken a victory in all but one category against the new Apple iPhone 16 Pro Max. It has by far the superior camera when you want to take any zoom or ultrawide photo.

The S24 Ultra couldn’t quite match the iPhone 16 Pro Max in the main camera category, though, where I consistently preferred the iPhone’s photos. Still, it should be noted that the iPhone’s exposure and contrast can upset the balance of some images, especially in challenging light. This has affected iPhone cameras for several generations, so it is not unique to the 16 Pro Max.

What’s very interesting is that the iPhone 16 Pro comprehensively beat the Google Pixel 9 Pro in a recent test, proving its top camera credentials. It also showed several improvements over the iPhone 15 Pro. The Galaxy S24 Ultra has been a winner from the start by improving on its predecessor, beating the Google Pixel 8 Pro, and equaling the performance of our favorite Android camera phone, the Xiaomi 14 Ultra.

It may not be the latest on the market, but the Galaxy S24 Ultra continues to take on the very best camera phones and easily holds its own.

-

Science & Environment2 months ago

Science & Environment2 months agoHow to unsnarl a tangle of threads, according to physics

-

Technology1 month ago

Technology1 month agoIs sharing your smartphone PIN part of a healthy relationship?

-

Science & Environment2 months ago

Science & Environment2 months agoHyperelastic gel is one of the stretchiest materials known to science

-

Science & Environment2 months ago

Science & Environment2 months ago‘Running of the bulls’ festival crowds move like charged particles

-

Technology2 months ago

Technology2 months agoWould-be reality TV contestants ‘not looking real’

-

Science & Environment1 month ago

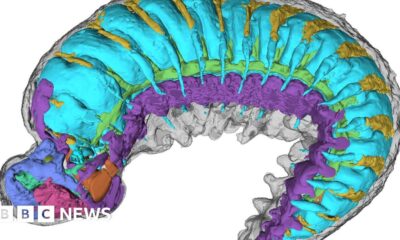

Science & Environment1 month agoX-rays reveal half-billion-year-old insect ancestor

-

Science & Environment2 months ago

Science & Environment2 months agoMaxwell’s demon charges quantum batteries inside of a quantum computer

-

Money1 month ago

Money1 month agoWetherspoons issues update on closures – see the full list of five still at risk and 26 gone for good

-

Science & Environment2 months ago

Science & Environment2 months agoSunlight-trapping device can generate temperatures over 1000°C

-

Sport1 month ago

Sport1 month agoAaron Ramsdale: Southampton goalkeeper left Arsenal for more game time

-

Science & Environment2 months ago

Science & Environment2 months agoPhysicists have worked out how to melt any material

-

Technology1 month ago

Technology1 month agoGmail gets redesigned summary cards with more data & features

-

Football1 month ago

Football1 month agoRangers & Celtic ready for first SWPL derby showdown

-

MMA1 month ago

MMA1 month ago‘Dirt decision’: Conor McGregor, pros react to Jose Aldo’s razor-thin loss at UFC 307

-

Technology1 month ago

Technology1 month agoUkraine is using AI to manage the removal of Russian landmines

-

News1 month ago

News1 month agoWoman who died of cancer ‘was misdiagnosed on phone call with GP’

-

Science & Environment2 months ago

Science & Environment2 months agoLaser helps turn an electron into a coil of mass and charge

-

Sport1 month ago

Sport1 month agoBoxing: World champion Nick Ball set for Liverpool homecoming against Ronny Rios

-

Technology1 month ago

Technology1 month agoEpic Games CEO Tim Sweeney renews blast at ‘gatekeeper’ platform owners

-

Business1 month ago

how UniCredit built its Commerzbank stake

-

Science & Environment2 months ago

Science & Environment2 months agoA new kind of experiment at the Large Hadron Collider could unravel quantum reality

-

Science & Environment2 months ago

Science & Environment2 months agoLiquid crystals could improve quantum communication devices

-

Technology1 month ago

Technology1 month agoRussia is building ground-based kamikaze robots out of old hoverboards

-

News1 month ago

News1 month ago‘Blacks for Trump’ and Pennsylvania progressives play for undecided voters

-

Technology1 month ago

Technology1 month agoSamsung Passkeys will work with Samsung’s smart home devices

-

MMA1 month ago

MMA1 month agoDana White’s Contender Series 74 recap, analysis, winner grades

-

Science & Environment2 months ago

Science & Environment2 months agoQuantum ‘supersolid’ matter stirred using magnets

-

Science & Environment2 months ago

Science & Environment2 months agoWhy this is a golden age for life to thrive across the universe

-

Technology1 month ago

Technology1 month agoMicrosoft just dropped Drasi, and it could change how we handle big data

-

News1 month ago

News1 month agoNavigating the News Void: Opportunities for Revitalization

-

MMA1 month ago

MMA1 month ago‘Uncrowned queen’ Kayla Harrison tastes blood, wants UFC title run

-

Sport1 month ago

Sport1 month ago2024 ICC Women’s T20 World Cup: Pakistan beat Sri Lanka

-

Entertainment1 month ago

Entertainment1 month agoBruce Springsteen endorses Harris, calls Trump “most dangerous candidate for president in my lifetime”

-

MMA1 month ago

MMA1 month agoPereira vs. Rountree prediction: Champ chases legend status

-

News1 month ago

News1 month agoMassive blasts in Beirut after renewed Israeli air strikes

-

Technology1 month ago

Technology1 month agoCheck, Remote, and Gusto discuss the future of work at Disrupt 2024

-

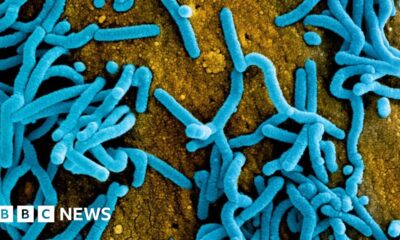

News1 month ago

News1 month agoRwanda restricts funeral sizes following outbreak

-

TV1 month ago

TV1 month agoসারাদেশে দিনব্যাপী বৃষ্টির পূর্বাভাস; সমুদ্রবন্দরে ৩ নম্বর সংকেত | Weather Today | Jamuna TV

-

Technology1 month ago

Technology1 month agoWhy Machines Learn: A clever primer makes sense of what makes AI possible

-

Science & Environment2 months ago

Science & Environment2 months agoQuantum forces used to automatically assemble tiny device

-

Technology1 month ago

Technology1 month agoMicrophone made of atom-thick graphene could be used in smartphones

-

Business1 month ago

Top shale boss says US ‘unusually vulnerable’ to Middle East oil shock

-

Business1 month ago

Business1 month agoWater companies ‘failing to address customers’ concerns’

-

News1 month ago

News1 month agoCornell is about to deport a student over Palestine activism

-

Business1 month ago

Business1 month agoWhen to tip and when not to tip

-

Sport1 month ago

Sport1 month agoWXV1: Canada 21-8 Ireland – Hosts make it two wins from two

-

News1 month ago

News1 month agoHull KR 10-8 Warrington Wolves – Robins reach first Super League Grand Final

-

MMA1 month ago

MMA1 month agoKayla Harrison gets involved in nasty war of words with Julianna Pena and Ketlen Vieira

-

Science & Environment2 months ago

Science & Environment2 months agoITER: Is the world’s biggest fusion experiment dead after new delay to 2035?

-

News2 months ago

News2 months ago▶️ Hamas in the West Bank: Rising Support and Deadly Attacks You Might Not Know About

-

Science & Environment2 months ago

Science & Environment2 months agoA slight curve helps rocks make the biggest splash

-

Technology2 months ago

Technology2 months agoMeta has a major opportunity to win the AI hardware race

-

Technology1 month ago

Technology1 month agoSingleStore’s BryteFlow acquisition targets data integration

-

Football1 month ago

Football1 month ago'Rangers outclassed and outplayed as Hearts stop rot'

-

Sport1 month ago

Sport1 month agoChina Open: Carlos Alcaraz recovers to beat Jannik Sinner in dramatic final

-

Football1 month ago

Football1 month agoWhy does Prince William support Aston Villa?

-

Technology1 month ago

Technology1 month agoLG C4 OLED smart TVs hit record-low prices ahead of Prime Day

-

News1 month ago

News1 month ago▶ Hamas Spent $1B on Tunnels Instead of Investing in a Future for Gaza’s People

-

Sport1 month ago

Sport1 month agoShanghai Masters: Jannik Sinner and Carlos Alcaraz win openers

-

Science & Environment2 months ago

Science & Environment2 months agoNuclear fusion experiment overcomes two key operating hurdles

-

Womens Workouts1 month ago

Womens Workouts1 month ago3 Day Full Body Women’s Dumbbell Only Workout

-

Technology1 month ago

Technology1 month agoUniversity examiners fail to spot ChatGPT answers in real-world test

-

Technology1 month ago

Technology1 month agoMusk faces SEC questions over X takeover

-

MMA1 month ago

MMA1 month agoPennington vs. Peña pick: Can ex-champ recapture title?

-

Sport1 month ago

Sport1 month agoPremiership Women’s Rugby: Exeter Chiefs boss unhappy with WXV clash

-

Sport1 month ago

Sport1 month agoCoco Gauff stages superb comeback to reach China Open final

-

Business1 month ago

Bank of England warns of ‘future stress’ from hedge fund bets against US Treasuries

-

Sport1 month ago

Sport1 month agoSturm Graz: How Austrians ended Red Bull’s title dominance

-

MMA1 month ago

MMA1 month ago‘I was fighting on automatic pilot’ at UFC 306

-

News1 month ago

News1 month agoGerman Car Company Declares Bankruptcy – 200 Employees Lose Their Jobs

-

Sport1 month ago

Sport1 month agoWales fall to second loss of WXV against Italy

-

Science & Environment2 months ago

Science & Environment2 months agoTime travel sci-fi novel is a rip-roaringly good thought experiment

-

Science & Environment2 months ago

Science & Environment2 months agoNerve fibres in the brain could generate quantum entanglement

-

Business1 month ago

DoJ accuses Donald Trump of ‘private criminal effort’ to overturn 2020 election

-

Business1 month ago

Sterling slides after Bailey says BoE could be ‘a bit more aggressive’ on rates

-

TV1 month ago

TV1 month agoTV Patrol Express September 26, 2024

-

Money4 weeks ago

Money4 weeks agoTiny clue on edge of £1 coin that makes it worth 2500 times its face value – do you have one lurking in your change?

-

Technology1 month ago

Technology1 month agoAmazon’s Ring just doubled the price of its alarm monitoring service for grandfathered customers

-

Travel1 month ago

World of Hyatt welcomes iconic lifestyle brand in latest partnership

-

Technology1 month ago

Technology1 month agoQuoroom acquires Investory to scale up its capital-raising platform for startups

-

MMA1 month ago

MMA1 month agoKetlen Vieira vs. Kayla Harrison pick, start time, odds: UFC 307

-

Technology1 month ago

Technology1 month agoThe best shows on Max (formerly HBO Max) right now

-

Technology1 month ago

Technology1 month agoIf you’ve ever considered smart glasses, this Amazon deal is for you

-

Sport1 month ago

Sport1 month agoURC: Munster 23-0 Ospreys – hosts enjoy second win of season

-

MMA1 month ago

MMA1 month agoHow to watch Salt Lake City title fights, lineup, odds, more

-

Technology1 month ago

Technology1 month agoJ.B. Hunt and UP.Labs launch venture lab to build logistics startups

-

Business1 month ago

Italy seeks to raise more windfall taxes from companies

-

Business1 month ago

‘Let’s be more normal’ — and rival Tory strategies

-

Business1 month ago

The search for Japan’s ‘lost’ art

-

Sport1 month ago

Sport1 month agoNew Zealand v England in WXV: Black Ferns not ‘invincible’ before game

-

Sport1 month ago

Sport1 month agoMan City ask for Premier League season to be DELAYED as Pep Guardiola escalates fixture pile-up row

-

News2 months ago

News2 months ago▶️ Media Bias: How They Spin Attack on Hezbollah and Ignore the Reality

-

Science & Environment2 months ago

Science & Environment2 months agoHow to wrap your mind around the real multiverse

-

MMA1 month ago

MMA1 month agoUFC 307’s Ketlen Vieira says Kayla Harrison ‘has not proven herself’

-

News1 month ago

News1 month agoTrump returns to Pennsylvania for rally at site of assassination attempt

-

MMA1 month ago

MMA1 month agoKevin Holland suffers injury vs. Roman Dolidze

-

Technology4 weeks ago

Technology4 weeks agoThe FBI secretly created an Ethereum token to investigate crypto fraud

-

Business1 month ago

Business1 month agoStocks Tumble in Japan After Party’s Election of New Prime Minister

-

Technology1 month ago

Technology1 month agoTexas is suing TikTok for allegedly violating its new child privacy law

-

Technology1 month ago

Technology1 month agoOpenAI secured more billions, but there’s still capital left for other startups

You must be logged in to post a comment Login