Isolation dictates where we go to see into the far reaches of the universe. The Atacama Desert of Chile, the summit of Mauna Kea in Hawaii, the vast expanse of the Australian Outback—these are where astronomers and engineers have built the great observatories and radio telescopes of modern times. The skies are usually clear, the air is arid, and the electronic din of civilization is far away.

It was to one of these places, in the high desert of New Mexico, that a young astronomer named Jack Burns went to study radio jets and quasars far beyond the Milky Way. It was 1979, he was just out of grad school, and the Very Large Array, a constellation of 28 giant dish antennas on an open plain, was a new mecca of radio astronomy.

But the VLA had its limitations—namely, that Earth’s protective atmosphere and ionosphere blocked many parts of the electromagnetic spectrum, and that, even in a remote desert, earthly interference was never completely gone.

Could there be a better, even lonelier place to put a radio telescope? Sure, a NASA planetary scientist named Wendell Mendell told Burns: How about the moon? He asked if Burns had ever thought about building one there.

“My immediate reaction was no. Maybe even hell, no. Why would I want to do that?” Burns recalls with a self-deprecating smile. His work at the VLA had gone well, he was fascinated by cosmology’s big questions, and he didn’t want to be slowed by the bureaucratic slog of getting funding to launch a new piece of hardware.

But Mendell suggested he do some research and speak at a conference on future lunar observatories, and Burns’s thinking about a space-based radio telescope began to shift. That was in 1984. In the four decades since, he’s published more than 500 peer-reviewed papers on radio astronomy. He’s been an adviser to NASA, the Department of Energy, and the White House, as well as a professor and a university administrator. And while doing all that, Burns has had an ongoing second job of sorts, as a quietly persistent advocate for radio astronomy from space.

And early next year, if all goes well, a radio telescope for which he’s a scientific investigator will be launched—not just into space, not just to the moon, but to the moon’s far side, where it will observe things invisible from Earth.

“You can see we don’t lack for ambition after all these years,” says Burns, now 73 and a professor emeritus of astrophysics at the University of Colorado Boulder.

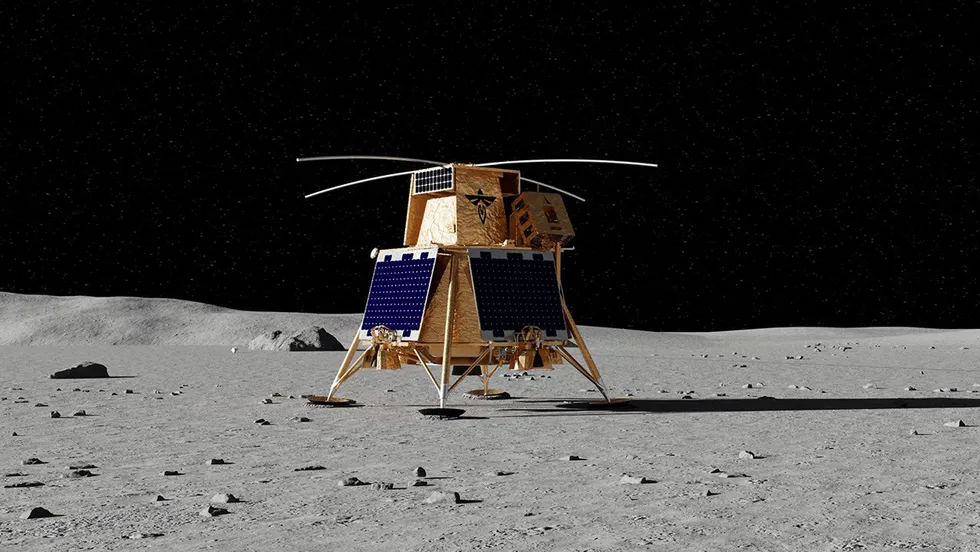

The instrument is called LuSEE-Night, short for Lunar Surface Electromagnetics Experiment–Night. It will be launched from Florida aboard a SpaceX rocket and carried to the moon’s far side atop a squat four-legged robotic spacecraft called Blue Ghost Mission 2, built and operated by Firefly Aerospace of Cedar Park, Texas.

In an artist’s rendering, the LuSEE-Night radio telescope sits atop Firefly Aerospace’s Blue Ghost 2 lander, which will carry it to the moon’s far side. Firefly Aerospace

Landing will be risky: Blue Ghost 2 will be on its own, in a place that’s out of sight of ground controllers. But Firefly’s Blue Ghost 1 pulled off the first fully successful landing by a private company, on the moon’s near side, in March 2025. And Burns has already put hardware on the lunar surface, albeit with mixed results: An experiment he helped conceive was on board a lander called Odysseus, built by Houston-based Intuitive Machines, in 2024. Odysseus was damaged on landing, but Burns’s experiment still returned some useful data.

Burns says he’d be bummed about that 2024 mission if there weren’t so many more coming up. He’s joined in proposing myriad designs for radio telescopes that could go to the moon. And he’s kept going through political disputes, technical delays, even a confrontation with cancer. Finally, finally, the effort is paying off.

“We’re getting our feet into the lunar soil,” says Burns, “and understanding what is possible with these radio telescopes in a place where we’ve never observed before.”

Why Go to the Far Side of the Moon?

A moon-based radio telescope could help unravel some of the greatest mysteries in space science. Dark matter, dark energy, neutron stars, and gravitational waves could all come into better focus if observed from the moon. One of Burns’s collaborators on LuSEE-Night, astronomer Gregg Hallinan of Caltech, would like such a telescope to further his research on electromagnetic activity around exoplanets, a possible measure of whether these distant worlds are habitable. Burns himself is especially interested in the cosmic dark ages, an epoch that began more than 13 billion years ago, just 380,000 years after the big bang. The young universe had cooled enough for neutral hydrogen atoms to form, which trapped the light of stars and galaxies. The dark ages lasted between 200 million and 400 million years.

LuSEE-Night will listen for faint signals from the cosmic dark ages, a period that began about 380,000 years after the big bang, when neutral hydrogen atoms had begun to form, trapping the light of stars and galaxies. Chris Philpot

“It’s a critical period in the history of the universe,” says Burns. “But we have no data from it.”

The problem is that residual radio signals from this epoch are very faint and easily drowned out by closer noise—in particular, our earthly communications networks, power grids, radar, and so forth. The sun adds its share, too. What’s more, these early signals have been dramatically redshifted by the expansion of the universe, their wavelengths stretched as their sources have sped away from us over billions of years. The most critical example is neutral hydrogen, the most abundant element in the universe, which when excited in the laboratory emits a radio signal with a wavelength of 21 centimeters. Indeed, with just some backyard equipment, you can easily detect neutral hydrogen in nearby galactic gas clouds close to that wavelength, which corresponds to a frequency of 1.42 gigahertz. But if the hydrogen signal originates from the dark ages, those 21 centimeters are lengthened to tens of meters. That means scientists need to listen to frequencies well below 50 megahertz—parts of the radio spectrum that are largely blocked by Earth’s ionosphere.
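To make the stretching concrete, here is a small Python sketch that applies the standard redshift relation (observed wavelength equals rest wavelength times 1 + z) to the hydrogen line. The redshift values, roughly z ≈ 30 to 200, are an assumption chosen for illustration, a range often associated with a dark-ages hydrogen signal, not figures from the article.

```python
"""Sketch: where does the redshifted 21-cm hydrogen line land?

Assumption (not from the article): the dark-ages redshift range z ~ 30 to 200
is used purely for illustration.
"""

C = 299_792_458.0              # speed of light, m/s
F_REST_HZ = 1_420_405_751.8    # rest frequency of the neutral-hydrogen line, Hz
LAMBDA_REST_M = C / F_REST_HZ  # about 0.211 m


def observed(z: float) -> tuple[float, float]:
    """Return (wavelength in m, frequency in MHz) of the 21-cm line emitted at redshift z."""
    wavelength = LAMBDA_REST_M * (1.0 + z)        # wavelengths stretch by (1 + z)
    frequency_mhz = F_REST_HZ / (1.0 + z) / 1e6   # frequencies drop by the same factor
    return wavelength, frequency_mhz


if __name__ == "__main__":
    for z in (0, 30, 50, 100, 200):
        lam, f = observed(z)
        print(f"z = {z:>3}: wavelength {lam:6.2f} m, frequency {f:8.2f} MHz")
```

At z = 30 the line lands near 46 MHz with a wavelength of about 6.5 m; by z = 200 it has fallen to about 7 MHz and roughly 42 m, which is why the search has to happen well below 50 MHz.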

Which is why the lunar far side holds such appeal. It may just be the quietest site in the inner solar system.

“It really is the only place in the solar system that never faces the Earth,” says David DeBoer, a research astronomer at the University of California, Berkeley. “It really is kind of a wonderful, unique place.”

For radio astronomy, things get even better during the lunar night, when the sun drops beneath the horizon and is blocked by the moon’s mass. For up to 14 Earth-days at a time, a spot on the moon’s far side is about as electromagnetically dark as any place in the inner solar system can be. No radiation from the sun, no confounding signals from Earth. There may be signals from a few distant space probes, but otherwise, ideally, your antenna only hears the raw noise of the cosmos.

“When you get down to those very low radio frequencies, there’s a source of noise that appears that’s associated with the solar wind,” says Caltech’s Hallinan. Solar wind is the stream of charged particles that speed relentlessly from the sun. “And the only location where you can escape that within a billion kilometers of the Earth is on the lunar surface, on the nighttime side. The solar wind screams past it, and you get a cavity where you can hide away from that noise.”

How Does LuSEE-Night Work?

LuSEE-Night’s receiver looks simple, though there’s really nothing simple about it. Up top are two dipole antennas, each of which consists of two collapsible rods pointing in opposite directions. The dipole antennas are mounted perpendicular to each other on a small turntable, forming an X when seen from above. Each dipole antenna extends to about 6 meters. The turntable sits atop a box of support equipment that’s a bit less than a cubic meter in volume; the equipment bay, in turn, sits atop the Blue Ghost 2 lander, a boxy spacecraft about 2 meters tall.

LuSEE-Night undergoes final assembly [top and center] at the Space Sciences Laboratory at the University of California, Berkeley, and testing [bottom] at Firefly Aerospace outside Austin, Texas. From top: Space Sciences Laboratory/University of California, Berkeley (2); Firefly Aerospace

“It’s a beautiful instrument,” says Stuart Bale, a physicist at the University of California, Berkeley, who is NASA’s principal investigator for the project. “We don’t even know what the radio sky looks like at these frequencies without the sun in the sky. I think that’s what LuSEE-Night will give us.”

The apparatus was designed to serve several competing needs: It had to be sensitive enough to detect very weak signals from deep space; rugged enough to withstand the extremes of the lunar environment; and quiet enough not to interfere with its own observations, yet loud enough to talk to Earth via relay satellite as needed. Plus the instrument had to stick to a budget of about US $40 million and weigh no more than 120 kilograms. The mission plan calls for two years of operations.

The antennas are made of a beryllium copper alloy, chosen for its high conductivity and stability as lunar temperatures plummet or soar by as much as 250 °C every time the sun rises or sets. LuSEE-Night will make precise voltage measurements of the signals it receives, using a high-impedance junction field-effect transistor to act as an amplifier for each antenna. The signals are then fed into a spectrometer—the main science instrument—which reads those voltages at 102.4 million samples per second. That high read-rate is meant to prevent the exaggeration of any errors as faint signals are amplified. Scientists believe that a cosmic dark-ages signature would be five to six orders of magnitude weaker than the other signals that LuSEE-Night will record.
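The sampling figures can be unpacked with a little arithmetic. This is a minimal sketch assuming a real-valued sampler, so the usable band tops out at half the sample rate (the Nyquist limit), and a hypothetical 4,096-point FFT for splitting the band into channels; the FFT length in particular is illustrative, not a LuSEE-Night specification. It also converts "five to six orders of magnitude," read here as a power ratio, into decibels.

```python
"""Sketch: what a 102.4-million-sample-per-second spectrometer implies.

Assumptions (not from the article): a real-valued (not complex) sampler and a
hypothetical 4,096-point FFT used to split the band into channels.
"""
import math

SAMPLE_RATE_HZ = 102.4e6  # read rate quoted for LuSEE-Night's spectrometer
FFT_POINTS = 4096         # hypothetical FFT length, for illustration only


def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a real-valued sampler can represent without aliasing."""
    return sample_rate_hz / 2.0


def channel_width_hz(sample_rate_hz: float, n_fft: int) -> float:
    """Frequency spacing between adjacent FFT bins."""
    return sample_rate_hz / n_fft


def power_ratio_db(ratio: float) -> float:
    """Express a power ratio in decibels."""
    return 10.0 * math.log10(ratio)


if __name__ == "__main__":
    print(f"Nyquist limit: {nyquist_hz(SAMPLE_RATE_HZ) / 1e6:.1f} MHz")
    print(f"Channel width: {channel_width_hz(SAMPLE_RATE_HZ, FFT_POINTS) / 1e3:.1f} kHz")
    for orders in (5, 6):
        print(f"{10 ** orders:.0e} power ratio -> {power_ratio_db(10.0 ** orders):.0f} dB")
```

Under these assumptions the 51.2 MHz Nyquist limit sits just above the top of the 0.1-to-50-MHz band the mission plans to scan, and a dark-ages signature would lie 50 to 60 dB below the foreground.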

The turntable is there to help characterize the signals the antennas receive, so that, among other things, an ancient dark-ages signature can be distinguished from closer, newer signals from, say, galaxies or interstellar gas clouds. A signal from the early universe should be virtually isotropic: It arrives from all over the sky, so the antennas should record much the same thing regardless of their orientation. Newer signals are more likely to come from a specific direction. Hence the turntable: If you collect data over the course of a lunar night, then reorient the antennas and listen again, you’ll be better able to distinguish the distant from the very, very distant.
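The turntable logic can be illustrated with a deliberately simplified toy model. It assumes a flat, two-dimensional sky and the textbook short-dipole power pattern (proportional to sin² of the angle off the dipole axis); the function names and the 30-degree source direction are hypothetical. An isotropic sky produces the same total response at every turntable angle, while a single bright source rises and falls as the antenna turns.

```python
"""Toy model: why rotating a dipole separates an isotropic signal from a directional one.

Assumptions (not from the article): a 2-D sky in the horizontal plane and a
short-dipole power response proportional to sin^2 of the angle between the
dipole axis and the incoming direction. Real beams are far more complicated.
"""
import math


def dipole_gain(dipole_deg: float, source_deg: float) -> float:
    """Relative power response of a short dipole to a source at source_deg."""
    return math.sin(math.radians(source_deg - dipole_deg)) ** 2


def isotropic_response(dipole_deg: float, n_directions: int = 360) -> float:
    """Average response to equal brightness arriving from n evenly spaced directions."""
    total = sum(dipole_gain(dipole_deg, 360.0 * k / n_directions) for k in range(n_directions))
    return total / n_directions


def point_source_response(dipole_deg: float, source_deg: float = 30.0) -> float:
    """Response to a single bright source at a fixed (hypothetical) direction."""
    return dipole_gain(dipole_deg, source_deg)


if __name__ == "__main__":
    for angle in (0, 30, 60, 90, 120):
        print(f"turntable {angle:>3} deg: isotropic {isotropic_response(angle):.3f}, "
              f"point source {point_source_response(angle):.3f}")
```

In this toy model the isotropic response stays at 0.5 whatever the rotation, while the point source swings between 0 and 1, which is the kind of contrast the turntable is meant to exploit.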

What’s the ideal lunar landing spot if you want to take such readings? One as nearly opposite Earth as possible, on a flat plain. Not an easy thing to find on the moon’s hummocky far side, but mission planners pored over maps made by lunar satellites and chose a prime location about 24 degrees south of the lunar equator.

Other lunar telescopes have been proposed for placement in the permanently shadowed craters near the moon’s south pole, just over the horizon when viewed from Earth. Such craters are coveted for the water ice they may hold, and the low temperatures in them (below −240 °C) are great if you’re doing infrared astronomy and need to keep your instruments cold. But the location is terrible if you’re working in long-wavelength radio.

“Even the inside of such craters would be hard to shield from Earth-based radio frequency interference (RFI) signals,” Leon Koopmans of the University of Groningen in the Netherlands said in an email. “They refract off the crater rims and often, due to their long wavelength, simply penetrate right through the crater rim.”

RFI is a major—and sometimes maddening—issue for sensitive instruments. The first-ever landing on the lunar far side was by the Chinese Chang’e 4 spacecraft, in 2019. It carried a low-frequency radio spectrometer, among other experiments. But it failed to return meaningful results, Chinese researchers said, mostly because of interference from the spacecraft itself.

The Accidental Birth of Radio Astronomy

Sometimes, though, a little interference makes history. Here, it’s worth a pause to remember Karl Jansky, considered the father of radio astronomy. In 1928, he was a young engineer at Bell Telephone Laboratories in Holmdel, N.J., assigned to isolate sources of static in shortwave transatlantic telephone calls. Two years later, he built a 30-meter-long directional antenna, mostly out of brass and wood, and after accounting for thunderstorms and the like, there was still noise he couldn’t explain. At first, its strength seemed to follow a daily cycle, rising and sinking with the sun. But after a few months’ observation, the sun and the noise were badly out of sync.

In 1930, Karl Jansky, a Bell Labs engineer in Holmdel, N.J., built this rotating antenna on wheels to identify sources of static for radio communications. NRAO/AUI/NSF

It gradually became clear that the noise’s period wasn’t 24 hours; it was 23 hours and 56 minutes—the time it takes Earth to turn once relative to the stars. The strongest interference seemed to come from the direction of the constellation Sagittarius, which optical astronomy suggested was the center of the Milky Way. In 1933, Jansky published a paper in Proceedings of the Institute of Radio Engineers with a provocative title: “Electrical Disturbances Apparently of Extraterrestrial Origin.” He had opened the electromagnetic spectrum up to astronomers, even though he never got to pursue radio astronomy himself. The interference he had defined was, to him, “star noise.”
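The 4-minute discrepancy Jansky noticed falls out of simple arithmetic: In one year, Earth makes one more turn relative to the stars than relative to the sun. A short Python sketch, using the standard length of the tropical year:

```python
"""Sketch: why Jansky's noise repeated every 23 hours 56 minutes.

Earth spins once per sidereal day. Because Earth also moves about 1/365 of the
way around its orbit each day, the sun takes slightly longer (one solar day,
24 h) to return to the same place in the sky. Over a year, Earth makes one more
rotation relative to the stars than relative to the sun.
"""

SOLAR_DAY_H = 24.0
TROPICAL_YEAR_DAYS = 365.2422  # solar days per year


def sidereal_day_hours() -> float:
    """One year holds N solar days but N + 1 rotations relative to the stars."""
    return SOLAR_DAY_H * TROPICAL_YEAR_DAYS / (TROPICAL_YEAR_DAYS + 1.0)


def drift_minutes_per_day() -> float:
    """How much earlier each day a star-fixed source returns to the same position."""
    return (SOLAR_DAY_H - sidereal_day_hours()) * 60.0


if __name__ == "__main__":
    h = sidereal_day_hours()
    hours = int(h)
    minutes = (h - hours) * 60.0
    print(f"sidereal day = {hours} h {minutes:.1f} min")
    print(f"daily drift = {drift_minutes_per_day():.2f} min")
```

The result is about 23 h 56 min, and the roughly 4-minute daily drift adds up to several hours over a few months, which is why the noise and the sun fell badly out of sync.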

Thirty-two years later, two other Bell Labs scientists, Arno Penzias and Robert Wilson, ran into some interference of their own. In 1965 they were trying to adapt a horn antenna in Holmdel for radio astronomy—but there was a hiss, in the microwave band, coming from all parts of the sky. They had no idea what it was. They ruled out interference from New York City, not far to the north. They rewired the receiver. They cleaned out bird droppings in the antenna. Nothing worked.

In the 1960s, Arno Penzias and Robert W. Wilson used this horn antenna in Holmdel, N.J., to detect faint signals from the big bang. GL Archive/Alamy

Meanwhile, an hour’s drive away, a team of physicists at Princeton University under Robert Dicke was trying to find proof of the big bang that began the universe 13.8 billion years ago. They theorized that it would have left a hiss, in the microwave band, coming from all parts of the sky. They’d begun to build an antenna. Then Dicke got a phone call from Penzias and Wilson, looking for help. “Well, boys, we’ve been scooped,” he famously said when the call was over. Penzias and Wilson had accidentally found the cosmic microwave background, or CMB, the leftover radiation from the big bang.

Burns and his colleagues are figurative heirs to Jansky, Penzias, and Wilson. Researchers suggest that the giveaway signature of the cosmic dark ages may be a minuscule dip in the CMB. They theorize that dark-ages hydrogen may be detectable only because it has been absorbing a little bit of the microwave energy from the dawn of the universe.

The Moon Is a Harsh Mistress

The plan for Blue Ghost Mission 2 is to touch down soon after the sun has risen at the landing site. That will give mission managers two weeks to check out the spacecraft, take pictures, conduct other experiments that Blue Ghost carries, and charge LuSEE-Night’s battery pack with its photovoltaic panels. Then, as local sunset comes, they’ll turn everything off except for the LuSEE-Night receiver and a bare minimum of support systems.

LuSEE-Night will land at a site [orange dot] that’s about 25 degrees south of the moon’s equator and opposite the center of the moon’s face as seen from Earth. The moon’s far side is ideal for radio astronomy because it’s shielded from the solar wind as well as signals from Earth. Arizona State University/GSFC/NASA

There, in the frozen electromagnetic stillness, it will scan the spectrum between 0.1 and 50 MHz, gathering data for a low-frequency map of the sky—maybe including the first tantalizing signature of the dark ages.

“It’s going to be really tough with that instrument,” says Burns. “But we have some hardware and software techniques that…we’re hoping will allow us to detect what’s called the global or all-sky signal.… We, in principle, have the sensitivity.” They’ll listen and listen again over the course of the mission. That is, if their equipment doesn’t freeze or fry first.
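Why the team must listen and listen again can be sketched with the ideal radiometer equation, a standard textbook relation (not one quoted in the article) that ties thermal-noise precision to bandwidth and integration time. The 25 kHz channel width is hypothetical, and real experiments are usually limited by calibration systematics long before they reach this ideal.

```python
"""Sketch: how long a receiver must integrate to reach a given fractional precision.

Assumption (not from the article): the ideal radiometer equation,
    delta_T / T_sys = 1 / sqrt(bandwidth * time),
which ignores calibration errors and other systematics that usually dominate in
practice. The channel width used below is hypothetical.
"""

SECONDS_PER_DAY = 86_400.0


def integration_time_s(fractional_precision: float, bandwidth_hz: float, snr: float = 1.0) -> float:
    """Time for thermal noise to fall to fractional_precision / snr of the sky temperature."""
    target = fractional_precision / snr
    return 1.0 / (bandwidth_hz * target ** 2)


if __name__ == "__main__":
    channel_hz = 25e3  # hypothetical channel width
    for precision in (1e-5, 1e-6):
        t = integration_time_s(precision, channel_hz)
        print(f"precision {precision:.0e}: {t / SECONDS_PER_DAY:,.1f} days "
              f"in one {channel_hz / 1e3:.0f}-kHz channel")
```

With these assumptions, reaching one part in 10^5 in a single 25 kHz channel takes roughly 4.6 days of integration, and one part in 10^6 takes more than a year, which helps explain why observations are accumulated across many nights and many channels.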

A major task for LuSEE-Night is to protect the electronics that run it. Temperature extremes are the biggest problem. Systems can be hardened against cosmic radiation, and a sturdy spacecraft should be able to handle the stresses of launch, flight, and landing. But how do you build it to last when temperatures range between 120 and −130 °C? With layers of insulation? Electric heaters to reduce nighttime chill?

“All of the above,” says Burns. To reject daytime heat, there will be a multicell parabolic radiator panel on the outside of the equipment bay. To keep warm at night, there will be battery power—a lot of battery power. Of LuSEE-Night’s launch mass of 108 kg, about 38 kg is a lithium-ion battery pack with a capacity of 7,160 watt-hours, mostly to generate heat. The battery cells will recharge photovoltaically after the sun rises. The all-important spectrometer has been programmed to cycle off periodically during the two weeks of darkness, so that the battery’s state of charge doesn’t drop below 8 percent; better to lose some observing time than lose the entire apparatus and not be able to revive it.
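The battery numbers invite a rough energy budget. This sketch divides the usable 92 percent of the 7,160 Wh pack across one lunar night (taken here as half a synodic month, about 354 hours), then paces a spectrometer with a simple credit scheme so that it switches on and off at regular intervals without breaching the 8 percent floor. The 12 W base load and 10 W spectrometer draw are hypothetical, and the pacing rule is an illustration, not LuSEE-Night's flight software.

```python
"""Sketch: a lunar-night energy budget with a paced spectrometer duty cycle.

From the article: a 7,160 Wh lithium-ion pack that should not drop below
8 percent charge. Assumptions (not from the article): the night lasts half a
synodic month, the two power draws below are hypothetical, and the pacing rule
is illustrative, not LuSEE-Night's flight software.
"""
import math

BATTERY_WH = 7160.0
MIN_SOC = 0.08
NIGHT_HOURS = 29.530589 / 2.0 * 24.0  # half a synodic month, about 354 h

BASE_LOAD_W = 12.0     # hypothetical: heaters plus minimal support systems
SPECTROMETER_W = 10.0  # hypothetical: extra draw while the spectrometer runs


def average_power_budget_w() -> float:
    """Constant draw that would drain a full pack to the 8 percent floor by dawn."""
    return BATTERY_WH * (1.0 - MIN_SOC) / NIGHT_HOURS


def simulate_night(step_h: float = 1.0) -> tuple[float, float]:
    """Return (state of charge at dawn, fraction of steps with the spectrometer on)."""
    n_steps = math.ceil(NIGHT_HOURS / step_h)  # round the night up, to be conservative
    night_h = n_steps * step_h
    # Energy left after the base load, above the protected floor.
    spare_wh = BATTERY_WH * (1.0 - MIN_SOC) - BASE_LOAD_W * night_h
    duty = max(0.0, min(1.0, spare_wh / (SPECTROMETER_W * night_h)))

    energy_wh = BATTERY_WH
    credit = 0.0
    on_steps = 0
    for _ in range(n_steps):
        credit += duty        # accumulate permission to observe
        run = credit >= 1.0   # observe only when a full step has been "paid for"
        if run:
            credit -= 1.0
            on_steps += 1
        load_w = BASE_LOAD_W + (SPECTROMETER_W if run else 0.0)
        energy_wh -= load_w * step_h
    return energy_wh / BATTERY_WH, on_steps / n_steps


if __name__ == "__main__":
    print(f"average budget: {average_power_budget_w():.1f} W")
    soc, on_fraction = simulate_night()
    print(f"state of charge at dawn: {soc:.1%}, spectrometer on {on_fraction:.0%} of the night")
```

With these made-up loads, the pack could sustain an average of about 18.6 W through the night; the paced spectrometer runs roughly two-thirds of the time, and the battery ends just above the floor, at about 8.1 percent. Pacing, rather than running flat out until the charge runs low, spreads observing time evenly across the night.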

Lunar Radio Astronomy for the Long Haul

And if they can’t revive it? Burns has been through that before. In 2024 he watched helplessly as Odysseus, the first U.S.-made lunar lander in 50 years, touched down—and then went silent for 15 agonizing minutes until controllers in Texas realized they were receiving only occasional pings instead of detailed data. Odysseus had landed hard, snapped a leg, and ended up lying almost on its side.

ROLSES-1, shown here inside a SpaceX Falcon 9 rocket, was the first radio telescope to land on the moon, in February 2024. During a hard landing, one leg broke, making it difficult for the telescope to send readings back to Earth. Intuitive Machines/SpaceX

As part of its scientific cargo, Odysseus carried ROLSES-1 (Radiowave Observations on the Lunar Surface of the photo-Electron Sheath), an experiment Burns and a friend had suggested to NASA years before. It was partly a test of technology and partly a study of the complex interactions between sunlight, radiation, and lunar soil—the soil sometimes carries enough electric charge that dust particles levitate above the moon’s surface, which could potentially mess with radio observations. But Odysseus was damaged badly enough that instead of a week’s worth of data, ROLSES got 2 hours, most of it recorded before the landing. A grad student working with Burns, Joshua Hibbard, managed to partially salvage the experiment and prove that ROLSES had worked: Hidden in its raw data were signals from Earth and the Milky Way.

“It was a harrowing experience,” Burns said afterward, “and I’ve told my students and friends that I don’t want to be first on a lander again. I want to be second, so that we have a greater chance to be successful.” He says he feels good about LuSEE-Night being on the Blue Ghost 2 mission, especially after the successful Blue Ghost 1 landing. The ROLSES experiment, meanwhile, will get a second chance: ROLSES-2 has been scheduled to fly on Blue Ghost Mission 3, perhaps in 2028.

NASA’s plan for the FarView Observatory lunar radio telescope array, shown in an artist’s rendering, calls for 100,000 dipole antennas to be spread out over 200 square kilometers. Ronald Polidan

If LuSEE-Night succeeds, it will doubtless raise questions that require much more ambitious radio telescopes. Burns, Hallinan, and others have already gotten early NASA funding for a giant interferometric array on the moon called FarView. It would consist of a grid of 100,000 antenna nodes spread over 200 square kilometers, made of aluminum extracted from lunar soil. They say assembly could begin as soon as the 2030s, although political and budget realities may get in the way.
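For a sense of scale, the FarView figures can be turned into a node spacing and a rough angular resolution. This back-of-envelope sketch assumes a square footprint, a uniform square grid, and the diffraction rule of thumb θ ≈ λ/D; the article does not specify FarView's actual layout.

```python
"""Back-of-envelope: what 100,000 antennas over 200 km^2 implies.

Assumptions (not from the article): a square footprint, a uniform square grid,
and the diffraction rule of thumb theta ~ lambda / D for angular resolution.
"""
import math

N_ANTENNAS = 100_000
AREA_M2 = 200e6  # 200 square kilometers


def grid_spacing_m() -> float:
    """Distance between neighboring nodes on a uniform square grid."""
    return math.sqrt(AREA_M2 / N_ANTENNAS)


def resolution_arcmin(wavelength_m: float) -> float:
    """Rough resolution across the footprint's side length, in arcminutes."""
    side_m = math.sqrt(AREA_M2)
    return math.degrees(wavelength_m / side_m) * 60.0


if __name__ == "__main__":
    print(f"node spacing: {grid_spacing_m():.1f} m")
    for lam in (6.0, 30.0):
        print(f"wavelength {lam:4.1f} m -> about {resolution_arcmin(lam):.1f} arcmin")
```

On those assumptions the nodes would sit about 45 m apart, and an array roughly 14 km across would resolve features of about 1.5 arcminutes at a 6 m wavelength.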

Through it all, Burns has gently pushed and prodded and lobbied, advocating for a lunar observatory through the terms of ten NASA administrators and seven U.S. presidents. He’s probably learned more about Washington politics than he ever wanted. American presidents have a habit of reversing the space priorities of their predecessors, so missions have sometimes proceeded full force, then languished for years. With LuSEE-Night finally headed for launch, Burns at times sounds buoyant: “Just think. We’re actually going to do cosmology from the moon.” At other times, he’s been blunt: “I never thought—none of us thought—that it would take 40 years.”

“Like anything in science, there’s no guarantee,” says Burns. “But we need to look.”

This article appears in the February 2026 print issue as “The Quest To Build a Telescope That Can Hear the Cosmic Dark Ages.”
