At the start of this year, it seemed like everybody was reminiscing about the year 2016. In January alone, Spotify saw a 790 percent increase in 2016-themed playlists. People were declaring that the 2026 vibe would match the feel-good vibes of 2016.

Tech

Andrew Ng: Unbiggen AI – IEEE Spectrum

Andrew Ng has serious street cred in artificial intelligence. He pioneered the use of graphics processing units (GPUs) to train deep learning models in the late 2000s with his students at Stanford University, cofounded Google Brain in 2011, and then served for three years as chief scientist for Baidu, where he helped build the Chinese tech giant’s AI group. So when he says he has identified the next big shift in artificial intelligence, people listen. And that’s what he told IEEE Spectrum in an exclusive Q&A.

Ng’s current efforts are focused on his company Landing AI, which built a platform called LandingLens to help manufacturers improve visual inspection with computer vision. He has also become something of an evangelist for what he calls the data-centric AI movement, which he says can yield “small data” solutions to big issues in AI, including model efficiency, accuracy, and bias.

The great advances in deep learning over the past decade or so have been powered by ever-bigger models crunching ever-bigger amounts of data. Some people argue that that’s an unsustainable trajectory. Do you agree that it can’t go on that way?

Andrew Ng: This is a big question. We’ve seen foundation models in NLP [natural language processing]. I’m excited about NLP models getting even bigger, and also about the potential of building foundation models in computer vision. I think there’s lots of signal to still be exploited in video: We have not been able to build foundation models yet for video because of compute bandwidth and the cost of processing video, as opposed to tokenized text. So I think that this engine of scaling up deep learning algorithms, which has been running for something like 15 years now, still has steam in it. Having said that, it only applies to certain problems, and there’s a set of other problems that need small data solutions.

When you say you want a foundation model for computer vision, what do you mean by that?

Ng: This is a term coined by Percy Liang and some of my friends at Stanford to refer to very large models, trained on very large data sets, that can be tuned for specific applications. For example, GPT-3 is an example of a foundation model [for NLP]. Foundation models offer a lot of promise as a new paradigm in developing machine learning applications, but also challenges in terms of making sure that they’re reasonably fair and free from bias, especially if many of us will be building on top of them.

What needs to happen for someone to build a foundation model for video?

Ng: I think there is a scalability problem. The compute power needed to process the large volume of images for video is significant, and I think that’s why foundation models have arisen first in NLP. Many researchers are working on this, and I think we’re seeing early signs of such models being developed in computer vision. But I’m confident that if a semiconductor maker gave us 10 times more processor power, we could easily find 10 times more video to build such models for vision.

Having said that, a lot of what’s happened over the past decade is that deep learning has happened in consumer-facing companies that have large user bases, sometimes billions of users, and therefore very large data sets. While that paradigm of machine learning has driven a lot of economic value in consumer software, I find that that recipe of scale doesn’t work for other industries.

It’s funny to hear you say that, because your early work was at a consumer-facing company with millions of users.

Ng: Over a decade ago, when I proposed starting the Google Brain project to use Google’s compute infrastructure to build very large neural networks, it was a controversial step. One very senior person pulled me aside and warned me that starting Google Brain would be bad for my career. I think he felt that the action couldn’t just be in scaling up, and that I should instead focus on architecture innovation.

I remember when my students and I published the first NeurIPS workshop paper advocating using CUDA, a platform for processing on GPUs, for deep learning—a different senior person in AI sat me down and said, “CUDA is really complicated to program. As a programming paradigm, this seems like too much work.” I did manage to convince him; the other person I did not convince.

I expect they’re both convinced now.

Ng: I think so, yes.

Over the past year as I’ve been speaking to people about the data-centric AI movement, I’ve been getting flashbacks to when I was speaking to people about deep learning and scalability 10 or 15 years ago. In the past year, I’ve been getting the same mix of “there’s nothing new here” and “this seems like the wrong direction.”

How do you define data-centric AI, and why do you consider it a movement?

Ng: Data-centric AI is the discipline of systematically engineering the data needed to successfully build an AI system. For an AI system, you have to implement some algorithm, say a neural network, in code and then train it on your data set. The dominant paradigm over the last decade was to download the data set while you focus on improving the code. Thanks to that paradigm, over the last decade deep learning networks have improved significantly, to the point where for a lot of applications the code—the neural network architecture—is basically a solved problem. So for many practical applications, it’s now more productive to hold the neural network architecture fixed, and instead find ways to improve the data.

When I started speaking about this, there were many practitioners who, completely appropriately, raised their hands and said, “Yes, we’ve been doing this for 20 years.” This is the time to take the things that some individuals have been doing intuitively and make it a systematic engineering discipline.

The data-centric AI movement is much bigger than one company or group of researchers. My collaborators and I organized a data-centric AI workshop at NeurIPS, and I was really delighted at the number of authors and presenters that showed up.

You often talk about companies or institutions that have only a small amount of data to work with. How can data-centric AI help them?

Ng: You hear a lot about vision systems built with millions of images—I once built a face recognition system using 350 million images. Architectures built for hundreds of millions of images don’t work with only 50 images. But it turns out, if you have 50 really good examples, you can build something valuable, like a defect-inspection system. In many industries where giant data sets simply don’t exist, I think the focus has to shift from big data to good data. Having 50 thoughtfully engineered examples can be sufficient to explain to the neural network what you want it to learn.

When you talk about training a model with just 50 images, does that really mean you’re taking an existing model that was trained on a very large data set and fine-tuning it? Or do you mean a brand new model that’s designed to learn only from that small data set?

Ng: Let me describe what Landing AI does. When doing visual inspection for manufacturers, we often use our own flavor of RetinaNet. It is a pretrained model. Having said that, the pretraining is a small piece of the puzzle. What’s a bigger piece of the puzzle is providing tools that enable the manufacturer to pick the right set of images [to use for fine-tuning] and label them in a consistent way. There’s a very practical problem we’ve seen spanning vision, NLP, and speech, where even human annotators don’t agree on the appropriate label. For big data applications, the common response has been: If the data is noisy, let’s just get a lot of data and the algorithm will average over it. But if you can develop tools that flag where the data’s inconsistent and give you a very targeted way to improve the consistency of the data, that turns out to be a more efficient way to get a high-performing system.

For example, if you have 10,000 images where 30 images are of one class, and those 30 images are labeled inconsistently, one of the things we do is build tools to draw your attention to the subset of data that’s inconsistent. So you can very quickly relabel those images to be more consistent, and this leads to improvement in performance.
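To make the idea of flagging inconsistent labels concrete, here is a minimal sketch. It is not Landing AI's tooling. It assumes each image was labeled by several annotators, and the function name `flag_inconsistent`, the `min_agreement` parameter, and the sample data are all hypothetical.

```python
from collections import Counter


def flag_inconsistent(annotations, min_agreement=1.0):
    """Return (item_id, agreement) pairs for items whose annotators disagree.

    annotations: dict mapping an item id to the list of labels it received.
    agreement: share of annotators who chose the most common label.
    """
    flagged = []
    for item_id, labels in annotations.items():
        if not labels:
            continue  # nothing to compare for unlabeled items
        top_count = Counter(labels).most_common(1)[0][1]
        agreement = top_count / len(labels)
        if agreement < min_agreement:
            flagged.append((item_id, agreement))
    # Least-agreed items first, so reviewers see the worst cases at the top.
    return sorted(flagged, key=lambda pair: pair[1])


# Hypothetical labels from three annotators per image.
example = {
    "img_001": ["scratch", "scratch", "scratch"],
    "img_002": ["scratch", "dent", "scratch"],
    "img_003": ["dent", "pit", "scratch"],
}
flagged = flag_inconsistent(example)
for item_id, agreement in flagged:
    print(f"{item_id}: agreement {agreement:.2f}")
```

A reviewer would then relabel only the flagged images instead of rechecking the whole data set.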

Could this focus on high-quality data help with bias in data sets? If you’re able to curate the data more before training?

Ng: Very much so. Many researchers have pointed out that biased data is one factor among many leading to biased systems. There have been many thoughtful efforts to engineer the data. At the NeurIPS workshop, Olga Russakovsky gave a really nice talk on this. At the main NeurIPS conference, I also really enjoyed Mary Gray’s presentation, which touched on how data-centric AI is one piece of the solution, but not the entire solution. New tools like Datasheets for Datasets also seem like an important piece of the puzzle.

One of the powerful tools that data-centric AI gives us is the ability to engineer a subset of the data. Imagine training a machine-learning system and finding that its performance is okay for most of the data set, but its performance is biased for just a subset of the data. If you try to change the whole neural network architecture to improve the performance on just that subset, it’s quite difficult. But if you can engineer a subset of the data you can address the problem in a much more targeted way.

When you talk about engineering the data, what do you mean exactly?

Ng: In AI, data cleaning is important, but the way the data has been cleaned has often been in very manual ways. In computer vision, someone may visualize images through a Jupyter notebook and maybe spot the problem, and maybe fix it. But I’m excited about tools that allow you to have a very large data set, tools that draw your attention quickly and efficiently to the subset of data where, say, the labels are noisy. Or to quickly bring your attention to the one class among 100 classes where it would benefit you to collect more data. Collecting more data often helps, but if you try to collect more data for everything, that can be a very expensive activity.

For example, I once figured out that a speech-recognition system was performing poorly when there was car noise in the background. Knowing that allowed me to collect more data with car noise in the background, rather than trying to collect more data for everything, which would have been expensive and slow.
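The kind of error analysis described here can be sketched as a per-slice accuracy breakdown. This is an illustrative sketch only: it assumes every evaluation example carries a tag for its recording condition, and the slice names and results below are invented.

```python
from collections import defaultdict


def accuracy_by_slice(records):
    """Compute accuracy per slice from (slice_name, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for slice_name, is_correct in records:
        totals[slice_name] += 1
        correct[slice_name] += int(is_correct)
    return {name: correct[name] / totals[name] for name in totals}


# Hypothetical evaluation results, tagged by background condition.
records = [
    ("quiet", True), ("quiet", True), ("quiet", False),
    ("car_noise", False), ("car_noise", False), ("car_noise", True),
    ("cafe", True), ("cafe", True),
]
scores = accuracy_by_slice(records)
worst = min(scores, key=scores.get)  # slice where more data would help most
print(worst, round(scores[worst], 2))
```

The lowest-scoring slice is the candidate for targeted data collection, rather than collecting more data for every condition.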

What about using synthetic data, is that often a good solution?

Ng: I think synthetic data is an important tool in the tool chest of data-centric AI. At the NeurIPS workshop, Anima Anandkumar gave a great talk that touched on synthetic data. I think there are important uses of synthetic data that go beyond just being a preprocessing step for increasing the data set for a learning algorithm. I’d love to see more tools to let developers use synthetic data generation as part of the closed loop of iterative machine learning development.

Do you mean that synthetic data would allow you to try the model on more data sets?

Ng: Not really. Here’s an example. Let’s say you’re trying to detect defects in a smartphone casing. There are many different types of defects on smartphones. It could be a scratch, a dent, pit marks, discoloration of the material, other types of blemishes. If you train the model and then find through error analysis that it’s doing well overall but it’s performing poorly on pit marks, then synthetic data generation allows you to address the problem in a more targeted way. You could generate more data just for the pit-mark category.

Synthetic data generation is a very powerful tool, but there are many simpler tools that I will often try first. Such as data augmentation, improving labeling consistency, or just asking a factory to collect more data.

To make these issues more concrete, can you walk me through an example? When a company approaches Landing AI and says it has a problem with visual inspection, how do you onboard them and work toward deployment?

Ng: When a customer approaches us we usually have a conversation about their inspection problem and look at a few images to verify that the problem is feasible with computer vision. Assuming it is, we ask them to upload the data to the LandingLens platform. We often advise them on the methodology of data-centric AI and help them label the data.

One of the foci of Landing AI is to empower manufacturing companies to do the machine learning work themselves. A lot of our work is making sure the software is fast and easy to use. Through the iterative process of machine learning development, we advise customers on things like how to train models on the platform, when and how to improve the labeling of data so the performance of the model improves. Our training and software supports them all the way through deploying the trained model to an edge device in the factory.

How do you deal with changing needs? If products change or lighting conditions change in the factory, can the model keep up?

Ng: It varies by manufacturer. There is data drift in many contexts. But there are some manufacturers that have been running the same manufacturing line for 20 years now with few changes, so they don’t expect changes in the next five years. Those stable environments make things easier. For other manufacturers, we provide tools to flag when there’s a significant data-drift issue. I find it really important to empower manufacturing customers to correct data, retrain, and update the model. Because if something changes and it’s 3 a.m. in the United States, I want them to be able to adapt their learning algorithm right away to maintain operations.
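One simple way to flag drift, sketched below, is to compare a summary statistic of recent images against a reference window. This is an assumption-laden illustration, not how LandingLens works: the statistic (mean image brightness), the function name `drift_flag`, the threshold, and the numbers are all hypothetical.

```python
from statistics import mean, stdev


def drift_flag(reference, recent, threshold=3.0):
    """Flag drift when the recent mean sits far from the reference mean.

    reference, recent: lists of a per-image statistic, such as mean brightness.
    threshold: number of standard errors that counts as significant.
    """
    ref_mean = mean(reference)
    ref_std = stdev(reference)
    if ref_std == 0:
        return mean(recent) != ref_mean
    # Standard error of the recent window's mean under the reference spread.
    standard_error = ref_std / len(recent) ** 0.5
    z = abs(mean(recent) - ref_mean) / standard_error
    return z > threshold


# Hypothetical brightness readings from a factory camera.
reference = [100, 102, 98, 101, 99, 100, 103, 97]
recent_ok = [101, 99, 100, 102]
recent_dark = [80, 82, 79, 81]  # e.g. a lighting change on the line

print(drift_flag(reference, recent_ok))
print(drift_flag(reference, recent_dark))
```

A flag like this would only prompt a person to inspect recent data, correct labels, and retrain. It does not fix the model by itself.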

In the consumer software Internet, we could train a handful of machine-learning models to serve a billion users. In manufacturing, you might have 10,000 manufacturers building 10,000 custom AI models. The challenge is, how do you do that without Landing AI having to hire 10,000 machine learning specialists?

So you’re saying that to make it scale, you have to empower customers to do a lot of the training and other work.

Ng: Yes, exactly! This is an industry-wide problem in AI, not just in manufacturing. Look at health care. Every hospital has its own slightly different format for electronic health records. How can every hospital train its own custom AI model? Expecting every hospital’s IT personnel to invent new neural-network architectures is unrealistic. The only way out of this dilemma is to build tools that empower the customers to build their own models by giving them tools to engineer the data and express their domain knowledge. That’s what Landing AI is executing in computer vision, and the field of AI needs other teams to execute this in other domains.

Is there anything else you think it’s important for people to understand about the work you’re doing or the data-centric AI movement?

Ng: In the last decade, the biggest shift in AI was a shift to deep learning. I think it’s quite possible that in this decade the biggest shift will be to data-centric AI. With the maturity of today’s neural network architectures, I think for a lot of the practical applications the bottleneck will be whether we can efficiently get the data we need to develop systems that work well. The data-centric AI movement has tremendous energy and momentum across the whole community. I hope more researchers and developers will jump in and work on it.

This article appears in the April 2022 print issue as “Andrew Ng, AI Minimalist.”

Gen Z is obsessing over 2016 songs, fashion and more. Why???

The only problem is that the experience of living through 2016 was far different from what Gen Z in particular remembers.

Daysia Tolentino is the journalist behind the newsletter Yap Year, where she’s been chronicling online affinity for the 2010s for almost a year now. Gen Z tends to blend all of the years together, which leads them to hype up the fun cultural parts and ignore the international and political turmoil that marked 2016. Tolentino says 2016 nostalgia might actually be a sign that young people are ready to break out of these cycles of nostalgia and reach for something new.

Tolentino spoke with Today, Explained host Astead Herndon about how 2016 has stuck with us and what our nostalgia for that time might reveal.

There’s much more in the full podcast, so listen to Today, Explained wherever you get your podcasts, including Apple Podcasts, Pandora, and Spotify.

Where did this 2016 trend start?

It’s been building up since last year, especially on TikTok. People have been slowly bringing back 2016 trends, whether that’s the mannequin challenge with the Black Beatles song, or pink wall aesthetics, and these really warm, hazy Instagram filters. When we entered the New Year in 2026, there were a lot of TikToks saying that 2026 was going to be like 2016.

I was curious about that. What does that even mean? I don’t actually think people know what that means at all. Then, a couple weeks ago, you see a lot of people on Instagram, especially peak Instagram influencers, posting themselves at their peak in 2016, which inspired everybody to post their own 2016 photos.

In your newsletter, you’ve tried to define what the 2016 mood board is. Can you explain that for me? When we’re thinking 2016 vibes, what do we mean?

When I look at 2016, I see makeup gurus on YouTube blow up at this time, and the makeup at the time is extremely maximalist. It’s very full glam, full beat, very matte, very colorful, some neon wigs at this time. You have the King Kylie of it all.

2016 was such a pivotal moment in internet culture. I think that is when we started to really enter this influencer era in full force. Prior to that, we had creators, but we didn’t have as much of this monetization infrastructure to make everything online an ad essentially. People were posting whatever they wanted to post.

It was the year that social media companies started pushing your news feed toward an engagement-based algorithm versus a friends-only chronological feed. In 2016, you see this flip toward influencer culture and this more put together easily consumable image and vibe to everything, and that trickles down into the culture of Instagram, so then people start posting as if they’re influencers themselves.

Even if you are a teenager like me at the time, if I look at my own Instagram, I could see my own posts mimicking influencers, becoming more polished, and becoming more aesthetic. I think people have missed that a lot, although I think people romanticize 2016 and forget a lot about what that year is actually like.

What do you think this says about 2026?

The entire 2020s so far, people on TikTok, especially young people, have been romanticizing the 2010s. I think, in general, people associate the 2010s with a sense of optimism, especially post-2012. Young people have grown up in such a tumultuous time with the pandemic, the economy, with politics and the world in general. It feels really hopeless at times, so people are looking back to that time that literally looked so sunny, and positive, and wonderful, and low stakes. I think it’s really easy for people to become really fixated on this time period, even if that wasn’t the actual reality, right?

Why do you think people are only cherry picking the good parts of 2016?

It was one of the last years in which we engaged in a monoculture together, and we had shared pieces of culture that we could remember. We could all remember “Closer” being on the radio like 24/7 at the time. I think a lot of people romanticized 2016, because it is the last time they remember unification in any way. It feels like the last kind of moment of normalcy before this decade of turmoil.

As much as there was so much change and disruption happening in 2016, whether that’s Donald Trump, whether that’s Brexit, or even the rise of Bernie Sanders, there were so many people who were so excited about that. I think there was a feeling of disruption that could be mistaken for general optimism. Then, this hope for something different to come that began in 2016 did not materialize in maybe the ways that people wanted them to. But I think a lot of people can remember that feeling and the shared culture that we all had that nobody really is able to share in these days.

I’m 32. I can’t imagine me 10 years ago thinking that the best years were behind me and not in front of me. Am I just being old, or does some of this feel like a generation that’s been raised on remakes and sequels looking back instead of looking forward?

Yeah, that is something I’m concerned about frequently. I’m 27; I shouldn’t be like, “Being 17 was the best years of my life.” It is too obsessed with looking back, because you are unable to imagine a better future forward. That is always really concerning. That is always an indication that there’s a loss of hope.

But, I think that this year, it seems like the energy from people online is about creating something new, and introducing friction, and moving forward from this constant need for escapism that the internet has provided us for the past 10 years. I have seen that rise alongside this nostalgia that has been so widely publicized and widely talked about.

I think people are ready for new things. I think people are ready to move on from constant escapism that the internet and social media brings, including constant nostalgia.

Videos: Autonomous Warehouse Robots, Drone Delivery

Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We also post a weekly calendar of upcoming robotics events for the next few months. Please send us your events for inclusion.

ICRA 2026: 1–5 June 2026, VIENNA

Enjoy today’s videos!

To train the next generation of autonomous robots, scientists at Toyota Research Institute are working with Toyota Manufacturing to deploy them on the factory floor.

Thanks, Erin!

Okay but like you didn’t show the really cool bit…?

[ Zipline ]

We’re introducing KinetIQ, an AI framework developed by Humanoid, for end-to-end orchestration of humanoid robot fleets. KinetIQ coordinates wheeled and bipedal robots within a single system, managing both fleet-level operations and individual robot behaviour across multiple environments. The framework operates across four cognitive layers, from task allocation and workflow optimization to VLA-based task execution and reinforcement-learning-trained whole-body control, and is shown here running across our wheeled industrial robots and bipedal R&D platform.

[ Humanoid ]

What if a robot gets damaged during operation? Can it still perform its mission without immediate repair? Inspired by self-embodied resilience strategies of stick insects, we developed a decentralized adaptive resilient neural control system (DARCON). This system allows legged robots to autonomously adapt to limb loss, ensuring mission success despite mechanical failure. This innovative approach leads to a future of truly resilient, self-recovering robotics.

[ VISTEC ]

Thanks, Poramate!

This animation shows Perseverance’s point of view during drive of 807 feet (246 meters) along the rim of Jezero Crater on Dec. 10, 2025, the 1,709th Martian day, or sol, of the mission. Captured over two hours and 35 minutes, 53 Navigation Camera (Navcam) image pairs were combined with rover data on orientation, wheel speed, and steering angle, as well as data from Perseverance’s Inertial Measurement Unit, and placed into a 3D virtual environment. The result is this reconstruction with virtual frames inserted about every 4 inches (0.1 meters) of drive progress.

[ Unitree ]

Representing and understanding 3D environments in a structured manner is crucial for autonomous agents to navigate and reason about their surroundings. In this work, we propose an enhanced hierarchical 3D scene graph that integrates open-vocabulary features across multiple abstraction levels and supports object-relational reasoning. Our approach leverages a Vision Language Model (VLM) to infer semantic relationships. Notably, we introduce a task reasoning module that combines Large Language Models (LLM) and a VLM to interpret the scene graph’s semantic and relational information, enabling agents to reason about tasks and interact with their environment more intelligently. We validate our method by deploying it on a quadruped robot in multiple environments and tasks, highlighting its ability to reason about them.

[ Norwegian University of Science & Technology, Autonomous Robots Lab ]

Thanks, Kostas!

We present HoLoArm, a quadrotor with compliant arms inspired by the nodus structure of dragonfly wings. This design provides natural flexibility and resilience while preserving flight stability, which is further reinforced by the integration of a Reinforcement Learning (RL) control policy that enhances both recovery and hovering performance.

[ HO Lab via IEEE Robotics and Automation Letters ]

In this work, we present SkyDreamer, to the best of our knowledge, the first end-to-end vision-based autonomous drone racing policy that maps directly from pixel-level representations to motor commands.

[ MAVLab ]

This video showcases AI WORKER equipped with five-finger hands performing dexterous object manipulation across diverse environments. Through teleoperation, the robot demonstrates precise, human-like hand control in a variety of manipulation tasks.

[ Robotis ]

Autonomous following, 45° slope climbing, and reliable payload transport in extreme winter conditions — built to support operations where environments push the limits.

[ DEEP Robotics ]

Living architectures, from plants to beehives, adapt continuously to their environments through self-organization. In this work, we introduce the concept of architectural swarms: systems that integrate swarm robotics into modular architectural façades. The Swarm Garden exemplifies how architectural swarms can transform the built environment, enabling “living-like” architecture for functional and creative applications.

[ SSR Lab via Science Robotics ]

Here are a couple of IROS 2025 keynotes, featuring Bram Vanderborght and Kyu Jin Cho.

[ IROS 2025 ]

Daily Deal: The 2026 Canva Bundle

from the good-deals-on-cool-stuff dept

The 2026 Canva Bundle has six courses to help you learn about graphic design. From logo design to business cards to branding to bulk content creation, these courses have you covered. It’s on sale for $20.

Note: The Techdirt Deals Store is powered and curated by StackCommerce. A portion of all sales from Techdirt Deals helps support Techdirt. The products featured do not reflect endorsements by our editorial team.

Filed Under: daily deal

Tech

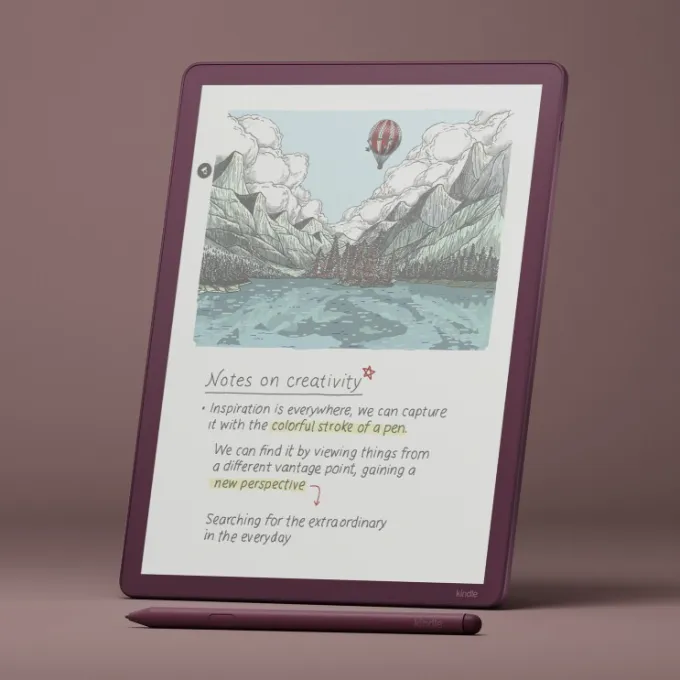

The Kindle Scribe Colorsoft is a pricey but pretty e-ink color tablet with AI features

If you primarily want a tablet device to mark up, highlight, and annotate your e-books and documents, and perhaps sometimes scribble some notes, Amazon’s new Kindle Scribe Colorsoft could be worth the hefty investment. For everyone else, it’s probably going to be hard to justify the cost of the 11-inch, $630+ e-ink tablet with a writeable color display.

However, if you were already leaning toward the 11-inch $549.99 Kindle Scribe — which also has a paper-like display but no color — you may as well throw in the extra cash at that point and get the Colorsoft version, which starts at $629.99.

At these price points, both the Scribe and Scribe Colorsoft are what we’d dub unnecessary luxuries for most, especially compared with the more affordable traditional Kindle ($110) or Kindle Paperwhite ($160).

Announced in December, the Fig color version just began shipping on January 28, 2026, and is available for $679.99 with 64GB.

Clearly, Amazon hopes to carve out a niche in the tablet market with these upgraded Kindle devices, which compete more with e-ink tablets like reMarkable than with other Kindles. But high-end e-ink readers with pens aren’t going to deliver Amazon a large audience. Meanwhile, nearly everyone can potentially justify the cost of an iPad because of its numerous capabilities, including streaming video, drawing, writing, using productivity tools, and the thousands of supported native apps and games.

The Scribe Colorsoft, meanwhile, is designed to cater to a very specific type of e-book reader or worker. This type of device could be a good fit for students and researchers, as well as anyone else who regularly needs to mark up files or documents.

Someone particularly interested in making to-do lists or keeping a personal journal might also appreciate the device, but it would have to get daily use to justify this price.

The device is easy enough to use, with a Home screen design similar to other Kindles, offering quick access to your notes and library, and even suggestions of books you can write in, like Sudoku or crossword puzzle books or drawing guides. Your Library titles and book recommendations pop in color, which makes it easier to find a book with a quick scan.

Spec-wise, Amazon says this newer 2025 model is 40% faster when turning pages or writing. We did find the tablet responsive here, as page turns felt snappy and writing flowed easily.

Despite its larger size, the device is thin and light, at 5.4 mm (0.21 inches) and 400 g (0.88 pounds), so it won’t weigh down your bag the way an iPad or other tablet would (the iPad mini, with an 8.3-inch screen, weighs slightly less). You could easily stand to carry the Kindle Scribe in your purse or tote, assuming you sport a bag that can fit an 11-inch screen. Compared with the original Colorsoft, we like that the Scribe Colorsoft’s bezel is the same size around the screen.

The Kindle Scribe Colorsoft features a glare-free, oxide-based e-ink display with a textured surface that makes it feel a lot like writing on paper. This helps with the transition to a digital device for those used to writing notes by hand. It also saves on battery life — the device can go up to 8 weeks between charges.

Helpfully, the display automatically adapts its brightness to your current lighting conditions, and you can opt to adjust the screen for more warmth when reading at night. But although it is a touchscreen, it’s less responsive than an LCD or OLED touchscreen, like those on iPad devices. That means when you perform a gesture, like pinching to resize the font, there’s a bit of a lag.

Like any Kindle, you can read e-books or PDFs on the Kindle Scribe Colorsoft tablet. You can also import Word documents and other files from Google Drive and Microsoft OneDrive directly to your device, or use the Send to Kindle option. (Supported file types include PDF, DOC/DOCX, TXT, RTF, HTM, HTML, PNG, GIF, JPG/JPEG, BMP, and EPUB.) Your Notebooks on the device can be exported to Microsoft OneNote, as well.

The included pen comes with some trade-offs. Unlike the Apple Pencil, the Kindle’s Premium Pen doesn’t require charging, which is a perk. It has also been designed to mimic the feel of writing on paper, and it glides fairly well across the screen. Without a flat side to charge, the rounded pen doesn’t have the same feel and grip as the Apple Pencil. It’s smoother, so it could slip in your hand.

Amazon’s design also requires you to replace the pen tips from time to time, depending on your use, as they can wear down. It’s not terribly expensive to do so (a 10-pack is around $17), but it’s another thing to keep up with and manage.

There are 10 different pen colors and five highlight colors included, so your notes and annotations can be fairly colorful.

When writing, you can choose between a pen, a fountain pen, a marker, or a pencil with different stroke widths, depending on your preferences. You can set your favorite pen tool as a shortcut, which is enabled with a press and hold on the pen’s side button. (By default, it’s set to highlight.) If you grip your pen tightly and accidentally trigger this button, you’ll be glad to know you can shut this feature off.

The writing experience itself feels natural. And while the e-ink display means the colors are somewhat muted, which not everyone likes, it works well enough for its purpose. An e-ink tablet isn’t really the best for making digital art, despite its pens and new shader tool, but it is good for writing, taking notes, and highlighting.

From the Kindle’s Home screen, you can either jump directly into writing something down through the Quick Notes feature, or you can get more organized by creating a Notebook from the Workspace tab.

The Notebook offers a wide variety of notepad templates, allowing you to choose between blank, narrow, medium, or wide-ruled documents. There are templates for meeting notes, storyboards, habit trackers, monthly planners, music sheets, graph paper, checklists, daily planners, dotted sheets, and much more. (New templates with this device include Meeting Notes, Cornell Notes, Legal Pad, and College Rule options.)

It’s fun that you can erase things just by flipping the pen over to use the soft-tipped eraser, as you would with a No. 2 pencil. Of course, a precision erasing tool is available from the toolbar with different widths, if needed. Thanks to the e-ink screen, you can sometimes still see a faint ghost of your drawing or writing on the screen after erasing (which may drive the more particular types crazy), but this fades after a bit.

There’s a Lasso tool to circle things and move them around, copy or paste, or resize, but this probably won’t be used as much by more casual notetakers.

There are some other handy features for those who do a lot of annotating, too.

For instance, when you’re writing in a Word document or book, a feature called Active Canvas creates space for your notes. As you write directly in the book on top of the text, the sentence will move and wrap around your note. Even if you adjust the font size of what you’re reading, the note stays anchored to the text it originally referenced. I prefer this to writing directly in e-books, as things stay more organized, but others disagree.

In documents where margins expand, you can tap the expandable margin icon at the top of the left or right margin to take your notes in the margin, instead of on the page itself.

A Kindle with AI (of course)

The new Kindle also includes a number of AI tools and features.

The device will neaten up your scribbles and automatically straighten your highlighting and underlining. A couple of times, the highlighting action caused our review unit to freeze, but it recovered after returning to the Home screen with a press of the side button.

Meanwhile, a new AI feature (look for the sparkle icon at the top left of the screen) lets you both summarize text and refine your handwriting. The latter, oddly, doesn’t let you switch to a typed font but will let you pick between a small handful of handwritten fonts (Cadia, Florio, Sunroom, and Notewright) via the Customize button.

The AI tool was not perfect. It could decipher some terrible scrawls, but it did get stumped when there was another scribble on the page alongside the text. Still, it’s a nice option to have if you can’t write well after years of typing, but like the feel of handwriting things and the more analog vibe.

The AI search feature can also look across your notebooks to find notes or make connections between them. To search, you either tap the on-screen keyboard or toggle the option to handwrite your search query, which is converted to text. You can interact with the search results (the AI-powered insights) by way of the Ask Notebooks AI feature, which lets you query against your notes.

Soon, Amazon will add other AI features, too, including an “Ask This Book” feature that lets you highlight a passage and then get spoiler-free answers to a question you have — like a character’s motive, scene significance, or other plot detail. Another feature, “Story So Far,” will help you catch up on the book you’re reading if you’ve taken a break, but again without any spoilers.

The Kindle Scribe Colorsoft comes in Graphite (Black) with either 32GB or 64GB of storage for $629.99 or $679.99, respectively. The Fig version is only available at $679.99 with 64GB of storage. Cases for the Scribe Colorsoft are an additional $139.99.

Tech

The Full Orwell: DOJ Weaponization Working Group Finally Gets Off The Ground

from the too-stupid-by-half dept

I have to admit: the first one-and-a-half paragraphs of this CNN report had me thinking the Trump administration was shedding another pretense and just embracing its inherent shittiness.

Justice Department officials are expected to meet Monday to discuss how to reenergize probes that are considered a top priority for President Donald Trump — reviewing the actions of officials who investigated him, according to a source familiar with the plan.

Almost immediately after Pam Bondi stepped into her role as attorney general last year, she established a “Weaponization Working Group” …

We all know the DOJ is fully weaponized. It’s little more than a fight promoter for Trump’s grudge matches. The DOJ continues to bleed talent as prosecutors and investigators flee the kudzu-esque corruption springing up everywhere in DC.

But naming something exactly what it is — the weaponization of the DOJ to punish Trump’s enemies — wasn’t something I ever expected to see.

I didn’t see it, which fulfills my expectations, I guess. That’s because it isn’t what it says on the tin, even though it’s exactly the thing it says it isn’t. 1984 is apparently the blueprint. It’s called the “Weaponization Working Group,” but it’s supposedly the opposite: a de-weaponization working group. Here’s the second half of the paragraph we ellipsised out of earlier:

…[t]o review law enforcement actions taken under the Biden administration for any examples of what she described as “politicized justice.”

The Ministry of Weaponization has always de-weaponized ministries. Or whatever. The memo that started this whole thing off — delivered the same day Trump returned to office — said it even more clearly:

ENDING THE WEAPONIZATION OF THE FEDERAL GOVERNMENT

Administration officials are idiots, but they’re not so stupid they don’t know what they’re doing. They don’t actually want to end the weaponization. They just want to make sure all the weapons are pointing in one direction.

Trading in vindication hasn’t exactly worked well so far. Trump’s handpicked replacements for prosecutors who have either quit or been fired are a considerable downgrade from the previous office-holders. They have had their cases tossed and their careers as federal prosecutors come to an end because (1) Trump doesn’t care what the rules for political appointments are and (2) he’s pretty sure he can find other stooges to shove into the DOJ revolving door.

The lack of forward progress likely has Pam Bondi feeling more heat than she’s used to. So the deliberately misnamed working group is going to actually start grouping and working.

The Weaponization Working Group is now expected to start meeting daily with the goal of producing results in the next two months, according to the person familiar with the plan.

Nothing good will come from this. Given the haphazard nature of the DOJ’s vindictive prosecution efforts, there’s still a chance nothing completely evil will come from this either. It’s been on the back burner for a year. Pam Bondi can’t keep this going on her own. And it’s hell trying to keep people focused on rubbing Don’s tummy when employee attrition is what the DOJ is best known for these days.

Filed Under: doj, donald trump, pam bondi, trump administration, vindictive prosecution, weaponization

Tech

US bans Chinese software from connected cars, triggering a major industry overhaul

The rule, issued by the Commerce Department’s Bureau of Industry and Security, bans code written in China or by Chinese-owned firms from vehicles that connect to the cloud. By 2029, even their connectivity hardware will be covered under the same restrictions.


Tech

Valve Delays Steam Frame and Steam Machine Pricing as Memory Costs Rise

Valve revealed its lineup of upcoming hardware in November, including a home PC-gaming console called the Steam Machine and the Steam Frame, a VR headset. At the time of the reveal, the company expected to release its hardware in “early 2026,” but the current state of memory and storage prices appears to have changed those plans.

Valve says its goal to release the Steam Frame and Steam Machine in the first half of 2026 has not changed, but it’s still deliberating on final shipping dates and pricing, according to a post from the company on Wednesday. While the company didn’t provide specifics, it said it was mindful of the current state of the hardware and storage markets. All kinds of computer components have rocketed in price due to massive investments in AI infrastructure.

“When we announced these products in November, we planned on being able to share specific pricing and launch dates by now. But the memory and storage shortages you’ve likely heard about across the industry have rapidly increased since then,” Valve said. “The limited availability and growing prices of these critical components mean we must revisit our exact shipping schedule and pricing (especially around Steam Machine and Steam Frame).”

Valve says it will provide more updates in the future about its hardware lineup.

What are the Steam Frame and Steam Machine?

The Steam Frame is a standalone VR headset that’s all about gaming. At the hardware reveal in November, CNET’s Scott Stein described it as a Steam Deck for your face. It runs on SteamOS on an ARM-based chip, so games can be loaded onto the headset and played directly from it, allowing gamers to play games on the go. There’s also the option to wirelessly stream games from a PC.

The Steam Machine is Valve’s home console. It’s a cube-shaped microcomputer intended to be connected to a TV.

When will the Steam Frame and Steam Machine come out?

Valve didn’t provide a specific launch date for either. The initial expectation after the November reveal was that the Steam Frame and Steam Machine would arrive in March. Valve’s statement about releasing its hardware in the first half of 2026 suggests both will come out in June at the latest.

How much will the Steam Frame and Steam Machine cost?

After the reveal, there was much speculation on their possible prices. For the Steam Frame, the expectation was that it would start at $600. The Steam Machine was expected to launch at a price closer to $700. Those estimates could easily increase by $100 or more due to the current state of pricing for memory and storage.

Tech

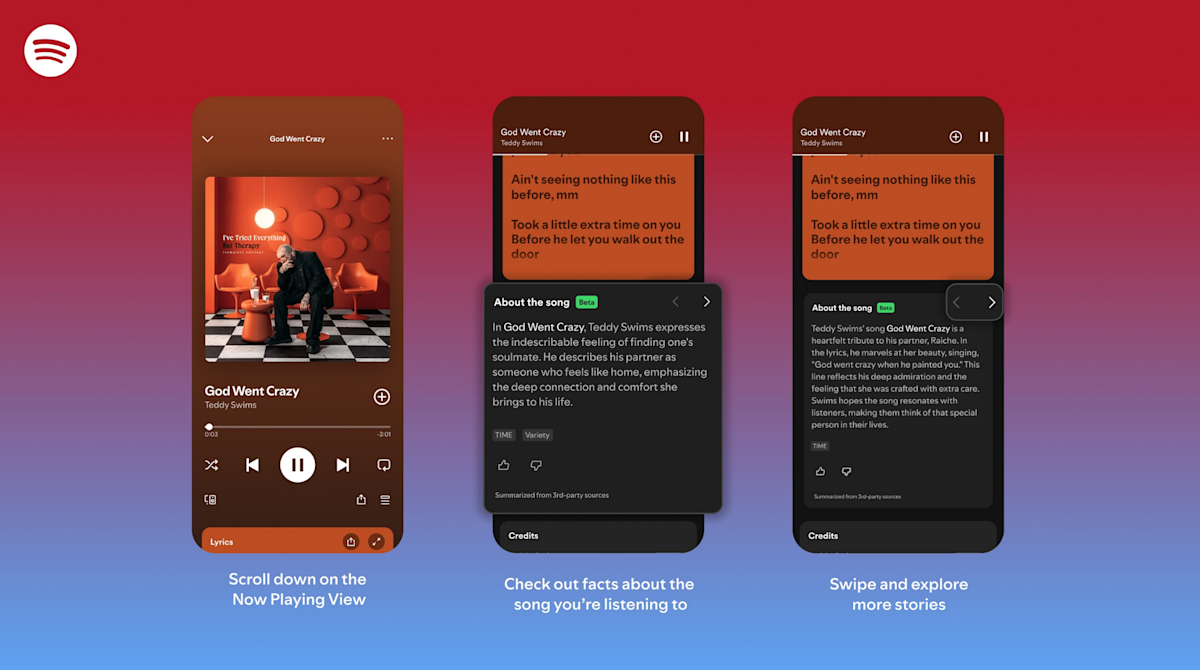

Spotify now lets you swipe on songs to learn more about them

Spotify is rolling out a feature that lets fans learn a bit more about their favorite tunes. This “brings stories and context” into the listening experience, sort of like that old VH1 show Pop Up Video.

How does it work? The Now Playing View houses short, swipeable story cards that “explore the meaning” behind the music. This information is sourced from third parties and the company promises “interesting details and behind-the-scenes moments.” All you have to do is scroll down until you see the card and then swipe.

This is rolling out right now to Premium users on both iOS and Android, but it’s not everywhere just yet. The beta tool is currently available in the US, UK, Canada, Ireland, New Zealand and Australia.

Spotify has been busy lately, and this is just the latest in a run of new features.

Tech

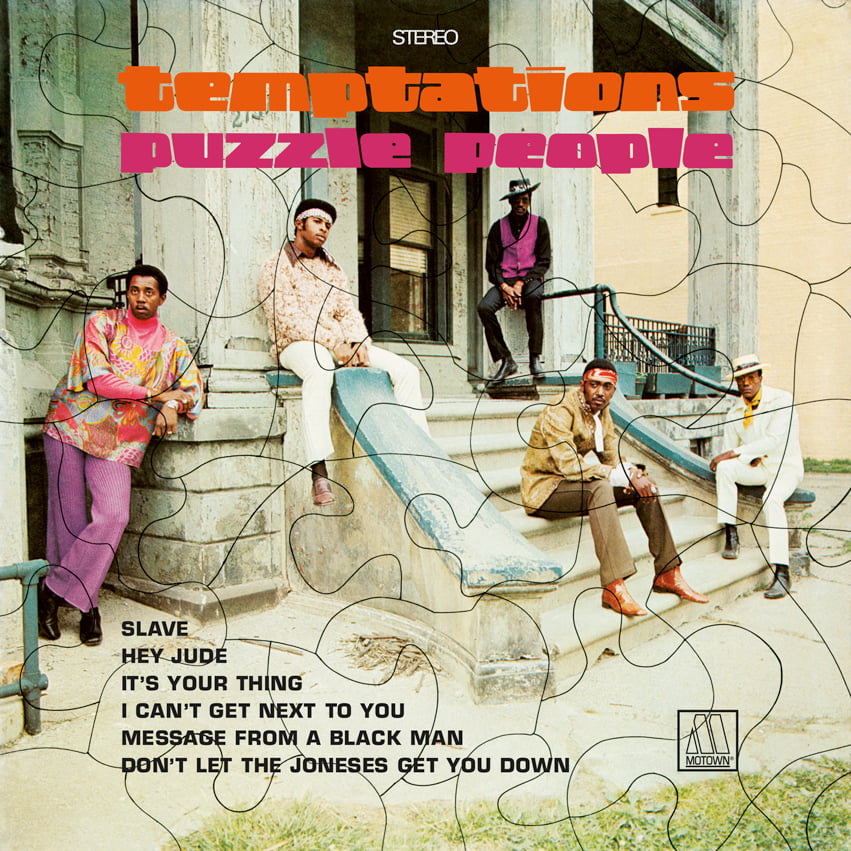

Review: The Temptations’ Psychedelic Motown Era Revisited in New Elemental Reissues

Elemental Music’s affordably priced reissue series brings classic 1960s and 1970s Motown titles back to record stores worldwide, making these albums accessible to a new generation of listeners. For those seeking clean, newly pressed, and largely faithful recreations of these vintage releases, complete with pristine jackets and vinyl, rather than chasing original pressings that are increasingly scarce in comparable condition, these reissues fill a meaningful gap in today’s collector and listener market.

Elemental’s reissues were sourced from 1980s-era 16-bit/44.1 kHz digital masters, which many Motown enthusiasts and mastering engineers regard as among the best-sounding transfers available for these recordings, as numerous original tapes have been lost or damaged over time.

Each title in the new Motown reissue series is packaged in a plastic-lined, audiophile-grade white inner sleeve and includes a faithful recreation of a period-appropriate Motown company sleeve, complete with catalog imagery highlighting many of the label’s best-known releases from the era. In my listening, the pressings have generally been quiet, well-centered, and free of obvious manufacturing defects.

The Temptations, Puzzle People

1969’s Puzzle People by The Temptations works best as a complete album listening experience. I was surprised by how much I enjoyed the group’s take on contemporary pop material such as “Little Green Apples,” but it’s the album-opening No. 1 hit “I Can’t Get Next to You” that remains the main attraction—it still hits hard. More topical tracks like “Don’t Let the Joneses Get You Down” and “Message From a Black Man” land with real weight and conviction. Backed by the legendary Funk Brothers, Puzzle People also serves as a clear bridge to the more expansive Psychedelic Shack that followed the next year.

Where to buy: $29.98 at Amazon

The Temptations, Psychedelic Shack

A harder-rocking album, this again finds The Temptations in psychedelic soul mode, driven by producer/composer Norman Whitfield and backed by The Funk Brothers. Psychedelic Shack is a classic of the period, delivering strong messages for its era, such as “You Make Your Own Heaven and Hell Right Here on Earth,” some of which feel remarkably timely and prescient right now.

A near-mint original pressing of Psychedelic Shack on Gordy Records typically sells for $50–$60 today, so access to a clean, newly pressed copy at a lower price has obvious appeal—especially for newer listeners who prefer buying brand new pressings. I can also attest that genuinely clean copies of popular soul titles like this are far from easy to track down, even when you’re willing to spend the money.

Psychedelic Shack also notably contains the original version of the protest song “War,” which was re-recorded almost simultaneously by then-new Motown artist Edwin Starr (a much heavier production that became a massive hit). There is a fascinating backstory (easily found on the internet) on why The Temptations’ version was not released as a single, but it’s ultimately a good thing, as this version almost feels like a demo for Starr’s bigger hit.

Psychedelic Shack is one of the better Temptations albums start to finish so I have no problem recommending this for those who are new to their music. This new reissue sounds a bit thinner and flatter than my original copy, ultimately losing some dynamic punch.

Where to buy: $34.65 at Amazon

Mark Smotroff is a deep music enthusiast / collector who has also worked in entertainment-oriented marketing communications for decades, supporting the likes of DTS, Sega and many others. He reviews vinyl for Analog Planet and has written for Audiophile Review, Sound+Vision, Mix, EQ, etc. You can learn more about him at LinkedIn.


Tech

iPhone 18 Pro Max battery life to increase again — but not by much

A new iPhone 18 Pro Max leak claims that it will see the smallest year-over-year battery capacity increase in years, although the final usage figures depend more on the power efficiency of the A20 processor.

The iPhone 18 Pro Max should see an improvement in battery life

Recent rumors have claimed that the expected iPhone Fold will have the largest-capacity battery the iPhone has ever had. But dubious leaks specifying a capacity figure claim it will be a 5,000mAh battery, and now the iPhone 18 Pro Max will reportedly beat it.

That’s according to leaker Digital Chat Station on Chinese social media site Weibo. He or she states that there will again be two models of the highest-end iPhone, with different battery capacities:

Rumor Score: 🤔 Possible


-

Video4 days ago

Video4 days agoWhen Money Enters #motivation #mindset #selfimprovement

-

Tech2 days ago

Tech2 days agoWikipedia volunteers spent years cataloging AI tells. Now there’s a plugin to avoid them.

-

Fashion7 days ago

Fashion7 days agoWeekend Open Thread – Corporette.com

-

Politics4 days ago

Politics4 days agoSky News Presenter Criticises Lord Mandelson As Greedy And Duplicitous

-

Crypto World6 days ago

Crypto World6 days agoU.S. government enters partial shutdown, here’s how it impacts bitcoin and ether

-

Sports6 days ago

Sports6 days agoSinner battles Australian Open heat to enter last 16, injured Osaka pulls out

-

Crypto World6 days ago

Crypto World6 days agoBitcoin Drops Below $80K, But New Buyers are Entering the Market

-

Crypto World4 days ago

Crypto World4 days agoMarket Analysis: GBP/USD Retreats From Highs As EUR/GBP Enters Holding Pattern

-

Sports8 hours ago

New and Huge Defender Enter Vikings’ Mock Draft Orbit

-

NewsBeat4 hours ago

NewsBeat4 hours agoSavannah Guthrie’s mother’s blood was found on porch of home, police confirm as search enters sixth day: Live

-

Business1 day ago

Business1 day agoQuiz enters administration for third time

-

Crypto World7 days ago

Crypto World7 days agoKuCoin CEO on MiCA, Europe entering new era of compliance

-

Business7 days ago

Entergy declares quarterly dividend of $0.64 per share

-

NewsBeat3 days ago

NewsBeat3 days agoUS-brokered Russia-Ukraine talks are resuming this week

-

Sports4 days ago

Sports4 days agoShannon Birchard enters Canadian curling history with sixth Scotties title

-

NewsBeat4 days ago

NewsBeat4 days agoGAME to close all standalone stores in the UK after it enters administration

-

NewsBeat1 day ago

NewsBeat1 day agoStill time to enter Bolton News’ Best Hairdresser 2026 competition

-

Crypto World3 days ago

Crypto World3 days agoRussia’s Largest Bitcoin Miner BitRiver Enters Bankruptcy Proceedings: Report

-

Crypto World1 day ago

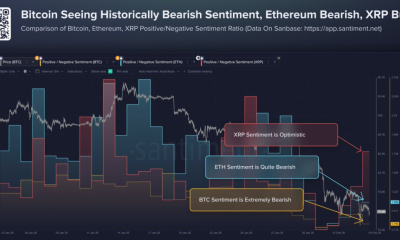

Crypto World1 day agoHere’s Why Bitcoin Analysts Say BTC Market Has Entered “Full Capitulation”

-

Crypto World23 hours ago

Crypto World23 hours agoWhy Bitcoin Analysts Say BTC Has Entered Full Capitulation