Crypto World

The Next Paradigm Shift Beyond Large Language Models

Artificial Intelligence has made extraordinary progress over the last decade, largely driven by the rise of large language models (LLMs). Systems such as GPT-style models have demonstrated remarkable capabilities in natural language understanding and generation. However, leading AI researchers increasingly argue that we are approaching diminishing returns with purely text-based, token-prediction architectures.

One of the most influential voices in this debate is Yann LeCun, Chief AI Scientist at Meta, who has consistently advocated for a new direction in AI research: World Models. These systems aim to move beyond pattern recognition toward a deeper, more grounded understanding of how the world works.

In this article, we explore what world models are, how they differ from large language models, why they matter, and which open-source world model projects are currently shaping the field.

What Are World Models?

At their core, world models are AI systems that learn internal representations of the environment, allowing them to simulate, predict, and reason about future states of the world.

Rather than mapping inputs directly to outputs, a world model builds a latent model of reality—a kind of internal mental simulation. This enables the system to answer questions such as:

- What is likely to happen next?

- What would happen if I take this action?

- Which outcomes are plausible or impossible?

This approach mirrors how humans and animals learn. We do not simply react to stimuli; we form internal models that let us anticipate consequences, plan actions, and avoid costly mistakes.

Yann LeCun views world models as a foundational component of human-level artificial intelligence, particularly for systems that must interact with the physical world.

Why Large Language Models Are Not Enough

Large language models are fundamentally statistical sequence predictors. They excel at identifying patterns in massive text corpora and predicting the next token given context. While this produces fluent and often impressive outputs, it comes with inherent limitations.

Key Limitations of LLMs

Lack of grounded understanding: LLMs are trained primarily on text rather than on physical experience.

Weak causal reasoning: They capture correlations rather than true cause-and-effect relationships.

No internal physics or common-sense model: They cannot reliably reason about space, time, or physical constraints.

Reactive rather than proactive: They respond to prompts but do not plan or act autonomously.

As LeCun has repeatedly argued, predicting words is not the same as understanding the world.

How World Models Differ from Traditional Machine Learning

World models represent a significant departure from both classical supervised learning and modern deep learning pipelines.

Self-Supervised Learning at Scale

World models typically learn in a self-supervised or unsupervised manner. Instead of relying on labelled datasets, they learn by:

- Predicting future states from past observations

- Filling in missing sensory information

- Learning latent representations from raw data such as video, images, or sensor streams

This mirrors biological learning: humans and animals acquire vast amounts of knowledge simply by observing the world, not by receiving explicit labels.
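The sketch below makes "predicting future states from past observations" concrete. It fits a tiny dynamics model whose training targets are simply the next observations in a recorded stream, so no human labels are involved. It is a toy built on stated assumptions. A one-dimensional system x_{t+1} = a·x_t + b·u_t stands in for the world, and a linear least-squares fit stands in for a neural network. The helpers `simulate` and `fit_dynamics` are hypothetical names used only for illustration.

```python
import random
from typing import List, Tuple


def simulate(a: float, b: float, steps: int, seed: int = 0) -> Tuple[List[float], List[float]]:
    """Toy 'world' (hypothetical): x_{t+1} = a * x_t + b * u_t, driven by random actions u_t."""
    rng = random.Random(seed)
    xs, us = [1.0], []
    for _ in range(steps):
        u = rng.uniform(-1.0, 1.0)
        us.append(u)
        xs.append(a * xs[-1] + b * u)
    return xs, us


def fit_dynamics(xs: List[float], us: List[float]) -> Tuple[float, float]:
    """Estimate (a, b) by least squares on (x_t, u_t) -> x_{t+1}.

    The regression targets are the next observations themselves, so the
    supervision signal comes from the data stream (self-supervised).
    """
    prev, nxt = xs[:-1], xs[1:]
    sxx = sum(x * x for x in prev)
    suu = sum(u * u for u in us)
    sxu = sum(x * u for x, u in zip(prev, us))
    sxy = sum(x * y for x, y in zip(prev, nxt))
    suy = sum(u * y for u, y in zip(us, nxt))
    det = sxx * suu - sxu * sxu  # zero only if states and actions are perfectly collinear
    a_hat = (sxy * suu - sxu * suy) / det
    b_hat = (sxx * suy - sxu * sxy) / det
    return a_hat, b_hat


if __name__ == "__main__":
    xs, us = simulate(a=0.9, b=0.5, steps=50)
    a_hat, b_hat = fit_dynamics(xs, us)
    print(f"a_hat={a_hat:.3f}, b_hat={b_hat:.3f}")  # prints a_hat=0.900, b_hat=0.500
```

On noise-free data the fit recovers the true parameters exactly. With noisy sensors it would only approximate them, and practical world models replace the linear map with a neural network trained on video or sensor streams.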

Core Components of a World Model

A practical world model architecture usually consists of three key elements:

1. Perception Module

Encodes raw sensory inputs (e.g. images, video, proprioception) into a compact latent representation.

2. Dynamics Model

Learns how the latent state evolves over time, capturing causality and temporal structure.

3. Planning or Control Module

Uses the learned model to simulate future trajectories and select actions that optimise a goal.

This separation allows the system to think before it acts. Candidate actions can be evaluated in simulation before any of them is executed, which can improve both sample efficiency and safety.
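The three components can be sketched in a few lines. This is a toy illustration built on explicit assumptions, not a reference architecture:

- The perception module just averages redundant sensor channels into a one-dimensional latent state.
- The dynamics model is a fixed linear map that is assumed to have been learned already.
- The planner uses random shooting: it samples candidate action sequences, rolls them out inside the model, and keeps the best first action.

All function names are hypothetical.

```python
import random
from typing import Sequence


def encode(observation: Sequence[float]) -> float:
    """Perception module (toy): compress raw sensor channels into a 1-D latent state."""
    return sum(observation) / len(observation)


def dynamics(z: float, action: float) -> float:
    """Dynamics model (toy, assumed already learned): predict the next latent state."""
    return 0.9 * z + 0.5 * action


def plan(z0: float, goal: float, rng: random.Random,
         horizon: int = 5, candidates: int = 200) -> float:
    """Planning module: random-shooting model-predictive control.

    Each candidate action sequence is rolled out inside the model only and
    scored by its squared distance to `goal`. The first action of the best
    sequence is returned. No real action is taken while planning.
    """
    best_cost, best_first = float("inf"), 0.0
    for _ in range(candidates):
        actions = [rng.uniform(-1.0, 1.0) for _ in range(horizon)]
        z, cost = z0, 0.0
        for a in actions:
            z = dynamics(z, a)
            cost += (z - goal) ** 2
        if cost < best_cost:
            best_cost, best_first = cost, actions[0]
    return best_first


if __name__ == "__main__":
    rng = random.Random(0)
    z = encode([0.0, 0.1, -0.1])  # initial latent state
    for _ in range(10):
        action = plan(z, goal=1.0, rng=rng)
        # Stand-in for acting in the real world and re-encoding the new observation.
        z = dynamics(z, action)
    print(f"latent state after 10 steps: {z:.2f}")
```

Random shooting is the simplest possible planner. Replanning after every step (model-predictive control) lets the agent correct for errors in its own predictions.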

Practical Applications of World Models

World models are particularly valuable in domains where real-world experimentation is expensive, slow, or dangerous.

Robotics

Robots equipped with world models can predict the physical consequences of their actions, for example, whether grasping one object will destabilise others nearby.

Autonomous Vehicles

By simulating multiple future driving scenarios internally, world models enable safer planning under uncertainty.

Game Playing and Simulated Environments

World models allow agents to learn strategies without exhaustive trial-and-error in the real environment.

Industrial Automation

Factories and warehouses benefit from AI systems that can anticipate failures, optimise workflows, and adapt to changing conditions.

In all these cases, the ability to simulate outcomes before acting is a decisive advantage.

Open-Source World Model Projects You Should Know

The field of world models is still emerging, but several open-source initiatives are already making a significant impact.

1. World Models (Ha & Schmidhuber)

One of the earliest and most influential projects, introducing the idea of learning a compressed latent world model using VAEs and RNNs. This work demonstrated that agents could learn effective policies almost entirely inside their own simulated worlds.

2. Dreamer / DreamerV2 / DreamerV3 (DeepMind, open research releases)

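Dreamer agents learn a latent dynamics model and use it to train their behaviour on imagined trajectories rather than on interaction with the real environment. They achieve strong results on continuous control tasks and, in later versions, on Atari games and a wider range of domains.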

3. PlaNet

A model-based reinforcement learning system that plans directly in latent space, reducing sample complexity.
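PlaNet's planner searches over action sequences with the cross-entropy method (CEM), which repeats four steps:

- Sample action sequences from a Gaussian.
- Score each one by rolling it out in the learned latent model.
- Refit the Gaussian to the best-scoring "elite" sequences.
- Return to the first step with the updated Gaussian.

The sketch below shows that loop on a toy one-dimensional model. The `step` and `reward` functions are hypothetical stand-ins for PlaNet's learned networks, and the hyperparameters are illustrative rather than the paper's.

```python
import random
import statistics
from typing import Callable, List


def cem_plan(z0: float,
             step: Callable[[float, float], float],
             reward: Callable[[float], float],
             horizon: int = 8, iterations: int = 10,
             samples: int = 100, elites: int = 10,
             seed: int = 0) -> List[float]:
    """Cross-entropy-method planning in latent space; returns the mean action plan."""
    rng = random.Random(seed)
    mean = [0.0] * horizon
    std = [1.0] * horizon
    for _ in range(iterations):
        scored = []
        for _ in range(samples):
            # Sample a sequence from the current per-step Gaussians, clipped to [-1, 1].
            seq = [max(-1.0, min(1.0, rng.gauss(m, s))) for m, s in zip(mean, std)]
            z, total = z0, 0.0
            for a in seq:  # imagined rollout: the real environment is never touched
                z = step(z, a)
                total += reward(z)
            scored.append((total, seq))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        top = [seq for _, seq in scored[:elites]]
        # Refit the Gaussians to the elite sequences, one time step at a time.
        mean = [statistics.fmean(col) for col in zip(*top)]
        std = [statistics.pstdev(col) + 1e-3 for col in zip(*top)]
    return mean


if __name__ == "__main__":
    plan = cem_plan(0.0,
                    step=lambda z, a: 0.9 * z + 0.5 * a,  # hypothetical latent dynamics
                    reward=lambda z: -(z - 1.0) ** 2)     # hypothetical reward: stay near 1.0
    # Model-predictive control executes only plan[0], then replans at the next step.
    print([round(a, 2) for a in plan])
```

Refitting to elites concentrates the search on promising regions of action space. This is why CEM typically needs far fewer imagined rollouts than pure random shooting for the same plan quality.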

4. MuZero (Partially Open)

While not fully open source, MuZero introduced a powerful concept: learning a dynamics model without explicitly modelling environment rules, combining planning with representation learning.

5. Meta’s JEPA (Joint Embedding Predictive Architectures)

Yann LeCun’s preferred paradigm, JEPA focuses on predicting abstract representations rather than raw pixels, forming a key building block for future world models.
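The contrast between predicting pixels and predicting representations can be written as two simplified training objectives. The notation is illustrative rather than taken from Meta's papers:

- x is the visible context (for example, part of an image or video) and y is the hidden target region.
- f_θ is a context encoder, \bar f is a target encoder, and g_φ is a predictor.
- Dec_ψ is a hypothetical pixel decoder.
- m describes where the target lies.
- sg denotes stop-gradient.

In I-JEPA, the target encoder's weights are an exponential moving average of the context encoder's.

```latex
% Generative objective: reconstruct the target in raw pixel space
\mathcal{L}_{\text{pixel}} = \bigl\lVert \operatorname{Dec}_\psi\!\bigl(f_\theta(x), m\bigr) - y \bigr\rVert_2^2

% JEPA-style objective: predict the target's embedding instead
\mathcal{L}_{\text{JEPA}} = \bigl\lVert g_\phi\!\bigl(f_\theta(x), m\bigr) - \operatorname{sg}\!\bigl(\bar f_{\bar\theta}(y)\bigr) \bigr\rVert_2^2,
\qquad \bar\theta \leftarrow \tau\,\bar\theta + (1-\tau)\,\theta
```

Because the error is measured in embedding space, the model is not forced to reproduce unpredictable low-level detail such as exact textures. This is the motivation usually given for JEPA-style designs.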

These projects collectively signal a shift away from brute-force scaling toward structured, model-based intelligence.

Are We Seeing Diminishing Returns from LLMs?

While LLMs continue to improve, their progress increasingly depends on:

- More data

- Larger models

- Greater computational cost

World models offer an alternative path: learning more efficiently by understanding structure rather than memorising patterns. Many researchers believe the future of AI lies in hybrid systems that combine language models with world models that provide grounding, memory, and planning.

Why World Models May Be the Next Breakthrough

World models address some of the most fundamental weaknesses of current AI systems:

- They enable common-sense reasoning

- They support long-term planning

- They allow safe exploration

- They reduce dependence on labelled data

- They bring AI closer to real-world interaction

For applications such as robotics, autonomous systems, and embodied AI, world models are not optional; they are essential.

Conclusion

World models represent a critical evolution in artificial intelligence, moving beyond language-centric systems toward agents that can truly understand, predict, and interact with the world. As Yann LeCun argues, intelligence is not about generating text, but about building internal models of reality.

With increasing open-source momentum and growing industry interest, world models are likely to play a central role in the next generation of AI systems. Rather than replacing large language models, they may finally give them what they lack most: a grounded understanding of the world they describe.


Extreme FUD Persists on Social Media Despite BTC’s $60K Dip Recovery

Extreme FUD lingers after Bitcoin’s $60,000 rebound, with bearish social sentiment outweighing bullish posts.

Bitcoin (BTC) slipped back below $67,000 on Wednesday, February 11, extending a volatile stretch that began with last week’s drop to $60,000.

Despite that rebound from the lows, social data shows fear remains elevated, with traders split over whether the worst of the sell-off is over.

Social Sentiment Stays Bearish as Volatility Spikes

Data shared by on-chain analytics firm Santiment shows a high ratio of bearish to bullish posts even after Bitcoin recovered from its $60,000 dip. According to the firm, retail traders seem hesitant to buy at current levels, while larger holders are facing less resistance in accumulating during periods of fear.

Santiment added that, historically, rebounds have often followed spikes in fear, though it did not claim this guarantees a bottom.

Meanwhile, short-term price action is still fragile, with market watcher Ash Crypto reporting that Bitcoin’s fall below $67,000 had liquidated roughly $127 million in long positions within four hours.

At the time of writing, market data from CoinGecko showed BTC trading around the $66,700 region, down about 3% in the last 24 hours and nearly 13% on the week. Over the past 30 days, the flagship cryptocurrency has fallen more than 27%, and it remains 47% below its October 2025 all-time high.

The 24-hour range between $66,600 and $69,900 is a reflection of ongoing intraday swings, while weekly price action has spanned from about $62,800 to $76,500, showing just how unstable conditions are.


Volatility metrics support that view, with Binance data cited by Arab Chain analysts showing that Bitcoin’s seven-day annualized volatility has climbed to around 1.51, its highest reading since 2022. However, 30-day and 90-day measures remain lower at 0.81 and 0.56, suggesting recent turbulence has not yet evolved into a sustained high-volatility regime. According to the analysts, the average true range as a percentage sits near 0.075, which historically has been a compressed level that often comes right before a larger directional move.
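For readers who want to reproduce such figures, the sketch below shows one common way to compute annualized realized volatility and ATR as a percentage of price. It rests on three assumptions:

- The inputs are daily closes.
- Annualization uses a 365-day factor, because crypto trades every day.
- ATR is a simple average of true ranges over the window.

The source does not give the exact methodology behind the Binance and Arab Chain numbers, so results may differ. The function names are hypothetical.

```python
import math
import statistics
from typing import Sequence


def annualized_volatility(closes: Sequence[float], periods_per_year: int = 365) -> float:
    """Sample standard deviation of log returns, scaled by sqrt(periods per year).

    Returned as a fraction: 1.51 means 151% annualized. A seven-day reading
    needs eight daily closes (seven returns).
    """
    log_returns = [math.log(curr / prev) for prev, curr in zip(closes, closes[1:])]
    return statistics.stdev(log_returns) * math.sqrt(periods_per_year)


def atr_percent(highs: Sequence[float], lows: Sequence[float],
                closes: Sequence[float], window: int = 14) -> float:
    """Average true range over the last `window` bars, divided by the latest close."""
    true_ranges = [
        max(highs[i] - lows[i],
            abs(highs[i] - closes[i - 1]),
            abs(lows[i] - closes[i - 1]))
        for i in range(1, len(closes))
    ]
    recent = true_ranges[-window:]
    return statistics.fmean(recent) / closes[-1]
```

The seven-day figure is built from only seven returns, so a single large daily move can dominate it. This is one reason it can diverge sharply from the 30-day and 90-day readings.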

Bear Market Comparisons Resurface

An earlier report this week noted that Bitcoin has closed three consecutive weeks below its 100-week moving average, a pattern seen in previous bear markets. CryptoQuant founder Ki Young Ju wrote on February 9 that “Bitcoin is not pumpable right now,” arguing that selling pressure is limiting upside follow-through.

Other commentators, including Doctor Profit, have described the current structure as a wide consolidation range between $57,000 and $87,000, warning that sideways trading could precede another leg lower.

Furthermore, macro data is adding to the cautious tone, with XWIN Research Japan writing that weaker U.S. retail sales and easing wage growth mean that consumption is slowing, which may weigh on risk assets in the short term. The firm also noted a persistently negative Coinbase Premium Gap since late 2025, suggesting there’s weak U.S. spot demand compared to derivatives-driven activity.

Yet not all industry voices are focused solely on price cycles, with WeFi’s Maksym Sakharov saying he believes Bitcoin sentiment will eventually strengthen despite falling prices, but for different reasons than in past rallies.

“I believe Bitcoin sentiment will turn even stronger despite the falling prices, but this time it won’t be only about price or speculation, but also about real adoption,” Sakharov said.

In the meantime, BTC is sitting in a narrow zone between fear-driven pessimism and technical support near $60,000, with traders watching whether high volatility resolves higher or breaks lower in the weeks ahead.



Franklin Templeton to Let Tokenized Money Funds Back Binance Trades

Global investment manager Franklin Templeton announced the launch of an institutional off‑exchange collateral program with Binance that lets clients use tokenized money market fund (MMF) shares to back trading activity while the underlying assets remain in regulated custody.

According to a Wednesday news release shared with Cointelegraph, the framework is intended to reduce counterparty risk by reflecting collateral balances inside Binance’s trading environment, rather than moving client assets onto the exchange.

Eligible institutions can pledge tokenized MMF shares issued via Franklin Templeton’s Benji Technology Platform as collateral for trading on Binance.

The tokenized fund shares are held off‑exchange by Ceffu Custody, a digital asset custodian licensed and supervised in Dubai, while their collateral value is mirrored on Binance to support trading positions.

Franklin Templeton said the model was designed to let institutions earn yield on regulated money market fund holdings while using the same assets to support digital asset trading, without giving up existing custody or regulatory protections.


“Our off‑exchange collateral program is just that: letting clients easily put their assets to work in regulated custody while safely earning yield in new ways,” said Roger Bayston, head of digital assets at Franklin Templeton, in the release.

The initiative builds on a strategic collaboration between Binance and Franklin Templeton announced in 2025 to develop tokenization products that combine regulated fund structures with global trading infrastructure.

Off‑exchange collateral to cut counterparty risk

The design mirrors other tokenized real‑world asset collateral models in crypto markets. BlackRock’s BUIDL tokenized US Treasury fund, issued by Securitize, for example, is also accepted as trading collateral on Binance, as well as other platforms, including Crypto.com and Deribit.

That model allows institutional clients to post a low-volatility, yield‑bearing instrument instead of idle stablecoins or more volatile tokens.

Other issuers and venues, including WisdomTree’s WTGXX and Ondo’s OUSG, are exploring similar models, with tokenized bond and short‑term credit funds increasingly positioned as onchain collateral in both centralized and decentralized markets.


Regulators flag cross‑border tokenization risks

Despite the trend of using tokenized MMFs as collateral, global regulators have warned that cross‑border tokenization structures can introduce new risks.

The International Organization of Securities Commissions (IOSCO) has cautioned that tokenized instruments used across multiple jurisdictions may exploit differences between national regimes and enable regulatory arbitrage if oversight and supervisory cooperation do not keep pace.

Cointelegraph asked Franklin Templeton how the tokenized MMF shares are regulated and protected and how the model was stress‑tested for extreme scenarios, but had not received a reply by publication.



Bank Negara Malaysia Plans to Launch Stablecoin and Tokenized Deposit Initiatives

TLDR

- Bank Negara Malaysia is testing ringgit stablecoins and tokenized deposits in 2026.

- Standard Chartered and Capital A lead the ringgit stablecoin project.

- The projects focus on improving wholesale payment systems.

- Maybank and CIMB are developing tokenized deposits for payments.

- BNM aims to assess financial stability and set policy by end of 2026.

Bank Negara Malaysia (BNM) has revealed three new initiatives for 2026 involving digital assets such as ringgit stablecoins and tokenized deposits. The central bank's Digital Asset Innovation Hub (DAIH) will evaluate these projects, with a focus on their use in wholesale payment systems. BNM intends to assess the impact of these innovations on financial stability and to provide policy clarity by the end of 2026.

Malaysia Ringgit Stablecoin Settlement Project

One of the initiatives involves a ringgit stablecoin settlement system, developed by Standard Chartered Bank Malaysia in collaboration with Capital A. The project aims to streamline business-to-business transactions within Malaysia using a digital currency backed by the local currency.

The stablecoin system will be tested in a controlled environment, with both local and international corporate clients involved. The project will enable BNM to assess the effects of stablecoin use on monetary policy and financial stability.

It will also explore the potential for cross-border payments and integrate with the central bank’s other digital asset-related work. The project could pave the way for the adoption of stablecoins in Malaysia’s financial sector.

Tokenized Deposits for Payments

Another initiative focuses on tokenized deposits for payment systems, driven by two major banks: Maybank and CIMB. Both institutions will work on creating tokenized digital representations of deposits that can be used for payments.

These projects aim to modernize payment systems and provide efficient alternatives to traditional bank deposits. Testing will be conducted in partnership with financial institutions and other regulators to ensure that the systems meet regulatory standards.

BNM will evaluate how tokenized deposits impact payment flows and their integration with the broader financial ecosystem. The central bank expects the findings from these projects to inform future policy decisions by the end of 2026.


EU Parliament Backs Digital Euro to Bolster Payments Sovereignty

The European Parliament threw its weight behind the European Central Bank’s (ECB) digital euro project in a vote that framed money and payments as a strategic asset in an era of rising geopolitical tensions.

Lawmakers adopted the annual ECB report by 443 votes in favor, 71 against and 117 abstentions, backing amendments that describe the digital euro as “essential” to strengthening European Union monetary sovereignty, reducing fragmentation in retail payments and bolstering the integrity of the single market.

The text places growing emphasis on how public money in digital form can curb Europe’s reliance on non‑EU payment providers and private instruments.

Members of the European Parliament (MEPs) also underlined that the ECB must remain independent and free from political pressure, arguing that safeguarding central bank autonomy was key to maintaining price stability and market confidence.

During the plenary debate, Johan Van Overtveldt, MEP and former Belgian finance minister, flagged that “the independence of the ECB is not a technical detail.”

He warned that history showed political interference with central banks “invariably leads to inflation, financial instability and even nasty political turmoil.”


He argued that reaffirming independence is “even more important in the current global context,” likening monetary and financial stability to utilities such as water and electricity whose importance is only truly noticed when they fail.

Digital euro as public good and geopolitical hedge

The adopted resolution states that, even as the ECB develops a digital euro, cash should retain an important role in the euro area economy, and both physical and digital euros will be legal tender.

The parliamentary backing comes amid a broader push by central bankers and economists to frame the digital euro as a public good and a geopolitical hedge.

Last month, ECB executive board member Piero Cipollone called the project “public money in digital form” and tied it directly to concerns about the “weaponisation of every conceivable tool.”

He argued that Europe needed a retail payment system “fully under our control” and built on European infrastructure rather than foreign schemes.

Earlier in January, 70 economists and policy experts urged MEPs to “let the public interest prevail” on the digital euro, warning that without a strong public option, private stablecoins and foreign payment giants could gain even greater influence over Europe’s digital payments, deepening dependencies in times of stress.



Stablecoins Expand into UAE Banking System

Key Insights

- Ripple and Zand link RLUSD and AEDZ to support regulated stablecoin payments and custody in the UAE.

- The partnership focuses on XRPL-based issuance, liquidity, and compliance-led banking integration.

- The move supports the UAE digital economy strategy and institutional blockchain adoption.

Ripple and Zand Bank Strengthen Blockchain Banking Ties

Ripple has expanded its collaboration with Zand Bank in the UAE to build regulated stablecoin infrastructure. According to reports shared on X, the collaboration connects Ripple's US dollar stablecoin, RLUSD, with Zand's dirham-backed AEDZ token. Both assets will operate within a compliant banking framework.


The partnership builds on a payment agreement signed in 2024 and shifts the focus to custody, issuance, and liquidity. Ripple and Zand aim to bring blockchain-based settlement into institutional finance rather than into trading activity.

How Will Stablecoins Integrate Into Regulated Banking?

The companies plan to integrate RLUSD into Zand Bank's regulated digital asset custody platform, allowing institutions to hold and use the stablecoin within the UAE's jurisdiction. The partners will also evaluate direct liquidity channels between RLUSD and AEDZ.

Zand Bank has confirmed plans to issue AEDZ on the XRP Ledger. XRPL offers fast settlement, low fees, and a consensus-based design. These features support payment efficiency while meeting regulatory expectations. Zand states that AEDZ remains fully backed by dirham reserves with regular attestations.

Why Does the XRP Ledger Matter for This Initiative?

The project relies on the XRP Ledger (XRPL) as its technical foundation. XRPL settles transactions within seconds and lets issuers create tokens at low operating cost. These features suit bank-grade payment and custody services.

Ripple continues to position XRPL as a settlement layer for institutions, and the Zand partnership aligns with this strategy. It supports real-world use cases such as:

- Cross-border payments

- Treasury management

- Asset tokenization within a controlled environment

What Does This Mean for the UAE Digital Economy?

Zand Bank is one of the UAE's first fully digital licensed banks. Ripple, for its part, has expanded its presence in the region through custody and security partnerships. Together, they aim to provide infrastructure that helps banks and corporations adopt blockchain in a compliant way.

This marks a shift toward regulated institutions using stablecoins as financial instruments rather than speculative assets. It also signals deeper adoption of blockchain systems in conventional banking.


Spot Bitcoin ETFs Post $166M Inflows Despite Market Dip

Update (Feb. 11, 10:00 am UTC): This article has been updated to correct the reported number of shares.

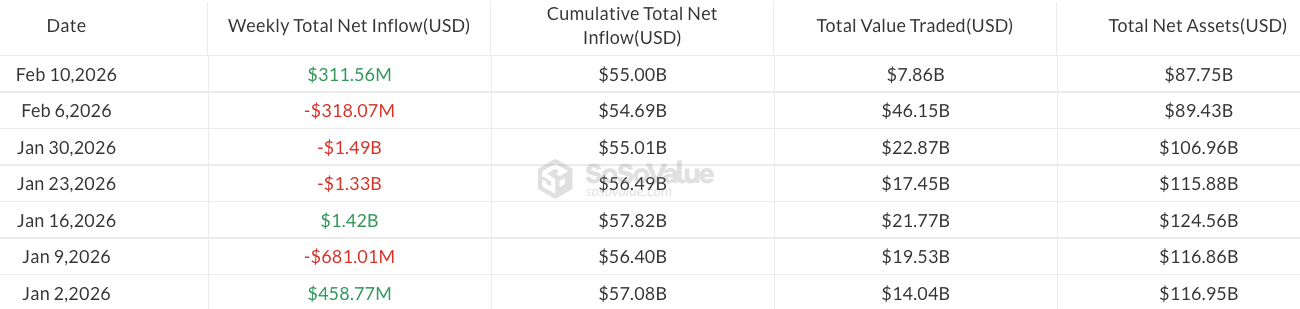

US spot Bitcoin exchange-traded funds (ETFs) extended their inflow streak to three sessions, with this week’s gains nearly offsetting last week’s outflows.

Spot Bitcoin (BTC) ETFs recorded $166.6 million in inflows on Tuesday, bringing total inflows this week to $311.6 million, according to data from SoSoValue.

Last week, the funds saw net outflows of $318 million, marking three consecutive weeks of losses totaling more than $3 billion.

Bitcoin ETF momentum has picked up in recent sessions, despite BTC price declining 13% over the past seven days and briefly slipping below $68,000 on Tuesday, according to CoinGecko.

Earlier this week, analysts observed signs of a potential trend shift across crypto exchange-traded products, noting a slowdown in the pace of selling.

Goldman trims Bitcoin ETF exposure, adds XRP and Solana ETFs

US investment bank Goldman Sachs reported yesterday that it trimmed its Bitcoin ETF exposure in the fourth quarter of 2025, according to a Form 13F filing with the Securities and Exchange Commission.

The bank specifically reduced its holdings in BlackRock's iShares Bitcoin Trust ETF (IBIT) by 39%, from around 34 million shares in Q3 to 20.7 million in Q4. The remaining position is worth around $1 billion.

It also decreased stakes in other Bitcoin funds and companies, including Fidelity Wise Origin Bitcoin (FBTC) and Bitcoin Depot, and reduced its Ether (ETH) ETF positions.

At the same time, Goldman Sachs disclosed its first-ever positions in XRP (XRP) and Solana (SOL) ETFs, acquiring 6.95 million shares of XRP ETFs, worth $152 million, and 8.24 million shares of Solana ETFs, valued at $104 million.


According to SoSoValue data, spot altcoin ETFs saw modest inflows Tuesday, with Ether funds adding around $14 million, while XRP and Solana ETFs gained $3.3 million and $8.4 million, respectively.

On Thursday, Eric Balchunas, senior ETF analyst at Bloomberg, noted that the majority of Bitcoin ETF investors had held their positions despite the recent downturn, estimating that only about 6% of total assets exited the funds even as Bitcoin prices fell sharply.

He added that, although BlackRock’s IBIT saw its assets drop to $60 billion from a peak of $100 billion, the fund could remain at this level for years while still holding the record as the “all-time-fastest ETF to reach $60 billion.”



Ethereum Whales Accumulate Aggressively as ETH Price Drops Below $2K

Ethereum accumulation addresses have witnessed a surge in daily inflows since Friday, suggesting growing confidence in Ether’s (ETH) long-term price trajectory despite its latest drop below $2,000.

Key takeaways:

- Ether's drop below $2,000 has left 58% of addresses with unrealized losses.

- Accumulation addresses have absorbed about $2.6 billion in ETH over five days.

- Key Ether levels to watch below $2,000 include $1,800, $1,500, $1,200, and potentially $750–$1,000 in extreme scenarios.

58% of Ether addresses are now in the red

Ether’s 38% drop over the last month has seen it fall below key support levels, including the average entry price of accumulation addresses, the cost basis of spot Ethereum ETF investors, and the psychological level at $2,000.

The ETH/USD pair now trades 60.5% below its all-time high of $4,950, leaving a significant portion of holders underwater. This includes BitMine, the world’s largest Ethereum treasury linked to investor Tom Lee, which saw its paper losses swell to over $8 billion.


With ETH trading at $1,954 on Wednesday, only 41.5% of Ethereum addresses are in profit, while over 58% are in the red.
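The drawdown figure follows from simple arithmetic on the quoted prices. The minimal check below uses the $1,954 price and the $4,950 all-time high cited in this article; the helper names are illustrative.

```python
def pct_below_ath(price: float, ath: float) -> float:
    """Drawdown from the all-time high, in percent."""
    return (1 - price / ath) * 100


def pct_gain_to_recover(price: float, ath: float) -> float:
    """Rally needed from `price` to get back to `ath`, in percent."""
    return (ath / price - 1) * 100


if __name__ == "__main__":
    print(round(pct_below_ath(1954, 4950), 1))        # 60.5
    print(round(pct_gain_to_recover(1954, 4950), 1))  # 153.3
```

The asymmetry is worth keeping in mind when reading drawdown figures: a 60.5% decline requires a gain of roughly 153% to fully recover.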

Ether’s current market price is also below the average cost basis of accumulation addresses currently at $2,580, suggesting that long-term holders are increasingly under strain.

ETF investors are also feeling the pressure. James Seyffart, senior ETF analyst at Bloomberg, highlighted that Ethereum ETF holders are currently in a worse position than their Bitcoin counterparts.

With ETH hovering below $2,000, the altcoin trades well below the estimated average ETF cost basis of about $3,500.

Ether accumulation absorbs 1.3 million ETH in five days

Despite the sharp downturn, investor confidence has not fully eroded. Data from CryptoQuant showed Ethereum accumulation addresses have received 1.3 million Ether worth approximately $2.6 billion at current rates.

The “full-scale accumulation” of ETH began in June 2025, and is “proceeding even more aggressively,” CryptoQuant analyst CW8900 said in Wednesday’s Quicktake analysis, adding:

“The current price will likely appear attractive to $ETH whales.”

As a result, the total ETH held by these long-term holders reached a record 27 million. That marks a 20.36% gain so far in 2026 despite the ETH price declining 34.5% over the same period.

Accumulation addresses are wallets that continuously receive ETH without making any outgoing transactions. They may belong to long-term holders, institutional investors, or entities strategically accumulating Ether rather than actively trading.

Large spikes in inflows to these addresses often signal strong confidence in Ether’s long-term potential, with past trends showing that such surges frequently precede price rallies.

For example, on June 22, 2025, Ethereum accumulation addresses recorded a then-all-time high daily inflow of over 380 million ETH. Nearly 30 days later, ETH’s price rose by almost 85%. A 25% price rally followed November 2025’s inflow spike into the accumulation addresses.

Key ETH price levels to watch below $2,000

The ETH/USD pair extended its losses below $2,000, a key support level, which the bulls must reclaim to prevent further downside.

“$ETH failed to hold above the $2,000 level and is now going down,” crypto analyst Ted Pillows said in an X post on Wednesday, adding:

“The next key level is around the $1,800-$1,850 level if Ethereum doesn’t reclaim the $2,000 level soon.”

Fellow analyst Crypto Thanos shares similar views, telling followers to “get ready” for a $1,500 ETH price if $2,000 is not reclaimed by the end of the week.

Zooming out, LadyTraderRa said Ether is “definitely going” to retest the $750-$1,000 zone, based on past price action on the monthly candle chart.

Glassnode’s UTXO realized price distribution (URPD), which shows the average prices at which ETH holders bought their coins, reveals that below $2,000, key support levels for ETH sit at $1,880, $1,580, and $1,230.

As Cointelegraph reported, the ETH/USD pair could drop to $1,750 and then $1,530, after failing to hold above $2,100.

This article does not contain investment advice or recommendations. Every investment and trading move involves risk, and readers should conduct their own research when making a decision. While we strive to provide accurate and timely information, Cointelegraph does not guarantee the accuracy, completeness, or reliability of any information in this article. This article may contain forward-looking statements that are subject to risks and uncertainties. Cointelegraph will not be liable for any loss or damage arising from your reliance on this information.


Regulation, derivatives helping drive TradFi institutions into crypto, panellists say

Clearer rules and improved technology are accelerating the convergence of traditional finance (TradFi) and decentralized markets, driving established institutions into areas such as crypto derivatives, according to panelists at Consensus Hong Kong.

“Regulation is really important. It gives you the rails that you need to operate in,” said Jason Urban, global co-head of digital assets at Galaxy Digital (GLXY), who took part in the “Ultimate Deriving Machine” panel.

Other speakers, including executives from exchange operator ICE Futures U.S., crypto prime brokerage FalconX and investment company ARK Invest, highlighted how developments in the U.S. have flipped crypto from a speculative sideline to a portfolio staple. They cited the 2024 approval of spot crypto exchange-traded funds (ETFs) and harmonization between the Securities and Exchange Commission (SEC) and the Commodity Futures Trading Commission (CFTC).

The key takeaway is that derivatives are set to grease the path for trillions of dollars in institutional inflows to the market. The momentum goes well beyond bitcoin, the largest cryptocurrency by market value.

ICE Futures U.S. President Jennifer Ilkiw highlighted forthcoming overnight rate futures tied to Circle Internet’s (CRCL) USDC stablecoin, launching in April, and multitoken indexes as evidence of institutions looking beyond bitcoin for exposure to a range of tokens.

“It makes it very easy. It’s like, if you’re taking our MSCI Emerging Markets, there’s hundreds of equities in there. You don’t need to know every single one,” she said, citing demand from former crypto skeptics.

Josh Lim, global co-head of markets at FalconX, stressed the role of prime brokerages in bridging traditional exchanges such as the CME with liquidity pools in decentralized finance (DeFi), giving hedge funds access for arbitrage and leverage.

“Hyperliquid, obviously has been a big theme for this year, and last year, we’ve enabled a lot of our hedge fund clients to access that marketplace through our prime brokerage offering,” Lim said, referring to the largest decentralized exchange (DEX) for derivatives.

“It’s actually essential for firms like us … to bridge this liquidity gap between TradFi and DeFi … That’s a big edge,” Lim said. Crypto innovations such as 24/7 trading and perpetual futures are also influencing Wall Street.

ARK Invest President Tom Staudt called the debut of spot bitcoin ETFs in the U.S. a milestone that slotted crypto into mainstream wealth managers’ portfolios and systems.

But he urged the adoption of a true industry-wide beta benchmark: a broader market standard for measuring an asset’s risk and performance relative to the overall crypto market. The industry needs a diversified index rather than relying solely on a single reference point like bitcoin, he said.

“Bitcoin is a specific asset, but it’s not an asset class … You can’t have alpha without beta,” he said, pointing to futures as the gateway for structured products and active strategies.

Urban said inaction now is akin to “career suicide” as real-world assets come onchain and demand participation.

Uniswap Grabs Early Win as US Judge Dismisses Bancor Patent Lawsuit

A New York federal court has dismissed a patent infringement suit brought by Bancor-affiliated entities against Uniswap, finding that the asserted claims describe abstract ideas that are not eligible for patent protection under US law. Judge John G. Koeltl of the Southern District of New York granted the defendants’ motion to dismiss the complaint filed by Bprotocol Foundation and LocalCoin Ltd. The ruling, issued on February 10, gives the plaintiffs 21 days to amend; absent a timely amendment, the dismissal would convert to one with prejudice. While the decision is a procedural win for Uniswap, it does not resolve the merits of the underlying dispute, which centers on whether the decentralized exchange’s technology infringes patented methods for pricing and liquidity.

Key takeaways

- The court applied the Supreme Court’s two-step framework for patent eligibility and determined the challenged claims relate to an abstract concept—the calculation of currency exchange rates for transactions—rather than a patentable invention.

- Even though the patents touch on blockchain-based automation, the judge found no inventive concept sufficient to transform the abstract idea into a patent-eligible application.

- The complaint was dismissed without prejudice, giving Bprotocol Foundation and LocalCoin Ltd. a 21-day window to file an amended complaint addressing the court’s concerns.

- Direct, induced and willful infringement claims were all dismissed. The court found the plaintiffs failed to plausibly plead that Uniswap’s code contains the patented reserve-ratio features, or that Uniswap knew of the patents before the suit was filed.

- Despite the procedural success for Uniswap, the door remains open for reassertion if the plaintiffs can reframe the allegations to meet the patent-eligibility standard or otherwise articulate a viable infringement theory.

Market context: The ruling sits within ongoing debates over software and business-method patents in crypto, where courts have repeatedly scrutinized whether blockchain-enabled pricing and liquidity mechanisms constitute protectable inventions or abstract financial practices. How courts apply established patent-eligibility tests to blockchain-related claims may influence how crypto developers approach IP risk and claims enforcement.

Sentiment: Neutral

Sources & verification: The memorandum opinion and order from Judge Koeltl (Feb. 10); the CourtListener docket for Bprotocol Foundation v. Universal Navigation Inc.; Hayden Adams’ X post reacting to the decision; the original Bancor-Uniswap patent dispute coverage and filings cited in the referenced materials.

Why it matters

The court’s analysis reinforces the notion that merely applying a conventional pricing algorithm within a blockchain framework may not suffice to render a claim patentable. By characterizing the disputed concepts as abstract ideas tied to currency exchange calculations, the ruling underscores the enduring legal distinction between mathematical formulas and patent-eligible technical implementations, even when those implementations run on decentralized networks. For Uniswap (CRYPTO: UNI), the decision removes an immediate patent-infringement challenge rooted in fundamental pricing logic that was already broadly implemented across digital asset exchanges.

From Bancor’s perspective, the fact that the dismissal is without prejudice creates a strategic opening. The plaintiffs can attempt to adjust the pleading to address the court’s concerns, potentially reframing the claims to emphasize an “inventive concept” or to articulate a more concrete, non-abstract application tied to a particular technology environment. If claim language can be refined to meet the legal standard, the outcome may shape later filings against other DeFi protocols. This is especially likely where patent holders argue that specific programmable constraints or reserve mechanisms are patentable because they are uniquely tied to a given protocol.

Beyond the parties involved, the decision signals how the U.S. patent system balances the protection of crypto innovations against broad, abstract financial techniques. While it does not close the door on all IP actions in DeFi, it does remind developers and litigants that the mere use of blockchain infrastructure or smart contracts does not automatically render a broad abstract idea patent-eligible. The landscape remains nuanced, with the potential for future rulings to alter how similar claims are framed and prosecuted.

The immediate post-decision commentary from Uniswap founder Hayden Adams, who publicly celebrated the outcome, reflects the high-stakes nature of these disputes for open-source, community-driven projects. Adams’ brief social post—“A lawyer just told me we won”—highlights how patent battles intersect with developer culture and the public perception of DeFi innovation.

What to watch next

- Whether Bprotocol Foundation and LocalCoin Ltd. file an amended complaint within 21 days, and how the revised claims address the court’s abstract-idea reasoning.

- Any subsequent court rulings that interpret or apply the “inventive concept” standard to parallel DeFi patent cases, potentially shaping future strategy for both plaintiffs and defendants.

- Whether additional documents—such as claim charts or technical specifications—emerge to support allegations of infringement tied to Uniswap’s protocol code.

- Possible settlements or alternative dispute-resolution steps if parties seek to narrow the dispute without protracted litigation.

Sources & verification

- Memorandum opinion and order by Judge Koeltl, February 10, Southern District of New York.

- CourtListener docket: Bprotocol Foundation v. Universal Navigation Inc. (docket page cited in filing history).

- Hayden Adams’ X post reacting to the ruling.

- Bancor’s patent infringement allegations against Uniswap as documented in prior coverage.

What the ruling changes for DeFi and IP strategy

Uniswap’s procedural win reinforces the importance of framing crypto innovations in terms of concrete technical improvements rather than broad economic practices. For developers, it underscores the need to articulate how a protocol’s specific architecture—beyond generic pricing formulas—contributes a novel, non-obvious technical solution. For plaintiffs, the decision emphasizes the necessity of tying claims to verifiable technical embodiments, such as particular code features or protocol configurations, that clearly differ from ordinary market operations.

Going forward, observers will closely track whether a revised complaint could survive the patent-eligibility hurdle and, if so, how the court will evaluate whether a claimed feature meaningfully transforms an abstract idea into patent-eligible subject matter. The interplay between public blockchain code and patented concepts is likely to remain a focal point as more DeFi projects navigate IP risk in a rapidly evolving regulatory and judicial environment.

Judicial decision reframes patent-eligibility in a DeFi dispute between Bancor-affiliated plaintiffs and Uniswap

In a decision that foregrounds the ongoing jurisprudence around crypto patents, a New York federal court ruled that Bancor-affiliated plaintiffs’ claims against the Uniswap ecosystem are directed to abstract ideas rather than concrete, patentable inventions. The Southern District of New York applied the Supreme Court’s two-step framework for patent eligibility. It concluded that the core concept, calculating currency exchange rates to facilitate transactions, lacks the inventive concept required to qualify for patent protection. The ruling addresses the limits of US patent law, not the operational legitimacy of Uniswap’s decentralized exchange, which remains a foundational player in the DeFi space.

The plaintiffs—Bprotocol Foundation and LocalCoin Ltd.—had alleged that Uniswap’s protocol infringed patents tied to a “constant product automated market maker” mechanism that underpins many liquidity pools on decentralized exchanges. The court’s analysis rejected the argument that merely implementing a pricing formula on blockchain infrastructure could overcome the abstract-idea hurdle. In its view, the use of existing blockchain and smart contract technologies to address an economic problem does not constitute a patentable invention. The court emphasized that limiting an abstract idea to a particular technological environment does not convert it into patent-eligible subject matter, and it found no further inventive concept that would transform the abstract idea into patentable territory.

Crucially, the memorandum explained that the asserted claims cover the abstract idea of determining exchange rates for transactions rather than a specific, novel technical improvement. The court highlighted that “currency exchange is a fundamental economic practice,” and that the claimed method amounted to nothing more than a mathematical transformation performed in a blockchain-enabled setting. The decision expressly notes that merely reciting a mathematical formula within a decentralized framework does not, by itself, confer eligibility. The ruling also rejected arguments that a particular linkage to reserve ratios in Uniswap’s code or ecosystem would rescue the claims from the abstract-idea category.
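The constant-product rule named in the complaint keeps the product of a pool’s two token reserves fixed, so the exchange rate for a trade falls out of simple arithmetic. The Python sketch below is a generic textbook illustration of that invariant (x * y = k). It is not Uniswap’s contract code and not the formula claimed in the Bancor patents. The function names, the floating-point math and the 0.3% default fee are assumptions chosen for readability.

```python
def spot_price(reserve_in: float, reserve_out: float) -> float:
    """Marginal exchange rate: units of the output token per unit of the input token."""
    if reserve_in <= 0 or reserve_out <= 0:
        raise ValueError("reserves must be positive")
    return reserve_out / reserve_in


def get_amount_out(amount_in: float, reserve_in: float, reserve_out: float,
                   fee: float = 0.003) -> float:
    """Output received for a swap against a constant-product pool (x * y = k).

    The 0.3% default fee is an illustrative assumption, not a quoted parameter.
    """
    if amount_in <= 0 or reserve_in <= 0 or reserve_out <= 0:
        raise ValueError("amounts and reserves must be positive")
    if not 0 <= fee < 1:
        raise ValueError("fee must be in [0, 1)")
    k = reserve_in * reserve_out              # invariant before the trade
    effective_in = amount_in * (1 - fee)      # only the post-fee input moves along the curve
    new_reserve_in = reserve_in + effective_in
    new_reserve_out = k / new_reserve_in      # keep reserve_in * reserve_out equal to k
    return reserve_out - new_reserve_out


# Hypothetical pool: 1,000 ETH and 2,000,000 USDC.
print(spot_price(1_000, 2_000_000))                    # 2000.0
print(get_amount_out(10, 1_000, 2_000_000, fee=0.0))   # about 19801.98
```

With no fee, swapping 10 ETH returns about 19,801.98 USDC rather than the 20,000 implied by the spot price. The shortfall is price impact, so the effective exchange rate depends on trade size as well as on the reserves.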

Beyond the abstract-idea assessment, the court dismissed the infringement theories levelled by the plaintiffs. It found that the amended complaint failed to plausibly plead direct infringement, specifically that Uniswap’s publicly available code embodies the claimed reserve ratio constants. Claims of induced and willful infringement were likewise dismissed, with the court finding that the plaintiffs did not plausibly allege that Uniswap’s team knew of the patents before the lawsuit was filed. The dismissal was without prejudice, preserving the option for the plaintiffs to file an amended pleading that could address these shortcomings.

The decision came with a notable public response: Hayden Adams, the founder of Uniswap, took to X to acknowledge the outcome, signaling a morale boost for developers and teams operating in the open-source DeFi space. The public posting underscored the practical impact of court rulings on the culture and momentum of decentralized finance development.

The procedural posture of the case remains in flux. While Uniswap’s legal team secured a favorable procedural ruling, the case is not over. The plaintiffs have 21 days to amend their complaint; failure to do so would convert the dismissal into one with prejudice, effectively ending the action barring any new claims. If Bancor and LocalCoin elect to proceed with an amended filing, the court will scrutinize whether the revised claims meet the patent-eligibility standard and sufficiently articulate any alleged infringement in a way that satisfies the pleading requirements set forth by the court.

In the broader context, the ruling adds to a growing body of decisions that caution against overbroad or abstract patent claims in the crypto and DeFi space. It reinforces the premise that software-driven financial concepts, however novel in a blockchain setting, must advance a concrete technical improvement to clear the patent bar. The outcome also signals that, for now, DeFi projects focusing on open, interoperable codebases may enjoy a degree of protection from aggressive patent assertions based on abstract pricing ideas, at least until courts develop a more precise standard for crypto-specific technology claims.

Crypto World

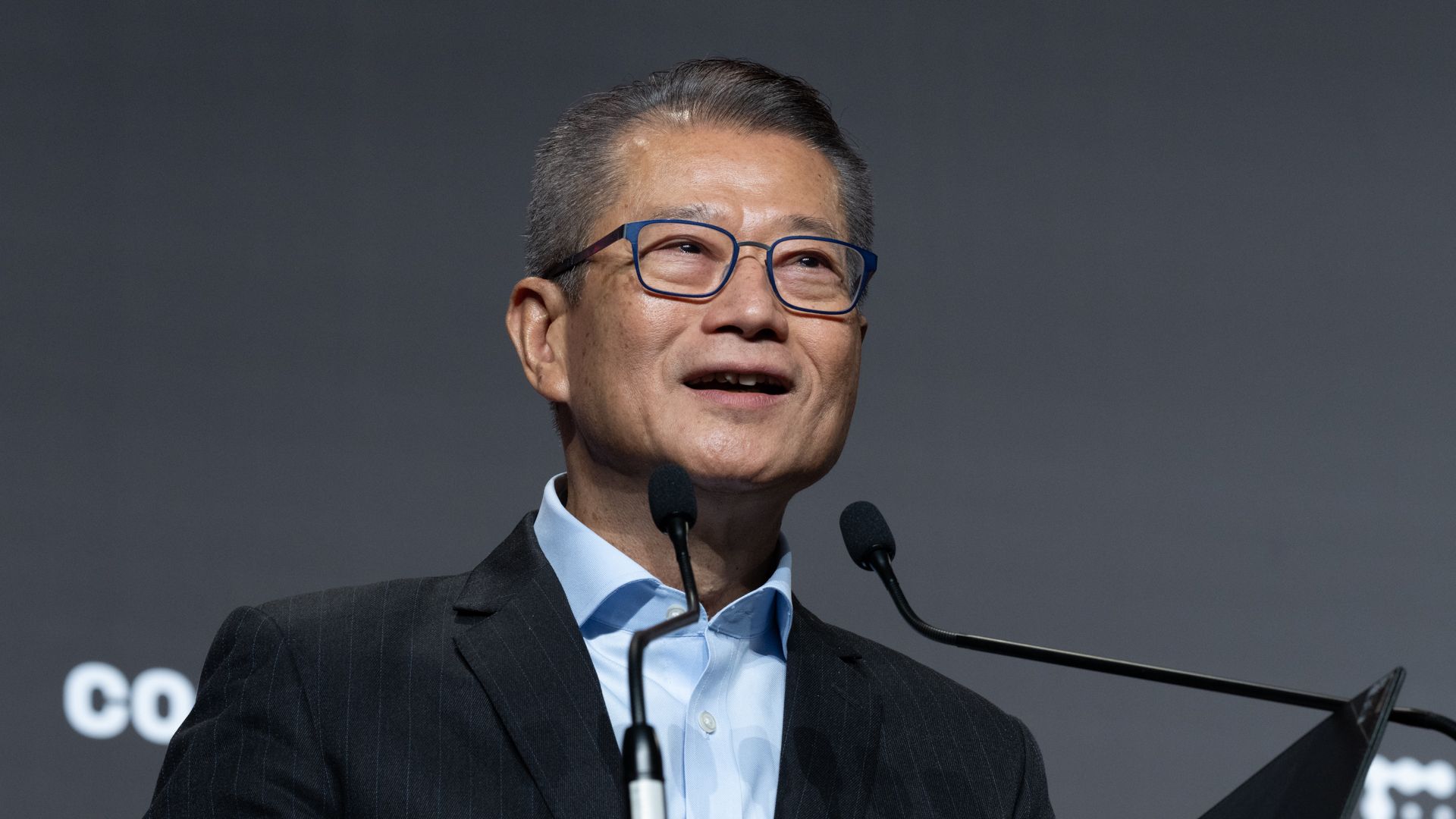

Hong Kong ready to issue first stablecoin licenses in March, Financial Secretary says

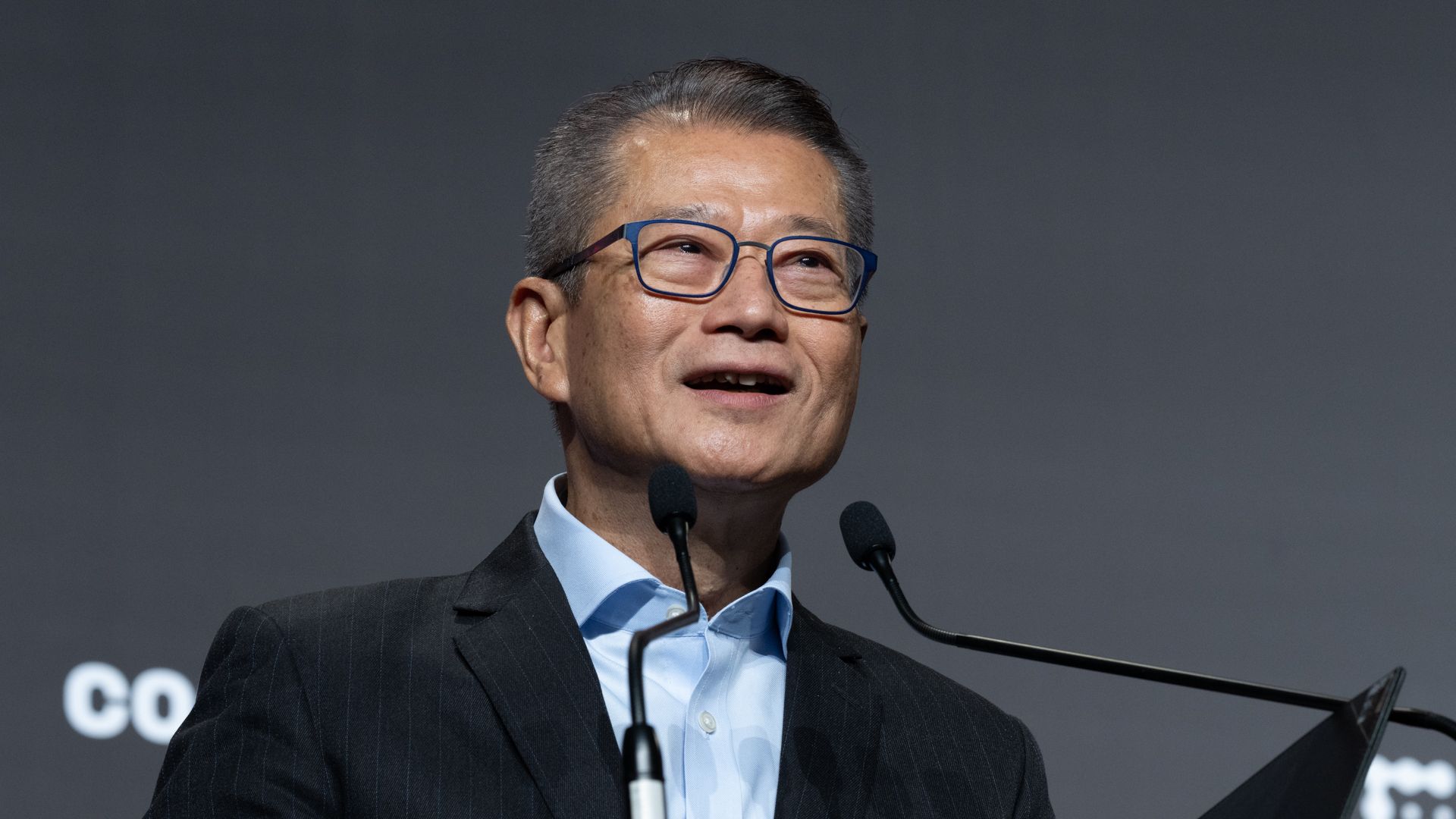

Hong Kong is ready to begin issuing its first stablecoin licenses next month, the Special Administrative Region’s Financial Secretary, Paul Chan Mo-po, said Wednesday at CoinDesk’s Consensus Hong Kong conference.

Hong Kong will issue only a small batch of licenses initially, Chan said.

“In giving our licenses, we ensure that licensees have novel use cases, a credible and sustainable business model and strong regulatory compliance capabilities,” he said.

Hong Kong is also moving to finalize its licensing regime for custodian service providers and is looking to introduce legislation this summer, he said.

“Together with the framework already in place, this will ensure that our regulatory regime comprehensively covers the team of the digital asset ecosystem,” he said.

Speaking more broadly, Chan pointed to three trends that are maturing now: the growth of tokenized products in the real world, increasing interaction between decentralized finance (DeFi) and traditional finance, and the growing ties between artificial intelligence (AI) and digital assets.

“Tokenization initiatives are moving from proof of concept to real-world deployment, supported by more institutional adoption. Government bonds, money market funds and other more traditional financial instruments are increasingly being issued onchain, using digital ledgers to enhance settlement efficiency, enable fractional ownership and unlock liquidity in assets that have traditionally been less liquid,” he said.

He also pointed to the growing capabilities of AI.

“As AI agents become capable of making and executing decisions independently, we may begin to see the early forms of what some call the machine economy, where AI agents can hold and transfer digital assets, pay for services and transact with one another onchain,” Chan said.

-

Politics3 days ago

Politics3 days agoWhy Israel is blocking foreign journalists from entering

-

NewsBeat2 days ago

NewsBeat2 days agoMia Brookes misses out on Winter Olympics medal in snowboard big air

-

Sports4 days ago

Sports4 days agoJD Vance booed as Team USA enters Winter Olympics opening ceremony

-

Business3 days ago

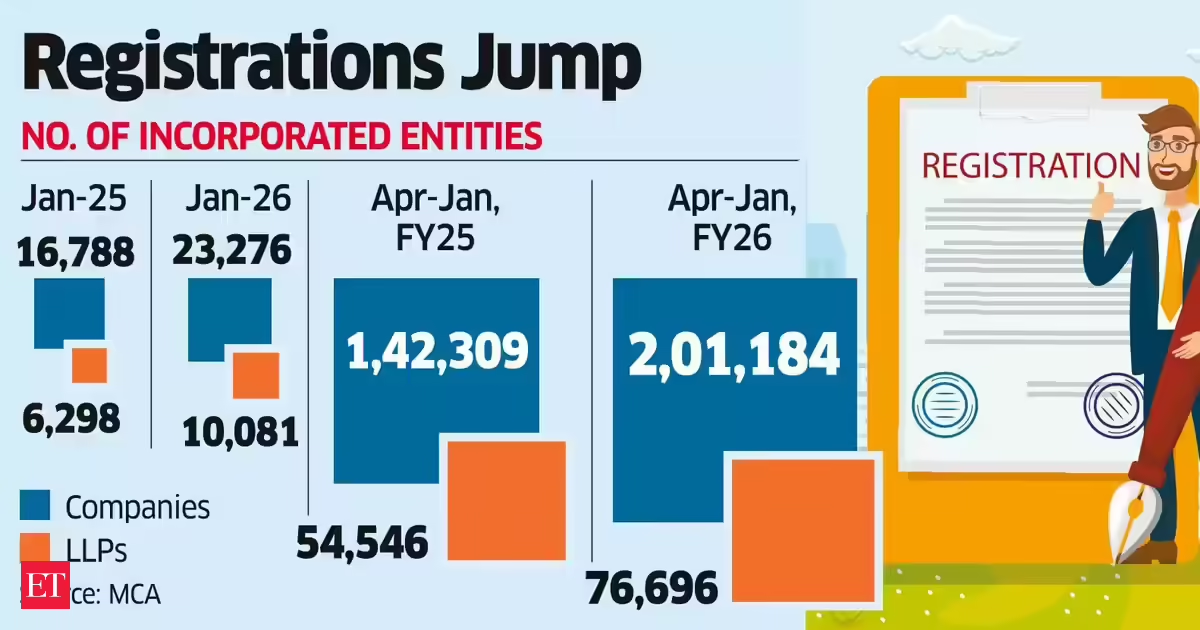

Business3 days agoLLP registrations cross 10,000 mark for first time in Jan

-

Tech5 days ago

Tech5 days agoFirst multi-coronavirus vaccine enters human testing, built on UW Medicine technology

-

Tech8 hours ago

Tech8 hours agoSpaceX’s mighty Starship rocket enters final testing for 12th flight

-

NewsBeat3 days ago

NewsBeat3 days agoWinter Olympics 2026: Team GB’s Mia Brookes through to snowboard big air final, and curling pair beat Italy

-

Sports2 days ago

Sports2 days agoBenjamin Karl strips clothes celebrating snowboard gold medal at Olympics

-

Politics3 days ago

Politics3 days agoThe Health Dangers Of Browning Your Food

-

Sports4 days ago

Former Viking Enters Hall of Fame

-

Sports5 days ago

New and Huge Defender Enter Vikings’ Mock Draft Orbit

-

Business3 days ago

Business3 days agoJulius Baer CEO calls for Swiss public register of rogue bankers to protect reputation

-

NewsBeat5 days ago

NewsBeat5 days agoSavannah Guthrie’s mother’s blood was found on porch of home, police confirm as search enters sixth day: Live

-

Business6 days ago

Business6 days agoQuiz enters administration for third time

-

Crypto World19 hours ago

Crypto World19 hours agoBlockchain.com wins UK registration nearly four years after abandoning FCA process

-

Crypto World1 day ago

Crypto World1 day agoU.S. BTC ETFs register back-to-back inflows for first time in a month

-

NewsBeat2 days ago

NewsBeat2 days agoResidents say city high street with ‘boarded up’ shops ‘could be better’

-

Sports2 days ago

Kirk Cousins Officially Enters the Vikings’ Offseason Puzzle

-

Crypto World1 day ago

Crypto World1 day agoEthereum Enters Capitulation Zone as MVRV Turns Negative: Bottom Near?

-

NewsBeat6 days ago

NewsBeat6 days agoStill time to enter Bolton News’ Best Hairdresser 2026 competition