The National Challenge Fund-supported project StopFloods4.ie is developing a smart flood forecasting system.

January hit Ireland hard this year as Storm Chandra swept across the country towards the end of the month, causing heavy flooding, damaging dozens of homes and disrupting lives, all within a few days.

While the total cost of damage and repairs hasn’t been made public yet, Fianna Fáil MEP Barry Andrews expects it to be “significant”. Following the storm, the Department of Social Protection announced emergency response payments for those affected.

Emergency teams were “caught by surprise”, according to Keith Leonard, the national director of the National Directorate for Fire and Emergency Management, who spoke to RTÉ’s Morning Ireland. He said that they “just weren’t expecting those levels of rainfall”.

Sure enough, a study released after the storm found that the rainfall accumulated over the week preceding Storm Chandra was what made the flooding so devastating.

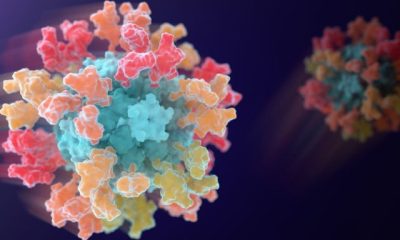

The rapid study, conducted by climate scientists at the ICARUS Climate Research Centre in Maynooth University and at Met Éireann, also found that a similar amount of rainfall in a single week is now a staggering three times more likely in Ireland as a result of the climate crisis. Human-induced warming is a major factor in the problem.

Of course, there are lessons to be learned, whether from the Government’s supposed reliance on external consultants for storm planning or, according to University of Galway scientists Dr Indiana Olbert and Dr Thomas McDermott, the need for better support and data at a local level.

Speaking on RTÉ’s Drivetime show, Maynooth University climatologist Prof John Sweeney seconded the Galway scientists’ recommendation. He said: “What we need to do is change the way in which the public are alerted at a smaller scale, to what might be happening in their own catchment area.”

AI-powered forecasting

Olbert and McDermott are leading a unique and aptly named project called StopFloods4.ie, which recently bagged €1.3m in funding from the Digital for Resilience Challenge, part of the National Challenge Fund.

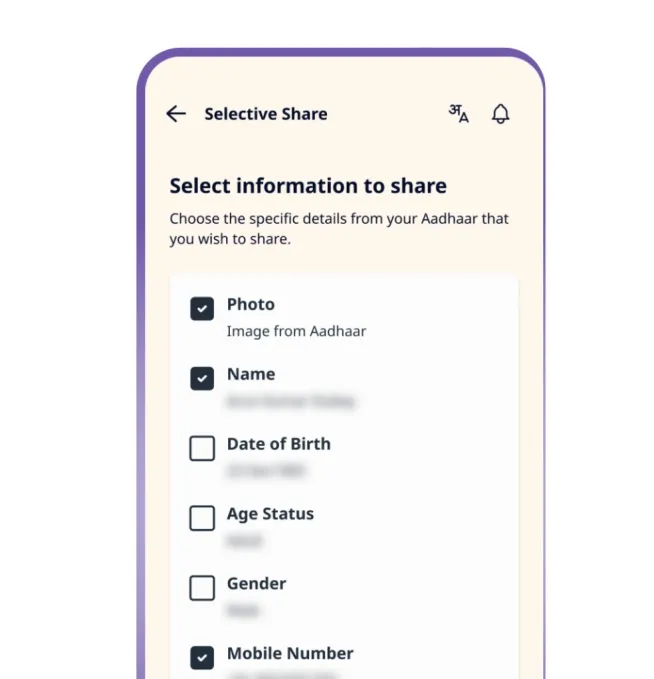

StopFloods4.ie is developing an AI-powered flood forecasting and decision-support system which integrates meteorological, tidal and river flow data.

By transforming fragmented data into actionable insights, the collaborative project, which is supported by the flood forecasting centre at Met Éireann, Cork City Council and local authorities, aims to equip emergency managers and communities with the means to anticipate, prepare for and respond to flood threats more effectively.

“What’s needed for better preparedness and response to flooding is primarily time,” say Olbert and McDermott, in a joint response to SiliconRepublic.com.

“That means people at a local level need to know in good time what the risk of flooding is and where is most likely to be affected. Currently that information is not available at the local scale.

“Warnings or alerts are issued at the county scale – meaning that many people who receive them are unlikely to be affected. While for those at risk, there is still a doubt about whether their location is at risk,” they added.

StopFloods4.ie’s technology predicts flooding on a street-by-street basis, providing timely, consistent and local information to decision-makers, they explained.

The project’s pilot site is in Cork city, chosen for its history of flooding and its population density. According to the project leads, they plan to fully roll out the system for use by Cork authorities within two years.

The team has also begun piloting the technology elsewhere, starting with other major urban areas with high flood exposure, such as Galway, and eventually plans to expand it to the rest of the country.

Last week, Met Éireann reported that this January was the wettest January in Ireland since 2018, with overall rainfall at 123pc of the long-term average.

Climate models show a “strong trend towards wetter winters, and more extreme [and] intense downpours”, the StopFloods4.ie leads say.

“These extremes are becoming more frequent and as a result we can expect more frequent and more extensive flooding.”

They add: “Places that are currently at risk will see more frequent [and] intense floods, while new risks will also be created.”

The team hopes its AI-powered system will give those at risk timely information to prepare, ultimately reducing the costs and impacts of flooding for affected communities.
