Tech

Google Chrome ships WebMCP in early preview, turning every website into a structured tool for AI agents

When an AI agent visits a website, it’s essentially a tourist who doesn’t speak the local language. Whether built on LangChain, Claude Code, or the increasingly popular OpenClaw framework, the agent is reduced to guessing which buttons to press: scraping raw HTML, firing off screenshots to multimodal models, and burning through thousands of tokens just to figure out where a search bar is.

That era may be ending. Earlier this week, the Google Chrome team launched WebMCP — Web Model Context Protocol — as an early preview in Chrome 146 Canary. WebMCP, which was developed jointly by engineers at Google and Microsoft and incubated through the W3C’s Web Machine Learning community group, is a proposed web standard that lets any website expose structured, callable tools directly to AI agents through a new browser API: navigator.modelContext.

The implications for enterprise IT are significant. Instead of building and maintaining separate back-end MCP servers in Python or Node.js to connect their web applications to AI platforms, development teams can now wrap their existing client-side JavaScript logic into agent-readable tools — without re-architecting a single page.

AI agents are expensive, fragile tourists on the web

The cost and reliability problems with current approaches to web-agent (browser-agent) interaction are well understood by anyone who has deployed them at scale. The two dominant methods, visual screen-scraping and DOM parsing, both suffer from fundamental inefficiencies that directly affect enterprise budgets.

With screenshot-based approaches, agents pass images into multimodal models (like Claude and Gemini) and hope the model can identify not only what is on the screen, but where buttons, form fields, and interactive elements are located. Each image consumes thousands of tokens and adds noticeable latency. With DOM-based approaches, agents ingest raw HTML and JavaScript, a foreign language full of tags, CSS rules, and structural markup that is irrelevant to the task at hand but still consumes context window space and inference cost.

In both cases, the agent is translating between what the website was designed for (human eyes) and what the model needs (structured data about available actions). A single product search that a human completes in seconds can require dozens of sequential agent interactions — clicking filters, scrolling pages, parsing results — each one an inference call that adds latency and cost.

How WebMCP works: Two APIs, one standard

WebMCP proposes two complementary APIs that serve as a bridge between websites and AI agents.

The Declarative API handles standard actions that can be defined directly in existing HTML forms. For organizations with well-structured forms already in production, this pathway requires minimal additional work; by adding tool names and descriptions to existing form markup, developers can make those forms callable by agents. If your HTML forms are already clean and well-structured, you are probably already 80% of the way there.

The Imperative API handles more complex, dynamic interactions that require JavaScript execution. This is where developers define richer tool schemas, conceptually similar to the tool definitions sent to the OpenAI or Anthropic API endpoints but running entirely client-side in the browser. Through registerTool(), a website can expose functions like searchProducts(query, filters) or orderPrints(copies, page_size) with full parameter schemas and natural language descriptions.

The key insight is that a single tool call through WebMCP can replace what might have been dozens of browser-use interactions. An e-commerce site that registers a searchProducts tool lets the agent make one structured function call and receive structured JSON results, rather than having the agent click through filter dropdowns, scroll through paginated results, and screenshot each page.
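A rough sketch of what the imperative path could look like is shown here. The article names only navigator.modelContext and registerTool(); the descriptor fields (name, description, inputSchema, execute), the FakeModelContext stand-in, the callTool() and listTools() helpers, and the sample catalog are all assumptions made so the example runs outside Chrome Canary, and the real API shape may differ.

```typescript
// Hypothetical descriptor shape; field names are assumptions, not the spec.
interface ToolDescriptor<A, R> {
  name: string;
  description: string; // natural-language hint for the agent
  inputSchema: object; // JSON Schema for the arguments
  execute: (args: A) => R | Promise<R>;
}

// Stand-in for navigator.modelContext so the sketch runs outside Chrome.
class FakeModelContext {
  private tools = new Map<string, ToolDescriptor<any, any>>();

  registerTool<A, R>(tool: ToolDescriptor<A, R>): void {
    if (this.tools.has(tool.name)) {
      throw new Error(`Tool already registered: ${tool.name}`);
    }
    this.tools.set(tool.name, tool);
  }

  listTools(): string[] {
    return Array.from(this.tools.keys());
  }

  // The agent's side: one structured call instead of many clicks.
  async callTool(name: string, args: unknown): Promise<unknown> {
    const tool = this.tools.get(name);
    if (!tool) throw new Error(`Unknown tool: ${name}`);
    return tool.execute(args);
  }
}

interface Product { id: number; title: string; price: number }
interface SearchArgs { query: string; filters?: { maxPrice?: number } }

// Invented sample data standing in for the site's real catalog.
const catalog: Product[] = [
  { id: 1, title: "Linen summer dress", price: 120 },
  { id: 2, title: "Recycled-cotton dress", price: 80 },
  { id: 3, title: "Wool coat", price: 240 },
];

// Existing page logic, reused unchanged as the tool body.
function searchProducts({ query, filters }: SearchArgs): Product[] {
  const q = query.toLowerCase();
  const maxPrice = filters?.maxPrice ?? Infinity;
  return catalog.filter(
    (p) => p.title.toLowerCase().includes(q) && p.price <= maxPrice
  );
}

const modelContext = new FakeModelContext();
modelContext.registerTool<SearchArgs, Product[]>({
  name: "searchProducts",
  description: "Search the catalog by keyword, optionally capped by price.",
  inputSchema: {
    type: "object",
    properties: {
      query: { type: "string" },
      filters: {
        type: "object",
        properties: { maxPrice: { type: "number" } },
      },
    },
    required: ["query"],
  },
  execute: searchProducts,
});
```

With this stand-in, an agent calling modelContext.callTool("searchProducts", { query: "dress", filters: { maxPrice: 100 } }) would get back the single recycled-cotton entry as structured data, with no clicking or scrolling involved.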

The enterprise case: Cost, reliability, and the end of fragile scraping

For IT decision makers evaluating agentic AI deployments, WebMCP addresses three persistent pain points simultaneously.

Cost reduction is the most immediately quantifiable benefit. By replacing sequences of screenshot captures, multimodal inference calls, and iterative DOM parsing with single structured tool calls, organizations can expect significant reductions in token consumption.
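To make the cost argument concrete, the comparison can be sketched as simple arithmetic. Every number below is a placeholder chosen for illustration (24 screenshot steps at 2,000 tokens each versus one tool call at 600 tokens), not a measurement from the article or from any vendor; real savings depend on the model, the page, and the task.

```typescript
interface Workload {
  steps: number;         // sequential agent interactions
  tokensPerStep: number; // tokens consumed by each interaction
}

function totalTokens(w: Workload): number {
  return w.steps * w.tokensPerStep;
}

// Fraction of tokens saved when moving from `before` to `after`.
function tokenReduction(before: Workload, after: Workload): number {
  const baseline = totalTokens(before);
  return (baseline - totalTokens(after)) / baseline;
}

// Placeholder figures, assumed for illustration only.
const screenshotFlow: Workload = { steps: 24, tokensPerStep: 2000 };
const toolCallFlow: Workload = { steps: 1, tokensPerStep: 600 };

const saved = tokenReduction(screenshotFlow, toolCallFlow);
```

Under these assumed inputs the screenshot flow costs 48,000 tokens against 600 for the tool call, so saved evaluates to 0.9875. Plugging in measured values from a real deployment is the only way to get a figure worth budgeting against.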

Reliability improves because agents are no longer guessing about page structure. When a website explicitly publishes a tool contract — “here are the functions I support, here are their parameters, here is what they return” — the agent operates with certainty rather than inference. Failed interactions due to UI changes, dynamic content loading, or ambiguous element identification are largely eliminated for any interaction covered by a registered tool.

Development velocity accelerates because web teams can leverage their existing front-end JavaScript rather than standing up separate backend infrastructure. The specification emphasizes that any task a user can accomplish through a page’s UI can be made into a tool by reusing much of the page’s existing JavaScript code. Teams do not need to learn new server frameworks or maintain separate API surfaces for agent consumers.

Human-in-the-loop by design, not an afterthought

A critical architectural decision separates WebMCP from the fully autonomous agent paradigm that has dominated recent headlines. The standard is explicitly designed around cooperative, human-in-the-loop workflows — not unsupervised automation.

According to Khushal Sagar, a staff software engineer for Chrome, the WebMCP specification identifies three pillars that underpin this philosophy.

- Context: All the data agents need to understand what the user is doing, including content that is often not currently visible on screen.

- Capabilities: Actions the agent can take on the user’s behalf, from answering questions to filling out forms.

- Coordination: Controlling the handoff between user and agent when the agent encounters situations it cannot resolve autonomously.

The specification’s authors at Google and Microsoft illustrate this with a shopping scenario: a user named Maya asks her AI assistant to help find an eco-friendly dress for a wedding. The agent suggests vendors, opens a browser to a dress site, and discovers the page exposes WebMCP tools like getDresses() and showDresses(). When Maya’s criteria go beyond the site’s basic filters, the agent calls those tools to fetch product data, uses its own reasoning to filter for “cocktail-attire appropriate,” and then calls showDresses() to update the page with only the relevant results. It’s a fluid loop of human taste and agent capability, exactly the kind of collaborative browsing that WebMCP is designed to enable.

This is not a headless browsing standard. The specification explicitly states that headless and fully autonomous scenarios are non-goals. For those use cases, the authors point to existing protocols like Google’s Agent-to-Agent (A2A) protocol. WebMCP is about the browser — where the user is present, watching, and collaborating.

Not a replacement for MCP, but a complement

WebMCP is not a replacement for Anthropic’s Model Context Protocol, despite sharing a conceptual lineage and a portion of its name. It does not follow the JSON-RPC specification that MCP uses for client-server communication. Where MCP operates as a back-end protocol connecting AI platforms to service providers through hosted servers, WebMCP operates entirely client-side within the browser.

The relationship is complementary. A travel company might maintain a back-end MCP server for direct API integrations with AI platforms like ChatGPT or Claude, while simultaneously implementing WebMCP tools on its consumer-facing website so that browser-based agents can interact with its booking flow in the context of a user’s active session. The two standards serve different interaction patterns without conflict.

The distinction matters for enterprise architects. Back-end MCP integrations are appropriate for service-to-service automation where no browser UI is needed. WebMCP is appropriate when the user is present and the interaction benefits from shared visual context — which describes the majority of consumer-facing web interactions that enterprises care about.

What comes next: From flag to standard

WebMCP is currently available in Chrome 146 Canary behind the “WebMCP for testing” flag at chrome://flags. Developers can join the Chrome Early Preview Program for access to documentation and demos. Other browsers have not yet announced implementation timelines, though Microsoft’s active co-authorship of the specification suggests Edge support is likely.

Industry observers expect formal browser announcements by mid-to-late 2026, with Google Cloud Next and Google I/O as probable venues for broader rollout announcements. The specification is transitioning from community incubation within the W3C to a formal draft — a process that historically takes months but signals serious institutional commitment.

The comparison that Sagar has drawn is instructive: WebMCP aims to become the USB-C of AI agent interactions with the web. A single, standardized interface that any agent can plug into, replacing the current tangle of bespoke scraping strategies and fragile automation scripts.

Whether that vision is realized depends on adoption — by both browser vendors and web developers. But with Google and Microsoft jointly shipping code, the W3C providing institutional scaffolding, and Chrome 146 already running the implementation behind a flag, WebMCP has cleared the most difficult hurdle any web standard faces: getting from proposal to working software.

Broadcom bets on 2nm stacked silicon to rival Nvidia in AI

The technology is based on a vertically integrated design that bonds two chips into a single stack. By tightly coupling these silicon layers, Broadcom’s engineers aim to increase data transfer speeds while reducing energy consumption – a critical advantage as AI workloads become more computationally intensive.

Smart TV apps are quietly scraping web data for AI training

Bright Data operates a global proxy network designed to collect publicly available web content, and customers are voluntarily joining the network so that they can save a few dollars on their TV viewing experience. According to a recent report, code associated with Bright Data has appeared in certain smart TV…

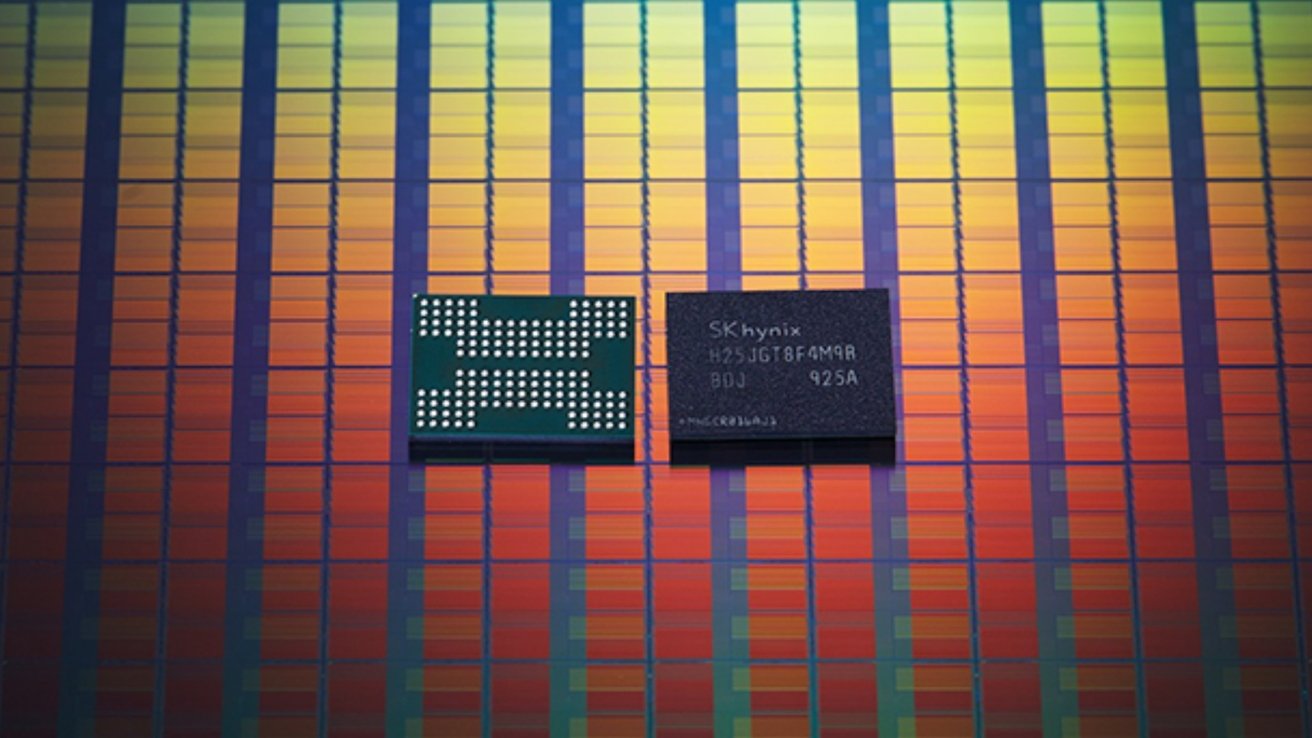

The global RAM and SSD shortage crisis, explained

A global shortage is responsible for every electronics and computer manufacturer in the world — including Apple — paying twice as much for RAM and flash storage as it did in 2025, and 10 times more than it paid in 2020. Here’s why there is little hope of that improving anytime soon.

Memory is in short supply globally — Image credit: SK Hynix

Apple has historically been able to closely control the cost of its components. Buying in huge numbers from multiple suppliers has given it an economy of scale that made Apple a sought-after customer for everything from display makers to storage vendors.

But that dynamic has changed. A global shortage of key components like memory and storage has seen the price of both skyrocket. Apple is far from the only company impacted.

Continue Reading on AppleInsider | Discuss on our Forums

Galaxy S26 vs. iPhone 17: Which entry-level flagship is right for you?

For 2026, the comparison between baseline iPhone and Android flagships comes down to two phones that are closer than they’ve ever been — the Galaxy S26 at $899 and the iPhone 17 at $799. Same form factor, same screen size, very different philosophies.

We’ve broken down everything that actually moves the needle — design, display, performance, cameras, battery, and software — because the right phone isn’t the one with the longer spec sheet. It’s the one that fits how you actually use it.

Price and availability

The iPhone 17 kicks off at $799 with 256GB baked in from the start — no arguing with that. The Galaxy S26 lands at $899 for 256GB. Last year’s S25 was $859, so Samsung snuck in a $40 increase, and the ongoing memory shortage got the blame.

So there’s a $100 gap sitting between these two phones right out the gate. Whether the S26 justifies it over the iPhone 17 — or whether Apple’s just quietly winning on value before the comparison even starts — is what the rest of this piece is for.

Design

Pick up the S26 and the iPhone 17 back-to-back and the first thing you think is: did these two companies share a blueprint? Heights are dead-even at 149.6mm. Width differs by 0.2mm, which doesn’t make a difference in real life.

Apple’s phone is thicker at 7.95 mm versus Samsung’s 7.2 mm, and heavier too, tipping the scales at 177 grams against the S26’s 167 grams. What gives away Samsung’s entry-level flagship is its boxy corners, which are immediately recognizable against the rounded corners on the iPhone 17.

Both phones use aluminum frames, so nobody’s winning a materials fight there. The glass is where they split — Gorilla Glass Victus 2 front and back on the S26, and Apple’s Ceramic Shield 2 on the iPhone 17’s front, which Apple says scratches three times less easily than regular glass.

Dunking either one is fine either way; IP68 on both. The S26 comes in Black, Cobalt Violet, Sky Blue, and White — pick one and people will notice. The iPhone 17 gives you Black, White (my personal favorite), Mist Blue, Sage, and Lavender — tones quiet enough that your phone practically whispers.

Display

Both screens measure 6.3 inches, so that argument ends before it starts. Where things get interesting is everything underneath that number.

The iPhone 17 sports a 2622 x 1206 pixel OLED panel at 460 ppi, sharper than the Galaxy S26’s panel, which maxes out at FHD+ with 2340 x 1080 pixels (411 ppi). The S26’s display is fine, looks good, and frankly most people won’t lose sleep over it. Side-by-side though, the difference shows (I hope Samsung sees it as well).

The S26 peaks at 2,600 nits outdoors, which handles most sunny days well enough. The iPhone 17 pushes to 3,000 nits — and upon using it side by side with the Galaxy S25 (which shares its peak brightness with the S26), I found the iPhone to be noticeably brighter, especially under direct sunlight.

Both do 1-120Hz adaptive refresh rates, so scrolling feels equally fluid on either one. Then there’s always-on display — both phones keep your notifications visible without fully waking the screen, which sounds minor until you’ve used it for a week and then picked up a phone without it.

While I’ve grown accustomed to the Dynamic Island on the iPhone 17, you might not like it at first glance, especially if you’re upgrading from an Android phone with a punch-hole camera. That’s something to keep in mind as well.

Performance

Specs-wise, Samsung shows up with more — Snapdragon 8 Elite Gen 5, 3nm, 12GB RAM. Apple brings the A19 and 8GB. On a spec sheet that reads as a clean Samsung win, but phones aren’t spec sheets.

Benchmarks tell a messier story. The S26 pulls ahead when multiple cores are working together, which is relevant for heavy multitasking. The scores are nearly identical in the single-core test, which is what your phone actually leans on for most things: launching apps, typing, switching between tasks. All in all, both phones offer similar (read: excellent) day-to-day performance.

The RAM gap is where it gets more practical. Twelve gigabytes means more apps stay open in the background without reloading. If your phone use involves juggling a lot at once, the S26 has more headroom. And yes, both are perfectly capable of handling the most demanding games at high frame rates; it’s just a matter of whether the developer has included support for it.

I’ve been using the iPhone 17 for about six months now, and I haven’t once felt that the phone lacks CPU or GPU performance when I need it. That’s the thing with top-tier mobile chipsets; they’ve got more horsepower than most people can use upfront, but it helps maintain performance in the long term.

Operating System

The S26 runs One UI 8.5 on Android 16 — the most put-together version of Samsung’s skin yet. Rounder, cleaner, and stuffed with settings you’ll spend a Sunday afternoon exploring.

Galaxy AI actually pulls weight now: Now Nudge suggests replies by reading your screen context, Call Screening stops unknown callers before your phone buzzes, and Audio Eraser finally works inside YouTube and Instagram, not just Samsung’s own apps. Bixby gets Perplexity as backup for the questions it used to fumble.

iOS 26 got a full face-lift with Liquid Glass — translucent menus and icons that split opinion pretty cleanly between “stunning” and “bit much.” Apple Intelligence handles real-time translation across calls, Messages, and FaceTime, though it’s not as useful as Galaxy AI. The ecosystem perks, however, are still superior.

Samsung commits to seven years of operating system and security updates, while Apple usually provides around five to six years of software support.

Cameras

The S26 has a 50MP main, 12MP ultrawide, and a dedicated 10MP 3x telephoto. The iPhone 17 runs a 48MP main at f/1.6, a 48MP ultrawide, and a 2x “zoom” that’s just the main sensor being cropped — not a real telephoto lens.

Daylight shots on both look great, full stop. Where they differ is taste. Samsung cranks up the saturation and contrast — your photos come out looking like they’ve already been edited, ready to post. Apple mostly shows you what was there, i.e., the camera reproduces natural, neutral colors.

After dark, the iPhone quietly holds its own. Apple’s Night Mode has been one of the best in the business for years (along with the f/1.6 aperture). Zoom goes the other way. A real 3x optical lens on the S26 versus Apple’s cropped 2x is a clear hardware win for Samsung.

The most unique thing about the iPhone 17’s camera system is its selfie shooter — an 18MP (f/1.9) square-shaped camera sensor that can capture super wide selfies in multiple aspect ratios. Apple surely needs to bump up the resolution for the visual area the sensor covers, but even so, Samsung’s 12MP sensor is no match for it.

Video on both is strong at 4K/60fps with good stabilization. Apple’s color science gives it a slight edge in footage quality, plus the sensor-shift stabilization works like a charm, but the S26 shoots 8K if that’s something you need. Most people don’t, but the option exists.

Battery

The S26 has a bigger tank (4,300mAh versus the iPhone 17’s 3,692mAh), and Samsung claims 31 hours of video playback to Apple’s 30. Only one hour separates them, despite a notably smaller cell on Apple’s side. That gap says more about the A19’s efficiency than it does about the S26’s battery.
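The efficiency point can be checked with quick arithmetic on the figures quoted above. This is a back-of-the-envelope sketch: it uses the vendors’ claimed playback hours and rated capacities as given, and it treats mAh as a proxy for energy, which ignores any difference in cell voltage between the two phones. The type and function names are illustrative.

```typescript
interface BatterySpec {
  name: string;
  capacityMah: number; // rated capacity quoted in the text
  videoHours: number;  // vendor-claimed video playback
}

// Claimed playback hours per 1,000 mAh of rated capacity.
function hoursPerAh(spec: BatterySpec): number {
  return (spec.videoHours / spec.capacityMah) * 1000;
}

const s26: BatterySpec = { name: "Galaxy S26", capacityMah: 4300, videoHours: 31 };
const iphone17: BatterySpec = { name: "iPhone 17", capacityMah: 3692, videoHours: 30 };

// Relative advantage of the iPhone 17 in playback per unit of capacity.
const advantage = hoursPerAh(iphone17) / hoursPerAh(s26) - 1;
```

On these numbers the S26 delivers about 7.2 hours per 1,000 mAh and the iPhone 17 about 8.1, so advantage comes out near 0.127, or roughly 13 percent more claimed playback per unit of rated capacity.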

Charging is where the iPhone pulls ahead. With 40W wired charging, the handset reaches 50% in roughly 25 minutes. The S26 still sits at 25W, the same as its last two predecessors. Wireless is where the gap widens: the iPhone 17 does 25W via MagSafe, while the S26 base model caps at 15W standard wireless.

Conclusion

The S26 makes a stronger case on paper. More RAM, a bigger battery, a real telephoto lens, 8K video, and One UI 8.5 giving you enough customization to keep a hobbyist busy for weeks. It’s the better phone for power users, Android loyalists, and anyone who shoots a lot of zoom photos or wants their phone to last the full day.

The iPhone 17 wins on the things that are harder to put in a spec sheet. Faster charging, better low-light photography, smoother sustained performance under load, the refreshing iOS 26 experience, and an ecosystem so tightly integrated it borders on a lifestyle choice. If you own a Mac, iPad, or AirPods, the iPhone 17 doesn’t just work well — it works together in a way the S26 can’t replicate.

I’m thrilled by Wednesday’s star-studded third year, here’s everything we know about season 3

Netflix’s mystery series Wednesday reinvigorated the Addams Family for the modern age, becoming one of the streaming giant’s most-watched shows. It’s only natural that Netflix keep the hype running with Wednesday’s upcoming third season.

There’s a lot we can expect to see from Wednesday after season 2. It’s unclear what Wednesday and her peers will encounter in the next season, but what makes the show so fun is watching the mystery unfold. In all fairness, we don’t like waiting years for more episodes. Don’t fret. We’ve got you covered with everything you need to know about Wednesday season 3.

What’s the story of Wednesday season 3?

Netflix Tudum wrote that in season 3, “a new wave of insidious interlopers will be darkening the doors of Nevermore Academy.” Wednesday showrunners Al Gough and Miles Millar said to Tudum that the third season will also “excavate some long-rotting Addams Family secrets.”

“Our goal for Season 3 is the same as it is for every season: to make it the best season of Wednesday we possibly can,” said Gough. “We want to continue digging deeper into our characters while expanding the world of Nevermore and Wednesday.”

These statements fit with what we saw as Wednesday left Nevermore with Uncle Fester and Thing in search of her alpha werewolf bestie, Enid. In this ending to season 2, Wednesday had a vision of her Aunt Ophelia, imprisoned by Grandmama Frump and writing in blood, “Wednesday Must Die,” suggesting some of the Addams family’s skeletons will come out of the closet.

When will Wednesday season 3 come out?

Since Wednesday season 3 is so early in production, there is no release date set at this time. We can’t see into the future like Wednesday Addams, but it is likely she will return in 2027. Though the second season premiered three years after the first, the 2023 writers’ and actors’ strikes stalled production for several months. Barring any future delays, the wait for season 3 should last a total of two years rather than three.

When and where is Wednesday season 3 filming?

Production for Wednesday season 3 started in February 2026, according to Netflix Tudum. Like with season 2, filming will take place near Dublin.

Who will return in Wednesday season 3?

As usual, Wednesday will feature a vast, quirky cast of characters in season 3, including members of the Addams Family and Wednesday’s classmates at Nevermore Academy.

- Jenna Ortega as Wednesday Addams

- Catherine Zeta-Jones as Morticia Addams

- Luis Guzman as Gomez Addams

- Isaac Ordonez as Pugsley Addams

- Joanna Lumley as Grandmama Hester Frump

- Joy Sunday as Bianca Barclay

- Georgie Farmer as Ajax Tanaka

- Moosa Mostafa as Eugene Ottinger

- Evie Templeton as Agnes DeMille

- Victor Dorobantu as Thing

- Winona Ryder as Tabitha

- Emma Myers as Enid Sinclair

- Hunter Doohan as Tyler Galpin

- Fred Armisen as Uncle Fester

- Billie Piper as Isadora Capri

- Luyanda Unati Lewis-Nyawo as Santiago

- Oscar Morgan as Atticus

- Kennedy Moyer as Daisy

- Noah Taylor as Cyrus

- Chris Sarandon as Balthazar

- Eva Green as Ophelia Frump

Who’s new to Wednesday season 3?

Just like season 2, Wednesday’s third season will welcome plenty of new characters to Nevermore Academy. Actors joining the cast next season include Winona Ryder (Stranger Things), Chris Sarandon (Dog Day Afternoon, The Princess Bride), Noah Taylor (Peaky Blinders, Game of Thrones), Oscar Morgan (A Knight of the Seven Kingdoms), and Kennedy Moyer (Task, Roofman).

In an interview with Netflix Tudum, Gough and Millar shared a statement praising Eva Green and her performance as Wednesday’s Aunt Ophelia:

“Eva Green has always brought an exhilarating, singular presence to the screen — elegant, haunting, and beautifully unpredictable. Those qualities make her the perfect choice for Aunt Ophelia. We’re excited to see how she transforms the role and expands Wednesday’s world.”

Green also said to Tudum, “I’m thrilled to join the woefully twisted world of Wednesday as Aunt Ophelia. This show is such a deliciously dark and witty world, I can’t wait to bring my own touch of cuckoo-ness to the Addams family.”

Winona Ryder’s casting is also particularly noteworthy. The actor has frequently starred as a main player in producer Tim Burton’s films. Most recently, she starred alongside Jenna Ortega in Beetlejuice Beetlejuice. Whether or not Ryder’s new character will support Wednesday on her journey, it will be exciting to see the former reignite her on-screen chemistry with Ortega.

Are there any trailers for Wednesday season 3?

On February 23, Netflix shared a fiendishly flamboyant video announcing that production for Wednesday season 3 had started, all while revealing the cast. The trailer also featured a “?” to label one of the season’s cast members, suggesting this mystery character plays an important role that would spoil the story.

Google and OpenAI employees sign open letter in ‘solidarity’ with Anthropic

Hundreds of employees at Google and OpenAI have signed an open letter urging their companies to stand with Anthropic in its standoff with the Pentagon over military applications for AI tools like Claude.

The letter, titled “We Will Not Be Divided,” calls on the leadership of both companies to “put aside their differences and stand together to continue to refuse the Department of War’s current demands for permission to use our models for domestic mass surveillance and autonomously killing people without human oversight.” These are two lines that Anthropic CEO Dario Amodei has said should not be crossed by his or any other AI company.

As of publication, the letter has over 450 signatures, almost 400 of which come from Google employees and the rest from OpenAI. Currently, roughly 50 percent of all participants have chosen to attach their names to the cause, with the rest remaining anonymous. All are verified as current employees of these companies. The original organizers of the letter aren’t Google or OpenAI employees; they say they are unaffiliated with any AI company, political party or advocacy group.

The open letter is the latest development in the saga between Anthropic and US Defense Secretary Pete Hegseth, who threatened to label the company a “supply chain risk” if it did not agree to withdraw certain guardrails for classified work. The Pentagon has also been in talks with Google and OpenAI about using their models for classified work, with earlier this week. The letter argues the government is “trying to divide each company with fear that the other will give in.”

OpenAI CEO Sam Altman told his employees on Friday that the ChatGPT maker will draw the same red lines as Anthropic, according to an internal memo seen by . He told on the same day that he doesn’t “personally think the Pentagon should be threatening DPA against these companies.”

Smartphone Market To Decline 13% in 2026, Marking the Largest Drop Ever Due To the Memory Shortage Crisis

An anonymous reader shares a report: Worldwide smartphone shipments are forecast to decline 12.9% year-on-year (YoY) in 2026 to 1.1 billion units, according to the International Data Corporation (IDC) Worldwide Quarterly Mobile Phone Tracker. This decline will bring the smartphone market to its lowest annual shipment volume in more than a decade. The current forecast represents a sharp decline from our November forecast amid the intensifying memory shortage crisis.

Global smartphone shipments expected to fall 13% amid memory supply crunch

According to a new report from market research firm International Data Corporation, global smartphone shipments are expected to total around 1.1 billion units this year, down from 1.26 billion in 2025. This marks a significant downward revision from the company’s November 2025 forecast, which projected a decline of between 0.9…

Perplexity launches Computer, wants AI to run tasks for months, not minutes

Rather than relying on a single model, Perplexity AI’s Computer system functions as an orchestrator across multiple models. Anthropic’s Claude Opus 4.6 serves as the primary reasoning engine, while Gemini handles deep research tasks. Nano Banana generates images, Veo 3.1 produces video, Grok executes lightweight, speed-optimized tasks, and OpenAI’s ChatGPT…

Loewe’s Vega TVs give you slick design in smaller sizes

Loewe has announced the Vega, a new range of compact 4K Ultra HD smart TVs available in 32 and 43-inch sizes.

The Vega sits below Loewe’s flagship Stellar OLED line, which spans 42 to 97 inches and starts at £1,699, but uses VA LCD panels with full-array Direct LED backlighting rather than OLED, a technology choice that allows Loewe to hit higher peak brightness figures across a smaller and more affordable chassis.

The 43-inch model carries 390 LED dimming zones and reaches a peak luminance of 880 cd/m², while the 32-inch version uses 260 dimming zones and reaches 550 cd/m², both figures sitting above what most competing LCD televisions at this screen size typically deliver to living rooms in bright daylight conditions.

Both models support the full range of HDR formats, including Dolby Vision IQ, HDR10, and HLG, with the Vega marking the first time Loewe has offered a 4K Ultra HD panel in a 32-inch format, a size that most manufacturers continue to supply only in Full HD resolution.

The integrated soundbar delivers 60 watts of Class-D amplification developed and tuned by Loewe’s in-house audio team, supporting Dolby Atmos and connecting to external sound systems through HDMI eARC, a configuration that competes more directly with premium soundbar bundles than with the basic speakers typically built into televisions at this screen size.

Smart features and connectivity

Loewe’s os9 smart platform, built on the VIDAA operating system, handles streaming access across Netflix, YouTube, Disney+, and Apple TV, with Apple AirPlay, Miracast, DLNA, and Matter connectivity expanding the Vega’s integration with both Apple and broader smart home ecosystems.

The 43-inch model carries two HDMI 2.1 ports supporting 4K at up to 120Hz alongside VRR and ALLM for low-latency gaming, while the 32-inch version supports 4K at up to 60Hz through its HDMI 2.1 ports, with both models also offering cloud gaming access through Blacknut and Boosteroid via the VIDAA platform.

A brushed aluminium frame, rotatable metal table stand with chrome finish, and integrated cable management with magnetic rear covers reflect the same design discipline Loewe applies across its higher-end OLED TV range, placing the Vega closer in aesthetic approach to Bang and Olufsen than to mass-market LCD televisions at comparable screen sizes.

The Loewe Vega 32-inch is priced at £1,650 and the 43-inch at £1,900, with both models available through selected Loewe retail partners from March 2026.

For a closer look at how the Vega’s LCD panel compares against the best screens on the market, our guide to the best OLED TVs rounds up the top picks from every major brand.
