When completing math problems, students often have to show their work. It’s a method teachers use to catch errors in thinking, to make sure students are grasping mathematical concepts correctly.

New AI projects in development aim to automate that process. The idea is to train machines to catch and predict the errors students make when studying math, so that teachers can correct student misconceptions in real time.

For the first time, developers can build fascinating algorithms into products that will help teachers without requiring them to understand machine learning, says Sarah Johnson, CEO at Teaching Lab, which provides professional development to teachers.

Some of these efforts trace back to the U.K.-based edtech platform Eedi Labs, which has held a series of coding competitions since 2020 to explore ways to use AI to boost math performance. The latest, held earlier this year, tried to use AI to capture misconceptions from multiple-choice questions and accompanying student explanations. It relied on Eedi Labs’ data but was run by The Learning Agency, an education consultancy firm in the U.S. The competition was a joint project with Vanderbilt University, hosted on Kaggle, a data science platform. It received support from the Gates Foundation and the Walton Family Foundation, and coding teams competed for $55,000 in awards.

The latest competition achieved “impressive” accuracy in predicting student misconceptions in math, according to Eedi Labs.

Researchers and edtech developers hope this kind of breakthrough can help bring useful AI applications into math classrooms — which have lagged behind in AI adoption, even as English instructors have had to rethink their writing assignments to account for student AI use. Some people have argued that, so far, there has been a conceptual problem with “mathbots.”

Perhaps training algorithms to identify common student math misconceptions could lead to the development of sophisticated tools to help teachers target instruction.

But is that enough to improve students’ declining math scores?

Solving the (Math) Problem

The deluge of money pouring into artificial intelligence has so far been unrelenting. Despite fears that the economy is in an “AI bubble,” edtech leaders hope that smart, research-backed uses of the technology will deliver gains for students.

In the early days of generative AI, people thought you could get good results by just hooking up an education platform to a large language model, says Johnson, of Teaching Lab. All these chatbot wrappers popped up, promising that teachers could create the best lesson plans using ChatGPT in their learning management systems.

But that’s not true, she says. You need to focus on applications of the technology that are trained on education-specific data to actually help classroom teachers, she adds.

That’s where Eedi Labs is trying to make a difference.

Currently, Eedi Labs sells an AI tutoring service for math. The model, which the company calls “human in the loop,” has human tutors check messages automatically generated by its platform before they are sent to students, and make edits when necessary.

Through efforts like its recent competition, the platform’s leaders also hope to train machines to anticipate the errors students make, which they believe could further speed up learning.

But training machine learning algorithms to identify common math misconceptions a student holds isn’t all that easy.

Cutting Edge?

Whether these attempts to use AI to map student misconceptions prove useful depends on what computer scientists call “ground truth,” the quality of the data used to train the algorithms in the first place. That means it depends on the quality of the multiple-choice math questions, and also of the misconceptions those questions reveal, says Jim Malamut, a postdoctoral researcher at Stanford Graduate School of Education. Malamut is not affiliated with Eedi Labs or with The Learning Agency’s competition.

The approach in the latest competition is not groundbreaking, he argues.

The dataset used in this year’s misconceptions contest had teams sort through student answers to multiple-choice questions, along with brief rationales the students wrote. For the company, that’s an advancement, since previous versions of the technology relied on multiple-choice answers alone.

Still, Malamut describes the use of multiple-choice questions as “curious” because he believes the competition chose to work with a “simplistic format” when the tools being tested are better suited to discerning patterns in more complex, open-ended student answers. That is, after all, an advantage of large language models, Malamut says. In education, psychometricians and other researchers long relied on multiple-choice questions because they are easier to scale, but with AI, scale shouldn’t be as much of a barrier, Malamut argues.

Pushed by declining U.S. scores on international assessments, the country has shifted over the last decade-plus toward “Next-Generation Assessments,” which aim to test conceptual skills. It’s part of a larger shift among researchers toward the idea of “assessment for learning,” which holds that assessment tools should emphasize gathering information that’s useful for teaching rather than what’s convenient for researchers to measure, according to Malamut.

Yet the competition relied on questions that clearly predate that trend, Malamut says, in a way that might not meet the moment.

For example, some questions asked students to figure out which decimal was the largest, which sheds very little light on conceptual understanding. Current research suggests it’s better to have students represent a decimal number with base-10 blocks or to point to missing decimals on a marked number line. Historically, these sorts of questions couldn’t be used in large-scale assessments because they are too open-ended, Malamut says. But applying AI to current thinking in education research is precisely where the technology could add the most value, Malamut adds.

But for the company developing these technologies, “holistic solutions” are important.

Eedi Labs blends multiple choice questions, adaptive assessments and open responses for a comprehensive diagnosis, says cofounder Simon Woodhead. This latest competition was the first to incorporate student responses, enabling deeper analysis, he adds.

But there’s a trade-off between the time it takes to give students these assessments and the insights they give teachers, Woodhead says. So the Eedi team thinks that a system that uses multiple choice questions is useful for scanning student comprehension inside a classroom. With just a device at the front of the class, a teacher can home in on misconceptions quickly, Woodhead says. Student explanations and adaptive assessments, in contrast, help with deeper analysis of misconceptions. Blending these gives teachers the most benefit, Woodhead argues. And the success of this latest competition convinced the company to further explore using student responses, Woodhead adds.

Still, some think the questions used in the competition were not fine-tuned enough.

Woodhead notes that the competition relied on broader definitions of what counts as a “misconception” than Eedi Labs usually does. Nonetheless, the company was impressed by the accuracy of the AI predictions in the competition, he says.

Others are less sure that the competition really captured student misunderstandings.

Education researchers now know much more than they used to about the kinds of questions that get to the core of student thinking and reveal the misconceptions students may have, Malamut says. But many of the questions in the contest’s dataset don’t do this well, he says. Even though the dataset included both multiple-choice options and short answers, it could have used better-formed questions, Malamut thinks. There are ways to ask questions that bring out student ideas. Rather than asking students to answer a question about fractions, you could ask them to critique someone else’s reasoning. For example: “Jim added these fractions in this way, showing his work like this. Do you agree with him? Why or why not? Where did he make a mistake?”

Whether or not the approach has found its final form, interest in these uses of AI is growing, and that interest comes with money for exploring new tools.

From Computer Back to Human

The Trump administration is betting big on AI as a strategy for education, making federal dollars available. Some education researchers are enthusiastic, too, boosted by $26 million in funding from Digital Promise intended to help narrow the distance between best practices in education and AI.

These approaches are early, and the tools still need to be built and tested. Nevertheless, some argue the work is already paying off.

A randomized controlled trial conducted by Eedi Labs and Google DeepMind found that math tutoring that incorporated Eedi’s AI platform boosted learning among 11- and 12-year-old students in the U.K. The study focused on the company’s “human in the loop” approach, human-supervised AI tutoring that is currently used in some classrooms. In the U.S., the platform is used by 4,955 students across 39 K-12 schools, colleges and tutoring networks. Eedi Labs says it is conducting another randomized controlled trial in 2026 with Imagine Learning in the U.S.

Others have embraced a similar approach. Teaching Lab, for example, is actively working on AI for classroom use; Johnson told EdSurge that the organization is developing a model that is also based on data borrowed from Eedi and from a company called Anet. That model is currently being tested with students, according to Johnson.

Several of these efforts require sharing tech insights and data. That runs counter to many companies’ typical practices for protecting intellectual property, according to the Eedi Labs CEO. But he thinks the practice will pay off. “We are very keen to be at the cutting edge, that means engaging with researchers, and we see sharing some data as a really great way to do this,” he wrote in an email.

Still, once the algorithms are trained, everyone seems to agree that turning them into classroom success is another challenge.

What might that look like?

The data infrastructure can be built into products that let teachers modify curriculum based on the context of the classroom, Johnson says. If you can connect the infrastructure to student data and allow it to make inferences, it could provide teachers with useful advice, she adds.

Meg Benner, managing director of The Learning Agency, the organization that ran the misconceptions contest, suggests the technology could be used to tell teachers which misconceptions their students hold, or even to trigger a chatbot-style lesson that helps students overcome those misconceptions.

It’s an interesting research project, says Johnson, of Teaching Lab. But once this model is fully built, it will still need to be tested to see if refined diagnosis actually leads to better interventions in front of teachers and students, she adds.

Some are skeptical that the products companies build from this work will enhance learning all that much. After all, having a chatbot-style tutoring system conclude that students are using additive reasoning when multiplicative reasoning is required may not transform math instruction. Indeed, some research has shown that students don’t respond well to chatbots. For instance, the famous “5 percent problem” revealed that usually only the top students see results from most digital math programs. Instead, some argue, teachers have to handle misconceptions as they come up. That means giving students an experience or conversation that exposes the limits of old ideas and the power of clear thinking. The challenge, then, is figuring out how to get the insights from machine analysis back out to students.

But others think that the moment is exciting, even if there’s some hype.

“I’m cautiously optimistic,” says Malamut, the postdoctoral researcher at Stanford. Formative assessments and diagnostic tools exist now, but they are not automated, he says. True, the assessment data that’s easy to collect isn’t always the most helpful to teachers. But if used correctly, AI tools could help close that gap.

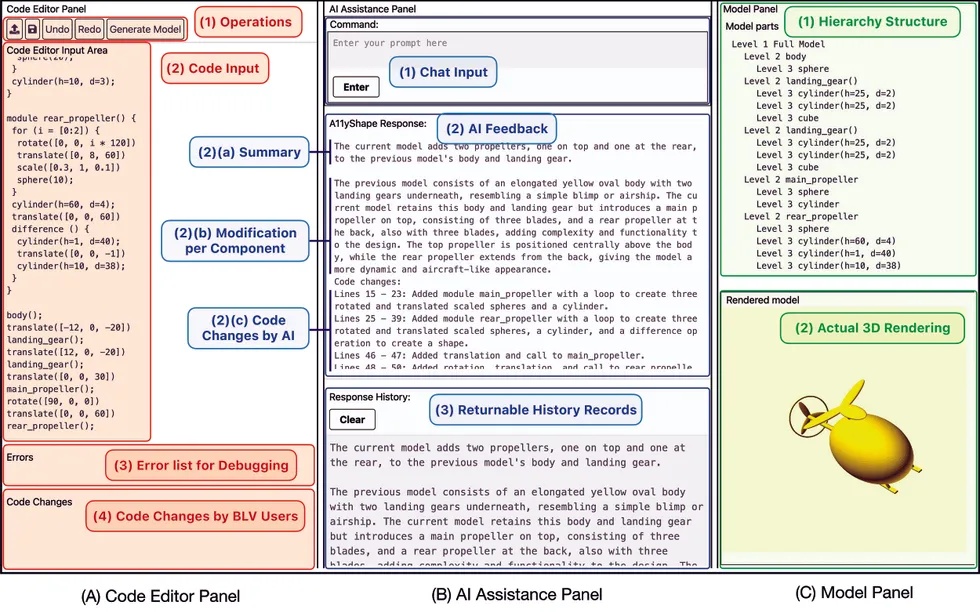

A11yShape’s three panels synchronize code, AI descriptions, and model structure so blind programmers can discover how code changes affect designs independently.

A11yShape’s three panels synchronize code, AI descriptions, and model structure so blind programmers can discover how code changes affect designs independently. A new assistive program for blind and low-vision programmers, A11yShape, assists visually disabled programmers in verifying the design of their models.Source:

A new assistive program for blind and low-vision programmers, A11yShape, assists visually disabled programmers in verifying the design of their models.Source:

![LISA - 'LALISA + MONEY' | 2023 WORLD TOUR [BORN PINK]](https://wordupnews.com/wp-content/uploads/2026/02/1770487179_maxresdefault-80x80.jpg)