Tech

The perfect soundbars for small spaces

Not everyone has the space for a surround sound system or even a full-sized soundbar. If that’s your situation, we’ve come up with several small options that will work for your crowded space.

With this list of the best small soundbars, we’ve tried to make sure that, even though they’re small, there’s an option to suit every need.

We’ve included Dolby Atmos soundbars, soundbars that work with older TVs that don’t have HDMI ports, and models that come with subwoofers, so there’s an array of options to choose from.

We test every soundbar by watching lots of movies and listening to plenty of music. We examine how well each model handles dialogue, effects and different genres of music, and from these tests we determine which ones are worth your cash.

Keep reading to discover all the best small soundbars available right now. We also have other guides worth a look, including our best soundbars and best Dolby Atmos soundbars.

We’ve also narrowed down the best surround sound systems for those with the space and budget to create a bigger sound system.

Best small soundbars at a glance

- Sonos Beam (Gen 2)

- Samsung HW-S61B

- Sonos Ray

- Polk MagniFi Mini AX

- Sharp HT-SB700

- Creative Stage Pro

Learn more about how we test soundbars

Soundbars were created to boost TV sound quality – which means we end up watching a lot of TV. We play everything – news reports for voices, movies for scale and effects steering – to ensure that the soundbars that come through the doors at Trusted Reviews are given a proper challenge. We’ll play different genres of music, too, since a good soundbar should be capable of doubling up as a great music system.

More complex soundbars feature network functionality for hooking up to other speakers and playing music around the home, so we test for connectivity issues and ease of use. We cover the spectrum of models available, everything from cheap soundbars costing less than £100 to those over £1000, to ensure our reviews benefit from our extensive market knowledge. Every product is compared to similarly priced rivals, too.


Sonos Beam (Gen 2)

Pros

- Clean and balanced sound

- Upgradeable

- Excellent size

- Amazon Alexa and Google Assistant support

Cons

- HDMI eARC input only

- Limited DTS support

Compared to the original Beam, the Beam Gen 2 adds an HDMI eARC port that allows it to play full-fat lossless Atmos soundtracks.

That also means you’ll need an eARC-compatible TV to get the best out of it.

Otherwise, little has changed with the Beam Gen 2, which is best suited to TVs up to and including 49 inches.

The current Beam supports Wi-Fi and the Sonos S2 app, which offers access to a multitude of streaming services such as Tidal, Deezer and Qobuz, as well as Sonos’ own Radio service.

You can also call on Google Assistant and Amazon Alexa for voice control, as well as run Trueplay (as long as you’ve got an iOS device), which optimises the Beam’s audio performance for the room it’s in.

During testing we found it produced an excellent audio performance, offering a solid low end and a generally balanced sound across the frequency range.

It also handled music impressively, with no noticeable distortion and subtler elements handled with nuance. Dolby Atmos is achieved through virtual processing rather than up-firing speakers, and it delivered a good performance with a decent sense of dimensionality when we watched Captain Marvel on Disney+.

The Beam Gen 2 doesn’t have fully featured DTS support, but the similarly compact Polk MagniFi Mini AX and Denon Home Sound Bar 550 do support DTS:X.

Like the Sonos, both can be paired with a subwoofer for added ‘oomph’. A slightly more expensive but still impressive alternative is the Sennheiser Ambeo Mini.

The Beam Gen 2 isn’t perfect, but as a means of getting Atmos into the home in a small form factor, it’s a very good option.

Samsung HW-S61B

Pros

- Sharp, clear and spacious sound

- Small footprint

- Affordable at its current price

- Wall-mount brackets included

Cons

- LED menu is practically invisible from a seated position

- No HDMI eARC

The Samsung HW-S61B is still going and serves as an excellent, affordable rival to the Sonos Beam Gen 2.

It’s a compact speaker capable of producing a crisp, clear and punchy sound. It offers plenty of energy and outright attack that easily betters anything a TV can produce.

Its built-in subwoofer provides impact to action scenes, and with Atmos content, the soundstage is bigger than the dimensions of the bar and TV, producing plenty of size and scale to go with Hollywood blockbusters.

It’s a pretty solid performer with music content, whether over Wi-Fi or Bluetooth, though the former produces a clearer, more detailed performance. The lack of HDMI eARC is a disappointment as it means you won’t get the highest-quality Dolby Atmos sound possible, and we’re not big fans of the LED screen’s placement. We can barely see it at the best of times given how small it is.

Features include Amazon Alexa voice control, though you’ll need another connected speaker to use it. AirPlay 2 is another means of playing audio to the system, and if you have a Samsung Galaxy smartphone, you can tap it on the surface of the soundbar to play music to it.

If you want to expand the system after you’ve bought it, the S61B supports the SWA-9200S wireless rear speaker system.

If you have a Samsung Q-Symphony-compatible TV, you can also take advantage of that feature, whereby the TV and soundbar speakers combine for a bigger sound.

There are other options on the market if you’re looking for a soundbar and subwoofer combo, most notably the Polk MagniFi Mini AX, but its Atmos performance isn’t as convincing as the Samsung’s.

New models have launched since this one first went on sale, and we’ll be hoping to review them at some point.

Sonos Ray

Pros

- Clean and powerful TV audio

- Surprising amount of bass

- Wide soundstage

- Optional surround sound

Cons

- Remote setup can be fiddly

- Better at TV than music

If you have an older TV (say, a Pioneer Kuro) or a second, smaller TV without HDMI inputs, the Sonos Ray is tailor-made for you.

It only supports audio through an optical connection, so you won’t have to worry about HDMI handshake issues.

Audio through an optical connection keeps things simple enough, though you do miss out on advanced 3D audio like Dolby Atmos and DTS:X. There’s only enough bandwidth for Dolby Digital and DTS soundtracks.

There are no built-in microphones for voice control from the likes of Amazon Alexa and Google Assistant. You can still have those smart features, but you’ll need to connect the Ray to another smart speaker.

The design looks a little different from other Sonos soundbars, with its lozenge shape and curved ends. Our reviewer felt it was a less in-your-face design that helps the Ray blend in with its surroundings. You can also fit it into an AV rack if you want to conceal it from view.

The sound is surprisingly wide for its size, with effective bass performance too. It offers a clear and obvious improvement on a TV’s own speakers, with dialogue much easier to understand. With music, we felt it sounded decent: perhaps not quite as good as it is with TV series and films, but passable enough. At its primary job of making audio clearer, the Sonos Ray excels.

Polk MagniFi Mini AX

Pros

- Exciting, dynamic sound (in the right mode)

- Ultra-compact dimensions

- Comes with a subwoofer

- Good range of connections

Cons

- Sub can hog the attention at times

- Not truly immersive

While a small soundbar is helpful in terms of reducing space, its size isn’t always great for producing a more cinematic sound, especially when it comes to bass. The Polk MagniFi Mini AX has you covered in that respect.

This is an ultra-compact Dolby Atmos/DTS:X soundbar from American brand Polk, and it differs from most other options on this list in that it isn’t an all-in-one design: it comes with a sizeable subwoofer.

This allows it to produce an energetic and dynamic performance, and given the weight and power behind the subwoofer, it’s likely to alert the neighbours to what you’re watching.

In our opinion, the Polk doesn’t fully convince as an immersive soundbar, though it performs better than the Creative Stage 360. It can do a decent impression of height effects, but without the greatest sense of definition, and its soundstage is front-heavy, though you can add Polk’s SR2 surround speakers as real channels for a greater sense of space.

Dialogue can be enhanced with Polk’s VoiceAdjust technology, although we found that while it did its job of boosting voices, it also tended to raise surrounding noise.

Tonally, we felt the soundbar sounded accurate, and there are good levels of detail and clarity to enjoy when the soundbar is put into its 3D mode, which also gives Atmos and DTS:X soundtracks a bigger, wider soundstage.

With music, it’s a solid performer, playing tracks with a crispness that we found avoided sibilance or harshness.

Chromecast is available alongside Bluetooth, AirPlay 2, Spotify Connect and a USB connection that can play MP3 files. With Atmos and DTS:X support for the same price as the Sonos Sub Mini, this is a good-value soundbar/subwoofer combination.

Sharp HT-SB700

Pros

- Impressive nearfield Dolby Atmos effect

- Clear, articulate voices

- Solid feature set

- Versatile footprint

- Classy design

Cons

- Short on meaningful bass

- Fussy indicator light arrangement

Measuring 520 x 72 x 110mm (WHD) and weighing under 2kg, the SB700 is stocky yet light enough to carry from room to room, which means it can double as both a sonic enhancer for small TVs and a companion for a workstation. We would advise against relying on the SB700 as the main audio source for a living room, though.

Included with the SB700 is a useful remote control that sports treble and bass controls, input selection and all the various EQ modes, including voice, movie, music, night and neutral. Sharp also usefully throws in an HDMI cable, which plugs easily into the soundbar’s rear, where the HDMI port sits alongside optical, USB (service) and 3.5mm audio inputs.

The four onboard 1.75-inch drivers are powered by Class D amplification delivering 140W of peak power. As well as Dolby Atmos decoding, the Sharp offers a 3D mode, also known as DAP (Dolby Atmos Processing). We especially appreciate that the SB700 is a plug-and-play device, and it supports Bluetooth 5.3 connectivity too.

Overall, we were impressed with the SB700’s audio quality. While it struggles with bass and doesn’t quite deliver a satisfyingly loud movie night, it still shows plenty of prowess in the midrange and high frequencies, and dialogue sounds clear.

Creative Stage Pro

Pros

- Clear, detailed sound with decent bass

- Decent with music

- Neat and tidy design

- Impressive SuperWide feature

Cons

- Odd volume issues with sources

What the Creative Stage Pro lacks in features, it more than makes up for in terms of sound and design quality.

While the Stage Pro feels more like a desktop soundbar than a cinema bar, it does sport a smart appearance, with a useful display at its front that can be seen from the sofa. Although undoubtedly compact, it’s tall enough to block the TV’s IR receiver, which means you might struggle to use your remote control with your TV.

Otherwise, the bar is paired with a similarly unassuming subwoofer that relies on a wired connection to the soundbar. Usefully, as it’s front-firing, you’re free to place it anywhere.

As mentioned earlier, despite its “Pro” moniker, there aren’t many features at play here. While there is Bluetooth 5.3 and support for Dolby Audio and Dolby Digital+ soundtracks, there’s no Wi-Fi. Even so, it still covers the basic connections, including an optical input, HDMI ARC, USB-C and even an auxiliary input.

Having said that, there is one notable feature: SuperWide. This expands the size of the Stage Pro’s sound and pushes audio out wide in a way that’s much bigger than the speaker. Depending on how close you’re sitting to the speaker, you can choose between Near-Field and Far-Field too. The latter is especially impressive as it manages to keep voices clear while expanding the width of the soundstage.

Overall, although it’s not an immersive soundbar, we were pretty impressed with the sense of height it can provide. The subwoofer, meanwhile, does a good job of providing punchy bass.

We did struggle with the soundbar’s volume levels, especially when switching between sources, as the Stage Pro can veer from excessively loud to surprisingly quiet. It’s frustrating, as it seems as if there’s no way to minimise those swings in volume.

FAQs

Does a soundbar need to be the same size as my TV?

No, but it’s best for them to be at least similar in size. Full-size soundbars are best partnered with TVs of 50 inches and above, while compact soundbars are the best fit for TVs of 49 inches and smaller.

Does a soundbar need to be the same brand as my TV?

No, you won’t need a soundbar that’s the same brand as the TV. Any soundbar can work with any TV it is connected to. What you may want to consider is whether the soundbar and TV have been optimised to work best with each other. LG and Sony both have soundbars that share features with their respective TVs.

Full Specs

| | Sonos Beam (Gen 2) | Samsung HW-S61B | Sonos Ray | Polk MagniFi Mini AX | Sharp HT-SB700 | Creative Stage Pro |
|---|---|---|---|---|---|---|
| UK RRP | £449 | £329 | £279 | £429 | £199 | £129 |
| USA RRP | $449 | $349 | $279 | $499 | – | $169.99 |
| EU RRP | €499 | €419 | €298 | €479 | – | – |
| CA RRP | CA$559 | CA$499 | – | CA$699 | – | – |
| AUD RRP | AU$699 | AU$599 | – | – | – | – |
| Manufacturer | Sonos | Samsung | Sonos | Polk | Sharp | Creative |
| Size (dimensions) | 651 x 100 x 69 mm | 670 x 105 x 62 mm | 559 x 95 x 71 mm | 366 x 104 x 79 mm | – x 110 x – mm | 420 x 265 x 115 mm |
| Weight | 2.8 kg | 2.7 kg | 1.95 kg | – | 1.9 kg | – |
| ASIN | B09B12MGXM | B09W66KSXN | B09ZYCBWYF | B09VH9C5VV | B0CR6M8RW3 | – |
| Release date | 2021 | 2022 | 2022 | 2022 | 2024 | 2025 |
| First reviewed | 30/09/2021 | – | 31/05/2022 | – | – | – |
| Model number | Sonos Beam (2nd Gen) | HW-S61B/XU | Sonos Ray | MagniFi Mini AX | HT-SB700 | – |
| Model variants | Black or white | S60B | – | – | – | – |
| Soundbar channels | – | 5.0 | 5.1 | – | 2.0.2 | 2.1 |
| Driver(s) | 1x tweeter, 4x mid-woofers, 3x passive radiators | Centre, two side-firing | 2 x tweeters, 2 x mid-woofers, 2 x low-velocity ports | Two 19mm tweeters, three 51mm mid-range, 127mm × 178mm woofer | 2 x 1.75-in full-range forward-facing drivers plus 2 x 1.75-in full-range up-firing drivers | – |
| Audio (power output) | – | – | – | – | 140 W | 80 W |
| Connectivity | HDMI eARC, Optical S/PDIF (via adaptor) | – | Optical S/PDIF | AirPlay 2, Bluetooth 5.0, Chromecast, Spotify Connect | Bluetooth 5.3 | Bluetooth 5.3 |
| ARC/eARC | ARC/eARC | ARC | N/A | ARC/eARC | eARC | ARC |
| Colours | Black, white | White, black | Black, white | Black | Matt black | Black |
| Voice assistant | Amazon Alexa, Google Assistant | Works with Amazon Alexa, Google Assistant, Bixby | – | N/A | – | – |
| Audio formats | Dolby Digital, Dolby Digital Plus, Dolby TrueHD, Dolby Atmos, PCM | Dolby Atmos (Dolby Digital Plus), DTS Virtual:X, AAC, MP3, FLAC, ALAC, WAV, OGG, AIFF | DTS, Dolby Digital, Stereo PCM | Dolby Atmos, Dolby Audio, DTS:X, DTS | Dolby Audio, Dolby Atmos | – |
| Power consumption | – | 31 W | – | – | – | – |
| Subwoofer | – | – | – | Yes | – | Yes |
| Rear speaker | Optional | Optional | Optional | Optional | No | No |
| Multiroom | Yes (Sonos) | – | Yes (Sonos mesh) | – | – | – |


Pixel Buds 2a are back down to their Black Friday best price

If you’re in need of a budget-friendly pair of wireless earbuds that don’t skimp on features, then look no further than the Pixel Buds 2a.

The Pixel Buds 2a were designed with one specific goal in mind: to provide a compact, lightweight pair of earbuds that you can adjust to suit your needs.

Now the Buds 2a are back down to £99 from their RRP of £129, a saving of £30.

That’s their Black Friday price, so if you missed the discount the first time around, you’ve got a second chance.


The return of the Google Pixel Buds 2a to their Black Friday low is a great deal not to be missed.

Despite weighing a mere 4.3g each, the Pixel Buds 2a include active noise cancellation to filter out unwanted background noise in favour of what you’re listening to.

The ANC is surprisingly effective for such a budget device, but it can be adjusted to suit your preferences.

The buds themselves have a lightweight and comfortable fit, easily slipping into the contours of your ears. They even boast a degree of water resistance, so you can feel confident wearing them in light showers or during a tough workout.

With a Pixel phone, you can even get real-time Google Assistant updates on your commute, or play some relaxing music when you’re trying to drown out the sound of the London Underground.


And because these pods integrate with Google’s Gemini voice assistant, you don’t need to reach for your phone when controlling the Pixel Buds 2a either. Want to change the song? Just ask Gemini to do it. Need to turn up the volume? Again, Gemini has you covered.

Battery life is also competitive, with up to 20 hours with ANC engaged, so you’ll be set for a full day’s work.

They might not quite match Apple’s AirPods feature for feature, but for users of the Google ecosystem, the Pixel Buds 2a are the better buy.



OpenAI is hoppin’ mad about Anthropic’s new Super Bowl TV ads

On Wednesday, OpenAI CEO Sam Altman and Chief Marketing Officer Kate Rouch complained on X after rival AI lab Anthropic released four commercials, two of which will run during the Super Bowl on Sunday, mocking the idea of including ads in AI chatbot conversations. Anthropic’s campaign seemingly touched a nerve at OpenAI just weeks after the ChatGPT maker began testing ads in a lower-cost tier of its chatbot.

Altman called Anthropic’s ads “clearly dishonest,” accused the company of being “authoritarian,” and said it “serves an expensive product to rich people,” while Rouch wrote, “Real betrayal isn’t ads. It’s control.”

Anthropic’s four commercials, part of a campaign called “A Time and a Place,” each open with a single word splashed across the screen: “Betrayal,” “Violation,” “Deception,” and “Treachery.” They depict scenarios where a person asks a human stand-in for an AI chatbot for personal advice, only to get blindsided by a product pitch.

Anthropic’s 2026 Super Bowl commercial.

In one spot, a man asks a therapist-style chatbot (a woman sitting in a chair) how to communicate better with his mom. The bot offers a few suggestions, then pivots to promoting a fictional cougar-dating site called Golden Encounters.

In another spot, a skinny man looking for fitness tips instead gets served an ad for height-boosting insoles. Each ad ends with the tagline: “Ads are coming to AI. But not to Claude.” Anthropic plans to air a 30-second version during Super Bowl LX, with a 60-second cut running in the pregame, according to CNBC.

In the X posts, the OpenAI executives argue that these commercials are misleading because the planned ChatGPT ads will appear labeled at the bottom of conversational responses in banners and will not alter the chatbot’s answers.

But there’s a slight twist: OpenAI’s own blog post about its ad plans states that the company will “test ads at the bottom of answers in ChatGPT when there’s a relevant sponsored product or service based on your current conversation,” meaning the ads will be conversation-specific.

The financial backdrop explains some of the tension over ads in chatbots. As Ars previously reported, OpenAI struck more than $1.4 trillion in infrastructure deals in 2025 and expects to burn roughly $9 billion this year while generating about $13 billion in revenue. Only about 5 percent of ChatGPT’s 800 million weekly users pay for subscriptions. Anthropic is also not yet profitable, but it relies on enterprise contracts and paid subscriptions rather than advertising, and it has not taken on infrastructure commitments at the same scale as OpenAI.


What AI Integration Really Looks Like in Today’s Classrooms

In late 2022, when generative AI tools landed in students’ hands, classrooms changed almost overnight. Essays written by algorithms appeared in inboxes. Lesson plans suddenly felt outdated. And across the country, schools asked the same questions: How do we respond — and what comes next?

Some educators saw AI as a threat that enables cheating and undermines traditional teaching. Others viewed it as a transformative tool. But a growing number are charting a different path entirely: teaching students to work with AI critically and creatively while building essential literacy skills.

The challenge isn’t just about introducing new technology. It’s about reimagining what learning looks like when AI is part of the equation. How do teachers create assignments that can’t be easily outsourced to generative AI tools? How do elementary students learn to question AI-generated content? And how do educators integrate these tools without losing sight of creativity, critical thinking and human connection?

Recently, EdSurge spoke with three educators who are tackling these questions head-on: Liz Voci, an instructional technology specialist at an elementary school; Pam Amendola, a high school English teacher who reimagined her Macbeth unit to include AI; and Brandie Wright, who teaches fifth and sixth graders at a microschool, integrating AI into lessons on sustainability.

EdSurge: What led you to integrate AI into your teaching?

Amendola: When OpenAI’s ChatGPT burst onto the scene in November 2022, it upended education and sent teachers scrambling. Students were suddenly using AI to complete assignments. Many students thought, Why should I complete a worksheet when AI can do it for me? Why write a discussion post when AI can do it better and faster?

Our education system was built for an industrial age, but we now live in a technological age where tasks are completed rapidly. Learning at school should be a time of discovery, but education remains stuck in the past. We are in a place I call the in between. In this place, I discovered a need to educate students on AI literacy alongside the themes and structure of the English language.

I reimagined my Macbeth unit to integrate AI with traditional learning methods. I taught Acts I-III using time-tested approaches, building knowledge of both Shakespeare and AI into each act. In Act IV, students recreated their assigned scenes using generative AI to make an original movie. For Act V, they used block-based programming to have robots act out their scenes. My assessment had nothing to do with writing an essay, so it was uncheatable. I encouraged students to work with me to design the lesson so I could determine the best way to help them learn.

Voci: Last fall, I was in a literacy meeting with administrators and teachers where I heard concerns about the new science of reading materials not engaging students’ interest. While the books were highly accessible, students had no interest in reading them. This was my lightbulb moment. If we could use AI tools to develop engaging and accessible reading passages for students, we could also teach foundational AI literacy skills at the same time.

This is where The Perfect Book Project was born. Students work with teachers to develop their own perfect reading book that is both engaging and accessible, learning literary skills alongside how to work with and evaluate AI-generated content. In its pilot, I worked directly with teachers as students conceptualized, drafted, edited and published their books. I spent hundreds of hours creating prompts with content guardrails, accessibility constraints and research-based foundational literacy knowledge to guide students and teachers through the process.

Wright: I’m doing quite a bit of work around the U.N. Sustainable Development Goals, teaching our explorers the impact of our actions not just on ourselves but also on others and the environment. I wanted to see them use AI to deepen their knowledge and serve as a thought partner as they develop solutions to issues like climate change.

I created a lesson called “Investigating Energy Efficiency and Sustainability in Our Spaces.” The explorers went on a sustainability scavenger hunt around campus to find examples of energy-efficient items and sustainable practices. They used AI tools to analyze their findings, interpret and evaluate AI responses for accuracy and potential bias, and reflect on how technology and human decisions work together to create sustainable solutions. The AI in this lesson wasn’t about the tools they used, but more about how AI is viewed in the context of what they are learning.

What shifts in student learning did you observe?

Voci: One eye-opening moment was during my first lesson on hallucinations and bias with a third grade class. After introducing the concepts at a developmentally appropriate level, I had them reread their manuscripts through the lens of an AI hallucination and bias detective. It didn’t take long for the first student to find the first hallucination. There was incorrect scoring in a football game. AI counted a touchdown as one point. One student’s hand flew up; he was so excited to explain to me and the class how the model had incorrectly scored the game.

This discovery lit a fire under the rest of the class to begin looking more closely at every word of their text and not take it at face value. The class went on to find more hallucinations and discover some generalizations that did not represent their intentions.

Wright: I saw the explorers develop their critical thinking as they asked questions about how AI was used, how AI makes its decisions and whether this affects the environment. I truly appreciate that this age group holds onto their creativity and imagination. They don’t want AI to do the creating for them. They still want to draw their own pictures and tell their own stories.

Amendola: It was uncomfortable for my honors students to try something new. They were out of their element and craved the structure of the rubric. I had to let go of traditional grading structures first before I could help them embrace the ambiguity. Their willingness to explore and make mistakes was wonderful. The collaboration helped create a sense of class community that resulted in learning a new skill.

What’s your advice for educators hesitant to explore AI?

Amendola: Don’t be afraid to try new things. Keep in mind that the greatest success first requires a change of mindset. Only then can you open the doors to what generative AI can do for your students.

Voci: Don’t let the fear, weight and speed of AI advancement paralyze you. Find small, intentional steps that are grounded in human-centered values to move forward with your own knowledge, and then find ways to connect your new knowledge to support student learning. In this age of AI, we need to give our fellow educators the same resources, scaffolding and grace.

Wright: Jump in!

Join the movement at https://generationai.org to participate in our ongoing exploration of how we can harness AI’s potential to create more engaging and transformative learning experiences for all students.

Tech

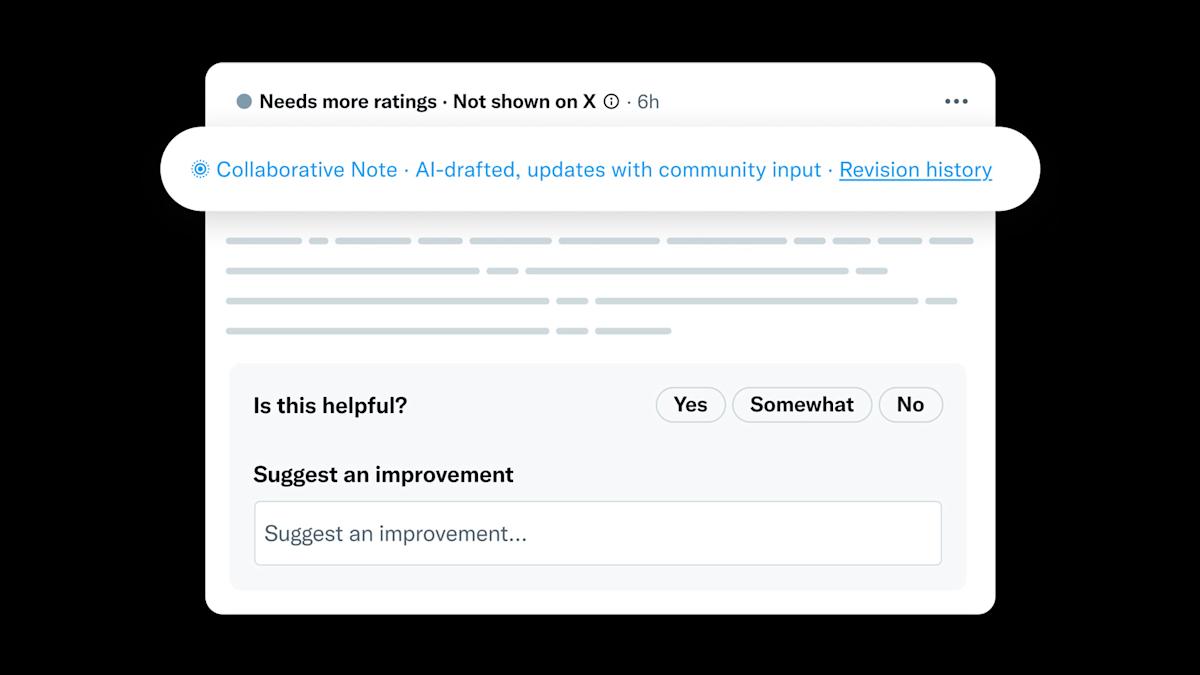

X’s latest Community Notes experiment allows AI to write the first draft

X is experimenting with a new way for AI to write Community Notes. The company is testing a new “collaborative notes” feature that allows human writers to request an AI-written Community Note.

It’s not the first time the platform has experimented with AI in Community Notes. The company started a pilot program last year to allow developers to create dedicated AI note writers. But the latest experiment sounds like a more streamlined process.

According to the company, when an existing Community Note contributor requests a note on a post, the request “now also kicks off creation of a Collaborative Note.” Contributors can then rate the note or suggest improvements. “Collaborative Notes can update over time as suggestions and ratings come in,” X says. “When considering an update, the system reviews new input from contributors to make the note as helpful as possible, then decides whether the new version is a meaningful improvement.”

X doesn’t say whether it’s using Grok or another AI tool to actually generate the fact check. If it were using Grok, that would be in line with how a lot of X users currently invoke the AI on threads, with replies like “@grok is this true?”

Community Notes has often been criticized for moving too slowly, so adding AI into the mix could help speed up the process of getting notes published. Keith Coleman, who oversees Community Notes at X, wrote in a post that the update also provides “a new way to make models smarter in the process (continuous learning from community feedback).” On the other hand, we don’t have to look very far to find examples of Grok losing touch with reality or worse.

According to X, only Community Notes contributors with “top writer” status will be able to initiate a collaborative note at first, though it expects to expand availability “over time.”


You can buy Amazon’s new Fire TV models right now

Amazon has refreshed its Fire TV lineup in the UK, with three new ranges available to buy right now.

The updated Fire TV 2-Series, Fire TV 4-Series, and Fire TV Omni QLED promise slimmer designs, faster performance and smarter picture tech. All of this is aimed at getting you to your shows quicker.

Leading this current crop is the Fire TV Omni QLED, available in 50-, 55- and 65-inch sizes. Amazon says the new panel is 60% brighter than previous models, with double the local dimming zones for punchier highlights and deeper blacks. Dolby Vision and HDR10+ Adaptive are on board. In addition, the TV can automatically adjust colour and brightness based on your room lighting.

The Omni QLED also leans heavily into smart features. OmniSense uses presence detection to wake the TV when you enter the room and power it down when you leave. Meanwhile, Interactive Art reacts to movement, turning the screen into something closer to a living display than a black rectangle on the wall.

Further down the range, the redesigned Fire TV 2-Series and Fire TV 4-Series cover screen sizes from 32 to 55 inches. The 2-Series sticks to HD resolution, while the 4-Series steps up to 4K. Both benefit from ultra-thin bezels and a new quad-core processor that Amazon says makes them 30% faster than before. It’s a modest upgrade on paper. However, it is one that should make everyday navigation feel noticeably snappier.

All three ranges run Fire TV OS, with Amazon continuing to push its content-first approach. It surfaces apps, live TV and recommendations as soon as you turn the screen on.

The new Fire TV models are available now in the UK, with introductory pricing running until 10 February 2026:

With faster internals and a brighter flagship model, Amazon’s latest Fire TVs look like a solid refresh, especially if you’re after a big screen without a premium TV price tag.

Tech

DHS Is Hunting Down Trump Critics. The ‘Free Speech’ Warriors Are Mighty Quiet.

from the the-chilling-effects-are-real dept

For years, we’ve been subjected to an endless parade of hyperventilating claims about the Biden administration’s supposed “censorship industrial complex.” We were told, over and over again, that the government was weaponizing its power to silence conservative speech. The evidence for this? Some angry emails from White House staffers that Facebook ignored. That was basically it. The Supreme Court looked at it and said there was no standing because there was no evidence of coercion (and even suggested that the plaintiffs had fabricated some of the facts, unsupported by reality).

But now we have actual, documented cases of the federal government using its surveillance apparatus to track down and intimidate Americans for nothing more than criticizing government policy. And wouldn’t you know it, the same people who spent years screaming about censorship are suddenly very quiet.

If any of the following stories had happened under the Biden administration, you’d hear screams from the likes of Matt Taibbi, Bari Weiss, and Michael Shellenberger about the crushing boot of the government trying to silence speech.

But somehow… nothing. Weiss is otherwise occupied—busy stripping CBS News for parts to please King Trump. And the dude bros who invented the “censorship industrial complex” out of their imaginations? Pretty damn quiet about stories like the following.

Taibbi is spending his time trying to play down the Epstein files and claiming Meta blocking ICE apps on direct request from DHS isn’t censorship because he hasn’t seen any evidence that it’s because of the federal government. Dude. Pam Bondi publicly stated she called Meta to have them removed. Shellenberger, who is now somehow a “free speech professor” at Bari Weiss’ collapsing fake university, seems to just be posting non-stop conspiracy theory nonsense from cranks.

Let’s start with the case that should make your blood boil. The Washington Post reports that a 67-year-old retired Philadelphia man — a naturalized U.S. citizen originally from the UK — found himself in the crosshairs of the Department of Homeland Security after he committed the apparently unforgivable sin of… sending a polite email to a government lawyer asking for mercy in a deportation case.

Here’s what he wrote to a prosecutor who was trying to deport an Afghan man who feared the Taliban would kill him if he were sent back. The Philadelphia resident found the prosecutor’s email address and sent the following:

“Mr. Dernbach, don’t play Russian roulette with H’s life. Err on the side of caution. There’s a reason the US government along with many other governments don’t recognise the Taliban. Apply principles of common sense and decency.”

That’s it. That’s the email that triggered a federal response. Within hours — hours — of sending this email, Google notified him that DHS had issued an administrative subpoena demanding his personal information. Days later, federal agents showed up at his door.

Showed. Up. At. His. Door.

A retired guy sends a respectful email asking the government to be careful with someone’s life, and within the same day, the surveillance apparatus is mobilized against him.

The tool being weaponized here is the administrative subpoena (something we’ve been calling out for well over a decade, under administrations of both parties) which is a particularly insidious instrument because it doesn’t require a judge’s approval. Unlike a judicial subpoena, where investigators have to show a judge enough evidence to justify the search, administrative subpoenas are essentially self-signed permission slips. As TechCrunch explains:

Unlike judicial subpoenas, which are authorized by a judge after seeing enough evidence of a crime to authorize a search or seizure of someone’s things, administrative subpoenas are issued by federal agencies, allowing investigators to seek a wealth of information about individuals from tech and phone companies without a judge’s oversight.

While administrative subpoenas cannot be used to obtain the contents of a person’s emails, online searches, or location data, they can demand information specifically about the user, such as what time a user logs in, from where, using which devices, and revealing the email addresses and other identifiable information about who opened an online account. But because administrative subpoenas are not backed by a judge’s authority or a court’s order, it’s largely up to a company whether to give over any data to the requesting government agency.

The Philadelphia retiree’s case would be alarming enough if it were a one-off. It’s not. Bloomberg has reported on at least five cases where DHS used administrative subpoenas to try to unmask anonymous Instagram accounts that were simply documenting ICE raids in their communities. One account, @montcowatch, was targeted simply for sharing resources about immigrant rights in Montgomery County, Pennsylvania. The justification? A claim that ICE agents were being “stalked” — for which there was no actual evidence.

The ACLU, which is now representing several of these targeted individuals, isn’t mincing words:

“It doesn’t take that much to make people look over their shoulder, to think twice before they speak again. That’s why these kinds of subpoenas and other actions—the visits—are so pernicious. You don’t have to lock somebody up to make them reticent to make their voice heard. It really doesn’t take much, because the power of the federal government is so overwhelming.”

This is textbook chilling effects on speech.

Remember, it was just a year and a half ago, in Murthy v. Missouri, that the Supreme Court found no First Amendment violation when the Biden administration sent emails to social media platforms, in part because the platforms felt entirely free to say no. The platforms weren’t coerced; they could ignore the requests and did.

Now consider the Philadelphia retiree. He sends one polite email. Within hours, DHS has mobilized to unmask him. Days later, federal agents are at his door. Does that sound like someone who’s free to speak his mind without consequence?

Even if you felt that what the Biden admin did was inappropriate, it didn’t involve federal agents showing up at people’s homes.

That is what actual government suppression of speech looks like. Not mean tweets from press secretaries that platforms ignored, but federal agents showing up at your door because you sent a (perfectly nice) email the government didn’t like.

So we have DHS mobilizing within hours to identify a 67-year-old retiree who sent a polite email. We have agents showing up at citizens’ homes to interrogate them about their protected speech. We have the government trying to unmask anonymous accounts that are documenting law enforcement activities — something that is unambiguously protected under the First Amendment.

Recording police, sharing that recording, and doing so anonymously is legal. It’s protected speech. And the government is using administrative subpoenas to try to identify and intimidate the people doing it.

For years, we heard that government officials sending emails to social media companies — emails the companies ignored — constituted an existential threat to the First Amendment. But when the government actually uses its coercive power to track down, identify, and intimidate citizens for their speech?

Crickets.

This is what a real threat to free speech looks like. Not “jawboning” that platforms can easily refuse, but the full weight of federal surveillance being deployed against anyone who dares to criticize the administration. The chilling effect here is the entire point.

As the ACLU noted, this appears to be “part of a broader strategy to intimidate people who document immigration activity or criticize government actions.”

If you spent the last few years warning about government censorship, this is your moment. This is the actual thing you claimed to be worried about. But, of course, all those who pretended to care about free speech really only meant they cared about their own team’s speech. Watching the government actually suppress critics? No big deal. They probably deserved it.

Filed Under: 1st amendment, administrative subpoenas, bari weiss, chilling effects, dhs, donald trump, free speech, matt taibbi, michael shellenberger

Companies: google, meta

Tech

Musk Predicts SpaceX Will Launch More AI Compute Per Year Than the Cumulative Total on Earth

Elon Musk told podcast host Dwarkesh Patel and Stripe co-founder John Collison that space will become the most economically compelling location for AI data centers in less than 36 months, a prediction rooted not in some exotic technical breakthrough but in the basic math of electricity supply: chip output is growing exponentially, and electrical output outside China is essentially flat.

Solar panels in orbit generate roughly five times the power they do on the ground because there is no day-night cycle, no cloud cover, and no energy lost passing through the atmosphere. The system economics are even more favorable because space-based operations eliminate the need for batteries entirely, making the effective cost roughly a tenth that of terrestrial solar, Musk said. The terrestrial bottleneck is already real.

Musk said powering 330,000 Nvidia GB300 chips — once you account for networking hardware, storage, peak cooling on the hottest day of the year, and reserve margin for generator servicing — requires roughly a gigawatt at the generation level. Gas turbines are sold out through 2030, and the limiting factor is the casting of turbine vanes and blades, a process handled by just three companies worldwide.

Five years from now, Musk predicted, SpaceX will launch and operate more AI compute annually than the cumulative total on Earth, expecting at least a few hundred gigawatts per year in space. Patel estimated that 100 gigawatts alone would require on the order of 10,000 Starship launches per year, a figure Musk affirmed. SpaceX is gearing up for 10,000 launches a year, Musk said, and possibly 20,000 to 30,000.
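The quoted figures imply two simple ratios: how much generation capacity each chip needs once all overheads are counted, and how much compute power each Starship launch would have to carry. The sketch below only divides the numbers as reported by Musk and Patel; the constant and function names are illustrative, and nothing here is an engineering model.

```python
# Back-of-envelope ratios from the figures quoted in the interview.
# Inputs are the reported numbers; this is arithmetic, not a power model.

CHIPS = 330_000             # Nvidia GB300 chips in Musk's example cluster
SITE_POWER_W = 1e9          # ~1 GW at the generation level, overheads included

SPACE_POWER_W = 100e9       # 100 GW of orbital compute per year (Patel's example)
LAUNCHES_PER_YEAR = 10_000  # Starship launches per year needed, per Patel


def watts_per_chip(site_power_w: float, chips: int) -> float:
    """All-in generation capacity per chip (cooling, networking, reserve margin)."""
    return site_power_w / chips


def megawatts_per_launch(power_w: float, launches: int) -> float:
    """Compute power each launch must deliver to orbit, in megawatts."""
    return power_w / launches / 1e6


per_chip = watts_per_chip(SITE_POWER_W, CHIPS)
per_launch = megawatts_per_launch(SPACE_POWER_W, LAUNCHES_PER_YEAR)
print(f"~{per_chip / 1e3:.1f} kW per chip, ~{per_launch:.0f} MW per launch")
# prints: ~3.0 kW per chip, ~10 MW per launch
```

In other words, each GB300 in Musk's example needs about 3 kW of generating capacity, and Patel's launch estimate assumes roughly 10 MW of compute per Starship flight.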

Tech

Winter Olympics 2026: Omega’s Quantum Timer Precision

From 6 to 22 February, the 2026 Winter Olympics in Milan and Cortina d’Ampezzo, Italy, will feature not just the world’s top winter athletes but also some of the most advanced sports technology in use today. At the first Cortina Olympics in 1956, the Swiss company Omega, based in Biel/Bienne, introduced electronic ski starting gates and launched the first automated timing tech of its kind.

At this year’s Olympics, Swiss Timing, sister company to Omega under the parent Swatch Group, unveils a new generation of motion analysis and computer vision technology. The new technologies on offer include photofinish cameras that capture up to 40,000 images per second.

“We work very closely with athletes,” says Swiss Timing CEO Alain Zobrist, who has overseen Olympic timekeeping since the 2006 Winter Games in Torino. “They are the primary customers of our technology and services, and they need to understand how our systems work in order to trust them.”

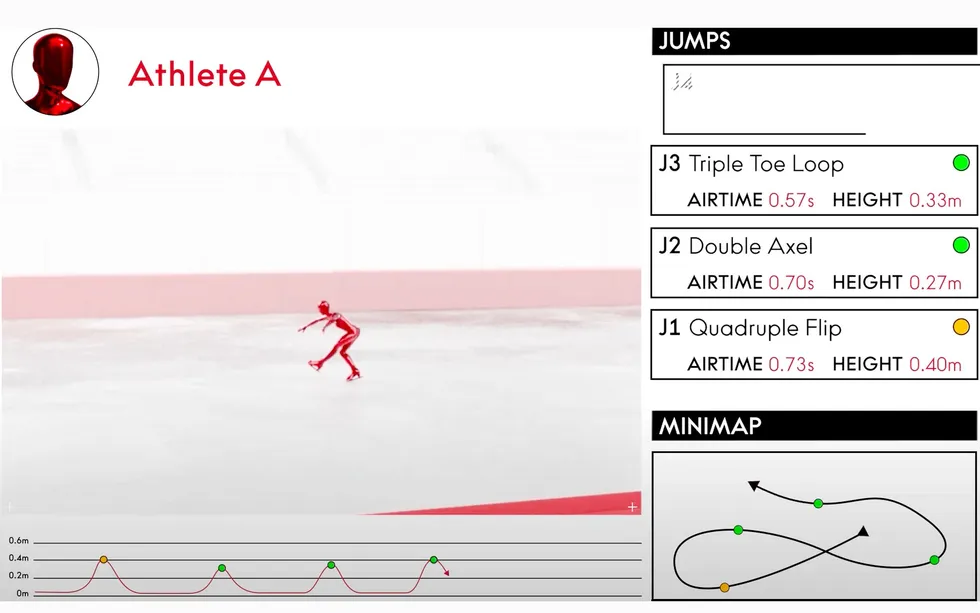

Using high-resolution cameras and AI algorithms tuned to skaters’ routines, Milan-Cortina Olympic officials expect new figure skating tech to be a key highlight of the games. Omega


Figure Skating Tech Completes the Rotation

Figure skating, the Winter Olympics’ biggest TV draw, is receiving a substantial upgrade at Milano Cortina 2026.

Fourteen 8K resolution cameras positioned around the rink will capture every skater’s movement. “We use proprietary software to interpret the images and visualize athlete movement in a 3D model,” says Zobrist. “AI processes the data so we can track trajectory, position, and movement across all three axes: X, Y, and Z.”

The system measures jump heights, air times, and landing speeds in real time, producing heat maps and graphic overlays that break down each program—all instantaneously. “The time it takes for us to measure the data, until we show a matrix on TV with a graphic, this whole chain needs to take less than 1/10 of a second,” Zobrist says.
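Swiss Timing derives jump heights from 3D camera tracking. As a rough cross-check, though, a simple ballistic model ties height to air time: if a jump takes off and lands at the same height and air resistance is ignored, the body rises for half the air time and falls for the other half. The sketch below is that textbook approximation, not Swiss Timing's method. The 0.6-second air time is a hypothetical input.

```python
G = 9.81  # gravitational acceleration, m/s^2


def jump_height_from_air_time(air_time_s: float) -> float:
    """Peak rise of a ballistic jump that lands at its takeoff height.

    The body rises for t/2 and falls for t/2, so
    h = g * (t/2)**2 / 2 = g * t**2 / 8.
    Ignores air resistance and posture changes between takeoff and landing.
    """
    if air_time_s < 0:
        raise ValueError("air time must be non-negative")
    return G * air_time_s ** 2 / 8


print(f"{jump_height_from_air_time(0.6):.2f} m")  # prints: 0.44 m
```

Because height scales with the square of air time, small timing errors grow quickly. That is one reason a camera system tracking position directly in three axes is more informative than a stopwatch on the ice.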

A range of different AI models helps the broadcasters and commentators process each skater’s every move on the ice.

“There is an AI that helps our computer vision system do pose estimation,” he says. “So we have a camera that is filming what is happening, and an AI that helps the camera understand what it’s looking at. And then there is a second type of AI, which is more similar to a large language model that makes sense of the data that we collect.”

Among the features Swiss Timing’s new systems provide is blade angle detection, which gives judges precise data to inform their technical and aesthetic decisions. Future versions will also determine whether a given rotation is complete, Zobrist says: “If the rotation is 355 degrees, there is going to be a deduction.”

This builds on technology Omega unveiled at the 2024 Paris Olympics for diving, where cameras measured distances between a diver’s head and the board to help judges assess points and penalties to be awarded.

At the 2026 Winter Olympics, ski jumping will feature both camera-based and sensor-based technologies to make the aerial experience more immediate and real-time. Omega


Ski Jumping Tech Finds Make-or-Break Moments

Unlike figure skating’s camera-based approach, ski jumping also relies on physical sensors.

“In ski jumping, we use a small, lightweight sensor attached to each ski, one sensor per ski, not on the athlete’s body,” Zobrist says. The sensors broadcast data on a skier’s speed, acceleration, and position in the air. The system also correlates this performance data with wind conditions, showing how environmental factors influence each jump.

High-speed cameras also track each ski jumper, while a stroboscopic camera produces time-lapse images of body position throughout the jump.

“The first 20 to 30 meters after takeoff are crucial as athletes move into a V position and lean forward,” Zobrist says. “And both the timing and precision of this movement strongly influence performance.”

The system reveals biomechanical characteristics in real time, he adds, showing how athletes position their bodies at every moment of the takeoff. The most common flight-position mistakes, over-rotation and under-rotation, can now be diagnosed precisely on every jump.

Bobsleigh: Pushing the Line on the Photo Finish

This year’s Olympics will also feature a “virtual photo finish,” providing comparison images of when different sleds cross the finish line over previous runs.

Omega’s cameras will provide virtual photo finishes at the 2026 Winter Olympics. Omega


“We virtually build a photo finish that shows different sleds from different runs on a single visual reference,” says Zobrist.

After each run, composite images show the margins separating performances. Official results, however, still come from older, proven technology. The official time, he says, is still produced by photoelectric cells, devices that project light beams across the finish line and stop the clock when a beam is broken. The company offers the virtual photo finish, by contrast, as a visualization tool for spectators and commentators.

In bobsleigh, as in every timed Winter Olympic event, the line between triumph and heartbreak is sometimes measured in milliseconds or less. That precision, Zobrist says, comes from Omega’s Quantum Timer.

“We can measure time to the millionth of a second, so 6 digits after the comma, with a deviation of about 23 nanoseconds over 24 hours,” Zobrist explained. “These devices are constantly calibrated and used across all timed sports.”
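To put the quoted specification in perspective, a deviation of 23 nanoseconds over 24 hours can be turned into a fractional error and scaled down to a single run. The sketch below assumes the drift accumulates at a constant rate, which is a simplification. The 60-second run length is a hypothetical round figure, not an official bobsleigh time.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 s
QUOTED_DRIFT_S = 23e-9          # ~23 ns deviation over 24 hours (as quoted)
RESOLUTION_S = 1e-6             # one millionth of a second (as quoted)


def fractional_deviation(drift_s: float, interval_s: float) -> float:
    """Drift expressed as a fraction of the elapsed interval."""
    return drift_s / interval_s


def drift_over(duration_s: float,
               drift_s: float = QUOTED_DRIFT_S,
               interval_s: float = SECONDS_PER_DAY) -> float:
    """Scale the quoted drift linearly to a shorter duration (assumes constant drift rate)."""
    return drift_s * duration_s / interval_s


frac = fractional_deviation(QUOTED_DRIFT_S, SECONDS_PER_DAY)
run_drift = drift_over(60.0)  # hypothetical 60-second run
print(f"fractional deviation ~ {frac:.1e}")              # ~ 2.7e-13
print(f"drift over a 60 s run ~ {run_drift * 1e12:.0f} ps")  # ~ 16 ps
```

Under that assumption, drift over a full run is tens of thousands of times smaller than the timer's one-microsecond resolution. That means the displayed digits are limited by resolution, not by clock drift.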


Tech

Are Duracell Batteries Better Than Energizer? What Consumer Reports Data Says

We may receive a commission on purchases made from links.

In some product segments, two rival companies dominate the market. Boeing and Airbus sometimes even use the same engines, and chances are good you’re reading this on a mobile phone running software created by either Apple or Google. When it comes to alkaline batteries, the two most recognizable premium brands are Duracell and Energizer. Consumer Reports tested 15 different AA batteries and rated the Duracell Quantum AA highest among alkaline batteries and equal in performance to Energizer Ultimate lithium batteries. Rayovac Fusion Advanced AA batteries also performed well, coming in just ahead of Energizer Advanced lithium and Duracell Coppertop alkaline cells. Energizer EcoAdvanced and Max+ PowerSeal alkaline batteries scored a little lower, in the company of retailer-branded batteries from Amazon, CVS, Walgreens, and Rite Aid.

This round of Consumer Reports testing covered only disposable alkaline and lithium batteries. SlashGear’s ranking of rechargeable batteries also placed Duracell just ahead of Energizer, although EBL and Eneloop batteries topped our list. Energizer and Duracell batteries cost more than most generic competitors, but expensive name-brand batteries usually last longer than cheaper ones.

Most tests (including this one from Consumer Reports) find Duracell and Energizer batteries to be more or less equal in terms of performance, although the Duracell Quantum was a top performer here. Energizer Ultimate lithium batteries more or less matched the Quantum’s performance benchmarks, and Energizer Advanced lithium cells tested slightly better than Duracell Coppertop alkalines. Both brands are highly recommended by SlashGear and Consumer Reports, with impressive performance that separates them from the rest of the pack, so buy either with confidence.

How to make batteries last longer

Consumer Reports tested this battery of batteries by measuring runtime in toys and flashlights, but use across a variety of devices might return different results. For example, a TV remote control doesn’t require that much power to function and is used intermittently, so drain on batteries is low. That’s why you may not need to change them for months, or even years. In contrast, Xbox controllers drain batteries very quickly because features like haptic feedback and wireless connectivity draw lots of power.
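The gap between a remote and a game controller comes down to average current draw. An idealized estimate divides a battery's capacity by the device's average draw. The sketch below uses that rule of thumb. The 2,500 mAh capacity and both draw figures are hypothetical round numbers, not Consumer Reports measurements, and real alkaline cells deliver less of their rated capacity at high drain.

```python
def runtime_hours(capacity_mah: float, average_draw_ma: float) -> float:
    """Idealized runtime: capacity divided by average current draw.

    Alkaline cells lose effective capacity under heavy load, so this
    overestimates runtime for power-hungry devices.
    """
    if average_draw_ma <= 0:
        raise ValueError("average draw must be positive")
    return capacity_mah / average_draw_ma


CAPACITY_MAH = 2500  # hypothetical AA alkaline capacity

remote = runtime_hours(CAPACITY_MAH, 0.1)      # hypothetical remote: mostly idle
controller = runtime_hours(CAPACITY_MAH, 100)  # hypothetical controller: radio + haptics
print(f"remote: ~{remote / 24 / 365:.1f} years, controller: ~{controller:.0f} hours")
```

A thousandfold difference in average draw produces a thousandfold difference in runtime. That is why one device goes years between battery changes while another needs fresh cells after a few gaming sessions.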

There are a couple of things you can do to get the most out of your batteries. First, check the stamped expiration date if you’re buying them in a store. If the batteries aren’t going straight into a device, keep them in their original packaging in a cool, dry place. Contrary to a common myth, storing batteries in the fridge or freezer is a bad idea; condensation can form inside the packaging. Put them in an interior closet or water-resistant toolbox, and remove batteries from devices you don’t plan on using for a while. Don’t store batteries loose in a box or drawer with other batteries or metal objects; short circuits could drain them or even cause a fire. A plastic battery organizer will protect batteries when not in use and can be kept in a closet or cabinet, and storing batteries upright prevents terminal-to-terminal contact. Finally, always recycle used batteries according to local guidelines.

Tech

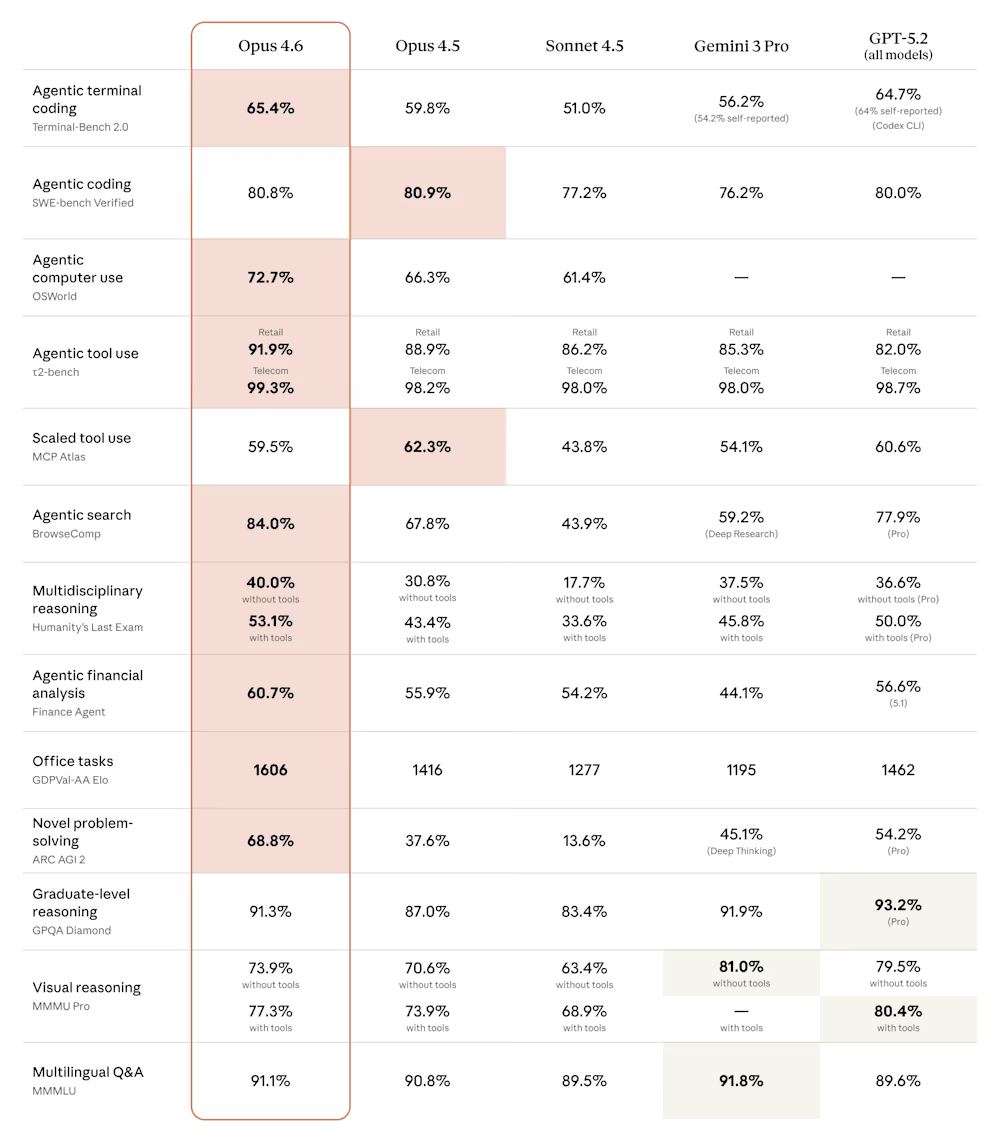

Anthropic’s Claude Opus 4.6 brings 1M token context and ‘agent teams’ to take on OpenAI’s Codex

Anthropic on Thursday released Claude Opus 4.6, a major upgrade to its flagship artificial intelligence model that the company says plans more carefully, sustains longer autonomous workflows, and outperforms competitors including OpenAI’s GPT-5.2 on key enterprise benchmarks — a release that arrives at a tumultuous moment for the AI industry and global software markets.

The launch comes just three days after OpenAI released its own Codex desktop application in a direct challenge to Anthropic’s Claude Code momentum, and amid a $285 billion rout in software and services stocks that investors attribute partly to fears that Anthropic’s AI tools could disrupt established enterprise software businesses.

For the first time, Anthropic’s Opus-class models will feature a 1 million token context window, allowing the AI to process and reason across vastly more information than previous versions. The company also introduced “agent teams” in Claude Code — a research preview feature that enables multiple AI agents to work simultaneously on different aspects of a coding project, coordinating autonomously.

“We’re focused on building the most capable, reliable, and safe AI systems,” an Anthropic spokesperson told VentureBeat about the announcements. “Opus 4.6 is even better at planning, helping solve the most complex coding tasks. And the new agent teams feature means users can split work across multiple agents — one on the frontend, one on the API, one on the migration — each owning its piece and coordinating directly with the others.”

Why OpenAI and Anthropic are locked in an all-out war for enterprise developers

The release intensifies an already fierce competition between Anthropic and OpenAI, the two most valuable privately held AI companies in the world. OpenAI on Monday released a new desktop application for its Codex artificial intelligence coding system, a tool the company says transforms software development from a collaborative exercise with a single AI assistant into something more akin to managing a team of autonomous workers.

AI coding assistants have exploded in popularity over the last year, and OpenAI said more than 1 million developers have used Codex in the past month. The new Codex app is part of OpenAI’s ongoing effort to lure users and market share away from rivals like Anthropic and Cursor.

The timing of Anthropic’s release, just 72 hours after OpenAI’s Codex launch, underscores the breakneck pace of competition in AI development tools. OpenAI faces intensifying competition from Anthropic, which posted the largest share increase of any frontier lab since May 2025, according to a recent Andreessen Horowitz survey. Forty-four percent of enterprises now use Anthropic in production, driven by rapid capability gains in software development since late 2024. OpenAI’s desktop launch is a strategic counter to Claude Code’s momentum.

According to Anthropic’s announcement, Opus 4.6 achieves the highest score on Terminal-Bench 2.0, an agentic coding evaluation, and leads all other frontier models on Humanity’s Last Exam, a complex multi-discipline reasoning test. On GDPval-AA, a benchmark measuring performance on economically valuable knowledge work tasks in finance, legal and other domains, Opus 4.6 outperforms OpenAI’s GPT-5.2 by approximately 144 Elo points, which translates to scoring higher roughly 70% of the time.
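The announcement doesn't show the conversion, but pairing a 144-point gap with a roughly 70% win rate matches the standard Elo expected-score formula on a 400-point logistic scale. Assuming GDPval-AA follows that convention, a minimal sketch:

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected score of the higher-rated side under the standard Elo model.

    E = 1 / (1 + 10 ** (-gap / 400)). For head-to-head comparisons this is
    the probability that the higher-rated side is preferred.
    """
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400))


print(f"{elo_expected_score(144):.0%}")  # prints: 70%
```

A zero-point gap gives 50%, and each additional 400 points multiplies the odds of winning by 10.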

Inside Claude Code’s $1 billion revenue milestone and growing enterprise footprint

The stakes are substantial. Asked about Claude Code’s financial performance, the Anthropic spokesperson noted that in November, the company announced that Claude Code reached $1 billion in run rate revenue only six months after becoming generally available in May 2025.

The spokesperson highlighted major enterprise deployments: “Claude Code is used by Uber across teams like software engineering, data science, finance, and trust and safety; wall-to-wall deployment across Salesforce’s global engineering org; tens of thousands of devs at Accenture; and companies across industries like Spotify, Rakuten, Snowflake, Novo Nordisk, and Ramp.”

That enterprise traction has translated into skyrocketing valuations. Earlier this month, Anthropic signed a term sheet for a $10 billion funding round at a $350 billion valuation. Bloomberg reported that Anthropic is simultaneously working on a tender offer that would allow employees to sell shares at that valuation, offering liquidity to staffers who have watched the company’s worth multiply since its 2021 founding.

How Opus 4.6 solves the ‘context rot’ problem that has plagued AI models

One of Opus 4.6’s most significant technical improvements addresses what the AI industry calls “context rot”: the degradation of model performance as conversations grow longer. Anthropic says Opus 4.6 scores 76% on MRCR v2, a needle-in-a-haystack benchmark testing a model’s ability to retrieve information hidden in vast amounts of text, compared to just 18.5% for Sonnet 4.5.
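The basic shape of a needle-in-a-haystack test is straightforward: hide a fact at some depth in a long run of filler, ask for it back, and score exact matches. The toy harness below illustrates that general idea only. MRCR v2 itself is more involved, and every function here is hypothetical. `keyword_model` is a trivial stand-in for a real model call.

```python
def build_haystack(needle: str, filler: str, n_filler: int, depth: float) -> str:
    """Insert `needle` at relative `depth` (0.0 = start, 1.0 = end) among filler lines."""
    lines = [filler] * n_filler
    lines.insert(round(depth * n_filler), needle)
    return "\n".join(lines)


def retrieval_accuracy(answer_fn, secret_values, n_filler=1000, depth=0.5) -> float:
    """Fraction of trials in which answer_fn recovers the hidden value."""
    if not secret_values:
        raise ValueError("need at least one secret value")
    hits = 0
    for value in secret_values:
        prompt = build_haystack(f"The secret code is {value}.",
                                "The sky was grey.", n_filler, depth)
        if answer_fn(prompt) == value:
            hits += 1
    return hits / len(secret_values)


def keyword_model(prompt: str):
    """Hypothetical stand-in for a model: scans for the needle line directly."""
    prefix = "The secret code is "
    for line in prompt.splitlines():
        if line.startswith(prefix):
            return line.removeprefix(prefix).rstrip(".")
    return None


print(retrieval_accuracy(keyword_model, ["A1", "B2", "C3"]))  # prints: 1.0
```

A real evaluation would swap `keyword_model` for a model API call and sweep `n_filler` and `depth`. "Context rot" shows up as accuracy falling as the haystack grows.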

“This is a qualitative shift in how much context a model can actually use while maintaining peak performance,” the company said in its announcement.

The model also supports outputs of up to 128,000 tokens — enough to complete substantial coding tasks or documents without breaking them into multiple requests.

For developers, Anthropic is introducing several new API features alongside the model: adaptive thinking, which allows Claude to decide when deeper reasoning would be helpful rather than requiring a binary on-off choice; four effort levels (low, medium, high, max) to control intelligence, speed and cost tradeoffs; and context compaction, a beta feature that automatically summarizes older context to enable longer-running tasks.
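Context compaction is described here only at a high level. To illustrate the general idea, and not Anthropic's implementation or API, the sketch below replaces older messages with a single summary once a token budget is exceeded. `count_tokens`, `compact` and the summarizer are hypothetical, and whitespace-separated words stand in for real tokens.

```python
from typing import Callable


def count_tokens(text: str) -> int:
    """Crude token estimate: whitespace-separated words (not a real tokenizer)."""
    return len(text.split())


def compact(history: list[str], budget: int, keep_recent: int,
            summarize: Callable[[list[str]], str]) -> list[str]:
    """Return history unchanged if it fits the budget; otherwise replace all
    but the last `keep_recent` messages with a single summary message."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    total = sum(count_tokens(m) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history
    older, recent = history[:-keep_recent], history[-keep_recent:]
    return [summarize(older)] + recent


# Hypothetical summarizer; a real system would ask the model to write the summary.
def stub_summary(msgs: list[str]) -> str:
    return f"[summary of {len(msgs)} earlier messages]"


history = ["a b c"] * 10  # 30 "tokens"
print(compact(history, budget=20, keep_recent=2, summarize=stub_summary))
```

Keeping the most recent messages verbatim preserves the immediate working context, while the summary carries forward older decisions at a fraction of the token cost.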

Anthropic’s delicate balancing act: Building powerful AI agents without losing control

Anthropic, which has built its brand around AI safety research, emphasized that Opus 4.6 maintains alignment with its predecessors despite its enhanced capabilities. On the company’s automated behavior audit measuring misaligned behaviors such as deception, sycophancy, and cooperation with misuse, Opus 4.6 “showed a low rate” of problematic responses while also achieving “the lowest rate of over-refusals — where the model fails to answer benign queries — of any recent Claude model.”

When asked how Anthropic thinks about safety guardrails as Claude becomes more agentic, particularly with multiple agents coordinating autonomously, the spokesperson pointed to the company’s published framework: “Agents have tremendous potential for positive impacts in work but it’s important that agents continue to be safe, reliable, and trustworthy. We outlined our framework for developing safe and trustworthy agents last year which shares core principles developers should consider when building agents.”

The company said it has developed six new cybersecurity probes to detect potentially harmful uses of the model’s enhanced capabilities, and is using Opus 4.6 to help find and patch vulnerabilities in open-source software as part of defensive cybersecurity efforts.

Sam Altman vs. Dario Amodei: The Super Bowl ad battle that exposed AI’s deepest divisions

The rivalry between Anthropic and OpenAI has spilled into consumer marketing in dramatic fashion. Both companies will feature prominently during Sunday’s Super Bowl. Anthropic is airing commercials that mock OpenAI’s decision to begin testing advertisements in ChatGPT, with the tagline: “Ads are coming to AI. But not to Claude.”

OpenAI CEO Sam Altman responded by calling the ads “funny” but “clearly dishonest,” posting on X that his company would “obviously never run ads in the way Anthropic depicts them” and that “Anthropic wants to control what people do with AI” while serving “an expensive product to rich people.”

The exchange highlights a fundamental strategic divergence: OpenAI has moved to monetize its massive free user base through advertising, while Anthropic has focused almost exclusively on enterprise sales and premium subscriptions.

The $285 billion stock selloff that revealed Wall Street’s AI anxiety

The launch occurs against a backdrop of historic market volatility in software stocks. A new AI automation tool from Anthropic PBC sparked a $285 billion rout in stocks across the software, financial services and asset management sectors on Tuesday as investors raced to dump shares with even the slightest exposure. A Goldman Sachs basket of US software stocks sank 6%, its biggest one-day decline since April’s tariff-fueled selloff.

The selloff was sparked in part by a new legal tool from Anthropic, a sign of the AI industry’s growing push into sectors that can unlock the lucrative enterprise revenue needed to fund massive investments in the technology. Specifically, Anthropic launched plug-ins for its Claude Cowork agent on Friday, enabling automated tasks across legal, sales, marketing and data analysis.

Thomson Reuters plunged 15.83% on Tuesday, its biggest single-day drop on record, and LegalZoom.com sank 19.68%. European legal software providers, including LexisNexis owner RELX and Wolters Kluwer, had their worst single-day performances in decades.

Not everyone agrees the selloff is warranted. Nvidia CEO Jensen Huang said on Tuesday that fears AI would replace software and related tools were “illogical” and “time will prove itself.” Mark Murphy, head of U.S. enterprise software research at JPMorgan, said in a Reuters report it “feels like an illogical leap” to say a new plug-in from an LLM would “replace every layer of mission-critical enterprise software.”

What Claude’s new PowerPoint integration means for Microsoft’s AI strategy

Among the more notable product announcements: Anthropic is releasing Claude in PowerPoint in research preview, allowing users to create presentations using the same AI capabilities that power Claude’s document and spreadsheet work. The integration puts Claude directly inside a core Microsoft product — an unusual arrangement given Microsoft’s 27% stake in OpenAI.

The Anthropic spokesperson framed the move pragmatically in an interview with VentureBeat: “Microsoft has an official add-in marketplace for Office products with multiple add-ins available to help people with slide creation and iteration. Any developer can build a plugin for Excel or PowerPoint. We’re participating in that ecosystem to bring Claude into PowerPoint. This is about participating in the ecosystem and giving users the ability to work with the tools that they want, in the programs they want.”

The data behind enterprise AI adoption: Who’s winning and who’s losing ground

Data from a16z’s recent enterprise AI survey suggests both Anthropic and OpenAI face an increasingly competitive landscape. While OpenAI remains the most widely used AI provider in the enterprise, with approximately 77% of surveyed companies using it in production in January 2026, Anthropic’s adoption is rising rapidly — from near-zero in March 2024 to approximately 40% using it in production by January 2026.

The survey data also shows that 75% of Anthropic’s enterprise customers are using it in production, with 89% either testing or in production. Both figures exceed OpenAI’s rates among its own customer base: 46% in production and 73% testing or in production.

Enterprise spending on AI continues to accelerate. Average enterprise LLM spend reached $7 million in 2025, up 180% from $2.5 million in 2024, with projections suggesting $11.6 million in 2026 — a 65% increase year-over-year.
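The growth rates follow directly from the reported dollar figures. The sketch below recomputes them. The 2026 value is a projection, as reported, and the exact 2025-to-2026 ratio comes out slightly above the rounded 65% figure.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Average enterprise LLM spend in $ millions, as reported (2026 is a projection).
spend_musd = {2024: 2.5, 2025: 7.0, 2026: 11.6}

print(f"2024->2025: {pct_change(spend_musd[2024], spend_musd[2025]):.0f}%")  # 180%
print(f"2025->2026: {pct_change(spend_musd[2025], spend_musd[2026]):.1f}%")  # 65.7%
```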

Pricing, availability, and what developers need to know about Claude Opus 4.6

Opus 4.6 is available immediately on claude.ai, the Claude API, and major cloud platforms. Developers can access it through the API using the model ID claude-opus-4-6. Pricing remains unchanged at $5 per million input tokens and $25 per million output tokens. Prompts exceeding 200,000 tokens, which use the 1 million token context window, are billed at a premium rate of $10 per million input tokens and $37.50 per million output tokens.
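The tiered pricing can be turned into a quick per-request cost estimate. The sketch below uses a hypothetical Python helper, not an Anthropic tool. It assumes that once a prompt exceeds 200,000 tokens, the premium rates apply to the entire request (input and output) and not only to the tokens beyond the threshold. Check Anthropic's pricing documentation before relying on it for budgeting.

```python
# Rates in US dollars per million tokens, as quoted in the article: (input, output).
STANDARD_RATES = (5.00, 25.00)
LONG_CONTEXT_RATES = (10.00, 37.50)
# Prompt size in tokens above which the premium rates apply.
LONG_CONTEXT_THRESHOLD = 200_000


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one Opus 4.6 request (hypothetical helper).

    ASSUMPTION: a prompt over the threshold moves the whole request,
    input and output, onto the premium rates.
    """
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if input_tokens > LONG_CONTEXT_THRESHOLD:
        in_rate, out_rate = LONG_CONTEXT_RATES
    else:
        in_rate, out_rate = STANDARD_RATES
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000


print(estimate_cost(100_000, 10_000))  # 0.75
print(estimate_cost(300_000, 10_000))  # 3.375
```

Under this assumption, a 300,000-token prompt costs more than four times as much as a 100,000-token prompt with the same output length. That is because the larger prompt crosses the threshold and doubles the input rate.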

For users who find Opus 4.6 “overthinking” simpler tasks — a characteristic Anthropic acknowledges can add cost and latency — the company recommends adjusting the effort parameter from its default high setting to medium.
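For API users, the effort setting travels with each request. The sketch below only builds a request body and makes no network call. The field layout is an assumption: it places the setting under an output_config object, and build_request is a hypothetical helper. Confirm the exact parameter name and accepted values in Anthropic's API documentation before use.

```python
import json

# Model ID as given in the article.
MODEL_ID = "claude-opus-4-6"


def build_request(prompt: str, effort: str = "high", max_tokens: int = 1024) -> dict:
    """Build a Messages API request body (hypothetical helper, no network call).

    ASSUMPTION: effort is sent as {"output_config": {"effort": ...}};
    "high" mirrors the default the article describes.
    """
    return {
        "model": MODEL_ID,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
        "output_config": {"effort": effort},
    }


# Dial effort down to "medium" for a simple task, as Anthropic recommends.
body = build_request("Summarize this changelog in one line.", effort="medium")
print(json.dumps(body, indent=2))
```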

The recommendation captures something essential about where the AI industry now stands. These models have grown so capable that their creators must now teach customers how to make them think less. Whether that represents a breakthrough or a warning sign depends entirely on which side of the disruption you’re standing on — and whether you remembered to sell your software stocks before Tuesday.
