Tech

Civilization VII Apple Arcade brings a big PC strategy game to your pocket

Civilization VII Apple Arcade is now available on iPhone, iPad, and Mac, so you can start a campaign on your phone and keep it going on a larger screen later. It’s the closest thing to true pocket Civ on Apple hardware, without ads, upsells, or a separate purchase for each device.

The appeal is simple. Civ rewards short bursts and long nights, and this version finally lets you do both with the same save. But it also draws a clear line around what it can’t do yet.

The portability comes with limits

Your progress syncs across Apple devices, so a match can follow you from iPhone to iPad to Mac. That’s the feature that makes the subscription feel practical, not just convenient. One save, one campaign, no starting over.

The tradeoff is in the borders. Multiplayer isn’t available at launch, and it’s planned for a later update. There’s also no cross-play, and your saves don’t move over to the PC or console releases, so it won’t merge with an existing campaign you already have elsewhere.

Touch-first Civ, with a safety net

This edition is built around touch, with taps and gestures doing the heavy lifting for unit moves, city choices, and the endless menu hopping Civ is known for. Controller support helps if you’d rather play in a familiar way on iPad or Mac.

It’s a better fit for solo play than anything else. You can take a few turns while waiting in line, then swap to a bigger screen when you want to plan a war, juggle districts, or untangle a late-game mess. It’s still Civ, just in a form that fits your day.

What to check before installing

Your device will shape how far you can push it. The App Store listing calls for iOS 17 and an A17 Pro-class iPhone or newer, and the largest map sizes are reserved for devices with more than 8GB of RAM.

If you want a portable way to scratch the Civ itch, Apple Arcade is the smoothest option on iPhone, especially if you’ll also play on Mac or iPad. If your Civ life revolves around online matches, mods, or huge scenarios, this is best as a side door for now.

Tech

US bans Chinese software from connected cars, triggering a major industry overhaul

The rule, issued by the Commerce Department’s Bureau of Industry and Security, bans code written in China or by Chinese-owned firms from vehicles that connect to the cloud. By 2029, even their connectivity hardware will be covered under the same restrictions.

Tech

Valve Delays Steam Frame and Steam Machine Pricing as Memory Costs Rise

Valve revealed its lineup of upcoming hardware in November, including a home PC-gaming console called the Steam Machine and the Steam Frame, a VR headset. At the time of the reveal, the company expected to release its hardware in “early 2026,” but the current state of memory and storage prices appears to have changed those plans.

Valve says its goal to release the Steam Frame and Steam Machine in the first half of 2026 has not changed, but it’s still deliberating on final shipping dates and pricing, according to a post from the company on Wednesday. While the company didn’t provide specifics, it said it was mindful of the current state of the hardware and storage markets. All kinds of computer components have rocketed in price due to massive investments in AI infrastructure.

“When we announced these products in November, we planned on being able to share specific pricing and launch dates by now. But the memory and storage shortages you’ve likely heard about across the industry have rapidly increased since then,” Valve said. “The limited availability and growing prices of these critical components mean we must revisit our exact shipping schedule and pricing (especially around Steam Machine and Steam Frame).”

Valve says it will provide more updates in the future about its hardware lineup.

What are the Steam Frame and Steam Machine?

The Steam Frame is a standalone VR headset that’s all about gaming. At the hardware reveal in November, CNET’s Scott Stein described it as a Steam Deck for your face. It runs SteamOS on an ARM-based chip, so games can be installed on the headset and played directly from it, including on the go. There’s also the option to wirelessly stream games from a PC.

The Steam Machine is Valve’s home console. It’s a cube-shaped microcomputer intended to be connected to a TV.

When will the Steam Frame and Steam Machine come out?

Valve didn’t provide a specific launch date for either. The initial expectation after the November reveal was that the Steam Frame and Steam Machine would arrive in March. Valve’s statement about releasing its hardware in the first half of 2026 suggests both will come out in June at the latest.

How much will the Steam Frame and Steam Machine cost?

After the reveal, there was much speculation on their possible prices. For the Steam Frame, the expectation was that it would start at $600. The Steam Machine was expected to launch at a price closer to $700. Those estimates could easily increase by $100 or more due to the current state of pricing for memory and storage.

Tech

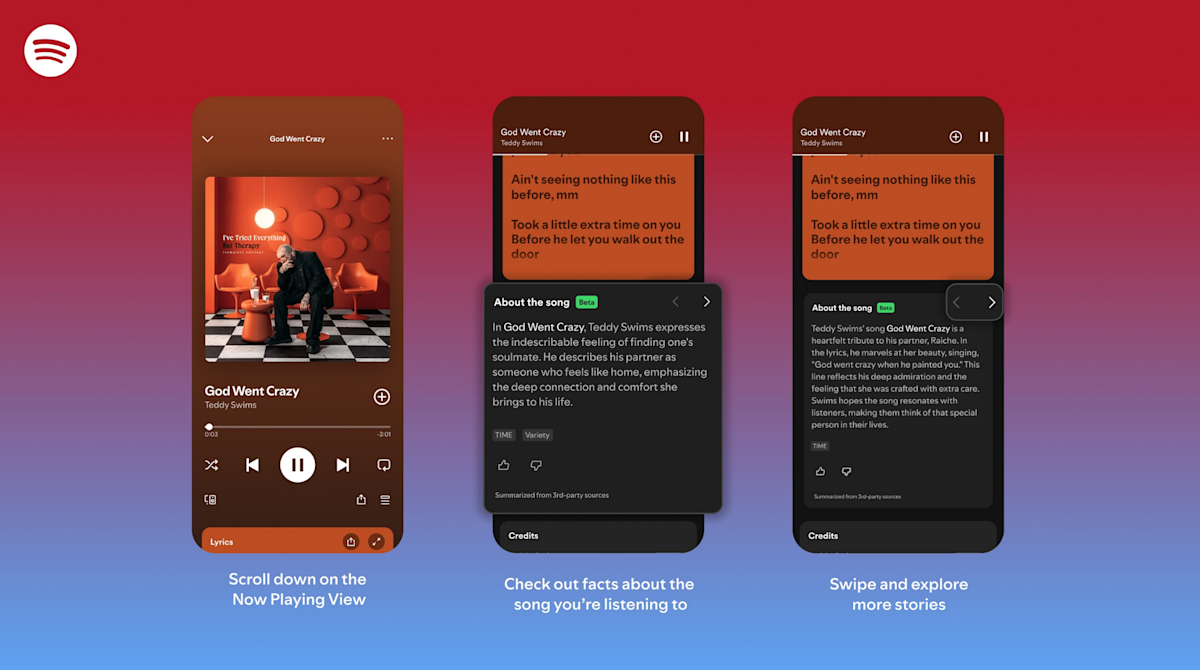

Spotify now lets you swipe on songs to learn more about them

Spotify is rolling out a feature that lets fans learn a bit more about their favorite tunes. It “brings stories and context” into the listening experience, sort of like that old VH1 show Pop Up Video.

How does it work? The Now Playing View houses short, swipeable story cards that “explore the meaning” behind the music. This information is sourced from third parties and the company promises “interesting details and behind-the-scenes moments.” All you have to do is scroll down until you see the card and then swipe.

This is rolling out right now to Premium users on both iOS and Android, but it’s not everywhere just yet. The beta tool is currently available in the US, UK, Canada, Ireland, New Zealand and Australia.

Spotify has been busy lately, as this is just the latest new feature.

Tech

Review: The Temptations’ Psychedelic Motown Era Revisited in New Elemental Reissues

Elemental Music’s affordably priced reissue series brings classic 1960s and 1970s Motown titles back to record stores worldwide, making these albums accessible to a new generation of listeners. For those seeking clean, newly pressed, and largely faithful recreations of these vintage releases, complete with pristine jackets and vinyl, rather than chasing original pressings that are increasingly scarce in comparable condition, these reissues fill a meaningful gap in today’s collector and listener market.

Elemental’s reissues were sourced from 1980s-era 16-bit/44.1 kHz digital masters, which many Motown enthusiasts and mastering engineers regard as among the best-sounding transfers available for these recordings, as numerous original tapes have been lost or damaged over time.

Each title in the new Motown reissue series is packaged in a plastic-lined, audiophile-grade white inner sleeve and includes a faithful recreation of a period-appropriate Motown company sleeve, complete with catalog imagery highlighting many of the label’s best-known releases from the era. In my listening, the pressings have generally been quiet, well-centered, and free of obvious manufacturing defects.

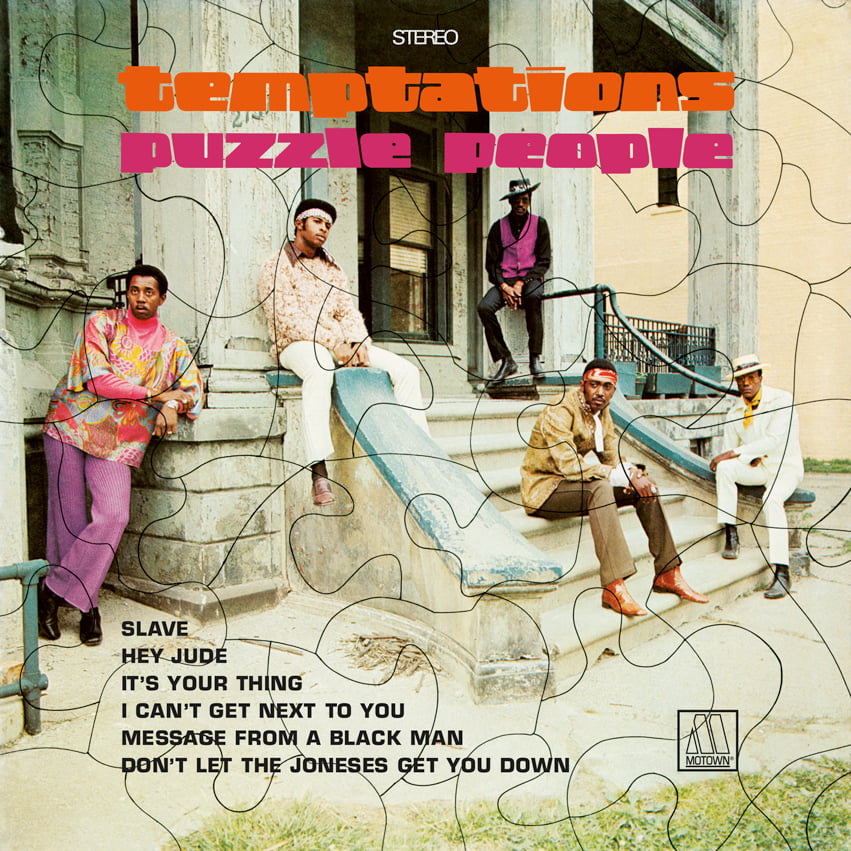

The Temptations, Puzzle People

1969’s Puzzle People by The Temptations works best as a complete album listening experience. I was surprised by how much I enjoyed the group’s take on contemporary pop material such as “Little Green Apples,” but it’s the album-opening No. 1 hit “I Can’t Get Next to You” that remains the main attraction—it still hits hard. More topical tracks like “Don’t Let the Joneses Get You Down” and “Message From a Black Man” land with real weight and conviction. Backed by the legendary Funk Brothers, Puzzle People also serves as a clear bridge to the more expansive Psychedelic Shack that followed the next year.

Where to buy: $29.98 at Amazon

The Temptations, Psychedelic Shack

A harder-rocking album, this again finds The Temptations in psychedelic soul mode, driven by producer/composer Norman Whitfield and backed by The Funk Brothers. Psychedelic Shack is a classic of the period, delivering strong messages for the times, such as “You Make Your Own Heaven and Hell Right Here on Earth,” some of which feel remarkably timely and prescient for the times we are living through right now.

A near-mint original pressing of Psychedelic Shack on Gordy Records typically sells for $50–$60 today, so access to a clean, newly pressed copy at a lower price has obvious appeal—especially for newer listeners who prefer buying brand new pressings. I can also attest that genuinely clean copies of popular soul titles like this are far from easy to track down, even when you’re willing to spend the money.

Psychedelic Shack notably also contains the original version of the protest song “War,” which was re-recorded almost simultaneously by then-new Motown artist Edwin Starr (a much heavier production that became a massive hit). There is a fascinating backstory, easily found on the internet, on why The Temptations’ version was not released as a single, but it’s ultimately a good thing, as this version almost feels like a demo for Starr’s bigger hit.

Psychedelic Shack is one of the better Temptations albums start to finish, so I have no problem recommending it to those who are new to their music. That said, this new reissue sounds a bit thinner and flatter than my original copy, ultimately losing some dynamic punch.

Where to buy: $34.65 at Amazon

Mark Smotroff is a deep music enthusiast / collector who has also worked in entertainment oriented marketing communications for decades supporting the likes of DTS, Sega and many others. He reviews vinyl for Analog Planet and has written for Audiophile Review, Sound+Vision, Mix, EQ, etc. You can learn more about him at LinkedIn.

Tech

iPhone 18 Pro Max battery life to increase again — but not by much

A new iPhone 18 Pro Max leak claims that the phone will see the smallest year-over-year battery capacity increase in years, although real-world battery life depends more on the power efficiency of the A20 processor.

The iPhone 18 Pro Max should see an improvement in battery life

Recent rumors have claimed that the expected iPhone Fold will have the largest-capacity battery the iPhone has ever had. But dubious leaks specifying a capacity figure claim it will be a 5,000mAh battery, and now the iPhone 18 Pro Max will reportedly beat it.

That’s according to leaker Digital Chat Station on Chinese social media site Weibo. He or she states that there will again be two models of the highest-end iPhone, with different battery capacities:

Rumor Score: 🤔 Possible

Tech

More Than 800 Google Workers Urge Company to Cancel Any Contracts With ICE and CBP

More than 800 employees and contractors working for Google signed a petition this week calling on the company to disclose and cancel any contracts it may have with US immigration authorities. In a statement, the workers said they are “vehemently opposed” to Google’s dealings with the Department of Homeland Security, which includes Immigration and Customs Enforcement (ICE) and Customs and Border Protection (CBP).

“We consider it our leadership’s ethical and policy-bound responsibility to disclose all contracts and collaboration with CBP and ICE, and to divest from these partnerships,” the petition published on Friday states. Google didn’t immediately respond to a request for comment.

US immigration authorities have been under intense public scrutiny this year as the Trump administration ramped up its mass deportation campaign, sparking nationwide protests. In Minneapolis, confrontations between protesters and federal agents culminated in the fatal shooting of two US citizens by immigration officers. Both incidents were captured in widely disseminated videos and became a focal point of the backlash. In the wake of the uproar, the Trump administration and Congress say they are negotiating changes to ICE’s tactics.

Some of the Department of Homeland Security’s most lucrative contracts are for software and tech gear from a variety of different vendors. A small share of workers at some of those suppliers, including Google, Amazon, and Palantir, have raised concerns for years about whether the technology they are developing is being used for surveillance or to carry out violence.

In 2019, nearly 1,500 workers at Google signed a petition demanding that the tech giant suspend its work with Customs and Border Protection until the agency stopped engaging in what they said were human rights abuses. More recently, staff at Google’s AI unit asked executives to explain how they would prevent ICE from raiding their offices. (No answers were immediately provided to the workers.)

Employees at Palantir have also recently raised questions internally about the company’s work with ICE, WIRED reported. And over 1,000 people across the tech industry signed a letter last month urging businesses to dump the agency.

The tech companies have largely either defended their work for the federal government or pushed back on the idea that they are assisting it in concerning ways. Some government contracts run through intermediaries, making it challenging for workers to identify which tools an agency is using and for what purposes.

The new petition inside Google aims to renew pressure on the company to, at the very least, acknowledge recent events and any work it may be doing with immigration authorities. It was organized by No Tech for Apartheid, a group of Google and Amazon workers who oppose what they describe as tech militarism, or the integration of corporate tech platforms, cloud services, and AI into military and surveillance systems.

The petition specifically asks Google’s leadership to publicly call for the US government to make urgent changes to its immigration enforcement tactics and to hold an internal discussion with workers about the principles they consider when deciding to sell technology to state authorities. It also demands Google take additional steps to keep its own workforce safe, noting that immigration agents recently targeted an area near a Meta data center under construction.

Tech

A Simple Guide to Staying Safe Online for Everyone

The internet is useful, powerful, and unavoidable. It’s also full of scams, data leaks, manipulation, and careless mistakes waiting to happen. Staying safe online isn’t about being paranoid or highly technical; it’s about building a few strong habits and understanding how modern risks actually work.

Most online harm doesn’t come from sophisticated hackers. It comes from ordinary people being rushed, distracted, or unaware.

Understand the Most Common Online Risks

You don’t need to know everything; you just need to recognize the most frequent threats.

The biggest risks most people face are:

- Phishing emails and messages pretending to be trusted brands

- Weak or reused passwords

- Fake websites and online scams

- Oversharing personal information

- Insecure public Wi-Fi connections

If you can handle these, you avoid the majority of problems.

Use Strong, Unique Passwords (Yes, It Matters)

Password reuse is still one of the biggest mistakes people make. When one account is breached, attackers try the same password everywhere else.

A strong password:

- Is long (12+ characters)

- Is unique for each important account

- Doesn’t use personal information

The realistic solution is a password manager. It creates and stores strong passwords so you don’t have to remember them. This isn’t optional anymore; it’s basic digital hygiene.
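
As a rough illustration of the three criteria above, here is a minimal Python sketch. It is not how any particular password manager works; the function names `generate_password` and `looks_weak` are hypothetical, and the personal-information check is a simple substring match chosen for illustration.

```python
import secrets
import string

MIN_LENGTH = 12  # the "12+ characters" guideline from the list above


def generate_password(length: int = 16) -> str:
    """Return a random password drawn from letters, digits, and punctuation."""
    if length < MIN_LENGTH:
        raise ValueError(f"length must be at least {MIN_LENGTH}")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets draws from the operating system's cryptographic random source
    return "".join(secrets.choice(alphabet) for _ in range(length))


def looks_weak(password: str, personal_info: list[str], already_used: set[str]) -> list[str]:
    """Return the reasons a password fails the three checks; empty means it passes."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append("too short")
    if password in already_used:
        problems.append("reused")
    lowered = password.lower()
    # Assumption: personal details are matched as case-insensitive substrings
    if any(item and item.lower() in lowered for item in personal_info):
        problems.append("contains personal information")
    return problems
```

Calling `looks_weak("rex2015", ["Rex"], set())` reports that the password is both too short and built from personal information, which is exactly the kind of choice a password manager saves you from making.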

Turn On Two-Factor Authentication

Two-factor authentication (2FA) adds a second step when logging in, usually a code sent to your phone or generated by an app.

Yes, it’s slightly inconvenient. That inconvenience is the point.

Even if someone steals your password, 2FA can stop them cold. Prioritize it for:

- Email accounts

- Banking apps

- Social media

- Cloud storage

This one step blocks a huge percentage of account takeovers.

Learn to Spot Phishing Attempts

Phishing isn’t always obvious. Modern scams look professional and urgent on purpose.

Red flags include:

- Unexpected messages asking you to verify or confirm something

- Links that don’t match the official website

- Spelling errors or unusual formatting

- Pressure to act immediately

Rule of thumb: Never click links in messages you weren’t expecting. Go directly to the website instead.
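
The "links that don’t match the official website" check can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `OFFICIAL_DOMAINS` allowlist and the `is_official_link` helper are hypothetical, and a passing result does not prove a message is safe.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: replace with the domains of services you actually use.
OFFICIAL_DOMAINS = {"example-bank.com", "example.com"}


def is_official_link(url: str) -> bool:
    """True only if the link uses HTTPS and its host is an official domain or a subdomain of one."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if parts.scheme != "https" or not host:
        return False
    # Compare the whole host, so "example-bank.com.verify-account.net" is rejected
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)
```

The comparison is made against the end of the hostname because scammers often put the trusted name at the start of a longer, unrelated domain.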

Be Careful What You Share Online

Oversharing makes you an easier target.

Information like your birthday, address, phone number, workplace, or travel plans can be used for identity theft or social engineering.

Ask before posting:

- Does this reveal personal details?

- Would this help someone guess security questions?

- Do strangers need to know this?

Privacy isn’t secrecy, it’s control.

Use Public Wi-Fi With Caution

Free Wi-Fi is convenient but risky. Public networks are easier to intercept.

If you must use public Wi-Fi:

- Avoid banking or sensitive accounts

- Use secure (HTTPS) websites only

- Consider a trusted VPN for added protection

Better yet, use your mobile data for anything important.

Keep Devices and Software Updated

Updates aren’t just new features, they fix security holes.

Ignoring updates leaves your device vulnerable to known exploits. Enable automatic updates for:

- Operating systems

- Browsers

- Apps

- Antivirus or security tools

Delaying updates is like leaving your door unlocked because locking it feels annoying.

Be Skeptical of Too-Good-to-Be-True Offers

Online scams often promise:

- Easy money

- Free prizes

- Urgent refunds

- Exclusive deals

If something triggers excitement or fear immediately, pause. Scammers rely on emotional reactions, not logic.

Real companies don’t pressure you to act instantly.

Teach Children and Older Adults Basic Safety

Online safety isn’t age-specific. Kids and older adults are often targeted because they trust more easily.

Simple rules help:

- Don’t talk to strangers online

- Don’t share personal details

- Ask before downloading or clicking

- Speak up if something feels wrong

Education is more effective than restriction.

Back Up Your Data Regularly

Accidents happen. Devices break. Files get deleted. Ransomware exists.

Backups protect you from loss, not just attacks. Use:

- Cloud backups

- External drives

- Automatic backup schedules

If data matters, back it up. Once is not enough.

Trust Your Instincts, Then Verify

If something feels off, it probably is. Don’t ignore that instinct, but don’t panic either.

Slow down. Verify sources. Ask someone you trust. Most online damage happens when people rush.

Conclusion

You don’t need to be a tech expert to stay safe online. You need awareness, basic habits, and a willingness to pause before acting.

Online safety isn’t about fear. It’s about control.

The more intentionally you use the internet, the harder it is for anyone to take advantage of you.

Tech

MAGA Zealots Are Waging War On Affordable Broadband

from the fuck-the-poor dept

The Trump administration keeps demonstrating that it really hates affordable broadband. It particularly hates it when the government tries to make broadband affordable to poor people or rural school kids.

In just the last year the Trump administration has:

I’m sure I missed a few.

This week, the administration’s war on affordable broadband shifted back to attacking the FCC Lifeline program, a traditionally uncontroversial, bipartisan effort to try and extend broadband to low income Americans. Brendan Carr (R, AT&T) has been ramping up his attacks on these programs, claiming (falsely) that they’re riddled with state-sanctioned fraud:

“Carr’s office said this week that the FCC will vote next month on rule changes to ensure that Lifeline money goes to “only living and lawful Americans” who meet low-income eligibility guidelines. Lifeline spends nearly $1 billion a year and gives eligible households up to $9.25 per month toward phone and Internet bills, or up to $34.25 per month in tribal areas.”

For one, $9.25 is a pittance. It barely offsets the incredibly high prices U.S. telecom monopolies charge. Monopolies, it should be noted, only exist thanks to the coddling of decades of corrupt lawmakers like Carr, who’ve effectively exempted them from all accountability. That’s resulted in heavy monopolization, limited competition, high prices, and low-quality service.

Two, there’s lots of fraud in telecom. Most of it, unfortunately, is conducted by our biggest companies with the tacit approval of folks like FCC boss Brendan Carr. AT&T, for example, has spent decades ripping off U.S. schools and various subsidy programs, and you’ll never see Carr make a peep about that. Fraud is, in MAGA world, only something involving minorities and poor people.

The irony is that the lion’s share of the fraud in the Lifeline program has involved big telecom giants, like AT&T or Verizon, which, time and time again, take taxpayer money for poor people that they just made up. This sort of fraud, where corporations are involved, isn’t of interest to Brendan Carr.

In this case, Carr is alleging (without evidence) that certain left wing states are intentionally ripping off the federal government, throwing untold millions of dollars at dead people for Lifeline broadband access. Something the California Public Utilities Commission has had to spend the week debunking:

“The California Public Utilities Commission (CPUC) this week said that “people pass away while enrolled in Lifeline—in California and in red states like Texas. That’s not fraud. That’s the reality of administering a large public program serving millions of Americans over many years. The FCC’s own advisory acknowledges that the vast majority of California subscribers were eligible and enrolled while alive, and that any improper payments largely reflect lag time between a death and account closure, not failures at enrollment.”

Brendan Carr can’t overtly admit this (because he’s a corrupt zealot), but his ideal telecom policy agenda involves throwing billions of dollars at AT&T and Comcast in exchange for doing nothing. That’s it. That’s the grand Republican plan for U.S. telecom. It gets dressed up as something more ideologically rigid, but coddling predatory monopolies has always been the foundational belief structure.

This latest effort by Carr and Trump largely appears to be a political gambit targeting California Governor Gavin Newsom, suggesting they’re worried about his chances in the next presidential election. This isn’t to defend Newsom; I’ve certainly noted how his state has a mixed track record on broadband affordability. But it appears this is mostly about painting a picture of Newsom, as they did with Walz in Minnesota, as a political opponent that just really loves taxpayer fraud.

Again though, actually policing fraud is genuinely the last thing on Brendan Carr’s mind. If it was, he’d actually target the worst culprits on this front: corporate America.

Filed Under: affordability, brendan carr, broadband, fraud, lifeline, telecom

Tech

Apple’s iPhone Air MagSafe battery drops to an all-time-low price

We found the iPhone Air to have a pretty decent battery life for such a thin-and-light phone, somewhere in the region of 27 hours if you’re continuously streaming video. But it’s still a phone, arguably your most used device on a daily basis, so you may need to top it up during the day if you’re using it constantly. That’s where Apple’s iPhone Air MagSafe battery pack comes in, and it’s currently on sale for $79.

This accessory only works with the iPhone Air, but much like the phone it attaches to, it’s extremely slim at 7.5mm, so, crucially, it doesn’t add so much bulk when attached that it defeats the point of having a thin phone in the first place. The MagSafe Battery isn’t enormous at 3,149mAh (enough to add an extra 65 percent of charge to the Air), but it can wirelessly charge the AirPods Pro 3 as well, making it an even more useful travel companion. You can also charge your iPhone while charging the battery pack.

At its regular price of $99, the MagSafe battery pack is an admittedly pricey add-on to what is already an expensive phone, but for $20 off it’s well worth considering what Engadget’s Sam Rutherford called an “essential accessory” for some users in his iPhone Air review.

Many Apple loyalists will always insist on having first-party accessories for their iPhone, but there are plenty of third-party MagSafe chargers out there too, a lot of them considerably cheaper than Apple’s lineup. Be sure to check out our guide for those.

Tech

Andrew Ng: Unbiggen AI – IEEE Spectrum

Andrew Ng has serious street cred in artificial intelligence. He pioneered the use of graphics processing units (GPUs) to train deep learning models in the late 2000s with his students at Stanford University, cofounded Google Brain in 2011, and then served for three years as chief scientist for Baidu, where he helped build the Chinese tech giant’s AI group. So when he says he has identified the next big shift in artificial intelligence, people listen. And that’s what he told IEEE Spectrum in an exclusive Q&A.

Ng’s current efforts are focused on his company Landing AI, which built a platform called LandingLens to help manufacturers improve visual inspection with computer vision. He has also become something of an evangelist for what he calls the data-centric AI movement, which he says can yield “small data” solutions to big issues in AI, including model efficiency, accuracy, and bias.

The great advances in deep learning over the past decade or so have been powered by ever-bigger models crunching ever-bigger amounts of data. Some people argue that that’s an unsustainable trajectory. Do you agree that it can’t go on that way?

Andrew Ng: This is a big question. We’ve seen foundation models in NLP [natural language processing]. I’m excited about NLP models getting even bigger, and also about the potential of building foundation models in computer vision. I think there’s lots of signal to still be exploited in video: We have not been able to build foundation models yet for video because of compute bandwidth and the cost of processing video, as opposed to tokenized text. So I think that this engine of scaling up deep learning algorithms, which has been running for something like 15 years now, still has steam in it. Having said that, it only applies to certain problems, and there’s a set of other problems that need small data solutions.

When you say you want a foundation model for computer vision, what do you mean by that?

Ng: This is a term coined by Percy Liang and some of my friends at Stanford to refer to very large models, trained on very large data sets, that can be tuned for specific applications. For example, GPT-3 is an example of a foundation model [for NLP]. Foundation models offer a lot of promise as a new paradigm in developing machine learning applications, but also challenges in terms of making sure that they’re reasonably fair and free from bias, especially if many of us will be building on top of them.

What needs to happen for someone to build a foundation model for video?

Ng: I think there is a scalability problem. The compute power needed to process the large volume of images for video is significant, and I think that’s why foundation models have arisen first in NLP. Many researchers are working on this, and I think we’re seeing early signs of such models being developed in computer vision. But I’m confident that if a semiconductor maker gave us 10 times more processor power, we could easily find 10 times more video to build such models for vision.

Having said that, a lot of what’s happened over the past decade is that deep learning has happened in consumer-facing companies that have large user bases, sometimes billions of users, and therefore very large data sets. While that paradigm of machine learning has driven a lot of economic value in consumer software, I find that that recipe of scale doesn’t work for other industries.

It’s funny to hear you say that, because your early work was at a consumer-facing company with millions of users.

Ng: Over a decade ago, when I proposed starting the Google Brain project to use Google’s compute infrastructure to build very large neural networks, it was a controversial step. One very senior person pulled me aside and warned me that starting Google Brain would be bad for my career. I think he felt that the action couldn’t just be in scaling up, and that I should instead focus on architecture innovation.

I remember when my students and I published the first NeurIPS workshop paper advocating using CUDA, a platform for processing on GPUs, for deep learning. A different senior person in AI sat me down and said, “CUDA is really complicated to program. As a programming paradigm, this seems like too much work.” I did manage to convince him; the other person I did not convince.

I expect they’re both convinced now.

Ng: I think so, yes.

Over the past year as I’ve been speaking to people about the data-centric AI movement, I’ve been getting flashbacks to when I was speaking to people about deep learning and scalability 10 or 15 years ago. In the past year, I’ve been getting the same mix of “there’s nothing new here” and “this seems like the wrong direction.”

How do you define data-centric AI, and why do you consider it a movement?

Ng: Data-centric AI is the discipline of systematically engineering the data needed to successfully build an AI system. For an AI system, you have to implement some algorithm, say a neural network, in code and then train it on your data set. The dominant paradigm over the last decade was to download the data set while you focus on improving the code. Thanks to that paradigm, over the last decade deep learning networks have improved significantly, to the point where for a lot of applications the code—the neural network architecture—is basically a solved problem. So for many practical applications, it’s now more productive to hold the neural network architecture fixed, and instead find ways to improve the data.

When I started speaking about this, there were many practitioners who, completely appropriately, raised their hands and said, “Yes, we’ve been doing this for 20 years.” This is the time to take the things that some individuals have been doing intuitively and make it a systematic engineering discipline.

The data-centric AI movement is much bigger than one company or group of researchers. My collaborators and I organized a data-centric AI workshop at NeurIPS, and I was really delighted at the number of authors and presenters that showed up.

You often talk about companies or institutions that have only a small amount of data to work with. How can data-centric AI help them?

Ng: You hear a lot about vision systems built with millions of images—I once built a face recognition system using 350 million images. Architectures built for hundreds of millions of images don’t work with only 50 images. But it turns out, if you have 50 really good examples, you can build something valuable, like a defect-inspection system. In many industries where giant data sets simply don’t exist, I think the focus has to shift from big data to good data. Having 50 thoughtfully engineered examples can be sufficient to explain to the neural network what you want it to learn.

When you talk about training a model with just 50 images, does that really mean you’re taking an existing model that was trained on a very large data set and fine-tuning it? Or do you mean a brand new model that’s designed to learn only from that small data set?

Ng: Let me describe what Landing AI does. When doing visual inspection for manufacturers, we often use our own flavor of RetinaNet. It is a pretrained model. Having said that, the pretraining is a small piece of the puzzle. What’s a bigger piece of the puzzle is providing tools that enable the manufacturer to pick the right set of images [to use for fine-tuning] and label them in a consistent way. There’s a very practical problem we’ve seen spanning vision, NLP, and speech, where even human annotators don’t agree on the appropriate label. For big data applications, the common response has been: If the data is noisy, let’s just get a lot of data and the algorithm will average over it. But if you can develop tools that flag where the data’s inconsistent and give you a very targeted way to improve the consistency of the data, that turns out to be a more efficient way to get a high-performing system.

For example, if you have 10,000 images where 30 images are of one class, and those 30 images are labeled inconsistently, one of the things we do is build tools to draw your attention to the subset of data that’s inconsistent. So you can very quickly relabel those images to be more consistent, and this leads to improvement in performance.
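The idea of drawing attention to inconsistently labeled images can be sketched in a few lines of Python. This is only an illustration, not Landing AI's tooling: it assumes each image was labeled by more than one annotator, and the function name `flag_inconsistent_labels`, the `min_agreement` parameter, and the sample records are all hypothetical.

```python
from collections import Counter, defaultdict


def flag_inconsistent_labels(annotations, min_agreement=1.0):
    """Return images whose annotators disagree, least-agreed first.

    annotations: iterable of (image_id, annotator_id, label) tuples.
    min_agreement: fraction of annotators that must share the most
    common label for an image to count as consistent (assumed knob).
    """
    labels_by_image = defaultdict(list)
    for image_id, _annotator_id, label in annotations:
        labels_by_image[image_id].append(label)

    flagged = []
    for image_id, labels in labels_by_image.items():
        # Share of annotators who chose the most common label.
        _top_label, top_count = Counter(labels).most_common(1)[0]
        agreement = top_count / len(labels)
        if agreement < min_agreement:
            flagged.append((image_id, agreement, sorted(set(labels))))

    flagged.sort(key=lambda item: item[1])
    return flagged


# Hypothetical annotations: two annotators per image.
annotations = [
    ("img_001", "ann_a", "scratch"),
    ("img_001", "ann_b", "scratch"),
    ("img_002", "ann_a", "scratch"),
    ("img_002", "ann_b", "dent"),
    ("img_003", "ann_a", "pit_mark"),
    ("img_003", "ann_b", "pit_mark"),
]

for image_id, agreement, labels in flag_inconsistent_labels(annotations):
    print(image_id, agreement, labels)
```

Only `img_002` is flagged here, so a reviewer would relabel that one image instead of auditing the whole set.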

Could this focus on high-quality data help with bias in data sets, if you’re able to curate the data more before training?

Ng: Very much so. Many researchers have pointed out that biased data is one factor among many leading to biased systems. There have been many thoughtful efforts to engineer the data. At the NeurIPS workshop, Olga Russakovsky gave a really nice talk on this. At the main NeurIPS conference, I also really enjoyed Mary Gray’s presentation, which touched on how data-centric AI is one piece of the solution, but not the entire solution. New tools like Datasheets for Datasets also seem like an important piece of the puzzle.

One of the powerful tools that data-centric AI gives us is the ability to engineer a subset of the data. Imagine training a machine-learning system and finding that its performance is okay for most of the data set, but its performance is biased for just a subset of the data. If you try to change the whole neural network architecture to improve the performance on just that subset, it’s quite difficult. But if you can engineer a subset of the data you can address the problem in a much more targeted way.

When you talk about engineering the data, what do you mean exactly?

Ng: In AI, data cleaning is important, but it has often been done in very manual ways. In computer vision, someone may visualize images through a Jupyter notebook and maybe spot the problem, and maybe fix it. But I’m excited about tools that allow you to have a very large data set, tools that draw your attention quickly and efficiently to the subset of data where, say, the labels are noisy. Or to quickly bring your attention to the one class among 100 classes where it would benefit you to collect more data. Collecting more data often helps, but if you try to collect more data for everything, that can be a very expensive activity.

For example, I once figured out that a speech-recognition system was performing poorly when there was car noise in the background. Knowing that allowed me to collect more data with car noise in the background, rather than trying to collect more data for everything, which would have been expensive and slow.
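One way to find a weak spot like the car-noise case is to break evaluation results down by slice. The sketch below assumes every evaluation example carries a condition tag; the slice names, the record layout, and the helper functions are hypothetical and stand in for whatever metadata a real project records.

```python
from collections import defaultdict


def accuracy_by_slice(records):
    """Compute accuracy separately for each slice of the evaluation set.

    records: iterable of (slice_name, true_label, predicted_label).
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for slice_name, truth, prediction in records:
        totals[slice_name] += 1
        if truth == prediction:
            correct[slice_name] += 1
    return {name: correct[name] / totals[name] for name in totals}


def worst_slice(records):
    """Name the slice with the lowest accuracy: a candidate for more data."""
    scores = accuracy_by_slice(records)
    return min(scores, key=scores.get)


# Hypothetical speech-command results tagged by background condition.
records = [
    ("quiet", "yes", "yes"),
    ("quiet", "no", "no"),
    ("quiet", "stop", "stop"),
    ("quiet", "go", "go"),
    ("car_noise", "yes", "no"),
    ("car_noise", "stop", "stop"),
    ("car_noise", "go", "no"),
    ("car_noise", "no", "no"),
]

print(accuracy_by_slice(records))
print(worst_slice(records))
```

The overall accuracy in this toy set is 75 percent, which hides the fact that every error comes from the `car_noise` slice. The per-slice view shows where targeted collection would pay off.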

What about using synthetic data, is that often a good solution?

Ng: I think synthetic data is an important tool in the tool chest of data-centric AI. At the NeurIPS workshop, Anima Anandkumar gave a great talk that touched on synthetic data. I think there are important uses of synthetic data that go beyond just being a preprocessing step for increasing the data set for a learning algorithm. I’d love to see more tools to let developers use synthetic data generation as part of the closed loop of iterative machine learning development.

Do you mean that synthetic data would allow you to try the model on more data sets?

Ng: Not really. Here’s an example. Let’s say you’re trying to detect defects in a smartphone casing. There are many different types of defects on smartphones. It could be a scratch, a dent, pit marks, discoloration of the material, other types of blemishes. If you train the model and then find through error analysis that it’s doing well overall but it’s performing poorly on pit marks, then synthetic data generation allows you to address the problem in a more targeted way. You could generate more data just for the pit-mark category.

Synthetic data generation is a very powerful tool, but there are many simpler tools that I will often try first, such as data augmentation, improving labeling consistency, or just asking a factory to collect more data.

To make these issues more concrete, can you walk me through an example? When a company approaches Landing AI and says it has a problem with visual inspection, how do you onboard them and work toward deployment?

Ng: When a customer approaches us we usually have a conversation about their inspection problem and look at a few images to verify that the problem is feasible with computer vision. Assuming it is, we ask them to upload the data to the LandingLens platform. We often advise them on the methodology of data-centric AI and help them label the data.

One of the foci of Landing AI is to empower manufacturing companies to do the machine learning work themselves. A lot of our work is making sure the software is fast and easy to use. Through the iterative process of machine learning development, we advise customers on things like how to train models on the platform, and when and how to improve the labeling of data so the performance of the model improves. Our training and software support them all the way through deploying the trained model to an edge device in the factory.

How do you deal with changing needs? If products change or lighting conditions change in the factory, can the model keep up?

Ng: It varies by manufacturer. There is data drift in many contexts. But there are some manufacturers that have been running the same manufacturing line for 20 years now with few changes, so they don’t expect changes in the next five years. Those stable environments make things easier. For other manufacturers, we provide tools to flag when there’s a significant data-drift issue. I find it really important to empower manufacturing customers to correct data, retrain, and update the model. Because if something changes and it’s 3 a.m. in the United States, I want them to be able to adapt their learning algorithm right away to maintain operations.
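A drift flag can be as simple as comparing a summary statistic of recent images against a reference window. The sketch below is an assumption for illustration, not a description of how LandingLens detects drift: it uses mean image brightness as the statistic, and the function names, the threshold of 3.0, and the sample values are all hypothetical.

```python
from statistics import mean, stdev


def drift_score(reference, recent):
    """How many reference standard deviations the recent mean has moved."""
    ref_mean = mean(reference)
    ref_std = stdev(reference)
    if ref_std == 0:
        # Degenerate reference window: any change at all counts as drift.
        return 0.0 if mean(recent) == ref_mean else float("inf")
    return abs(mean(recent) - ref_mean) / ref_std


def has_drifted(reference, recent, threshold=3.0):
    """Flag a batch whose mean lies beyond `threshold` deviations."""
    return drift_score(reference, recent) > threshold


# Hypothetical per-image mean brightness values, scaled to [0, 1].
reference_brightness = [0.50, 0.52, 0.48, 0.51, 0.49]
stable_batch = [0.50, 0.51, 0.49]
dimmer_batch = [0.30, 0.32, 0.31]  # e.g. a light on the line was replaced

print(has_drifted(reference_brightness, stable_batch))
print(has_drifted(reference_brightness, dimmer_batch))
```

A single statistic like this catches only coarse changes such as lighting. In practice a check of this kind would be one signal among several that prompts someone on site to review the data, relabel, and retrain.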

In the consumer software Internet, we could train a handful of machine-learning models to serve a billion users. In manufacturing, you might have 10,000 manufacturers building 10,000 custom AI models. The challenge is, how do you do that without Landing AI having to hire 10,000 machine learning specialists?

So you’re saying that to make it scale, you have to empower customers to do a lot of the training and other work.

Ng: Yes, exactly! This is an industry-wide problem in AI, not just in manufacturing. Look at health care. Every hospital has its own slightly different format for electronic health records. How can every hospital train its own custom AI model? Expecting every hospital’s IT personnel to invent new neural-network architectures is unrealistic. The only way out of this dilemma is to build tools that empower the customers to build their own models by giving them tools to engineer the data and express their domain knowledge. That’s what Landing AI is executing in computer vision, and the field of AI needs other teams to execute this in other domains.

Is there anything else you think it’s important for people to understand about the work you’re doing or the data-centric AI movement?

Ng: In the last decade, the biggest shift in AI was a shift to deep learning. I think it’s quite possible that in this decade the biggest shift will be to data-centric AI. With the maturity of today’s neural network architectures, I think for a lot of the practical applications the bottleneck will be whether we can efficiently get the data we need to develop systems that work well. The data-centric AI movement has tremendous energy and momentum across the whole community. I hope more researchers and developers will jump in and work on it.

This article appears in the April 2022 print issue as “Andrew Ng, AI Minimalist.”

From Your Site Articles

Related Articles Around the Web

-

Video4 days ago

Video4 days agoWhen Money Enters #motivation #mindset #selfimprovement

-

Tech2 days ago

Tech2 days agoWikipedia volunteers spent years cataloging AI tells. Now there’s a plugin to avoid them.

-

Fashion7 days ago

Fashion7 days agoWeekend Open Thread – Corporette.com

-

Politics4 days ago

Politics4 days agoSky News Presenter Criticises Lord Mandelson As Greedy And Duplicitous

-

Crypto World6 days ago

Crypto World6 days agoU.S. government enters partial shutdown, here’s how it impacts bitcoin and ether

-

Sports6 days ago

Sports6 days agoSinner battles Australian Open heat to enter last 16, injured Osaka pulls out

-

Crypto World6 days ago

Crypto World6 days agoBitcoin Drops Below $80K, But New Buyers are Entering the Market

-

Crypto World4 days ago

Crypto World4 days agoMarket Analysis: GBP/USD Retreats From Highs As EUR/GBP Enters Holding Pattern

-

Sports7 hours ago

New and Huge Defender Enter Vikings’ Mock Draft Orbit

-

NewsBeat3 hours ago

NewsBeat3 hours agoSavannah Guthrie’s mother’s blood was found on porch of home, police confirm as search enters sixth day: Live

-

Business23 hours ago

Business23 hours agoQuiz enters administration for third time

-

Crypto World7 days ago

Crypto World7 days agoKuCoin CEO on MiCA, Europe entering new era of compliance

-

Business7 days ago

Entergy declares quarterly dividend of $0.64 per share

-

NewsBeat3 days ago

NewsBeat3 days agoUS-brokered Russia-Ukraine talks are resuming this week

-

Sports4 days ago

Sports4 days agoShannon Birchard enters Canadian curling history with sixth Scotties title

-

NewsBeat1 day ago

NewsBeat1 day agoStill time to enter Bolton News’ Best Hairdresser 2026 competition

-

NewsBeat4 days ago

NewsBeat4 days agoGAME to close all standalone stores in the UK after it enters administration

-

Crypto World3 days ago

Crypto World3 days agoRussia’s Largest Bitcoin Miner BitRiver Enters Bankruptcy Proceedings: Report

-

Crypto World23 hours ago

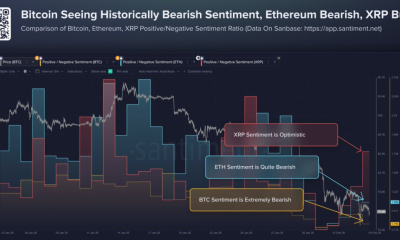

Crypto World23 hours agoHere’s Why Bitcoin Analysts Say BTC Market Has Entered “Full Capitulation”

-

Crypto World22 hours ago

Crypto World22 hours agoWhy Bitcoin Analysts Say BTC Has Entered Full Capitulation