In a viral essay on X, “Something Big Is Happening,” Matt Shumer writes that, for artificial intelligence, the world is living through a moment similar to early Covid. The founder and CEO of OthersideAI argues that AI has crossed over from useful assistant to general cognitive substitute. What’s more, AI is now helping build better versions of itself, and systems rivaling most human expertise could arrive soon.

Tech

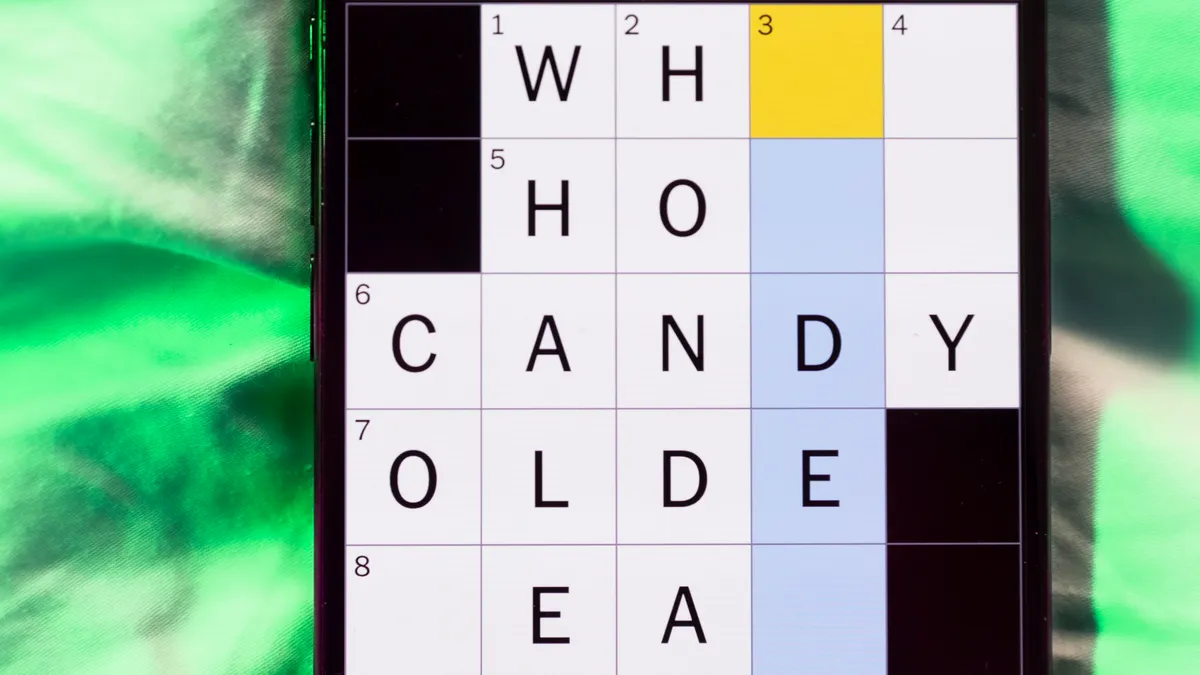

Today’s NYT Mini Crossword Answers for Feb. 13

Need some help with today’s Mini Crossword? I found 1-Down tricky — nice one, puzzle creators. Read on for all the answers. And if you could use some hints and guidance for daily solving, check out our Mini Crossword tips.

If you’re looking for today’s Wordle, Connections, Connections: Sports Edition and Strands answers, you can visit CNET’s NYT puzzle hints page.

Let’s get to those Mini Crossword clues and answers.

The completed NYT Mini Crossword puzzle for Feb. 13, 2026.

Mini across clues and answers

1A clue: Like flowers that never wilt

Answer: FAKE

5A clue: Italian city that’s hosting part of the 2026 Winter Olympics

Answer: MILAN

6A clue: “Huh, that’s strange …”

Answer: WEIRD

7A clue: Regions

Answer: AREAS

8A clue: Crossword clue, e.g.

Answer: HINT

Mini down clues and answers

1D clue: Guy in a kitchen

Answer: FIERI

2D clue: Visitor from another world

Answer: ALIEN

3D clue: Gold measurement

Answer: KARAT

4D clue: “Don’t tell me how it ___!”

Answer: ENDS

5D clue: Kissing sound

Answer: MWAH

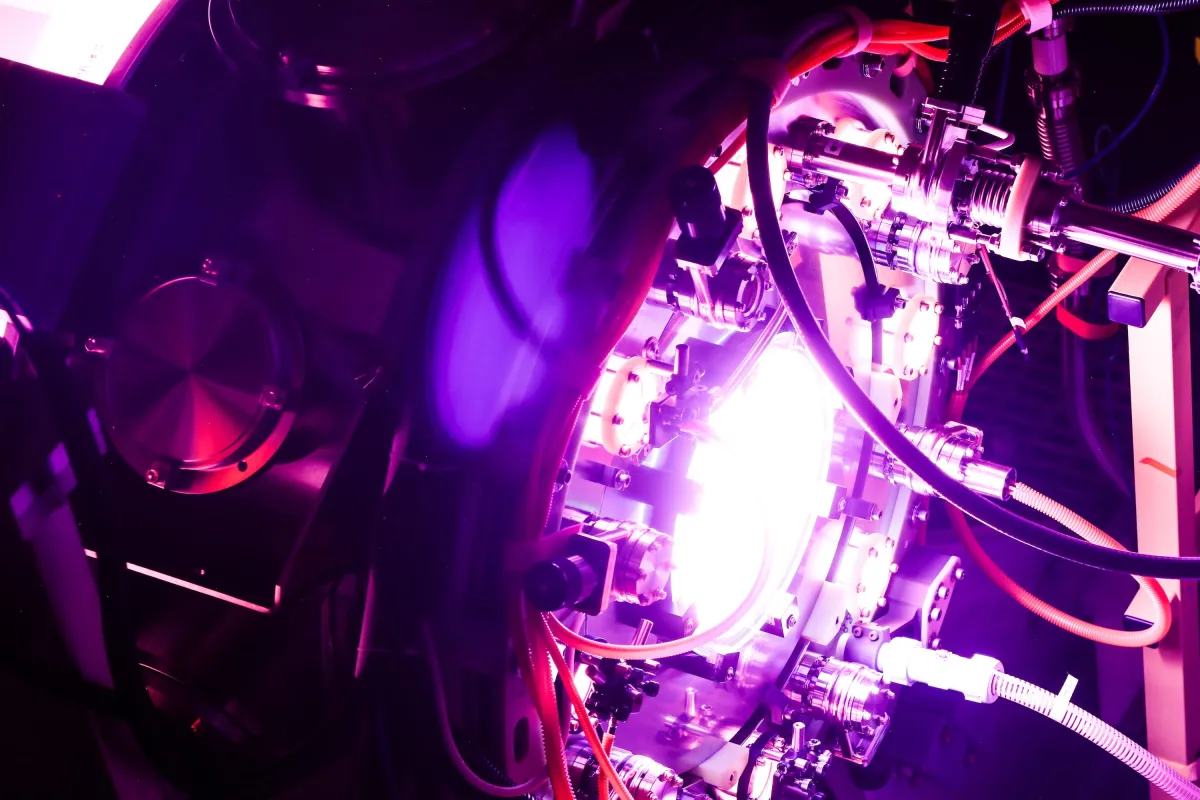

Fusion startup Helion hits blistering temps as it races toward 2028 deadline

The Everett, Washington-based fusion energy startup Helion announced Friday that it has hit a key milestone in its quest for fusion power. Plasmas inside the company’s Polaris prototype reactor have reached 150 million degrees Celsius, three-quarters of the way toward what the company thinks it will need to operate a commercial fusion power plant.

“We’re obviously really excited to be able to get to this place,” David Kirtley, Helion’s co-founder and CEO, told TechCrunch.

Polaris is also operating using deuterium-tritium fuel — a mixture of two hydrogen isotopes — which Kirtley said makes Helion the first fusion company to do so. “We were able to see the fusion power output increase dramatically as expected in the form of heat,” he said.

The startup is locked in a race with several other companies seeking to commercialize fusion power, a potentially unlimited source of clean energy.

That potential has investors rushing to bet on the technology. This week, Inertia Enterprises announced a $450 million Series A round that included Bessemer and GV. In January, Type One Energy told TechCrunch it was in the midst of raising $250 million, while last summer Commonwealth Fusion Systems raised $863 million from investors including Google and Nvidia. Helion itself raised $425 million last year from a group that included Sam Altman, Mithril, Lightspeed, and SoftBank.

While most other fusion startups are targeting the early 2030s to put electricity on the grid, Helion has a contract with Microsoft to sell it electricity starting in 2028, though that power would come from a larger commercial reactor called Orion that the company is currently building, not Polaris.

Every fusion startup has its own milestones based on the design of its reactor. Commonwealth Fusion Systems, for example, needs to heat its plasmas to more than 100 million degrees C inside its tokamak, a doughnut-shaped device that uses powerful magnets to contain the plasma.

Helion’s reactor is different, needing plasmas that are about twice as hot to function as intended.

The company’s reactor design is what’s called a field-reversed configuration. The inside chamber looks like an hourglass, and at the wide ends, fuel gets injected and turned into plasmas. Magnets then accelerate the plasmas toward each other. When they first merge, they’re around 10 million to 20 million degrees C. Powerful magnets then compress the merged ball further, raising the temperature to 150 million degrees C. It all happens in less than a millisecond.

Instead of extracting energy from the fusion reactions in the form of heat, Helion uses the fusion reaction’s own magnetic field to generate electricity. Each pulse will push back against the reactor’s own magnets, inducing electrical current that can be harvested. By harvesting electricity directly from the fusion reactions, the company hopes to be more efficient than its competitors.
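
The article doesn’t describe Helion’s circuitry. The general principle behind recovering electricity from a pulse that pushes back against magnets is Faraday’s law of induction. As a textbook sketch, not Helion’s published design: a changing magnetic flux Φ_B through a coil of N turns induces an electromotive force.

```latex
\mathcal{E} = -N\,\frac{d\Phi_B}{dt}, \qquad \Phi_B = \int_S \mathbf{B}\cdot d\mathbf{A}
```

When the expanding plasma pushes on the confining field, the flux through the coils changes quickly, and the induced EMF drives a current that external circuits can capture. The minus sign (Lenz’s law) means the induced current opposes the change in flux.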

Kirtley said that over the last year, Helion had refined some of the reactor’s circuits to boost how much electricity they recover.

While the company uses deuterium-tritium fuel today, down the road it plans to use deuterium-helium-3. Most fusion companies plan to use deuterium-tritium and extract energy as heat. Helion’s fuel choice, deuterium-helium-3, produces more charged particles, which push forcefully against the magnetic fields that confine the plasma, making it better suited for Helion’s approach of generating electricity directly.
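
To make the charged-particle point concrete, the sketch below uses standard textbook energy splits for these reactions. The article itself gives no figures, and the values here are rounded. For each reaction, it computes the share of energy carried by charged particles, which can push against magnetic fields, versus neutrons, which carry no charge and escape the field. It ignores side reactions, so a real deuterium-helium-3 plasma would still emit some neutrons from deuterium-deuterium fusion.

```python
# Energy released per reaction, in MeV, split between charged products
# and neutrons. Standard textbook values, rounded to one decimal place.
REACTIONS: dict[str, dict[str, float]] = {
    "D-T -> He-4 + n": {"charged": 3.5, "neutron": 14.1},
    "D-He3 -> He-4 + p": {"charged": 3.6 + 14.7, "neutron": 0.0},
    "D-D -> T + p": {"charged": 1.0 + 3.0, "neutron": 0.0},
    "D-D -> He-3 + n": {"charged": 0.8, "neutron": 2.5},
}


def charged_fraction(reaction: str) -> float:
    """Share of a reaction's energy carried by charged particles."""
    split = REACTIONS[reaction]
    return split["charged"] / (split["charged"] + split["neutron"])


for name in REACTIONS:
    print(f"{name}: {charged_fraction(name):.0%} of energy in charged particles")
```

The last deuterium-deuterium branch is the one that yields helium-3. That is the route Helion says it will use to produce its own fuel.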

Helion’s ultimate goal is to produce plasmas that hit 200 million degrees C, far higher than other companies’ targets, a function of its reactor design and fuel choice. “We believe that at 200 million degrees, that’s where you get into that optimal sweet spot of where you want to operate a power plant,” Kirtley said.
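
The temperature figures in this story are related by simple ratios. Here is a quick check that uses only numbers from the article, in millions of degrees Celsius. At these magnitudes the 273-degree offset to kelvin is negligible, so comparing Celsius values directly is fine.

```python
# Plasma temperatures from the article, in millions of degrees Celsius.
POLARIS_REACHED = 150
HELION_TARGET = 200
CFS_THRESHOLD = 100        # Commonwealth Fusion Systems: "more than 100"
MERGE_RANGE = (10, 20)     # when Helion's two plasmas first merge


def ratio(numerator: float, denominator: float) -> float:
    return numerator / denominator


print(f"progress toward target: {ratio(POLARIS_REACHED, HELION_TARGET):.0%}")
print(f"Helion target vs. CFS threshold: {ratio(HELION_TARGET, CFS_THRESHOLD):.1f}x")
low, high = MERGE_RANGE
print(f"heating from compression: {ratio(POLARIS_REACHED, high):.1f}x "
      f"to {ratio(POLARIS_REACHED, low):.1f}x")
```

The results line up with the article’s “three-quarters of the way” and “about twice as hot.” They also show that magnetic compression multiplies the merged plasma’s temperature by roughly 7.5 to 15 times.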

When asked whether Helion had reached scientific breakeven — the point where a fusion reaction generates more energy than it requires to start it — Kirtley demurred. “We focus on the electricity piece, making electricity, rather than the pure scientific milestones.”
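
Fusion researchers usually express this milestone as a gain factor Q: the fusion energy released divided by the energy supplied to heat the plasma. Scientific breakeven corresponds to Q = 1. That is distinct from a plant producing more electricity than it consumes, which is closer to the goal Kirtley says Helion focuses on.

```latex
Q = \frac{E_{\text{fusion}}}{E_{\text{heating}}}, \qquad \text{scientific breakeven: } Q \ge 1
```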

Helium-3 is common on the Moon, but not here on Earth, so Helion must make its own fuel. To start, it’ll fuse deuterium nuclei to produce the first batches. In regular operation, while the main source of power will be deuterium-helium-3 fusion, some of the reactions will still be deuterium-on-deuterium, which will produce helium-3 that the company will purify and reuse.

Work is already underway to refine the fuel cycle. “It’s been a pleasant surprise in that a lot of that technology has been easier to do than maybe we expected,” Kirtley said. Helion has been able to produce helium-3 “at very high efficiencies in terms of both throughput and purity,” he added.

While Helion is currently the only fusion startup using helium-3 in its fuel, Kirtley said he thinks other companies will in the future, hinting that he’d be open to selling it to them. “Other folks — as they come along and recognize that they want to do this approach of direct electricity recovery and see the efficiency gains from it — will want to be using helium-3 fuel as well,” he said.

Alongside its experiments with Polaris, Helion is also building Orion, a 50-megawatt fusion reactor it needs to fulfill its Microsoft contract. “Our ultimate goal is not to build and deliver Polaris,” Kirtley said. “That’s a step along the way towards scaled power plants.”

No, Apple Music didn't fire Jay-Z over Bad Bunny Super Bowl Halftime Show

Rumors that Jay-Z lost his Apple Music leadership position in connection with the Super Bowl halftime show are lies, and trace back to a satirical post falsely presented as news.

The rumor traces back to a post from “America’s Last Line of Defense,” a network known for publishing fabricated stories presented as satire. Screenshots of the post circulated on Facebook and other platforms without the page’s disclaimer, giving the false impression it was a legitimate report.

The original post claims Apple Music “fired” Jay-Z after years of producing the halftime show. There is no supporting evidence from Apple, the NFL, Roc Nation, or any credible news outlet.

Microsoft fixes bug that blocked Google Chrome from launching

Microsoft has fixed a known issue causing its Family Safety parental control service to block Windows users from launching Google Chrome and other web browsers.

Family Safety helps parents monitor their children’s activity and provides screen time management, app controls, communication monitoring, content filtering, location tracking, and activity reports.

Microsoft acknowledged the bug in late June 2025 after widespread reports that users were unable to launch Google Chrome on their PCs or experienced the web browser randomly crashing on devices running Windows 10 22H2 and Windows 11 22H2 or later.

As Microsoft explained at the time, the issue was caused by Family Safety’s web filtering tool, which prompts children to ask their parents for approval to use other browsers. However, the bug also caused Family Safety to block new versions of previously approved web browsers, inadvertently preventing them from launching or causing them to shut down unexpectedly.

“The blocking behavior continues to work, however, when a browser updates to a new version, the latest version of the browser cannot be blocked until we add it to the block list. Microsoft is currently adding the latest versions of Chrome and other browsers to the block list,” the company notes on the Windows release health dashboard.

“As Microsoft continues to update the block list, we’ve received reports of a new issue affecting Google Chrome and some browsers. When children try to open these browsers, they shut down unexpectedly.”
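
Microsoft hasn’t published how Family Safety’s block list works internally. The following is a hypothetical model. It assumes that both the block list and parental approvals are keyed by exact browser version, and the class, methods and version labels are invented for illustration. It reproduces the two symptoms described above. First, a freshly updated browser slips past the block list. Second, once Microsoft adds that version, a browser the parent already approved gets blocked.

```python
from dataclasses import dataclass, field


@dataclass
class HypotheticalFamilySafety:
    """Toy model (not Microsoft's design): lists key on (browser, version)."""
    block_list: set = field(default_factory=set)
    approvals: set = field(default_factory=set)

    def is_blocked(self, browser: str, version: str) -> bool:
        key = (browser, version)
        return key in self.block_list and key not in self.approvals

    def is_blocked_by_name(self, browser: str, version: str) -> bool:
        """Alternative: approvals that survive version updates."""
        approved = {name for name, _ in self.approvals}
        return (browser, version) in self.block_list and browser not in approved


fs = HypotheticalFamilySafety(block_list={("chrome", "v1")},
                              approvals={("chrome", "v1")})

# The browser updates to v2, which is not on the block list yet.
slips_through = not fs.is_blocked("chrome", "v2")

# v2 is added to the block list; the parent's approval covered only v1.
fs.block_list.add(("chrome", "v2"))
approved_but_blocked = fs.is_blocked("chrome", "v2")

print(slips_through, approved_but_blocked)
print(fs.is_blocked_by_name("chrome", "v2"))
```

The name-keyed variant is just one way such a regression could be avoided. The article says only that Microsoft shipped a service-side fix, not how that fix works.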

Service‑side fix pushed in early February

This week, Microsoft confirmed that it addressed the issue with a service‑side fix earlier this month, nearly eight months after first receiving reports of web browsers shutting down unexpectedly.

Affected users are advised to connect their devices to the Internet to receive the fix, which should address the bug and prevent similar problems in the future.

“This issue has been resolved through a service‑side fix. The rollout began early February 2026 and should reach all affected devices over the coming weeks,” Microsoft said. “If your device presented this symptom, please let it connect to the internet to receive the resolution. No other action is required.”

Microsoft advises users who can’t get online to receive the fix to turn on the ‘Activity reporting’ feature under Windows settings in Microsoft Family Safety, which lets parents receive approval requests as expected and allowlist newer browser versions.

What that viral “Something big is happening” AI post gets wrong

In Shumer’s telling, while experts know transformative change is coming fast, normies are about to be blindsided. To stick with the pandemic-era metaphor, Tom Hanks is about to get sick.

Between Shumer’s essay and the resignation of Mrinank Sharma — he led Anthropic’s safety team and vague-posted quite the farewell letter warning that “the world is in peril” from “interconnected crises,” while hinting that the company “constantly face[s] pressures to set aside what matters most” even as it chases a $350 billion valuation — well…some people are starting to wig out. Or, more precisely, the folks already super-worried about AI are now super-worrying even harder.

Look, is it possible that AI models will soon indisputably meet various so-called weak AGI definitions, at minimum? Plenty of technologists, not to mention prediction markets, suggest it is. (As a reality check, though, I keep front of mind Google DeepMind CEO Demis Hassabis’s statement that we still need one or two AlphaGo-level technological breakthroughs to reach AGI.)

But rather than debating technological advances (and I have high confidence generative AI is a powerful general-purpose technology), let’s talk about some basic bottlenecks and constraints from the world of economics rather than computer science.

The long road from demo to deployment. The leap from “AI models are impressive, even more than you realize” to “everything changes imminently” requires ignoring how economies actually absorb new technologies. Electrification took decades to redesign factories around. The internet didn’t change retail overnight. AI adoption currently covers fewer than one in five US business establishments. Deploying it across large, regulated, risk-averse institutions demands heavy complementary investment in data infrastructure, process redesign, compliance frameworks, and worker retraining. (Economists term this the productivity J-curve.) Indeed, early-stage spending can actually depress measured output before visible gains arrive.
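
A toy simulation can show the shape economists have in mind. This is a hypothetical sketch with made-up parameters, not the published productivity J-curve model. For a few periods, firms divert a share of inputs into intangible investments (process redesign, data, training) that output statistics don’t count. The accumulated intangible capital then raises measured output later.

```python
def j_curve(periods: int = 8, invest_from: int = 1, invest_to: int = 4,
            diverted_share: float = 0.2, payoff: float = 0.15) -> list[float]:
    """Measured output per period in a toy intangible-investment model.

    From invest_from to invest_to (inclusive), a share of inputs builds
    unmeasured intangible capital, so measured output dips. Each unit of
    accumulated intangible capital then lifts output by `payoff`.
    """
    intangible = 0.0
    measured = []
    for t in range(periods):
        share = diverted_share if invest_from <= t <= invest_to else 0.0
        measured.append((1 + payoff * intangible) * (1 - share))
        intangible += share
    return measured


print([round(y, 3) for y in j_curve()])
```

Measured output falls below its starting level as soon as investment begins, recovers only partway during the buildout, and ends above the baseline once the diverted inputs return to production. That is the dip-then-rise pattern the name describes.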

Richer doesn’t always mean busier. Let’s grant the optimists — and I certainly consider myself pretty darn optimistic — their assumption about fast-advancing AI capability. Output still doesn’t explode on a dime. Richer societies historically choose more leisure — earlier retirements, shorter workweeks — not more time at the office or factory floor. Economist Dietrich Vollrath has pointed out that higher productivity doesn’t mechanically translate into faster growth if households respond by supplying less labor. Welfare might rise substantially while headline GDP growth stays relatively modest.
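
Here is a bare-bones way to see Vollrath’s point. It assumes output is simply productivity A times hours worked H, a simplification for illustration rather than his model. Taking logs and differentiating with respect to time turns the product into a sum of growth rates. The 3 percent and 1 percent figures are hypothetical.

```latex
Y = A\,H \;\Longrightarrow\; g_Y = g_A + g_H, \qquad
g_A = 3\%,\; g_H = -1\% \;\Longrightarrow\; g_Y = 2\%
```

Households are better off because they work less, yet measured GDP grows more slowly than productivity.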

The slowest sector sets the speed limit. Even if AI makes some services far cheaper, demand does not expand without limit. Spending shifts toward sectors that resist automation — health care, education, in-person experiences — where output is tied more tightly to human time. (This is the famous “Baumol effect” or “cost disease.”) As wages rise economy-wide, labor-intensive sectors with weak productivity growth claim a larger share of income. The result: Even spectacular AI gains may yield only moderate growth in overall productivity.
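
A two-sector toy model shows the mechanism. This sketch makes several assumptions:
- Households want the two outputs in a fixed one-to-one ratio, an extreme form of the complementarity Baumol’s argument relies on.
- Both sectors pay a common wage.
- The growth rates are made up.

Because wages are equal, each sector’s share of spending equals its share of labor. So the same number tracks the stagnant sector’s rising claim on income.

```python
def baumol_path(years: int = 30, fast_growth: float = 0.20) -> list[tuple[float, float]]:
    """Two-sector toy economy with one unit of labor and a common wage.

    Sector 1 (automatable) raises productivity by fast_growth each year;
    sector 2 (in-person services) stays flat. Households want the two
    outputs in a fixed 1:1 ratio, so labor shifts to keep y1 == y2.
    Returns (aggregate output, stagnant sector's labor share) for each year.
    """
    a2 = 1.0
    path = []
    for t in range(years):
        a1 = (1 + fast_growth) ** t
        l2 = a1 / (a1 + a2)            # labor the stagnant sector needs
        output = a2 * l2               # equals a1 * (1 - l2)
        path.append((output, l2))
    return path


path = baumol_path()
growth = [later[0] / earlier[0] - 1 for earlier, later in zip(path, path[1:])]
print(f"growth in first year: {growth[0]:.1%}, in final year: {growth[-1]:.1%}")
print(f"stagnant sector's share of labor and spending: "
      f"{path[0][1]:.0%} -> {path[-1][1]:.0%}")
```

Even with 20 percent annual productivity gains in one sector, aggregate growth slows toward the stagnant sector’s zero. Meanwhile nearly all labor and spending migrate to the sector that resists automation.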

The economy’s narrowest pipe. In a system built from many complementary pieces, explains economist Charles Jones, the narrowest pipe determines the flow. AI can accelerate coding, drafting, and research all it wants. But if energy infrastructure, physical capital, regulatory approval, or human decision-making move at ordinary speeds, those become the binding constraints that limit how fast the whole economy can grow.
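
The essay doesn’t specify a model. A standard way economists formalize “narrowest pipe” arguments is a CES aggregator with an elasticity of substitution below one, so that tasks are complements. The minimal sketch below uses equal weights and a hypothetical rho of -5. It speeds up three of four tasks a hundredfold and leaves the fourth at ordinary speed.

```python
def ces_output(task_outputs: list[float], rho: float = -5.0) -> float:
    """Equal-weight CES aggregate of task outputs.

    With rho < 0 the elasticity of substitution, 1 / (1 - rho), is below 1:
    tasks are complements, so the slowest one dominates the total.
    """
    weight = 1 / len(task_outputs)
    return sum(weight * x ** rho for x in task_outputs) ** (1 / rho)


baseline = ces_output([1, 1, 1, 1])
boosted = ces_output([100, 100, 100, 1])  # three of four tasks 100x faster
ceiling = (1 / 4) ** (1 / -5.0)           # limit as those three become unbounded
print(f"{boosted / baseline:.2f}x output, ceiling {ceiling:.2f}x")
```

A hundredfold gain on three-quarters of the work raises total output by only about a third in this setup. No further speedup of those tasks can push it past the ceiling that the unchanged task sets.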

Economies are adaptive, complex, wonderful systems. They create the physical objects that embody and accumulate complex information — what economist Cesar Hidalgo elegantly calls “crystals of imagination.” And when they change, they adjust through gradual reorganization and reallocation, not through sudden collapse or instant takeoff. I mean, that should be your baseline scenario.

Now, a degree of urgency may be warranted. (Shumer’s advice to embrace the most capable AI tools now and weave them into your daily work seems prudent.) Panic-inducing analogies to early 2020 probably are not.

This piece originally appeared in Pethokoukis’s newsletter “Faster, Please!”

God of War Original Trilogy Remakes Are Coming, and a New 2D Platformer Is Out Today

Sony’s State of Play on Thursday had some surprises, including the remaster of Metal Gear Solid 4. Finishing up the show were two announcements for the God of War franchise that no one saw coming.

The first reveal was made by TC Carson, the voice actor for Kratos in the original God of War games released on the PS2 and PS3. Carson announced that the first three God of War games will be remade. The God of War Greek trilogy remakes are still in early development at Santa Monica Studio, and more details will come in the future, so fans likely face a long wait before the games are released.

Following the news of the trilogy remake, the final surprise was the release of a new God of War game, albeit in a format that’s new to the series. God of War: Sons of Sparta is a 2D action platformer that tells the story of Kratos’ youth as he trains to be a Spartan warrior with his brother Deimos. The game was developed by Mega Cat Studios (makers of the side-scrolling Five Nights at Freddy’s: Into The Pit), with a story written by Santa Monica Studio. Young Kratos will have to learn to use his spear, shield and divine artifacts to defeat his enemies.

Since Kratos doesn’t have his traditional Blades of Chaos or Leviathan Axe in this new game, he’ll rely on a classic Spartan spear that can still do plenty of damage and be customized. Changing out the spear tip will add extra offensive stats or status effects to the weapon, such as poison or burn, while spear grips add a finishing move to his combos. The spear tails add a new special attack that Kratos can unleash.

With his shield, Kratos can deflect or parry enemy attacks. The shield can also be upgraded to make parrying easier or to reduce the damage Kratos receives when evading. The Gifts of Olympus are divine artifacts Kratos can equip to unleash punishing projectiles or their own melee combos. Kratos can also equip accessories called Gouri that improve his offense or defense, or even help him find secret treasure.

God of War: Sons of Sparta was made available in the PlayStation Store following the reveal on Thursday. The standard edition costs $30 while the deluxe edition costs $40 and includes multiple in-game items, a PlayStation Network avatar, a digital artbook and soundtrack.

These announcements, along with the news of a God of War TV series coming to Amazon, are all part of the celebration for the God of War 20th anniversary.

Ring Cancels Its Partnership With Flock Safety After Surveillance Backlash

Following intense backlash to its partnership with Flock Safety, a surveillance technology company that works with law enforcement agencies, Ring has announced it is canceling the integration. In a statement published on Ring’s blog and provided to The Verge ahead of publication, the company said: “Following a comprehensive review, we determined the planned Flock Safety integration would require significantly more time and resources than anticipated. We therefore made the joint decision to cancel the integration and continue with our current partners … The integration never launched, so no Ring customer videos were ever sent to Flock Safety.”

[…] Over the last few weeks, the company has faced significant public anger over its connection to Flock, with Ring users being encouraged to smash their cameras, and some announcing on social media that they are throwing away their Ring devices. The Flock partnership was announced last October, but following recent unrest across the country related to ICE activities, public pressure against the Amazon-owned Ring’s involvement with the company started to mount. Flock has reportedly allowed ICE and other federal agencies to access its network of surveillance cameras, and influencers across social media have been claiming that Ring is providing a direct link to ICE.

AirPods Pro 2, AirPods Pro 3, AirPods 4 just got another beta firmware update

A new beta firmware for the AirPods Pro 2, AirPods Pro 3, and AirPods 4 has been released, though there are currently no details about its contents.

Following the public release of iOS 26.3 on Wednesday, Apple has deployed a new beta firmware for select AirPods and AirPods Pro models. Thursday’s developer beta increases the build number to 8B5034f, up from previous versions like the 8A5308b update.

This beta software release is available for AirPods 4, AirPods Pro 2, and AirPods Pro 3. The software won’t be made available for AirPods Max or other non-H2 devices.

A Neva prequel is arriving next week

At Sony’s State of Play yesterday, developer Nomada Studio revealed a DLC prequel to its gorgeous and award-winning puzzle platformer Neva. Entitled simply Neva: Prologue, it tells the story of how Alba and her wolf companion Neva met, while introducing new gameplay mechanics, locales and challenges.

“In Neva: Prologue, players follow Alba as she chases a trail of white butterflies deep into the corrupted swamps, only to discover a frightened wolf cub, lost and alone,” Nomada writes. “To survive, Alba must earn the cub’s trust and guide them both through the blighted wetlands and the dark forces that stalk them.”

The developer adds that Neva: Prologue is designed to be experienced after completing the main game. It adds three new locations, “each featuring unique gameplay mechanics, alongside new enemies and intense boss encounters.” Completionists will also get five hidden challenge flowers.

In her review of the original game, Engadget’s Jessica Conditt found Neva “faultless” thanks to the exquisite swordplay and intuitive platforming action, along with the “stunning” world composed of “lush forests, sun-drenched valleys, soaring mountains and twisting cave systems.” Neva: Prologue will be released as a standalone DLC on February 19.

Anthropic raises $30bn led by GIC, Coatue at $380bn valuation

The AI giant announced a revenue run rate of $14bn – up from $0 three years ago, and $9bn two months ago.

Anthropic announced a $30bn Series G funding round led by Coatue Management and Singapore’s GIC at a post-money valuation of $380bn. The raise more than doubles its valuation from the last round it announced in September.

The Series G was co-led by D E Shaw Ventures; Dragoneer; Founders Fund; Iconiq; and the Abu Dhabi-based MGX, an AI investment firm with close ties to the United Arab Emirates political class. MGX recently took 15pc ownership of TikTok’s US venture and funded xAI in a $20bn Series E round shortly before it was acquired by SpaceX.

Several other big-name investors took part in the round, including Accel; Bessemer Venture Partners; BlackRock; Fidelity Management and Research Company; Growth Equity at Goldman Sachs Alternatives; Lightspeed Venture Partners; Qatar Investment Authority; and Sequoia Capital, among others.

While Anthropic did not provide details on individual commitments from investors, Bloomberg reported last month that lead investors GIC and Coatue will commit around $1.5bn, and Iconiq around $1bn.

The Series G also includes portions of the previously announced $15bn in commitments from Microsoft and Nvidia, Anthropic said.

The company plans to use the funds for frontier AI research, product development and infrastructure expansion. Claude is currently the only frontier AI model available on all three of the largest cloud platforms: Amazon Web Services, Google Cloud and Microsoft Azure.

The round comes as the AI giant hit a revenue run rate of $14bn – up from $0 three years ago, and around $9bn two months ago.
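
The headline figures imply a few more numbers, checked in the quick sketch below. It makes two simplifying assumptions. First, “run rate” is taken to mean the latest month’s revenue times 12, a common convention; Anthropic hasn’t said which period it annualizes. Second, the new investors’ stake treats the full $30bn as fresh capital, ignoring that the round includes portions of earlier Microsoft and Nvidia commitments.

```python
# Figures from the article, in billions of US dollars.
POST_MONEY_VALUATION = 380
SERIES_G_RAISE = 30
RUN_RATE_NOW = 14
RUN_RATE_TWO_MONTHS_AGO = 9

# Post-money valuation = pre-money valuation + new capital.
pre_money = POST_MONEY_VALUATION - SERIES_G_RAISE
new_investor_stake = SERIES_G_RAISE / POST_MONEY_VALUATION

# Assumption: run rate annualizes the latest month (monthly revenue x 12).
implied_monthly_revenue = RUN_RATE_NOW / 12
two_month_growth = RUN_RATE_NOW / RUN_RATE_TWO_MONTHS_AGO - 1

print(f"pre-money ${pre_money}bn, new investors' stake {new_investor_stake:.1%}")
print(f"implied monthly revenue ${implied_monthly_revenue:.2f}bn, "
      f"two-month growth {two_month_growth:.0%}")
```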

Anthropic has established itself as the go-to choice for enterprise coding in a crowded market with options ranging from OpenAI to Microsoft’s Copilot.

According to the company, the number of customers spending more than $100,000 annually on Claude has grown sevenfold in the past year, while more than 500 customers spend more than $1m on an annualised basis.

Claude Code’s run-rate revenue alone has grown to more than $2.5bn, more than doubling since the beginning of the year. In January, the company launched ‘Cowork’, a product built entirely with Claude Code and designed to be a simpler version of its predecessor. The launch drew generally positive reactions from users.

Meanwhile, Claude for Healthcare launched shortly after rival OpenAI released its own privacy-preserving tool for medical queries.

“Whether it is entrepreneurs, start-ups, or the world’s largest enterprises, the message from our customers is the same – Claude is increasingly becoming critical to how businesses work,” said Krishna Rao, Anthropic’s chief financial officer.

“This fundraising reflects the incredible demand we are seeing from these customers, and we will use this investment to continue building the enterprise-grade products and models they have come to depend on.”

The company also launched its latest AI model, Opus 4.6, earlier this month. According to the company, the model can handle a range of real-world work, including generating documents, spreadsheets and presentations.

In testing by Artificial Analysis, Opus 4.6 sits at the top of the chart, outperforming OpenAI’s GPT-5.2 while costing less to run.

OpenAI is reportedly in talks to raise as much as $100bn at a $750bn valuation. The company is currently valued at $500bn. Its revenue run rate exceeded $20bn by the end of 2025.

Both OpenAI and Anthropic have said they are interested in filing for initial public offerings (IPOs).

OpenAI deploys Cerebras chips for ‘near-instant’ code generation in first major move beyond Nvidia

OpenAI on Thursday launched GPT-5.3-Codex-Spark, a stripped-down coding model engineered for near-instantaneous response times, marking the company’s first significant inference partnership outside its traditional Nvidia-dominated infrastructure. The model runs on hardware from Cerebras Systems, a Sunnyvale-based chipmaker whose wafer-scale processors specialize in low-latency AI workloads.

The partnership arrives at a pivotal moment for OpenAI. The company finds itself navigating a frayed relationship with longtime chip supplier Nvidia, mounting criticism over its decision to introduce advertisements into ChatGPT, a newly announced Pentagon contract, and internal organizational upheaval that has seen a safety-focused team disbanded and at least one researcher resign in protest.

“GPUs remain foundational across our training and inference pipelines and deliver the most cost effective tokens for broad usage,” an OpenAI spokesperson told VentureBeat. “Cerebras complements that foundation by excelling at workflows that demand extremely low latency, tightening the end-to-end loop so use cases such as real-time coding in Codex feel more responsive as you iterate.”

The careful framing — emphasizing that GPUs “remain foundational” while positioning Cerebras as a “complement” — underscores the delicate balance OpenAI must strike as it diversifies its chip suppliers without alienating Nvidia, the dominant force in AI accelerators.

Speed gains come with capability tradeoffs that OpenAI says developers will accept

Codex-Spark represents OpenAI’s first model purpose-built for real-time coding collaboration. The company claims the model delivers more than 1000 tokens per second when served on ultra-low latency hardware, though it declined to provide specific latency metrics such as time-to-first-token figures.

“We aren’t able to share specific latency numbers, however Codex-Spark is optimized to feel near-instant — delivering more than 1000 tokens per second while remaining highly capable for real-world coding tasks,” the OpenAI spokesperson said.
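
Throughput translates directly into waiting time, as the small sketch below shows. The 2,000-token edit size and the 100 tokens-per-second comparison rate are hypothetical, not measured figures for any model. Time-to-first-token is left out because OpenAI didn’t share it.

```python
def streaming_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a steady rate.

    Excludes time-to-first-token, which OpenAI did not disclose.
    """
    return num_tokens / tokens_per_second


edit_tokens = 2_000  # hypothetical size of a generated code change
for rate in (1_000, 100):  # the reported figure vs. a hypothetical slower model
    print(f"{rate} tok/s: {streaming_seconds(edit_tokens, rate):.1f} s")
```

At the reported rate, a change of that size streams in about two seconds rather than twenty, which is the difference between staying in flow and switching tasks.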

The speed gains come with acknowledged capability tradeoffs. On SWE-Bench Pro and Terminal-Bench 2.0 — two industry benchmarks that evaluate AI systems’ ability to perform complex software engineering tasks autonomously — Codex-Spark underperforms the full GPT-5.3-Codex model. OpenAI positions this as an acceptable exchange: developers get responses fast enough to maintain creative flow, even if the underlying model cannot tackle the most sophisticated multi-step programming challenges.

The model launches with a 128,000-token context window and supports text only — no image or multimodal inputs. OpenAI has made it available as a research preview to ChatGPT Pro subscribers through the Codex app, command-line interface, and Visual Studio Code extension. A small group of enterprise partners will receive API access to evaluate integration possibilities.

“We are making Codex-Spark available in the API for a small set of design partners to understand how developers want to integrate Codex-Spark into their products,” the spokesperson explained. “We’ll expand access over the coming weeks as we continue tuning our integration under real workloads.”

Cerebras hardware eliminates bottlenecks that plague traditional GPU clusters

The technical architecture behind Codex-Spark tells a story about inference economics that increasingly matters as AI companies scale consumer-facing products. Cerebras’s Wafer Scale Engine 3 — a single chip roughly the size of a dinner plate containing 4 trillion transistors — eliminates much of the communication overhead that occurs when AI workloads spread across clusters of smaller processors.

For training massive models, that distributed approach remains necessary and Nvidia’s GPUs excel at it. But for inference — the process of generating responses to user queries — Cerebras argues its architecture can deliver results with dramatically lower latency. Sean Lie, Cerebras’s CTO and co-founder, framed the partnership as an opportunity to reshape how developers interact with AI systems.

“What excites us most about GPT-5.3-Codex-Spark is partnering with OpenAI and the developer community to discover what fast inference makes possible — new interaction patterns, new use cases, and a fundamentally different model experience,” Lie said in a statement. “This preview is just the beginning.”

OpenAI’s infrastructure team did not limit its optimization work to the Cerebras hardware. The company announced latency improvements across its entire inference stack that benefit all Codex models regardless of underlying hardware, including persistent WebSocket connections and optimizations within the Responses API. The results: an 80 percent reduction in overhead per client-server round trip, a 30 percent reduction in per-token overhead, and a 50 percent reduction in time-to-first-token.
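
The benefit of a persistent connection is that setup costs are paid once rather than on every turn. This is a minimal sketch of that amortization, compared with opening a new connection for each request. The millisecond costs are hypothetical, and the sketch does not reproduce OpenAI’s reported percentages, whose breakdown the company hasn’t published.

```python
def session_overhead_ms(turns: int, setup_ms: float, per_turn_ms: float,
                        persistent: bool) -> float:
    """Total connection overhead across an interactive coding session.

    A new connection per request pays the setup cost (such as TCP and TLS
    handshakes) on every turn; a persistent connection pays it once.
    """
    setups = 1 if persistent else turns
    return setups * setup_ms + turns * per_turn_ms


# Hypothetical costs for a 20-turn session.
fresh = session_overhead_ms(20, setup_ms=150, per_turn_ms=20, persistent=False)
kept_open = session_overhead_ms(20, setup_ms=150, per_turn_ms=20, persistent=True)
print(f"new connection per turn: {fresh:.0f} ms, persistent: {kept_open:.0f} ms")
```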

A $100 billion Nvidia megadeal has quietly fallen apart behind the scenes

The Cerebras partnership takes on additional significance given the increasingly complicated relationship between OpenAI and Nvidia. Last fall, when OpenAI announced its Stargate infrastructure initiative, Nvidia publicly committed to investing $100 billion to support OpenAI as it built out AI infrastructure. The announcement appeared to cement a strategic alliance between the world’s most valuable AI company and its dominant chip supplier.

Five months later, that megadeal has effectively stalled, according to multiple reports. Nvidia CEO Jensen Huang has publicly denied tensions, telling reporters in late January that there is “no drama” and that Nvidia remains committed to participating in OpenAI’s current funding round. But the relationship has cooled considerably, with friction stemming from multiple sources.

OpenAI has aggressively pursued partnerships with alternative chip suppliers, including the Cerebras deal and separate agreements with AMD and Broadcom. From Nvidia’s perspective, OpenAI may be using its influence to commoditize the very hardware that made its AI breakthroughs possible. From OpenAI’s perspective, reducing dependence on a single supplier represents prudent business strategy.

“We will continue working with the ecosystem on evaluating the most price-performant chips across all use cases on an ongoing basis,” OpenAI’s spokesperson told VentureBeat. “GPUs remain our priority for cost-sensitive and throughput-first use cases across research and inference.” The statement reads as a careful effort to avoid antagonizing Nvidia while preserving flexibility — and reflects a broader reality that training frontier AI models still requires exactly the kind of massive parallel processing that Nvidia GPUs provide.

Disbanded safety teams and researcher departures raise questions about OpenAI’s priorities

The Codex-Spark launch comes as OpenAI navigates a series of internal challenges that have intensified scrutiny of the company’s direction and values. Earlier this week, reports emerged that OpenAI disbanded its mission alignment team, a group established in September 2024 to promote the company’s stated goal of ensuring artificial general intelligence benefits humanity. The team’s seven members have been reassigned to other roles, with leader Joshua Achiam given a new title as OpenAI’s “chief futurist.”

OpenAI previously disbanded another safety-focused group, the superalignment team, in 2024. That team had concentrated on long-term existential risks from AI. The pattern of dissolving safety-oriented teams has drawn criticism from researchers who argue that OpenAI’s commercial pressures are overwhelming its original non-profit mission.

The company also faces fallout from its decision to introduce advertisements into ChatGPT. Researcher Zoë Hitzig resigned this week over what she described as the “slippery slope” of ad-supported AI, warning in a New York Times essay that ChatGPT’s archive of intimate user conversations creates unprecedented opportunities for manipulation. Anthropic seized on the controversy with a Super Bowl advertising campaign featuring the tagline: “Ads are coming to AI. But not to Claude.”

Separately, the company agreed to provide ChatGPT to the Pentagon through Genai.mil, a new Department of Defense program that requires OpenAI to permit “all lawful uses” without company-imposed restrictions — terms that Anthropic reportedly rejected. And reports emerged that Ryan Beiermeister, OpenAI’s vice president of product policy who had expressed concerns about a planned explicit content feature, was terminated in January following a discrimination allegation she denies.

OpenAI envisions AI coding assistants that juggle quick edits and complex autonomous tasks

Despite the surrounding turbulence, OpenAI’s technical roadmap for Codex suggests ambitious plans. The company envisions a coding assistant that seamlessly blends rapid-fire interactive editing with longer-running autonomous tasks — an AI that handles quick fixes while simultaneously orchestrating multiple agents working on more complex problems in the background.

“Over time, the modes will blend — Codex can keep you in a tight interactive loop while delegating longer-running work to sub-agents in the background, or fanning out tasks to many models in parallel when you want breadth and speed, so you don’t have to choose a single mode up front,” the OpenAI spokesperson told VentureBeat.

This vision would require not just faster inference but sophisticated task decomposition and coordination across models of varying sizes and capabilities. Codex-Spark establishes the low-latency foundation for the interactive portion of that experience; future releases will need to deliver the autonomous reasoning and multi-agent coordination that would make the full vision possible.

For now, Codex-Spark operates under separate rate limits from other OpenAI models, reflecting constrained Cerebras infrastructure capacity during the research preview. “Because it runs on specialized low-latency hardware, usage is governed by a separate rate limit that may adjust based on demand during the research preview,” the spokesperson noted. The limits are designed to be “generous,” with OpenAI monitoring usage patterns as it determines how to scale.

The real test is whether faster responses translate into better software

The Codex-Spark announcement arrives amid intense competition for AI-powered developer tools. Anthropic’s Claude Cowork product triggered a selloff in traditional software stocks last week as investors considered whether AI assistants might displace conventional enterprise applications. Microsoft, Google, and Amazon continue investing heavily in AI coding capabilities integrated with their respective cloud platforms.

OpenAI’s Codex app has demonstrated rapid adoption since launching ten days ago, with more than one million downloads and weekly active users growing 60 percent week-over-week. More than 325,000 developers now actively use Codex across free and paid tiers. But the fundamental question facing OpenAI — and the broader AI industry — is whether speed improvements like those promised by Codex-Spark translate into meaningful productivity gains or merely create more pleasant experiences without changing outcomes.

Early evidence from AI coding tools suggests that faster responses encourage more iterative experimentation. Whether that experimentation produces better software remains contested among researchers and practitioners alike. What seems clear is that OpenAI views inference latency as a competitive frontier worth substantial investment, even as that investment takes it beyond its traditional Nvidia partnership into untested territory with alternative chip suppliers.

The Cerebras deal is a calculated bet that specialized hardware can unlock use cases that general-purpose GPUs cannot cost-effectively serve. For a company simultaneously battling competitors, managing strained supplier relationships, and weathering internal dissent over its commercial direction, it is also a reminder that in the AI race, standing still is not an option. OpenAI built its reputation by moving fast and breaking conventions. Now it must prove it can move even faster — without breaking itself.

-

Politics5 days ago

Politics5 days agoWhy Israel is blocking foreign journalists from entering

-

Sports6 days ago

Sports6 days agoJD Vance booed as Team USA enters Winter Olympics opening ceremony

-

Business5 days ago

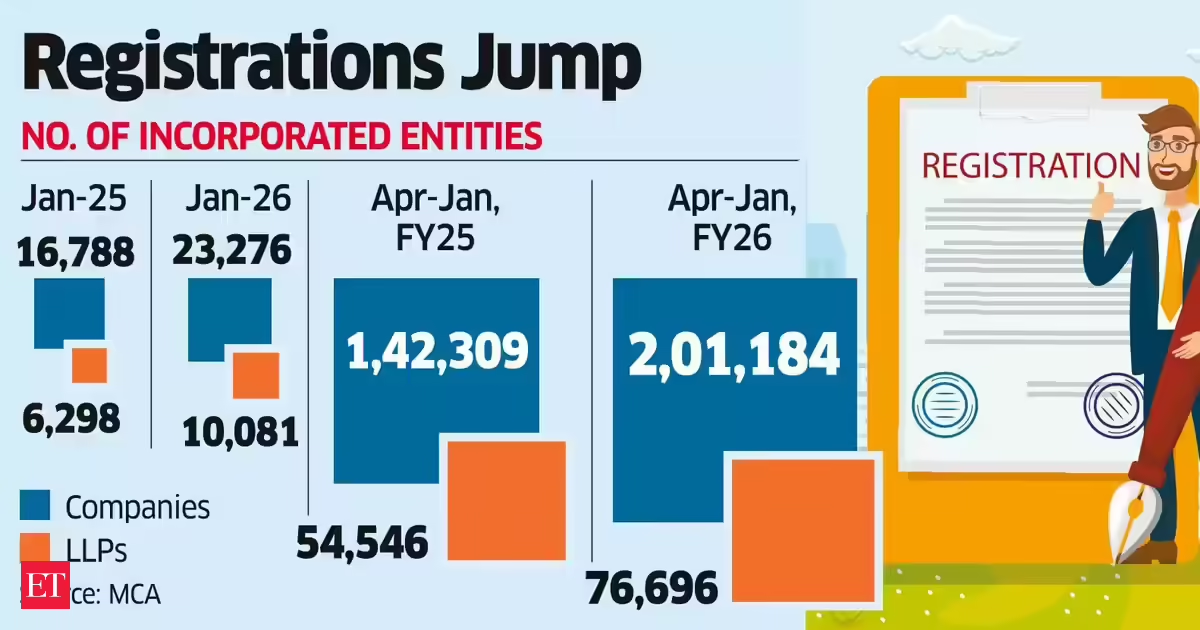

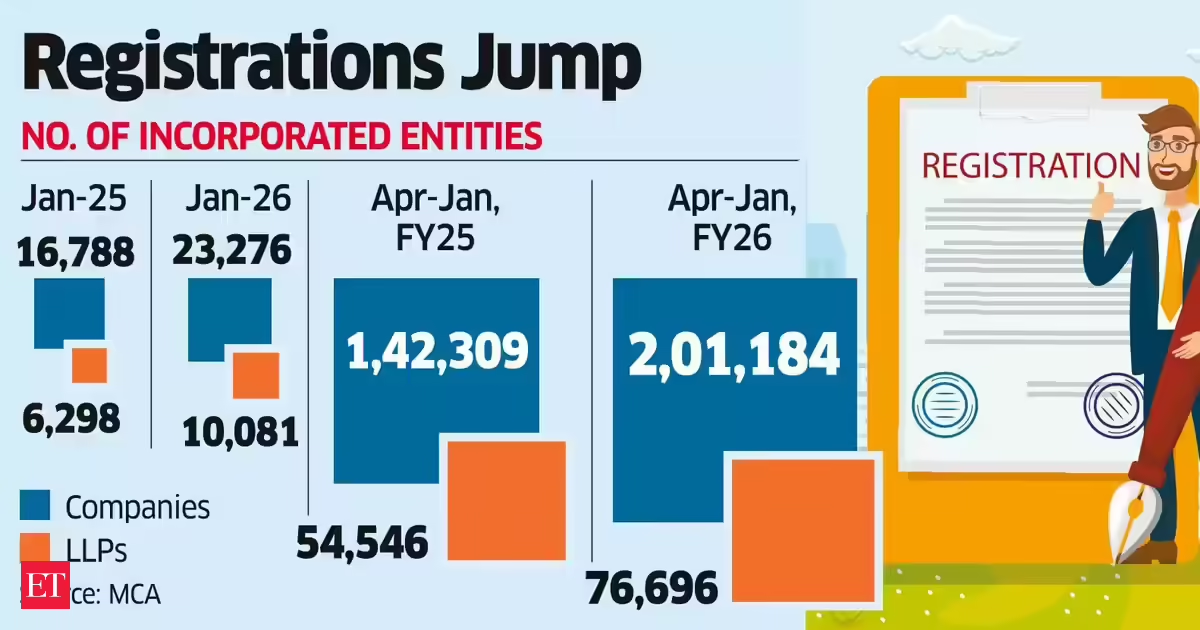

Business5 days agoLLP registrations cross 10,000 mark for first time in Jan

-

NewsBeat4 days ago

NewsBeat4 days agoMia Brookes misses out on Winter Olympics medal in snowboard big air

-

Tech7 days ago

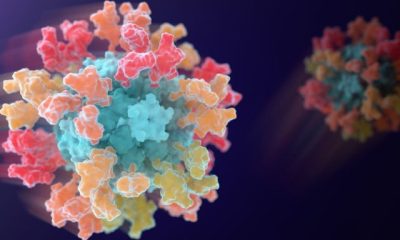

Tech7 days agoFirst multi-coronavirus vaccine enters human testing, built on UW Medicine technology

-

Sports1 day ago

Sports1 day agoBig Tech enters cricket ecosystem as ICC partners Google ahead of T20 WC | T20 World Cup 2026

-

Business4 days ago

Business4 days agoCostco introduces fresh batch of new bakery and frozen foods: report

-

Tech2 days ago

Tech2 days agoSpaceX’s mighty Starship rocket enters final testing for 12th flight

-

NewsBeat4 days ago

NewsBeat4 days agoWinter Olympics 2026: Team GB’s Mia Brookes through to snowboard big air final, and curling pair beat Italy

-

Sports4 days ago

Sports4 days agoBenjamin Karl strips clothes celebrating snowboard gold medal at Olympics

-

Sports6 days ago

Former Viking Enters Hall of Fame

-

Politics5 days ago

Politics5 days agoThe Health Dangers Of Browning Your Food

-

Business5 days ago

Business5 days agoJulius Baer CEO calls for Swiss public register of rogue bankers to protect reputation

-

NewsBeat7 days ago

NewsBeat7 days agoSavannah Guthrie’s mother’s blood was found on porch of home, police confirm as search enters sixth day: Live

-

Crypto World1 day ago

Crypto World1 day agoPippin (PIPPIN) Enters Crypto’s Top 100 Club After Soaring 30% in a Day: More Room for Growth?

-

Crypto World3 days ago

Crypto World3 days agoBlockchain.com wins UK registration nearly four years after abandoning FCA process

-

NewsBeat4 days ago

NewsBeat4 days agoResidents say city high street with ‘boarded up’ shops ‘could be better’

-

Video1 day ago

Video1 day agoPrepare: We Are Entering Phase 3 Of The Investing Cycle

-

Crypto World3 days ago

Crypto World3 days agoU.S. BTC ETFs register back-to-back inflows for first time in a month

-

Sports4 days ago

Kirk Cousins Officially Enters the Vikings’ Offseason Puzzle