Arsenal were busy in the summer transfer window, with plenty of ins and outs, but one of the players they allowed to leave could now face fresh issues at his new club.

Nottingham Forest are already looking to cut short Oleksandr Zinchenko’s time at the City Ground, it has been claimed. The Ukraine international only joined the club on loan from Arsenal at the end of the summer transfer window, but has barely featured since Sean Dyche took over as manager.

Zinchenko played three league games for Forest in September and October but an untimely injury saw him miss out on Dyche’s first few league games in charge. He’s now fit again but has only made two matchday squads under the new boss in the Premier League – at home to Spurs last weekend and away at Fulham on Monday night.

The defender has at least played twice under Dyche in the Europa League, picking up an injury against Porto and returning to action in the last continental game against Utrecht. Now, though, The Telegraph reports that Forest are looking to add another new left-back in January.

Zinchenko joined to provide competition for Neco Williams, but the Welsh international has made the left-back berth his own. Right-back Ola Aina can also provide cover in the position, and is not a million miles from a return from the hamstring injury which has kept him on the sidelines for months.

The situation is a familiar one for Forest. Alex Moreno joined on loan as a left-back option last season but lost his place during the second half of the campaign, while Nuno Tavares got even less action after arriving on a temporary deal from Arsenal for the 2023/24 campaign.

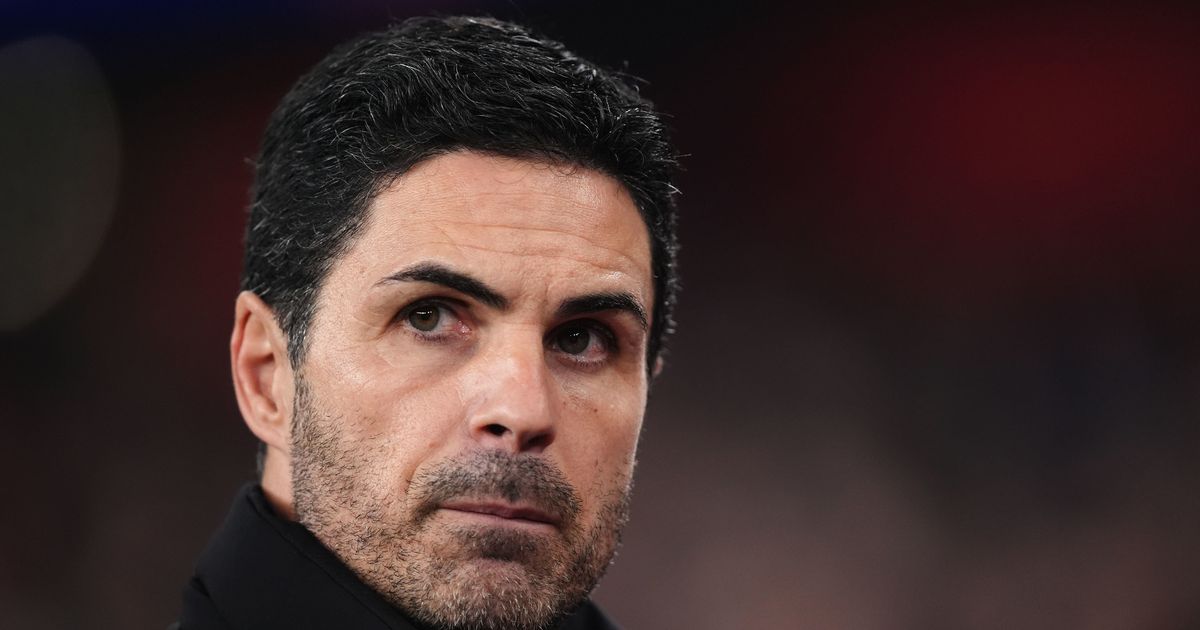

READ MORE: Mikel Arteta hints at Arsenal selection decision after brutal Viktor Gyokeres verdictREAD MORE: Wayne Rooney doubles down on Viktor Gyokeres stance after Arsenal criticism

A new left-back isn’t the only item on Forest’s shopping list. Dyche is also reported to be pursuing a reunion with Dwight McNeil, whom he managed at Everton and who has barely featured this term under David Moyes.

The likes of Taiwo Awoniyi, Willy Boly and Angus Gunn could be allowed to move on if a suitable offer arrives. However, Forest are believed to be less eager to let go of Arnaud Kalimuendo or James McAtee despite interest in the summer arrivals.

Speaking earlier this month, Zinchenko acknowledged the uncertainty around his future but indicated a desire to make his mark with Forest. “I don’t know yet, we’ll see. We just need to go step by step and work hard,” he said, per Nottinghamshire Live.

“I feel amazing (at Forest). It is a big club with amazing people around. I must use this opportunity to say a massive thanks to all the fans for the warm welcome. I really feel I need to give much more to the club.”
