Crypto World

The Next Paradigm Shift Beyond Large Language Models

Artificial Intelligence has made extraordinary progress over the last decade, largely driven by the rise of large language models (LLMs). Systems such as GPT-style models have demonstrated remarkable capabilities in natural language understanding and generation. However, leading AI researchers increasingly argue that we are approaching diminishing returns with purely text-based, token-prediction architectures.

One of the most influential voices in this debate is Yann LeCun, Chief AI Scientist at Meta, who has consistently advocated for a new direction in AI research: World Models. These systems aim to move beyond pattern recognition toward a deeper, more grounded understanding of how the world works.

In this article, we explore what world models are, how they differ from large language models, why they matter, and which open-source world model projects are currently shaping the field.

What Are World Models?

At their core, world models are AI systems that learn internal representations of the environment, allowing them to simulate, predict, and reason about future states of the world.

Rather than mapping inputs directly to outputs, a world model builds a latent model of reality—a kind of internal mental simulation. This enables the system to answer questions such as:

- What is likely to happen next?

- What would happen if I take this action?

- Which outcomes are plausible or impossible?

This approach mirrors how humans and animals learn. We do not simply react to stimuli; we form internal models that let us anticipate consequences, plan actions, and avoid costly mistakes.

Yann LeCun views world models as a foundational component of human-level artificial intelligence, particularly for systems that must interact with the physical world.

Why Large Language Models Are Not Enough

Large language models are fundamentally statistical sequence predictors. They excel at identifying patterns in massive text corpora and predicting the next token given context. While this produces fluent and often impressive outputs, it comes with inherent limitations.

Key Limitations of LLMs

- Lack of grounded understanding: LLMs are trained primarily on text rather than on physical experience.

- Weak causal reasoning: They capture correlations rather than true cause-and-effect relationships.

- No internal physics or common sense model: They cannot reliably reason about space, time, or physical constraints.

- Reactive rather than proactive: They respond to prompts but do not plan or act autonomously.

As LeCun has repeatedly stated, predicting words is not the same as understanding the world.

How World Models Differ from Traditional Machine Learning

World models represent a significant departure from both classical supervised learning and modern deep learning pipelines.

Self-Supervised Learning at Scale

World models typically learn in a self-supervised or unsupervised manner. Instead of relying on labelled datasets, they learn by:

- Predicting future states from past observations

- Filling in missing sensory information

- Learning latent representations from raw data such as video, images, or sensor streams

This mirrors biological learning: humans and animals acquire vast amounts of knowledge simply by observing the world, not by receiving explicit labels.
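To make the idea of learning without labels concrete, here is a minimal sketch in Python. It assumes a hypothetical one-dimensional sensor stream and a simple linear predictor, neither of which comes from any of the projects discussed here. The point is only that the training targets are taken from the sequence itself: each observation serves as the "label" for the one before it.

```python
def make_pairs(observations):
    """Turn an unlabelled sequence into (past, future) training pairs."""
    return list(zip(observations[:-1], observations[1:]))


def fit_linear_dynamics(pairs):
    """Least-squares fit of x_next ~ a * x + b using only the sequence itself."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b


# Hypothetical sensor stream (an assumption for illustration):
# a quantity that decays by 10% at every step.
stream = [100.0 * 0.9 ** t for t in range(20)]

a, b = fit_linear_dynamics(make_pairs(stream))
predicted_next = a * stream[-1] + b  # forecast for the unseen next step
```

Real world models replace the scalar fit with deep networks over video or sensor data, but the supervision signal has the same origin: the future of the stream.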

Core Components of a World Model

A practical world model architecture usually consists of three key elements:

1. Perception Module

Encodes raw sensory inputs (e.g. images, video, proprioception) into a compact latent representation.

2. Dynamics Model

Learns how the latent state evolves over time, capturing causality and temporal structure.

3. Planning or Control Module

Uses the learned model to simulate future trajectories and select actions that optimise a goal.

This separation allows the system to think before it acts: candidate actions are evaluated in the learned model first, which can improve both sample efficiency and safety.
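The three components can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the architecture of any specific system: the environment is a hypothetical one-dimensional position, the "learned" dynamics is hard-coded, and the planner exhaustively searches short action sequences. The function names `encode`, `dynamics`, and `plan` are made up for this example.

```python
from itertools import product


def encode(observation):
    """Perception: map a raw observation to a latent state (toy: the position)."""
    return float(observation["position"])


def dynamics(latent, action):
    """Dynamics: predict the next latent state (toy: position shifts by the action)."""
    return latent + action


def plan(latent, goal, horizon=5, action_set=(-1.0, 0.0, 1.0)):
    """Planning: try every action sequence inside the model and keep the best one."""
    best_actions, best_cost = None, float("inf")
    for actions in product(action_set, repeat=horizon):
        state = latent
        for action in actions:
            state = dynamics(state, action)  # imagined step, never executed
        cost = abs(goal - state)
        if cost < best_cost:
            best_actions, best_cost = actions, cost
    return list(best_actions), best_cost


latent = encode({"position": 0})
actions, cost = plan(latent, goal=3.0)
```

Every rollout happens inside `dynamics`, so no action touches the real environment until a plan has been chosen. Practical systems replace exhaustive search with sampling or gradient-based optimisation, because the number of sequences grows exponentially with the horizon.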

Practical Applications of World Models

World models are particularly valuable in domains where real-world experimentation is expensive, slow, or dangerous.

Robotics

Robots equipped with world models can predict the physical consequences of their actions, for example, whether grasping one object will destabilise others nearby.

Autonomous Vehicles

By simulating multiple future driving scenarios internally, world models enable safer planning under uncertainty.

Game Playing and Simulated Environments

World models allow agents to learn strategies without exhaustive trial-and-error in the real environment.

Industrial Automation

Factories and warehouses benefit from AI systems that can anticipate failures, optimise workflows, and adapt to changing conditions.

In all these cases, the ability to simulate outcomes before acting is a decisive advantage.

Open-Source World Model Projects You Should Know

The field of world models is still emerging, but several open-source initiatives are already making a significant impact.

1. World Models (Ha & Schmidhuber)

One of the earliest and most influential projects, introducing the idea of learning a compressed latent world model using VAEs and RNNs. This work demonstrated that agents could learn effective policies almost entirely inside their own simulated worlds.

2. Dreamer / DreamerV2 / DreamerV3 (DeepMind, open research releases)

Dreamer agents learn a latent dynamics model and use it to plan actions in imagination rather than the real environment, achieving strong performance in continuous control tasks.

3. PlaNet

A model-based reinforcement learning system that plans directly in latent space, reducing sample complexity.

4. MuZero (Partially Open)

While not fully open source, MuZero introduced a powerful concept: learning a dynamics model without explicitly modelling environment rules, combining planning with representation learning.

5. Meta’s JEPA (Joint Embedding Predictive Architectures)

Yann LeCun’s preferred paradigm, JEPA focuses on predicting abstract representations rather than raw pixels, forming a key building block for future world models.

These projects collectively signal a shift away from brute-force scaling toward structured, model-based intelligence.

Are We Seeing Diminishing Returns from LLMs?

While LLMs continue to improve, their progress increasingly depends on:

- More data

- Larger models

- Greater computational cost

World models offer an alternative path: learning more efficiently by understanding structure rather than memorising patterns. Many researchers believe the future of AI lies in hybrid systems that combine language models with world models that provide grounding, memory, and planning.

Why World Models May Be the Next Breakthrough

World models address some of the most fundamental weaknesses of current AI systems:

- They enable common-sense reasoning

- They support long-term planning

- They allow safe exploration

- They reduce dependence on labelled data

- They bring AI closer to real-world interaction

For applications such as robotics, autonomous systems, and embodied AI, world models are not optional; they are essential.

Conclusion

World models represent a critical evolution in artificial intelligence, moving beyond language-centric systems toward agents that can truly understand, predict, and interact with the world. As Yann LeCun argues, intelligence is not about generating text, but about building internal models of reality.

With increasing open-source momentum and growing industry interest, world models are likely to play a central role in the next generation of AI systems. Rather than replacing large language models, they may finally give them what they lack most: a grounded understanding of the world they describe.


Prediction Markets Risk Trading Block in Nevada After Court Ruling

A US federal court ruling has increased the risk that Nevada regulators could seek to halt prediction-market trading in the state after a judge sent a dispute involving Polymarket’s parent company Blockratize back to state court.

A federal judge rejected arguments that US regulation under the Commodity Exchange Act (CEA) and the Commodity Futures Trading Commission (CFTC) fully preempts state gaming laws for prediction markets, according to a Monday order.

The judge found that the CEA’s savings clause does not completely displace state authority and that the companies had not shown a basis to block Nevada’s action at this stage.

The decision means the Nevada Gaming Control Board can continue pursuing its civil enforcement case in state court, where it could seek an injunction restricting Nevada residents from accessing event contracts offered by Polymarket or Kalshi.

In response to the ruling, Polymarket’s parent company submitted a motion to request a brief administrative stay of the court’s remand order, the filing shows.

An administrative stay is a short-term emergency measure that temporarily freezes a court ruling or enforcement action.


Prediction markets face mounting pressure after Nevada ruling: Lawyer

The Nevada decision comes as prediction markets face mounting pressure from state regulators. Among them is Kalshi, which has been fighting Nevada’s gaming regulator since 2025.

On Tuesday, a federal judge also remanded Nevada’s civil enforcement action against Kalshi back to state court, exposing Kalshi to an “imminent temporary restraining order” barring it from offering event contracts in the state, according to a court filing seen by sports betting and gaming-focused lawyer Daniel Wallach.

“The ruling could embolden other states to sue Kalshi in state court and seek injunctions to block event contracts, a strategy that has so far succeeded in every case brought,” Wallach wrote in a Tuesday post on X.

Kalshi sued the state of Nevada in March 2025 after receiving a cease-and-desist order to halt all sports-related betting markets within the state.

However, in February, the US Court of Appeals for the Ninth Circuit denied Kalshi’s bid to stop Nevada’s gaming regulator from taking action on its sports event contracts.


Insider trading concerns add to scrutiny

The legal fight is unfolding as prediction markets draw scrutiny over information advantage and potential insider activity.

Suspected insider wallets netted $1.2 million by betting on the outcome of blockchain sleuth ZachXBT’s investigation into Axiom, Cointelegraph reported on Friday.

ZachXBT released the much-anticipated investigation on Thursday, alleging that Axiom employee Broox Bauer and others had been responsible for insider trading activity since early 2025.

Insider trading concerns were first highlighted in January, when a Polymarket account made a $400,000 profit on a contract predicting that Venezuelan President Nicolás Maduro would be captured. The account placed the wager just hours before US forces captured him during a military operation.

Earlier in February, Israeli authorities arrested and indicted two people suspected of using secret information related to Israel striking Iran for insider trading on Polymarket.



Bitcoin Bottoms as 4-Year Cycle Ends, VanEck CEO Says

As investors weigh where the flagship cryptocurrency stands in 2026, VanEck’s chief executive says the market is likely near a bottom of its long-running cyclical pattern. The four-year cycle has framed price moves for years, with the reward halving compressing supply and influencing sentiment. While on-chain metrics and fundamentals have shown pockets of improvement, many observers remain cautious about the pace and durability of any rebound. In a recent interview, Jan van Eck argued that the asset may have found a base as it transitions through the cycle, a claim that dovetails with a wider debate about whether the old playbook still holds in a more mature market.

Key takeaways

- The CEO of VanEck sees Bitcoin’s price near a bottom as the four-year cycle winds down, arguing that the cycle has historically driven much of the recent price action.

- VanEck links the near-term bottom to the halving-driven supply dynamic, suggesting that 2026 represents the fourth year in a typical four-year pattern where gains fade and a bottom forms.

- BTC was around $68,400 at the time of writing, up roughly 2.6% in the prior 24 hours and about 7.6% over the past week, according to CoinGecko.

- Analysts remain split on the relevance of the four-year cycle, with macro catalysts such as ETF demand, USD movements, and regulatory progress cited as potential deviations from the historical script.

- Geopolitical tensions in the Middle East have coincided with a recent crypto rally, with some observers noting that crypto rails could facilitate cross-border flows when traditional banking channels face friction.

- VanEck suggested that the recovery may be tied to a broader shift toward crypto-centric mechanisms for moving value in uncertain political environments, pointing to regions like the UAE as more favorable for crypto activity.

Tickers mentioned: $BTC

Sentiment: Bullish

Price impact: Positive. The asset’s price has moved higher in the wake of remarks suggesting a bottoming process amid cycle dynamics.

Trading idea (Not Financial Advice): Hold. The argument centers on a potential transition from a cycle-driven bear market to a gradual recovery, supported by macro and regulatory developments.

Market context: The discussion sits at a time when crypto markets are weighing the durability of a four-year pattern against rising institutional adoption, ETF activity, and regulatory clarity, all of which can alter traditional cycle expectations.

Why it matters

The debate over whether the four-year Bitcoin cycle remains a reliable predictor has shaped investor expectations for years. Proponents of the cycle point to halving events, the mechanism by which miners’ block rewards are cut in half roughly every four years, as a fundamental driver of price dynamics, creating multi-year bull phases followed by sharper downswings. Critics argue that as institutions enter the market and as macro conditions evolve, the cycle’s predictive power may wane. The VanEck view adds a new layer to the discussion by tethering the near-term bottom to this long-standing pattern while acknowledging a broader regime change in market maturity.
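For readers who want to see where the four-year rhythm comes from, the sketch below reproduces the halving schedule in Python. The constants are Bitcoin protocol parameters rather than figures from the interview (a 50 BTC initial subsidy, a halving every 210,000 blocks, a target of about ten minutes per block). The function name `block_subsidy_sats` is chosen for this example only.

```python
HALVING_INTERVAL = 210_000                # blocks between halvings
INITIAL_SUBSIDY_SATS = 50 * 100_000_000   # 50 BTC expressed in satoshis
TARGET_BLOCK_MINUTES = 10                 # protocol target, not a guarantee


def block_subsidy_sats(height):
    """Block subsidy in satoshis at a given block height."""
    halvings = height // HALVING_INTERVAL
    if halvings >= 64:
        return 0  # subsidy has been shifted away entirely
    return INITIAL_SUBSIDY_SATS >> halvings  # halve once per elapsed interval


# Approximate calendar time between halvings at the target block rate.
years_between_halvings = (
    HALVING_INTERVAL * TARGET_BLOCK_MINUTES / (60 * 24 * 365.25)
)

subsidy_after_fourth_halving_btc = block_subsidy_sats(840_000) / 100_000_000
```

At the target block rate the interval works out to just under four years, which is why the halving and the "four-year cycle" are so often discussed together. Actual block times drift with hashrate, so halving dates are only approximate.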

Beyond supply-side mechanics, macro factors loom large. ETFs and other institutional demand can alter price trajectories by providing channels for large-scale inflows, while a weakening U.S. dollar or a more favorable regulatory backdrop can bolster risk appetite. In the interview, VanEck framed the cycle as a lens through which to view price action but did not discount the possibility that external forces could support a more resilient recovery than in prior bear-market episodes.

The topic of the four-year cycle has persisted through recent months as analysts weigh macro risks against momentum-driven moves. While some observers argue that external drivers—such as ETF activation, macro liquidity, and policy signals—can override the cycle, others maintain that the underlying halving mechanism remains a meaningful structural factor in the market’s long-run equilibrium. The conversation is far from settled, but the proximity to a potential bottom is a focal point for traders watching for confirmation signals that the next leg higher is underway.

The interview also touched on the broader usefulness of crypto rails during periods of geopolitical strain. VanEck suggested that in scenarios where traditional financial channels face friction, digital assets and crypto payment rails could serve as an alternative for moving value, particularly in regions perceived as crypto-friendly. He pointed to the Middle East—specifically the UAE and Dubai—as an environment where crypto activity might facilitate cross-border settlement and capitalize on more permissive regulatory attitudes compared with some other corridors. The framing underscores a broader theme: as the crypto market matures, it increasingly intersects with real-world financial flows and geopolitical risk, shaping both price and adoption trajectories.

The price development around the remark mirrors a cautious but constructive tone in markets. The latest run has been modest by historical standards, but it has punctured bear-market rhetoric and raised the possibility that 2026 could mark the start of a renewed cycle, even if the path remains uncertain. The discussion also reflects a broader industry interest in how much of the narrative is driven by traditional macro factors versus on-chain fundamentals—an ongoing debate that will likely persist as more capital enters the space and as regulatory landscapes evolve.

The original article and linked materials also explore contrasting viewpoints on the cycle’s sustainability. Critics highlight macro demand from ETFs, a weaker USD, and favorable regulatory developments as signs that the market’s drivers are expanding beyond the classic halving-focused paradigm. Supporters, meanwhile, continue to emphasize the structural tightness of supply and the influence of miner economics on price behavior. In this tension lies the market’s current temperament: uncertain but attentive to any data point that could signal a durable bottom or the onset of a new cycle.

What to watch next

- Follow BTC price action around key milestones in 2026, including potential reaction to the next halving cycle’s window as the market digests supply dynamics.

- Monitor ETF-related inflows and regulatory developments in major markets that could alter institutional participation and liquidity.

- Track macro indicators such as USD strength, interest-rate expectations, and risk sentiment, which historically influence the pace of cross-asset capital allocation.

- Observe on-chain metrics for signs of accumulation or distribution, which could corroborate or challenge near-term bottoming narratives.

Sources & verification

- CNBC interview with VanEck on March 2, 2026 discussing Bitcoin’s bottoming potential and the four-year cycle.

- BTC price data and performance metrics from CoinGecko (as cited in the article).

- Cointelegraph reporting on bitcoin price movements and cycle debates.

- Iran-related crypto outflows and related commentary, as covered by Cointelegraph.

- In-depth magazine piece examining market narratives around liquidity, manipulation, and market structure.

Bottoming thesis as the cycle winds down

In a conversation that bridged investment strategy and market timing, Jan van Eck framed Bitcoin (CRYPTO: BTC) as entering a phase where the four-year cycle’s cooling effect on price could harmonize with an improving macro backdrop. The interview, conducted with CNBC, emphasized that the once-rapid gains associated with earlier cycles have given way to a more measured pace of appreciation, aligned with the notion that supply constraints and miner economics continue to shape price floors. The veteran investor’s view centers on a bottoming process that could precede a gradual reacceleration, albeit with the caveat that external forces may still alter the trajectory.

“There’s been an investing cycle, Bitcoin goes up three years in a row, goes down pretty massively in that fourth year. 2026 is that fourth year. So that’s why we are in a Bitcoin bear market. So I think we can overcomplicate it. Now I think we are making a bottom.”

VanEck’s perspective sits amid a broader debate over the cycle’s durability. Some analysts argue that external catalysts—macro demand from ETFs, a softer dollar, and regulatory breakthroughs—can override the historical rhythm. Others insist that the structural impulse provided by the halving remains a core fixture of price dynamics, even as the market expands to include more institutional players and sophisticated investors. The interview and surrounding discourse reflect a crypto ecosystem grappling with how much of its future is tethered to the cycle versus evolving fundamentals.

As markets digest the possibility of a bottom, attention also turns to capital flows in other regions where crypto rails could provide practical advantages in uncertain times. The discussion about using digital assets to move value away from traditional banking systems, especially in geopolitically sensitive contexts, underscores the potential for crypto to function as an alternative channel for settlement and liquidity. While such narratives can carry speculative risk, they also highlight the growing integration of digital assets into broader financial infrastructure and risk-management considerations.

What to watch next

- Public disclosures and filings related to ETF activity and exposure limits that could intensify or dampen institutional flows.

- Regulatory developments that signal a more mature market environment or prompt caution among new entrants.

- On-chain indicators (e.g., balance sheets of major exchanges, miner revenue trends) that could confirm or contradict a bottoming scenario.


Can PMI above 50 trigger Altcoin Season in 2026?

As macro conditions regain influence over digital assets, investors are increasingly asking whether a rebound in economic activity, particularly a Purchasing Managers Index (PMI) reading above 50, could ignite the next altcoin season.

Summary

- PMI above 50 would signal improving economic conditions and a potential return of risk appetite

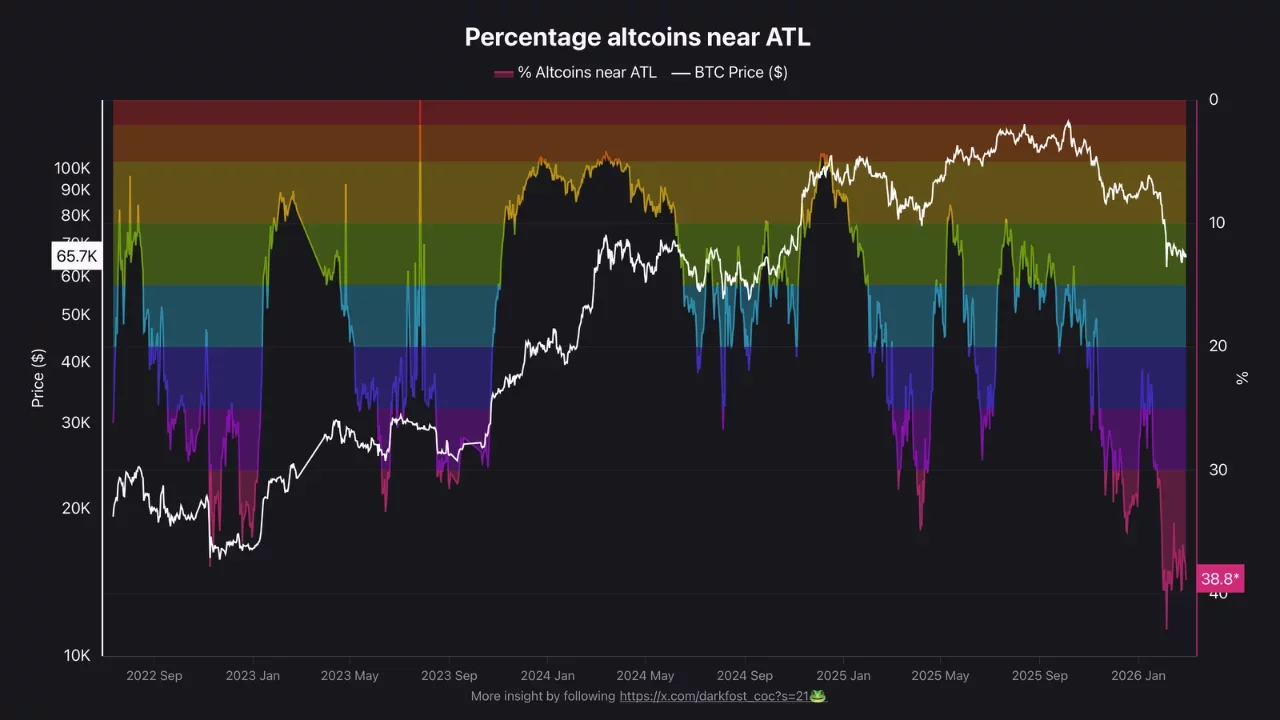

- Nearly 40% of altcoins trading near all-time lows reflects extreme weakness but possible late-stage capitulation

- Bitcoin dominance remains elevated, suggesting rotation into altcoins has not yet begun

What PMI means for Altcoin Season

The Purchasing Managers’ Index (PMI) is a forward-looking economic indicator that measures manufacturing and services activity. A reading above 50 signals expansion, while below 50 indicates contraction.
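The 50 threshold follows from how survey-based indices of this kind are usually built. The sketch below shows the generic diffusion-index convention in Python: the share of respondents reporting improvement plus half the share reporting no change. It is an illustration under assumptions. The survey shares are hypothetical, and published headline PMIs typically combine several such sub-indices using publisher-specific methods that are not reproduced here.

```python
def diffusion_index(pct_higher, pct_same):
    """Generic diffusion index: % reporting 'higher' plus half of % reporting 'same'."""
    return pct_higher + 0.5 * pct_same


def classify(reading, threshold=50.0):
    """Interpret a reading relative to the 50 mark."""
    if reading > threshold:
        return "expansion"
    if reading < threshold:
        return "contraction"
    return "no change"


# Hypothetical survey results (assumed for illustration only).
improving_month = diffusion_index(pct_higher=30.0, pct_same=45.0)
weakening_month = diffusion_index(pct_higher=20.0, pct_same=50.0)

improving_label = classify(improving_month)
weakening_label = classify(weakening_month)
```

If equal shares of respondents report better and worse conditions, the index lands on exactly 50, which is why that level marks the boundary between expansion and contraction.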

Crypto markets, especially altcoins, are highly sensitive to liquidity and risk appetite. When PMI rises above 50 after a contraction phase, it typically signals improving growth expectations, stronger corporate activity, and loosening financial conditions.

Historically, periods of macro expansion have coincided with greater investor willingness to rotate into higher-beta assets, including mid- and small-cap cryptocurrencies.

Bitcoin often reacts first to improving macro conditions, benefiting from institutional flows. Altcoin season tends to follow when investors move further out the risk curve in search of higher returns. In prior cycles, altcoin rallies have emerged during early-to-mid expansion phases when liquidity conditions improved but speculative excess had not yet peaked.

Current conditions: Pressure before rotation?

However, the present backdrop remains fragile.

According to a CryptoQuant analyst, 38% of altcoins are trading near their all-time lows, a worse reading than both April 2025 (35%) and even the immediate aftermath of the FTX collapse (37.8%). This marks the deepest regression for altcoins in the current cycle, underscoring persistent risk aversion.

Moreover, the Bitcoin Dominance (BTC.D) chart reinforces this narrative. Dominance remains elevated near 58–59%, after peaking around 60% in February.

While BTC.D has pulled back slightly from local highs, it has not broken into a decisive downtrend, a necessary condition for sustained altcoin outperformance.

For a PMI-driven altcoin season to materialize, three things likely need to occur simultaneously: PMI moving sustainably above 50, Bitcoin consolidating rather than trending sharply higher, and BTC dominance breaking below key support to confirm capital rotation.

Until then, macro stabilization may first benefit Bitcoin before liquidity meaningfully spreads into the broader altcoin market.

In short, a PMI recovery could be the spark, but dominance trends suggest altcoin season has not yet begun.


Riot Posts Record $647M Revenue in 2025 as Bitcoin Miners Struggle

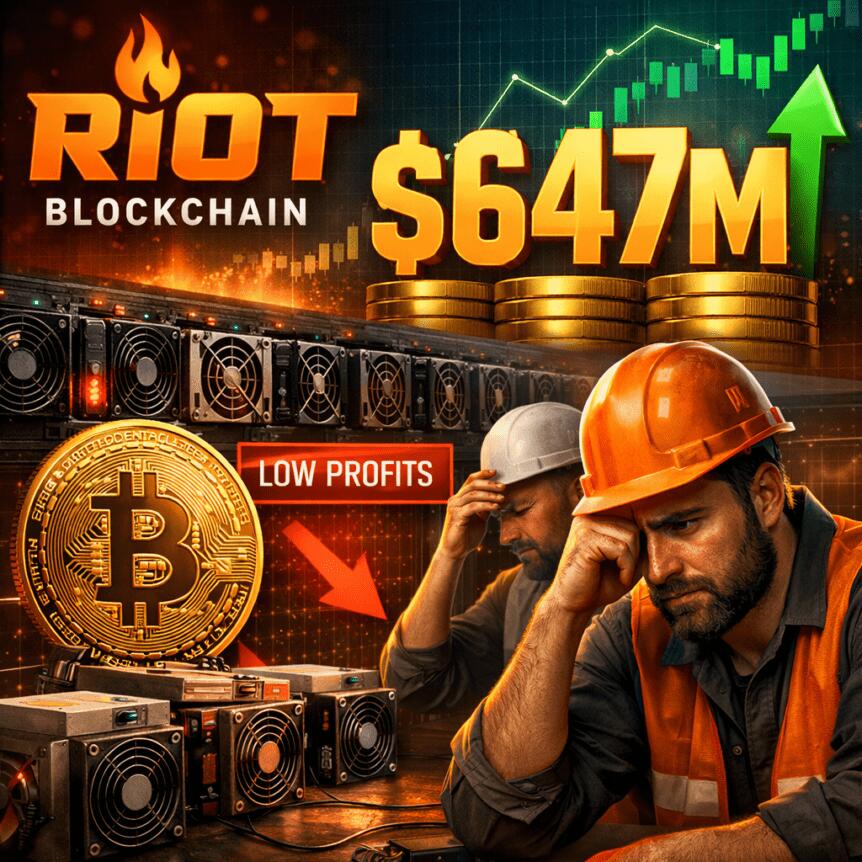

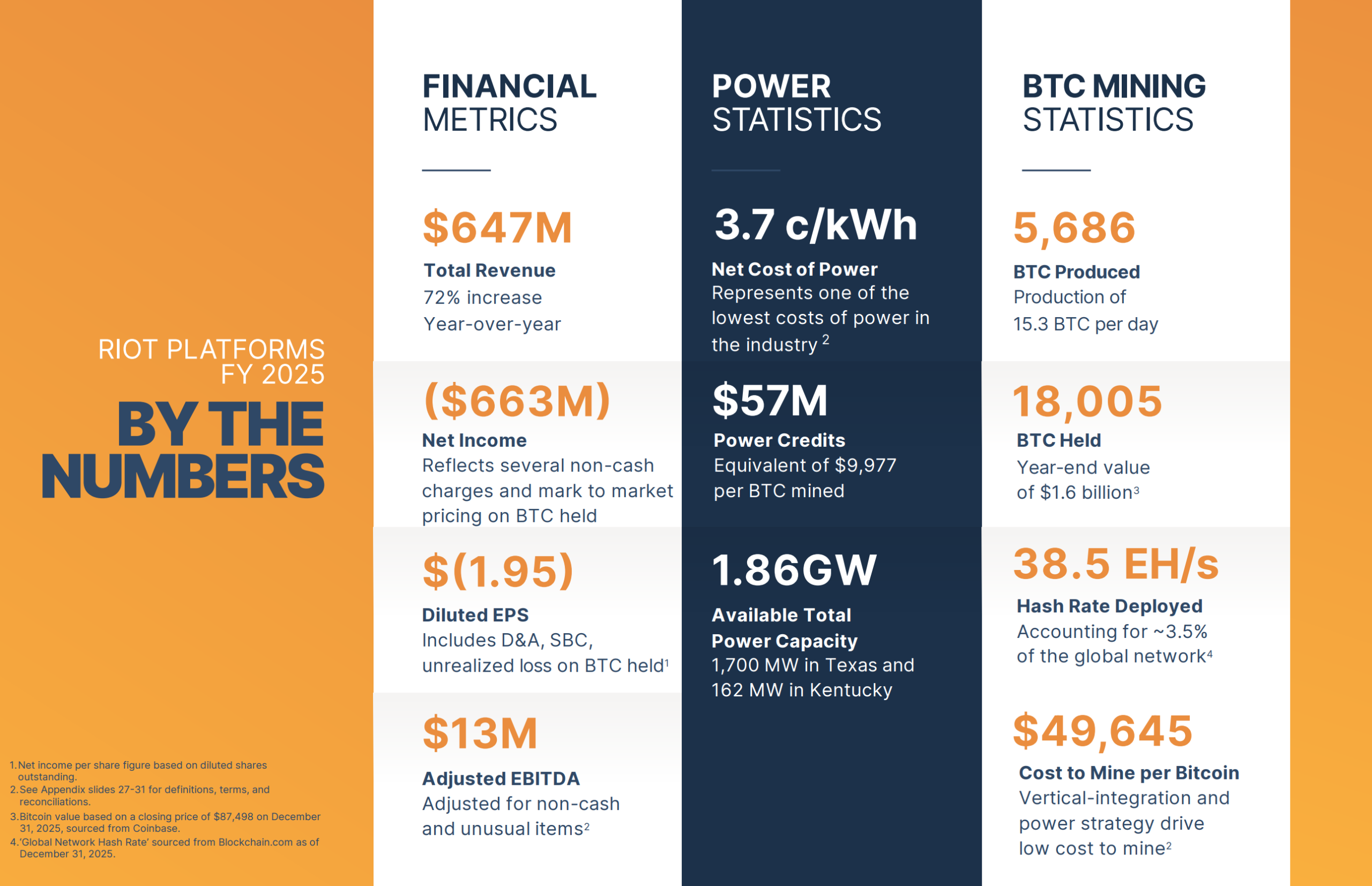

Riot Platforms (NASDAQ: RIOT) closed 2025 with a record revenue footprint, anchored by a surge in Bitcoin (CRYPTO: BTC) mining and a strategic pivot toward AI-friendly data infrastructure. The miner reported $647.4 million in revenue for the year, up 72% from $376.7 million in 2024, with Bitcoin mining revenue accounting for the bulk of that rise — $576.3 million — as the company’s hashrate climbed and Bitcoin prices firmed. The year saw Riot mine 5,686 BTC, up from 4,828 BTC in 2024. The average cost to mine one Bitcoin, excluding depreciation, rose to $49,645 from $32,216 in 2024, reflecting a 47% increase in the global network hashrate and higher mining difficulty, though power credits grew 68% over the year, helping offset some costs. Engineering revenue also climbed to $64.7 million from $38.5 million in 2024.
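The year-over-year figures above can be checked with simple percentage-change arithmetic. The short Python sketch below uses only the numbers quoted in this article. The helper `pct_change` and the dictionary layout are choices made for this example.

```python
def pct_change(new, old):
    """Percentage change from old to new."""
    return (new - old) / old * 100


# (2025 value, 2024 value) as reported in the article.
figures = {
    "total revenue ($M)": (647.4, 376.7),
    "BTC mined": (5686, 4828),
    "cost per BTC excl. depreciation ($)": (49645, 32216),
    "engineering revenue ($M)": (64.7, 38.5),
}

changes = {
    name: round(pct_change(new, old), 1)
    for name, (new, old) in figures.items()
}

# Share of 2025 revenue that came from Bitcoin mining.
mining_share = round(576.3 / 647.4 * 100, 1)

for name, change in changes.items():
    print(f"{name}: {change:+.1f}%")
print(f"mining share of revenue: {mining_share:.1f}%")
```

Revenue growth works out to roughly 71.9%, in line with the reported 72%. The same arithmetic puts the increase in per-coin mining cost at about 54% against an increase in coins mined of about 18%, which is the margin pressure the results describe.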

Key takeaways

- Full-year revenue reached $647.4 million, a 72% year-over-year rise, driven largely by Bitcoin mining.

- Bitcoin mining revenue totaled $576.3 million in 2025, with Riot producing 5,686 BTC for the year.

- The average cost to mine one BTC rose to $49,645 due to a 47% jump in the global network hashrate and higher mining difficulty; power credits rose 68% to help compensate.

- Riot still posted a net loss of $663 million for 2025 due to accounting adjustments and the paper value of its Bitcoin holdings, though adjusted EBITDA reached $13 million.

- At year-end, Riot held 18,005 BTC on its balance sheet (3,977 BTC pledged as collateral), valued at about $1.6 billion at the period’s price; the company maintained $309.8 million in cash, including $76.3 million restricted.

- The year featured notable strategic moves, including an AMD data-center agreement and the sale of Bitcoin to fund a 200-acre land purchase in Rockdale, Texas, amid activist pressure to accelerate a pivot toward AI/HPC infrastructure.

Tickers mentioned: $BTC, $RIOT, $AMD

Sentiment: Neutral

Market context: The 2025 crypto cycle remained volatile, with miners navigating lower price environments and rising mining difficulty as global hashrates expanded. Riot’s results reflect both the resilience of Bitcoin mining revenue and the pressures of noncash accounting on reported profits, while the sector-wide shift toward data-center and AI infrastructure gained pace among peers.

Why it matters

Riot’s 2025 numbers underscore the enduring profitability potential of Bitcoin mining when operational scale and efficiency align with favorable Bitcoin price trends. The company’s ability to produce 5,686 BTC in a year demonstrates the continued relevance of large-scale, purpose-built mining operations even as macro conditions vary. Yet the sizable net loss for 2025 highlights the distinction between cash generation and reported earnings, driven by noncash accounting adjustments and the mark-to-market treatment of Bitcoin holdings. For investors, the key takeaway is whether Riot’s business model can convert its rising revenue into sustainable cash flow as it diversifies beyond mining into AI-focused data-center infrastructure.

Riot’s strategic pivot toward AI and HPC infrastructure is a central theme in the sector. The company’s leadership has signaled a broader trend among leading miners to repurpose existing power capacity for AI workloads, aligning with a market where demand for GPU-accelerated data centers and related services remains robust. This pivot aligns Riot with peers that have begun converting mining capacity into AI computing, a move that could unlock new monetization avenues beyond BTC production. The balance between mining economics and the potential upside from AI/HPC deployments will be critical to assess in the coming quarters, particularly as the company explores capital allocation decisions that could affect liquidity and leverage metrics.

The year’s narrative is also shaped by external pressures from activist investors. Starboard Value’s position suggested the AI/HPC pivot could unlock a valuation of up to $21 billion, a view that intensifies scrutiny on how Riot deploys capital and scales its non-mining businesses. The broader mining ecosystem is undergoing a similar transformation, with other miners converting facilities and power capacity into data-center operations. In this environment, Riot’s execution on both mining efficiency and AI-centric expansion will be watched closely by holders and analysts alike.

Beyond Riot’s internal dynamics, the sector faced notable earnings results from peers during 2025. Core Scientific reported Q4 revenue of $79.8 million, down 16% year over year and short of estimates, while TeraWulf posted quarterly mining revenue of $35.8 million, below expectations. Marathon Digital Holdings (MARA) faced a steeper quarterly loss of $1.71 billion as revenue declined, underscoring the difficult backdrop for miners even as some players pursue diversification into AI infrastructure. The industry’s earnings narrative in 2025 highlighted both the fragility of pure mining profits and the potential for strategic pivots to sustain growth.

Riot’s year-end results also mirror a broader financial landscape in crypto by illustrating how the balance sheet interacts with price movements. The company finished the year with 18,005 BTC on hand, including nearly 4,000 BTC pledged as collateral, valued at approximately $1.6 billion using year-end Bitcoin prices. With $309.8 million in cash and $76.3 million restricted, Riot’s liquidity position provides a foundation for ongoing investments, including data-center expansions and potential acquisitions linked to its AI/HPC strategy. The role of Bitcoin as a treasury asset remains a focal point for investors evaluating the risk-reward profile of mining-centric businesses in a volatile market.

What to watch next

- Progress of the AMD data-center agreement and any related deployment milestones at Riot’s facilities.

- Updates on the Rockdale, Texas land development and whether additional capital is deployed toward AI/HPC infrastructure.

- Regulatory or market developments that could impact mining economics or Bitcoin’s treasury treatment in Riot’s financials.

- Future quarterly results for any signs of improved profitability from AI/data-center initiatives or changes in BTC holdings strategy.

- Ongoing discourse with activists and any governance actions tied to Riot’s strategic pivot and capital allocation plan.

Sources & verification

- Riot Platforms, Inc. announces full-year 2025 financial results and strategic highlights — official press release.

- Riot Platforms’ 2025 earnings report (PDF). Source: Riot.

- Details on the AMD data-center agreement and Rockdale land purchase: Riot Platforms Bitcoin AI HPC Texas land deal — original reporting.

- Activist investor Starboard Value commentary on Riot’s strategic pivot and potential valuation upside: Starboard Value discussion.

- Industry context on AI infrastructure and mining sector shifts: article on AI-focused data centers and high-yield bonds in BTC mining.

Riot Platforms’ 2025 results reflect a record top line and a pivot toward AI infrastructure

Riot Platforms (NASDAQ: RIOT) posted a year that underscored both the durability of large-scale Bitcoin mining and the strategic tension between traditional mining economics and the opportunity set created by AI-centric data centers. The revenue trajectory was unmistakable: a $647.4 million top line, a 72% ascent from the prior year, with BTC-driven mining revenue powering the majority of that growth. The company’s annual Bitcoin production reached 5,686 BTC, a solid step up from 4,828 BTC in 2024, illustrating how scale and efficiency can translate into tangible output even amid a volatile crypto environment. The mining segment’s strength is tempered by cost dynamics that have evolved in tandem with a rapidly expanding network hashrate (up 47% globally) and a corresponding rise in mining difficulty. Excluding depreciation, Riot’s estimated cost to mine a single Bitcoin rose to $49,645 from $32,216 in 2024, a signal that margins can compress when the network grows quickly, though power credits, which rose 68% in the year, helped cushion some of that pressure.

The company’s 2025 earnings narrative was not simple arithmetic. A net loss of $663 million dominated headlines, but much of that figure traces to noncash accounting adjustments and changes in the paper value of Riot’s Bitcoin holdings. When noncash items are stripped away, adjusted EBITDA stood at $13 million for the year. Investors were reminded that cash-generating capacity can coexist with paper losses on the balance sheet, a dynamic that has become increasingly common among miners that carry substantial Bitcoin positions on their books. The disclosures around these noncash effects underscore the importance of separating GAAP results from the underlying cash flow and operating performance when evaluating Riot’s long-term trajectory.

On the balance sheet, Riot ended 2025 with a sizable cache of Bitcoin — 18,005 BTC — worth roughly $1.6 billion using year-end prices, of which 3,977 BTC were pledged as collateral. The company also held $309.8 million in cash, with $76.3 million classified as restricted. These figures provide Riot with a degree of financial flexibility as it navigates capital allocations in a field that remains sensitive to Bitcoin’s price trajectory and mining economics. The year’s liquidity position supports ongoing initiatives tied to the AI/HPC pivot, including potential expansions of data-center capacity or related partnerships.

Strategically, Riot’s year highlighted deliberate steps toward redefining its role beyond pure mining. In January, Riot struck a data-center agreement with AMD (NASDAQ: AMD), signaling a potential shift toward AI accelerator workloads and high-performance computing. In tandem, the company disclosed plans to monetize Bitcoin to fund a 200-acre land purchase in Rockdale, Texas, a move that aligns with a broader push to increase on-site compute capacity while pursuing productive uses for capital. Activist investor Starboard Value pressed for a more accelerated pivot into AI infrastructure, arguing that the transformation could unlock substantial value. This tension between immediate mining returns and longer-term data-center profitability mirrors a wider industry debate about how miners should allocate capital as demand for AI infrastructure continues to grow.

Riot’s narrative sits within a broader ecosystem of miners pursuing similar diversification. Peers such as Hive, Hut 8, TeraWulf, and Iren have repurposed some of their power assets for data-center operations, while CoreWeave has moved fully into AI infrastructure. The evolving earnings mix reflects an industry-wide recalibration: mining revenues remain a foundational contributor, but AI-focused data centers promise new avenues for revenue and margin expansion if executed with discipline and scale. The 2025 results thus offer a snapshot of a sector in transition, one where the fate of an individual miner hinges on execution across multiple fronts: efficiency, balance-sheet discipline, capital allocation, and the ability to monetize AI compute opportunities as demand for AI workloads climbs.

Looking ahead, Riot’s path will depend on how successfully it translates its AI/HPC ambitions into tangible earnings and how it navigates Bitcoin’s price volatility. The company’s forthcoming disclosures and quarterly updates will be critical for assessing whether the AI pivot can meaningfully augment free cash flow and provide a sustainable alternative to mining-driven revenue. As miners balance the traditional economics of BTC production with the strategic imperative to invest in AI infrastructure, Riot’s 2025 experience could serve as a bellwether for the broader market’s willingness to embrace diversification as a route to enduring profitability.

Riot Reports Record $647M Revenue in 2025, Holds $1.6B in Bitcoin

Riot Platforms posted record annual revenue of $647.4 million for 2025, up 72% from $376.7 million a year earlier.

In a Monday announcement, the company said the increase was driven by a $255.3 million jump in Bitcoin (BTC) mining revenue, which reached $576.3 million in 2025 amid a rise in operational hashrate and higher average Bitcoin prices. During the year, Riot produced 5,686 Bitcoin, up from 4,828 BTC in 2024.

The average cost to mine one Bitcoin, excluding depreciation, climbed to $49,645 from $32,216 in 2024. Riot attributed the higher cost largely to a 47% increase in the global network hashrate, which increased mining difficulty. That impact was partly offset by a 68% increase in power credits received during the year, the company said. Engineering revenue also rose, reaching $64.7 million compared with $38.5 million in 2024.
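The year-on-year percentages reported above can be reproduced from the dollar figures in the article. The following is a minimal sketch, not Riot's own methodology; the helper `pct_change` and the variable names are illustrative, and the prior-year mining revenue is inferred by subtracting the reported $255.3 million increase from the 2025 total.

```python
def pct_change(old, new):
    """Percentage change from an old value to a new one."""
    return (new - old) / old * 100


# Figures as quoted in the article (USD millions unless noted).
revenue_growth = pct_change(376.7, 647.4)

# Inferred, not reported directly: 2025 mining revenue minus the stated increase.
prior_mining_revenue = 576.3 - 255.3
mining_growth = pct_change(prior_mining_revenue, 576.3)

# Cost to mine one Bitcoin, excluding depreciation (USD).
cost_growth = pct_change(32_216, 49_645)

engineering_growth = pct_change(38.5, 64.7)

print(f"Total revenue growth:       {revenue_growth:.1f}%")
print(f"Mining revenue growth:      {mining_growth:.1f}%")
print(f"Cost-per-BTC growth:        {cost_growth:.1f}%")
print(f"Engineering revenue growth: {engineering_growth:.1f}%")
```

The cost per Bitcoin grew more slowly than mining revenue, which is consistent with the article's point that power credits offset part of the difficulty-driven increase.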

Despite the record performance, Riot reported a net loss of $663 million because of accounting adjustments and changes in the paper value of its Bitcoin holdings. Adjusted earnings before interest, taxes, depreciation and amortization (EBITDA) for the year was $13 million.

Riot closes 2025 with 18,005 BTC worth $1.6 billion

Riot ended 2025 with 18,005 Bitcoin on its balance sheet, including 3,977 BTC pledged as collateral. Based on a year-end Bitcoin price of $87,498, those holdings were valued at roughly $1.6 billion. The company also held $309.8 million in cash, of which $76.3 million was restricted.
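The roughly $1.6 billion valuation follows directly from the holdings and the year-end price cited above. A short check, using only figures from the article (variable names are illustrative):

```python
# Figures as quoted in the article.
btc_held = 18_005
pledged_btc = 3_977
year_end_price_usd = 87_498

holdings_value_usd = btc_held * year_end_price_usd
unpledged_btc = btc_held - pledged_btc

print(f"Holdings value: ${holdings_value_usd / 1e9:.2f} billion")
print(f"BTC not pledged as collateral: {unpledged_btc:,}")
```

The split matters because pledged coins cannot be sold freely, so the unpledged balance is arguably the better gauge of the Bitcoin Riot could monetize for its data-center plans.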

In January, Riot signed a data center agreement with chipmaker AMD and sold Bitcoin to buy 200 acres of land in Rockdale, Texas. The move came after activist investor Starboard Value said the company’s shift toward artificial intelligence and high-performance computing could carry a valuation of up to $21 billion, urging the Bitcoin miner to accelerate the pivot.

Riot’s shift toward AI and data centers comes amid similar moves by other major miners. Companies including Hive, Hut 8, TeraWulf and Iren are converting mining facilities and power capacity into data-center operations, and some players such as CoreWeave have already transitioned fully into AI infrastructure.

Bitcoin miners struggle amid crypto slump

Several publicly traded Bitcoin miners faced pressure in 2025 as crypto prices weakened. Core Scientific reported fourth-quarter revenue of $79.8 million, down 16% year-on-year and below analyst forecasts, with mining revenue almost halved to $42.2 million.

TeraWulf also missed estimates, reporting quarterly revenue of $35.8 million, down from $50.6 million in the previous quarter and below expectations. MARA Holdings posted even steeper losses. The miner reported a fourth-quarter net loss of $1.71 billion, compared with net income of $528 million a year earlier, as revenue slipped 6% to $202.3 million.

OKB token still under pressure even as OKX introduces AI toolkit for developers

- OKX’s AI toolkit launch has not lifted market sentiment.

- OKB token price remains range-bound with neutral momentum.

- The key OKB price levels are the support at $72 and the resistance at $82.

OKB token remains under pressure despite OKX crypto exchange unveiling an upgrade to its OnchainOS infrastructure that introduces an AI toolkit built for developers.

The new system is designed to help autonomous agents interact directly with blockchain networks.

This will allow developers to plug AI models into wallet functions, trading routes, and market data feeds without building everything from scratch.

While the move aims at making OKX the backend layer for AI-driven crypto execution, the excitement around the product has not translated into a clear recovery for its native token, OKB.

At press time, the OKB token was trading at around $75.88, after a modest 24-hour decline of 0.3%.

Although the altcoin remains far above its early-cycle lows, it has fallen more than 60% over the past year, and its all-time high of $255.50, reached in August 2025, still sits far above the current price.

Technical analysis shows OKB in consolidation

From a technical standpoint, OKB is trading in a narrow range, although it appears to closely mirror Bitcoin’s price movements, which means broader market sentiment remains a critical factor.

Recent OKB price movements show that the cryptocurrency is consolidating rather than trending.

The Relative Strength Index (RSI) has bounced from oversold territory but, at 39.74 at press time, still sits close to it.
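For readers unfamiliar with the indicator, the sketch below shows one common way to compute RSI, using Wilder's smoothing. The article does not state which period or price series produced the 39.74 reading, so the 14-period default here is an assumption, as is the convention that readings below 30 count as oversold and above 70 as overbought.

```python
def rsi(closes, period=14):
    """Relative Strength Index with Wilder's smoothing.

    `period=14` is the conventional default, assumed here; the article
    does not say which setting was used.
    """
    if len(closes) < period + 1:
        raise ValueError("need at least period + 1 closing prices")

    deltas = [later - earlier for earlier, later in zip(closes, closes[1:])]
    gains = [max(d, 0.0) for d in deltas]
    losses = [max(-d, 0.0) for d in deltas]

    # Seed with simple averages over the first `period` changes.
    avg_gain = sum(gains[:period]) / period
    avg_loss = sum(losses[:period]) / period

    # Wilder's smoothing for the remaining changes.
    for gain, loss in zip(gains[period:], losses[period:]):
        avg_gain = (avg_gain * (period - 1) + gain) / period
        avg_loss = (avg_loss * (period - 1) + loss) / period

    if avg_loss == 0:
        return 100.0  # no losses in the window
    relative_strength = avg_gain / avg_loss
    return 100.0 - 100.0 / (1.0 + relative_strength)
```

A value near 40, like the one cited, means average losses have recently outweighed average gains, but not by enough to reach the conventional oversold threshold.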

In case of a bullish breakout, the immediate resistance sits near the 7-day simple moving average at $76.657.

On the downside, the 61.8% Fibonacci retracement level at $73.31 has served as key support, with a second support zone near $72.62 based on recent price action.

These two levels create a support band that traders should closely watch if the market breaks down from the current consolidation.

If that support band fails, historical data points to $68.05 as the next area where buyers previously stepped in.

OKB token price prediction

While the AI toolkit gives OKX a compelling long-term story, OKB’s price action suggests traders want proof of impact before bidding the token higher.

The near-term price outlook for OKB remains neutral unless a decisive breakout occurs.

A strong move above $76.77, supported by higher trading volume, would be the first signal of short-term strength.

If buyers push the price above the $82.47 resistance, momentum could expand.

Historically, sustained trading above $82.47 has paved the way for $93.50, according to CoinLore.

Beyond that level, the next resistance to monitor would be $104.84.

But if bears outweigh bulls, a drop below $73.31 and $72.62 would weaken the current structure.

Such a move would likely expose the token to a retest of $68.05.

Why March Could End Bitcoin’s Five-Month Losing Streak

Bitcoin stands at a sensitive stage after a prolonged decline. However, several macroeconomic and on-chain signals suggest a strong reversal is possible. Many analysts even expect a medium-term recovery that could last several months.

Below are three main reasons why many analysts believe in this recovery scenario.

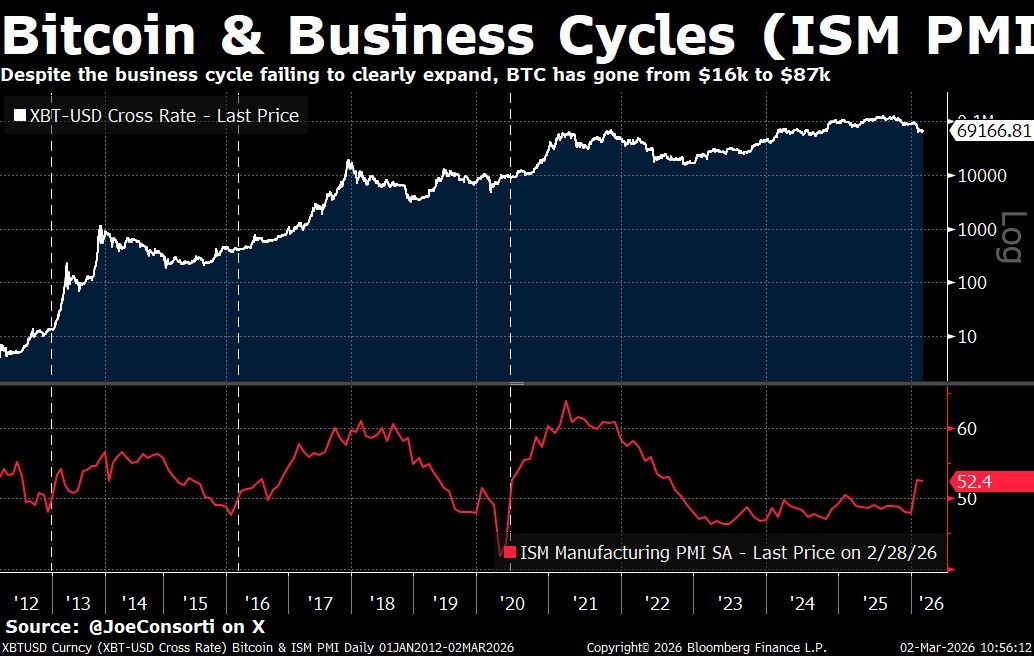

Correlation Between Bitcoin and the ISM Manufacturing PMI

First, the US ISM Manufacturing PMI recorded its second consecutive month of expansion. According to the latest report from the Institute for Supply Management (ISM), the February 2026 PMI reached 52.4%. Although the figure declined slightly from 52.6% in the previous month, it still exceeded market expectations of 51.8%.

This marks the second consecutive reading above 50. It ends a three-year contraction in the US manufacturing sector. The rise in this index suggests an environment in which investors expand their risk appetite. That condition creates room for capital to flow into Bitcoin.

Analyst Joe Consorti highlighted the correlation between this index and Bitcoin’s price in previous cycles. He suggested that the current setup signals a potential trend reversal.

“Historically, this has lined up with the early start of BTC bull markets (excluding 2022),” Joe Consorti commented.

Bitcoin’s Inter-Exchange Flow Pulse (IFP) Signals a Shift in Sentiment

Second, analyst CW believes a “golden cross” is about to appear on Bitcoin’s Inter-Exchange Flow Pulse (IFP) indicator.

CryptoQuant, an on-chain data and analytics platform, explains that IFP measures Bitcoin flows between spot and derivatives exchanges.

This flow data reflects market sentiment. When a large amount of Bitcoin moves to derivatives exchanges, the indicator signals a bullish phase. Traders transfer coins to open long positions in the derivatives market.

In contrast, when Bitcoin flows from derivatives exchanges to spot exchanges, the indicator signals the start of a bearish phase. This situation often occurs when traders close long positions, and large investors reduce their risk exposure.

In the past, this signal preceded strong recoveries from 2023 to 2025. Now, after a year of correction, the golden cross is approaching. If the crossover is confirmed, it would suggest the beginning of a new bullish cycle for Bitcoin.

“The golden cross is imminent in the BTC Inter-exchange Flow Pulse (IFP). After a year of correction, the price is ready to rise again. Everyone, buckle your seat belts,” analyst CW stated.
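The article does not spell out how the crossover on the IFP is defined. One common reading of a "golden cross" on an indicator is the moment the series moves from at or below its own trailing average to above it. The sketch below detects that event on any numeric series; the function names, the window length, and the sample data are all hypothetical and are not taken from CryptoQuant.

```python
def trailing_mean(values, window):
    """Trailing simple average; None until `window` points are available."""
    averages = [None] * len(values)
    running_sum = 0.0
    for i, value in enumerate(values):
        running_sum += value
        if i >= window:
            running_sum -= values[i - window]
        if i >= window - 1:
            averages[i] = running_sum / window
    return averages


def upward_crossovers(values, window):
    """Indices where the series crosses from at-or-below its trailing mean to above it."""
    averages = trailing_mean(values, window)
    crossings = []
    for i in range(1, len(values)):
        if averages[i - 1] is None:
            continue
        was_below = values[i - 1] <= averages[i - 1]
        is_above = values[i] > averages[i]
        if was_below and is_above:
            crossings.append(i)
    return crossings


# Hypothetical indicator readings: a decline followed by a recovery.
sample = [5, 4, 3, 2, 1, 2, 3, 4, 5]
print(upward_crossovers(sample, window=3))
```

On real data a much longer window would be typical, and a single crossing is usually treated as a signal to confirm rather than a forecast in itself.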

Five Consecutive Monthly Red Candles Signal Selling Exhaustion

Third, five consecutive monthly red candles are extremely rare. Bitcoin closed February 2026 with its fifth straight red monthly candle. This marks only the second time in history that such a streak has occurred.

The first instance took place during 2018–2019, when Bitcoin recorded six consecutive red candles. After that period, Bitcoin printed five successive green candles. The price surged more than 300%, rising from around $3,400 to $14,000.
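Both claims above are easy to check mechanically: a streak is a run of monthly candles that close below their open, and the rebound percentage follows from the two prices quoted. In this sketch the function name and the sample candles are hypothetical; only the $3,400 and $14,000 figures come from the article.

```python
def longest_red_streak(candles):
    """Longest run of consecutive candles whose close is below their open.

    `candles` is a sequence of (open, close) pairs in chronological order.
    """
    longest = current = 0
    for open_price, close_price in candles:
        if close_price < open_price:
            current += 1
            longest = max(longest, current)
        else:
            current = 0
    return longest


# Hypothetical monthly (open, close) pairs, for illustration only.
sample_candles = [(100, 110), (110, 105), (105, 98), (98, 90), (90, 95), (95, 92)]
print(longest_red_streak(sample_candles))

# Rebound cited in the article: roughly $3,400 to $14,000.
gain_pct = (14_000 - 3_400) / 3_400 * 100
print(f"Gain: {gain_pct:.0f}%")
```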

Although the historical sample remains small, a longer red streak suggests that selling pressure is nearing exhaustion. A strong reversal can occur once buying demand returns.

“5 or 6 monthly RED candles doesn’t matter now, because the bulk of the drawdown is behind us and all the upside is still in front of us,” analyst Satoshi Flipper stated.

These signals have historically confirmed a multi-month upward trend. A recent report by BeInCrypto also reinforces the scenario that Bitcoin has entered a bottoming phase. However, analysts still see room for a deeper decline.

Analysts at BeInCrypto predict that March will likely depend on whether the $62,300 support level holds or the $79,000 resistance level breaks first.

Tether taps Deloitte for first USAT reserve report

Leading stablecoin issuer Tether has secured a sign-off from Deloitte for the first reserve report tied to its new U.S.-regulated stablecoin, after years of strained relationships with major accounting firms.

Deloitte reviewed a report prepared by Anchorage Digital Bank, which issued the company’s new USAT token. In a letter released Monday, the accounting firm said Anchorage reported $17.6 million in reserve assets backing 17.5 million USAT tokens in circulation. The token’s market cap has, since the report, risen to nearly $20 million as its growth accelerates.

The total market capitalization of the stablecoin sector has, in fact, been growing rapidly. It’s now past $315 billion, according to CoinMarketCap data, with Tether’s USDT making up $183 billion of that. Circle’s USDC comes in second place, at $76 billion.
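The figures in the two paragraphs above imply a reserve coverage ratio for USAT and approximate market shares for the two largest stablecoins. A rough calculation follows, assuming each USAT token is redeemable at $1 and treating the "past $315 billion" sector total as exactly $315 billion, so the shares are approximate.

```python
# USAT figures from the Deloitte letter, in millions.
reserves_musd = 17.6
tokens_in_circulation_m = 17.5

# Assumes a $1 redemption value per token.
coverage = reserves_musd / tokens_in_circulation_m
surplus_musd = reserves_musd - tokens_in_circulation_m

# Sector figures in USD billions; the total is treated as exactly 315.
sector_total_busd = 315
usdt_share = 183 / sector_total_busd * 100
usdc_share = 76 / sector_total_busd * 100

print(f"USAT coverage: {coverage:.2%} (surplus ${surplus_musd:.1f}M)")
print(f"USDT share: {usdt_share:.1f}%")
print(f"USDC share: {usdc_share:.1f}%")
```

A coverage ratio just above 100% indicates the reported reserves slightly exceeded tokens outstanding on the report date, which is the narrow claim an attestation of this kind supports.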

The new USAT token follows the passage of the Genius Act last summer. The law limits the types of assets that can back stablecoins and requires larger issuers to move under federal oversight. USAT is structured to comply with those rules.

Third-party attestations such as this differ from full audits, however. They offer a snapshot of reserves at a specific point in time rather than a deep review of company finances.

Tether has been using the revenue it generates from the assets backing its stablecoins to invest in a wide range of industries. These include a majority stake in Latin American agricultural firm Adecoagro (AGRO), a privacy-focused health app, and a stake in video-sharing platform Rumble (RUM). More recently, it invested $200 million in digital marketplace Whop.

DOJ seeks forfeiture of $327K in USDT linked to romance scam

The United States Attorney’s Office for the District of Massachusetts filed a civil forfeiture action Monday seeking to recover 327,829.72 USDT, allegedly involved in a money laundering scheme connected to an online romance scam.

Summary

- DOJ is seeking to recover approximately $327,829 in USDT linked to a romance fraud and money-laundering scheme.

- Investigators say the stolen funds were routed through intermediary wallets and converted to stablecoin to conceal origin.

- The action underscores continued federal efforts to trace and reclaim crypto assets to return them to defrauded Americans.

Justice Department targets crypto laundering in online romance scam

The complaint, filed in federal court, names the cryptocurrency as defendant property and seeks its forfeiture under federal law as proceeds of fraud and laundering.

According to the complaint, the stolen funds originated from a Massachusetts resident who was targeted in late 2024 on a dating app. The fraudster, identified only by an alias, convinced the victim to send funds for purported cryptocurrency investments that never existed.

Rather than investing the money, the scammers diverted it through a series of cryptocurrency wallets and ultimately converted it to USDT, a common tactic to obfuscate the origin and movement of illicit proceeds.

Several of the wallets in question were seized by law enforcement in August 2025 after blockchain analysis traced connections to the scam.

Under U.S. civil forfeiture law, property traceable to illegal activity may be seized by the government and ultimately returned to victims if the court finds it to be proceeds of crime. The Justice Department’s action allows third parties with a legitimate interest in the property to file claims before any forfeiture is finalized.

Prosecutors said the forfeiture complaint is part of broader efforts to target online frauds, including romance scams, investment schemes, and cyber-enabled financial crime that increasingly leverage cryptocurrency to move and hide funds.

The case highlights both the growing sophistication of crypto-related fraud and law enforcement’s expanding use of blockchain analysis to trace and reclaim stolen digital assets for fraud victims.

Bank of Japan eyes tokenized central bank money in blockchain push

Bank of Japan Governor Ueda Kazuo said the rapid integration of blockchain and artificial intelligence is reshaping the financial system, positioning central banks to play a pivotal role in anchoring trust as crypto-linked infrastructure matures.

Summary

- The BOJ is exploring issuing or connecting central bank money to blockchain networks, including through Project Agorá and domestic sandbox testing.

- Japan’s retail CBDC program remains active, with technical experiments aimed at preparing digital cash as a future “anchor of trust.”

- Ueda warned that fragmented blockchain systems could create systemic risk unless central bank money bridges networks and ensures settlement finality.

Bank of Japan’s Ueda backs blockchain settlements, advances CBDC experiments

Speaking at FIN/SUM 2026 in Tokyo, Ueda described blockchain as moving firmly into its “implementation phase,” with decentralized finance (DeFi), smart contracts and tokenized assets increasingly influencing settlement, payments and cross-border finance.

He emphasized that blockchain’s programmability, particularly atomic transactions that bundle multiple actions into a single execution, could streamline complex processes such as delivery-versus-payment (DvP) and cross-border transfers.
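The idea of an atomic delivery-versus-payment transaction can be illustrated with a small in-memory sketch: the cash leg and the securities leg either both apply or neither does. This is a simplified illustration, not how any central bank or blockchain implements settlement. The names `settle_dvp` and `SettlementError` and the sample balances are hypothetical, and on a real ledger atomicity would be enforced by the transaction execution itself rather than by up-front checks.

```python
class SettlementError(Exception):
    """Raised when either leg of the trade cannot be completed."""


def settle_dvp(cash, securities, buyer, seller, price, quantity):
    """Swap cash for securities so that both legs apply or neither does."""
    # Validate both legs before touching any balance.
    if cash.get(buyer, 0) < price:
        raise SettlementError("buyer has insufficient cash")
    if securities.get(seller, 0) < quantity:
        raise SettlementError("seller has insufficient securities")

    # Payment leg.
    cash[buyer] -= price
    cash[seller] = cash.get(seller, 0) + price
    # Delivery leg.
    securities[seller] -= quantity
    securities[buyer] = securities.get(buyer, 0) + quantity


# Hypothetical balances for illustration.
cash = {"alice": 1_000, "bob": 0}
securities = {"alice": 0, "bob": 10}

settle_dvp(cash, securities, buyer="alice", seller="bob", price=600, quantity=10)
print(cash, securities)
```

Because nothing is modified until both checks pass, a failed trade leaves every balance untouched, which removes the risk that one party delivers while the other fails to pay.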

For crypto markets, the speech revealed two key themes: interoperability and settlement in central bank money.

Ueda warned that a fragmented ecosystem of multiple blockchains and traditional payment rails could create conversion bottlenecks and systemic risks if interoperability is not ensured. He suggested central bank money, potentially in tokenized form, could function as a bridge across networks, preserving the “singleness of money” while enabling innovation.

The BOJ is advancing several initiatives with direct implications for digital assets. Its retail central bank digital currency (CBDC) pilot continues technical testing, while Project Agorá — a joint effort with other central banks and major financial institutions — is exploring tokenized central bank deposits on blockchain networks for cross-border payments.

A separate BOJ sandbox is testing how current account deposits at the central bank could be used to settle transactions conducted on distributed ledgers.

Ueda also highlighted AI’s growing role in analyzing blockchain transaction data for risk management and AML/CFT compliance, signaling closer scrutiny of crypto-linked activity even as innovation expands.

The message to markets was clear: blockchain-based finance is no longer experimental. But its long-term stability, Ueda said, will hinge on central banks embedding trust, liquidity and settlement finality into the next generation of digital infrastructure.
