Tech

Google patches first Chrome zero-day exploited in attacks this year

Google has released emergency updates to fix a high-severity Chrome vulnerability exploited in zero-day attacks, marking the first such security flaw patched since the start of the year.

“Google is aware that an exploit for CVE-2026-2441 exists in the wild,” Google said in a security advisory issued on Friday.

According to the Chromium commit history, this use-after-free vulnerability (reported by security researcher Shaheen Fazim) is due to an iterator invalidation bug in CSSFontFeatureValuesMap, Chrome’s implementation of CSS font feature values. Successful exploitation can allow attackers to trigger browser crashes, rendering issues, data corruption, or other undefined behavior.
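To make the bug class concrete, here is a minimal Python analogy of iterator invalidation. It is not Chromium's code: Chrome is written in C++, where mutating a container during iteration can leave an iterator pointing at freed memory, whereas Python detects the mutation and raises an error. The function names and sample keys below are hypothetical.

```python
def prune_unsafe(feature_values: dict) -> str:
    """Delete entries while iterating the same dict (the buggy pattern)."""
    try:
        for name in feature_values:
            if name.startswith("stale-"):
                del feature_values[name]  # mutates the container mid-iteration
    except RuntimeError as exc:
        # Python notices the size change; C++ containers generally do not.
        return f"caught: {exc}"
    return "no error"


def prune_safe(feature_values: dict) -> None:
    """Iterate over a snapshot of the keys, then mutate the original."""
    for name in list(feature_values):
        if name.startswith("stale-"):
            del feature_values[name]


unsafe_result = prune_unsafe({"stale-swash": 1, "ornaments": 2})
cleaned = {"stale-swash": 1, "ornaments": 2}
prune_safe(cleaned)
print(unsafe_result)
print(cleaned)
```

Iterating over a snapshot is one common way to avoid this class of bug; whether the Chromium patch takes that approach is not stated in the advisory.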

The commit message also notes that the CVE-2026-2441 patch addresses “the immediate problem” but indicates there’s “remaining work” tracked in bug 483936078, suggesting this might be a temporary fix or that related issues still need to be addressed.

The patch was tagged as “cherry-picked” (or backported) across multiple commits, indicating that it was important enough to include in a stable release rather than waiting for the next major version (likely because the vulnerability is being exploited in the wild).

Although Google found evidence of attackers exploiting this zero-day flaw in the wild, it did not share additional details regarding these incidents.

“Access to bug details and links may be kept restricted until a majority of users are updated with a fix. We will also retain restrictions if the bug exists in a third party library that other projects similarly depend on, but haven’t yet fixed,” it noted.

Google has now fixed this vulnerability for users in the Stable Desktop channel, with new versions rolling out to Windows, macOS (145.0.7632.75/76), and Linux users (144.0.7559.75) worldwide over the coming days or weeks.

If you don’t want to update manually, you can also let Chrome check for updates automatically and install them after the next launch.

While this is the first actively exploited Chrome security vulnerability patched since the start of 2026, last year Google addressed a total of eight zero-days abused in the wild, many of them reported by the company’s Threat Analysis Group (TAG), widely known for tracking and identifying zero-days exploited in spyware attacks targeting high-risk individuals.

Tech

What Ring’s ‘Search Party’ actually does, and why its Super Bowl ad gave people the creeps

Reuniting families with their lost pooches, what’s not to like? Well, coordinated neighborhood surveillance, for one.

That sums up the reaction to the Super Bowl ad for Search Party, the AI-powered feature from Amazon’s Ring that mobilizes outdoor cameras across a neighborhood to help find lost dogs.

Search Party raised privacy concerns when it launched last year, focusing in part on the fact that the feature is turned on by default in eligible cameras, requiring users to opt out. (In the Ring app, the Search Party settings are accessible via the Control Center from the menu.) But as with most things, the spotlight during the biggest game of the year took it to a whole new level.

Here’s how it works: When someone reports a lost dog in the Ring app, nearby outdoor Ring cameras with the feature enabled use AI to scan their saved footage for a potential match.

If a camera spots something, the camera’s owner (not the owner of the lost dog) gets a notification. They then decide whether to share the clip with the dog’s owner. Nothing is shared automatically. The search is temporary, expiring after a few hours unless renewed.
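The flow described above can be sketched as a toy model. This is not Ring's implementation or API: every class and function name is hypothetical, and a plain substring check stands in for the AI matching step. It only illustrates the two gates the article describes, that opted-out cameras are skipped and that a clip reaches the dog's owner only after the camera owner shares it.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    owner: str
    search_party_enabled: bool
    saved_clips: list  # toy stand-in: each clip is a short text description


@dataclass
class Match:
    camera_owner: str
    clip: str
    shared: bool = False  # stays False until the camera owner opts in


def run_search(lost_dog: str, cameras: list) -> list:
    """Scan saved clips on opted-in cameras; notify camera owners only."""
    matches = []
    for cam in cameras:
        if not cam.search_party_enabled:
            continue  # opted-out cameras are never scanned
        for clip in cam.saved_clips:
            if lost_dog in clip:  # placeholder for the AI matching step
                matches.append(Match(cam.owner, clip))
    return matches


def visible_to_dog_owner(matches: list) -> list:
    """Only clips the camera owner chose to share reach the dog's owner."""
    return [m.clip for m in matches if m.shared]


cameras = [
    Camera("alice", True, ["brown terrier at gate", "delivery van"]),
    Camera("bob", False, ["brown terrier on lawn"]),
]
matches = run_search("brown terrier", cameras)
before_sharing = visible_to_dog_owner(matches)
matches[0].shared = True  # alice decides to share her clip
after_sharing = visible_to_dog_owner(matches)
```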

That kind of subtlety doesn’t exactly translate to a 30-second Super Bowl spot. But even with a fuller understanding of how it works, some people aren’t buying it. The ad is being tagged as “creepy” and “dystopian,” with critics asking: if Ring’s AI can scan a neighborhood’s cameras for a specific dog, what’s to stop it from doing the same for a specific person?

For the record, Ring says Search Party is not designed to process human biometrics, and that Search Party footage is not included in the company’s Community Requests service, which allows law enforcement to request video for voluntary sharing by Ring users.

In an interview with GeekWire last year, Amazon VP and Ring founder Jamie Siminoff (the guy in the ad) described the Search Party feature as a breakthrough made possible by advances in AI, saying it couldn’t have been built at reasonable cost even two years ago.

Asked how the company was balancing these kinds of benefits against privacy concerns, he said Ring’s approach is to give customers full control. “You don’t balance it,” he said. “You give 100% control to your customers. It’s their data. They control it.”

But for critics, the issue isn’t really about what Search Party does now. It’s about what the underlying technology could be used for down the road.

That concern is amplified by Ring’s own recent moves. The company has also rolled out Familiar Faces, which lets users register images of family and friends so their cameras can identify specific people, but limited to those the camera owner knows.

Ring’s partnership with Flock Safety, the license-plate-recognition company used by thousands of police departments, is another lightning rod, even though Ring says the integration isn’t live yet. The partnership is part of Ring’s Community Requests tool, which lets local law enforcement request footage from nearby Ring users during active investigations. Users can choose to ignore those requests.

The company says it has no partnership with U.S. Immigration and Customs Enforcement and does not share video with the agency. But civil liberties groups, including the ACLU, have raised concerns that once footage reaches local police, there’s no guarantee it stays there, particularly given reports that some Flock-connected departments have performed lookups for ICE.

Siminoff, who returned to lead Ring last year after a hiatus, has been open about re-embracing the company’s original mission of making neighborhoods safer, including reinstating partnerships with law enforcement that had been scaled back during his absence.

In the GeekWire interview, he acknowledged that not everyone inside the company was on board with the shift, but said he’s “very convicted on the impact that we can have with Ring,” and on a much faster timeline than he might have thought in the past, due to AI.

Amazon says Search Party has reunited more than one lost dog a day with their families since launch. It’s committing $1 million to equip animal shelters with Ring cameras. But the bigger question fueling the backlash is whether finding lost puppies today is building the infrastructure for something less cute and cuddly in the future.

Tech

Where’s The Evidence That AI Increases Productivity?

IT productivity researcher Erik Brynjolfsson writes in the Financial Times that he’s finally found evidence AI is impacting America’s economy. This week America’s Bureau of Labor Statistics showed a 403,000 drop in 2025’s payroll growth — while real GDP “remained robust, including a 3.7% growth rate in the fourth quarter.”

This decoupling — maintaining high output with significantly lower labour input — is the hallmark of productivity growth. My own updated analysis suggests a US productivity increase of roughly 2.7% for 2025. This is a near doubling from the sluggish 1.4% annual average that characterised the past decade… The updated 2025 US data suggests we are now transitioning out of this investment phase into a harvest phase where those earlier efforts begin to manifest as measurable output.

Micro-level evidence further supports this structural shift. In our work on the employment effects of AI last year, Bharat Chandar, Ruyu Chen and I identified a cooling in entry-level hiring within AI-exposed sectors, where recruitment for junior roles declined by roughly 16% while those who used AI to augment skills saw growing employment. This suggests companies are beginning to use AI for some codified, entry-level tasks.

Or, AI “isn’t really stealing jobs yet,” according to employment policy analyst Will Raderman of the Niskanen Center, an American think tank. He argues in Barron’s that “there is no clear link yet between higher AI use and worse outcomes for young workers.”

Recent graduates’ unemployment rates have been drifting in the wrong direction since the 2010s, long before generative AI models hit the market. And many occupations with moderate to high exposure to AI disruptions are actually faring better over the past few years. According to recent data for young workers, there has been employment growth in roles typically filled by those with college degrees related to computer systems, accounting and auditing, and market research. AI-intensive sectors like finance and insurance have also seen rising employment of new graduates in recent years. Since ChatGPT’s release, sectors in which more than 10% of firms report using AI and sectors in which fewer than 10% report using AI are hiring relatively the same number of recent grads.

Even Brynjolfsson’s article in the Financial Times concedes that “While the trends are suggestive, a degree of caution is warranted. Productivity metrics are famously volatile, and it will take several more periods of sustained growth to confirm a new long-term trend.” And he’s not the only one wanting evidence for AI’s impact. The same weekend Fortune wrote that growth from AI “has yet to manifest itself clearly in macro data, according to Apollo Chief Economist Torsten Slok.”

[D]ata on employment, productivity and inflation are still not showing signs of the new technology. Profit margins and earnings forecasts for S&P 500 companies outside of the “Magnificent 7” also lack evidence of AI at work… “After three years with ChatGPT and still no signs of AI in the incoming data, it looks like AI will likely be labor enhancing in some sectors rather than labor replacing in all sectors,” Slok said.

Tech

Here’s How Belkin’s New ConnectAir Wireless HDMI Display Adapter Works

Fresh off its CES 2026 debut, Belkin’s new ConnectAir Wireless HDMI Display Adapter has officially hit shelves. This dongle might look like a discontinued Chromecast, but it’s not a streaming device, though it can make streaming easier. It’s designed to let you mirror a screen from a USB-C device to an HDMI display without HDMI cables or wireless casting apps. Sold as model AVC024, it works without requiring you to download software, connect over Wi-Fi, or pair with Bluetooth. Handy!

The device is made up of two main parts: an HDMI receiver that connects to a display and a USB-C transmitter that plugs into a compatible laptop, tablet, smartphone, or other USB-C-compatible device. Turn it on, and the devices establish a direct 5GHz wireless link to each other — no public networks required. Its $149.99 price point tells you how innovative the ConnectAir really is. (A proper HDMI cable won’t cost you nearly as much, unless you’re grabbing one of those expensive AudioQuest Dragons or something.)

What are the technical limits of the Belkin ConnectAir?

Belkin says the adapter supports 1080p resolution at 60Hz with latency typically under 80 milliseconds, which still means smooth video playback even if it’s not in 4K. (In fact, because the adapter supports HDCP 1.4, some 4K content requiring HDCP 2.2 might not play at all.) It has a wireless range of up to 131 feet in open environments, but you might experience a much shorter range in an office or other space where the signal has to pass through walls.

As far as compatibility is concerned, the ConnectAir adapter works with any USB-C device that supports DisplayPort Alt Mode. Examples include Windows laptops, macOS and ChromeOS devices, tablets like the iPad Pro or iPad Air, and smartphones with Samsung DeX. That said, the USB-C adapter isn’t compatible with iPhones or other Lightning-based devices. It can work with docking stations, as long as the dock’s USB-C port supports DisplayPort Alt Mode.

A single HDMI receiver can pair with up to eight transmitters, though only one device can stream at a time. You can also switch between mirrored and extended display modes, depending on your device’s capabilities. The receiver could technically be powered through a USB port on a television if it provides at least 2.3 watts. Otherwise, you’ll need a separate power adapter (not included).
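As a rough check on the 2.3-watt figure, the sketch below compares it with the power a standard USB port supplies at 5 volts. The current limits are the USB specification values for standard ports (100 mA before a device is configured, 500 mA for USB 2.0, 900 mA for USB 3.x). Whether a given TV's port actually meets them is an assumption, and the names in the code are illustrative.

```python
REQUIRED_MW = 2300  # 2.3 W, the minimum the article cites for the receiver
BUS_VOLTS = 5       # nominal USB bus voltage

# Maximum current per port type, in milliamps (USB specification limits).
PORT_LIMITS_MA = {
    "USB 2.0 (unconfigured)": 100,
    "USB 2.0": 500,
    "USB 3.x": 900,
}


def port_budget_mw(max_ma: int) -> int:
    """Power available from a port in milliwatts: volts times milliamps."""
    return BUS_VOLTS * max_ma


can_power = {
    name: port_budget_mw(ma) >= REQUIRED_MW
    for name, ma in PORT_LIMITS_MA.items()
}
for name, ok in can_power.items():
    print(f"{name}: {port_budget_mw(PORT_LIMITS_MA[name])} mW, enough: {ok}")
```

On these numbers a spec-compliant USB 2.0 port (2.5 W) clears the threshold with little headroom, which is consistent with the article's advice to keep a separate power adapter as a fallback.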

Tech

This new aluminum-based EV battery could solve cold-weather range and charging issues

Researchers from China’s Dalian Institute of Chemical Physics recently unveiled a new EV battery technology aimed at delivering stronger performance in freezing conditions than traditional lithium-ion packs. The so-called “liquid-solid” battery is claimed to retain more than 85% of its capacity after operating for eight hours at -34°C (-29°F), with early tests conducted using industrial-grade drones showing promising results.

While this liquid-solid technology has yet to be tested in an electric vehicle, a team from the Chinese Academy of Sciences (CAS) has taken a similar step and tested a different EV battery technology designed for sub-zero temperatures using a production vehicle from automaker Geely. According to CarNewsChina, the researchers have successfully tested an aluminum-based wide-temperature lithium-ion battery in a Geely EX5 EV, claiming it can achieve over 92% discharge efficiency at -25°C (-13°F) and charge to 90% in around 20 minutes under extreme cold conditions.

Cold weather has long been a weak spot for regular lithium-ion batteries. In many EVs, including models from Tesla, low temperatures can reduce range and slow charging speeds unless the battery is carefully preconditioned. Even with advanced thermal management systems, performance typically drops as temperatures fall well below freezing.

The new aluminum-anode design is said to address this issue by widening the battery’s operating temperature range. In testing, it reportedly remained functional across a broad temperature window and managed heat effectively during fast charging without requiring complex insulation setups. If these results translate to large-scale production, it could mean less winter range anxiety and faster charging for drivers in cold regions.

Cold climates may no longer slow down EVs

Chinese battery giants like BYD and CATL have already been racing to push ultra-fast charging technology, with claims of adding hundreds of kilometers of range in just minutes under ideal conditions. However, maintaining that performance in below-freezing temperatures remains a challenge.

If commercialized, this aluminum-based battery could offer a meaningful edge by combining fast charging with strong cold-weather efficiency. It is still early days, and further validation will be needed before the new battery tech appears in mainstream EVs. But the development shows just how quickly battery innovation is moving, especially as automakers compete to close the gap between electric and ICE vehicles in all climates.

Tech

GMC Hummer EV Makes Supercars Look Slow in Drag Racing Showdown

Lots of people cruise past brand new GMC Hummer EVs every single day without giving them a second glance. This massive electric pickup fits in with traffic; its boxy design and silent operation barely raise an eyebrow on the street. That is, until you line it up against a slew of exotic, high-end supercars, at which point everyone turns around and whips out their smartphones.

GMC recently released a video demonstrating this in action. Its 2026 Hummer EV Carbon Fiber Edition, which produces 1,160 horsepower and instant torque from its electric motors, competed against five highly respected performers: a Ferrari F8 Tributo, a Ford GT, a Porsche Taycan Turbo S, a Dodge Challenger SRT Hellcat Redeye, and an Aston Martin DBS Superleggera. Each run was a 0 to 60 mph sprint from a standing start, but what makes this even more interesting is that the Hummer served as both a competitor and a camera platform.

The supercars were driven by professionals who understood their vehicles inside and out, but the Hummer managed to pull ahead and leave them in the dust on all 15 runs. Afterward, the drivers were still talking about how bizarre it was to watch a full-size pickup pass them so easily. The truck’s ‘Watts to Freedom’ mode lowers the ride height and sends full power to all four wheels, letting it accelerate from 0 to 60 in as little as 2.8 seconds under optimum conditions, and that launch advantage proved too much for the supercars to handle.

With its twin-turbo V8 and precision handling, the Ferrari F8 is always a strong challenger in straight-line races against heavier rivals. The Ford GT features race-bred aerodynamics and a powerful twin-turbo V6 engine. The Taycan is another EV that, like the Hummer, delivers instant torque and strong performance. The Hellcat depends on old-school supercharged muscle, while the Aston Martin adds grand touring finesse, but none of them could match the Hummer’s initial surge.

The Hummer EV’s ability to leave the supercars in the dust in a straight line, even though these cars cost twice as much as a Hummer EV, may be attributed to one factor: the way electric motors deliver their power. There’s no buildup or fanfare, just pure, unadulterated energy that transforms this heavyweight into a sprinter, reaching 60 mph in a matter of seconds. In that small period, the Hummer EV entirely redefines the term ‘quick’.

Tech

Will Tech Giants Just Use AI Interactions to Create More Effective Ads?

Google never asked its users before adding AI Overviews to its search results and AI-generated email summaries to Gmail, notes the New York Times. And Meta didn’t ask before making “Meta AI” an unremovable part of Instagram, WhatsApp and Messenger.

“The insistence on AI everywhere — with little or no option to turn it off — raises an important question about what’s in it for the internet companies…”

Behind the scenes, the companies are laying the groundwork for a digital advertising economy that could drive the future of the internet. The underlying technology that enables chatbots to write essays and generate pictures for consumers is being used by advertisers to find people to target and automatically tailor ads and discounts to them….

Last month, OpenAI said it would begin showing ads in the free version of ChatGPT based on what people were asking the chatbot and what they had looked for in the past. In response, a Google executive mocked OpenAI, adding that Google had no plans to show ads inside its Gemini chatbot. What he didn’t mention, however, was that Google, whose profits are largely derived from online ads, shows advertising on Google.com based on user interactions with the AI chatbot built into its search engine.

For the past six years, as regulators have cracked down on data privacy, the tech giants and online ad industry have moved away from tracking people’s activities across mobile apps and websites to determine what ads to show them. Companies including Meta and Google had to come up with methods to target people with relevant ads without sharing users’ personal data with third-party marketers. When ChatGPT and other AI chatbots emerged about four years ago, the companies saw an opportunity: The conversational interface of a chatty companion encouraged users to voluntarily share data about themselves, such as their hobbies, health conditions and products they were shopping for.

The strategy already appears to be working. Web search queries are up industrywide, including for Google and Bing, which have been incorporating AI chatbots into their search tools. That’s in large part because people prod chatbot-powered search engines with more questions and follow-up requests, revealing their intentions and interests much more explicitly than when they typed a few keywords for a traditional internet search.

Tech

AI startup founded by ex-Accolade leaders lands $8.5M from Seattle VCs to rethink contact center operations

Scala, a Bellevue-based AI startup founded by Smartsheet CEO Rajeev Singh and former Accolade executive Ardie Sameti, raised $8.5 million in a seed round co-led by prominent Seattle-area venture firms Madrona and FUSE.

GeekWire first reported on Scala last year while it was still in stealth. Now the company is revealing more details about its “operational intelligence platform” for contact centers — the massive customer service operations that companies across healthcare, travel, and financial services rely on to handle millions of interactions.

Sameti, Scala’s CEO, said companies spend heavily on customer experience tools but often can’t connect them into a full picture of what’s happening across their specific workflows and systems.

Scala is trying to fill that gap. Instead of focusing on a single use case, the company positions itself as an intelligence layer that sits across a contact center’s existing technology stack. Scala’s platform consists of three main components:

- Pulse, a proprietary reasoning engine that pulls in data from across a company’s systems — CRM, knowledge bases, other internal records — and surfaces insights and operational hotspots.

- Agent Canvas lets operators design and deploy AI agents for both customer-facing and internal workflows.

- Pulse Assist acts as what Sameti calls an “AI ops sidekick” — essentially a ChatGPT for contact center operators that can help plan, execute tasks, and support decision-making.

Sameti spent a decade at healthcare software company Accolade, where he led AI and platform efforts supporting member interactions. That’s where he learned about problems facing customer experience leaders.

“It wasn’t these operators that were failing,” he said. “It was these systems and tools around them.”

Scala has customers across healthcare, travel, and financial services. Sameti declined to share specific names or numbers.

Asked about competition from well-funded AI customer experience startups like Sierra, Sameti said Scala differentiates by stretching across an entire operation rather than focusing on “narrow point solutions.”

Sameti used a restaurant analogy: many competitors are trying to automate the host at the front door. Scala wants to understand everything from the host to the server to the kitchen to the bartender, and coordinate across all of it.

Sameti also described AI agents as a commodity. “They’re not a moat,” he said. Instead, the moat is deep domain expertise. Investors share the same sentiment.

“They’ve spent years inside complex service organizations, and that perspective shows up clearly in how Scala is being built — a holistic service solution spanning all aspects of the CX journey,” Kellan Carter, general partner at FUSE, said in a statement.

Scala recently moved into its first permanent office in downtown Bellevue. The company has nearly 20 employees.

Singh, who was named Smartsheet’s CEO in November, is co-founder and executive chair of Scala. He previously co-founded Concur Technologies, which SAP acquired for $8.3 billion, and was CEO at Accolade, leading the company through its IPO. Mike Hilton, former chief product officer at Accolade, is also an investor in Scala.

Tech

Fractal Analytics’ muted IPO debut signals persistent AI fears in India

As India’s first AI company to IPO, Fractal Analytics didn’t have a stellar first day on the public markets, as enthusiasm for the technology collided with jittery investors recovering from a major sell-off in Indian software stocks.

Fractal listed at ₹876 per share on Monday, below its issue price of ₹900, and then slid further in afternoon trading. The stock closed at ₹873.70, down 7% from its issue price, lending the company a market capitalization of about ₹148.1 billion (around $1.6 billion).

That price tag marks a step down from Fractal’s recent private-market highs. In July 2025, the company raised about $170 million in a secondary sale, at a valuation of $2.4 billion. It first crossed the $1 billion mark in January 2022 after raising $360 million from TPG, becoming India’s first AI unicorn.

Fractal’s IPO comes as India seeks to position itself as a key market and development hub for AI in a bid to attract investment amid increasing attention from some of the world’s most prominent AI companies. Firms such as OpenAI and Anthropic have been engaging more with the country’s government, enterprises, and developer ecosystem as they seek to tap the country’s scale, talent base, and growing appetite for AI tools and technology.

That push is on display this week in New Delhi, where India is hosting the AI Impact Summit, bringing together global technology leaders, policymakers and executives.

Fractal’s subdued debut followed a sharp recalibration of its IPO. In early February, the company decided to price the offering conservatively after its bankers advised it to, cutting the IPO size by more than 40% to ₹28.34 billion (about $312.5 million), from the original amount of ₹49 billion ($540.3 million).

Founded in 2000, Fractal sells AI and data analytics software to large enterprises across financial services, retail and healthcare, and generates the bulk of its revenue from overseas markets, including the U.S. The company pivoted toward AI in 2022 after operating as a traditional data analytics firm for over 20 years.

Fractal touted a steadily growing business in its IPO filing, with revenue from operations rising 26% to ₹27.65 billion (around $304.8 million) in the year ended March 2025 compared to a year earlier. It also swung to a net profit of ₹2.21 billion ($24.3 million) from a loss of ₹547 million ($6 million) the previous year.

The company plans to use the IPO proceeds to repay borrowings at its U.S. subsidiary, invest in R&D, sales and marketing under its Fractal Alpha unit, expand office infrastructure in India, and pursue potential acquisitions.

Tech

Infidex 176 V Camera Delivers Panoramic Film in a 3D Printable Package

Photo credit: Jace LeRoy

Denis Aminev, a Russian photographer, has spent years attempting to recreate the look of those magical film days that digital photography couldn’t quite replicate. It all started with movies shot on film, and how the stretched aspect ratio immediately draws your attention to them. Standard lenses and anamorphic adapters fell short, so he turned to something more direct: building his own camera from scratch.

Aminev began experimenting with pinhole cameras in early 2024, hoping to capture even just a respectable image. Not only was he able to capture that shot, but he also demonstrated how amazing the outcome could be with the correct instruments. Emboldened by his accomplishment, he set out to create a completely functional prototype camera by sifting through old cameras and replacing the parts with printed ones. It took him a few months to get the initial version operating, but once he did, things took off from there. By August 2024, he had versions 4 and 5 of the camera, which had all of the details locked in.

All of these modifications led him to build the Infidex 176 V, a name that refers to the camera’s infinite focus and double exposure capacity. It’s also quite neat because it’s designed to be manufactured using a standard FDM printer and some PLA or similar material, so no fancy equipment is required. After you’ve printed all of the components, all you have to do is glue them together, add a few clamps and some hardware such as brass inserts, wire springs, and hinges, and you’re ready to go.

Loading the film is simple because 35mm film is still available at most camera stores. Each roll contains 36 exposures, or approximately 19 of those super-wide panoramic shots (72 by 24mm). That’s a really extreme aspect ratio, around 2.7:1 or even 3:1. The lenses are quite easy to replace, and most people simply use an 80mm f/2.8 from a Mamiya C330, but he has used various lenses in prior iterations, such as Lomo Lubitel components. To focus, simply turn the lens, which has a typical helicoid mount that lets you focus anywhere from infinity to up close.
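The frame count and aspect ratio above follow from simple arithmetic, sketched here. The 38mm frame pitch is the standard spacing for 35mm still frames (eight perforations at 4.75mm each); ignoring the film leader and any gap between panoramic frames is a simplifying assumption, so a real roll may yield slightly fewer shots. The variable names are illustrative.

```python
STANDARD_EXPOSURES = 36    # frames on a typical roll
STANDARD_PITCH_MM = 38     # 36 mm image plus gap: 8 perforations x 4.75 mm
PANO_WIDTH_MM = 72         # panoramic frame width from the article
PANO_HEIGHT_MM = 24        # panoramic frame height from the article

# Usable film length if every standard frame position is available.
usable_length_mm = STANDARD_EXPOSURES * STANDARD_PITCH_MM

# Assumption: panoramic frames are packed back to back with no gap.
pano_frames = usable_length_mm // PANO_WIDTH_MM

aspect_ratio = PANO_WIDTH_MM / PANO_HEIGHT_MM

print(f"usable length: {usable_length_mm} mm")
print(f"panoramic frames per roll: {pano_frames}")
print(f"aspect ratio: {aspect_ratio}:1")
```

With these inputs the roll works out to 19 panoramic frames at exactly 3:1, matching the article's "approximately 19".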

As you might expect, following the instructions is simple: double-check the dimensions to ensure they’re correct, then sand or file each piece to smooth out any bumps from the supports, and that’s it. Aminev has also come up with some clever light trap solutions to prevent stray light from interfering with the illusion. Even with bad film, the visuals appear amazingly sharp.

Jace LeRoy, a photographer who knew Aminev online, was among the first to discover the project and one of the first to actually build one of the cameras. His initial reaction was “Yeah, right,” but he was pleasantly surprised when the test film came out clear as a bell. He tested it with a range of films, including everyday stocks like Kodak Gold 200, Portra 160, CineStill 800T, and Kentmere 400, and the results were consistent each time. He noted that some lenses may produce vignetting, but said the overall image quality is well worth the trade-off.

Tech

‘I Tried Running Linux On an Apple Silicon Mac and Regretted It’

Installing Linux on a MacBook Air “turned out to be a very underwhelming experience,” according to the tech news site MakeUseOf:

The thing about Apple silicon Macs is that it’s not as simple as downloading an AArch64 ISO of your favorite distro and installing it. Yes, the M-series chips are ARM-based, but that doesn’t automatically make the whole system compatible in the same way most traditional x86 PCs are. Pretty much everything in modern MacBooks is custom. The boot process isn’t standard UEFI like on most PCs. Apple has its own boot chain called iBoot. The same goes for other things, like the GPU, power management, USB controllers, and pretty much every other hardware component. It is as proprietary as it gets.

This is exactly what the team behind Asahi Linux has been working toward. Their entire goal has been to make Linux properly usable on M-series Macs by building the missing pieces from the ground up. I first tried it back in 2023, when the project was still tied to Arch Linux and decided to give it a try again in 2026. These days, though, the main release is called Fedora Asahi Remix, which, as the name suggests, is built on Fedora rather than Arch…

For Linux on Apple Silicon, the article lists three major disappointments:

- “External monitors don’t work unless your MacBook has a built-in HDMI port.”

- “Linux just doesn’t feel fully ready for ARM yet. A lot of applications still aren’t compiled for ARM, so software support ends up being very hit or miss.” (And even most of the apps tested with FEX “either didn’t run properly or weren’t stable enough to rely on.”)

- Asahi “refused to connect to my phone’s hotspot,” they write (adding “No, it wasn’t an iPhone”).

-

Sports5 days ago

Sports5 days agoBig Tech enters cricket ecosystem as ICC partners Google ahead of T20 WC | T20 World Cup 2026

-

NewsBeat7 days ago

NewsBeat7 days agoMia Brookes misses out on Winter Olympics medal in snowboard big air

-

Tech5 days ago

Tech5 days agoSpaceX’s mighty Starship rocket enters final testing for 12th flight

-

Crypto World6 days ago

Crypto World6 days agoU.S. BTC ETFs register back-to-back inflows for first time in a month

-

Tech1 day ago

Tech1 day agoLuxman Enters Its Second Century with the D-100 SACD Player and L-100 Integrated Amplifier

-

Video3 days ago

Video3 days agoThe Final Warning: XRP Is Entering The Chaos Zone

-

Crypto World6 days ago

Crypto World6 days agoBlockchain.com wins UK registration nearly four years after abandoning FCA process

-

Crypto World2 days ago

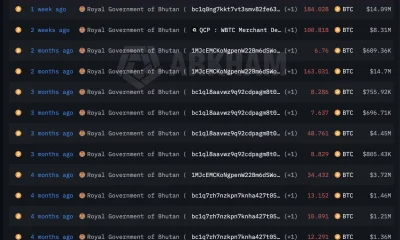

Crypto World2 days agoBhutan’s Bitcoin sales enter third straight week with $6.7M BTC offload

-

Crypto World5 days ago

Crypto World5 days agoPippin (PIPPIN) Enters Crypto’s Top 100 Club After Soaring 30% in a Day: More Room for Growth?

-

Sports7 days ago

Kirk Cousins Officially Enters the Vikings’ Offseason Puzzle

-

Video4 days ago

Video4 days agoPrepare: We Are Entering Phase 3 Of The Investing Cycle

-

Crypto World6 days ago

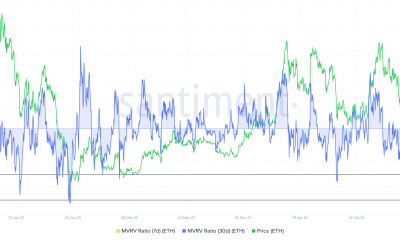

Crypto World6 days agoEthereum Enters Capitulation Zone as MVRV Turns Negative: Bottom Near?

-

NewsBeat21 hours ago

NewsBeat21 hours agoThe strange Cambridgeshire cemetery that forbade church rectors from entering

-

Crypto World5 days ago

Crypto World5 days agoCrypto Speculation Era Ending As Institutions Enter Market

-

Business4 days ago

Business4 days agoBarbeques Galore Enters Voluntary Administration

-

Crypto World4 days ago

Crypto World4 days agoEthereum Price Struggles Below $2,000 Despite Entering Buy Zone

-

Politics6 days ago

Politics6 days agoWhy was a dog-humping paedo treated like a saint?

-

NewsBeat23 hours ago

NewsBeat23 hours agoMan dies after entering floodwater during police pursuit

-

Crypto World3 days ago

Crypto World3 days agoBlackRock Enters DeFi Via UniSwap, Bitcoin Stages Modest Recovery

-

NewsBeat2 days ago

NewsBeat2 days agoUK construction company enters administration, records show