Tech

OpenAI deploys Cerebras chips for ‘near-instant’ code generation in first major move beyond Nvidia

OpenAI on Thursday launched GPT-5.3-Codex-Spark, a stripped-down coding model engineered for near-instantaneous response times, marking the company’s first significant inference partnership outside its traditional Nvidia-dominated infrastructure. The model runs on hardware from Cerebras Systems, a Sunnyvale-based chipmaker whose wafer-scale processors specialize in low-latency AI workloads.

The partnership arrives at a pivotal moment for OpenAI. The company finds itself navigating a frayed relationship with longtime chip supplier Nvidia, mounting criticism over its decision to introduce advertisements into ChatGPT, a newly announced Pentagon contract, and internal organizational upheaval that has seen a safety-focused team disbanded and at least one researcher resign in protest.

“GPUs remain foundational across our training and inference pipelines and deliver the most cost effective tokens for broad usage,” an OpenAI spokesperson told VentureBeat. “Cerebras complements that foundation by excelling at workflows that demand extremely low latency, tightening the end-to-end loop so use cases such as real-time coding in Codex feel more responsive as you iterate.”

The careful framing — emphasizing that GPUs “remain foundational” while positioning Cerebras as a “complement” — underscores the delicate balance OpenAI must strike as it diversifies its chip suppliers without alienating Nvidia, the dominant force in AI accelerators.

Speed gains come with capability tradeoffs that OpenAI says developers will accept

Codex-Spark represents OpenAI’s first model purpose-built for real-time coding collaboration. The company claims the model delivers more than 1000 tokens per second when served on ultra-low latency hardware, though it declined to provide specific latency metrics such as time-to-first-token figures.

“We aren’t able to share specific latency numbers, however Codex-Spark is optimized to feel near-instant — delivering more than 1000 tokens per second while remaining highly capable for real-world coding tasks,” the OpenAI spokesperson said.

The speed gains come with acknowledged capability tradeoffs. On SWE-Bench Pro and Terminal-Bench 2.0 — two industry benchmarks that evaluate AI systems’ ability to perform complex software engineering tasks autonomously — Codex-Spark underperforms the full GPT-5.3-Codex model. OpenAI positions this as an acceptable exchange: developers get responses fast enough to maintain creative flow, even if the underlying model cannot tackle the most sophisticated multi-step programming challenges.

The model launches with a 128,000-token context window and supports text only — no image or multimodal inputs. OpenAI has made it available as a research preview to ChatGPT Pro subscribers through the Codex app, command-line interface, and Visual Studio Code extension. A small group of enterprise partners will receive API access to evaluate integration possibilities.

“We are making Codex-Spark available in the API for a small set of design partners to understand how developers want to integrate Codex-Spark into their products,” the spokesperson explained. “We’ll expand access over the coming weeks as we continue tuning our integration under real workloads.”

Cerebras hardware eliminates bottlenecks that plague traditional GPU clusters

The technical architecture behind Codex-Spark tells a story about inference economics that increasingly matters as AI companies scale consumer-facing products. Cerebras’s Wafer Scale Engine 3 — a single chip roughly the size of a dinner plate containing 4 trillion transistors — eliminates much of the communication overhead that occurs when AI workloads spread across clusters of smaller processors.

For training massive models, that distributed approach remains necessary and Nvidia’s GPUs excel at it. But for inference — the process of generating responses to user queries — Cerebras argues its architecture can deliver results with dramatically lower latency. Sean Lie, Cerebras’s CTO and co-founder, framed the partnership as an opportunity to reshape how developers interact with AI systems.

“What excites us most about GPT-5.3-Codex-Spark is partnering with OpenAI and the developer community to discover what fast inference makes possible — new interaction patterns, new use cases, and a fundamentally different model experience,” Lie said in a statement. “This preview is just the beginning.”

OpenAI’s infrastructure team did not limit its optimization work to the Cerebras hardware. The company announced latency improvements across its entire inference stack that benefit all Codex models regardless of underlying hardware, including persistent WebSocket connections and optimizations within the Responses API. The results: an 80 percent reduction in overhead per client-server round trip, a 30 percent reduction in per-token overhead, and a 50 percent reduction in time-to-first-token.
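To get a feel for how those three percentages combine, here is a rough sketch in Python. Only the reduction figures come from OpenAI's announcement; the `LatencyBudget` class, the additive latency model, and any baseline numbers fed into it are hypothetical placeholders, not OpenAI measurements.

```python
from dataclasses import dataclass

# Reductions reported by OpenAI, as the fraction of each component removed.
ROUND_TRIP_REDUCTION = 0.80
PER_TOKEN_REDUCTION = 0.30
TTFT_REDUCTION = 0.50


@dataclass
class LatencyBudget:
    """Hypothetical latency components, all in milliseconds."""
    round_trip_overhead_ms: float
    per_token_overhead_ms: float
    time_to_first_token_ms: float

    def total_ms(self, round_trips: int, tokens: int) -> float:
        # Simplified additive model (an assumption): first-token wait,
        # plus fixed overhead per round trip, plus overhead per token.
        return (
            self.time_to_first_token_ms
            + round_trips * self.round_trip_overhead_ms
            + tokens * self.per_token_overhead_ms
        )


def apply_reductions(before: LatencyBudget) -> LatencyBudget:
    """Scale each component by the reduction reported for it."""
    return LatencyBudget(
        round_trip_overhead_ms=before.round_trip_overhead_ms * (1 - ROUND_TRIP_REDUCTION),
        per_token_overhead_ms=before.per_token_overhead_ms * (1 - PER_TOKEN_REDUCTION),
        time_to_first_token_ms=before.time_to_first_token_ms * (1 - TTFT_REDUCTION),
    )


if __name__ == "__main__":
    # Made-up baseline purely for illustration.
    baseline = LatencyBudget(100.0, 1.0, 400.0)
    improved = apply_reductions(baseline)
    print(baseline.total_ms(round_trips=3, tokens=200))
    print(improved.total_ms(round_trips=3, tokens=200))
```

The takeaway from a model like this is that the overall speedup depends on the mix: a session with many short round trips benefits most from the 80 percent figure, while a single long generation is dominated by the smaller per-token reduction.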

A $100 billion Nvidia megadeal has quietly fallen apart behind the scenes

The Cerebras partnership takes on additional significance given the increasingly complicated relationship between OpenAI and Nvidia. Last fall, when OpenAI announced its Stargate infrastructure initiative, Nvidia publicly committed to investing $100 billion to support OpenAI as it built out AI infrastructure. The announcement appeared to cement a strategic alliance between the world’s most valuable AI company and its dominant chip supplier.

Five months later, that megadeal has effectively stalled, according to multiple reports. Nvidia CEO Jensen Huang has publicly denied tensions, telling reporters in late January that there is “no drama” and that Nvidia remains committed to participating in OpenAI’s current funding round. But the relationship has cooled considerably, with friction stemming from multiple sources.

OpenAI has aggressively pursued partnerships with alternative chip suppliers, including the Cerebras deal and separate agreements with AMD and Broadcom. From Nvidia’s perspective, OpenAI may be using its influence to commoditize the very hardware that made its AI breakthroughs possible. From OpenAI’s perspective, reducing dependence on a single supplier represents prudent business strategy.

“We will continue working with the ecosystem on evaluating the most price-performant chips across all use cases on an ongoing basis,” OpenAI’s spokesperson told VentureBeat. “GPUs remain our priority for cost-sensitive and throughput-first use cases across research and inference.” The statement reads as a careful effort to avoid antagonizing Nvidia while preserving flexibility — and reflects a broader reality that training frontier AI models still requires exactly the kind of massive parallel processing that Nvidia GPUs provide.

Disbanded safety teams and researcher departures raise questions about OpenAI’s priorities

The Codex-Spark launch comes as OpenAI navigates a series of internal challenges that have intensified scrutiny of the company’s direction and values. Earlier this week, reports emerged that OpenAI disbanded its mission alignment team, a group established in September 2024 to promote the company’s stated goal of ensuring artificial general intelligence benefits humanity. The team’s seven members have been reassigned to other roles, with leader Joshua Achiam given a new title as OpenAI’s “chief futurist.”

OpenAI previously disbanded another safety-focused group, the superalignment team, in 2024. That team had concentrated on long-term existential risks from AI. The pattern of dissolving safety-oriented teams has drawn criticism from researchers who argue that OpenAI’s commercial pressures are overwhelming its original non-profit mission.

The company also faces fallout from its decision to introduce advertisements into ChatGPT. Researcher Zoë Hitzig resigned this week over what she described as the “slippery slope” of ad-supported AI, warning in a New York Times essay that ChatGPT’s archive of intimate user conversations creates unprecedented opportunities for manipulation. Anthropic seized on the controversy with a Super Bowl advertising campaign featuring the tagline: “Ads are coming to AI. But not to Claude.”

Separately, the company agreed to provide ChatGPT to the Pentagon through Genai.mil, a new Department of Defense program that requires OpenAI to permit “all lawful uses” without company-imposed restrictions — terms that Anthropic reportedly rejected. And reports emerged that Ryan Beiermeister, OpenAI’s vice president of product policy who had expressed concerns about a planned explicit content feature, was terminated in January following a discrimination allegation she denies.

OpenAI envisions AI coding assistants that juggle quick edits and complex autonomous tasks

Despite the surrounding turbulence, OpenAI’s technical roadmap for Codex suggests ambitious plans. The company envisions a coding assistant that seamlessly blends rapid-fire interactive editing with longer-running autonomous tasks — an AI that handles quick fixes while simultaneously orchestrating multiple agents working on more complex problems in the background.

“Over time, the modes will blend — Codex can keep you in a tight interactive loop while delegating longer-running work to sub-agents in the background, or fanning out tasks to many models in parallel when you want breadth and speed, so you don’t have to choose a single mode up front,” the OpenAI spokesperson told VentureBeat.

This vision would require not just faster inference but sophisticated task decomposition and coordination across models of varying sizes and capabilities. Codex-Spark establishes the low-latency foundation for the interactive portion of that experience; future releases will need to deliver the autonomous reasoning and multi-agent coordination that would make the full vision possible.

For now, Codex-Spark operates under separate rate limits from other OpenAI models, reflecting constrained Cerebras infrastructure capacity during the research preview. “Because it runs on specialized low-latency hardware, usage is governed by a separate rate limit that may adjust based on demand during the research preview,” the spokesperson noted. The limits are designed to be “generous,” with OpenAI monitoring usage patterns as it determines how to scale.

The real test is whether faster responses translate into better software

The Codex-Spark announcement arrives amid intense competition for AI-powered developer tools. Anthropic’s Claude Cowork product triggered a selloff in traditional software stocks last week as investors considered whether AI assistants might displace conventional enterprise applications. Microsoft, Google, and Amazon continue investing heavily in AI coding capabilities integrated with their respective cloud platforms.

OpenAI’s Codex app has demonstrated rapid adoption since launching ten days ago, with more than one million downloads and weekly active users growing 60 percent week-over-week. More than 325,000 developers now actively use Codex across free and paid tiers. But the fundamental question facing OpenAI — and the broader AI industry — is whether speed improvements like those promised by Codex-Spark translate into meaningful productivity gains or merely create more pleasant experiences without changing outcomes.

Early evidence from AI coding tools suggests that faster responses encourage more iterative experimentation. Whether that experimentation produces better software remains contested among researchers and practitioners alike. What seems clear is that OpenAI views inference latency as a competitive frontier worth substantial investment, even as that investment takes it beyond its traditional Nvidia partnership into untested territory with alternative chip suppliers.

The Cerebras deal is a calculated bet that specialized hardware can unlock use cases that general-purpose GPUs cannot cost-effectively serve. For a company simultaneously battling competitors, managing strained supplier relationships, and weathering internal dissent over its commercial direction, it is also a reminder that in the AI race, standing still is not an option. OpenAI built its reputation by moving fast and breaking conventions. Now it must prove it can move even faster — without breaking itself.

Tech

Russia tries to block WhatsApp, Telegram in communication blockade

The Russian government is trying to block WhatsApp in the country as its crackdown on communication platforms outside its control intensifies.

WhatsApp announced the action against it on X, calling it “a backwards step” that “can only lead to less safety for people in Russia.”

WhatsApp assured its Russian users that it will continue doing everything it can to keep them connected.

According to Russian media, the country’s internet watchdog, Roskomnadzor, had recently excluded the domains whatsapp.com and web.whatsapp.com from the National Domain Name System, citing the official explanation of countering crime and fraud.

In practice, excluding the domains from domestic DNS routing made WhatsApp services accessible only to users who use VPN tools or external resolvers.
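A toy model makes the mechanism concrete. The Python sketch below represents each resolver as a simple lookup table; the resolver names, the `resolve` helper, and the IP addresses (taken from the reserved documentation range) are all illustrative assumptions, not real records or a description of how Roskomnadzor's system is implemented.

```python
from typing import Optional

# Records as an outside resolver might hold them. The addresses come from
# the 203.0.113.0/24 documentation range and are NOT real WhatsApp servers.
EXTERNAL_RESOLVER = {
    "whatsapp.com": "203.0.113.10",
    "web.whatsapp.com": "203.0.113.11",
    "example.org": "203.0.113.20",
}

# Domains removed from the national system, per the reports.
EXCLUDED = {"whatsapp.com", "web.whatsapp.com"}

# The national resolver holds the same records minus the excluded domains.
NATIONAL_RESOLVER = {
    name: addr for name, addr in EXTERNAL_RESOLVER.items() if name not in EXCLUDED
}


def resolve(domain: str, resolver: dict[str, str]) -> Optional[str]:
    """Return the address the resolver knows for the domain, or None."""
    return resolver.get(domain)


if __name__ == "__main__":
    for name in ("whatsapp.com", "example.org"):
        print(name, resolve(name, NATIONAL_RESOLVER), resolve(name, EXTERNAL_RESOLVER))
```

In this model the service itself is untouched; only the name lookup fails for clients that rely on the national resolver, which is why switching resolvers or tunneling through a VPN restores access until stricter blocking is applied.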

However, more aggressive measures are reportedly now in place, with the latest attempt to fully block WhatsApp in Russia. The instant messenger’s parent company, Meta, has been designated as an “extremist” entity in Russia since 2022.

WhatsApp saw its first restrictions in the country in August 2025, when Roskomnadzor began throttling voice and video calls. In October 2025, the authorities attempted to block new user registrations.

Presidential press secretary Dmitry Peskov reportedly stated that the authorities are open to allowing WhatsApp to resume operations in the country, provided that Meta complies with local legislation.

The WhatsApp block came shortly after similar action was taken against Telegram, which was reportedly throttled aggressively earlier this week in Russia.

Telegram’s founder, Pavel Durov, responded to the situation by stating that Russia is trying to encourage its citizens to use the Kremlin-controlled MAX messenger app.

MAX, a controversial communications platform developed by VK, has been mandatory on all electronic devices sold in the country since September 2025.

Although MAX is promoted as a secure app that safeguards national communications from foreign surveillance, several independent reviewers have raised concerns about encryption weaknesses, government access, and extensive data-collection risks.

For now, users in Russia may be able to continue accessing their messengers of choice by using VPN tools, though those are not immune to the government’s crackdown either.

Tech

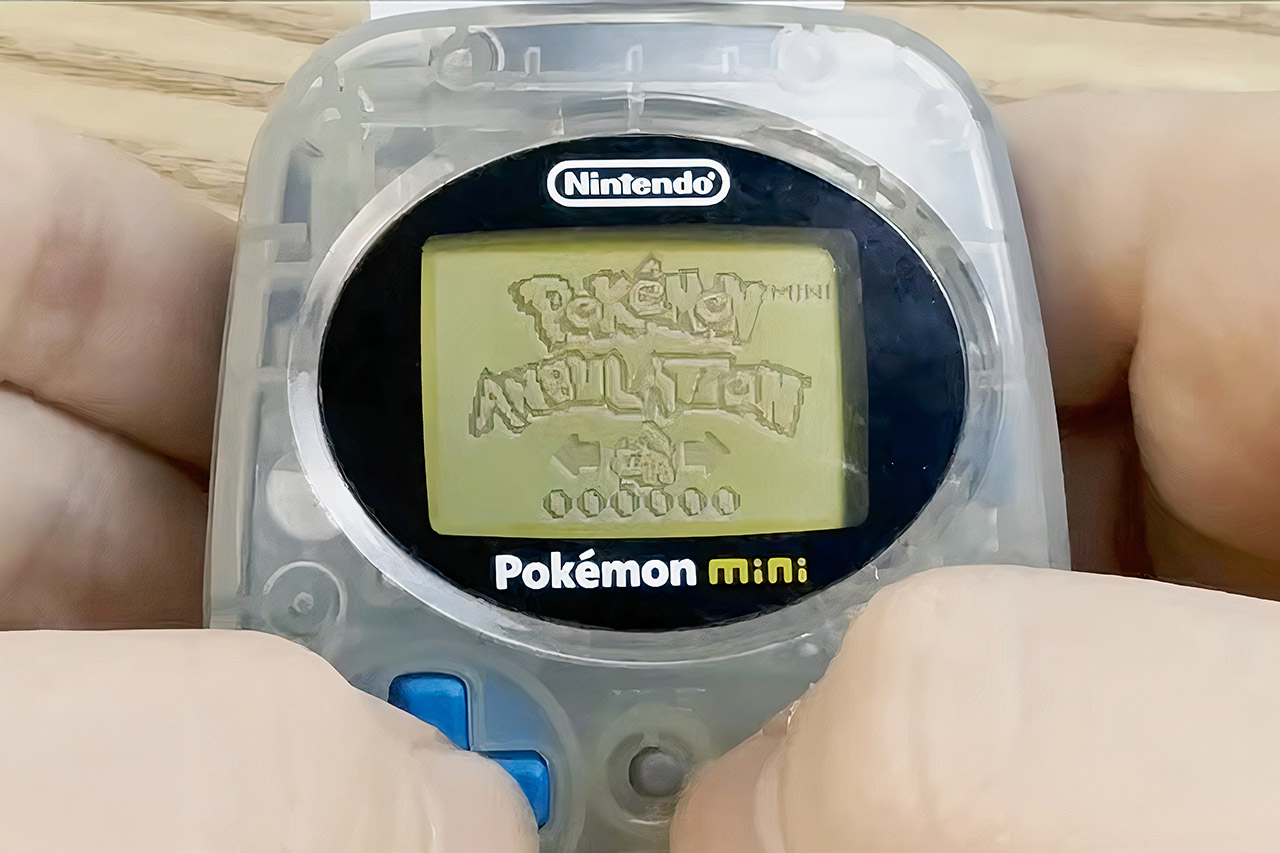

A Fresh Game Breathes Life Into Nintendo’s Tiniest Handheld, the Pokemon Mini

Nintendo took a chance in 2001 with the Pokemon Mini, the world’s smallest cartridge-based platform to date. This teeny-tiny device was smaller than a matchbox and saw only ten official games before fading into obscurity. Game makers had to get creative with the tiny 96×64 monochrome LCD screen driven by an Epson S1C88 CPU running at 4.194304 MHz, resulting in charming, simplified Pokémon adventures. A single AAA battery kept the fun going for about 60 hours before needing replacement, and cartridges contained a maximum of 2 MB of ROM.

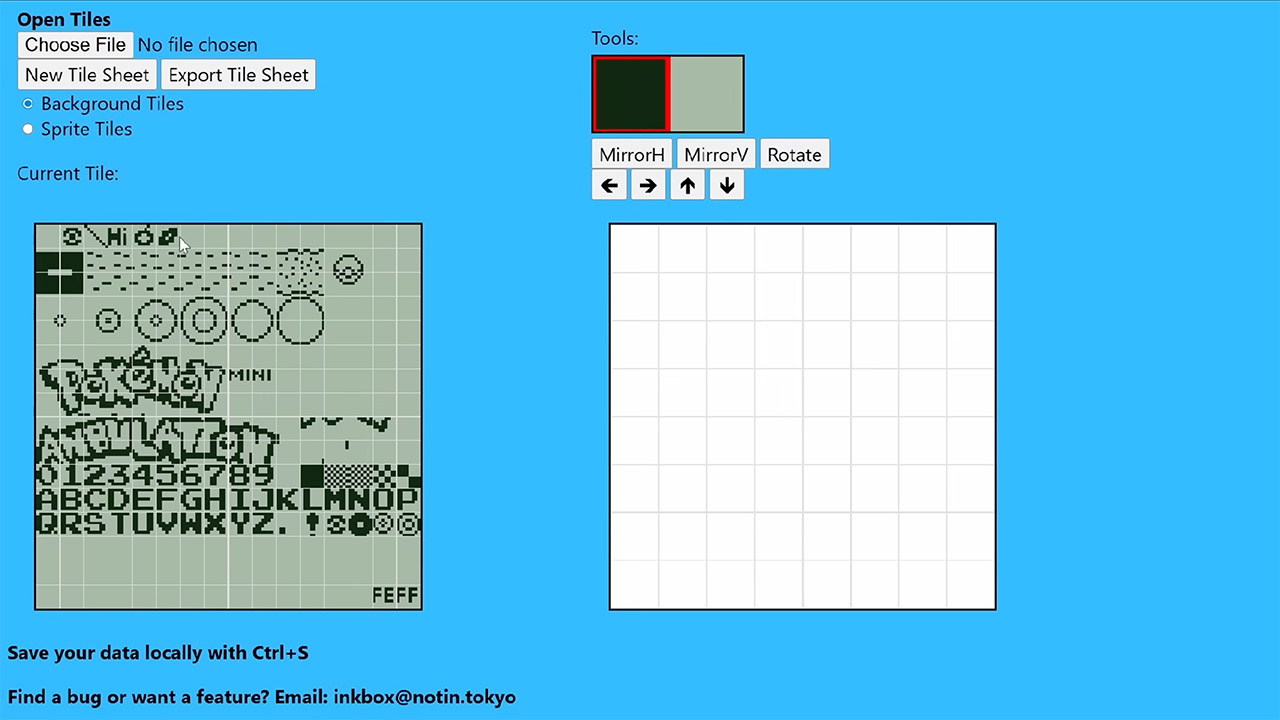

Homebrew enthusiasts recognized the potential for restoring this historical relic. They began developing emulators, assemblers, and even flash carts after gathering a plethora of information through reverse engineering and community tools. Websites like pokemon-mini.net have become troves of downloads, ranging from full games and demonstrations to development kits. Programmers working on the Mini typically write in C or assembly using the open-source c88-pokemini toolchain on GitHub, or they can track down the original Epson SDK, which includes a minimal simulator for testing code.

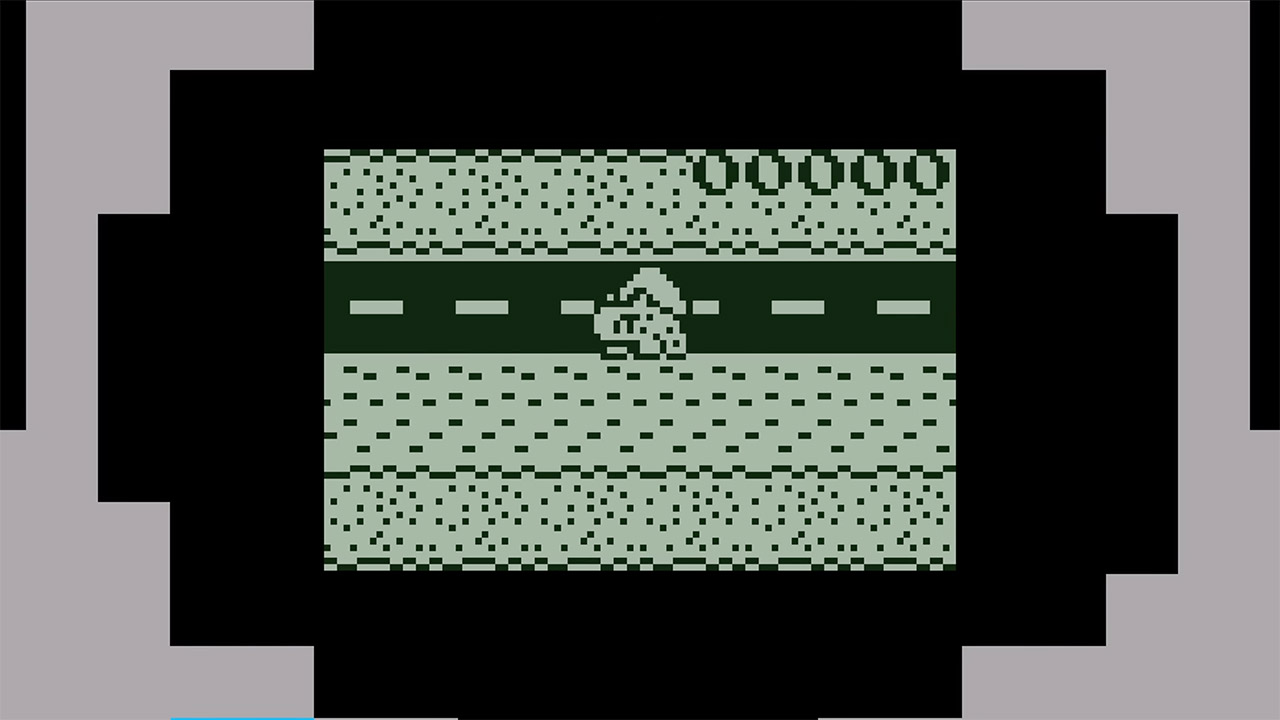

Inkbox entered the fray with Pokémon Ambulation, their take on Frogger’s iconic river-crossing challenge. The game allows you to choose from eight different Pokémon; whether you want to speed across as lightning-quick Pikachu or plod along as lumbering Snorlax, you must guide them through a succession of scrolling lanes laden with hazards. There are automobiles zipping along horizontally, logs sliding across the water, and gators snapping shut in irritation. Collision means you have to start over, but reaching the far bank earns you points and advances you to the next level.

Assembly code is central to programming the S1C88 core. Inkbox began with a fairly simple program to get the system up and running and manage interrupts. When it came to visuals, they used 4-bit grayscale tiles, which means they had 16 possible shades to work with to cover the 12×8 screen grid. Backgrounds scroll quite smoothly thanks to some smart fiddling with hardware registers, and sprites, such as the Pokémon itself, can be superimposed on top of backdrops to make them appear more believable. A basic tone generator produces sound effects, such as beeps to indicate that you’ve leaped and a crash to indicate that you’ve failed, all of which are synced to the frame rate.

Getting down to the nitty-gritty of constructing the tiles for their game required precision. Inkbox developed an online editor, which is still hosted at notin.tokyo/pminiTiles, that allows you to draw and then export your creations directly into the game. The LCD panel doesn’t have many shades to play with, so it takes some smart pixel-dithering to make it appear that there are more than just the 16 or so shades available. Collision detection runs on every frame, at 60 frames per second. Input comes from the usual suspects, four buttons and a D-pad, but to avoid jitters, the code debounces the buttons.
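For readers unfamiliar with debouncing, the idea can be sketched in a few lines. This is a generic counter-based debouncer written in Python for readability, not Inkbox’s S1C88 assembly; the `Debouncer` class and the three-frame threshold are assumptions made for illustration.

```python
class Debouncer:
    """Counter-based debounce: a raw reading must hold steady for
    `threshold` consecutive frames before the reported state changes."""

    def __init__(self, threshold: int = 3) -> None:
        self.threshold = threshold
        self.stable = False      # debounced state reported to the game
        self._candidate = False  # most recent raw reading
        self._count = 0          # consecutive frames the candidate has held

    def update(self, raw: bool) -> bool:
        """Feed one raw sample per frame; returns the debounced state."""
        if raw == self._candidate:
            self._count += 1
        else:
            # Reading changed: start counting the new value from one.
            self._candidate = raw
            self._count = 1
        if self._count >= self.threshold:
            self.stable = self._candidate
        return self.stable


if __name__ == "__main__":
    button = Debouncer(threshold=3)
    noisy_press = [True, False, True, True, True, False, True]
    print([button.update(sample) for sample in noisy_press])
```

Called once per frame, a debouncer like this ignores single-frame glitches from a bouncing contact at the cost of a few frames of input delay, which at 60 frames per second is a small fraction of a second.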

Rather than relying solely on an emulator, testing was done directly on hardware. Emulators such as PokeMini can help confirm that the code works, but timing and LCD refresh quirks mean the game must still be run on actual hardware. Inkbox fitted an RP2040 microcontroller into a bespoke cartridge shell, configured to simulate ROM via SPI flash and connected to the Mini’s bus like an actual game cartridge. When you turn it on, the Nintendo logo appears on the screen, Ambulation loads, and the game begins; the buttons respond with a pleasing click, and the screen glows softly in the dark.

Tech

CISA flags critical Microsoft SCCM flaw as exploited in attacks

CISA ordered U.S. government agencies on Thursday to secure their systems against a critical Microsoft Configuration Manager vulnerability patched in October 2024 and now exploited in attacks.

Microsoft Configuration Manager (also known as ConfigMgr and formerly System Center Configuration Manager, or SCCM) is an IT administration tool for managing large groups of Windows servers and workstations.

Tracked as CVE-2024-43468 and reported by offensive security company Synacktiv, this SQL injection vulnerability allows remote attackers with no privileges to gain code execution and run arbitrary commands with the highest level of privileges on the server and/or the underlying Microsoft Configuration Manager site database.

“An unauthenticated attacker could exploit this vulnerability by sending specially crafted requests to the target environment which are processed in an unsafe manner enabling the attacker to execute commands on the server and/or underlying database,” Microsoft explained when it patched the flaw in October 2024.
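As a general illustration of this vulnerability class (not the ConfigMgr flaw itself, whose internals are not described here), the sketch below contrasts a query built by string concatenation with a parameterized one. It uses Python’s built-in `sqlite3` module; the table, columns, and function names are hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE devices (id TEXT, owner TEXT)")
    conn.executemany(
        "INSERT INTO devices VALUES (?, ?)",
        [("pc-1", "alice"), ("pc-2", "bob")],
    )
    return conn


def lookup_unsafe(conn: sqlite3.Connection, device_id: str) -> list:
    # UNSAFE: the input is pasted into the SQL text, so a crafted value
    # can change the structure of the query itself.
    query = "SELECT owner FROM devices WHERE id = '" + device_id + "'"
    return conn.execute(query).fetchall()


def lookup_safe(conn: sqlite3.Connection, device_id: str) -> list:
    # Safer: the value is bound as a parameter and never parsed as SQL.
    return conn.execute(
        "SELECT owner FROM devices WHERE id = ?", (device_id,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"  # classic injection string
    print(lookup_unsafe(conn, payload))  # condition is always true: every row leaks
    print(lookup_safe(conn, payload))    # treated as a literal id: no match
```

The same principle applies regardless of database engine: input that reaches the query as data cannot rewrite the query, whereas input spliced into the SQL string can.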

At the time, Microsoft tagged it as “Exploitation Less Likely,” saying that “an attacker would likely have difficulty creating the code, requiring expertise and/or sophisticated timing, and/or varied results when targeting the affected product.”

However, Synacktiv shared proof-of-concept exploitation code for CVE-2024-43468 on November 26th, 2024, almost two months after Microsoft released security updates to mitigate this remote code execution vulnerability.

While Microsoft has not yet updated its advisory with additional information, CISA has now flagged CVE-2024-43468 as actively exploited in the wild and has ordered Federal Civilian Executive Branch (FCEB) agencies to patch their systems by March 5th, as mandated by the Binding Operational Directive (BOD) 22-01.

“These types of vulnerabilities are frequent attack vectors for malicious cyber actors and pose significant risks to the federal enterprise,” the U.S. cybersecurity agency warned.

“Apply mitigations per vendor instructions, follow applicable BOD 22-01 guidance for cloud services, or discontinue use of the product if mitigations are unavailable.”

Even though BOD 22-01 applies only to federal agencies, CISA encouraged all network defenders, including those in the private sector, to secure their devices against ongoing CVE-2024-43468 attacks as soon as possible.

Tech

Helion reaches record 150 million degrees Celsius as it strives for ambitious commercial fusion launch

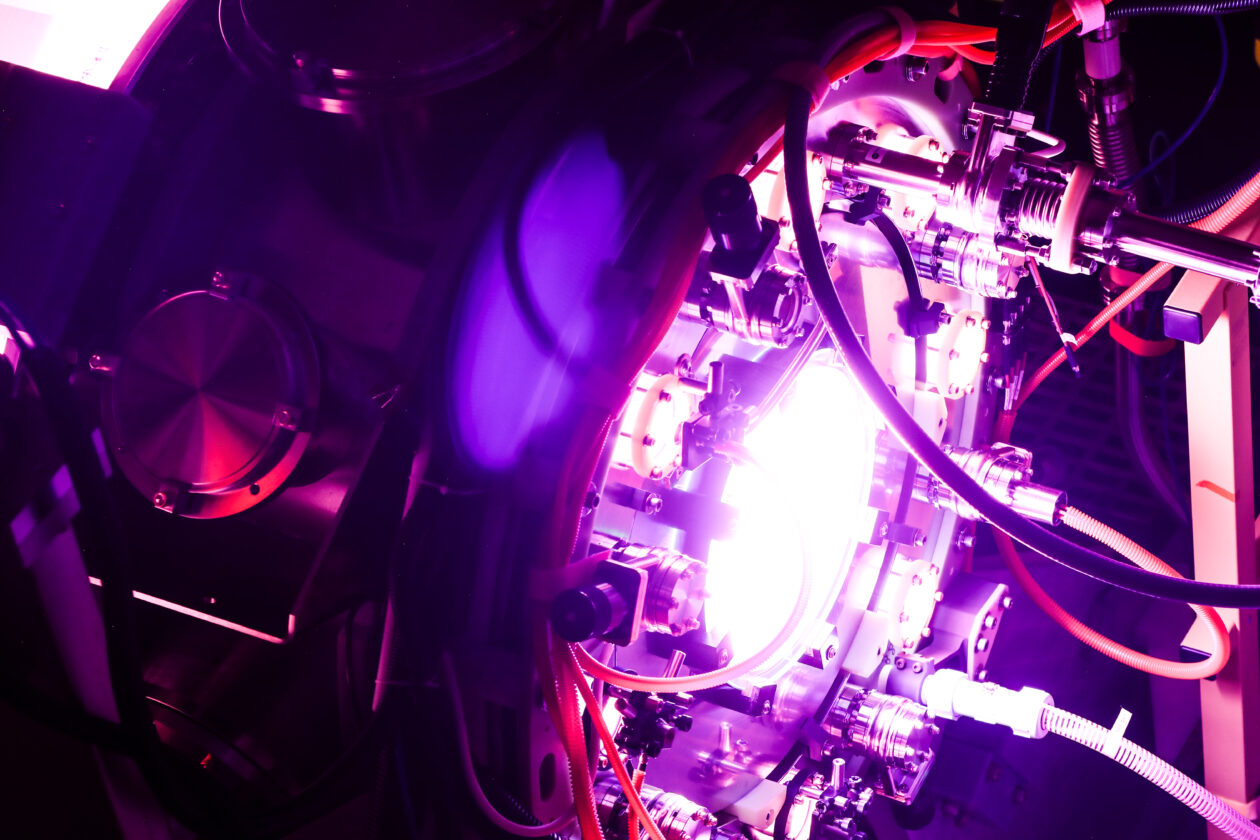

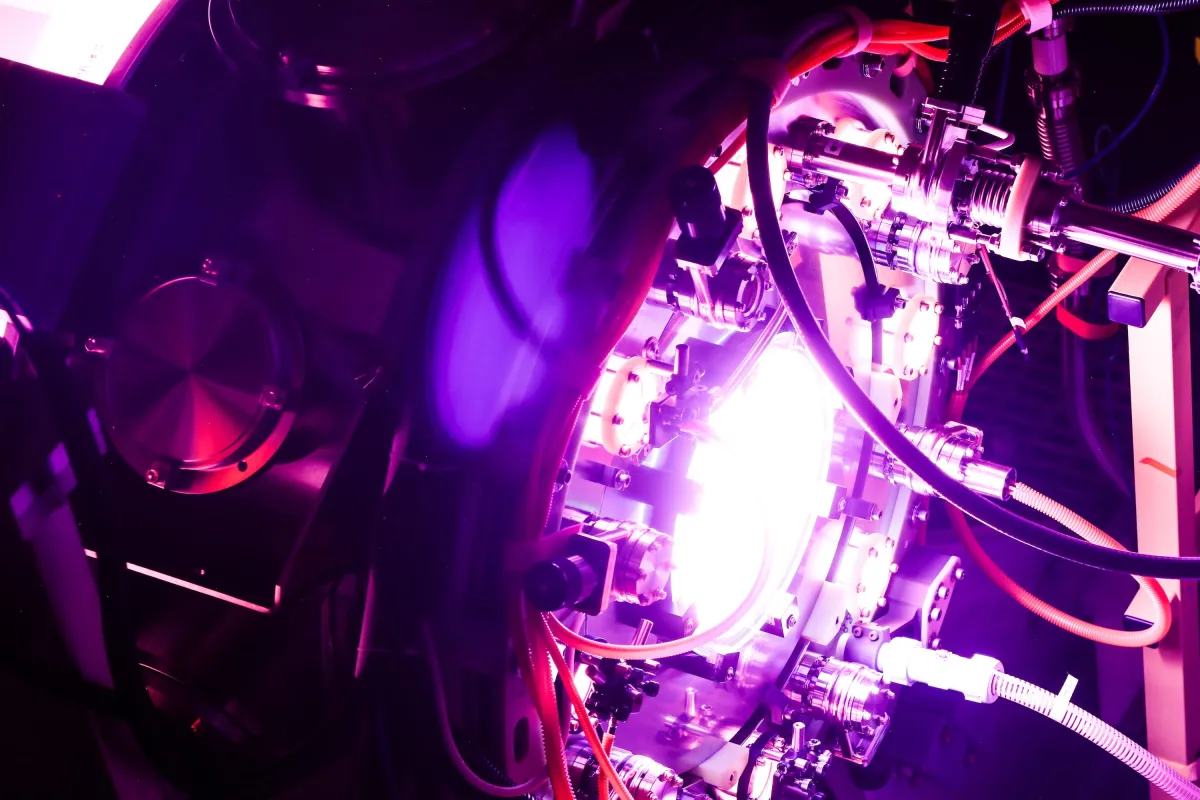

Helion Energy on Friday announced two milestones for the company and commercial fusion sector: reaching a plasma temperature of 150 million degrees Celsius and being the first private venture to test its fusion device with a radioactive fuel called tritium.

The Everett, Wash.-based company is part of the global race to solve the physics and engineering challenge of harnessing fusion reactions to generate usable energy.

Though its technology has yet to reach that milestone, Helion last summer broke ground on a commercial power facility in Eastern Washington that aims to begin smashing atoms in 2028 — an ambitious goal that has many skeptics.

As construction on the plant proceeds, the company is continuing crucial tests at its headquarters on its seventh-generation device, Polaris, which achieved the new temperature and fuel benchmarks.

“We have a long history now of building fusion prototypes,” said David Kirtley, Helion’s CEO. “We’ve been able to show that we can progressively … push the boundaries and get closer and closer to those power plants.”

The fusion industry’s challenge is creating plasmas that are much hotter than the sun, incredibly dense, and then sustaining them. The whole operation needs to be sufficiently energy efficient that excess power is created and captured.

While the sun and stars achieve fusion naturally, no one on Earth — in academia or industry — has reached that goal and some believe that goal is still many years away.

A magnetic approach to fusion

Helion aims to tame fusion using magneto-inertial, pulsed operation, field-reversed configuration devices. What that means is the system sends a pulse of energy into the fusion device where magnetic fields compress the plasma and fusion occurs. As the plasma pushes against the field, it creates a current that sends electricity back into the system.

The company has published little peer-reviewed research, but shared information about its recent progress with select experts.

“Seeing the data from the Polaris test campaign, including record-setting temperatures and gains from the fuel mix in their system, indicates strong progress. Our ability to get fusion on the grid requires approaches that enable rapid turnaround in design and testing, and these results reflect the growing capability of the U.S. fusion ecosystem,” Jean Paul Allain, associate director of Science for Fusion Energy Sciences in the Department of Energy, said in a statement.

Ryan McBride, a fusion expert and University of Michigan professor in nuclear engineering, electrical engineering and applied physics, also reviewed Helion’s diagnostic data.

McBride said in a statement it was “exciting to see evidence” of the two milestones and he looks forward “to seeing more progress.”

Kirtley said the team is preparing publications that describe the diagnostic tools used to verify the temperature record, which surpassed the company’s earlier peak of 100 million degrees Celsius.

The ultimate goal for the device is to hit 200 million degrees C, he said, adding “we’re not announcing that today. But given the results we’ve had so far, we’re very excited about and optimistic about reaching that milestone.”

Industry momentum builds

Helion is also highlighting its use of tritium in combination with deuterium as a fusion fuel. Both are forms of hydrogen, but deuterium is nonradioactive, so most companies run experiments with that isotope alone as it’s safer to handle and more abundant. Helion’s commercial fuel mix will be deuterium and helium-3, which requires higher plasma temperatures for fusion but is more efficient for electricity production.

Running tests with tritium provided insights into how the helium-3 could perform, Kirtley said, and allowed the company to demonstrate its ability to manage the fuel through its entire system.

The fusion industry itself keeps getting hotter as tech companies and others are increasingly desperate for new clean energy sources for data centers, transportation and industry. This week, fusion startup Inertia announced $450 million in new funding, while B.C.’s General Fusion last month announced plans to go public via a $1 billion SPAC.

For decades, cheap energy and flat electricity demand stifled fusion development, Kirtley said. That’s no longer the case.

“I’m really excited there’s such an excitement around fusion,” he said, “and it’s pushing us.”

Tech

Microsoft’s AI-Powered Copyright Bots Fucked Up And Got An Innocent Game Delisted From Steam

from the ready-fire-aim dept

At some point, we, as a society, are going to realize that farming copyright enforcement out to bots and AI-driven robocops is not the way to go, but today is not that day. Long before AI became the buzzword it is today, large companies have employed their own copyright crawler bots, or employed those of a third party, to police their copyrights on these here internets. And for just as long, those bots have absolutely sucked out loud at their jobs. We have seen example after example after example of those bots making mistakes, resulting in takedowns or threats of takedowns of all kinds of perfectly legit content. Upon discovery, the content is usually reinstated while those employing the copyright decepticons shrug their shoulders and say “Thems the breaks.” And then it happens again.

It has to change, but isn’t. We have yet another recent example of this in action, with Microsoft’s copyright enforcement partner using an AI-driven enforcement bot to get a video game delisted from Steam over a single screenshot on the game’s page that looks like, but isn’t, from Minecraft. The game in question, Allumeria, clearly is partially inspired by Minecraft, but doesn’t use any of its assets and is in fact its own full-fledged creative work.

On Tuesday, the developer behind the Minecraft-looking, dungeon-raiding sandbox announced that their game had been taken down from Valve’s storefront due to a DMCA copyright notice issued by Microsoft. The notice, shared by developer Unomelon in the game’s Discord server, accused Allumeria of using “Minecraft content, including but not limited to gameplay and assets.”

The takedown was apparently issued over one specific screenshot from the game’s Steam page. It shows a vaguely Minecraft-esque world with birch trees, tall grass, a blue sky, and pumpkins: all things that are in Minecraft but also in real life and lots of other games. The game does look pretty similar to Minecraft, but it doesn’t appear to be reusing any of its actual assets or crossing some arbitrary line between homage and copycat that dozens of other Minecraft-inspired games haven’t crossed before.

It turns out the takedown request didn’t come from Microsoft directly, but via Tracer.AI. Tracer.AI claims to have a bot driven by artificial intelligence for automatic flagging and removal of copyright infringing content.

It seems the system failed to understand in this case that the image in question, while being similar to those including Minecraft assets, didn’t actually infringe upon anything. Folks at Mojang caught wind of this on Bluesky and had to take action.

While it’s unclear if the claim was issued automatically or intentionally, Mojang Chief Creative Officer Jens Bergensten (known to most Minecraft players as Jeb) responded to a comment about the takedown on Bluesky, stating that he was not aware and is now “investigating.” Roughly 12 hours later, Allumeria’s Steam page has been reinstated.

“Microsoft has withdrawn their DMCA claim!” Unomelon posted earlier today. “The game is back up on Steam! Allumeria is back! Thank you EVERYONE for your support. It’s hard to comprehend that a single post in my discord would lead to so many people expressing support.”

And this is the point in the story where we all go back to our lives and pretend like none of this ever happened. But that sucks. For starters, there is no reason we should accept this kind of collateral damage, temporary or not. Add to that the fact that there are surely stories out there in which a similar resolution was not reached. How many games, how much other non-infringing content out there, were taken down for longer from an erroneous claim like this? How many never came back?

And at the base level, the fact is that if companies are going to claim that copyright is of paramount importance to their business, that can’t be farmed out to automated systems that aren’t good at their job.

Tech

Modder Crams Powerful 1000W Gaming PC Into a Tiny Desk Drawer

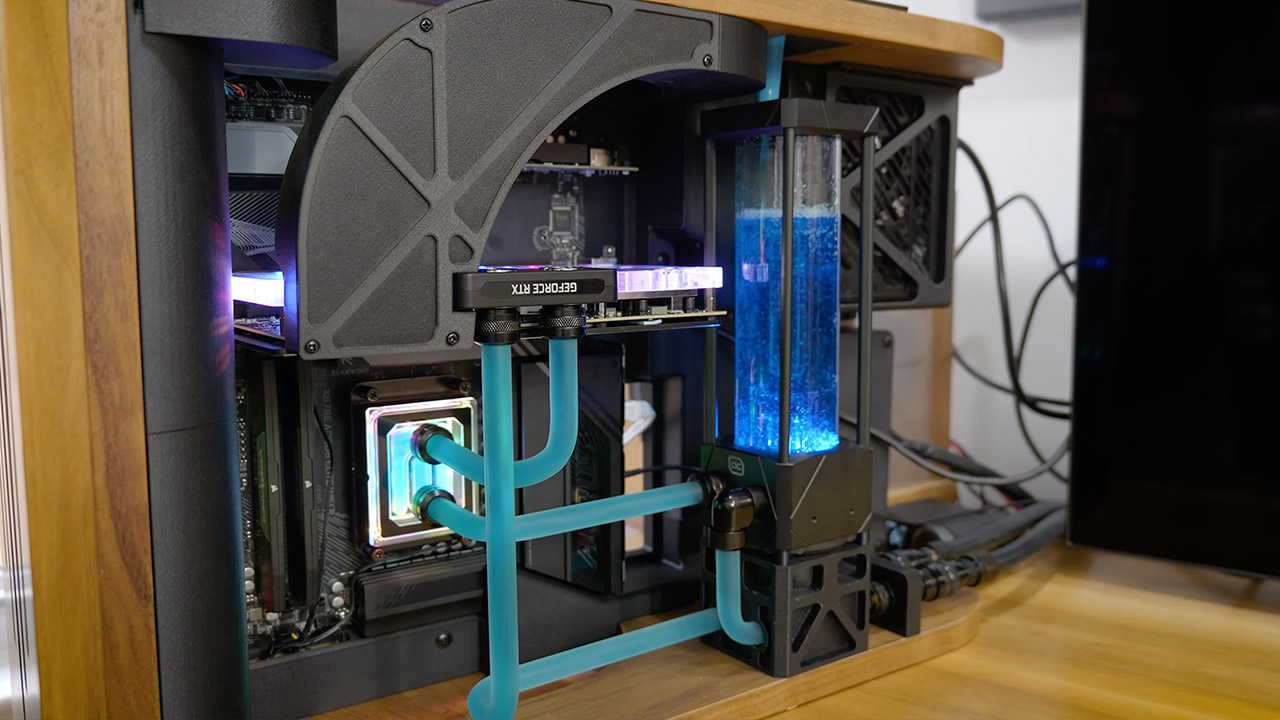

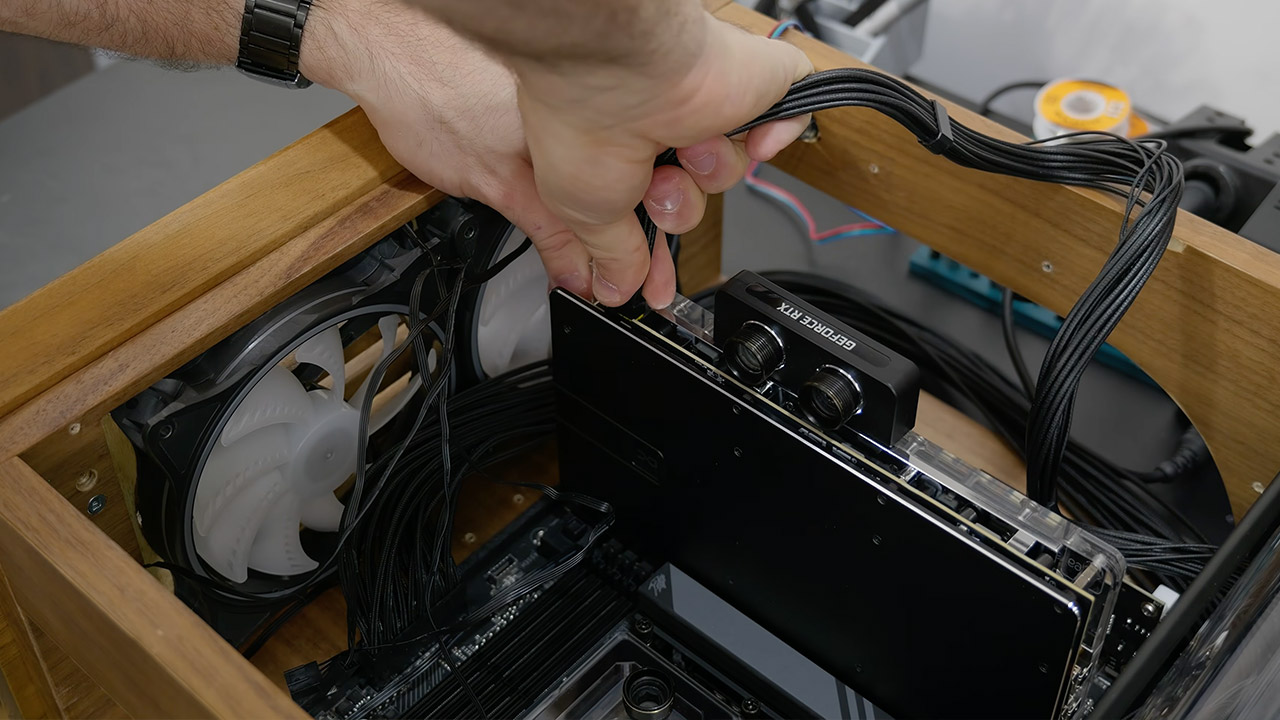

A high-end gaming PC often requires a lot of room, with full-size tower cases, large fans blowing air all over the place, and wires coming out of everywhere. Zac Builds takes the opposite approach: he stuffs a roughly 1000-watt gaming PC into a typical desk drawer, keeping the workstation neat and the hardware hidden until you need it.

This is his third attempt at the idea; each has been a refinement of the prior one, and the first two ran into serious obstacles. Airflow problems, sagging heavy components, and cable tangles forced him to think outside the box to solve these challenges while keeping future upgrades in mind.

The essential components of this system are a powerful Ryzen 9 9950X3D CPU and a Gigabyte X870E motherboard. An RTX 5090 graphics card takes care of the heavy lifting, even in demanding games. Its size and power draw (almost 600 watts) were difficult to manage, so he installed a special water block to deal with the heat. The build has a whopping 128GB of DDR5 memory, a 4TB NVMe drive for storage, and a 10Gb network card. Power comes from an MSI 1250W supply, which gives him some headroom while the system is cranking.

To keep everything from overheating, a homemade water loop is used, which includes a big radiator salvaged from a previous build and several high-performance fans. The pump and reservoir are freestanding, making them easily accessible. The rigid tubing is routed through several carefully bent sections and connected with some quick-release connectors for easy future maintenance. He also has a unique thermal interface material on the CPU to keep temperatures low; no more paste issues.

To improve airflow, he enlarged the opening at the front of the drawer (almost 50% more intake area) and cut out several sections to improve access to the ports. The drawer itself has undergone a few other changes: narrower slides in place of the originals to accommodate the GPU’s width, a custom printed tray to support the motherboard and other components, and printed mounts to keep the GPU from putting too much strain on the slot. The power supply has moved to the top to clean up the cabling and give better access to the back.

The wiring is organized into neat, modular runs, and a front shroud directs air over critical areas such as the RAM and VRMs. Putting it all together was difficult; the tubing bends took a long time to perfect, and he needed several attempts to get the loop right.

Assembly required a high level of precision, as fitting the tubing into the tight spaces was a real challenge. He even had to flush the loop with chemicals to remove the old residue before refilling it with new coolant. The fans received printed dust filters to keep them clean, and he threw in some walnut panels with laser-cut and engraved features to add some beauty to the inside of the drawer.

A Thunderbolt 5 dock brings all of the ports up to the desktop surface, and a printed webcam stand makes logging in comfortable, with no stray cords or awkward posture. He also has a UGREEN NAS that handles backups and runs various services in the background, freeing up resources on the main machine. Zac believes this is the most difficult build he has ever completed, combining tremendous power with reliability and ease of maintenance. The result? A beast of a gaming PC that from the outside looks like nothing more than a standard desk drawer.

Tech

You Can Binge All the Hallmark Romance Your Heart Desires for Free. Here’s How

Valentine’s Day is the perfect time to unwind with a cozy Hallmark romance. If you’re a Peacock subscriber, you know full well that the NBCUniversal-owned streamer used to house a whole slew of Hallmark TV shows and movies. The three-year-long contract between the two ended in May 2025, but that doesn’t mean you can’t get your fill of small-town love stories, Christmas romances and light-hearted mysteries.

If you’re pining for that classic Hallmark goodness, I’ve got a tip for you. You can watch everything you’ve been missing to ring in Valentine’s Day right. And you won’t have to pay a dime. Yes, free, gratis, at no cost to you whatsoever.

What’s all the hoopla, you must be wondering. Well, not to be cheeky or anything, but it’s Hoopla, actually.

Fun wordplay aside, Hoopla is the streaming spot where you can find that Hallmark goodness you’ve been missing out on. It’s a digital entertainment platform with all sorts of audiobooks, podcasts, movies, TV shows, music and educational material to keep you engaged. Your local public library provides this cost-free app.

The Hallmark Channel’s entire collection is available here through the platform’s Hallmark Plus BingePass. With this feature, you can borrow premium content for seven days. Get ready to binge all the charming love stories you want. This is the perfect way to catch up if you’re behind on hit shows like Ride and When Calls the Heart. It’s all ad-free with just a single click.

First, you will need a valid library card and email address to sign up for the Hallmark Plus BingePass on Hoopla. Visit the Hoopla website (or download the mobile app) and follow the steps to create an account. Keep your library card on hand, as you may be asked to provide your card number and PIN. You can sign up for one if you don’t have a library card.

Once you complete the setup process for your Hoopla account, you can start streaming. From there, simply pick the Hallmark Plus BingePass option from the BingePass prompt to watch. Not all libraries support Hoopla, so I recommend checking with your local branch to see if the service is available. If you would rather not rely on the library to scratch that feel-good movie itch, you can try Pluto TV and watch its curated channel, devoted to all things Hallmark.

Want the entire library and don’t mind paying? You can always sign up for Hallmark Plus — the channel’s exclusive streaming platform — for $8 a month or $80 a year. A paid subscription gets you access to Hallmark’s content library along with all-new exclusive original series and movies. Extra perks, as listed on the Hallmark Plus website, include a Hallmark Gold Crown Store coupon, Crown Rewards points, unlimited eCards and exclusive surprises.

Tech

The iPhone 18 Pro could avoid a RAM-related price hike altogether

GF Securities analyst Jeff Pu reports that Apple may keep iPhone 18 Pro and iPhone 18 Pro Max pricing unchanged despite rising memory costs, easing concerns that higher RAM prices would automatically push flagship iPhone prices upward.

Pu, in an investment note first reported by MacRumors, states that Apple does not plan to increase prices relative to the iPhone 17 Pro lineup, which currently starts at $1,099 for the Pro and $1,199 for the Pro Max.

Rising memory costs have created pressure across the smartphone industry, as demand for high-bandwidth memory used in AI data centres has driven up prices for RAM and flash storage components.

Pu attributes Apple’s ability to hold pricing steady to aggressive cost-management strategies, including negotiations with key suppliers such as Samsung and SK Hynix, which manufacture memory chips used in iPhones.

He adds that Apple is also seeking more favourable terms for display panels and camera modules, suggesting that broader supply chain optimisation could offset higher semiconductor costs.

Memory pricing pressure and Apple’s margin strategy

The current surge in memory pricing has affected laptops, smartphones and other personal computing devices, raising expectations that 2026 flagship models across multiple brands would reflect those increases in retail pricing.

Apple has historically maintained premium margins on its Pro models, but analysts increasingly suggest that the company may accept slightly reduced margins to protect volume and maintain its competitive position.

TF International Securities analyst Ming-Chi Kuo previously indicated that Apple could absorb higher component costs rather than pass them directly to consumers, particularly if stable pricing supports stronger device sales.

Kuo argues that Apple can offset thinner hardware margins through continued growth in subscription services such as iCloud, Apple Music, Apple TV+ and Apple Arcade, which generate recurring revenue beyond the initial device purchase.

If Apple maintains starting prices at $1,099 and $1,199, the iPhone 18 Pro lineup would avoid a price increase during a period when component inflation has already affected other segments of the consumer electronics market.

Separate rumours suggest Apple could introduce a foldable iPhone later in the same release window with pricing potentially reaching $2,500, which would position that device as a distinct ultra-premium tier rather than a direct Pro replacement.

Apple has not confirmed pricing or specifications for the iPhone 18 Pro range, and final retail details are expected closer to the typical autumn launch window.

Tech

Fusion startup Helion hits blistering temps as it races toward 2028 deadline

The Everett, Washington-based fusion energy startup Helion announced Friday that it has hit a key milestone in its quest for fusion power. Plasmas inside the company’s Polaris prototype reactor have reached 150 million degrees Celsius, three-quarters of the way toward what the company thinks it will need to operate a commercial fusion power plant.

“We’re obviously really excited to be able to get to this place,” David Kirtley, Helion’s co-founder and CEO, told TechCrunch.

Polaris is also operating using deuterium-tritium fuel — a mixture of two hydrogen isotopes — which Kirtley said makes Helion the first fusion company to do so. “We were able to see the fusion power output increase dramatically as expected in the form of heat,” he said.

The startup is locked in a race with several other companies that are seeking to commercialize fusion power, a potentially unlimited source of clean energy.

That potential has investors rushing to bet on the technology. This week, Inertia Enterprises announced a $450 million Series A round that included Bessemer and GV. In January, Type One Energy told TechCrunch it was in the midst of raising $250 million, while last summer Commonwealth Fusion Systems raised $863 million from investors including Google and Nvidia. Helion itself raised $425 million last year from a group that included Sam Altman, Mithril, Lightspeed, and SoftBank.

While most other fusion startups are targeting the early 2030s to put electricity on the grid, Helion has a contract with Microsoft to sell it electricity starting in 2028, though that power would come from a larger commercial reactor called Orion that the company is currently building, not Polaris.

Every fusion startup has its own milestones based on the design of its reactor. Commonwealth Fusion Systems, for example, needs to heat its plasmas to more than 100 million degrees C inside of its tokamak, a doughnut-shaped device that uses powerful magnets to contain the plasma.

Helion’s reactor is different, needing plasmas that are about twice as hot to function as intended.

The company’s reactor design is what’s called a field-reversed configuration. The inside chamber looks like an hourglass, and at the wide ends, fuel gets injected and turned into plasmas. Magnets then accelerate the plasmas toward each other. When they first merge, they’re around 10 million to 20 million degrees C. Powerful magnets then compress the merged ball further, raising the temperature to 150 million degrees C. It all happens in less than a millisecond.

Instead of extracting energy from the fusion reactions in the form of heat, Helion uses the fusion reaction’s own magnetic field to generate electricity. Each pulse will push back against the reactor’s own magnets, inducing electrical current that can be harvested. By harvesting electricity directly from the fusion reactions, the company hopes to be more efficient than its competitors.

Kirtley said that over the last year, Helion has refined some of the circuits in the reactor to boost how much electricity they recover.

While the company uses deuterium-tritium fuel today, down the road it plans to use deuterium-helium-3. Most fusion companies plan to use deuterium-tritium and extract energy as heat. Helion’s fuel choice, deuterium-helium-3, produces more charged particles, which push forcefully against the magnetic fields that confine the plasma, making it better suited for Helion’s approach of generating electricity directly.

Helion’s ultimate goal is to produce plasmas that hit 200 million degrees C, far higher than other companies’ targets, a function of its reactor design and fuel choice. “We believe that at 200 million degrees, that’s where you get into that optimal sweet spot of where you want to operate a power plant,” Kirtley said.

When asked whether Helion had reached scientific breakeven — the point where a fusion reaction generates more energy than it requires to start it — Kirtley demurred. “We focus on the electricity piece, making electricity, rather than the pure scientific milestones.”

Helium-3 is common on the Moon, but not here on Earth, so Helion must make its own fuel. To start, it’ll fuse deuterium nuclei to produce the first batches. In regular operation, while the main source of power will be deuterium-helium-3 fusion, some of the reactions will still be deuterium-on-deuterium, which will produce helium-3 that the company will purify and reuse.

Work is already underway to refine the fuel cycle. “It’s been a pleasant surprise in that a lot of that technology has been easier to do than maybe we expected,” Kirtley said. Helion has been able to produce helium-3 “at very high efficiencies in terms of both throughput and purity,” he added.

While Helion is currently the only fusion startup using helium-3 in its fuel, Kirtley said he thinks other companies will in the future, hinting that he’d be open to selling it to them. “Other folks — as they come along and recognize that they want to do this approach of direct electricity recovery and see the efficiency gains from it — will want to be using helium-3 fuel as well,” he said.

Alongside its experiments with Polaris, Helion is also building Orion, a 50-megawatt fusion reactor it needs to fulfill its Microsoft contract. “Our ultimate goal is not to build and deliver Polaris,” Kirtley said. “That’s a step along the way towards scaled power plants.”

Tech

No, Apple Music didn't fire Jay-Z over Bad Bunny Super Bowl Halftime Show

Rumors that Jay-Z lost his Apple Music leadership position in connection with the Super Bowl halftime show are false and trace back to a satirical post presented as news.

The rumor traces back to a post from “America’s Last Line of Defense,” a network known for publishing fabricated stories presented as satire. Screenshots of the post circulated on Facebook and other platforms without the page’s disclaimer, giving the false impression it was a legitimate report.

The original post claims Apple Music “fired” Jay-Z after years of producing the halftime show. There is no supporting evidence from Apple, the NFL, Roc Nation, or any credible news outlet.

Continue Reading on AppleInsider | Discuss on our Forums

-

Politics5 days ago

Politics5 days agoWhy Israel is blocking foreign journalists from entering

-

Sports6 days ago

Sports6 days agoJD Vance booed as Team USA enters Winter Olympics opening ceremony

-

Business5 days ago

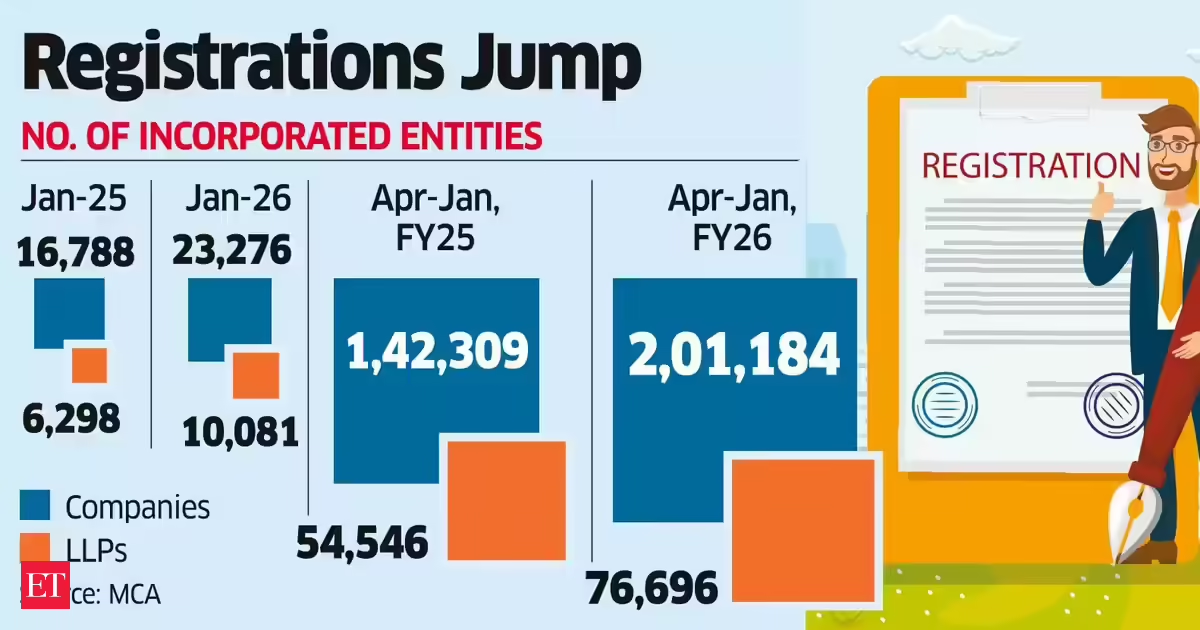

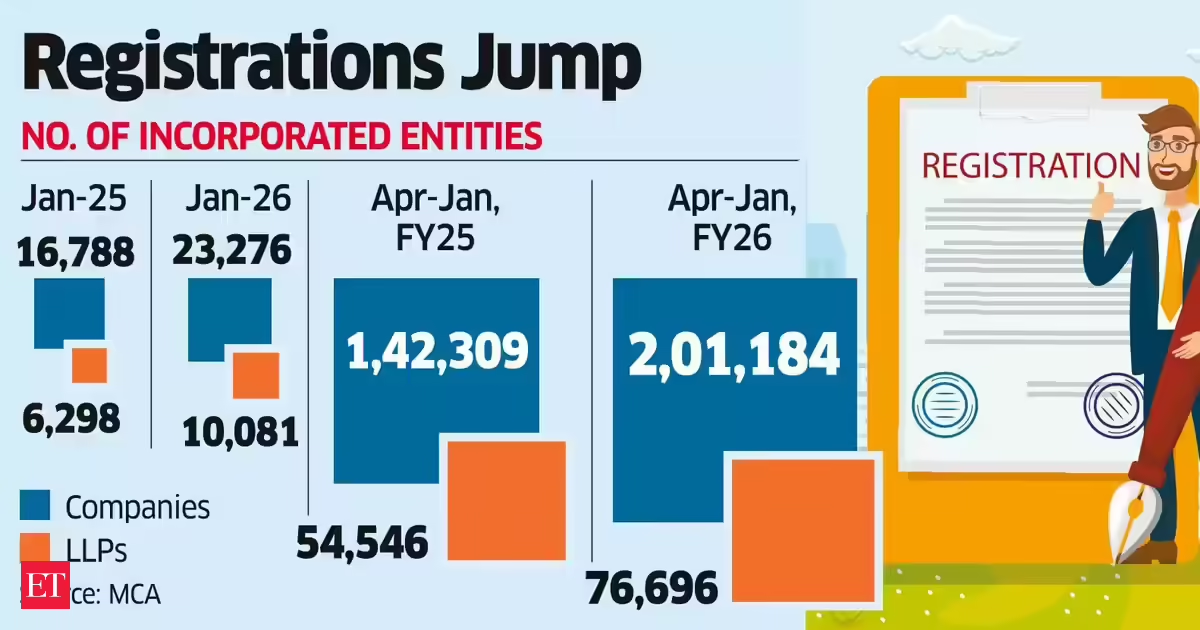

Business5 days agoLLP registrations cross 10,000 mark for first time in Jan

-

NewsBeat4 days ago

NewsBeat4 days agoMia Brookes misses out on Winter Olympics medal in snowboard big air

-

Tech7 days ago

Tech7 days agoFirst multi-coronavirus vaccine enters human testing, built on UW Medicine technology

-

Sports2 days ago

Sports2 days agoBig Tech enters cricket ecosystem as ICC partners Google ahead of T20 WC | T20 World Cup 2026

-

Business5 days ago

Business5 days agoCostco introduces fresh batch of new bakery and frozen foods: report

-

Tech2 days ago

Tech2 days agoSpaceX’s mighty Starship rocket enters final testing for 12th flight

-

NewsBeat5 days ago

NewsBeat5 days agoWinter Olympics 2026: Team GB’s Mia Brookes through to snowboard big air final, and curling pair beat Italy

-

Sports4 days ago

Sports4 days agoBenjamin Karl strips clothes celebrating snowboard gold medal at Olympics

-

Sports6 days ago

Former Viking Enters Hall of Fame

-

Video2 hours ago

Video2 hours agoThe Final Warning: XRP Is Entering The Chaos Zone

-

Politics5 days ago

Politics5 days agoThe Health Dangers Of Browning Your Food

-

Business5 days ago

Business5 days agoJulius Baer CEO calls for Swiss public register of rogue bankers to protect reputation

-

NewsBeat7 days ago

NewsBeat7 days agoSavannah Guthrie’s mother’s blood was found on porch of home, police confirm as search enters sixth day: Live

-

Crypto World2 days ago

Crypto World2 days agoPippin (PIPPIN) Enters Crypto’s Top 100 Club After Soaring 30% in a Day: More Room for Growth?

-

Crypto World3 days ago

Crypto World3 days agoBlockchain.com wins UK registration nearly four years after abandoning FCA process

-

Video1 day ago

Video1 day agoPrepare: We Are Entering Phase 3 Of The Investing Cycle

-

NewsBeat4 days ago

NewsBeat4 days agoResidents say city high street with ‘boarded up’ shops ‘could be better’

-

Crypto World3 days ago

Crypto World3 days agoU.S. BTC ETFs register back-to-back inflows for first time in a month

.png)