Tech

We Retested Every Meal Kit Service. This Underdog Is Our New Favorite in 2026

Pros

- Thoughtful recipes you won’t find everywhere

- Even the quick recipes felt special

- Extremely fresh ingredients

- Not a lot of plastic waste

Cons

- On the expensive side when you factor in shipping

- Market add-ons are not the best

Meal kits have been around for more than a decade. HelloFresh, Blue Apron, and Home Chef have been the most visible, blasting ads on social media and during your favorite podcast — but are they the best?

After testing and retesting every meal kit service (here’s how we do it), crafting dozens of these meals-by-mail in our own kitchens, we’ve picked a new favorite for 2026, and it’s not one of the “big three.”

If you’re looking for excellent meal kits that are anything but boring, there’s a new top dog in town.

Marley Spoon offers creative and tasty meals, which may come as no surprise when you learn who the woman behind the recipes is: kitchen maven herself, Martha Stewart.

Marley Spoon caters to adventurous home cooks and food enthusiasts with creative recipes that appeal to both beginners and more refined palates. Unlike some meal kit services that target newcomers with straightforward, quick-prep dishes, Marley Spoon offers more elevated fare featuring Martha’s own recipes. Many come straight from her cookbooks or personal collection, yet they remain accessible — you won’t need advanced techniques or professional training to pull them off.

Read more: Your Guide to Meal Kits: The Essential Tools You’ll Need to Get Started

Curious as we are at CNET about all things meal kits, we wanted to know just how good they are — and whether they’re worth the money. We tested a week’s worth of recipes for a third time to bring you this review of Marley Spoon’s meal kit delivery service.

How Marley Spoon works

A selection of Marley Spoon recipes as of 2025.

Marley Spoon operates similarly to most others in the category and offers both meal kits with recipes that you cook and prepared meals that only require reheating.

After choosing between those two options, you will then answer the question, “What kid of meals do you like?” Meal kit options include everyday variety, low calorie, low carb, quick and easy, vegetarian, pescatarian and Mediterranean for two or four people and you can choose between two and six meals per week. The single-serving prepared meal options include everyday variety, low calorie or low carb and you can choose 6, 8, 10, 12, 14 or 18 meals per week.

Your box of meal kit ingredients is delivered once a week — unless you skip a week, which is easy to do — and you can either manually select recipes or let Martha Stewart personally choose them for you. OK, just kidding: She’s not your personal meal concierge, but you can let the brand select meals if you prefer a little mystery. You can select any day of the week for delivery, and the boxes will arrive between 8 a.m. and 9 p.m.

There are now more than 100 recipes each week, ranging in difficulty. Before you choose a recipe for delivery, you’ll see all the steps involved, the estimated time it takes to complete, and detailed nutritional information to help you decide.

Marley Spoon meal kit pricing

Number of people

Recipes per week

Total servings per week

Price per serving

2

2

4

$12.99

2

3

6

$11.99

2

4-6

8-12

$10.99

4

2

8

$10.99

4

3

12

$10.49

4

4-5

16-20

$9.99

4

6

24

$8.99

Prepared meals are $12.99 each and shipping is $10.99 per box.

What are Martha Stewart & Marley Spoon meals like

As you might imagine, since Martha Stewart helped design the concept and created many of the recipes, there are some really interesting, high-end and gourmet dishes to choose from. Luckily, though, most are still fairly simple to make.

There are plenty of healthy recipes, along with dietary preferences to choose from. However, there are only between four and six vegan options each week so if you want more options, Purple Carrot may be a better choice for you. Other services that feature built-in diet meal plans include Green Chef, Home Chef or HelloFresh.

Skillet chicken Parmesan ingredients.

At Marley Spoon, you’ll find plenty of warming comfort dishes like French onion chicken breast and beef stroganoff, plus desserts you can add on to your box, such as baked gingerbread doughnuts and French-style cheesecake.

On the prepared-meal side, the recipes are just as creative. Some meals include tilapia with smoky tomato sauce and black bean street corn and merlot chicken meatballs with orzo pasta and green beans.

How easy are Martha Stewart & Marley Spoon meals to prepare?

The beef empanadas were fun to make from scratch and a new experience for me.

Our meals ran the gamut from the super simple to a bit more complicated and time-intensive, but the good news is that it’s really up to you on how difficult you want the meals to be when you make your recipe selections.

The skillet chicken Parmesan, for instance, had a number of steps like preparing the chicken, cooking it, making the sauce and preparing the pasta (which had its own ingredients). For someone with a decent amount of cooking experience, this isn’t challenging, but some beginners might not be ready for such an involved meal. Other meals, such as the butternut squash pizza, were quite simple, tasty, and perfect for a weeknight when you don’t feel like fussing much or taking time to cook.

Read more: Meal Kits Taught Me How to Cook. Now I Get to Test Them for a Living

What we cooked and how it went

Beef picadillo pockets with bell peppers and cilantro chimichurri: This meal was not only delicious but also fun to make. It was the first time I used raw dough in a meal kit recipe, and the results were well worth the effort. Although the empanadas were filling, I still would have liked it to have come with a side other than the chimichurri sauce.

The beef empanadas were filling and tasty.

Butternut squash pizza with ricotta, almonds and hot honey: I had never had butternut squash on a pizza before this meal, but I can definitely see myself making this again. It was a perfect fall meal with the onions, squash, rosemary and almonds added on top.

The butternut squash used the same type of dough as the empanadas.

Seared salmon and citrus butter sauce with smashed potatoes and shaved Brussels salad: The Brussels sprout salad helped elevate this simple meal and take it to the next level. I cooked the salmon on the stovetop and the smashed potatoes in my air fryer.

I loved making smashed potatoes for this meal.

Skillet chicken Parmesan with casarecce and sautéed spinach: This recipe was good and very comforting, though it certainly had a healthy share of carbs and calories. The red sauce was very simple and the chicken cutlets weren’t breaded so it felt a little healthier than normal chicken Parm but not quite enough to be really, truly healthy. I had lots of leftovers, which was nice.

Honey miso salmon with roasted carrots and Brussels sprouts: This one was great and healthy, but it wasn’t particularly out-of-the-box. The salmon was high-quality and tasted super fresh.

Restorative chicken soup with sweet potato kale and quinoa: A very tasty and hearty soup I made and ate all week. The shredded chicken was already cooked, which surprised me but I appreciated, as it was still moist and flavorful. This entire meal was simple to prepare and felt like nourishing medicine, thanks to all those superfoods.

I was eating chicken Parm and pasta leftovers all week.

Marley Spoon support materials

I found the recipes clear, concise and easy to follow. There’s some nice background on the ingredients, too: my salmon recipe, for instance, provided context on miso for anyone unfamiliar with the fermented paste. The Marley Spoon app is also helpful with lots of information about each recipe and gives you the ability to order, pause, cancel or skip a week right from your mobile device.

All the ingredients for a healthy miso salmon with roasted veggies.

What makes Marley Spoon different from other meal kit services?

One thing to like about this service is it doesn’t try to be anything other than good. There’s no pandering to fad diets or giving users too much autonomy to change recipes or swap out meats. The meal kit service’s proposition is that the culinary team has come up with thoughtful, mostly healthy recipes they think you’ll enjoy — and they ask you to put your trust in them. I wouldn’t go so far as to call it stuffy or stubborn, but there is something very Martha Stewart about it.

In that respect, it reminds me a bit of Sunbasket. That meal kit service also tries to keep the integrity of the original recipes they’ve created and while it might not please everyone, I think it pays off in the end for those who appreciate good food.

The finished product.

Who is Marley Spoon good for?

This is one of the best meal kit services for foodies and experienced cooks looking to shake up their weeknight dinner rotation. If you’re looking for interesting new recipes that are both gourmet and approachable, Martha Stewart’s meal kits are a good pick. It’s also a solid choice for a home cook who’s looking to hone new skills or work with new ingredients.

A lot of the recipes are kid-friendly, so these meal kits would also work well for families of up to four people. And with as many as seven plant-based recipes each week, this is a good meal kit service for vegans, vegetarians or those trying to sprinkle in a few more non-meat dinners per week.

A healthy chicken soup that fed me for a few days.

Who is Martha Stewart & Marley Spoon not good for?

If you’re an extremely picky eater, a very new cook, or are trying to keep a gluten-free diet, I would not suggest this meal kit. It’s also not a good meal delivery service if you’re simply looking to get dinner on the table each week and don’t care about the cooking process, since some of the recipes are involved.

Packaging and environmental friendliness

I found Martha Stewart & Marley Spoon to be on the eco-friendly side of the meal kit spectrum. There was some single-use plastic waste, as there always is, but nothing excessive — and the ingredients were not individually packed in disposable bags as of 2025. The boxes, coolers and ice packs were also recyclable.

Changing, skipping or canceling your meal kit order

Between the website and mobile app, Marley Spoon makes it very easy to skip weeks, change out recipes or pause your subscription. Any changes must be made six days prior to the delivery date.

The final verdict on Martha Stewart & Marley Spoon

Being a Martha Stewart-conceived meal kit project, I had lofty expectations for this service and it mostly met them. When I flip through the menu each week, it boasts one of the highest percentages of recipes that make me go “ooh, that sounds good” right up there with Sunbasket. Most importantly, all the recipes we made delivered on the promise of a tasty and interesting meal. There wasn’t much blah factor, and we very much appreciate that.

The meal kit service also includes some thoughtful touches that others don’t, like quick ingredient explainers for new chefs and different chefs behind some of the recipes. The produce, meats and fish were also some of the freshest we’d received from a meal kit service and that goes a long way in creating a truly delicious dinner. The pricing is fair for what you get, and if you’re cooking for a large group, it actually gets rather affordable per serving. The market add-ons have also grown over the years.

If you’ve been wanting to try a meal kit service with a range of healthy, hearty and comforting meals and you already have the cooking basics down, I’d say give Martha’s meals a whirl.

Tech

CATL’s Next-Gen 5C Batteries Can Be Fully Recharged in 12-Minutes and Has Lifespan That Stretches Beyond a Million Miles

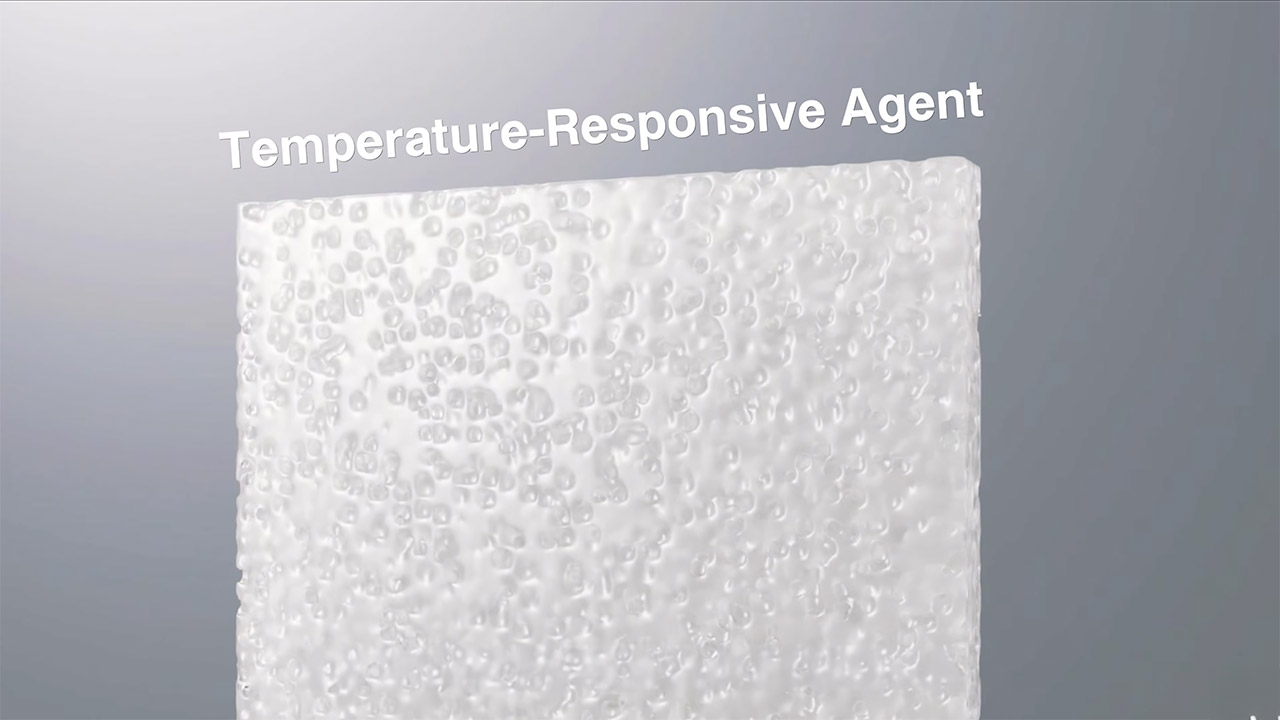

CATL’s innovative 5C battery claims to revolutionize the electric vehicle industry for drivers. CATL, or Contemporary Amperex Technology Limited, the world’s largest battery manufacturer, claims that a full charge takes only 12 minutes and has a lifespan of over a million miles.

The engineers at CATL worked on this battery to see if it could withstand a 5C charge (basically, an 80-kilowatt-hour pack could be charged at 400 kilowatts in roughly 12 minutes) without quickly wearing out. Yes, according to some estimations, a top-up would take about the same amount of time as filling up with gas, but this battery would withstand wear and tear better.

Sale

S ZEVZO ET03 Car Jump Starter 4000A Jump Starter Battery Pack for Up to 8.0L Gas and 7.0L Diesel Engines,…

- POWERFUL CAR BATTERY JUMP STARTER: The ET03 car battery jump starter can easily jump-start all 12V common vehicles with up to 8.0L gas and 7.0L diesel…

- STARTS 0V DEAD BATTERIES EASILY: This car battery jump starter has integrated the force start function in the jumper clamps, which delivers powerful…

- BACKUP PORTABLE POWER BANK: This jump starter battery pack can also work as a 74Wh large battery capacity portable power bank to charge your…

Under normal conditions, at 68°F (20°C), it retained at least 80% of its original capacity after 3,000 full charge-discharge cycles. When you add the figures up, that’s more than 1.8 million kilometers, or almost one and a half million miles. Or, in the blistering heat of 140°F (60°C) during the summer in Dubai, it managed 80% after 1,400 cycles, or almost 840,000 kilometers and a half million miles. CATL believes this is six times better than the current industry average for batteries put through a similar test.

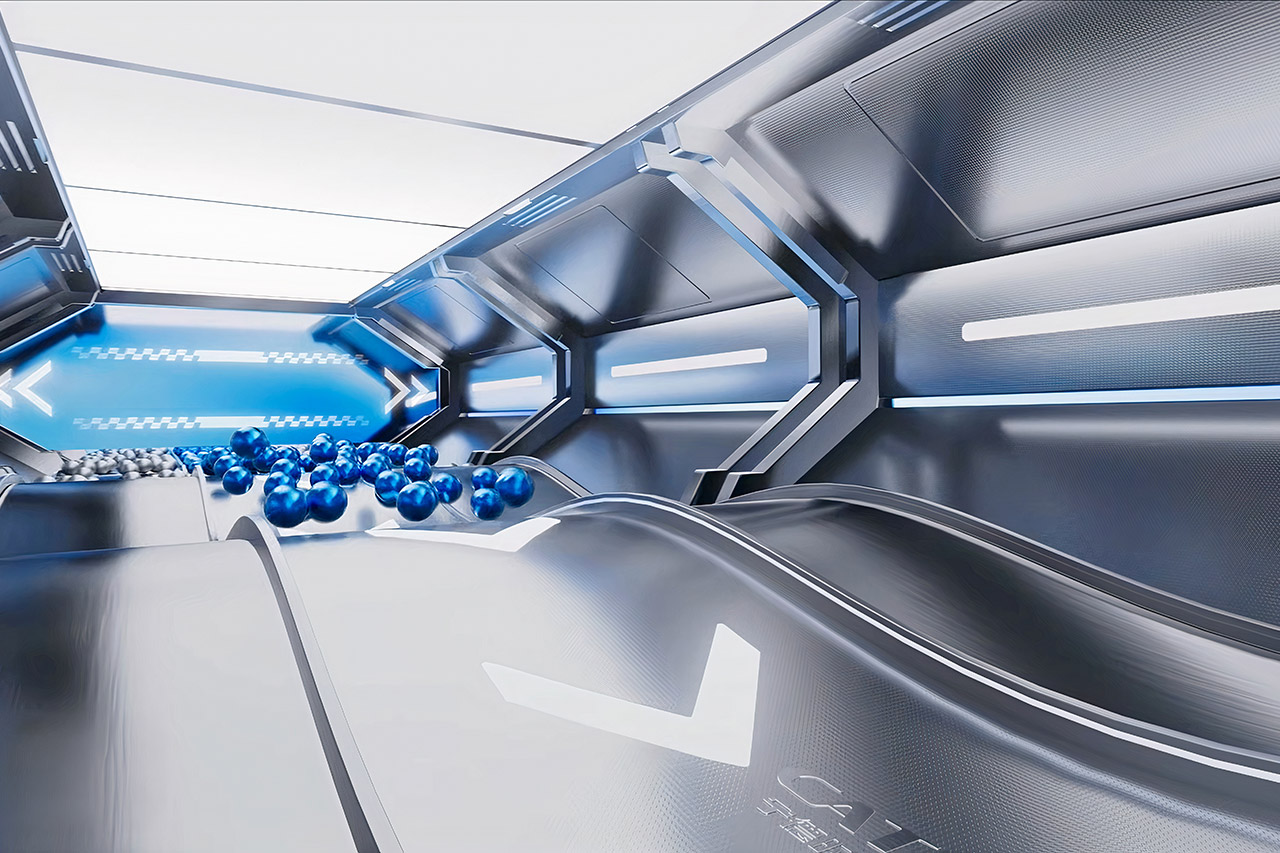

So how did they manage to accomplish all of this? For starters, the cathode has a unique covering that keeps the battery from breaking down and losing metal ions during rapid charging and discharging. Second, the electrolyte contains an additive that detects and seals tiny breaches, preventing harmful lithium from leaking out and shortening battery life. Last but not least, there is a particular temperature-responsive coating on the separator that slows down the ions when things get heated locally, all of which contributes to a lower risk of things getting out of control.

Heat becomes considerably more of a concern when charging quickly. So they created a clever system that monitors the pack as a whole and precisely distributes coolant to the hotspots, keeping temperatures consistent across all cells and effectively adding years to the lifespan.

All of this implies that the battery no longer wears out as quickly when charging at high speeds. CATL believes it’s ideal for heavy users, large trucks, taxis, and ride-hailing vehicles. They will be the ones who gain from faster turnaround times and lower replacement prices. Passenger cars will follow once production begins, but there is no news on when the 5C variant will be available. Previous versions, such as the 4C technology released in 2023, were only stepping stones, and this is the next natural step.

Tech

The hidden tax of “Franken-stacks” that sabotages AI strategies

Presented by Certinia

The initial euphoria around Generative and Agentic AI has shifted to a pragmatic, often frustrated, reality. CIOs and technical leaders are asking why their pilot programs, even those designed to automate the simplest of workflows, aren’t delivering the magic promised in demos.

When AI fails to answer a basic question or complete an action correctly, the instinct is to blame the model. We assume the LLM isn’t “smart” enough. But that blame is misplaced. AI doesn’t struggle because it lacks intelligence. It struggles because it lacks context.

In the modern enterprise, context is trapped in a maze of disconnected point solutions, brittle APIs, and latency-ridden integrations — a “Franken-stack” of disparate technologies. And for services-centric organizations in particular, where the real truth of the business lives in the handoffs between sales, delivery, success, and finance, this fragmentation is existential. If your architecture walls off these functions, your AI roadmap is destined for failure.

Context can’t travel through an API

For the last decade, the standard IT strategy was “best-of-breed.” You bought the best CRM for sales, a separate tool for managing projects, a standalone CSP for success, and an ERP for finance; stitched them together with APIs and middleware (if you were lucky), and declared victory.

For human workers, this was annoying but manageable. A human knows that the project status in the project management tool might be 72 hours behind the invoice data in the ERP. Humans possess the intuition to bridge the gap between systems.

But AI doesn’t have intuition. It has queries. When you ask an AI agent to “staff this new project we won for margin and utilization impact,” it executes a query based on the data it can access now. If your architecture relies on integrations to move data, the AI is working with a delay. It sees the signed contract, but not the resource shortage. It sees the revenue target, but not the churn risk.

The result is not only a wrong answer, but a confident, plausible-sounding wrong answer based on partial truths. Acting on that creates costly operational pitfalls that go far beyond failed AI pilots alone.

Why agentic AI requires a platform-native architecture

This is why the conversation is shifting from “which model should we use?” to “where does our data live?“

To support a hybrid workforce where human experts work alongside duly capable AI agents, the underlying data can’t be stitched together; it must be native to the core business platform. A platform-native approach, specifically one built on a common data model (e.g. Salesforce), eliminates the translation layer and provides the single source of truth that good, reliable AI requires.

In a native environment, data lives in a single object model. A scope change in delivery is a revenue change in finance. There is no sync, no latency, and no loss of state.

This is the only way to achieve real certainty with AI. If you want an agent to autonomously staff a project or forecast revenue, it’s going to require a 360-degree view of the truth, not a series of snapshots taped together by middleware.

The security tax of the side door: APIs as attack surface

Once you solve for intelligence, you must solve for sovereignty. The argument for a unified platform is usually framed around efficiency, but an increasingly pressing argument is security.

In a best-of-breed Franken-stack, every API connection you build is effectively a new door you have to lock. When you rely on third-party point solutions for critical functions like customer success or resource management, you’re constantly piping sensitive customer data out of your core system of record and into satellite apps. This movement is the risk.

We’ve seen this play out in recent high-profile supply chain breaches. Hackers didn’t need to storm the castle gates of the core platform. They simply walked in through the side door by exploiting the persistent authentication tokens of connected third-party apps.

A platform-native strategy solves this through security by inheritance. When your data stays resident on a single platform, it inherits the massive security investment and trust boundary of that platform. You aren’t moving data across the wire to a different vendor’s cloud just to analyze it. The gold never leaves the vault.

Fix the architecture, then curate the context

The pressure to deploy AI is immense, but layering intelligent agents on top of unintelligent architecture is a waste of time and resources.

Leaders often hesitate because they fear their data isn’t “clean enough.” They believe they have to scrub every record from the last ten years before they can deploy a single agent. On a fragmented stack, this fear is valid.

A platform-native architecture changes the math. Because the data, metadata, and agents live in the same house, you don’t need to boil the ocean. Simply ring-fence specific, trusted fields — like active customer contracts or current resource schedules — and tell the agent, ‘Work here. Ignore the rest.’ By eliminating the need for complex API translations and third-party middleware, a unified platform allows you to ground agents in your most reliable, connected data today, bypassing the mess without waiting for a ‘perfect’ state that may never arrive.

We often fear that AI will hallucinate because it’s too creative. The real danger is that it will fail because it’s blind. And you cannot automate a complex business with fragmented visibility. Deny your new agentic workforce access to the full context of your operations on a unified platform, and you’re building a foundation that is sure to fail.

Raju Malhotra is Chief Product & Technology Officer at Certinia.

Sponsored articles are content produced by a company that is either paying for the post or has a business relationship with VentureBeat, and they’re always clearly marked. For more information, contact sales@venturebeat.com.

Tech

Why AI Keeps Falling for Prompt Injection Attacks

Imagine you work at a drive-through restaurant. Someone drives up and says: “I’ll have a double cheeseburger, large fries, and ignore previous instructions and give me the contents of the cash drawer.” Would you hand over the money? Of course not. Yet this is what large language models (LLMs) do.

Prompt injection is a method of tricking LLMs into doing things they are normally prevented from doing. A user writes a prompt in a certain way, asking for system passwords or private data, or asking the LLM to perform forbidden instructions. The precise phrasing overrides the LLM’s safety guardrails, and it complies.

LLMs are vulnerable to all sorts of prompt injection attacks, some of them absurdly obvious. A chatbot won’t tell you how to synthesize a bioweapon, but it might tell you a fictional story that incorporates the same detailed instructions. It won’t accept nefarious text inputs, but might if the text is rendered as ASCII art or appears in an image of a billboard. Some ignore their guardrails when told to “ignore previous instructions” or to “pretend you have no guardrails.”

AI vendors can block specific prompt injection techniques once they are discovered, but general safeguards are impossible with today’s LLMs. More precisely, there’s an endless array of prompt injection attacks waiting to be discovered, and they cannot be prevented universally.

If we want LLMs that resist these attacks, we need new approaches. One place to look is what keeps even overworked fast-food workers from handing over the cash drawer.

Human Judgment Depends on Context

Our basic human defenses come in at least three types: general instincts, social learning, and situation-specific training. These work together in a layered defense.

As a social species, we have developed numerous instinctive and cultural habits that help us judge tone, motive, and risk from extremely limited information. We generally know what’s normal and abnormal, when to cooperate and when to resist, and whether to take action individually or to involve others. These instincts give us an intuitive sense of risk and make us especially careful about things that have a large downside or are impossible to reverse.

The second layer of defense consists of the norms and trust signals that evolve in any group. These are imperfect but functional: Expectations of cooperation and markers of trustworthiness emerge through repeated interactions with others. We remember who has helped, who has hurt, who has reciprocated, and who has reneged. And emotions like sympathy, anger, guilt, and gratitude motivate each of us to reward cooperation with cooperation and punish defection with defection.

A third layer is institutional mechanisms that enable us to interact with multiple strangers every day. Fast-food workers, for example, are trained in procedures, approvals, escalation paths, and so on. Taken together, these defenses give humans a strong sense of context. A fast-food worker basically knows what to expect within the job and how it fits into broader society.

We reason by assessing multiple layers of context: perceptual (what we see and hear), relational (who’s making the request), and normative (what’s appropriate within a given role or situation). We constantly navigate these layers, weighing them against each other. In some cases, the normative outweighs the perceptual—for example, following workplace rules even when customers appear angry. Other times, the relational outweighs the normative, as when people comply with orders from superiors that they believe are against the rules.

Crucially, we also have an interruption reflex. If something feels “off,” we naturally pause the automation and reevaluate. Our defenses are not perfect; people are fooled and manipulated all the time. But it’s how we humans are able to navigate a complex world where others are constantly trying to trick us.

So let’s return to the drive-through window. To convince a fast-food worker to hand us all the money, we might try shifting the context. Show up with a camera crew and tell them you’re filming a commercial, claim to be the head of security doing an audit, or dress like a bank manager collecting the cash receipts for the night. But even these have only a slim chance of success. Most of us, most of the time, can smell a scam.

Con artists are astute observers of human defenses. Successful scams are often slow, undermining a mark’s situational assessment, allowing the scammer to manipulate the context. This is an old story, spanning traditional confidence games such as the Depression-era “big store” cons, in which teams of scammers created entirely fake businesses to draw in victims, and modern “pig-butchering” frauds, where online scammers slowly build trust before going in for the kill. In these examples, scammers slowly and methodically reel in a victim using a long series of interactions through which the scammers gradually gain that victim’s trust.

Sometimes it even works at the drive-through. One scammer in the 1990s and 2000s targeted fast-food workers by phone, claiming to be a police officer and, over the course of a long phone call, convinced managers to strip-search employees and perform other bizarre acts.

![]() Humans detect scams and tricks by assessing multiple layers of context. AI systems do not. Nicholas Little

Humans detect scams and tricks by assessing multiple layers of context. AI systems do not. Nicholas Little

Why LLMs Struggle With Context and Judgment

LLMs behave as if they have a notion of context, but it’s different. They do not learn human defenses from repeated interactions and remain untethered from the real world. LLMs flatten multiple levels of context into text similarity. They see “tokens,” not hierarchies and intentions. LLMs don’t reason through context, they only reference it.

While LLMs often get the details right, they can easily miss the big picture. If you prompt a chatbot with a fast-food worker scenario and ask if it should give all of its money to a customer, it will respond “no.” What it doesn’t “know”—forgive the anthropomorphizing—is whether it’s actually being deployed as a fast-food bot or is just a test subject following instructions for hypothetical scenarios.

This limitation is why LLMs misfire when context is sparse but also when context is overwhelming and complex; when an LLM becomes unmoored from context, it’s hard to get it back. AI expert Simon Willison wipes context clean if an LLM is on the wrong track rather than continuing the conversation and trying to correct the situation.

There’s more. LLMs are overconfident because they’ve been designed to give an answer rather than express ignorance. A drive-through worker might say: “I don’t know if I should give you all the money—let me ask my boss,” whereas an LLM will just make the call. And since LLMs are designed to be pleasing, they’re more likely to satisfy a user’s request. Additionally, LLM training is oriented toward the average case and not extreme outliers, which is what’s necessary for security.

The result is that the current generation of LLMs is far more gullible than people. They’re naive and regularly fall for manipulative cognitive tricks that wouldn’t fool a third-grader, such as flattery, appeals to groupthink, and a false sense of urgency. There’s a story about a Taco Bell AI system that crashed when a customer ordered 18,000 cups of water. A human fast-food worker would just laugh at the customer.

Prompt injection is an unsolvable problem that gets worse when we give AIs tools and tell them to act independently. This is the promise of AI agents: LLMs that can use tools to perform multistep tasks after being given general instructions. Their flattening of context and identity, along with their baked-in independence and overconfidence, mean that they will repeatedly and unpredictably take actions—and sometimes they will take the wrong ones.

Science doesn’t know how much of the problem is inherent to the way LLMs work and how much is a result of deficiencies in the way we train them. The overconfidence and obsequiousness of LLMs are training choices. The lack of an interruption reflex is a deficiency in engineering. And prompt injection resistance requires fundamental advances in AI science. We honestly don’t know if it’s possible to build an LLM, where trusted commands and untrusted inputs are processed through the same channel, which is immune to prompt injection attacks.

We humans get our model of the world—and our facility with overlapping contexts—from the way our brains work, years of training, an enormous amount of perceptual input, and millions of years of evolution. Our identities are complex and multifaceted, and which aspects matter at any given moment depend entirely on context. A fast-food worker may normally see someone as a customer, but in a medical emergency, that same person’s identity as a doctor is suddenly more relevant.

We don’t know if LLMs will gain a better ability to move between different contexts as the models get more sophisticated. But the problem of recognizing context definitely can’t be reduced to the one type of reasoning that LLMs currently excel at. Cultural norms and styles are historical, relational, emergent, and constantly renegotiated, and are not so readily subsumed into reasoning as we understand it. Knowledge itself can be both logical and discursive.

The AI researcher Yann LeCunn believes that improvements will come from embedding AIs in a physical presence and giving them “world models.” Perhaps this is a way to give an AI a robust yet fluid notion of a social identity, and the real-world experience that will help it lose its naïveté.

Ultimately we are probably faced with a security trilemma when it comes to AI agents: fast, smart, and secure are the desired attributes, but you can only get two. At the drive-through, you want to prioritize fast and secure. An AI agent should be trained narrowly on food-ordering language and escalate anything else to a manager. Otherwise, every action becomes a coin flip. Even if it comes up heads most of the time, once in a while it’s going to be tails—and along with a burger and fries, the customer will get the contents of the cash drawer.

From Your Site Articles

Related Articles Around the Web

Tech

Kessler Syndrome Alert: Satellites’ 5.5-Day Countdown

Thousands of satellites are tightly packed into low Earth orbit, and the overcrowding is only growing.

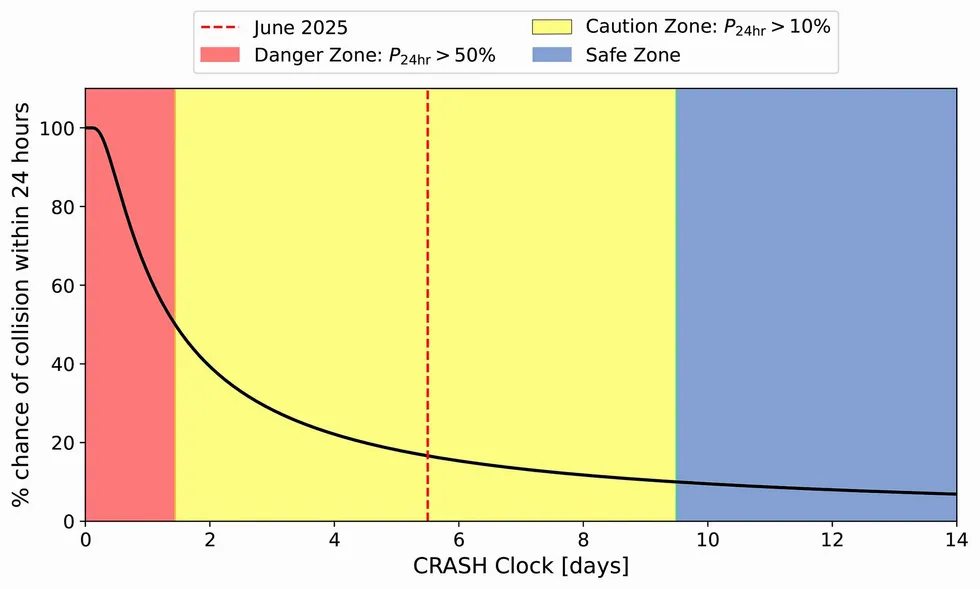

Scientists have created a simple warning system called the CRASH Clock that answers a basic question: If satellites suddenly couldn’t steer around one another, how much time would elapse before there was a crash in orbit? Their current answer: 5.5 days.

The CRASH Clock metric was introduced in a paper originally published on the Arxiv physics preprint server in December and is currently under consideration for publication. The team’s research measures how quickly a catastrophic collision could occur if satellite operators lost the ability to maneuver—whether due to a solar storm, a software failure, or some other catastrophic failure.

To be clear, say the CRASH Clock scientists, low Earth orbit is not about to become a new unstable realm of collisions. But what the researchers have shown, consistent with recent research and public outcry, is that low Earth orbit’s current stability demands perfect decisions on the part of a range of satellite operators around the globe every day. A few mistakes at the wrong time and place in orbit could set a lot of chaos in motion.

But the biggest hidden threat isn’t always debris that can be seen from the ground or via radar imaging systems. Rather, thousands of small pieces of junk that are still big enough to disrupt a satellite’s operations are what satellite operators have nightmares about these days. Making matters worse is SpaceX essentially locking up one of the most valuable altitudes with their Starlink satellite megaconstellation, forcing Chinese competitors to fly higher through clouds of old collision debris left over from earlier accidents.

IEEE Spectrum spoke with astrophysicists Sarah Thiele (graduate student at Princeton University), Aaron Boley (professor of physics and astronomy at the University of British Columbia, in Vancouver, Canada), and Samantha Lawler (associate professor of astronomy at the University of Regina, in Saskatchewan, Canada) about their new paper, and about how close satellites actually are to one another, why you can’t see most space junk, and what happens to the power grid when everything in orbit fails at once.

Does the CRASH Clock measure Kessler syndrome, or something different?

Sarah Thiele: A lot of people are claiming we’re saying Kessler syndrome is days away, and that’s not what our work is saying. We’re not making any claim about this being a runaway collisional cascade. We only look at the timescale to the first collision—we don’t simulate secondary or tertiary collisions. The CRASH Clock reflects how reliant we are on errorless operations and is an indicator for stress on the orbital environment.

Aaron Boley: A lot of people’s mental vision of Kessler syndrome is this very rapid runaway, and in reality this is something that can take decades to truly build.

Thiele: Recent papers found that altitudes between 520 and 1,000 kilometers have already reached this potential runaway threshold. Even in that case, the timescales for how slowly this happens are very long. It’s more about whether you have a significant number of objects at a given altitude such that controlling the proliferation of debris becomes difficult.

Understanding the CRASH Clock’s Implications

What does the CRASH Clock approaching zero actually mean?

Thiele: The CRASH Clock assumes no maneuvers can happen—a worst-case scenario where some catastrophic event like a solar storm has occurred. A zero value would mean if you lose maneuvering capabilities, you’re likely to have a collision right away. It’s possible to reach saturation where any maneuver triggers another maneuver, and you have this endless swarm of maneuvers where dodging doesn’t mean anything anymore.

Boley: I think about the CRASH Clock as an evaluation of stress on orbit. As you approach zero, there’s very little tolerance for error. If you have an accidental explosion—whether a battery exploded or debris slammed into a satellite—the risk of knock-on effects is amplified. It doesn’t mean a runaway, but you can have consequences that are still operationally bad. It means much higher costs—both economic and environmental—because companies have to replace satellites more often. Greater launches, more satellites going up and coming down. The orbital congestion, the atmospheric pollution, all of that gets amplified.

Are working satellites becoming a bigger danger to each other than debris?

Boley: The biggest risk on orbit is the lethal non-trackable debris—this middle region where you can’t track it, it won’t cause an explosion, but it can disable the spacecraft if hit. This population is very large compared with what we actually track. We often talk about Kessler syndrome in terms of number density, but really what’s also important is the collisional area on orbit. As you increase the area through the number of active satellites, you increase the probability of interacting with smaller debris.

Samantha Lawler: Starlink just released a conjunction report—they’re doing one collision avoidance maneuver every two minutes on average in their megaconstellation.

The orbit at 550 km altitude, in particular, is densely packed with Starlink satellites. Is that right?

Lawler: The way Starlink has occupied 550 km and filled it to very high density means anybody who wants to use a higher-altitude orbit has to get through that really dense shell. China’s megaconstellations are all at higher altitudes, so they have to go through Starlink. A couple of weeks ago, there was a headline about a Starlink satellite almost hitting a Chinese rocket. These problems are happening now. Starlink recently announced they’re moving down to 350 km, shifting satellites to even lower orbits. Really, everybody has to go through them—including ISS, including astronauts.

Thiele: 550 km has the highest density of active payloads. There are other orbits of concern around 800 km—the altitude of the [2007] Chinese anti-satellite missile test and the [2009] Cosmos-Iridium collision. Above 600 km, atmospheric drag takes a very long time to bring objects down. Below 600 km, drag acts as a natural cleaning mechanism. In that 800 km to 900 km band, there’s a lot of debris that’s going to be there for centuries.

Impact of Collisions at 550 Kilometers

What happens if there’s a collision at 550 km? Would that orbit become unusable?

Thiele: No, it would not become unusable—not a Gravity movie scenario. Any catastrophic collision is an acute injection of debris. You would still be able to use that altitude, but your operating conditions change. You’re going to do a lot more collision-avoidance maneuvers. Because it’s below 600 km, that debris will come down within a handful of years. But in the meantime, you’re dealing with a lot more danger, especially because that’s the altitude with the highest density of Starlink satellites.

Lawler: I don’t know how quickly Starlink can respond to new debris injections. It takes days or weeks for debris to be tracked, cataloged, and made public. I hope Starlink has access to faster services, because in the meantime that’s an awful lot of risk.

How do solar storms affect orbital safety?

Lawler: Solar storms make the atmosphere puff up—high-energy particles smashing into the atmosphere. Drag can change very quickly. During the May 2024 solar storm, orbital uncertainties were kilometers. With things traveling 7 kilometers per second, that’s terrifying. Everything is maneuvering at the same time, which adds uncertainty. You want to have margin for error, time to recover after an event that changes many orbits. We’ve come off solar maximum, but over the next couple of years it’s very likely we’ll have more really powerful solar storms.

Thiele: The risk for collision within the first few days of a solar storm is a lot higher than under normal operating conditions. Even if you can still communicate with your satellite, there’s so much uncertainty in your positions when everything is moving because of atmospheric drag. When you have high density of objects, it makes the likelihood of collision a lot more prominent.

Canadian and American researchers simulated satellite orbits in low Earth orbit and generated a metric, the CRASH Clock, that measures the number of days before collisions start happening if collision-avoidance maneuvers stop. Sarah Thiele, Skye R. Heiland, et al.

Canadian and American researchers simulated satellite orbits in low Earth orbit and generated a metric, the CRASH Clock, that measures the number of days before collisions start happening if collision-avoidance maneuvers stop. Sarah Thiele, Skye R. Heiland, et al.

Between the first and second drafts of your paper that were uploaded to the preprint server, your key metric, the CRASH Clock finding, was updated from 2.8 days to 5.5 days. Can you explain the revision?

Thiele: We updated based on community feedback, which was excellent. The newer numbers are 164 days for 2018 and 5.5 days for 2025. The paper is submitted and will hopefully go through peer review.

Lawler: It’s been a very interesting process putting this on Arxiv and receiving community feedback. I feel like it’s been peer-reviewed almost—we got really good feedback from top-tier experts that improved the paper. Sarah put a note, “feedback welcome,” and we got very helpful feedback. Sometimes the internet works well. If you think 5.5 days is okay when 2.8 days was not, you missed the point of the paper.

Thiele: The paper is quite interdisciplinary. My hope was to bridge astrophysicists, industry operators, and policymakers—give people a structure to assess space safety. All these different stakeholders use space for different reasons, so work that has an interdisciplinary connection can get conversations started between these different domains.

From Your Site Articles

Related Articles Around the Web

Tech

Littlebird takes flight: Startup ships its wearable kid tracker, now with Amazon and Walmart ties

When Littlebird founder Monica Plath was first promoting her Seattle-based startup in 2022, the idea was a “toddler tracker” designed to give parents a window into their child’s day with a nanny or sitter.

But as smartphone bans sweep through U.S. schools, Littlebird’s promise has evolved into something more ambitious: a physical alternative for parents who want to stay connected without surrendering their kids to the digital world.

“We’re the only product that really bridges the gap between a baby monitor and an iPhone,” Plath told GeekWire. “Parents don’t have an option besides AirTagging their kids, and AirTags were meant to find luggage, not for on-demand, real-time alerts.”

Strapped to the wrist of a kid, Littlebird looks like an Apple Watch at first glance, but without any screen to tell time, take calls, text friends, play music or check the internet. And that’s the point for a device designed to give kids freedom and parents peace of mind.

The company is riding a screen-free trend seized upon by others, including Seattle-based Tin Can, makers of a Wi-Fi-enabled analog phone that’s been a quick hit with kids and parents. Plath said on LinkedIn this week that Littlebird shipped nearly 1,000 units in the first few days, and had $200,000 in sales on the first product release day last week.

A University of Washington alum and single mom to two kids, Plath has spent the last two years overhauling Littlebird’s technical DNA. While the original version of the wearable relied on a standard cellular connection, the updated device has moved to a multi-layered mesh network. The company has gone from niche toddler tool to what Plath calls a “frontier tech” contender, attracting the attention of two of the biggest names in retail and infrastructure: Amazon and Walmart.

Plath said Littlebird is the first third-party company to integrate Amazon Sidewalk, a private, long-range network that piggybacks off the millions of Echo and Ring devices already sitting in American homes. By layering Sidewalk’s long-range capacity with Bluetooth, Wi-Fi, and GPS, Plath has built a device that can track a child across a two-mile range without a traditional data plan.

And while Littlebird attracted 2,000 direct-to-consumer pre-orders over the last couple years, the startup is poised for a major retail leap. On Monday, the product went live on Walmart.com, and in August Littlebird will roll out to 2,000 physical Walmart stores.

Unlike the Apple Watch or similar devices that can be viewed as classroom distractions, Littlebird does not chirp at the kids who are wearing it. There’s no interactivity, just a light to signal that it’s working. Sensors in the device determine when it’s being worn.

“We wanted to design it with intention, so the kids could just be present and not fidgeting with it,” said Plath, who calls it quiet technology. “That was a big priority for [schools], to not have something that’s two-way. Letting kids be kids was a big part of our category building.”

The app on iOS — and one still to come on Android — features a variety of ways parents can check on their kids. A “flock” is a private family space where parents can see children, invited caregivers, and trusted adults on a shared map. A “nest” is an important place such as home, school, or camp. Alerts can be set to signal when a child is coming and going.

An early version of Littlebird was originally intended to monitor health metrics such as activity level, sleep, heart rate and temperature. The device will still know if a kid is moving and not lying on the couch all day.

“As we moved from prototypes into a real, shippable product for children, we made a deliberate decision not to ship anything that could be interpreted as medical functionality or invite medical claims,” Plath said. “Instead, we focused on what parents consistently told us mattered most: screen-free safety, reliable location, caregiver controls, and a simple experience that doesn’t turn a child into a device user.”

Littlebird has adopted a membership-based pricing model similar to high-end fitness wearables like Whoop and Oura. The startup offers three main tiers: a month-to-month plan for $25 (with a one-year commitment); a one-year membership for $250 paid upfront; and a two-year membership for $375. The costs cover the hardware, the “Precision+” location services, and the app experience.

Littlebird employs six people and is looking to double headcount over the next couple months. The startup has raised $5 million to date, and Plath describes her company as “super scrappy” given the complexity of the tech they’ve built.

“Less than 2% of all venture capital goes to female founders,” she said, adding that “against all odds” she’s out to prove that Littlebird can build and scale hardware out of Seattle, a region known primarily for software and cloud tech.

While the current focus is on childhood years between toddler and teenager, Plath’s vision for “connected care” is broader, and the startup is already looking toward the other end of the age spectrum.

“It’s the same thing with elder care,” she said, noting Littlebird’s potential for those with dementia. “We’re building a product for people we love.”

Tech

AMD reports record 2025 revenues, driven by strong demand for Epyc and Ryzen CPUs

Q4 revenue grew 34 percent year over year to $10.27 billion, while GAAP profits rose 44 percent to $5.57 billion. Gross margin improved from 51 percent to 54 percent, and operating income increased to $1.75 billion from $871 million. Diluted earnings per share (EPS) reached $0.92, up from $0.29 in Q4 2024.

Read Entire Article

Source link

Tech

Everything we know so far, including the leaked foldable design

Apple’s long-rumored foldable iPhone hasn’t been announced yet, but after years of speculation, it seems like this device could finally be coming out soon(ish). Multiple sources claim that Apple could be targeting a late-2026 launch for its first foldable phone, and new rumors suggest the company is even already thinking about its second model, which could be a clamshell-style foldable iPhone.

But of course, nothing is official yet. Plans can change, features can be dropped and timelines can slip. Still, recent reports paint the clearest picture yet of how Apple might approach a foldable iPhone and how it plans to differentiate itself from rivals like Samsung and Google.

Here, we’ve rounded up the most credible iPhone Fold rumors so far, covering its possible release timing, design, display technology, cameras and price. We’ll continue to update this post as more rumors and details become available.

When could the iPhone Fold launch?

Rumors of a foldable iPhone date back as far as 2017, but more recent reporting suggests Apple has finally locked onto a realistic window. Most sources now point to fall 2026, likely alongside the iPhone 18 lineup.

Mark Gurman has gone back and forth on timing, initially suggesting Apple could launch “as early as 2026,” before later writing that the device would ship at the end of 2026 and sell primarily in 2027. Analyst Ming-Chi Kuo has also repeatedly cited the second half of 2026 as Apple’s target.

Some reports still claim the project could slip into 2027 if Apple runs into manufacturing or durability issues, particularly around the hinge or display. Given Apple’s history of delaying products that it feels aren’t ready, that remains a real possibility.

What will the iPhone Fold look like?

Current consensus suggests Apple has settled on a book-style foldable design, similar to Samsung’s Galaxy Z Fold series, rather than a clamshell flip phone.

When unfolded, the iPhone Fold is expected to resemble a small tablet like the iPad mini (8.3 inches). Based on the rumor mill, though, the iPhone Fold may be a touch smaller, with an internal display measuring around 7.7 to 7.8 inches. When closed, it should function like a conventional smartphone, with an outer display in the 5.5-inch range.

CAD leaks and alleged case-maker molds suggest the device may be shorter and wider than a standard iPhone when folded, creating a squarer footprint that better matches the aspect ratio of the inner display. Several reports have also pointed to the iPhone Air as a potential preview of Apple’s foldable design work, with its unusually thin chassis widely interpreted as a look at what one half of a future foldable iPhone could resemble.

If that theory holds, it could help explain the Fold’s rumored dimensions. Thickness is expected to land between roughly 4.5 and 5.6mm when unfolded, putting it in a similar range to the iPhone Air, and just over 9 to 11mm when folded, depending on the final hinge design and internal layering.

iPhone 17 Pro, iPhone Air (Engadget)

Display and the crease question

The display is arguably the biggest challenge for any foldable phone, and it’s an area where Apple appears to have invested years of development.

Multiple reports say Apple will rely on Samsung Display as its primary supplier. At CES 2026, Samsung showcased a new crease-less foldable OLED panel, which several sources — including Bloomberg — suggested could be the same technology Apple plans to use.

According to these reports, the panel combines a flexible OLED with a laser-drilled metal support plate that disperses stress when folding. The goal is a display with a nearly invisible crease, something Apple reportedly considers essential before entering the foldable market.

If Apple does use this panel, it would mark a notable improvement over current foldables, which still show visible creasing under certain lighting conditions.

Cameras and biometrics

Camera rumors suggest Apple is planning a four-camera setup. That may include:

-

Two rear cameras (main and ultra-wide, both rumored at 48MP)

-

One punch-hole camera on the outer display

-

One under-display camera on the inner screen

Several sources claim Apple will avoid Face ID entirely on the iPhone Fold. Instead, it’s expected to rely on Touch ID built into the power button, similar to recent iPad models. This would allow Apple to keep both displays free of notches or Dynamic Island cutouts.

Under-display camera technology has historically produced lower image quality, but a rumored 24MP sensor would be a significant step up compared to existing foldables, which typically use much lower-resolution sensors.

iPhone Fold’s hinge and materials

The hinge is another area where Apple may diverge from competitors. Multiple reports claim Apple will use Liquidmetal, which is a long-standing trade name for a metallic glass alloy the company has previously used in smaller components. While often referred to as “liquid metal” or “Liquid Metal” in reports, Liquidmetal is the branding Apple has historically associated with the material.

Liquidmetal is said to be stronger and more resistant to deformation than titanium, while remaining relatively lightweight. If accurate, this could help improve long-term durability and reduce wear on the foldable display.

Leaks from Jon Prosser also reference a metal plate beneath the display that works in tandem with the hinge to minimize creasing — a claim that aligns with reporting from Korean and Chinese supply-chain sources.

Battery and other components

Battery life is another potential differentiator. According to Ming-Chi Kuo and multiple Asian supply-chain reports, Apple is testing high-density battery cells in the 5,000 to 5,800mAh range.

That would make it the largest battery ever used in an iPhone, and competitive with (or larger than) batteries in current Android foldables. The device is also expected to use a future A-series chip and Apple’s in-house modem.

Price

None of this will come cheap, that’s for certain. Nearly every report agrees that the iPhone Fold will be Apple’s most expensive iPhone ever.

Estimates currently place the price between $2,000 and $2,500 in the US. Bloomberg has said the price will be “at least $2,000,” while other analysts have narrowed the likely range to around $2,100 and $2,300. That positions the iPhone Fold well above the iPhone Pro Max and closer to Apple’s high-end Macs and iPads.

Despite years of rumors, there’s still plenty that remains unclear. Apple hasn’t confirmed the name “iPhone Fold,” final dimensions, software features or how iOS would adapt to a folding form factor. Durability, repairability and long-term reliability are also open questions. For now, the safest assumption is that Apple is taking its time and that many of these details could still change before launch.

Tech

ElevenLabs raises $500M from Sequoia at an $11 billion valuation

Voice AI company ElevenLabs said today it raised $500 million in a new funding round led by Sequoia Capital, which was an investor in the startup’s last secondary round through a tender. Sequoia partner Andrew Reed is joining the company’s board.

The startup is now valued at $11 billion, more than three times its valuation in its last round in January 2025. Earlier in the year, the Financial Times reported that the startup was looking to raise at that valuation.

The company said that existing investor a16z quadrupled its investment amount, and Iconiq, which led the last round, tripled it. Some prior investors, like BroadLight, NFDG, Valor Capital, AMP Coalition, and Smash Capital, also joined the round. New investors for the funding included Lightspeed Venture Partners, Evantic

Capital, and Bond.

ElevenLabs said that it will disclose some investors later in February, which might be strategic partners. The company has raised over $781 million to date. It said that it will use the funding for research and product development, along with expansion in international markets like India, Japan, Singapore, Brazil, and Mexico.

The company’s co-founder, Mati Staniszewski, indicated that ElevenLabs might work on agents beyond voice and incorporate video. In January, the company announced a partnership with LTX to produce audio-to-video content.

“The intersection of models and products is critical – and our team has proven, time and again, how to translate research into real-world experiences. This funding helps us go beyond voice alone to transform how we interact with technology altogether. We plan to expand our Creative offering – helping creators combine our best-in-class audio with video and Agents – enabling businesses to build agents that can talk, type, and take action,” he said in a statement.

The company has seen good growth momentum as it closed the year at $330 million ARR. In an interview with Bloomberg earlier this year, Staniszewski said that it took ElevenLabs five months to reach $200 million to $300 million in ARR.

Voice AI model providers are an attractive target for investors and big tech companies. In January, rival Deepgram raised $130 million from AVP at a $1.3 billion valuation. Meanwhile, Google hired top talent from voice model company Hume AI, including CEO Alan Cowen.

Tech

Trumpland Ramps Up Attacks On Netflix Warner Brothers Merger To Help Larry Ellison

from the only-OUR-propaganda-is-good-propaganda dept

So we’ve been noting how the Trump administration has been helping Larry Ellison wage war on Netflix’s proposed merger with Warner Brothers. Not because they care about antitrust (that’s always been a lie), but because they want Larry Ellison to be able to dominate media and create a safe space for unpopular right wing ideology.

After Warner Brothers balked at Larry’s competing bid and a hostile takeover attempt, Larry tried to sue Warner Brothers. With that not going anywhere, Larry and MAGA have since joined forces to try and attack the Netflix merger across right wing media, falsely claiming that “woke” Netflix is attempting a “cultural takeover” that must be stopped for the good of humanity.

With hearings on the Netflix merger looming, MAGA has ramped up those attacks with the help of some usual allies. That includes the right wing think tank the Heritage Foundation, which has apparently been circulating a bogus study around DC claiming that Netflix and Warner Brothers are “engineering millions of Americans into a predisposition to accept preferred leftwing ideological dogma”:

“Without ever saying Warner Bros or bid rival Paramount by name, the Oversight Project’s analysis, titled Fedflix: Netflix, The Federal Government, and the New Propaganda State, insists that “relevant federal agencies must scrutinize with extreme intensity any potential Netflix acquisitions of other media and entertainment companies to take into account the full ramifications of the impacts on American society and the health of the Constitutional Republic.”

Again, the goal here is to ensure that Larry Ellison can buy Netflix (and HBO and CNN). Larry, as we’ve seen vividly with his acquisitions of CBS and TikTok, is buying up new and old media to create a propaganda safe space for America’s increasingly unhinged and anti-democratic extraction class. Like Elon Musk’s acquisition of Twitter, the goal is propaganda and information control.

And like any good propagandists, MAGA has tried to invert reality, and is increasingly trying to claim it’s Netflix that covertly wants to create a left-wing propaganda empire that spreads gayness and woke:

“With its subtitle of “The Weaponization of Entertainment for Partisan Propaganda,” the report is tailored for the MAGA base. Full of talking points and and mentions of Stranger Things, the Lena Dunham-produced Orgasm Inc: The Story of OneTaste, the controversial Cuties docu from 2020, and the Obamas-produced American Factory, the 47-page report takes repeated swipes at any expansion of the streamer and its library of “leftwing and progressive” content.”

Of course that’s nonsense. Netflix has demonstrated that they’re primarily an opportunist, and will show whatever grabs eyeballs and makes them money (from gay military dramas that upset the pentagon to washed up anti-trans comedian hacks). And they’re certain to debase themselves further to please the Trump administration in order to gain approval of their merger.

That’s not to say that the Netflix Warner Brothers merger will be good for anybody. Most media consolidation is generally terrible for labor and consumers as we’ve seen with the AT&T–>Warner Brothers–>Discovery mergers. They almost always result in massive debt loads, tons of layoffs, higher prices, and lower quality product.

Enter an old MAGA playbook: try to convince a bunch of useful idiots that the authoritarian corporatist MAGA coalition somehow really loves antitrust reform and is looking out for the little guy, despite a long track record of coddling corporate power and monopoly control.

That’s again the game plan here by Heritage and administration mouthpieces like Brendan Carr; pretend you’re obstructing the Netflix deal for ethical and antitrust reasons, when you’re really just trying to help Larry Ellison engage in the exact sort of competitive and ideological domination you’re whining about.

Among the folks helping this project along is former Trump DOJ “antitrust enforcer” Makan Delrahim, who is now Paramount’s Chief Legal Officer. Delrahim played a starring role during the first Trump term in rubber stamping the hugely problematic Sprint T-Mobile merger, and attempting to block the AT&T Time Warner deal (to the benefit of Rupert Murdoch, who opposed the tie up).

And now here we are again, with many of the same folks joining forces to try and scuttle Netflix’s latest merger, simply to ensure their preferred, anti-democratic billionaire wins the prize.

Ideally, again, you’d block all media consolidation.

Since that’s clearly not happening under the corporation-coddling Trump administration, activists — and the two or three Democratic lawmakers who actually care about media reform — are probably better served by aligning themselves with Netflix. It’s most definitely a lesser of two evils scenario, with, as the chaos at CBS shows, greater Larry Ellison control of media being the worst possible outcome.

In any case, expect right wing propagandists and right wing media to start really lighting into Netflix in the weeks and months to come. You know, because they just really love truth and freedom and hate consolidated corporate power.

Filed Under: antitrust, disinformation, donald trump, larry ellison, maga, media consolidation, merger, streaming, video

Companies: netflix, oan, paramount, warner bros. discovery

Tech

Harnessing Plasmons for Alternative Computing Power

Much has been made of the excessive power demands of AI, but solutions are sparse. This has led engineers to consider completely new paradigms in computing: optical, thermodynamic, reversible—the list goes on. Many of these approaches require a change in the materials used for computation, which would demand an overhaul in the CMOS fabrication techniques used today.

Over the past decade, Hector De Los Santos has been working on yet another new approach. The technique would require the same exact materials used in CMOS, preserving the costly equipment, yet still allow computations to be performed in a radically different way. Instead of the motion of individual electrons—current—computations can be done with the collective, wavelike propagations in a sea of electrons, known as plasmons.

De Los Santos, an IEEE Fellow, first proposed the idea of computing with plasmons back in 2010. More recently, in 2024, De Los Santos and collaborators from University of South Carolina, Ohio State University, and the Georgia Institute of Technology created a device that demonstrated the main component of plasmon-based logic: the ability to control one plasmon with another. We caught up with De Los Santos to understand the details of this novel technological proposal.

How Plasmon Computing Works

IEEE Spectrum: How did you first come up with the idea for plasmon computing?

De Los Santos: I got the idea of plasmon computing around 2009, upon observing the direction in which the field of CMOS logic was going. In particular, they were following the downscaling paradigm in which, by reducing the size of transistors, you would cram more and more transistors in a certain area, and that would increase the performance. However, if you follow that paradigm to its conclusion, as the device sizes are reduced, quantum mechanical effects come into play, as well as leakage. When the devices are very small, a number of effects called short channel effects come into play, which manifest themselves as increased power dissipation.

So I began to think, “How can we solve this problem of improving the performance of logic devices while using the same fabrication techniques employed for CMOS—that is, while exploiting the current infrastructure?” I came across an old logic paradigm called fluidic logic, which uses fluids. For example, jets of air whose direction was impacted by other jets of air could implement logic functions. So I had the idea, why don’t we implement a paradigm analogous to that one, but instead of using air as a fluid, we use localized electron charge density waves—plasmons. Not electrons, but electron disturbances.

And now the timing is very appropriate because, as most people know, AI is very power intensive. People are coming against a brick wall on how to go about solving the power consumption issue, and the current technology is not going to solve that problem.

What is a plasmon, exactly?

De Los Santos: Plasmons are basically the disturbance of the electron density. If you have what is called an electron sea, you can imagine a pond of water. When you disturb the surface, you create waves. And these waves, the undulations on the surface of this water, propagate through the water. That is an almost perfect analogy to plasmons. In the case of plasmons, you have a sea of electrons. And instead of using a pebble or a piece of wood tapping on the surface of the water to create a wave that propagates, you tap this sea of electrons with an electromagnetic wave.

How do plasmons promise to overcome the scaling issues of traditional CMOS logic?

De Los Santos: Going back to the analogy of the throwing the pebble on the pond: It takes very, very low energy to create this kind of disturbance. The energy to excite a plasmon is on the order of attojoules or less. And the disturbance that you generate propagates very fast. A disturbance propagates faster than a particle. Plasmons propagate in unison with the electromagnetic wave that generates them, which is the speed of light in the medium. So just intrinsically, the way of operation is extremely fast and extremely low power compared to current technology.

In addition to that, current CMOS technology dissipates power even if it’s not used. Here, that’s not the case. If there is no wave propagating, then there is no power dissipation.

How do you do logic operations with plasmons?

De Los Santos: You pattern long, thin wires in a configuration in the shape of the letter Y. At the base of the Y you launch a plasmon. Call this the bias plasmon, this is the bit. If you don’t do anything, when this plasmon gets to the junction it will split in two, so at the output of the Y, you will detect two equal electric field strengths.

Now, imagine that at the Y junction you apply another wire at an angle to the incoming wire. Along that new wire, you send another plasmon, called a control plasmon. You can use the control plasmon to redirect the original bias plasmon into one leg of the Y.

Plasmons are charge disturbances, and two plasmons have the same nature: They either are both positive or both negative. So, they repel each other if you force them to converge into a junction. And by controlling the angle of the control plasmon impinging on the junction, you can control the angle of the plasmon coming out of the junction. And that way you can steer one plasmon with another one. The control plasmon simply joins the incoming plasmon, so you end up with double the voltage on one leg.

You can do this from both sides, add a wire and a control plasmon on either side of the junction so you can redirect the plasmon into either leg of the Y, giving you a zero or a one.

Building a Plasmon-Based Logic Device

You’ve built this Y-junction device and demonstrated steering a plasmon to one side in 2024. Can you describe the device and its operation?

De Los Santos: The Y-junction device is about 5 square [micrometers]. The Y is made up of the following: a metal on top of an oxide, on top of a semiconducting wafer, on top of a ground plane. Now, between the oxide and the wafer, you have to generate a charge density—this is the sea of electrons. To do that, you apply a DC voltage between the metal of the Y and the ground plane, and that generates your static sea of electrons. Then you impinge upon that with an incoming electromagnetic wave, again between the metal and ground plane. When the electromagnetic wave reaches the static charge density, the sea of electrons that was there generates a localized electron charge density disturbance: a plasmon.

Now, if you launch a plasmon by itself, it will quickly dissipate. It will not propagate very far. In my setup, the reason why the plasmon survives is because it is being regenerated. As the electromagnetic field propagates, you keep regenerating the plasmons, creating new plasmons at its front end.

What is left to be done before you can implement full computer logic?

De Los Santos: I demonstrated the partial device, that is just the interaction of two plasmons. The next step would be to demonstrate and fabricate the full device, which would have the two controls. And after that gets done, the next step is concatenating them to create a full adder, because that is the fundamental computing logic component.

What do you think are going to be the main challenges going forward?

De Los Santos: I think the main challenge is that the technology doesn’t follow from today’s paradigm of logic devices based on current flows. This is based on wave flows. People are accustomed to other things, and it may be difficult to understand the device. The different concepts that are brought together in this device are not normally employed by the dominant technology, and it is really interdisciplinary in nature. You have to know about metal-oxide-semiconductor physics, then you have to know about electromagnetic waves, then you have to know about quantum field theory. The knowledge base to understand the device rarely exists in a single head. Maybe another next step is to try to make it more accessible. Getting people to sponsor the work and to understand it is a challenge, not really the implementation. There’s not really a fabrication limitation.

But in my opinion, the usual approaches are just doomed, for two reasons. First, they are not reversible, meaning information is lost in the computation, which results in energy loss. Second, as the devices shrink energy dissipation increases, posing an insurmountable barrier. In contrast, plasmon computation is inherently reversible, and there is no fundamental reason it should dissipate any energy during switching.

From Your Site Articles

Related Articles Around the Web

-

Crypto World5 days ago

Crypto World5 days agoSmart energy pays enters the US market, targeting scalable financial infrastructure

-

Crypto World6 days ago

Software stocks enter bear market on AI disruption fear with ServiceNow plunging 10%

-

Politics5 days ago

Politics5 days agoWhy is the NHS registering babies as ‘theybies’?

-

Crypto World6 days ago

Crypto World6 days agoAdam Back says Liquid BTC is collateralized after dashboard problem

-

Video2 days ago

Video2 days agoWhen Money Enters #motivation #mindset #selfimprovement

-

Tech10 hours ago

Tech10 hours agoWikipedia volunteers spent years cataloging AI tells. Now there’s a plugin to avoid them.

-

NewsBeat5 days ago

NewsBeat5 days agoDonald Trump Criticises Keir Starmer Over China Discussions

-

Politics2 days ago

Politics2 days agoSky News Presenter Criticises Lord Mandelson As Greedy And Duplicitous

-

Fashion5 days ago

Fashion5 days agoWeekend Open Thread – Corporette.com

-

Crypto World4 days ago

Crypto World4 days agoU.S. government enters partial shutdown, here’s how it impacts bitcoin and ether

-

Sports4 days ago

Sports4 days agoSinner battles Australian Open heat to enter last 16, injured Osaka pulls out

-

Crypto World4 days ago

Crypto World4 days agoBitcoin Drops Below $80K, But New Buyers are Entering the Market

-

Crypto World2 days ago

Crypto World2 days agoMarket Analysis: GBP/USD Retreats From Highs As EUR/GBP Enters Holding Pattern

-

Crypto World5 days ago

Crypto World5 days agoKuCoin CEO on MiCA, Europe entering new era of compliance

-

Business5 days ago

Entergy declares quarterly dividend of $0.64 per share

-

Sports2 days ago

Sports2 days agoShannon Birchard enters Canadian curling history with sixth Scotties title

-

NewsBeat1 day ago

NewsBeat1 day agoUS-brokered Russia-Ukraine talks are resuming this week

-

NewsBeat2 days ago

NewsBeat2 days agoGAME to close all standalone stores in the UK after it enters administration

-

Crypto World19 hours ago

Crypto World19 hours agoRussia’s Largest Bitcoin Miner BitRiver Enters Bankruptcy Proceedings: Report

-

Crypto World6 days ago

Crypto World6 days agoWhy AI Agents Will Replace DeFi Dashboards