Tech

Zillow teams up with ‘World of Warcraft’ to exhibit virtual homes inside popular game

Seattle-based real estate company Zillow has partnered with the company behind the long-running online game World of Warcraft in order to showcase players’ creativity by exhibiting their virtual homes.

A new microsite, “Zillow for Warcraft,” allows users to explore an assortment of designs for in-game housing, both those made by players and by members of WoW’s development team. Some of these homes will be presented using the same methods as Zillow’s real-world virtual tours, such as 3D modeling and SkyTour visuals, so users can poke around a fantasy kitchen just as if it were real.

World of Warcraft, published and developed by Microsoft subsidiary Blizzard Entertainment, recently unveiled player housing as a feature of its newest paid expansion, Midnight. Owners of the expansion can opt to take their characters to an in-game island, where they’re given a plot of land and a small house to customize and decorate however they wish.

(Midnight also involves a life-and-death struggle against a shadow-wielding antagonist who plans to seize and corrupt the very heart of the game’s world, but in much of its marketing so far, Blizzard has chosen to emphasize the new home-building feature. Go figure.)

“Player housing is a milestone moment for the World of Warcraft community, and we wanted to honor it in a way that felt authentic and unexpected,” Beverly W. Jackson, vice president of brand and product marketing at Zillow, said in a press release.

Jackson continued: “Zillow exists at the center of how people think and talk about home, and gaming has become another powerful expression of that. This collaboration brings two worlds together, celebrating home as both a place to belong and a place to escape into something that feels honest and personal.”

As part of the Zillow collaboration, WoW players will be able to unlock a number of decorative items for their in-game homes, such as a unique doormat.

Notably, Zillow for Warcraft features no transactions at all. You will not be able to exchange real or virtual money for anything seen on the website. It’s simply a free virtual tour of what players have been able to accomplish with WoW’s new housing system.

The deal with Zillow is one of several bizarre new brand deals that Blizzard has made for Midnight, including a collaboration with Pinterest that can unlock an in-game camera. While the primary driver of World of Warcraft is still widespread armed conflict in an increasingly vast fantasy universe, the introduction of housing seems to have spurred Blizzard into also pitching it to a new audience as a cozy house-building simulator. It’s simply that to get new furniture, you occasionally may have to go to another dimension and beat it out of a dragon.

World of Warcraft celebrated its 21st anniversary in November. Midnight, its 11th expansion, is planned to go live on March 2.

Building A Kit Car To Save Money? It May Cost You More Than You Think

We all daydream sometimes, like when an iconic original Shelby AC Cobra crosses the auction block and sells for seven figures. In that moment you might think, “I could build one of those. It’s just a fiberglass shell and a big engine, how hard could it be?”

The Cobra is a very popular kit car, but a little research will reveal that building one isn’t a frugal endeavor. True, you won’t spend over a million dollars like you would on a real Cobra, but building a kit car in your garage isn’t a task to be undertaken if you’re working on a tight budget. That’s true even if the initial investments on a kit and a chassis don’t seem out of reach financially.

No one here at SlashGear is saying that you shouldn’t build a kit car in your garage; it’s a great way for any gearhead to put their wrenching skills to use and yields a rewarding final product. I’ve been witness to and participated in many kit car builds, and they can be quite a bit of fun. In most cases building a kit car will be cheaper than buying a running original antique or classic, but it’s not an easy or inexpensive process even with the right tools and know-how.

Kit car builds are a tempting proposition

Factory Five Racing was founded in 1995 and sells reproduction kits for blasts from the past like 1930s hot rods and Shelby AC Cobras and Daytona Coupes from the ’60s. Factory Five’s least expensive kit is the Mk4, which looks like a Shelby Cobra roadster. The base kit is priced at a very tempting $14,990 and comes with the following: body and frame, chassis panels, brake and fuel lines, steering and cooling kits, lighting, seats, and a dashboard with gauges and switches. All you need to add is the running gear from a 1987 to 2004 Ford Mustang, which sounds simple enough on paper. If you already have a beater Mustang lying around (lucky you), then the perceived cost of entry seems reasonable.

But before you click “buy” and eagerly wait for that large crate to arrive in your driveway, there are a few other things you’ll need to purchase or make arrangements for. A big garage is a must to house you and your project while you build it. A lift is also essential, and depending on the state of your donor you might also need an engine hoist. Kit cars are often unpainted aluminum or fiberglass, so a paint booth is also a big help. Whether you decide to rent or buy shop space and gear, you’re going to add plenty to the cost of your build.

Time is money too

That $14,990 will start to grow quite a bit, and quickly. You’ll probably find yourself topping off your toolbox with some new purchases, as well as spending money to clear unexpected snags once you start working. If that doesn’t scare you, and you have good friends to beg or barter time, equipment, or expertise from, then we aren’t going to stop you: by all means, buy everyone some pizza and get to work. Just keep in mind that while building a kit car in your garage is probably cheaper than buying a running classic, it will take many hours of work and probably several trips to an auto parts store and/or salvage yard.

If all this seems unmanageable, then you might have to appreciate your dream cars from a distance like I do: playing Gran Turismo and hunting online for one of the classic cars that can be had for under $10,000. Also keep in mind that Factory Five is just one option for cool kit cars. Meyers Manx has modernized the dune buggy with a $6k kit, and Shell Valley sells a ’29 Model A roadster kit for $16,995 complete or under $5,000 just for the body. Regardless of which kit you buy and how much you spend, you’ll still have to factor in dozens of hours for assembly, gathering parts and supplies, and troubleshooting. If you’re taking unpaid time off from work to build your kit car, you’ll have to add that lost income to the cost of the project as well.
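The way those extra costs stack up is simple arithmetic, and a quick sketch makes it easier to run your own numbers. The Python below is a minimal budget tally. It assumes you can list your extras and estimate your unpaid build hours. Only the $14,990 Mk4 base-kit price comes from this article. The `KitCarBudget` class and every example figure are hypothetical.

```python
from dataclasses import dataclass, field

# Factory Five Mk4 base-kit price quoted in the article; everything else is yours to fill in.
MK4_BASE_KIT_USD = 14_990.00

@dataclass
class KitCarBudget:
    """Hypothetical build budget: cash outlays plus income lost to unpaid time off."""
    kit_price: float = MK4_BASE_KIT_USD
    extras: dict[str, float] = field(default_factory=dict)  # donor car, tools, shop space, paint...
    unpaid_hours: float = 0.0  # hours of work skipped without pay to build the car
    hourly_wage: float = 0.0   # income forgone per unpaid hour

    def cash_cost(self) -> float:
        """Money actually spent: the kit plus every extra line item."""
        return self.kit_price + sum(self.extras.values())

    def lost_income(self) -> float:
        """Opportunity cost of unpaid time off spent on the build."""
        return self.unpaid_hours * self.hourly_wage

    def total_cost(self) -> float:
        return self.cash_cost() + self.lost_income()

# Made-up example figures, for illustration only.
budget = KitCarBudget(
    extras={"donor Mustang": 3_000, "new tools": 800, "engine hoist rental": 150},
    unpaid_hours=80,
    hourly_wage=30,
)
print(f"Cash: ${budget.cash_cost():,.2f}")
print(f"Lost income: ${budget.lost_income():,.2f}")
print(f"Total: ${budget.total_cost():,.2f}")
```

Swap in your own line items. The point is that the kit price is only the first entry in the ledger, and lost income can rival what you spend on tools.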

The Galaxy Z Flip 7 is $200 off right now, and it might just flip your mind

Calling all flip phone enthusiasts: the latest model in Samsung’s coveted Z Flip series is on sale at a great price.

The Galaxy Z Flip 7 is one of the biggest flip smartphones of 2025, and if you’ve been tempted to make the switch or simply adore Samsung’s flip range, then you’ll want to check this offer out.

Right now, you can get the Samsung Galaxy Z Flip7 256GB cell phone for just $899. That’s a massive $200.99 off its original $1099.99 asking price, and an easy way to nab a bargain on one of the best phones out there.

You can save $200 on the Samsung Galaxy Z Flip 7 today, and it’s the perfect excuse to switch to a phone that brings a little fun back into your tech routine.

Still, for all the nostalgia that’s packed into the Z Flip7, this is a very modern device. The phone’s advanced AI and unlocked Android functionality give you tons of freedom where the interface is concerned, allowing you to make the phone truly your own.

The biggest selling point of the phone is its wow-factor display. Not only does the Z Flip 7 fold in half to stow away easily in a pocket or handbag, but when you do open it up, you’re greeted with an almost 7-inch display that’s ideal for watching films and TV shows on the go.

There’s also an eye-watering 50 MP camera, which not only allows you to take incredibly detailed photos, but also pairs well with a powerful processor to ensure that any raw imaging doesn’t slow the handset down.

In fact, we wrapped up our 4.5-star review of the Flip 7 with this verdict in the “Should you buy it?” section: “With a larger cover screen, wider foldable screen with a reduced crease, great performance and solid cameras, the Z Flip 7 can compete with some of the best around.”

The Z Flip 7 also offers long battery life, which is exactly what you need on a flip phone to get through the day without constantly worrying about finding the nearest charging port.

Here’s a phone deal that merges the modern with the nostalgic. For those who want to switch things up and enjoy one of the greatest Android flip phones on the market, this is it.

Even though the Z Flip 7 could be seen as one of the most expensive devices that Samsung produces, an 18% saving against its RRP certainly makes it better value for money.
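For anyone checking the math, here is a quick Python check of the savings figures quoted above. It uses `Decimal` so the currency values stay exact. The `discount` helper is just an illustration and does not come from Samsung or any retailer.

```python
from decimal import Decimal

def discount(list_price: Decimal, sale_price: Decimal) -> tuple[Decimal, Decimal]:
    """Return the amount saved and the saving as a percentage of the list price."""
    saved = list_price - sale_price
    percent = saved / list_price * 100
    return saved, percent

saved, percent = discount(Decimal("1099.99"), Decimal("899.00"))
print(f"Saved ${saved} ({percent:.1f}% off)")  # Saved $200.99 (18.3% off)
```

The result matches the $200.99 saving and, rounded down, the 18% figure.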

You’re getting an eye-catching foldable phone aesthetic coupled with high-tech features and a strong warranty – what’s not to like? It also made a worthy entry as the best Samsung clamshell in our best foldable phone buying guide.

The Flip 7 is undoubtedly the best Flip to date, and one that can finally take the fight to the clamshell competition. It’s not perfect, but for most people, it’s all the foldable they need.

Pros

- Larger cover screen is a much-needed improvement
- More convenient 21:9 ratio screen is much easier to use
- Improved camera performance
- Fast, flagship performance with strong battery life

Cons

- Cover screen software could be better
- Samsung software is full of duplicate/redundant apps
- No dedicated zoom camera

Climactic launches hybrid fund to get startups through the ‘valley of death’

It’s a challenge every startup faces: they’ve made a prototype and proven the thing works, but now have to sell the product and produce enough to get past the “valley of death” that kills so many companies.

“They are chicken and egg stuck,” Josh Felser, co-founder and managing partner of early-stage venture firm Climactic, told TechCrunch.

The hurdle is particularly high for companies making physical goods. Felser noticed it was a common occurrence among startups producing novel materials. Felser, who previously founded and invested in software startups, said the problem they faced seemed a bit unfair.

“Software companies sell at a negative margin all the time in the beginning, you know, Uber, Lyft, you can look at lots of different examples,” he said. “But for materials companies, they’re not allowed to do that. One of the questions I had is, ‘why is that?’”

Felser found that unlike software companies, which can quickly add more capacity from cloud service providers, materials startups face a market skeptical of their ability to scale up production without a guaranteed customer.

Felser decided to give them one.

Felser doesn’t run a company with a big budget for clever materials, but he knows a few. And as a climate tech investor, he knows more than a few startups that could benefit from a well-known customer.

Felser has been quietly working on a new project, called Material Scale, that brings the two sides together using a hybrid debt-equity investment vehicle to give materials startups a boost, TechCrunch has learned. Material Scale will initially focus on climate tech startups in the apparel industry.

Material Scale is betting on startups whose products are commercially ready and that could scale up if a customer purchases in bulk. Buyers will commit enough funds to cover the cost of the material at market price. Material Scale will fund the difference through a combination of loans and warrants in the startup.
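One way to picture the arrangement is as a simple split of a single purchase order. The Python sketch below is a hypothetical model and does not reflect Material Scale's actual terms. It makes three assumptions:

- The "difference" is the gap between the startup's per-unit production cost at its current scale and the market price the buyer pays.
- Material Scale covers that gap with a loan.
- The warrants are sized as a fixed "warrant coverage" percentage of the loan, a common venture-debt convention.

The class, the function, and all example numbers are made up for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PurchaseOrder:
    units: int
    market_price: float     # per-unit price the buyer commits to pay
    production_cost: float  # startup's per-unit cost at its current, pre-scale volume

def split_funding(order: PurchaseOrder, warrant_coverage: float) -> dict[str, float]:
    """Hypothetical split of one order between the buyer and Material Scale.

    The buyer pays market price. Any shortfall against the startup's production
    cost is covered by a loan, and warrants are sized as a percentage of that loan.
    """
    buyer_pays = order.units * order.market_price
    shortfall = max(0.0, order.units * order.production_cost - buyer_pays)
    return {
        "buyer_pays": buyer_pays,
        "material_scale_loan": shortfall,
        "warrant_coverage": shortfall * warrant_coverage,
    }

# Invented numbers: 10,000 units, $12 market price, $15 cost today, 10% warrant coverage.
order = PurchaseOrder(units=10_000, market_price=12.0, production_cost=15.0)
for item, amount in split_funding(order, warrant_coverage=0.10).items():
    print(f"{item}: ${amount:,.0f}")
```

In this toy example, the buyer's $120,000 covers the order at market price, and a $30,000 loan bridges the gap to production cost. If production cost ever falls to or below the market price, the loan drops to zero.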

“It’s really minimally dilutive,” Felser said.

Ralph Lauren is joining the platform as a buyer for the initial launch of Material Scale. Investor Structure Climate is joining Climactic as a general partner.

Money from purchase orders flows from the buyer through Material Scale to the startup. “In effect, we buy it and then simultaneously sell it,” Felser said.

The deals between Material Scale and the buyer and between Material Scale and the startup will be inked essentially at the same time.

“Once they sign the deals, this’ll be interesting because the value of the company has significantly changed because they’ve now got a buyer and they’ve got funding to achieve scale,” he said.

Material Scale hasn’t executed any deals yet, but Felser said he has large apparel manufacturers interested in participating and a long roster of startups that could use the funding. “The startups all want it,” he said. “We have a big list of companies that are candidates that we’re talking with.”

The first investments will come out of a special purpose vehicle totaling about $11 million. Felser hopes to branch out into other, similar markets like alternative fuels, eventually growing the Material Scale concept to nine figures.

He hopes other investors will steal his idea.

“We need more novel instruments like this to attack climate change,” he said. “We want to be nimble and be able to take advantage of opportunities when we see them and not just be doing the same old thing.”

OpenAI’s acquisition of OpenClaw signals the beginning of the end of the ChatGPT era

The chatbot era may have just received its obituary. Peter Steinberger, the creator of OpenClaw — the open-source AI agent that took the developer world by storm over the past month, raising concerns among enterprise security teams — announced over the weekend that he is joining OpenAI to “work on bringing agents to everyone.”

The OpenClaw project itself will transition to an independent foundation, though OpenAI is already sponsoring it and may have influence over its direction.

The move represents OpenAI’s most aggressive bet yet on the idea that the future of AI isn’t about what models can say, but what they can do. For IT leaders evaluating their AI strategy, the acquisition is a signal that the industry’s center of gravity is shifting decisively from conversational interfaces toward autonomous agents that browse, click, execute code, and complete tasks on users’ behalf.

From playground project to the hottest acquisition target in AI

OpenClaw’s path to OpenAI was anything but conventional. The project began life last year as “ClawdBot” — a nod to Anthropic’s Claude model that many developers were using to power it. Released in November 2025, it was the work of Steinberger, a veteran software developer with 13 years of experience building and running a company, who pivoted to exploring AI agents as what he described as a “playground project.”

The agent distinguished itself from previous attempts at autonomous AI — most notably the AutoGPT moment of 2023 — by combining several capabilities that had previously existed in isolation: tool access, sandboxed code execution, persistent memory, skills and easy integration with messaging platforms like Telegram, WhatsApp, and Discord. The result was an agent that didn’t just think, but acted.
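To make that combination concrete, here is a toy Python sketch of how tool access, persistent memory, reusable skills, and a chat-style entry point can fit together in one loop. It is not OpenClaw's code or architecture. The `ToyAgent` class, its tools, and the hard-coded `plan` method are all hypothetical; `plan` stands in for the language model a real agent would call. Sandboxed code execution is deliberately left out, because doing it safely needs OS-level isolation that a short example can't provide.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable

Tool = Callable[[str], str]  # a tool takes text in and returns text out

class ToyAgent:
    """Hypothetical agent loop: tools + persistent memory + skills + a chat entry point.

    `plan` is a hard-coded stand-in for the language model a real agent would
    query to decide which tools to call. This is not OpenClaw's code.
    """

    def __init__(self, memory_path: Path, tools: dict[str, Tool]):
        self.memory_path = memory_path
        self.tools = tools
        # Persistent memory: reloaded from disk, so it survives restarts.
        self.memory: list[str] = (
            json.loads(memory_path.read_text()) if memory_path.exists() else []
        )
        # A skill is a named, reusable sequence of tool calls.
        self.skills: dict[str, list[str]] = {"daily_note": ["clock", "save_note"]}

    def plan(self, message: str) -> list[str]:
        # Stand-in for the model: run a skill if the message names one, else echo.
        return self.skills.get(message.strip(), ["echo"])

    def handle_message(self, message: str) -> str:
        """What a messaging integration (Telegram, WhatsApp, Discord) would call."""
        result = message
        for tool_name in self.plan(message):
            result = self.tools[tool_name](result)  # each tool's output feeds the next
        self.memory.append(f"{message} -> {result}")
        self.memory_path.write_text(json.dumps(self.memory))
        return result

# Hypothetical tools with fixed outputs so the example is deterministic.
tools: dict[str, Tool] = {
    "echo": lambda text: text,
    "clock": lambda _: "2026-02-16",
    "save_note": lambda text: f"saved note for {text}",
}

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "memory.json"
    agent = ToyAgent(path, tools)
    print(agent.handle_message("daily_note"))  # saved note for 2026-02-16
    print(agent.handle_message("hello"))       # hello
    print(len(ToyAgent(path, tools).memory))   # 2: a fresh agent remembers both turns
```

In a real agent, `plan` would be a model call that can pick any registered tool, and that flexibility is where both the power and the security risk come from.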

In December 2025 and especially January and early February 2026, OpenClaw saw a rapid, “hockey stick” rate of adoption among AI “vibe coders” and developers impressed with its ability to complete tasks autonomously across applications and the entire PC environment, including carrying on messenger conversations with users and posting content on its own.

In his blog post announcing the move to OpenAI, Steinberger framed the decision in characteristically understated terms. He acknowledged the project could have become “a huge company” but said that wasn’t what interested him. Instead, he wrote that his next mission is to “build an agent that even my mum can use” — a goal he believes requires access to frontier models and research that only a major lab can provide.

Sam Altman confirmed the hire in a post stating that Steinberger would drive the next generation of personal agents at OpenAI.

Anthropic’s missed opportunity

The acquisition also raises uncomfortable questions for Anthropic. OpenClaw was originally built to work on Claude and carried a name — ClawdBot — that nodded to the model.

Rather than embrace the community building on its platform, Anthropic reportedly sent Steinberger a cease-and-desist letter, giving him a matter of days to rename the project and sever any association with Claude, or face legal action. The company even refused to allow the old domains to redirect to the renamed project.

The reasoning was not without merit — early OpenClaw deployments were rife with security issues, as users ran agents with root access and minimal safeguards on unsecured machines. But the heavy-handed legal approach meant Anthropic effectively pushed the most viral agent project in recent memory directly into the arms of its chief rival.

“Catching lightning in a bottle”: LangChain CEO weighs in

Harrison Chase, co-founder and CEO of LangChain, offered a candid assessment of the OpenClaw phenomenon and its acquisition in an exclusive interview for an upcoming episode of VentureBeat’s Beyond The Pilot podcast.

Chase drew a direct parallel between OpenClaw’s rise and the breakout moments that defined earlier waves of AI tooling. He noted that success in the space often comes down to timing and momentum rather than technical superiority alone. He pointed to his own experience with LangChain, as well as ChatGPT and AutoGPT, as examples of projects that captured the developer imagination at exactly the right moment — while similar projects that launched around the same time did not.

What set OpenClaw apart, Chase argued, was its willingness to be “unhinged” — a term he used affectionately. He revealed that LangChain told its own employees they could not install OpenClaw on company laptops due to the security risks involved. That very recklessness, he suggested, was what made the project resonate in ways that a more cautious lab release never could.

“OpenAI is never going to release anything like that. They can’t release anything like that,” Chase said. “But that’s what makes OpenClaw OpenClaw. And so if you don’t do that, you also can’t have an OpenClaw.”

Chase credited the project’s viral growth to a deceptively simple playbook: build in public and share your work on social media. He drew a parallel to the early days of LangChain, noting that both projects gained traction through their founders consistently shipping and tweeting about their progress, reaching the highly concentrated AI community on X.

On the strategic value of the acquisition, Chase was more measured. He acknowledged that every enterprise developer likely wants a “safe version of OpenClaw” but questioned whether acquiring the project itself gets OpenAI meaningfully closer to that goal. He pointed to Anthropic’s Claude Cowork as a product that is conceptually similar — more locked down, fewer connections, but aimed at the same vision.

Perhaps his most provocative observation was about what OpenClaw reveals about the nature of agents themselves. Chase argued that coding agents are effectively general-purpose agents, because the ability to write and execute code under the hood gives them capabilities far beyond what any fixed UI could provide. The user never sees the code — they just interact in natural language — but that’s what provides the agent with its expansive abilities.

He identified three key takeaways from the OpenClaw phenomenon that are shaping LangChain’s own roadmap: natural language as the primary interface, memory as a critical enabler that allows users to “build something without realizing they’re building something,” and code generation as the engine of general-purpose agency.

What this means for enterprise AI strategy

For IT decision-makers, the OpenClaw acquisition crystallizes several trends that have been building throughout 2025 and into 2026.

First, the competitive landscape for AI agents is consolidating rapidly. Meta recently acquired Manus AI, a full agent system, as well as Limitless AI, maker of a wearable device that captures life context for LLM integration. OpenAI’s own previous attempts at agentic products, including its Agents API, Agents SDK, and the Atlas agentic browser, failed to gain the traction that OpenClaw achieved seemingly overnight.

Second, the gap between what’s possible in open-source experimentation and what’s deployable in enterprise settings remains significant. OpenClaw’s power came precisely from the lack of guardrails that would be unacceptable in a corporate environment. The race to build the “safe enterprise version of OpenClaw,” as Chase put it, is now the central question facing every platform vendor in the space.

Third, the acquisition underscores that the most important AI interfaces may not come from the labs themselves. Just as the most impactful mobile apps didn’t come from Apple or Google, the killer agent experiences may emerge from independent builders who are willing to push boundaries the major labs cannot. IT decision-makers have to be asking themselves currently

Will the claw close?

The open-source community’s central concern is whether OpenClaw will remain genuinely open under OpenAI’s umbrella.

Steinberger has committed to moving the project to a foundation structure, and Altman has publicly stated the project will stay open source.

But OpenAI’s own complicated history with the word “open” — the company is currently facing litigation over its transition from a nonprofit to a for-profit entity — makes the community understandably skeptical.

For now, the acquisition marks a definitive moment: the industry’s focus has officially shifted from what AI can say to what AI can do.

Whether OpenClaw becomes the foundation of OpenAI’s agent platform or a footnote like AutoGPT before it will depend on whether the magic that made it viral — the unhinged, boundary-pushing, security-be-damned energy of an independent hacker — can survive inside the walls of a $300 billion company.

As Steinberger signed off on his announcement: “The claw is the law.”

Data Center Sustainability Metrics: Hidden Emissions

In 2024, Google claimed that its data centers are 1.5x more energy efficient than the industry average. In 2025, Microsoft committed billions to nuclear power for AI workloads. The data center industry tracks power usage effectiveness to three decimal places and optimizes water usage intensity with machine precision. We report direct emissions and energy emissions with religious fervor.

These are laudable advances, but these metrics account for only 30 percent of total emissions from the IT sector. The majority of the emissions are not directly from data centers or the energy they use, but from the end-user devices that actually access the data centers, emissions due to manufacturing the hardware, and software inefficiencies. We are frantically optimizing less than a third of the IT sector’s environmental impact, while the bulk of the problem goes unmeasured.

Incomplete regulatory frameworks are part of the problem. In Europe, the Corporate Sustainability Reporting Directive (CSRD) now requires 11,700 companies to report emissions using these incomplete frameworks. The next phase of the directive, covering 40,000+ additional companies, was originally scheduled for 2026 (but is likely delayed to 2028). Internationally, the standards committee responsible for IT sustainability metrics (ISO/IEC JTC 1/SC 39) is actively revising its standards through 2026, with a key plenary meeting in May 2026.

The time to act is now. If we don’t fix the measurement frameworks, we risk locking in incomplete data collection and optimizing a fraction of what matters for the next 5 to 10 years, before the next major standards revision.

The limited metrics

Walk into any modern data center and you’ll see sustainability instrumentation everywhere. Power usage effectiveness (PUE) monitors track every watt. Water usage effectiveness (WUE) systems measure water consumption down to the gallon. Sophisticated monitoring captures everything from server utilization to cooling efficiency to renewable energy percentages.

But here’s what those measurements miss: End-user devices globally emit 1.5 to 2 times more carbon than all data centers combined, according to McKinsey’s 2022 report. The smartphones, laptops, and tablets we use to access those ultra-efficient data centers are the bigger problem.

On the conservative end of the range from McKinsey’s report, devices emit 1.5 times as much as data centers. That means that data centers make up 40 percent of total IT emissions, while devices make up 60 percent.

On top of that, approximately 75 percent of device emissions occur not during use, but during manufacturing—this is so-called embodied carbon. For data centers, only 40 percent is embodied carbon, and 60 percent comes from operations (as measured by PUE).

Putting this together, data center operations, as measured by PUE, account for only 24 percent of the total emissions. Data center embodied carbon is 16 percent, device embodied carbon is 45 percent, and device operation is 15 percent.
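The arithmetic behind those four numbers can be reproduced in a few lines. This Python sketch uses the article's inputs:

- Devices emit 1.5 times as much as data centers.
- 75 percent of device emissions are embodied.
- 40 percent of data center emissions are embodied.

The function name and parameters are illustrative.

```python
def it_emission_shares(device_to_dc_ratio: float = 1.5,
                       device_embodied_frac: float = 0.75,
                       dc_embodied_frac: float = 0.40) -> dict[str, float]:
    """Split total IT emissions into four buckets, each as a fraction of the total."""
    dc_share = 1 / (1 + device_to_dc_ratio)  # 1 / 2.5 = 0.40 with the defaults
    device_share = 1 - dc_share              # 0.60 with the defaults
    return {
        "data center operations": dc_share * (1 - dc_embodied_frac),
        "data center embodied": dc_share * dc_embodied_frac,
        "device embodied": device_share * device_embodied_frac,
        "device operations": device_share * (1 - device_embodied_frac),
    }

for bucket, share in it_emission_shares().items():
    print(f"{bucket}: {share:.0%}")
```

Plugging in the upper end of McKinsey's range (a ratio of 2.0) shrinks the data center share to one third. Data center operations then fall to 20 percent of the total.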

Under the EU’s current CSRD framework, companies must report their emissions in three categories: direct emissions from owned sources, indirect emissions from purchased energy, and a third category for everything else.

This “everything else” category does include device emissions and embodied carbon. However, those emissions are reported as aggregate totals broken down by accounting category (Capital Goods, Purchased Goods and Services, Use of Sold Products) but not by product type. How much comes from end-user devices versus data center infrastructure, or employee laptops versus network equipment, remains murky and therefore unoptimized.

Embodied carbon and hardware reuse

Manufacturing a single smartphone generates approximately 50 kg CO2 equivalent (CO2e). For a laptop, it’s 200 kg CO2e. With 1 billion smartphones replaced annually, that’s 50 million tonnes of CO2e per year just from smartphone manufacturing, before anyone even turns them on. On average, smartphones are replaced every 2 years, laptops every 3 to 4 years, and printers every 5 years. Data center servers are replaced approximately every 5 years.

Extending smartphone lifecycles to 3 years instead of 2 would reduce annual manufacturing emissions by 33 percent. At scale, this dwarfs data center optimization gains.
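Both the 50-million-tonne figure and the 33 percent reduction follow from steady-state arithmetic. If the number of phones in use stays constant, annual replacements equal the installed base divided by the device lifetime. The Python sketch below assumes exactly that. The roughly 2 billion installed base is not stated in the article. It is implied by 1 billion replacements a year on a 2-year cycle.

```python
KG_CO2E_PER_SMARTPHONE = 50        # manufacturing footprint, from the article
REPLACEMENTS_PER_YEAR = 1e9        # smartphones replaced annually, from the article
CURRENT_LIFETIME_YEARS = 2

# Assumption: a constant installed base, implied by 1e9 replacements on a 2-year cycle.
INSTALLED_BASE = REPLACEMENTS_PER_YEAR * CURRENT_LIFETIME_YEARS

def annual_manufacturing_tonnes(lifetime_years: float) -> float:
    """Steady-state manufacturing emissions: (installed base / lifetime) phones per year."""
    phones_per_year = INSTALLED_BASE / lifetime_years
    return phones_per_year * KG_CO2E_PER_SMARTPHONE / 1000  # kg -> tonnes

today = annual_manufacturing_tonnes(2)
extended = annual_manufacturing_tonnes(3)
print(f"2-year cycle: {today / 1e6:.1f} million t CO2e per year")
print(f"3-year cycle: {extended / 1e6:.1f} million t CO2e per year")
print(f"Reduction: {1 - extended / today:.0%}")
```

The reduction is 1 - 2/3 whatever the per-device footprint, so the 33 percent figure depends only on the lifetime change and the constant-installed-base assumption.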

There are programs geared towards reusing old components that are still functional and integrating them into new servers. GreenSKUs and similar initiatives show 8 percent reductions in embodied carbon are achievable. But these remain pilot programs, not systematic approaches. And critically, they’re measured only in a data center context, not across the entire IT stack.

Imagine applying the same circular economy principles to devices. With over 2 billion laptops in existence globally and 2-3-year replacement cycles, even modest lifespan extensions create massive emission reductions.

Yet data center reuse gets measured, reported, and optimized. Device reuse doesn’t, because the frameworks don’t require it.

The invisible role of software

In my work leading load balancer infrastructure across IBM Cloud, I see how software architecture decisions ripple through energy consumption. Inefficient code doesn’t just slow things down; it drives up both data center power consumption and device battery drain.

For example, University of Waterloo researchers showed that changing just 30 lines of code could cut data center energy use by 30 percent. From my perspective, this result is not an anomaly; it’s typical. Bad software architecture forces unnecessary data transfers, redundant computations, and excessive resource use. But unlike data center efficiency, there’s no commonly accepted metric for software efficiency.

This matters more now than ever. With AI workloads driving massive data center expansion—projected to consume 6.7-12 percent of total U.S. electricity by 2028, according to Lawrence Berkeley National Laboratory—software efficiency becomes critical.

What needs to change

The solution isn’t to stop measuring data center efficiency. It’s to measure device sustainability with the same rigor. Specifically, standards bodies (particularly ISO/IEC JTC 1/SC 39 WG4: Holistic Sustainability Metrics) should extend frameworks to include device lifecycle tracking, software efficiency metrics, and hardware reuse standards.

To track device lifecycles, we need standardized reporting of device embodied carbon, broken out by device category. One aggregate number in an “everything else” category is insufficient. We need specific device categories with manufacturing emissions and replacement cycles visible.

To include software efficiency, I advocate developing a PUE-equivalent for software, such as energy per transaction, per API call, or per user session. This needs to be a reportable metric under sustainability frameworks so companies can demonstrate software optimization gains.
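A short sketch shows why PUE alone can't capture software gains. Using hypothetical meter readings, the Python below computes PUE (total facility energy divided by IT equipment energy) alongside a per-request metric of the kind proposed above. `EnergyReading`, `wh_per_api_call`, and all numbers are illustrative and do not come from any existing standard.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EnergyReading:
    facility_kwh: float  # everything the site draws: IT load, cooling, lighting, losses
    it_kwh: float        # energy delivered to IT equipment alone
    api_calls: int       # requests served over the same period

def pue(r: EnergyReading) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    return r.facility_kwh / r.it_kwh

def wh_per_api_call(r: EnergyReading) -> float:
    """Hypothetical software metric: facility energy per request, in watt-hours."""
    return r.facility_kwh * 1000 / r.api_calls

# Invented readings: the same workload before and after a software optimization
# that trims IT energy by 20%, with cooling overhead scaling proportionally.
before = EnergyReading(facility_kwh=1200.0, it_kwh=1000.0, api_calls=4_000_000)
after = EnergyReading(facility_kwh=960.0, it_kwh=800.0, api_calls=4_000_000)

for label, r in (("before", before), ("after", after)):
    print(f"{label}: PUE {pue(r):.2f}, {wh_per_api_call(r):.2f} Wh per API call")
```

In this example, the software change leaves PUE unchanged at 1.20, while energy per API call falls from 0.30 Wh to 0.24 Wh. Only the second metric registers the improvement.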

To encourage hardware reuse, we need to systematize reuse metrics across the full IT stack—servers and devices. This includes tracking repair rates, developing large-scale refurbishment programs, and tracking component reuse with the same detail currently applied to data center hardware.

To put it all together, we need a unified IT emission-tracking dashboard. CSRD reporting should show device embodied carbon alongside data center operational emissions, making the full IT sustainability picture visible at a glance.

These aren’t radical changes; they’re extensions of measurement principles already proven in the data center context. The first step is acknowledging what we’re not measuring. The second is building the frameworks to measure it. And the third is demanding that companies report the complete picture: data centers and devices, servers and smartphones, infrastructure and software.

Because you can’t fix what you can’t see. And right now, we’re not seeing 70 percent of the problem.

Anthropic’s Sonnet 4.6 matches flagship AI performance at one-fifth the cost, accelerating enterprise adoption

Anthropic on Tuesday released Claude Sonnet 4.6, a model that amounts to a seismic repricing event for the AI industry. It delivers near-flagship intelligence at mid-tier cost, and it lands squarely in the middle of an unprecedented corporate rush to deploy AI agents and automated coding tools.

The model is a full upgrade across coding, computer use, long-context reasoning, agent planning, knowledge work, and design. It features a 1M token context window in beta. It is now the default model in claude.ai and Claude Cowork, and pricing holds steady at $3/$15 per million tokens — the same as its predecessor, Sonnet 4.5.

That pricing detail is the headline that matters most. Anthropic’s flagship Opus models cost $15/$75 per million tokens — five times the Sonnet price. Yet performance that would have previously required reaching for an Opus-class model — including on real-world, economically valuable office tasks — is now available with Sonnet 4.6. For the thousands of enterprises now deploying AI agents that make millions of API calls per day, that math changes everything.

Why the cost of running AI agents at scale just dropped dramatically

To understand the significance of this release, you need to understand the moment it arrives in. The past year has been dominated by the twin phenomena of “vibe coding” and agentic AI. Claude Code — Anthropic’s developer-facing terminal tool — has become a cultural force in Silicon Valley, with engineers building entire applications through natural-language conversation. The New York Times profiled its meteoric rise in January. The Verge recently declared that Claude Code is having a genuine “moment.” OpenAI, meanwhile, has been waging its own offensive with Codex desktop applications and faster inference chips.

The result is an industry where AI models are no longer evaluated in isolation. They are evaluated as the engines inside autonomous agents — systems that run for hours, make thousands of tool calls, write and execute code, navigate browsers, and interact with enterprise software. Every dollar spent per million tokens gets multiplied across those thousands of calls. At scale, the difference between $15 and $3 per million input tokens is not incremental. It is transformational.

The benchmark table Anthropic released paints a striking picture. On SWE-bench Verified, the industry-standard test for real-world software coding, Sonnet 4.6 scored 79.6% — nearly matching Opus 4.6’s 80.8%. On agentic computer use (OSWorld-Verified), Sonnet 4.6 scored 72.5%, essentially tied with Opus 4.6’s 72.7%. On office tasks (GDPval-AA Elo), Sonnet 4.6 actually scored 1633, surpassing Opus 4.6’s 1606. On agentic financial analysis, Sonnet 4.6 hit 63.3%, beating every model in the comparison, including Opus 4.6 at 60.1%.
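To make the spread easier to read, the sketch below tabulates the four head-to-head scores quoted above and computes the gap between the two models on each. It is illustrative arithmetic on the article's figures only; the dictionary layout and the `score_gaps` helper are hypothetical. Three scores are percentages and one (GDPval-AA) is an Elo rating, so that gap is in Elo points rather than percentage points.

```python
# Head-to-head scores as quoted in the article: (Sonnet 4.6, Opus 4.6).
# Three are percentages; GDPval-AA is an Elo rating, so its gap is in Elo points.
BENCHMARKS = {
    "SWE-bench Verified (%)": (79.6, 80.8),
    "OSWorld-Verified (%)": (72.5, 72.7),
    "GDPval-AA (Elo)": (1633, 1606),
    "Agentic financial analysis (%)": (63.3, 60.1),
}


def score_gaps(benchmarks: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Return Sonnet minus Opus for each benchmark; positive means Sonnet leads."""
    return {
        name: round(sonnet - opus, 1)
        for name, (sonnet, opus) in benchmarks.items()
    }


if __name__ == "__main__":
    for name, gap in score_gaps(BENCHMARKS).items():
        leader = "Sonnet 4.6" if gap > 0 else "Opus 4.6"
        print(f"{name:<32} {gap:+6.1f}  ({leader} ahead)")
```

Run as-is, it shows Opus 4.6 ahead by 1.2 and 0.2 points on the coding and computer-use benchmarks, and Sonnet 4.6 ahead by 27 Elo points on GDPval-AA and 3.2 points on financial analysis.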

The gaps are small, but the implication is not. In many of the categories enterprises care about most, Sonnet 4.6 matches or beats models that cost five times as much to run. An enterprise running an AI agent that processes 10 million tokens per day was previously forced to choose between inferior results at lower cost and superior results at rapidly scaling expense. Sonnet 4.6 largely eliminates that trade-off.
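The cost side of the argument is simple multiplication. Below is a minimal sketch, assuming the per-million-token prices quoted earlier ($3/$15 for Sonnet 4.6, $15/$75 for Opus) and a hypothetical 10-million-token daily workload split into 8 million input and 2 million output tokens. The split, the `Pricing` class, and `daily_cost` are illustrative assumptions, and the calculation ignores any discounts a real bill might include.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in US dollars, as quoted in the article."""
    input_per_m: float
    output_per_m: float


SONNET_4_6 = Pricing(input_per_m=3.0, output_per_m=15.0)
OPUS = Pricing(input_per_m=15.0, output_per_m=75.0)


def daily_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one day's traffic at the given token volumes."""
    return (input_tokens / 1_000_000) * pricing.input_per_m + (
        output_tokens / 1_000_000
    ) * pricing.output_per_m


if __name__ == "__main__":
    # Hypothetical workload: 10M tokens/day, split 8M input / 2M output.
    in_tok, out_tok = 8_000_000, 2_000_000
    sonnet = daily_cost(SONNET_4_6, in_tok, out_tok)
    opus = daily_cost(OPUS, in_tok, out_tok)
    print(f"Sonnet 4.6: ${sonnet:,.2f}/day (${sonnet * 365:,.0f}/year)")
    print(f"Opus:       ${opus:,.2f}/day (${opus * 365:,.0f}/year)")
    print(f"Ratio: {opus / sonnet:.1f}x")
```

With this split the sketch reports $54.00 versus $270.00 per day. Because both input and output prices differ by the same factor, the ratio stays at 5x whatever the input/output mix.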

In Claude Code, early testing found that users preferred Sonnet 4.6 over Sonnet 4.5 roughly 70% of the time. Users even preferred Sonnet 4.6 to Opus 4.5, Anthropic’s frontier model from November, 59% of the time. They rated Sonnet 4.6 as significantly less prone to over-engineering and “laziness,” and meaningfully better at instruction following. They reported fewer false claims of success, fewer hallucinations, and more consistent follow-through on multi-step tasks.

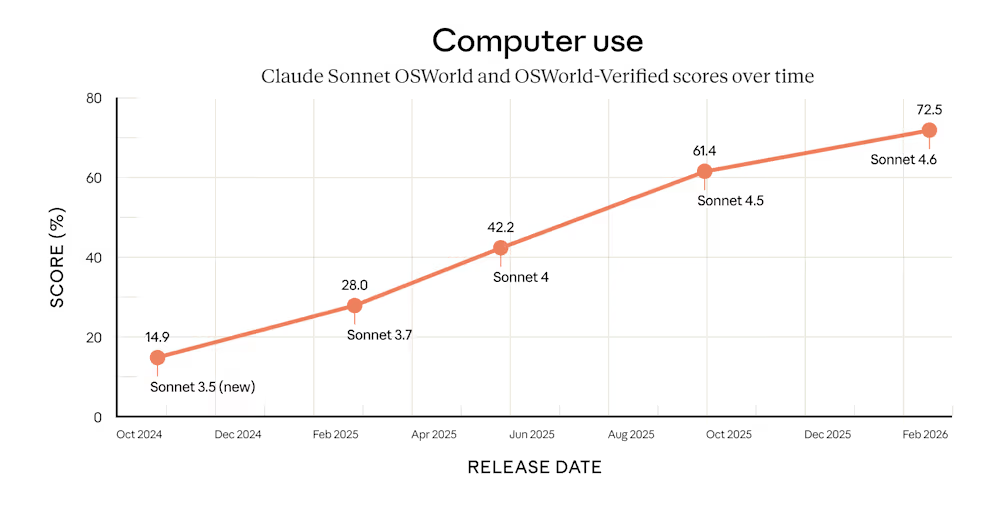

How Claude’s computer use abilities went from ‘experimental’ to near-human in 16 months

One of the most dramatic storylines in the release is Anthropic’s progress on computer use — the ability of an AI to operate a computer the way a human does, clicking a mouse, typing on a keyboard, and navigating software that lacks modern APIs.

When Anthropic first introduced this capability in October 2024, the company acknowledged it was “still experimental — at times cumbersome and error-prone.” The numbers since then tell a remarkable story: on OSWorld, Claude Sonnet 3.5 scored 14.9% in October 2024. Sonnet 3.7 reached 28.0% in February 2025. Sonnet 4 hit 42.2% by June. Sonnet 4.5 climbed to 61.4% in October. Now Sonnet 4.6 has reached 72.5% — nearly a fivefold improvement in 16 months.
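The "nearly fivefold" claim can be checked directly from the five scores listed above. The small sketch below uses only those figures (the list layout and the `growth_summary` helper are illustrative) to compute the overall multiple and the point gain at each release.

```python
# OSWorld scores for each Sonnet release, in the order the article lists them (percent).
OSWORLD = [
    ("Sonnet 3.5", 14.9),
    ("Sonnet 3.7", 28.0),
    ("Sonnet 4", 42.2),
    ("Sonnet 4.5", 61.4),
    ("Sonnet 4.6", 72.5),
]


def growth_summary(
    scores: list[tuple[str, float]],
) -> tuple[float, list[tuple[str, float]]]:
    """Return (overall multiple, per-release gains in percentage points)."""
    overall = scores[-1][1] / scores[0][1]
    gains = [
        (name, round(score - prev_score, 1))
        for (_, prev_score), (name, score) in zip(scores, scores[1:])
    ]
    return overall, gains


if __name__ == "__main__":
    multiple, gains = growth_summary(OSWORLD)
    for name, gain in gains:
        print(f"{name:<11} +{gain:.1f} points")
    print(f"Overall: {multiple:.2f}x the October 2024 score")
```

The overall multiple comes out at about 4.87, the "nearly fivefold" figure; the largest single jump in the sequence is the 19.2-point gain from Sonnet 4 to Sonnet 4.5.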

This matters because computer use is the capability that unlocks the broadest set of enterprise applications for AI agents. Almost every organization has legacy software — insurance portals, government databases, ERP systems, hospital scheduling tools — that was built before APIs existed. A model that can simply look at a screen and interact with it opens all of these to automation without building bespoke connectors.

Jamie Cuffe, CEO of Pace, said Sonnet 4.6 hit 94% on their complex insurance computer use benchmark, the highest of any Claude model tested. “It reasons through failures and self-corrects in ways we haven’t seen before,” Cuffe said in a statement sent to VentureBeat. Will Harvey, co-founder of Convey, called it “a clear improvement over anything else we’ve tested in our evals.”

The safety dimension of computer use also got attention. Anthropic noted that computer use poses prompt injection risks — malicious actors hiding instructions on websites to hijack the model — and said its evaluations show Sonnet 4.6 is a major improvement over Sonnet 4.5 in resisting such attacks. For enterprises deploying agents that browse the web and interact with external systems, that hardening is not optional.

Enterprise customers say the model closes the gap between Sonnet and Opus pricing tiers

The customer reaction has been unusually specific about cost-performance dynamics. Multiple early testers explicitly described Sonnet 4.6 as eliminating the need to reach for the more expensive Opus tier.

Caitlin Colgrove, CTO of Hex Technologies, said the company is moving the majority of its traffic to Sonnet 4.6, noting that with adaptive thinking and high effort, “we see Opus-level performance on all but our hardest analytical tasks with a more efficient and flexible profile. At Sonnet pricing, it’s an easy call for our workloads.”

Ben Kus, CTO of Box, said the model outperformed Sonnet 4.5 in heavy reasoning Q&A by 15 percentage points across real enterprise documents. Michele Catasta, President of Replit, called the performance-to-cost ratio “extraordinary.” Ryan Wiggins of Mercury Banking put it more bluntly: “Claude Sonnet 4.6 is faster, cheaper, and more likely to nail things on the first try. That combination was a surprising combination of improvements, and we didn’t expect to see it at this price point.”

The coding improvements resonate particularly given Claude Code’s dominance in the developer tools market. David Loker, VP of AI at CodeRabbit, said the model “punches way above its weight class for the vast majority of real-world PRs.” Leo Tchourakov of Factory AI said the team is “transitioning our Sonnet traffic over to this model.” GitHub’s VP of Product, Joe Binder, confirmed the model is “already excelling at complex code fixes, especially when searching across large codebases is essential.”

Brendan Falk, Founder and CEO of Hercules, went further: “Claude Sonnet 4.6 is the best model we have seen to date. It has Opus 4.6 level accuracy, instruction following, and UI, all for a meaningfully lower cost.”

A simulated business competition reveals how AI agents plan over months, not minutes

Buried in the technical details is a capability that hints at where autonomous AI agents are heading. Sonnet 4.6’s 1M token context window can hold entire codebases, lengthy contracts, or dozens of research papers in a single request. Anthropic says the model reasons effectively across all that context — a claim the company demonstrated through an unusual evaluation.

The Vending-Bench Arena tests how well a model can run a simulated business over time, with different AI models competing against each other for the biggest profits. Without human prompting, Sonnet 4.6 developed a novel strategy: it invested heavily in capacity for the first ten simulated months, spending significantly more than its competitors, and then pivoted sharply to focus on profitability in the final stretch. The model ended its 365-day simulation at approximately $5,700 in balance, compared to Sonnet 4.5’s roughly $2,100.

This kind of multi-month strategic planning, executed autonomously, represents a qualitatively different capability than answering questions or generating code snippets. It is the type of long-horizon reasoning that makes AI agents viable for real business operations — and it helps explain why Anthropic is positioning Sonnet 4.6 not just as a chatbot upgrade, but as the engine for a new generation of autonomous systems.

Anthropic’s Sonnet 4.6 arrives as the company expands into enterprise markets and defense

This release does not arrive in a vacuum. Anthropic is in the middle of the most consequential stretch in its history, and the competitive landscape is intensifying on every front.

On the same day as this launch, TechCrunch reported that Indian IT giant Infosys announced a partnership with Anthropic to build enterprise-grade AI agents, integrating Claude models into Infosys’s Topaz AI platform for banking, telecoms, and manufacturing. Anthropic CEO Dario Amodei told TechCrunch there is “a big gap between an AI model that works in a demo and one that works in a regulated industry,” and that Infosys helps bridge it. TechCrunch also reported that Anthropic opened its first India office in Bengaluru, and that India now accounts for about 6% of global Claude usage, second only to the U.S. The company, which CNBC reported is valued at $183 billion, has been expanding its enterprise footprint rapidly.

Meanwhile, Anthropic president Daniela Amodei told ABC News last week that AI would make humanities majors “more important than ever,” arguing that critical thinking skills would become more valuable as large language models master technical work. It is the kind of statement a company makes when it believes its technology is about to reshape entire categories of white-collar employment.

The competitive picture for Sonnet 4.6 is also notable. The model outperforms Google’s Gemini 3 Pro and OpenAI’s GPT-5.2 on multiple benchmarks. GPT-5.2 trails on agentic computer use (38.2% vs. 72.5%) and agentic financial analysis (59.0% vs. 63.3%), though it edges ahead on agentic search (77.9% vs. 74.7% for Sonnet 4.6’s non-Pro score). Gemini 3 Pro shows competitive performance on visual reasoning and multilingual benchmarks, but falls behind on the agentic categories where enterprise investment is surging.

The broader takeaway may not be about any single model. It is about what happens when Opus-class intelligence becomes available for a few dollars per million tokens rather than a few tens of dollars. Companies that were cautiously piloting AI agents with small deployments now face a fundamentally different cost calculus. The agents that were too expensive to run continuously in January are suddenly affordable in February.

Claude Sonnet 4.6 is available now on all Claude plans, Claude Cowork, Claude Code, the API, and all major cloud platforms. Anthropic has also upgraded its free tier to Sonnet 4.6 by default. Developers can access it immediately using claude-sonnet-4-6 via the Claude API.
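For developers, calling the model is a single HTTP request. The sketch below only builds that request with Python's standard library, assuming Anthropic's Messages API endpoint (`/v1/messages`), its `x-api-key` and `anthropic-version` headers, and the `claude-sonnet-4-6` model ID given above. The `build_request` helper and the prompt are hypothetical, and actually sending the request requires a valid API key; Anthropic's official SDKs wrap the same call.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_request(
    prompt: str,
    api_key: str,
    model: str = "claude-sonnet-4-6",
    max_tokens: int = 1024,
) -> urllib.request.Request:
    """Build (but do not send) a Messages API request for a single user turn."""
    body = {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("Summarise this contract.", os.environ.get("ANTHROPIC_API_KEY", ""))
    print(req.get_method(), req.full_url)
    # Sending is left commented out: it needs a valid key and network access.
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["content"][0]["text"])
```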

Tech

A YouTuber’s $3M Movie Nearly Beat Disney’s $40M Thriller at the Box Office

Mark Fischbach, the YouTube creator known as Markiplier who has spent nearly 15 years building an audience of more than 38 million subscribers by playing indie-horror video games on camera, has pulled off something that most independent filmmakers never manage — a self-financed, self-distributed debut feature that has grossed more than $30 million domestically against a $3 million budget.

Iron Lung, a 127-minute sci-fi film adapted from a video game, which Fischbach wrote, directed, starred in, and edited himself, opened to $18.3 million in its first weekend, nearly matching the $19.1 million debut of Send Help, a $40 million thriller from Disney-owned 20th Century Studios. It has since doubled that figure worldwide in just two weeks. Fischbach declined deals from traditional distributors and instead spent months booking theaters privately, encouraging fans to reserve tickets online. When prospective viewers found the film wasn’t screening in their city, they called local cinemas to request it, eventually landing Iron Lung on more than 3,000 screens across North America, all without a single paid media campaign.

Tech

How the uninvestable is becoming investable

Venture capital has long avoided ‘hard’ sectors such as government, defence, energy, manufacturing, and hardware, viewing them as uninvestable because startups have limited scope to challenge incumbents. Instead, investors have prioritised fast-moving and lightly regulated software markets with lower barriers to entry.

End users in these hard industries have paid the price, as a lack of innovation funding has left them stuck with incumbent providers that continue to deliver clunky, unintuitive solutions that are difficult to migrate from.

However, that perception is now shifting. Investors are responding to new market dynamics within complex industries and the evolving methods startups are using to outperform incumbents.

Government technology spending more than doubled between 2021 and 2025, while defence technology more than doubled in 2025 alone, and we see similar trends in robotics, industrial technology, and healthcare. This signals a clear change in investor mindset as AI-first approaches are changing adoption cycles.

Shifting investor priorities

Historically, those sectors had been viewed as incompatible with venture capital norms: slow procurement cycles, strict regulation, heavy operational and capital requirements, as well as deep integration into physical systems.

For example, on a global level, public procurement can take years, constrained by budgeting cycles, legislation and accountability frameworks. Energy projects must comply with regulatory regimes and national permitting structures, and infrastructure and hardware deployments require extensive certification and long engineering cycles.

Government and public-sector buyers also tend to prioritise reliability, compliance, track record, and legacy relationships over speed, meaning major contracts often go to incumbents rather than startups. Similar dynamics exist in construction, mining, logistics, and manufacturing, all sectors that are still dominated by legacy vendors, complex supply chains, and thin operational margins.

That view is now changing. Capital is increasingly flowing into sectors once seen as too bureaucratic or operationally complex. Beyond those headline sectors, construction tech, industrial automation, logistics software, healthcare, and public-sector tooling are attracting record levels of early-stage and growth capital.

The key question is: what is driving this shift in investor thinking?

What’s causing the change

The shift in investor priorities is partly driven by macro and geopolitical pressures. Supply-chain disruption, energy insecurity and infrastructure fragility have elevated industrial resilience to a national priority. Governments are investing heavily in grid modernisation, logistics networks, and critical infrastructure, while public institutions face mounting pressure to digitise procurement, compliance, and workflow systems.

What were once considered slow, bureaucratic markets are now seen as structurally supported by policy and long-term demand.

AI is the driving force and is reshaping traditionally hard industries. By lowering the cost of building sophisticated software and enabling immediate performance gains with shorter adoption cycles, AI allows startups to compete with incumbents in sectors such as construction, mining, manufacturing, logistics, and public services from day one.

As software becomes easier to replicate, defensibility is shifting toward operational depth, substantial UI/UX improvements, speed to market, and more seamless integration into complex real-world systems.

Finally, saturation in horizontal SaaS has pushed investors to look elsewhere for differentiated returns. Crowded software categories offer diminishing breakout potential and are often threatened by the fast pace of innovation at OpenAI and Anthropic, whereas regulated and infrastructure-heavy sectors offer less competition, stronger pricing power, higher switching costs, and gigantic total addressable markets (TAMs).

SAP, with a $200B market cap, is just one example; the same goes for Caterpillar, Siemens, large utilities, Big Pharma, and many others.

Regulation, once viewed as a deterrent, is increasingly understood as a moat. Startups that successfully navigate procurement frameworks, compliance regimes, and industry standards build advantages that are difficult for new entrants to replicate and cannot be vibe-coded.

Founders leading the way

Legacy players, while trying to adopt new AI tooling as quickly as they can, still struggle to adapt their workflows and scale innovation as quickly as younger companies can. Their dominance has relied on the high cost of switching away from their solutions, but as attention and investment shift toward hard sectors, incumbents can no longer rely solely on their brand reputation.

Even industry leaders like Salesforce are relying more on acquisitions to keep up, showing how new technology and easier alternatives are lowering switching costs and making it harder for established companies to hold onto customers.

Startups are also increasingly being built by innovative industry specialists, who aren’t confined by the same limitations as legacy players. Many startup founders in defence, energy, healthcare, and government procurement come directly from these industries or have unique insight into their structural weaknesses.

Startups moving the narrative

The next wave of disruption will hit legacy companies hard, as startups prove they can innovate with the speed, flexibility and focus that incumbents often lack. In sectors long protected by regulation or procurement friction, younger companies are demonstrating that modern software, AI, and new business models can unlock performance improvements that established players struggle to match. Investor playbooks are already evolving, and that shift will likely accelerate in the year ahead.

At the same time, the total addressable market ceiling has been lifted. By moving beyond narrow software categories and into the physical economy, startups are targeting markets measured not in billions, but in trillions. As a result, we should expect more $100 billion companies to be built in this cycle. It’s no longer just about building better software.

It’s about rebuilding foundational sectors of the global economy.

Tech

Garmin Partners With Giant Bicycles India to Bring Cycling Tech to Retail Stores

To strengthen its appeal to fitness enthusiasts, Garmin has announced a new retail partnership with Giant Bicycles India, bringing its cycling-focused wearables and performance tech to select Giant stores across the country. As part of the first phase, Garmin products are now available at select Giant retail stores in Mumbai, Pune, and Jaipur. Customers visiting these outlets can explore Garmin’s cycling ecosystem alongside Giant’s bicycle range.

Garmin’s Cycling Ecosystem Comes to Giant Stores

Garmin’s portfolio spans a wide range of cycling-focused products, including advanced bike computers with detailed ride tracking and mapping, TACX indoor trainers, GPS smartwatches, power meters, and power pedals. The company also offers smart bike lights with rear-view radar alerts, performance trackers, and 4K ride-recording cameras designed for documenting rides.

In particular, the TACX indoor trainers allow cyclists to replicate real-world terrain indoors using their existing Giant bicycles. Meanwhile, Garmin’s cycling computers and performance tools provide detailed insights into speed, cadence, heart rate, endurance, and navigation. All these features are increasingly sought after by serious riders and training-focused enthusiasts.

Deepak Raina, Director at AMIT GPS & Navigation LLP, said, “Data has become central to how cyclists understand and improve their performance. Access to accurate ride metrics, training insights, and navigation information helps riders make better decisions and measure real progress over time without compromising on the safety on roads by increasing situational awareness. Through our partnership with Giant Bicycles India, we are bringing reliable, data-driven technology closer to cyclists at the point where they invest in their riding journey.”

Adding to this, Varun Bagadiya, Director at Giant Bicycles India, said, “We are pleased to partner with Garmin, a brand synonymous with precision and performance. Offering Garmin’s full product portfolio at our stores allows us to deliver a more comprehensive experience to cyclists, supporting them not just with world-class bicycles but also with advanced performance technology.”

Tech

Anthropic releases Sonnet 4.6 | TechCrunch

Anthropic has released a new version of its mid-size Sonnet model, keeping pace with the company’s four-month update cycle. In a post announcing the new model, Anthropic emphasized improvements in coding, instruction-following, and computer use.

Sonnet 4.6 will be the default model for Free and Pro plan users.

The beta release of Sonnet 4.6 will include a context window of 1 million tokens, twice the size of the largest window previously available for Sonnet. Anthropic described the new context window as “enough to hold entire codebases, lengthy contracts, or dozens of research papers in a single request.”

The release comes just two weeks after the launch of Opus 4.6, with an updated Haiku model likely to follow in the coming weeks.

The launch comes with a new set of record benchmark scores, including OSWorld for computer use and SWE-bench for software engineering. But perhaps the most impressive is its 60.4% score on ARC-AGI-2, meant to measure skills specific to human intelligence. The score puts Sonnet 4.6 above most comparable models, although it still trails models like Opus 4.6, Gemini 3 Deep Think, and one refined version of GPT-5.2.
