Longevity has long captivated scientists, who are currently focusing on extending the number of years people can live in good health.

To do this, their research has homed in on what it takes to prevent cancer and other diseases while ageing.

So far, it’s become apparent that while genetics are an important part of the puzzle, lifestyle choices significantly impact who makes it past 80 in good health.

Social media has also become a hub for valuable insights, with users frequently sharing the health secrets of their longest-living relatives.

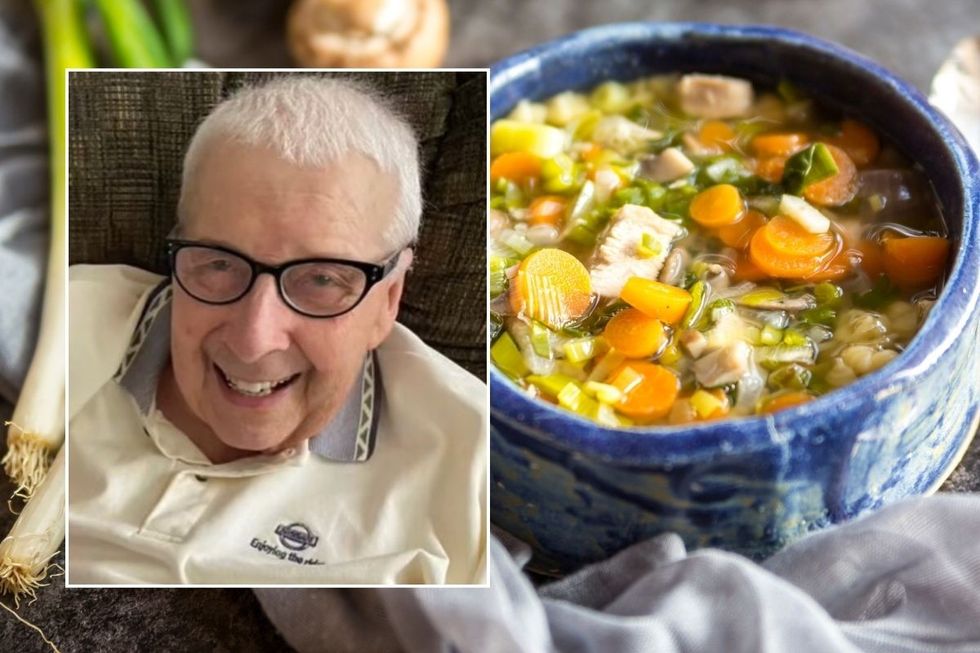

Denise shared insight into the lifestyle habits of her 96-year-old grandfather

GETTY / TIKTOK

One user, Denise, recently revealed the daily drink that her grandfather, who lived to 96 without illness, swore by.

“He was so sharp up until the day that he died, but he never went a single day without his cup of mush,” she told followers.

“It was full of the most random vegetables from his fridge and always included flaxseeds, chia seeds, bran.

“It was basically just a cup full of a variety of fibre. When I would come over to visit him he would really pressure me to sip some of it and I did, but not without gagging.”

While such a drink cannot guarantee longevity, the health benefits of vegetable-heavy beverages like the one Denise’s grandfather drank are partly down to antioxidants.

These compounds are thought to support longevity because they neutralise free radicals, unstable molecules that can damage cells in the body.

Important lifestyle factors for healthy living

The NHS recommends a balanced diet and regular exercise as key factors in healthy ageing.

It also underscores the importance of avoiding smoking and limiting alcohol consumption.

Additionally, the health body advises incorporating plant-based foods into the diet wherever possible, mainly to support the immune system.

Drinking enough water is also crucial, as it aids in the production of lymph, which carries white blood cells and other immune cells.

Spices like cayenne pepper and turmeric are also often recommended for their immune-enhancing properties.