Without logs, it would be almost impossible to keep modern applications, cloud platforms, or customer-facing services running efficiently. Some might argue that logs are one of the most critical but least celebrated sources of truth in the digital era.

At its core, log management is about turning raw system logs — unprocessed, detailed records of a system’s activities, including server actions, user interactions, and error messages — into actionable insights.

Vice President of Sales for Log Management at Dynatrace.

From a website crashing or pages loading too slowly, to customers encountering errors or even early signs of a cyberattack, logs provide teams with a clear view of what’s happening inside their digital systems.

Within an observability platform, they present the detailed “story” behind these events, helping teams move from simply knowing something is wrong to understanding why it’s happening and how to fix it before it impacts users.

Research has found that 87% of organizations claim to use logs as part of their observability solutions. That number shows how universal log usage has become. The question now is whether businesses are unlocking their full value. Collecting logs is one thing, but interpreting them is another.

For too long, logs have been treated as clutter, something to store, sift, and forget. The reality is that they’re one of the clearest signals of how a business is running. Modern log management makes those signals impossible to ignore.

The limits of traditional log management

As business digital estates grow more complex, the volume of logs generated across applications, infrastructure and business services has exploded. However, more logs do not automatically mean more insight. In fact, many teams are overwhelmed by sheer volume, struggling to separate meaningful signals from background noise.

This overload makes it difficult to identify urgent issues, leaving IT and security teams on the back foot during critical incidents and with little room for proactive response.

The problem is as much about cost as complexity. Storing and managing log telemetry without a clear purpose often leads to escalating expenses that outpace the value delivered.

Traditional licensing and infrastructure models add to the problem. They often make log management feel more like a financial liability than a strategic advantage.

Another common constraint is fragmentation. Logs often live across multiple tools, with different interfaces and storage models, slowing root cause analysis and complicating cross-team collaboration. In a cloud-native world where speed and scale are vital, this siloed approach is out of step with modern business needs.

Together, these shortcomings point to the need for a smarter approach—one that focuses on clarity, efficiency, and value.

Turning logs into actionable intelligence

Taking a smarter approach to log management starts with a shift in perspective. Rather than treating logs as an endless stream of technical data, leading organizations use them as a lens to understand how their digital ecosystems truly perform.

The real value lies not in collecting everything but in knowing what matters: identifying which logs drive resilience, security, customer experience, or compliance, and filtering out the rest.

AI is becoming an essential part of this process. Modern techniques can detect anomalies, trace issues back to their root cause, and even trigger automated fixes. This reduces manual investigation and accelerates recovery, allowing teams to move from firefighting to foresight.
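To make the idea of anomaly detection concrete, here is a minimal sketch, not a description of any vendor's method. It assumes logs have already been reduced to per-minute error counts and flags any minute that sits far above a rolling baseline. The function name `find_anomalies`, the window size, the threshold, and the sample counts are all hypothetical choices made for illustration; production systems use far richer models.

```python
from statistics import mean, stdev


def find_anomalies(error_counts, window=5, threshold=3.0, min_sigma=1.0):
    """Return the indices of counts that spike above a rolling baseline.

    A count is flagged when it exceeds the mean of the previous `window`
    counts by more than `threshold` standard deviations. `min_sigma` is a
    floor on the standard deviation so that a perfectly flat baseline
    does not cause a division by zero or flag tiny fluctuations.
    All parameter values here are illustrative assumptions.
    """
    anomalies = []
    for i in range(window, len(error_counts)):
        baseline = error_counts[i - window:i]
        mu = mean(baseline)
        sigma = max(stdev(baseline), min_sigma)
        if (error_counts[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical per-minute error counts parsed from application logs.
counts = [4, 5, 3, 4, 5, 4, 40, 5, 4]
print(find_anomalies(counts))  # flags index 6, the minute with 40 errors
```

Even a simple rule like this shows the shift the article describes: the team is pointed at the one minute that matters instead of scanning every line by hand.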

Equally important is being selective. Forward-thinking organizations decide which logs to capture, which to discard, and how to route them most effectively. This helps control costs and ensures that attention is focused on the telemetry that delivers the greatest value.
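As a rough illustration of what "capture, discard, and route" can look like in practice, the sketch below applies a small set of rules to each log record. Everything in it is an assumption made for the example: the `LogRecord` fields, the destination names (`"drop"`, `"hot"`, `"archive"`), and the list of security-relevant services. Real pipelines would express similar rules in their own configuration format.

```python
from dataclasses import dataclass


@dataclass
class LogRecord:
    service: str
    level: str
    message: str


# Hypothetical set of services whose logs matter for security review.
SECURITY_SERVICES = {"auth", "payments"}


def route(record: LogRecord) -> str:
    """Decide where a record goes, checking rules in priority order."""
    # Rule 1: debug output is discarded regardless of which service sent it.
    if record.level == "DEBUG":
        return "drop"
    # Rule 2: errors and security-relevant logs go to fast, searchable storage.
    if record.level in ("ERROR", "CRITICAL") or record.service in SECURITY_SERVICES:
        return "hot"
    # Rule 3: everything else is kept in cheaper long-term storage.
    return "archive"


records = [
    LogRecord("checkout", "DEBUG", "cart state dump"),
    LogRecord("auth", "INFO", "login succeeded"),
    LogRecord("checkout", "ERROR", "payment gateway timeout"),
    LogRecord("catalog", "INFO", "page rendered"),
]
for r in records:
    print(r.service, r.level, "->", route(r))
```

Keeping the rules explicit and ordered makes the cost trade-off visible: each rule is a deliberate decision about which telemetry earns a place in expensive storage.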

When organizations find this balance, log management evolves from a tactical task to a strategic capability that strengthens both performance and resilience.

Observability and the bigger picture

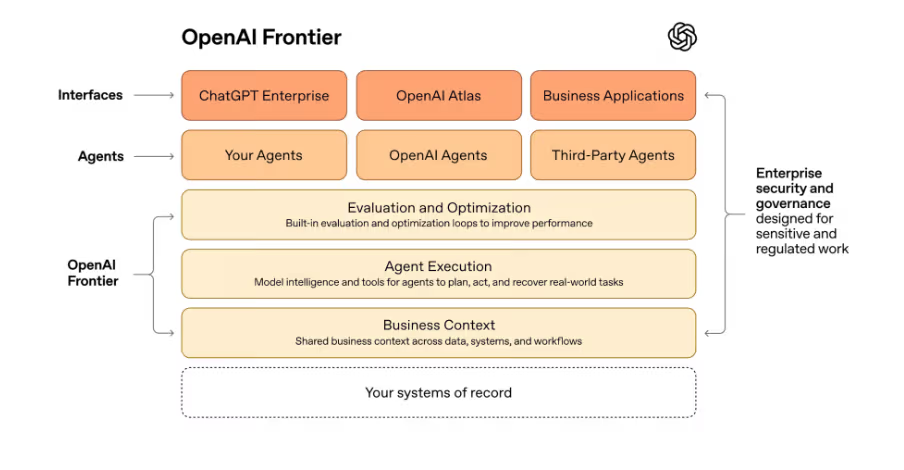

Log intelligence on its own is valuable, but it is only part of the story. The next frontier is AI-powered observability, uniting logs with metrics that track performance, traces that map interactions, and events that reveal key system changes.

Combined in a single platform, these data types give teams a complete picture – connecting technical performance with genuine business impact and moving from a view of what happened to an understanding of why it happened and how to respond quickly.

Consider a global telecommunications provider that recently re-evaluated its log strategy. Managing more than 15TB of logs every day, stored for long periods and spread across thousands of dashboards, the team was buried in redundant data.

By consolidating logs within a broader observability framework and replacing static alerts with intelligent detection, the provider cut through the noise across its systems. Able to focus on the signals that mattered most, the organization improved uptime, speed, and overall resilience.

This example shows that observability delivers its greatest value when it helps teams cut through complexity. With logs feeding into a single platform, data becomes easier to interpret and act on, transforming technical insight into business intelligence.

Unlocking the true value of modern log management

Modern log management gives organizations the context they need to turn massive volumes of data into meaningful insight. Organizations that harness AI, automation, and broader observability gain a clearer view of how their technology is supporting their goals.

Enterprises can analyze faster, automate smarter, and innovate with confidence.

True modernization comes from changing how teams think about data. Organizations can no longer afford to treat log management as a background IT task. Now is the time to review current strategies, identify gaps, and adopt modern platforms that integrate AI, context, correlation, and smarter telemetry management practices.

The companies that thrive will be those that treat logs not as exhaust from their systems, but as evidence of how their business thinks and performs. By bringing intelligence to the data they already have, they will turn observability into a source of continuous advantage and understand their business like never before.


This article was produced as part of TechRadarPro’s Expert Insights channel where we feature the best and brightest minds in the technology industry today. The views expressed here are those of the author and are not necessarily those of TechRadarPro or Future plc. If you are interested in contributing find out more here: https://www.techradar.com/news/submit-your-story-to-techradar-pro

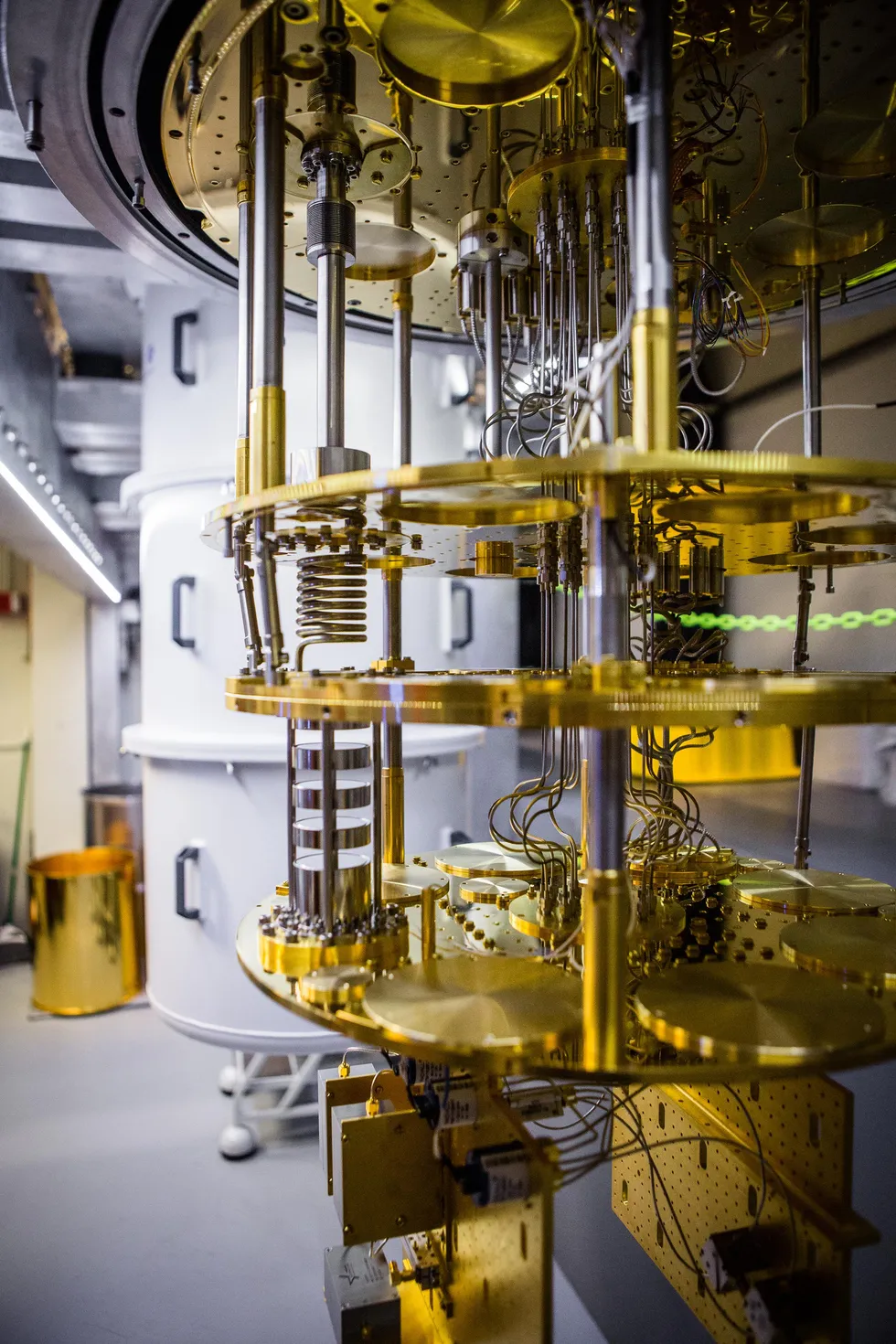

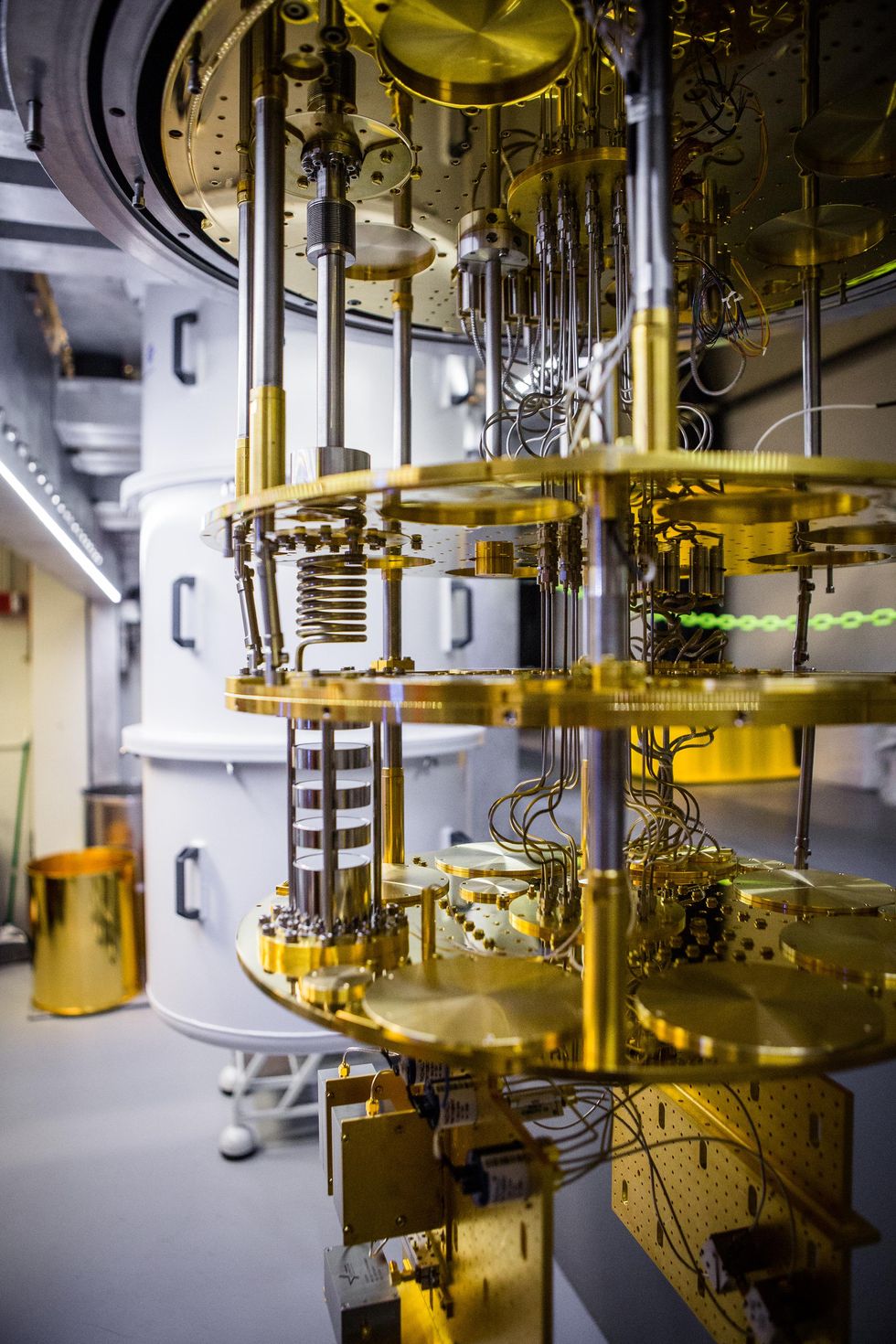
